Kong: Kong memory usage is continuously increasing even when doing nothing

Summary

I have Kong deployed in Kubernetes with only one API configured and 3 plugins activated (key-auth, udp-log, rate-limiting), and there is no traffic. I've set my container memory limit to 512 MB, which I assumed would be enough for a Kong that does nothing. What I observe is that memory usage increases continuously at a constant rate and never goes down. This leads to my pods being OOMKilled frequently.

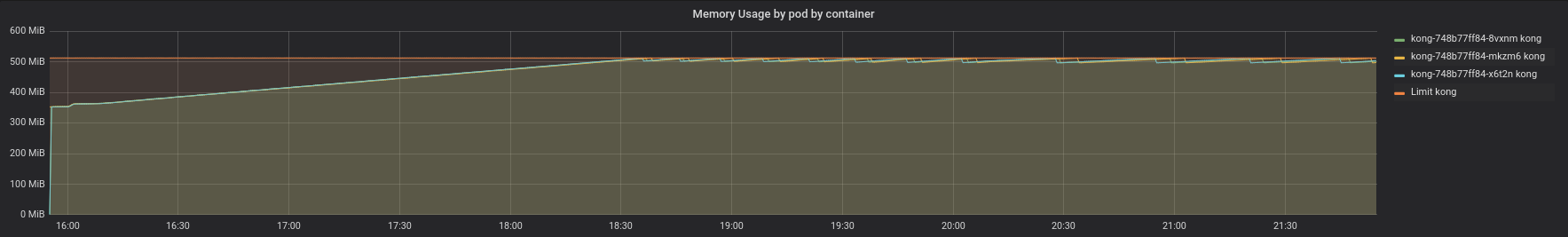

Here is a graph taken just after migrating Kong to 0.13.0 (the issue was the same with 0.12.3 and 0.11.2):

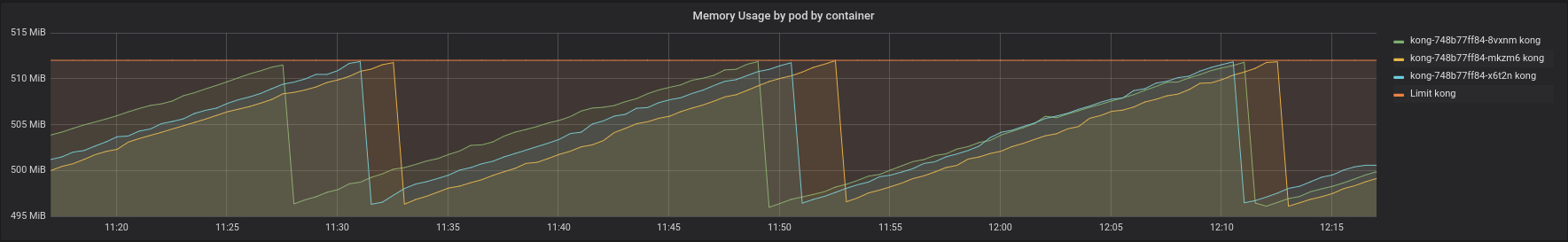

And a more detailed view:

There is nothing interesting in the logs, just the sign that a worker gets OOMKilled:

    2018/03/28 15:36:13 [notice] 7#0: signal 17 (SIGCHLD) received from 80
    2018/03/28 15:36:13 [alert] 7#0: worker process 80 exited on signal 9
    2018/03/28 15:36:13 [notice] 7#0: start worker process 156
    2018/03/28 15:36:13 [notice] 7#0: signal 29 (SIGIO) received

Additional Details & Logs

- Kong version 0.13.0 (but same issue in 0.12.3 and 0.11.2)

Here is the output of the root endpoint of the Admin API:

    curl -s kong:8001 | jq

    {
      "plugins": {
        "enabled_in_cluster": [
          "key-auth",
          "udp-log",
          "rate-limiting"
        ],
        "available_on_server": {
          "response-transformer": true,
          "correlation-id": true,
          "statsd": true,
          "jwt": true,
          "cors": true,
          "basic-auth": true,
          "key-auth": true,
          "ldap-auth": true,
          "http-log": true,
          "oauth2": true,
          "hmac-auth": true,
          "acl": true,
          "datadog": true,
          "tcp-log": true,
          "ip-restriction": true,
          "request-transformer": true,
          "file-log": true,
          "bot-detection": true,
          "loggly": true,
          "request-size-limiting": true,
          "syslog": true,
          "udp-log": true,
          "response-ratelimiting": true,
          "aws-lambda": true,
          "runscope": true,
          "rate-limiting": true,
          "request-termination": true
        }
      },
      "tagline": "Welcome to kong",
      "configuration": {
        "error_default_type": "text/plain",
        "client_ssl": false,
        "lua_ssl_verify_depth": 1,
        "trusted_ips": {},
        "prefix": "/usr/local/kong",
        "nginx_conf": "/usr/local/kong/nginx.conf",
        "cassandra_username": "kong",
        "admin_ssl_cert_csr_default": "/usr/local/kong/ssl/admin-kong-default.csr",
        "dns_resolver": {},
        "pg_user": "kong",
        "mem_cache_size": "128m",
        "ssl_ciphers": "ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256",
        "cassandra_password": "******",
        "custom_plugins": {},
        "pg_host": "127.0.0.1",
        "nginx_acc_logs": "/usr/local/kong/logs/access.log",
        "proxy_listen": [
          "0.0.0.0:8000"
        ],
        "client_ssl_cert_default": "/usr/local/kong/ssl/kong-default.crt",
        "ssl_cert_key_default": "/usr/local/kong/ssl/kong-default.key",
        "db_update_frequency": 5,
        "db_update_propagation": 3,
        "nginx_err_logs": "/usr/local/kong/logs/error.log",
        "cassandra_port": 9042,
        "dns_order": [
          "LAST",
          "SRV",
          "A",
          "CNAME"
        ],
        "dns_error_ttl": 1,
        "cassandra_lb_policy": "RoundRobin",
        "nginx_optimizations": true,
        "database": "cassandra",
        "pg_database": "kong",
        "nginx_worker_processes": "auto",
        "lua_package_cpath": "",
        "lua_package_path": "./?.lua;./?/init.lua;",
        "nginx_pid": "/usr/local/kong/pids/nginx.pid",
        "upstream_keepalive": 60,
        "admin_access_log": "/dev/stdout",
        "client_ssl_cert_csr_default": "/usr/local/kong/ssl/kong-default.csr",
        "proxy_listeners": [
          {
            "ssl": false,
            "ip": "0.0.0.0",
            "proxy_protocol": false,
            "port": 8000,
            "http2": false,
            "listener": "0.0.0.0:8000"
          }
        ],
        "proxy_ssl_enabled": false,
        "lua_socket_pool_size": 30,
        "plugins": {
          "response-transformer": true,
          "correlation-id": true,
          "statsd": true,
          "jwt": true,
          "cors": true,
          "basic-auth": true,
          "key-auth": true,
          "ldap-auth": true,
          "http-log": true,
          "request-termination": true,
          "hmac-auth": true,
          "rate-limiting": true,
          "datadog": true,
          "tcp-log": true,
          "runscope": true,
          "aws-lambda": true,
          "response-ratelimiting": true,
          "acl": true,
          "loggly": true,
          "syslog": true,
          "request-size-limiting": true,
          "udp-log": true,
          "file-log": true,
          "request-transformer": true,
          "bot-detection": true,
          "ip-restriction": true,
          "oauth2": true
        },
        "proxy_access_log": "/dev/stdout",
        "cassandra_consistency": "ONE",
        "client_max_body_size": "0",
        "admin_error_log": "/dev/stderr",
        "admin_ssl_cert_default": "/usr/local/kong/ssl/admin-kong-default.crt",
        "dns_not_found_ttl": 30,
        "pg_ssl": false,
        "cassandra_ssl": false,
        "log_level": "notice",
        "cassandra_repl_strategy": "NetworkTopologyStrategy",
        "latency_tokens": false,
        "cassandra_repl_factor": 1,
        "cassandra_data_centers": [
          "DC1:2"
        ],
        "real_ip_header": "X-Real-IP",
        "kong_env": "/usr/local/kong/.kong_env",
        "cassandra_schema_consensus_timeout": 10000,
        "dns_hostsfile": "/etc/hosts",
        "admin_listeners": [
          {
            "ssl": false,
            "ip": "0.0.0.0",
            "proxy_protocol": false,
            "port": 8001,
            "http2": false,
            "listener": "0.0.0.0:8001"
          }
        ],
        "pg_ssl_verify": false,
        "dns_no_sync": false,
        "nginx_kong_conf": "/usr/local/kong/nginx-kong.conf",
        "cassandra_timeout": 60000,
        "cassandra_ssl_verify": false,
        "cassandra_contact_points": [
          "cassandra.default.svc.cluster.local"
        ],
        "server_tokens": false,
        "real_ip_recursive": "off",
        "proxy_error_log": "/dev/stderr",
        "client_ssl_cert_key_default": "/usr/local/kong/ssl/kong-default.key",
        "nginx_daemon": "off",
        "anonymous_reports": false,
        "ssl_cipher_suite": "modern",
        "dns_stale_ttl": 4,
        "pg_port": 5432,
        "db_cache_ttl": 3600,
        "client_body_buffer_size": "8k",
        "admin_ssl_cert_key_default": "/usr/local/kong/ssl/admin-kong-default.key",
        "nginx_admin_acc_logs": "/usr/local/kong/logs/admin_access.log",
        "ssl_cert_csr_default": "/usr/local/kong/ssl/kong-default.csr",
        "cassandra_keyspace": "kong",
        "ssl_cert_default": "/usr/local/kong/ssl/kong-default.crt",
        "admin_ssl_enabled": false,
        "admin_listen": [
          "0.0.0.0:8001"
        ]
      },
      "version": "0.13.0",
      "lua_version": "LuaJIT 2.1.0-beta3"
    }

All 8 comments

Hi @mbugeia,

Thank you for the report. May I ask: how many workers are running in your container? And where is your Cassandra cluster running, and how many nodes does it have? This will help us try to replicate the issue.

Thanks!

Hi,

There are 16 workers per container (16 CPUs on the node). The Cassandra cluster is running on VMs outside the Kubernetes cluster, and it has 5 nodes.

Let me know if you need more information.

How do you create the containers, and from what sources/images? Are any custom templates in use?

My Dockerfile is basically a Debian stretch image with this:

    RUN set -x \
        && echo "deb https://kong.bintray.com/kong-community-edition-deb stretch main" \
            > /etc/apt/sources.list.d/kong.list \
        && apt-get update \
        && apt-get install -y openssl libpcre3 perl \
        && apt-get install -y --allow-unauthenticated kong-community-edition=${KONG_VERSION} \
        && rm -rf /var/lib/apt/lists/*

And the entrypoint:

    kong prepare -p "/usr/local/kong"
    exec /usr/local/openresty/nginx/sbin/nginx -c /usr/local/kong/nginx.conf -p /usr/local/kong/

I do not use any custom template.

I ran into this too, and it confused me. I hope my question is related to this issue.

In a Kong document, it says

For performance reasons, Kong avoids database connections when proxying requests, and caches the contents of your database in memory. The cached entities include Services, Routes, Consumers, Plugins, Credentials, etc...

Does it mean that memory usage naturally keeps increasing as you keep adding more customers (more data in the database, more data cached, and therefore more memory used)?

@jaemyunlee Glad you asked! No, it does not mean that the memory usage keeps increasing indefinitely. Cached items are evicted when the cache is full based on an LRU algorithm. Maybe this can be clarified in the documentation, if you are willing to propose a Pull Request to https://github.com/Kong/docs.konghq.com.
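To illustrate why LRU eviction keeps memory bounded, here is a toy sketch in Python. It is not Kong's cache implementation: the `LRUCache` class and the entry-count `capacity` are assumptions made for illustration, and a real cache is more likely bounded by size in bytes (the configuration dump above shows `mem_cache_size` set to `128m`).

```python
from collections import OrderedDict


class LRUCache:
    """Toy LRU cache holding at most `capacity` entries (illustrative only)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._items = OrderedDict()

    def get(self, key):
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def set(self, key, value):
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used entry

    def __len__(self):
        return len(self._items)


# Insert more entries than the cache can hold; the oldest ones are evicted.
cache = LRUCache(capacity=3)
for i in range(10):
    cache.set(f"consumer-{i}", {"id": i})

print(len(cache))                # stays at the capacity, 3
print(cache.get("consumer-0"))   # None, it was evicted long ago
```

However many entries are added, the cache never holds more than `capacity` of them, so adding more data to the database does not by itself make the cache grow without limit.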

@mbugeia It seems like the behavior you are seeing is simply the nginx workers reaching the virtual memory limit set by the OS? As a guideline, you should keep in mind that:

- nginx workers running a LuaJIT VM in Kong typically allocate around 500 MB of virtual memory, but this number can grow if:
  - you use plugins allocating objects in the LuaJIT VM (most likely). The LuaJIT VM can grow up to 2 GB in size (although that is unlikely in Kong)
  - there is a leak in Lua-land, or in a third-party C module (unlikely)

As such, I'd consider studying how much memory your Kong nginx workers typically need first, before imposing any limits on them.
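One possible way to start that study on Linux is to read the memory fields of each worker from `/proc/<pid>/status`, where `VmSize` is the virtual size and `VmRSS` the resident set size. The sketch below is only an assumption about how one might do it: the helper names are hypothetical, the worker PIDs have to be found separately (for example with `ps`), and the sample text and its numbers are made up rather than taken from this thread.

```python
import re


def parse_status_kb(status_text):
    """Extract VmSize and VmRSS (in kB) from the text of /proc/<pid>/status."""
    fields = {}
    for name in ("VmSize", "VmRSS"):
        match = re.search(rf"^{name}:\s+(\d+)\s+kB", status_text, re.MULTILINE)
        if match:
            fields[name] = int(match.group(1))
    return fields


def read_worker_memory(pid):
    """Read the memory fields for one process (Linux only)."""
    with open(f"/proc/{pid}/status") as status_file:
        return parse_status_kb(status_file.read())


# Made-up sample in the /proc/<pid>/status format, not real Kong data.
sample = "Name:\tnginx\nVmSize:\t  512000 kB\nVmRSS:\t   45000 kB\n"
print(parse_status_kb(sample))
```

Sampling `read_worker_memory(pid)` for each worker over a few hours would show whether usage plateaus or keeps climbing, which helps in choosing a realistic limit.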

Sorry, I forgot about this issue, but you were right! We ended up setting the number of workers to 1, since we never allocate more than 1 core to a Kong pod for our use case. Thanks!
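For anyone landing here later, a rough back-of-the-envelope view of why the worker count matters, using the numbers from this thread (a 512 MB container limit and 16 workers). The function name is hypothetical, and the even split is a simplifying assumption: it ignores the nginx master process and any memory shared between workers.

```python
def per_worker_budget_mb(container_limit_mb, worker_count):
    """Memory available to each worker if the limit were split evenly."""
    return container_limit_mb / worker_count


# Numbers from this thread: 512 MB limit, 16 workers vs. 1 worker.
print(per_worker_budget_mb(512, 16))  # 32.0
print(per_worker_budget_mb(512, 1))   # 512.0
```

With 16 workers, each one has roughly 32 MB before the container hits its limit, which is very little for a process running a LuaJIT VM. With a single worker, the whole 512 MB is available to it.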
