Keras: custom RMSE metric reports the same value as MAE

Here is a reproducible example:

from keras.models import Sequential

from keras.layers import Dense, Activation

import numpy as np

import keras.backend as K

def rmse (y_true, y_pred):

return K.sqrt(K.mean(K.square(y_pred -y_true), axis=-1))

model = Sequential([

Dense(32, input_shape=(50,)),

Activation('relu'),

Dense(1),

])

model.compile(optimizer='adam', loss='mse', metrics=['mae',rmse])

data = np.random.random((1000, 50))

labels = np.random.randint(2, size=(1000, 1))

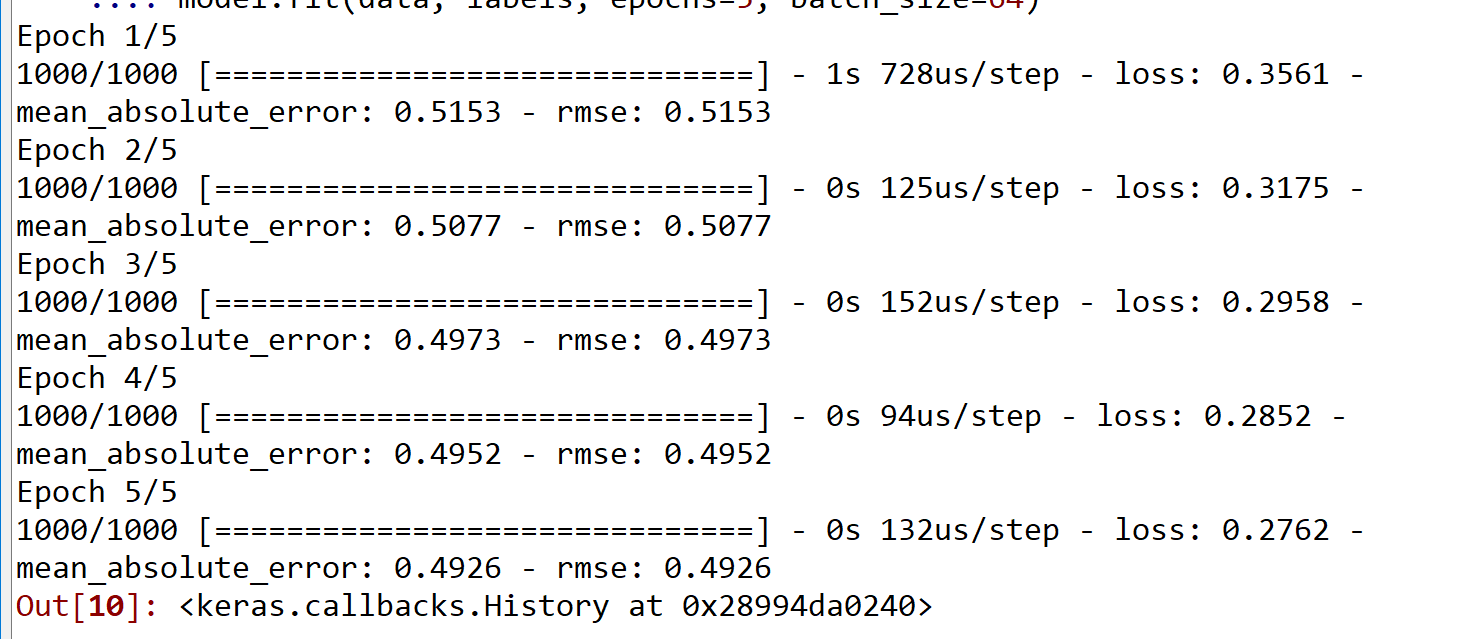

model.fit(data, labels, epochs=5, batch_size=64)

Both RMSE and MAE are the same. This happens when i placed them together. Is there a bug?

the output is:


The RMSE calculation is wrong. Try removing `axis=-1`. I had problems with RMSE before, and removing the axis argument worked for me.

Thanks! Without `axis=-1` it seems to be correct. I took `axis=-1` from the built-in metrics. May I ask why `axis=-1` is needed there in the first place (for the original metrics defined in TF)?

To be honest, I have no idea in this case. If anything, it should be `axis=0`. With `axis=-1` it was squaring, then taking the mean of each single number (i.e. doing nothing), then taking the square root of the square (i.e. the absolute value of the original difference). So it was just returning MAE.
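A small check of that reasoning, sketched in NumPy as a stand-in for the Keras backend ops. The toy batch of shape `(8, 1)` is an assumption chosen to mirror the model's single output column.

```python
import numpy as np

# Toy batch shaped like the model's output in the issue: (batch_size, 1)
rng = np.random.default_rng(0)
y_true = rng.integers(0, 2, size=(8, 1)).astype(float)
y_pred = rng.random((8, 1))
diff = y_pred - y_true

# NumPy mirror of the custom metric with axis=-1: the mean runs over a
# single column, so each value passes through unchanged -> shape (8,)
per_sample = np.sqrt(np.mean(np.square(diff), axis=-1))

# sqrt(x**2) == |x|, so each per-sample "RMSE" is just the absolute error
print(np.allclose(per_sample, np.abs(diff[:, 0])))  # True

# Averaging those per-sample values over the batch therefore gives MAE
mae = np.mean(np.abs(diff))
print(np.isclose(per_sample.mean(), mae))  # True
```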

Yes, thinking about it, the mean should be computed along `axis=0`. Otherwise it simply returns the original vector, with one value per sample.
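For a single-output model like this one, `axis=0` and no axis at all give the same batch RMSE. Only the shape of the result differs. Here is a NumPy sketch standing in for `K.mean`/`K.sqrt`, assuming a batch of 8. With several output columns the two would no longer agree, because `axis=0` yields one RMSE per column.

```python
import numpy as np

rng = np.random.default_rng(1)
y_true = rng.integers(0, 2, size=(8, 1)).astype(float)
y_pred = rng.random((8, 1))
sq_err = np.square(y_pred - y_true)

# Mean over the batch axis: one value per output column, here shape (1,)
rmse_axis0 = np.sqrt(np.mean(sq_err, axis=0))
# Mean over every element: a scalar
rmse_all = np.sqrt(np.mean(sq_err))

print(rmse_axis0.shape, np.shape(rmse_all))  # (1,) ()
print(np.isclose(rmse_axis0[0], rmse_all))   # True
```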

But with `axis=-1`, wouldn't there be an error, since we still have a vector even after the `sqrt`?

It is possible that somewhere in the Keras code it calls the mean on the vector, since most losses/metrics have a mean as their last operation (MSE, MAE, log loss, etc.).

I will investigate...


Update:

At least for losses, there is a line that explicitly calls `K.mean` on the loss:

https://github.com/keras-team/keras/blob/f86bc57d621fb0325e928271b246d197611b0e65/keras/engine/training_utils.py#L422
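This explains why a per-sample vector does not raise an error. Below is a minimal NumPy sketch. It assumes, as the linked line suggests for losses, that Keras ends by taking a plain mean of whatever the function returns. `keras_like_reduce` is a hypothetical helper, not a Keras API. The real code also handles sample weights and masks, and this sketch ignores both.

```python
import numpy as np


def keras_like_reduce(metric_fn, y_true, y_pred):
    # Hypothetical stand-in for the Keras reduction step: call the metric,
    # then take a plain mean of whatever it returns (no weights, no masks)
    return np.mean(metric_fn(y_true, y_pred))


def rmse_axis_last(y_true, y_pred):
    # The metric from the issue: axis=-1 leaves one value per sample
    return np.sqrt(np.mean(np.square(y_pred - y_true), axis=-1))


def rmse_no_axis(y_true, y_pred):
    # The suggested fix: reduce over every element, returning a scalar
    return np.sqrt(np.mean(np.square(y_pred - y_true)))


def mae(y_true, y_pred):
    # Same formula as Keras's built-in mean_absolute_error (per sample)
    return np.mean(np.abs(y_pred - y_true), axis=-1)


rng = np.random.default_rng(2)
y_true = rng.integers(0, 2, size=(64, 1)).astype(float)
y_pred = rng.random((64, 1))

# The per-sample vector is not an error: the outer mean collapses it
buggy_rmse = keras_like_reduce(rmse_axis_last, y_true, y_pred)
batch_mae = keras_like_reduce(mae, y_true, y_pred)
fixed_rmse = keras_like_reduce(rmse_no_axis, y_true, y_pred)

print(np.isclose(buggy_rmse, batch_mae))  # True: the "RMSE" is really MAE
print(fixed_rmse >= batch_mae)            # True: RMSE is never below MAE
```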

@tRosenflanz So, as you mentioned above, the mean in my code did nothing. It computed a vector of absolute differences, `K.mean` was called on that vector at the end, and the result was MAE.

This definitely also explains why the MSE code works, as opposed to my custom RMSE.
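Why MSE survives the same `axis=-1` treatment while RMSE does not: when every sample has the same number of outputs, a mean of per-sample means equals the global mean. The square root, however, does not commute with averaging. This NumPy sketch assumes three output columns (the issue's model has one), so the per-sample mean actually averages something.

```python
import numpy as np

rng = np.random.default_rng(3)
y_true = rng.random((64, 3))  # assumed: three output columns
y_pred = rng.random((64, 3))
sq_err = np.square(y_pred - y_true)

# Per-sample MSE (axis=-1) followed by a batch mean ...
mse_two_step = np.mean(np.mean(sq_err, axis=-1))
# ... equals the MSE over all elements, since every row has the same length
mse_global = np.mean(sq_err)
print(np.isclose(mse_two_step, mse_global))  # True

# sqrt is concave, so averaging per-sample RMSEs underestimates the true RMSE
rmse_two_step = np.mean(np.sqrt(np.mean(sq_err, axis=-1)))
rmse_global = np.sqrt(mse_global)
print(rmse_two_step <= rmse_global)  # True (Jensen's inequality)
```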

Thank you, you have been really helpful.

Okay, this explains the behaviour pretty well at this point. Although it is not documented explicitly as far as I can tell, losses and metrics are implicitly reduced with a mean at the end. Can you please close the issue?

@uwu-ai Which version of Keras/TF are you using, and what is the shape of your output?

Have you tried passing different `axis` arguments to the mean?

Is the output shape `(1, 1)` or `(batch_size, 1, 1)`?

Does your generator output sample weights, by any chance? Can you disable them and see what loss you get? That will make it easier to understand how RMSE and MSE actually relate.
