Keras: Training loss decrases (accuracy increase) while validation loss increases (accuracy decrease)

Hi all,

I am working on a very sparse dataset with the point of predicting 6 classes.

I have tried working with a lot of models and architectures, but the problem remains the same.

When I start training, the acc for training will slowly start to increase and loss will decrease where as the validation will do the exact opposite.

I have really tried to deal with overfitting, and I simply cannot still believe that this is what is coursing this issue.

What have I tried

Transfer learning on VGG16:

- exclude top layer and add dense layer with 256 units and 6 units softmax output layer

- finetune the top CNN block

- finetune the top 3-4 CNN blocks

To deal with overfitting I use heavy augmentation in Keras and dropout after the 256 dense layer with p=0.5.

Creating own CNN with VGG16-ish architecture:

- including batch normalization wherever possible

- L2 regularization on each CNN+dense layer

- Dropout from anywhere between 0.5-0.8 after each CNN+dense+pooling layer

- Heavy data augmentation in "on the fly" in Keras

Realising that perhaps I have too many free parameters:

- decreasing the network to only contain 2 CNN blocks + dense + output.

- dealing with overfitting in the same manner as above.

Without exception all training sessions are looking like this:

The last mentioned architecture looks like this:

reg = 0.0001

model = Sequential()

model.add(Conv2D(8, (3, 3), input_shape=input_shape, padding='same',

kernel_regularizer=regularizers.l2(reg)))

model.add(BatchNormalization())

model.add(Activation('relu'))

model.add(Dropout(0.7))

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Dropout(0.5))

model.add(Conv2D(16, (3, 3), input_shape=input_shape, padding='same',

kernel_regularizer=regularizers.l2(reg)))

model.add(BatchNormalization())

model.add(Activation('relu'))

model.add(Dropout(0.7))

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Dropout(0.5))

model.add(Flatten())

model.add(Dense(16, kernel_regularizer=regularizers.l2(reg)))

model.add(BatchNormalization())

model.add(Activation('relu'))

model.add(Dropout(0.5))

model.add(Dense(6))

model.add(Activation('softmax'))

model.compile(loss='categorical_crossentropy', optimizer='SGD',metrics=['accuracy'])

And the data is augmented by the generator in Keras and is loaded with flow_from_directory:

train_datagen = ImageDataGenerator(rotation_range=10,

width_shift_range=0.05,

height_shift_range=0.05,

shear_range=0.05,

zoom_range=0.05,

rescale=1/255.,

fill_mode='nearest',

channel_shift_range=0.2*255)

train_generator = train_datagen.flow_from_directory(

train_data_dir,

target_size=(img_width, img_height),

batch_size=batch_size,

shuffle = True,

class_mode='categorical')

All 14 comments

Interesting problem!

train_generatorlooks fine to me, but where does your validation data come from? Is it processed in the same way as the training data (e.gmodel.fit(validation_split)or similar)?I assume your plots show epochs horizontally? I think overfitting could definitely happen after 10-20 epochs for many models and datasets, despite augmentation. What batch size do you use?

What do you mean by sparse? You're using convolutional layers and

ImageDataGenerator, which tend to work best for image classification rather than matrices with mostly zeros and ones few and far between.

- The validation data is generated as

validation_datagen = ImageDataGenerator(rescale=1/255.)

validation_generator = validation_datagen.flow_from_directory(

validation_data_dir,

target_size=(img_width, img_height),

batch_size=batch_size,

shuffle = True,

class_mode='categorical')

And then the model is fitted by

train_history = model.fit_generator(

train_generator,

steps_per_epoch=nb_train_samples // batch_size,

epochs=epochs,

validation_data=validation_generator,

validation_steps=nb_validation_samples // batch_size,

callbacks=[checkpointer, plot_losses])

I have been using batch sizes of 4, 8 and 16.

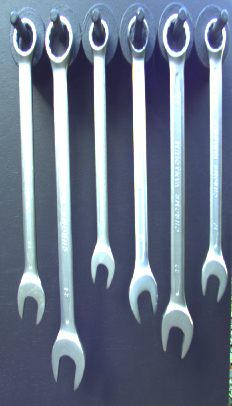

Sparse in the sense that I haven't much data available for training. It is images of wrenches where I need to predict the position of the wrench of size 19, i.e. for the image below wrench 19 is located on the first position from left to right, so a correct output of the model should be [1, 0, 0, 0, 0, 0].

The data is collected by myself and yields a total of some 250 for training and 125-ish for validation. The training data includes images of wrench 19 in all positions, and these images should be heavily augmented by Keras during training.

One thing that have crossed my mind a few times now is the fear of the "flow_from_directly" not passing correct labels for each image in the batch. Looking at an attentionmap for one of the training images after some 50 epochs, it look like this:

Mostly concerned with what's not the actual tools but the boarder of table from where they are hanging. This is just shown for class 1 - two other looks quite similar and the remaining 3 does not show any heat to any region of the image no matter what image is fed.

Please ask for more details, if this would help.

seems you overfit the data

@cherryunix, how could I go any further to prevent that? I think I already took any available measures to prevent overfitting the data.

Training deep learning models it more of a craft than an exact science unfortunately.

You have some varying issues with your method (small batch size for normalization, possibly too different validation and training augmentation, way too few images, etc.) and you're definitely overfitting.

It's not reasonable to expect the learned filters to work out wrench sizes. In fact, a common goal of the models you've tried is to _not_ care about the size of detected features. For CNNs the convolution kernels are applied to every possible position in each layer, and the wrenches look very similar. As the pixels become more abstract in higher layers the positions and sizes will have even less impact because of pooling and the like.

If I were you I would try just one initial convolution layer (for getting rid of image lighting and hopefully enhancing wrench edges etc.), and then a LSTM from the top row to the bottom row (maybe bidirectional too). Take the final output, flatten and softmax.

@cherryunix Agree with @carlthome about overfitting. Use model.summary() for the number of parameters used in your model and compare it to the number of your images in train_data_dir. My guess is that there is a huge imbalance there. Checkout Hyperpot (example ). It will make it easier to fine tune your hyperparameters and you can also add/remove layers in your model.

@prashanthdumpuri thanks for your answer. I agree with the overfitting for now as well. I have 250-ish images in my training dir - these are then augmented never the less. However, for the CNNs I have tried training have some 10 million-ish trainable parameters.

@JesperChristensen89 I'm also facing a similar issue. Were able to get it working without overfitting?

@sivagnanamn I actually concluded that in my case a CNN was not able to learn how to discriminate different sizes of the exact same object. I ended up training an object detector insted to first locate each opening and eye on the wrench. This I could use to compute the length for each wrench and thereby solving the problem. This worked perfectly!

@deltaoui see my comment just above.

Facing similar issue, though with a diff problem, interpreting seismic data for Oil Wells. It fits perfectly on training set, but does not generalize well on test set! Trying various options.

same issue on my model also. I am using VGG16 pre-trained model for image classification, I got 99% accuracy in train data, but validation is 89% accuracy, how to reduce overfitting. please help me how to solve overfitting.

here my model.

[from keras.applications import VGG16

VGG_model=VGG16(weights="imagenet",include_top=False,input_shape=(64,64,3))]

for layer in VGG_model.layers:

print(layer,layer.trainable)

](url)

from keras import models

from keras import layers

from keras import optimizers

from keras.layers import BatchNormalization

from keras import regularizers

Create the model

model = models.Sequential()

[# Add the vgg convolutional base model

model.add(VGG_model)

model.add(BatchNormalization())

Add new layers

model.add(layers.Flatten())

model.add(layers.Dense(1024, kernel_regularizer=regularizers.l1_l2( l2=0.0001),activation='relu'))

model.add(layers.Dropout(0.30))

model.add(layers.Dense(6, activation='softmax'))

Show a summary of the model. Check the number of trainable parameters

model.summary()](url)

from keras.preprocessing.image import ImageDataGenerator

datagen = ImageDataGenerator(

featurewise_center=False, # set input mean to 0 over the dataset

samplewise_center=False, # set each sample mean to 0

featurewise_std_normalization=False, # divide inputs by dataset std

samplewise_std_normalization=False, # divide each input by its std

zca_whitening=False, # apply ZCA whitening

zca_epsilon=1e-06, # epsilon for ZCA whitening

rotation_range=0, # randomly rotate images in 0 to 180 degrees

width_shift_range=0.1, # randomly shift images horizontally

height_shift_range=0.1, # randomly shift images vertically

shear_range=0., # set range for random shear

zoom_range=0., # set range for random zoom

channel_shift_range=0., # set range for random channel shifts

# set mode for filling points outside the input boundaries

fill_mode='nearest',

cval=0., # value used for fill_mode = "constant"

horizontal_flip=True, # randomly flip images

vertical_flip=False, # randomly flip images

# set rescaling factor (applied before any other transformation)

rescale=None,

# set function that will be applied on each input

preprocessing_function=None,

# image data format, either "channels_first" or "channels_last"

data_format=None,

# fraction of images reserved for validation (strictly between 0 and 1)

validation_split=0.0)

history = model.fit_generator(

datagen.flow(X_train,y_train),

steps_per_epoch=X_train.shape[0]/32 ,

epochs=50,

validation_data=(X_test,y_test),

validation_steps=X_test.shape[0]/32,

verbose=1)

try ordinal target? your targets are all very similar, not like cats vs dogs.

Can you elaborate pls

On Aug 11, 2019 21:23, "hwunlams" notifications@github.com wrote:

try ordinal target? your targets are all very similar, not like cat or dogs

—

You are receiving this because you commented.

Reply to this email directly, view it on GitHub

https://github.com/keras-team/keras/issues/8471?email_source=notifications&email_token=ADIW7N335WD35JO46VM3UKTQEAYYBA5CNFSM4EDRGBKKYY3PNVWWK3TUL52HS4DFVREXG43VMVBW63LNMVXHJKTDN5WW2ZLOORPWSZGOD4BDO4I#issuecomment-520238961,

or mute the thread

https://github.com/notifications/unsubscribe-auth/ADIW7NYTI6NI6RPERGH34BTQEAYYBANCNFSM4EDRGBKA

.

Most helpful comment

@sivagnanamn I actually concluded that in my case a CNN was not able to learn how to discriminate different sizes of the exact same object. I ended up training an object detector insted to first locate each opening and eye on the wrench. This I could use to compute the length for each wrench and thereby solving the problem. This worked perfectly!