Keras: Keras Progbar Logger is not working as expected

When using model.fit_generator, the progress-bar does not work as expected.

As noted on StackOverflow, the following verbosity levels exist (which should be explained in the documentation):

- 0: No logging

- 1: Displaying a progress-bar for each batch

- 2: Displaying one line per epoch

Verbosity-Modes 0 and 2 work as expected, but mode 1 (the default mode) creates the following output:

Epoch 2/100

64/50000 [..............................] - ETA: 323s - loss: 2.3547 - acc: 0.0625

128/50000 [..............................] - ETA: 181s - loss: 2.3319 - acc: 0.0781

192/50000 [..............................] - ETA: 133s - loss: 2.3233 - acc: 0.1042

256/50000 [..............................] - ETA: 110s - loss: 2.3243 - acc: 0.1055

384/50000 [..............................] - ETA: 86s - loss: 2.3267 - acc: 0.0990

512/50000 [..............................] - ETA: 73s - loss: 2.3213 - acc: 0.1094

640/50000 [..............................] - ETA: 66s - loss: 2.3184 - acc: 0.1109

704/50000 [..............................] - ETA: 63s - loss: 2.3188 - acc: 0.1080

832/50000 [..............................] - ETA: 59s - loss: 2.3177 - acc: 0.1058

960/50000 [..............................] - ETA: 56s - loss: 2.3176 - acc: 0.1031

1088/50000 [..............................] - ETA: 53s - loss: 2.3146 - acc: 0.1048

I already identified the problem in the source-code. It uses \b and \r both, which does not work as expected on Windows and Ubuntu 16.04 with PyCharm 2016.3.3. I was able to fix this issue, by simply removing line 257 (sys.stdout.write('\b' * prev_total_width)) because \r is a carriage return to the start of the line anyway. I've also tested this on Linux and removing line 257 does not break existing functionality.

Is there a reason, why this additional \b exists? Is it required on Mac? If not, I would file a pull-request to remove that line and fix the progress-bar output on Windows.

All 55 comments

I am getting a similar issue in jupyter notebooks right now

I've got this same issue on windows w/ jupyter, except on windows chrome it produces output like this:

https://i.imgur.com/Iko46QP.png

On firefox on linux the same issue is happening, but firefox does not render the "b" character

can confirm that deleting the line @apacha mentions fixes the issue

Tried this fix on the newest Keras 2.0.2 version and something seems to have changed, because if I remove line 257 now, the output resembles to the following

762/782 [============================>.] - ETA: 0s - loss: 1.4444 - acc: 0.4882769/782 [============================>.] - ETA: 0s - loss: 1.4417 - acc: 0.4894775/782 [============================>.] - ETA: 0s - loss: 1.4387 - acc: 0.4906Epoch 00000: val_acc improved from -inf to 0.57940, saving model to assignment_2.h5

782/782 [==============================] - 9s - loss: 1.4362 - acc: 0.4914 - val_loss: 1.2375 - val_acc: 0.5794

Epoch 2/100

778/782 [============================>.] - ETA: 0s - loss: 1.0792 - acc: 0.6277Epoch 00001: val_acc improved from 0.57940 to 0.63520, saving model to assignment_2.h5

782/782 [==============================] - 7s - loss: 1.0793 - acc: 0.6275 - val_loss: 1.0735 - val_acc: 0.6352

Epoch 3/100

757/782 [============================>.] - ETA: 0s - loss: 0.9665 - acc: 0.6693763/782 [============================>.] - ETA: 0s - loss: 0.9668 - acc: 0.6693769/782 [============================>.] - ETA: 0s - loss: 0.9663 - acc: 0.6693775/782 [============================>.] - ETA: 0s - loss: 0.9659 - acc: 0.6694Epoch 00002: val_acc improved from 0.63520 to 0.67660, saving model to assignment_2.h5

782/782 [==============================] - 7s - loss: 0.9665 - acc: 0.6692 - val_loss: 0.9512 - val_acc: 0.6766

Epoch 4/100

97/782 [==>...........................] - ETA: 5s - loss: 0.9059 - acc: 0.6862103/782 [==>...........................] - ETA: 5s - loss: 0.9078 - acc: 0.6849110/782 [===>..........................] - ETA: 5s - loss: 0.9109 - acc: 0.6844116/782 [===>..........................] - ETA: 5s - loss: 0.9073 - acc: 0.6856

which is even worse than printing one entry per line.

Update:

Hmm... getting this thing now in Keras 1.2.2 too. Check, if this new behavior might be a PyCharm bug.

Ok, this new behavior is a PyCharm bug. Removing line 257 still fixes the issue. I will create a pull-request.

Created a bug-report for the PyCharm bug: https://youtrack.jetbrains.com/issue/PY-23420

Having the same issue on the latest version

Unfortunately @fchollet refused my pull-request, so maybe someone else should submit it again or at least confirm that it fixes the issue. It does so on Windows and Linux, however, I haven't tested it on Mac.

Since Keras supports Python 2 and Python 3 (does it?) we have to stick with the sys.stdout.write-functionality. Otherwise I would have proposed that we switch to the Python 3 print("...", end="", flush=True)-functionality.

It seems when I commented out that line I get a keras error now and I can't compile keras.

When you comment out line 257 in general_utils.py you get a Keras error? That sounds strange, since Travis has been green on the pull-request.

Can you elaborate on the error?

Actually it works, that was a great fix!

This works in my old keras version 1.x , I monkey patched it to test it in linux.

The rejection of the PR now forces me to monkey-patch each installation of Keras... @fchollet it would be great if you could elaborate on the precise problem you've encountered, or if some other maintainer could take a look at this issue and/or the PR. A working progress-bar would be a nice thing and notice, that the problem is not restricted to Windows, but also applies to Linux, which you claim are 95% of all users.

is this problem solved? I still have the output mentioned above when verbose set to 1

Apparently not... My PR was rejected.

And the maintainers constantly ignore this for 5 months now. 😡

But you can monkey-patch your installation by searching generic_utils.py in your python installation folder and comment one line as described above.

As recommended by @juharris, tqdm actually looks very much like the thing needed here to get rid of the solution that is currently employed which does not work on every system.

Manged to fix the problem by making the terminal window bigger

@ramadawn Under which circumstances does resizing the terminal solve this issue? I guess you had a too small window, which caused a similar effect (which can apparently be solved by making it big enough that a complete line fits into the window). But notice that this issue is of much more fundamental nature and actually affects even very wide terminal windows.

After I cut out the line you suggested the problem persisted. I had the problem when the terminal was small. I increased the size and then reran my model and the problem went away. I haven't test resizing the window without your fix.

I haven't test resizing the window without your fix.

@ramadawn Then please do so. And afterwards, please confirm, that the above mentioned line does indeed fix the issue, so the maintainers don't get the impressions that it's just an issue of window size, but an actual issue in the code-base that needs to be fixed.

I'm seeing this behaviour with keras 2.0.8 in a jupyter notebook

Getting same thing with keras 2.0.8 in a notebook top

this problem is not resolved yet

My fix is the following:

Comment out lines 302 to 306 of generic_utils.py, and replace them with a print of the r character, like so:

#if self._dynamic_display:

# sys.stdout.write('\b' * prev_total_width)

# sys.stdout.write('\r')

#else:

# sys.stdout.write('\n')

sys.stdout.write('\r')

This produces the expected behavior for me: Running nohup myprogram.py & and then tail -f nohup.out shows the progress bar moving rightward at the bottom line of the terminal, instead of taking up 100s or 1000s of lines as described above.

My fix is the following:

comment line 299 in generic_ulils.py:

prev_total_width = self.total_width

#sys.stdout.write('\b' * prev_total_width)

sys.stdout.write('\r')

sys.stdout.flush()

I was getting a weird progress bar in pycharm in the keras version 2.1.3. But it is working fine with the keras that come along with tensorflow,the keras version inside it is version 2.0.8-tf.

The weird progress bar prints a new progress bar in a new line for each batch processed.

So I was getting 1000+ bars for a single epoch processed as I had 1000+ batches to be fed as input.

I changed the Line no:339:

from sys.stdout.write('\n') to sys.stdout.write('\r') and it fixed the issue.

Is this a valid fix that works for every platform? Should I send a Pull request?

@Vijayabhaskar96 : Please go ahead and file a pull request, if your solution works on all platforms, as this is major concern of @fchollet. See https://github.com/keras-team/keras/pull/6053, which was rejected, because my workaround was supposedly showing undesirable behavior on some platforms. When last checking, I also noticed, that the keras-tf and keras versions are no longer aligned. Please collect reliable proof from all different platforms, that this fixes the issue, before filing the PR.

I also saw this behavior in Keras 2.1.0 with Spyder 3.2.6 on Python 3.6

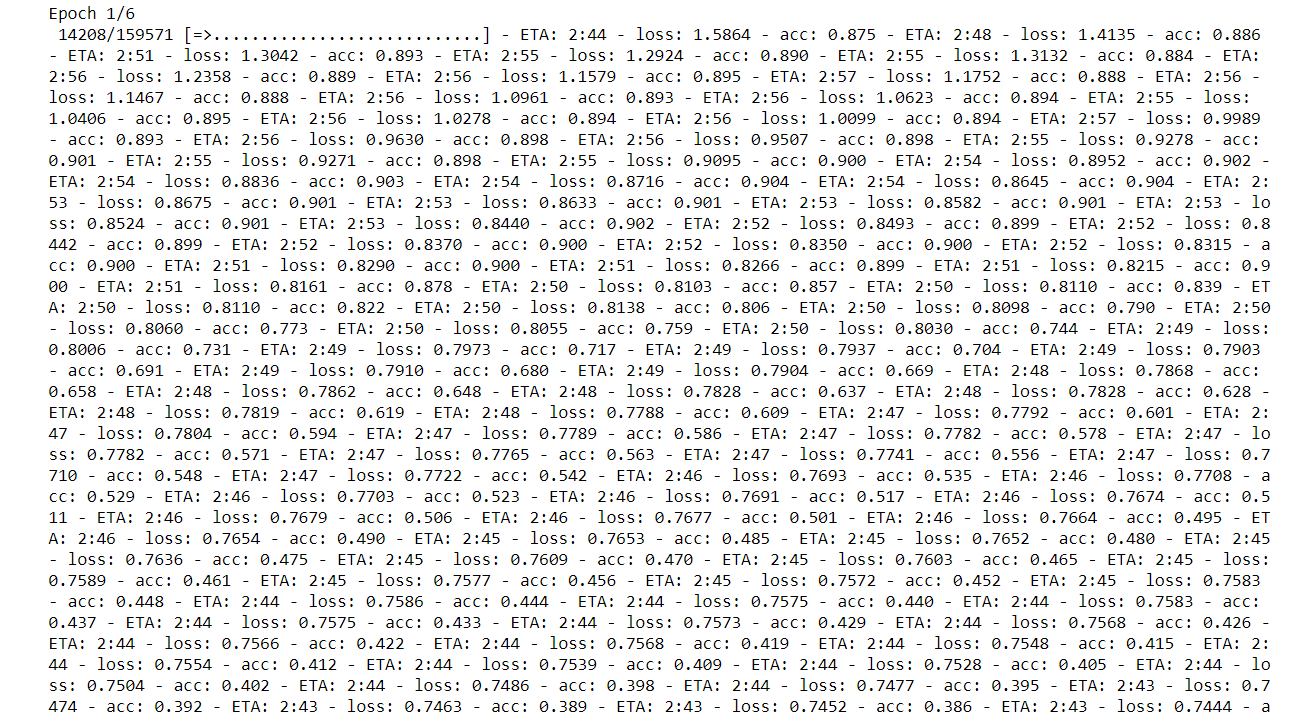

I don't know if this is related, but I was getting output like this from keras. I'm currently attempting to upgrade versions of keras cudatoolkit and tensorflow to see if that has any effect

@kotoroshinoto Seems like your output window was too small. That's likely the cause why you saw this strange output.

I'm using a jupyter notebook. Perhaps there is a setting that controls the output width.

plus if you look closely, the text is repeating before it wraps, usually its the auto-wrapping that causes this kind of thing, but that isn't happening.

Updating to Keras 2.1.2 corrected print out formatting issues for me.

I updated but I was still getting weird output.

I haven't tried it since my system restart yet.

@kotoroshinoto I had the exact same issue. After I removed tqdm import, everything works good again.

ah, well luckily there is a keras-tqdm package with a callback method I can use.

That has resolved my irritation for now , at least with the fit method

incidentally, the other methods that work with the GPU, such as predict, predict_proba, evaluate, etc, should probably get the ability to use callbacks so we can do things like TQDM with those

https://github.com/bstriner/keras-tqdm

^ in case you were wondering what I was talking about

@Vijayabhaskar96's solution worked for me

I got output very similar to @kotoroshinoto fitting models in a Jupyter notebook. I installed keras-tqdm, and it worked perfectly. In your fit function, set verbose=0, and callbacks=[TQDMNotebookCallback()].

@kotoroshinoto I had the exact same issue. After I removed tqdm import, everything works good again.

@QRiner This fixed my issue. I commented out import tqdm from tqdm and the progress bar behavior went back to normal.

I've also fixed the issue reported by @kotoroshinoto by removing tqdm import and restarting the kernel.

Also experienced this issue and fixed by not importing tqdm. Was interesting to see that the issue existed in jupyter notebooks and pycharm but not when executing the py file from the command prompt.

This is the problem of console of PyCharm and Jupyter notebooks, not the problem of Keras.

I don't know if this is related, but I was getting output like this from keras. I'm currently attempting to upgrade versions of keras cudatoolkit and tensorflow to see if that has any effect

I have updated Keras generic_utils.py as follows to make it work

# sys.stdout.write(bar) # <<<<<<<<<<<<<< Comment

......

sys.stdout.write(bar + info) # <<<<<<<<<<<<<< Add

# sys.stdout.write(info) # <<<<<<<<<<<<<< Comment

sys.stdout.flush()

``

I don't know why this is happening though... Odd.

I don't know if this is related, but I was getting output like this from keras. I'm currently attempting to upgrade versions of keras cudatoolkit and tensorflow to see if that has any effect

I have updated Keras generic_utils.py as follows to make it work

# sys.stdout.write(bar) # <<<<<<<<<<<<<< Comment

......

sys.stdout.write(bar + info) # <<<<<<<<<<<<<< Add

# sys.stdout.write(info) # <<<<<<<<<<<<<< Comment

sys.stdout.flush()

``

I don't know why this is happening though... Odd.

Thank you ibutenko, your solution fixed it for me as well!

I've gone so far as to uninstall my Anaconda and reinstalling it, and recreating my environments, and nothing fixed it for me. I previously used tqdm notebook in the file, but even though I've commented it out an no longer used it anywhere in the notebook, I still had the problem. I didn't even reinstall tqdm in the environment when I recreated it, and it still did the crazy print error.

Thanks a million!

The solution of @ibutenko did not work for me unfortunately, however, I found a fix which did not need any modification of sources: install ipykernel and import it in your code:

pip install ipykernel

Then

import ipykernel

In fact, in the Keras generic_utils.py file, one probematic line was (for me):

if self._dynamic_display:

sys.stdout.write('\b' * prev_total_width)

sys.stdout.write('\r')

else:

sys.stdout.write('\n')

And, the value self._dynamic_display was initiated such as:

self._dynamic_display = ((hasattr(sys.stdout, 'isatty') and

sys.stdout.isatty()) or

'ipykernel' in sys.

So, loading ipykerneladded it to sys.modules and fixed the problem for me.

pip install ipykernel

Then

import ipykernel

This is the best solution without needing to modify any code. The keras-tqdm package did not work for me in PyCharm either. Really unfortunate that this bug still exists (whether it is PyCharm or Keras fault).

It's not only problem of PyCharm. A had same issue with JupyterLab.

Replaced line 388 in keras/utils/generic_utils.py

sys.stdout.write('n') by sys.stdout.write('br')

Works for me on Win10 and keras version 2.3.1

This issue has been open for nearly three years now. Therefore, I conclude that Keras is dead and no longer being maintained. It has over 2700 open issues and no issues have been resolved by the maintainers for at least three months. It seems that @fchollet and Google have no interest in maintaining this framework anymore. Luckily, I have already moved on.

There are some bug-fixes described in this thread that show how to work around the problem, a pull-request that would have fixed the issue was rejected for no good reasons, therefore, I don't see why this issue should remain open.

R.I.P. Keras. Hello PyTorch.

I'm from tensorflow2.

This problem still remains in tf2.1, but I figured out how to solve this problem with this commit.

I'm not sure why this commit is not applied to tf2.1 even it's merged.

@soomiles I'm in the same boat, I made the PR so I wouldn't have to add that line to TF everytime I clean install it, however it's not being included in the latest TF releases lol. Not sure what's going on, I might open an issue

Edit: Opened issue here: https://github.com/tensorflow/tensorflow/issues/38883

I had the same problem as @kotoroshinoto, on Spyder 4.1.1 and TF 2.1.0.

epoch 1/5

1560/59563 [..............................] - ETA: 3:26 - train_loss: 0.0106 - ETA: 14:17 - train_loss: 0.0518 - ETA: 10:36 - train_loss: 0.0486 - ETA: 6:13 - train_loss: 0.0515 - ETA: 5:48 - train_loss: 0.0507 - ETA: 4:39 - train_loss: 0.0766 - ETA: 4:31 - train_loss: 0.0364 - ETA: 4:04 - train_loss: 0.0206 - ETA: 4:01 - train_loss: 0.0182 - ETA: 3:46 - train_loss: 0.0144 - ETA: 3:44 - train_loss: 0.0060 - ETA: 3:34 - train_loss: 0.0041 - ETA: 3:33 - train_loss: 0.0044 - ETA: 3:27 - train_loss: 0.0169

I solved the problem by increasing the Progbar update interval to ~0.5s.

tf.keras.utils.Progbar(target, stateful_metrics=metrics_names, interval=0.5)

For anyone still looking for a solution, I could only get this working correctly on Windows Git Bash by combining ipykernel (as suggested by @AntoninGAY) with PYTHONUNBUFFERED=1 environment variable (=python -u command line parameter) .

For who is getting this error in new keras or in google colab, try adding iPython:

import tensorflow as tf

import numpy as np

from tensorflow import keras

import IPython

%matplotlib inline

model = tf.keras.Sequential([

keras.layers.Dense(units = 1, input_shape=[1])

])

model.compile(

optimizer = 'adam',

loss = 'mean_squared_error'

)

model.summary()

xs = np.array([-1.0, 0.0, 1.0, 2.0, 3.0, 4.0], dtype=float)

ys = np.array([-.0, 1.0, 4.0, 7.0, 10.0, 13.0], dtype=float)

from tqdm.keras import TqdmCallback

model.fit(xs, ys, epochs=500, verbose=0, callbacks=[TqdmCallback(verbose=0)])

Most helpful comment

I don't know if this is related, but I was getting output like this from keras. I'm currently attempting to upgrade versions of keras cudatoolkit and tensorflow to see if that has any effect