Keras: Split train data into training and validation when using ImageDataGenerator and model.fit_generator

It's fine when I keep my training and validation images in separate folders.

But when I put them all into one folder and use ImageDataGenerator for augmentation, how do I split the training images into training and validation sets so that I can feed them into model.fit_generator?

```python
train_datagen = ImageDataGenerator(rescale=1./255,
                                   shear_range=0.2,
                                   zoom_range=0.2)

train_generator = train_datagen.flow_from_directory(
    train_data_dir,
    target_size=(img_width, img_height),
    batch_size=16,
    class_mode='binary')

model.fit_generator(
    train_generator,
    samples_per_epoch=??,
    nb_epoch=nb_epoch,
    validation_data=??,
    nb_val_samples=??)
```


In the future, it would be better to ask this type of question on StackOverflow. However, it is as simple as defining two ImageDataGenerators (one for training and one for validation):

Note: Validation and Train folders SHOULD be separate.

```python
train_datagen = ImageDataGenerator(rescale=1./255, shear_range=0.2, zoom_range=0.2)
val_datagen = ImageDataGenerator(rescale=1./255)

train_generator = train_datagen.flow_from_directory(
    train_data_dir,
    target_size=(img_width, img_height),
    batch_size=16,
    class_mode='binary')

val_generator = val_datagen.flow_from_directory(
    val_data_dir,
    target_size=(img_width, img_height),
    batch_size=16,
    class_mode='binary')

# steps_per_epoch should be (number of training images total / batch_size)
# validation_steps should be (number of validation images total / batch_size)
model.fit_generator(train_generator,
                    steps_per_epoch=fill_in,
                    validation_data=val_generator,
                    validation_steps=fill_in,
                    epochs=nb_epoch)
```
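The comments above say steps_per_epoch and validation_steps should be the number of images divided by batch_size. A minimal sketch of that arithmetic, assuming the usual flow_from_directory layout (one sub-folder per class) and rounding up so the last, smaller batch is still used once per epoch. count_images and steps_for are hypothetical helpers, and the extension list is an assumption based on the formats flow_from_directory typically accepts:

```python
import math
import os

# Assumed list of image extensions (hypothetical; adjust to your data)
IMAGE_EXTENSIONS = ('.png', '.jpg', '.jpeg', '.bmp', '.ppm', '.tif', '.tiff')


def count_images(data_dir):
    # Count image files in every class sub-folder of data_dir
    total = 0
    for class_name in os.listdir(data_dir):
        class_dir = os.path.join(data_dir, class_name)
        if not os.path.isdir(class_dir):
            continue  # only class sub-folders hold images
        total += sum(1 for f in os.listdir(class_dir)
                     if f.lower().endswith(IMAGE_EXTENSIONS))
    return total


def steps_for(num_images, batch_size):
    # Round up so the final partial batch is not dropped
    return math.ceil(num_images / batch_size)
```

For example, `steps_per_epoch=steps_for(count_images(train_data_dir), 16)` and `validation_steps=steps_for(count_images(val_data_dir), 16)`.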

@kentsommer I have asked about this in another issue, and so has someone else, but there was no reply; that's why I asked here.

You didn't understand my question. I know what you described.

My question is how to use model.fit_generator (with ImageDataGenerator) to split the training images into training and validation sets.

I have a single dataset of images from two classes for training. I just want to split it at runtime into training and validation sets while using ImageDataGenerator at the same time.

The validation_split option is not implemented in fit_generator. So how can we take a fraction of the training set as validation data on the fly while using ImageDataGenerator and flow?

@Euphemiasama

My apologies @hellorp1990 I missed your reply and so this is a much later response than I would have liked.

What you would like to do is not possible using flow. Without "knowing" your whole dataset (which flow obviously does not), there is no way to split fractionally.

However, all that being said... It would not be terribly difficult to write something that takes your single dataset folder (or whatever the dataset is stored in), and splits it into validation and training sets which can then be used as shown in my example above.

I realize this probably is not the answer you were looking for, but the Keras functions cannot (at least currently) perform what you would like to do.

It's very simple: split your dataset's file list when you read it (e.g., with glob):

```python
train_samples, validation_samples = train_test_split(Image_List, test_size=0.2)
```

Then feed the training samples to the training generator and the validation samples to the validation generator.

Here is a fuller example:

```python
import glob
import os

from sklearn.model_selection import train_test_split

rasterList = glob.glob(os.path.join(imDir, '*.tif'))

# Split the data into training and validation sets
train_samples, validation_samples = train_test_split(rasterList, test_size=0.2)
```

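To pick a random image in the training generator, draw an index into train_samples:

```python
process_line = np.random.randint(len(train_samples))
```

and in the validation generator, draw an index into validation_samples:

```python
process_line = np.random.randint(len(validation_samples))
```

(train_test_split shuffles the list, so ranges of indices into rasterList do not correspond to the two subsets.)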
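A minimal stdlib-only sketch of the same idea, in which each generator samples only from its own list so no validation image can appear in a training batch. random.Random stands in for train_test_split here, and split_file_list and random_batches are hypothetical names; the generator yields file paths, which you would then load and preprocess:

```python
import random


def split_file_list(paths, test_size=0.2, seed=None):
    # Shuffle a copy, then cut it into disjoint train/validation lists
    rng = random.Random(seed)
    shuffled = list(paths)
    rng.shuffle(shuffled)
    n_val = int(round(len(shuffled) * test_size))
    return shuffled[n_val:], shuffled[:n_val]


def random_batches(samples, batch_size, seed=None):
    # Endless generator drawing random paths from *its own* subset only
    rng = random.Random(seed)
    while True:
        yield [samples[rng.randrange(len(samples))] for _ in range(batch_size)]
```

For example, `train_samples, validation_samples = split_file_list(rasterList, 0.2, seed=0)`, then `next(random_batches(train_samples, 16))` returns 16 training paths.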

I came up with a full example; it does not use flow, as kentsommer pointed out, but it can be applied in the pre-processing phase to split your dataset into test/training sets (sort of like 'splitEachLabel' of MATLAB's ImageDatastores). It is a bit naive, and it can be greatly improved (I am new to Python), but it works.

Specifically, it finds each label (which in my case is encoded in the names of the jpg files), performs a simple permutation using NumPy, and then stores the results in train and test directories, which are organized as shown in this article.

```python
import glob
import os

import numpy as np


def arrange_dataset(directory, file_extension='*.jpg'):
    # `directory` should be the dataset directory; the label of each image
    # is encoded in the first four characters of its file name.
    if not os.path.exists(directory):
        return 0

    work_dir = os.path.abspath(directory)
    file_list = sorted(glob.glob(os.path.join(work_dir, file_extension)))
    train_dir = os.path.join(work_dir, 'train')
    test_dir = os.path.join(work_dir, 'test')
    os.makedirs(train_dir, exist_ok=True)
    os.makedirs(test_dir, exist_ok=True)

    # Group the file names by label
    files_per_label = {}
    for path in file_list:
        file = os.path.basename(path)
        files_per_label.setdefault(file[0:4], []).append(file)

    # Split each label separately and move its files into train/<label> and test/<label>
    for label, current_list in files_per_label.items():
        os.makedirs(os.path.join(train_dir, label), exist_ok=True)
        os.makedirs(os.path.join(test_dir, label), exist_ok=True)
        partial_train, partial_test = arrange_dataset_2(current_list)
        for train_file in partial_train:
            os.rename(os.path.join(work_dir, train_file), os.path.join(train_dir, label, train_file))
        for test_file in partial_test:
            os.rename(os.path.join(work_dir, test_file), os.path.join(test_dir, label, test_file))


def arrange_dataset_2(file_list, train_split=0.8):
    # Use smaller training fractions for very small classes so that
    # at least one image ends up in the test set
    if len(file_list) == 2:
        train_split = 0.5
    elif len(file_list) == 3:
        train_split = 0.66
    elif len(file_list) == 4:
        train_split = 0.75

    random_set = np.random.permutation(len(file_list))
    n_train = int(round(len(random_set) * train_split))
    train_images = [file_list[index] for index in random_set[:n_train]]
    test_images = [file_list[index] for index in random_set[n_train:]]
    return train_images, test_images
```

Again, it has a lot of spots where it can be improved, but I hope it helps as a starting point.

Thanks @anhelus. Here is an example of splitEachLabel from MATLAB's ImageDatastore:

```matlab
[trainingSet, testSet] = splitEachLabel(imds, 0.3, 'randomize');
```

It would be great if Keras had this feature.
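For readers coming from MATLAB, a rough Python analogue of splitEachLabel(imds, p, 'randomize') applied to a list of (path, label) pairs. split_each_label is a hypothetical helper, not a Keras API: it shuffles each label's items and puts a fraction p of them (rounded to the nearest integer here; MATLAB's exact rounding may differ) into the first set and the rest into the second:

```python
import random
from collections import defaultdict


def split_each_label(samples, fraction, seed=None):
    """Split (path, label) pairs so each label contributes `fraction` of its
    items to the first list and the remainder to the second."""
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append((path, label))

    first, second = [], []
    for label in sorted(by_label):      # deterministic label order
        items = by_label[label]
        rng.shuffle(items)              # the 'randomize' option
        n_first = int(round(len(items) * fraction))
        first.extend(items[:n_first])
        second.extend(items[n_first:])
    return first, second
```

Because the split is done per label, a rare class keeps the same proportion in both sets instead of possibly vanishing from one of them.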

This issue has been automatically marked as stale because it has not had recent activity. It will be closed after 30 days if no further activity occurs, but feel free to re-open a closed issue if needed.

I'd propose an additional optional parameter: flow_from_directory(..., select_file=None).

It would filter out some files, so we could initialize the train and test iterators from the same directory and even do n-fold cross-validation. Personally, I patched flow_from_directory and split my data like this:

```python
train_iter = ... flow_from_directory(..., select_file=lambda fname: hash(fname) % 10 != 0)
test_iter = ... flow_from_directory(..., select_file=lambda fname: hash(fname) % 10 == 0)
```

(Beware of hash in Python 3: string hashing is randomized per process unless PYTHONHASHSEED is set, so this split can change between runs.)

If this makes sense, I can make a PR.

Hi,

I have written a helper function that can help you do exactly this: preprocess any directory which contains subdirectories (that represent categories) into training and testing sets, based on the split percentage you want.

The code:

```python
import os
import shutil

import numpy as np


def split_dataset_into_test_and_train_sets(all_data_dir, training_data_dir, testing_data_dir, testing_data_pct):
    # Recreate testing and training directories
    if testing_data_dir.count('/') > 1:
        shutil.rmtree(testing_data_dir, ignore_errors=False)
        os.makedirs(testing_data_dir)
        print("Successfully cleaned directory " + testing_data_dir)
    else:
        print("Refusing to delete testing data directory " + testing_data_dir + " as we prevent you from doing stupid things!")

    if training_data_dir.count('/') > 1:
        shutil.rmtree(training_data_dir, ignore_errors=False)
        os.makedirs(training_data_dir)
        print("Successfully cleaned directory " + training_data_dir)
    else:
        print("Refusing to delete training data directory " + training_data_dir + " as we prevent you from doing stupid things!")

    num_training_files = 0
    num_testing_files = 0

    for subdir, dirs, files in os.walk(all_data_dir):
        category_name = os.path.basename(subdir)

        # Don't create a subdirectory for the root directory
        print(category_name + " vs " + os.path.basename(all_data_dir))
        if category_name == os.path.basename(all_data_dir):
            continue

        training_data_category_dir = training_data_dir + '/' + category_name
        testing_data_category_dir = testing_data_dir + '/' + category_name

        if not os.path.exists(training_data_category_dir):
            os.mkdir(training_data_category_dir)
        if not os.path.exists(testing_data_category_dir):
            os.mkdir(testing_data_category_dir)

        for file in files:
            input_file = os.path.join(subdir, file)
            if np.random.rand(1) < testing_data_pct:
                shutil.copy(input_file, testing_data_dir + '/' + category_name + '/' + file)
                num_testing_files += 1
            else:
                shutil.copy(input_file, training_data_dir + '/' + category_name + '/' + file)
                num_training_files += 1

    print("Processed " + str(num_training_files) + " training files.")
    print("Processed " + str(num_testing_files) + " testing files.")
```

This link is very useful.

In my project there are some requirements:

- Split on the list of files rather than on physical copies of the images, because the files are large. I also need to make multiple splits with different ratios for experiments.
- Get each image's index and file name when calling next(), so I can verify and analyze the data and the network manually.

I found these links very useful:

- Splitting on the list of files: https://stackoverflow.com/questions/42443936/keras-split-train-test-set-when-using-imagedatagenerator
- Returning the file name from next(): https://github.com/keras-team/keras/issues/3296

@daanraman I just want to ask: what does "testing_data_pct" signify in your code?

Percentage of data to use for testing, expressed as a fraction between 0 and 1 (e.g. 0.2 for 20%).
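Note that in the function above each file independently goes to the testing set with probability testing_data_pct, so the testing set's size is itself random: about testing_data_pct × N on average, but rarely exactly that. A small stdlib simulation contrasting this with shuffling the files and cutting at a fixed index; random.random stands in for np.random.rand, and both helper names are hypothetical:

```python
import math
import random


def bernoulli_split_size(n_files, pct, rng):
    # Per-file coin flip: each file goes to testing with probability pct,
    # so the total number of testing files varies from run to run
    return sum(1 for _ in range(n_files) if rng.random() < pct)


def exact_split_size(n_files, pct):
    # Shuffle-and-cut: the testing set always gets ceil(n_files * pct) files
    return int(math.ceil(n_files * pct))


rng = random.Random(0)
sizes = [bernoulli_split_size(100, 0.2, rng) for _ in range(1000)]
# Smallest, largest and mean testing-set size over 1000 simulated splits of 100 files
print(min(sizes), max(sizes), sum(sizes) / len(sizes))
```

If an exact split size matters (for example, to compare runs), shuffling and cutting at a fixed index is the safer choice.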


One more thing: "training_data_dir" and "testing_data_dir" are the folders we want to create. So in the all_dir folder I created two folders, Train and Test; for training_data_dir I passed the path of the Train folder, and for testing_data_dir the path of the Test folder. Is that what I am supposed to do?


Yes, sounds about right @anamika06jain !

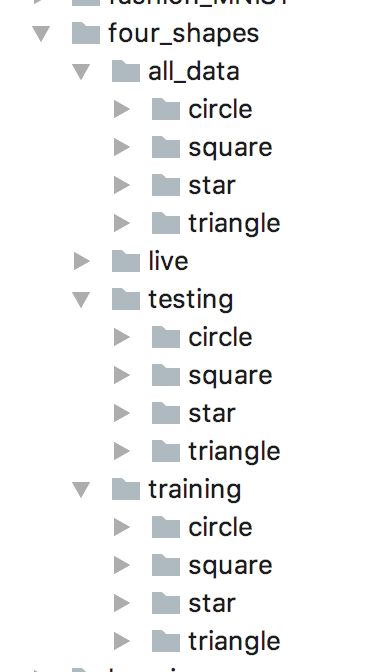

It's been a while since I used this code, but below a screenshot of a dataset I found in my projects:

Hope this helps,

Daan

@daanraman I have tried your code, but it just printed:

```
Refusing to delete testing data directory [testing_data_dir] as we prevent you from doing stupid things!
Refusing to delete testing data directory [training_data_dir] as we prevent you from doing stupid things!
Processed 0 training files.
Processed 0 testing files.
```

I put my files in this order:

- Data
  - All_data_dir
  - training_data_dir
  - testing_data_dir

and ran `split_dataset_into_test_and_train_sets("./all_data_dir", "./training_data_dir", "./testing_data_dir", testing_data_pct)`.

Hi @lucis13, I just checked an example I have here: you need to pass the full path to the folders, not a relative path, because the function only deletes and recreates a directory whose path contains more than one '/'. For example:

```python
import os

# pwd: the directory your script runs from (e.g. os.getcwd())
datapath = os.path.realpath(os.path.join(pwd, '../../../datasets/MNIST/'))
all_data_dir = os.path.join(datapath, "all_data")
training_data_dir = os.path.join(datapath, "training")
testing_data_dir = os.path.join(datapath, "testing")
live_data_dir = os.path.join(datapath, "live")
```
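A minimal sketch of resolving @lucis13's folders to absolute paths before calling the function. passes_guard is a hypothetical helper that repeats the function's `count('/') > 1` check so you can see why "./testing_data_dir" is refused; the sketch assumes the script runs from the directory containing "Data" and POSIX '/' separators (on Windows, os.path.join produces backslashes, which the check does not count):

```python
import os


def passes_guard(path):
    # The same test the split function applies before calling shutil.rmtree
    return path.count('/') > 1


# Resolve the folders to absolute paths (assumed layout: ./Data/<folders>)
data_root = os.path.abspath('Data')
all_data_dir = os.path.join(data_root, 'all_data_dir')
training_data_dir = os.path.join(data_root, 'training_data_dir')
testing_data_dir = os.path.join(data_root, 'testing_data_dir')

print(passes_guard('./testing_data_dir'))  # False: only one '/'
print(passes_guard(testing_data_dir))      # True on POSIX systems
```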

I just found this in the documentation given here.

You have to specify validation_split in the ImageDataGenerator and specify subset for each generator as shown below:

```python
from keras.preprocessing.image import ImageDataGenerator

data_generator = ImageDataGenerator(rescale=1./255, validation_split=0.33)

train_generator = data_generator.flow_from_directory(TRAINING_DIR, target_size=(IMAGE_SIZE, IMAGE_SIZE), shuffle=True, seed=13,
                                                     class_mode='categorical', batch_size=BATCH_SIZE, subset="training")

validation_generator = data_generator.flow_from_directory(TRAINING_DIR, target_size=(IMAGE_SIZE, IMAGE_SIZE), shuffle=True, seed=13,
                                                          class_mode='categorical', batch_size=BATCH_SIZE, subset="validation")
```

An important follow-up to @VedantMistry13's comment: this will ALSO augment the validation split.

@VedantMistry13 Does validation_split split randomly, or does it just take the last x% (33% in your case) of the dataset? Keras' validation_split in model.fit always takes the last x% of the samples.

@mburaksayici I think the first x% of the data will be set aside for validation. You can verify it here: https://github.com/keras-team/keras-preprocessing/blob/master/keras_preprocessing/image.py#L1409
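To make this concrete: as far as I can tell from keras_preprocessing's DirectoryIterator, the split is deterministic and done per class folder. The file names in each class are sorted, the first int(n × validation_split) of them go to subset='validation' and the rest to subset='training', regardless of shuffle and seed (those only affect batch order). A small stdlib sketch that mimics this rule so you can preview which files land where; subset_files is a hypothetical helper, not a Keras function, and it ignores details such as the file-extension whitelist and nested folders:

```python
import os


def subset_files(data_dir, validation_split, subset):
    """Approximate flow_from_directory's subset assignment: per class,
    sorted file names, first slice -> 'validation', rest -> 'training'."""
    selected = []
    for class_name in sorted(os.listdir(data_dir)):
        class_dir = os.path.join(data_dir, class_name)
        if not os.path.isdir(class_dir):
            continue
        files = sorted(os.listdir(class_dir))
        cut = int(validation_split * len(files))
        chosen = files[:cut] if subset == 'validation' else files[cut:]
        selected.extend(os.path.join(class_name, f) for f in chosen)
    return selected
```

You can compare its output with the `filenames` attribute of the iterators that flow_from_directory returns.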

@daanraman thanks a lot for this function. However, when testing it I get an error when it tries to copy a file onto itself; you might want to handle that (just pass on the exception, or use shutil.move).

Also, this code creates 'validation' and 'train' folders inside each 'train' and 'validation' folder. Here is my version with some corrections:

```python
import os
import shutil
from os.path import basename, exists, join

import numpy as np


def split_dataset_into_test_and_train_sets(all_data_dir, training_data_dir, testing_data_dir, testing_data_pct):
    # Recreate testing and training directories
    if testing_data_dir.count('/') > 1:
        shutil.rmtree(testing_data_dir, ignore_errors=False)
        os.makedirs(testing_data_dir)
        print("Successfully cleaned directory " + testing_data_dir)
    else:
        print(
            "Refusing to delete testing data directory " + testing_data_dir + " as we prevent you from doing stupid things!")

    if training_data_dir.count('/') > 1:
        shutil.rmtree(training_data_dir, ignore_errors=False)
        os.makedirs(training_data_dir)
        print("Successfully cleaned directory " + training_data_dir)
    else:
        print(
            "Refusing to delete training data directory " + training_data_dir + " as we prevent you from doing stupid things!")

    num_training_files = 0
    num_testing_files = 0

    for subdir, dirs, files in os.walk(all_data_dir):
        category_name = basename(subdir)

        # Don't create a subdirectory for the root directories
        print(category_name + " vs " + basename(all_data_dir))
        if category_name in map(basename, [all_data_dir, training_data_dir, testing_data_dir]):
            continue

        training_data_category_dir = join(training_data_dir, category_name)
        testing_data_category_dir = join(testing_data_dir, category_name)

        if not exists(training_data_category_dir):
            os.mkdir(training_data_category_dir)
        if not exists(testing_data_category_dir):
            os.mkdir(testing_data_category_dir)

        for file in files:
            input_file = os.path.join(subdir, file)
            if np.random.rand(1) <= testing_data_pct:
                shutil.move(input_file, join(testing_data_dir, category_name, file))
                num_testing_files += 1
            else:
                shutil.move(input_file, join(training_data_dir, category_name, file))
                num_training_files += 1

    print("Processed " + str(num_training_files) + " training files.")
    print("Processed " + str(num_testing_files) + " testing files.")
```

One thing I noticed about the above function is that using the split percentage as a per-file probability of going to validation doesn't produce a validation set of exactly the expected size. I've expanded the function to (I believe) properly give a validation set whose size is the dataset size times the split percentage, rounded up. It also lets the user choose whether to stratify, and set a random seed for reproducible results.

The function returns a dictionary such that dict['train'][class_name] is the number of samples of that class in the training group; likewise for dict['validation'][class_name].

```python
import math
import os
import random
import shutil
from collections import defaultdict
from os.path import abspath, join, basename, exists


def get_containing_folder_name(path):
    # dirname has inconsistent behavior when path has a trailing slash
    full_containing_path = abspath(join(path, os.pardir))
    return basename(full_containing_path)


# This code is adapted from https://github.com/keras-team/keras/issues/5862#issuecomment-356121051
def split_dataset_into_test_and_train_sets(all_data_dir, training_data_dir, testing_data_dir, testing_data_pct,
                                           stratify=True, seed=None):
    prev_state = None
    if seed is not None:  # also honour seed=0
        prev_state = random.getstate()
        random.seed(seed)

    # Recreate testing and training directories
    if testing_data_dir.count('/') > 1:
        shutil.rmtree(testing_data_dir, ignore_errors=False)
        os.makedirs(testing_data_dir)
        print("Successfully cleaned directory", testing_data_dir)
    else:
        print(testing_data_dir, "not empty, did not remove contents")

    if training_data_dir.count('/') > 1:
        shutil.rmtree(training_data_dir, ignore_errors=False)
        os.makedirs(training_data_dir)
        print("Successfully cleaned directory", training_data_dir)
    else:
        print(training_data_dir, "not empty, did not remove contents")

    files_per_class = defaultdict(list)
    for subdir, dirs, files in os.walk(all_data_dir):
        category_name = basename(subdir)
        # Don't create a subdirectory for the root directories
        if category_name in map(basename, [all_data_dir, training_data_dir, testing_data_dir]):
            continue
        # filtered past top-level dirs, now we're in a category dir
        files_per_class[category_name].extend([join(abspath(subdir), file) for file in files])

    # keep track of train/validation split for each category
    split_per_category = defaultdict(lambda: defaultdict(int))
    # create train/validation directories for each class
    class_directories_by_type = defaultdict(lambda: defaultdict(str))
    for category in files_per_class.keys():
        training_data_category_dir = join(training_data_dir, category)
        if not exists(training_data_category_dir):
            os.mkdir(training_data_category_dir)
        class_directories_by_type['train'][category] = training_data_category_dir

        testing_data_category_dir = join(testing_data_dir, category)
        if not exists(testing_data_category_dir):
            os.mkdir(testing_data_category_dir)
        class_directories_by_type['validation'][category] = testing_data_category_dir

    if stratify:
        for category, files in files_per_class.items():
            random.shuffle(files)
            # int() keeps this an integer index on Python 2, where math.ceil returns a float
            last_index = int(math.ceil(len(files) * testing_data_pct))
            for file in files[:last_index]:
                testing_data_category_dir = class_directories_by_type['validation'][category]
                shutil.move(file, join(testing_data_category_dir, basename(file)))
                split_per_category['validation'][category] += 1
            for file in files[last_index:]:
                training_data_category_dir = class_directories_by_type['train'][category]
                shutil.move(file, join(training_data_category_dir, basename(file)))
                split_per_category['train'][category] += 1
    else:  # not stratified, move a fraction of all files to validation
        files = []
        for file_list in files_per_class.values():
            files.extend(file_list)

        random.shuffle(files)
        last_index = int(math.ceil(len(files) * testing_data_pct))
        for file in files[:last_index]:
            category = get_containing_folder_name(file)
            directory = class_directories_by_type['validation'][category]
            shutil.move(file, join(directory, basename(file)))
            split_per_category['validation'][category] += 1
        for file in files[last_index:]:
            category = get_containing_folder_name(file)
            directory = class_directories_by_type['train'][category]
            shutil.move(file, join(directory, basename(file)))
            split_per_category['train'][category] += 1

    if seed is not None:
        random.setstate(prev_state)
    return split_per_category
```

@Krumpet Great! Thanks for the additions!

@Krumpet Thanks for the function. I had to change

```python
last_index = math.ceil(len(files) * testing_data_pct)
```

to

```python
last_index = int(math.ceil(len(files) * testing_data_pct))
```

to make it work, though. Might be a Python 2/3 issue?

Maybe this can be closed. Check the docs: https://keras.io/preprocessing/image/

The ImageDataGenerator class has:

validation_split: Float. Fraction of images reserved for validation (strictly between 0 and 1).

And the flow_from_directory method has:

subset: Subset of data ("training" or "validation") if validation_split is set in ImageDataGenerator.

Code example:

```python
datagen = ImageDataGenerator(
    rescale=1./255,
    validation_split=0.2)

train_generator = datagen.flow_from_directory(
    'data/folder', subset='training')

val_generator = datagen.flow_from_directory(
    'data/folder', subset='validation')

model.fit_generator(
    train_generator,
    steps_per_epoch=2000,
    epochs=50,
    validation_data=val_generator,
    validation_steps=800)
```

By the way, the subset trick also works with the flow method.

Apologies for bumping this thread, but I was under the impression that the validation set shouldn't be augmented. Was augmenting the validation set a conscious choice, or is it simply a limitation of ImageDataGenerator? If it was a conscious choice, wouldn't using the validation generator with data augmentation be bad practice?


I think you are right. To avoid augmenting the validation set, I wonder if something like this would work:

```python
from keras.preprocessing.image import ImageDataGenerator

# preprocess_input comes from whichever keras.applications model you use
datagen_train = ImageDataGenerator(
    horizontal_flip=True,
    preprocessing_function=preprocess_input,
    validation_split=0.1
)
datagen_val = ImageDataGenerator(
    preprocessing_function=preprocess_input,
    validation_split=0.1
)

train_generator = datagen_train.flow_from_directory(
    directory=train_dir,
    subset='training',
    shuffle=False
)
val_generator = datagen_val.flow_from_directory(
    directory=train_dir,
    subset='validation',
    shuffle=False
)
```

So essentially we split the dataset into train and validation twice but we grab the train piece for train_generator and the validation piece for val_generator. Or am I wrong?

@bitnahian It is fine: the validation generator only normalizes the images. The downside of this approach is that it is slow; fitting on the images performs really badly. I would prefer to use another generator, such as the one in GapCV.

You can process the images with this line:

```python
images = Images('data_set_name', data_set_folder, config=['resize=({},{})'.format(img_height, img_width), 'store', 'stream'])
```

where:

- data_set: the final data_set.h5 file name ('data_set_name' in the line above)
- data_set_folder: the folder where your images are stored in sub-folders, like:

```
sub_folder_01/
    img_01
    ...
sub_folder_N/
    img_01
    ...
```

That line will create an h5 file with your images already processed. Then you can load that file whenever you want with:

```python
images = Images(config=['stream'])
images.load(data_set)
```

Then you can split the data set into train and test sets and set up the generator:

```python
# split data set
images.split = 0.2
X_test, Y_test = images.test

# generator
images.minibatch = batch_size
gap_generator = images.minibatch

total_train_images = images.count - len(X_test)
n_classes = len(images.classes)
```

Use the generator as usual:

```python
model.fit_generator(
    generator=gap_generator,
    validation_data=(X_test, Y_test),
    steps_per_epoch=total_train_images // batch_size,
    epochs=nb_epochs,
    verbose=1
)
```

Last week I tested the performance of flow_from_directory against GapCV's minibatch: flow_from_directory took 1 h 12 min to complete a full 50-epoch training run, versus 2 min 40 s for minibatch on the same training.

Trying gapcv-1.0.0 with Python 3.6, I get:

```
TypeError: __init__() got an unexpected keyword argument 'dataset'
```

already on the very first line:

```python
images = Images(dataset=data, labels=labels)
```

@Stancoo Hi, thank you for trying GapCV. Please try:

```python
images = Images(images=data, labels=labels)
```

I'll update the README, since we renamed data_set to images.

Let me know if this works for you and if you have more questions. I'll be happy to help. :)

@Stancoo How can we apply image augmentation with ImageDataGenerator after the steps described in the GapCV comment?

@Stancoo

I am using grayscale images via the grey value in config, which gives images of shape (batch_size, height, width), but I want to add another dimension so they have shape (batch_size, height, width, 1), and I can't find any option for this in GapCV.
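Independently of GapCV, a trailing channel axis can be added to a NumPy batch of shape (batch_size, height, width) with np.expand_dims. A minimal sketch, assuming the batch is already a NumPy array; add_channel_axis is a hypothetical name:

```python
import numpy as np


def add_channel_axis(batch):
    # (batch_size, height, width) -> (batch_size, height, width, 1)
    return np.expand_dims(batch, axis=-1)


gray = np.zeros((8, 64, 64), dtype=np.float32)
print(add_channel_axis(gray).shape)  # (8, 64, 64, 1)
```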

@Divyanshupy You can post the issues you are having, with an example, here: https://github.com/gapml/CV/issues

I can help you there. Thanks!

Dear all, I am getting this error:

```
File "
    runfile('C:/Transfer/Research Things/PostDoc/Coding/Paper1/tensorflow_keras_cnn_model.py', wdir='C:/Transfer/Research Things/PostDoc/Coding/Paper1')
File "C:\Users\Oyelade\Anaconda3\lib\site-packages\spyder_kernels\customize\spydercustomize.py", line 827, in runfile
    execfile(filename, namespace)
File "C:\Users\Oyelade\Anaconda3\lib\site-packages\spyder_kernels\customize\spydercustomize.py", line 110, in execfile
    exec(compile(f.read(), filename, 'exec'), namespace)
File "C:/Transfer/Research Things/PostDoc/Coding/Paper1/tensorflow_keras_cnn_model.py", line 186, in
    workers=0
File "C:\Users\Oyelade\Anaconda3\lib\site-packages\tensorflow\python\keras\engine\training.py", line 1426, in fit_generator
    initial_epoch=initial_epoch)
File "C:\Users\Oyelade\Anaconda3\lib\site-packages\tensorflow\python\keras\engine\training_generator.py", line 184, in model_iteration
    batch_size = int(nest.flatten(batch_data)[0].shape[0])
IndexError: tuple index out of range
```

My fit_generator call is shown below:

```python
train_dataset=train_fn_inputs(batch_size, aug)
val_data=validation_fn_inputs(batch_size, aug)
total_records = 44712
val_records = 11178
steps_per_epoch=int(total_records // batch_size)
hist=model.fit_generator(aug.flow(X_def, y_def, batch_size=batch_size),
                         #get_batches(X_def, y_def, batch_size),
                         #train_dataset,
                         steps_per_epoch=steps_per_epoch, #(training_df.shape[0])//batchsize,
                         epochs=5,
                         verbose = 1,
                         #callbacks=[early_stopping],
                         validation_data=val_data,
                         validation_steps=val_records//batch_size,
                         workers=0
                         )
```

And my generator is shown below:

```python
def train_fn_inputs(bs, aug=None):
    aug=None
    train_files, total_records = get_training_data_old()
    steps_per_epoch = int(total_records / batch_size)

    # Create folder to store extracted images
    folder_path = './ExtractedImages'
    shutil.rmtree(folder_path, ignore_errors = True)
    os.mkdir(folder_path)

    raw_dataset = tf.data.TFRecordDataset(train_files) #.repeat()
    parsed_image_dataset = raw_dataset.map(_parse_image_function).repeat().shuffle(buffer_size=buf_size).batch(batch_size).make_initializable_iterator()
    image, label = parsed_image_dataset.get_next()

    while True:
        # if the data augmentation object is not None, apply it
        if aug is not None:
            (images, labels) = next(aug.flow(image, label, batch_size=bs))
        yield (np.array(image), np.array(label))
```
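A hedged reading of the generator above: it appears to yield np.array(image) built from an un-evaluated TF 1.x tensor, which may produce a 0-dimensional array whose empty shape tuple would explain the IndexError at `shape[0]`; in addition, `aug=None` overwrites the argument, and the augmented `images`/`labels` are never yielded. A framework-free sketch of the intended pattern, where next_batch and augment are hypothetical callables standing in for an already-evaluated dataset batch and the augmentation step:

```python
import numpy as np


def batch_generator(next_batch, augment=None):
    """Yield (images, labels) NumPy batches that always have a leading batch axis.

    next_batch: hypothetical callable returning one already-evaluated batch
                as (images, labels), e.g. NumPy arrays
    augment:    optional hypothetical callable (images, labels) -> (images, labels)
    """
    while True:
        images, labels = next_batch()
        if augment is not None:      # respect the caller's argument instead of resetting it
            images, labels = augment(images, labels)
        images, labels = np.asarray(images), np.asarray(labels)
        # Keras reads the batch size from shape[0], so fail early with a clear message
        if images.ndim == 0 or labels.ndim == 0 or images.shape[0] != labels.shape[0]:
            raise ValueError('expected arrays with a shared leading batch dimension, '
                             'got shapes %s and %s' % (images.shape, labels.shape))
        yield images, labels         # yield the augmented batch, not the raw one
```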

Regarding the earlier suggestion to avoid augmenting the validation set by using two ImageDataGenerators with the same validation_split (with augmentation only in the one used for subset='training'): I have the same question. Is this correct?

Here is my approach to splitting the data into training and validation sets while applying augmentation only to the training data. The seed makes sure the data is randomized in the same way.

```python
import tensorflow as tf

datagen_train = tf.keras.preprocessing.image.ImageDataGenerator(
    rescale=1./255,
    validation_split=0.2,
    rotation_range=20,
    width_shift_range=0.2,
    height_shift_range=0.2,
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True,
    vertical_flip=True,
    fill_mode='nearest')

datagen_val = tf.keras.preprocessing.image.ImageDataGenerator(
    rescale=1./255,
    validation_split=0.2)

train_generator = datagen_train.flow_from_directory(
    data_root,
    seed=42,
    target_size=(IMAGE_SIZE, IMAGE_SIZE),
    batch_size=BATCH_SIZE,
    shuffle=True,
    subset='training')

val_generator = datagen_val.flow_from_directory(
    data_root,
    seed=42,
    target_size=(IMAGE_SIZE, IMAGE_SIZE),
    batch_size=BATCH_SIZE,
    shuffle=True,
    subset='validation')
```


This looks good. Have you done any tests to make sure there is no leakage or overlap?

The seed makes sure that the data is randomized in the same order. Moreover, I got similar validation accuracy with this method and having different folders for train/val data.
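One way to answer the leakage question directly is to compare the file lists of the two iterators: the DirectoryIterator objects returned by flow_from_directory expose a `filenames` attribute (paths relative to the directory). A minimal sketch of the comparison, written against plain lists so it runs without Keras; check_no_overlap is a hypothetical helper:

```python
def check_no_overlap(train_files, val_files):
    """Return the file names present in both subsets (empty set = no leakage)."""
    return set(train_files) & set(val_files)


# With Keras this would be, e.g.:
# leaked = check_no_overlap(train_generator.filenames, val_generator.filenames)
leaked = check_no_overlap(['cat/1.jpg', 'cat/2.jpg'], ['cat/0.jpg'])
print(leaked)  # set()
```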
