Keras: Add class_weight for validation and evaluation

AFAICT, class_weight is only used for the training loss. It would be useful to also apply it to validation (when using validation_data) and evaluation.


This issue has been automatically marked as stale because it has not had recent activity. It will be closed after 30 days if no further activity occurs, but feel free to re-open a closed issue if needed.

@probot-stale[bot], please stop staling my bugs. This is indeed still an issue.

This issue has been automatically marked as stale because it has not had recent activity. It will be closed after 30 days if no further activity occurs, but feel free to re-open a closed issue if needed.

Not stale

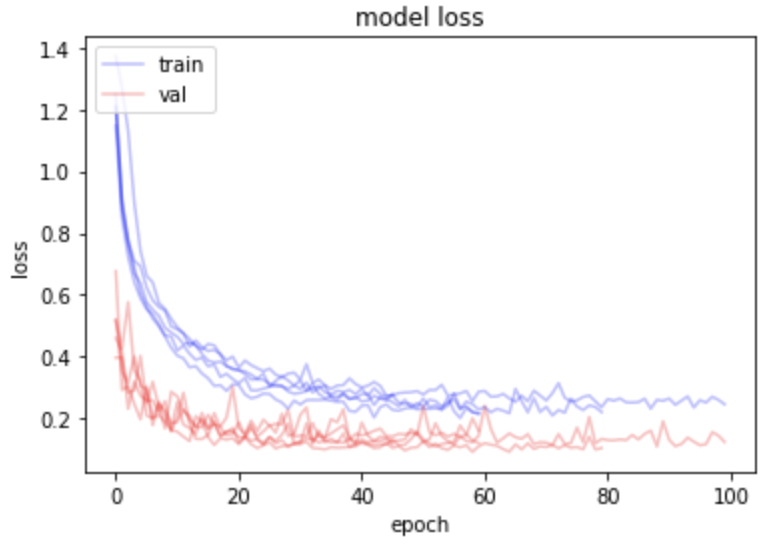

Would a PR fixing this be accepted? Or is this the intended design? It results in strange-looking loss history, due to the training loss being greater than the validation loss (here the class_weights are all 2.0):
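To make the effect concrete, here is a minimal NumPy sketch. It assumes the reported loss is simply the mean of the per-sample losses multiplied by their weights, which is a simplification of what Keras does internally; the function name and the loss values are made up for illustration.

```python
import numpy as np

def weighted_mean_loss(per_sample_loss, weights):
    """Mean of the per-sample losses after scaling each one by its weight."""
    per_sample_loss = np.asarray(per_sample_loss, dtype=float)
    weights = np.asarray(weights, dtype=float)
    return float(np.mean(per_sample_loss * weights))

# Made-up per-sample losses, identical for "training" and "validation".
losses = [0.2, 0.4, 0.6]

# Training applies class weights (all 2.0 here); validation applies none.
train_loss = weighted_mean_loss(losses, [2.0, 2.0, 2.0])
val_loss = weighted_mean_loss(losses, [1.0, 1.0, 1.0])

print(train_loss, val_loss)
```

Under that assumption, identical predictions give a training loss twice the validation loss, so the two curves are not on the same scale.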

Hi, I am also hitting this issue when using fit_generator and weighted_metrics. Namely, during testing, weighted_metrics are evaluated without class weights, contrary to the documentation, which says:

weighted_metrics: List of metrics to be evaluated and weighted by sample_weight or class_weight during training and testing.

which looks like a bug to me.

The issue stems from the fact that test_on_batch (and the other evaluation functions) is completely unaware of the class_weight parameter. E.g. test_on_batch calculates weights like this:

    x, y, sample_weights = self._standardize_user_data(
        x, y,
        sample_weight=sample_weight)

The solution might be trivial: add a class_weight parameter to test_on_batch, pass its value through from fit_generator, and calculate the weights as

    x, y, sample_weights = self._standardize_user_data(
        x, y,
        sample_weight=sample_weight,
        class_weight=class_weight)

I can send a PR fixing this issue if the proposed solution seems reasonable?
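Until something like that lands, one possible workaround is to expand class_weight into per-sample weights yourself and pass them through the existing sample_weight paths. A minimal sketch, assuming integer or one-hot labels; the helper name class_weight_to_sample_weight is hypothetical and not part of Keras, and the Keras calls are shown only as comments because no model is built here:

```python
import numpy as np

def class_weight_to_sample_weight(y, class_weight):
    """Map each label in y to its class weight.

    y: integer labels of shape (n,) or (n, 1), or one-hot labels of shape (n, k).
    class_weight: dict mapping class index -> weight; unlisted classes get 1.0.
    """
    y = np.asarray(y)
    if y.ndim == 2 and y.shape[1] > 1:
        labels = y.argmax(axis=1)   # one-hot -> class index
    else:
        labels = y.reshape(-1)      # already class indices
    return np.array([class_weight.get(int(c), 1.0) for c in labels],
                    dtype="float32")

y_val = np.array([0, 1, 1, 0, 1])
class_weight = {0: 1.0, 1: 3.0}
w_val = class_weight_to_sample_weight(y_val, class_weight)

# With a compiled model and real data, the weights could then be passed as:
#   model.fit(x, y, class_weight=class_weight,
#             validation_data=(x_val, y_val, w_val))
#   model.evaluate(x_val, y_val, sample_weight=w_val)
```

This keeps training on class_weight and only adds explicit weights for validation and evaluation, so no change to Keras itself is needed.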

I have the same issue.

But I think your solution is not enough: in my case the class imbalance differs between the training and validation sets. Thus it would be very useful to be able to give fit_generator two different class_weight dictionaries, one for the training set and one for the validation set, e.g. class_weight_train and class_weight_val.

If class_weight_val is not given, the default should be class_weight_val=class_weight_train.

A workaround is of course to adjust the validation and/or training generator so that it outputs samples from under-represented classes multiple times to balance everything.
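Another way to get separate weights per split, without duplicating samples, is to wrap each generator so that it yields (x, y, sample_weight) triples, which fit_generator accepts for both the training generator and validation_data. A rough sketch, assuming integer labels; with_class_weights and toy_batches are hypothetical names, and a real Keras generator would have to loop indefinitely, unlike the finite stand-in below:

```python
import numpy as np

def with_class_weights(batches, class_weight):
    """Wrap an iterable of (x, y) batches so it yields (x, y, sample_weight)."""
    for x, y in batches:
        labels = np.asarray(y).reshape(-1)  # assumes integer class labels
        w = np.array([class_weight.get(int(c), 1.0) for c in labels],
                     dtype="float32")
        yield x, y, w

def toy_batches():
    # Finite stand-in for a real data generator.
    yield np.zeros((3, 2)), np.array([0, 0, 1])
    yield np.ones((2, 2)), np.array([1, 1])

class_weight_train = {0: 1.0, 1: 2.0}
class_weight_val = {0: 1.0, 1: 5.0}

val_batches = list(with_class_weights(toy_batches(), class_weight_val))

# With real, endlessly looping generators this could be used as:
#   model.fit_generator(
#       with_class_weights(train_gen, class_weight_train),
#       validation_data=with_class_weights(val_gen, class_weight_val),
#       ...)
```

Because the weights travel with each batch, the class_weight argument of fit_generator is not needed in this setup.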

I see that this hasn't been added to Keras - has anyone found a workaround solution for this?

Is there any solution please to add class_weight to the evaluate() method?

I need to mask missing values in my multitask prediction target.

This works with fit(class_weight) but not with fit_generator or fit(validation_data=(...)).

Is there a quick solution to this problem? I would need it very soon.
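For the masking use case, a possible stopgap is to build zero/one sample weights from the missing-value mask and pass them explicitly for validation and evaluation. The sketch below assumes missing targets are stored as NaN and that the Keras version in use accepts a dict of per-output sample weights; mask_missing_targets and the output names task_a and task_b are hypothetical:

```python
import numpy as np

def mask_missing_targets(y):
    """Return (y_filled, weights) for a 1-D target array with NaN as 'missing'.

    Missing entries get weight 0.0 and are filled with 0.0, so the loss never
    sees a NaN (0 * NaN would still be NaN).
    """
    y = np.asarray(y, dtype="float32")
    missing = np.isnan(y)
    weights = (~missing).astype("float32")
    y_filled = np.where(missing, 0.0, y).astype("float32")
    return y_filled, weights

y_task_a = [1.0, np.nan, 0.0]
y_task_b = [np.nan, np.nan, 1.0]

ya, wa = mask_missing_targets(y_task_a)
yb, wb = mask_missing_targets(y_task_b)

# For a two-output model whose outputs are named "task_a" and "task_b":
#   model.evaluate(x_val, {"task_a": ya, "task_b": yb},
#                  sample_weight={"task_a": wa, "task_b": wb})
```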

I also encountered this issue, which looks like a bug to me. For all use cases I can envision, if I decide to use class_weights, I would like them to be applied to both the training and validation loss. Am I missing something?

For everyone else encountering this and coming across this thread, check out the discussion at #8591 for an explanation of why this was not changed.
