Keras: "Merge" versus "merge", what is the difference?

I came back to Keras after almost three months and saw a lot of change. I was trying to update my previously working network to Keras 1.1, when I encountered the following issue.

My model compiles with merge but not with Merge. I eventually worked this out by trial and error and got my code running. My model is a Siamese network with two channels, each ending in a 256-D Dense layer (call them C1 and C2). I then compute C1-C2, add another Dense layer on top of the C1-C2 output, and then the loss. I previously used "Siamese" and "add_shared_layer", but they no longer exist, so I had to switch to the functional API. My code is as follows:

def create_base_seqNetwork(input_dim):
    seq = Sequential()
    seq.add(Convolution2D(64, 11, 11, border_mode='same', trainable=True, init='he_normal', activation='relu',
                          W_regularizer=l2(regularizer), subsample=(2, 2), input_shape=input_dim))
    seq.add(MaxPooling2D(pool_size=(2, 2)))
    seq.add(Convolution2D(64, 5, 5, border_mode='same', trainable=True, init='he_normal', activation='relu',
                          W_regularizer=l2(regularizer)))
    seq.add(MaxPooling2D(pool_size=(2, 2)))
    seq.add(Convolution2D(64, 3, 3, border_mode='same', trainable=True, init='he_normal', activation='relu',
                          W_regularizer=l2(regularizer)))
    seq.add(Convolution2D(64, 3, 3, border_mode='same', trainable=True, init='he_normal', activation='relu',
                          W_regularizer=l2(regularizer)))
    seq.add(Flatten())
    seq.add(Dropout(0.5))
    seq.add(Dense(1000, trainable=True, init='he_normal', activation='relu', W_regularizer=l2(regularizer)))
    seq.add(Dropout(0.5))
    seq.add(Dense(256, trainable=True, init='he_normal', activation='linear', W_regularizer=l2(regularizer)))
    seq.add(Dropout(0.5))
    return seq

input_dim = (imgChannels,imgHeight,imgWidth)

base_network = create_base_seqNetwork(input_dim)

input_a = Input(shape=input_dim)

input_b = Input(shape=input_dim)

# because we re-use the same instance `base_network`,

# the weights of the network

# will be shared across the two branches

processed_a = base_network(input_a)

processed_b = base_network(input_b)

processed_b = Lambda(lambda x:-x)(processed_b)

############################################################

# replace merge by Merge and the network won't compile

model = merge([processed_a, processed_b], mode='sum')

############################################################

model = Dense(512,init='he_normal',activation='relu',trainable=True)(model)

model = Dropout(0.5)(model)

model = Dense(1,init='he_normal',activation='linear',trainable=True)(model)

model = Model(input=[input_a, input_b], output=model)

printing("Built the model")

sgd = SGD(lr=LearningRate, decay=1e-6, momentum=0.9, nesterov=True)

model.compile(loss=someLoss, optimizer=sgd)

printing("Compilation finished")

So, as the question title says, what is the difference between "merge" and "Merge"? I think "Merge" is used when you want to sum/concat/mul two Sequential() models, while "merge" is used when you are working with the functional API. However, this is not written anywhere in the documentation. Additionally, the Sequential documentation has a section called the "Merge" layer, but when I navigate to the functional API example, there is "merge" everywhere. This is a subtle difference that is not easy to notice unless it is stated explicitly.

Additionally, you can import "Merge/merge" in at least four different ways:

from keras.layers import merge  # works
from keras.layers import Merge  # doesn't work
from keras.engine.topology import merge  # works
from keras.engine.topology import Merge  # doesn't work

So are the two "merge" imports and the two "Merge" imports any different from each other?

If "merge" and "Merge" are indeed substantially different, apart from making this clear, we will also need to change two LSTM diagrams, one in "Guide to Sequential Model" and the other one in the "Guide to Functional API". They both mention, "merge_1(Merge)". So it is natural to think there is only one such layer, and in my case, that was "Merge" for a long time.
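For readers following the code in the question: C1 - C2 is computed there by negating the second branch with a Lambda and then merging with mode='sum'. Below is a minimal plain-Python sketch of that arithmetic, with made-up vectors standing in for the two 256-D outputs. The helper names negate and merge_sum are hypothetical and are not Keras functions.

```python
def negate(vec):
    """Stand-in for Lambda(lambda x: -x): flip the sign of every element."""
    return [-v for v in vec]


def merge_sum(vectors):
    """Stand-in for a 'sum' merge: element-wise sum over equally long vectors."""
    return [sum(vals) for vals in zip(*vectors)]


# Made-up branch outputs (the real ones would be 256-D).
c1 = [0.5, 2.0, -1.0]
c2 = [0.25, 1.0, 3.0]

diff_via_sum = merge_sum([c1, negate(c2)])
diff_direct = [a - b for a, b in zip(c1, c2)]
print(diff_via_sum, diff_direct)
```

Both routes give the same vector, which is why the negate-then-sum trick works as a subtraction.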


- Merge is a layer.

- Merge takes layers as input

- Merge is usually used with Sequential models

- merge is a function.

- merge takes tensors as input.

- merge is a wrapper around Merge.

- merge is used in Functional API

Using Merge:

left = Sequential()

left.add(...)

left.add(...)

right = Sequential()

right.add(...)

right.add(...)

model = Sequential()

model.add(Merge([left, right]))

model.add(...)

using merge:

a = Input((10,))

b = Dense(10)(a)

c = Dense(10)(a)

d = merge([b, c])

model = Model(a, d)
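The "layer object versus wrapper function" distinction listed above can be sketched without Keras at all. ToyMerge and toy_merge below are hypothetical stand-ins that only mimic the pattern; one deliberate simplification is that the real Keras 1 Merge received its input layers in the constructor, whereas this toy is called on plain lists.

```python
class ToyMerge:
    """Layer-like object: configured once, then called on a list of inputs."""

    def __init__(self, mode="sum"):
        if mode not in ("sum", "mul", "concat"):
            raise ValueError("unsupported mode: %r" % mode)
        self.mode = mode

    def __call__(self, inputs):
        if self.mode == "concat":
            # Join all vectors end to end.
            return [v for vec in inputs for v in vec]
        result = list(inputs[0])
        for vec in inputs[1:]:
            if self.mode == "sum":
                result = [r + v for r, v in zip(result, vec)]
            else:  # "mul"
                result = [r * v for r, v in zip(result, vec)]
        return result


def toy_merge(inputs, mode="sum"):
    """Function form: builds a ToyMerge and immediately applies it."""
    return ToyMerge(mode=mode)(inputs)


b = [1.0, 2.0, 3.0]
c = [10.0, 20.0, 30.0]
summed = toy_merge([b, c])                      # function style
concatenated = ToyMerge(mode="concat")([b, c])  # object style
print(summed)
print(concatenated)
```

The function adds nothing beyond convenience: it creates the object and calls it in one step, which is the sense in which merge is "a wrapper around Merge".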

@farizrahman4u Ok, I understood. I think this should be put somewhere in documentation. I am closing this issue now.

I think so - it is quite confusing, should be written very clearly! @farizrahman4u's comment looks very nice and clear :)

@farizrahman4u Using Merge() with Keras 2.0 throws a deprecation warning, though it still works. The Keras 2.0 docs now seem to have only keras.layers.merge. Any idea how to update Merge usage in Sequential models in Keras 2.0?

New API has explicit sum/concat/multiply layers (https://keras.io/layers/merge/).

@keunwoochoi @farizrahman4u So now the new Keras API takes only tensors as inputs? What about if we have two sequential models and would like to sum them? Previously we would use Merge, but now it seems that we can give only tensors and use only merge.

If there are two Sequential models and you want to merge them, isn't that already a structure that requires the functional Model rather than Sequential? You can treat those two Sequential models as layers and use their output tensors as the inputs of a merge layer.


@keunwoochoi Previously you didn't have to use Functional graph, if I remember correctly. See @farizrahman4u answer above. I will copy the relevant snippet from that answer here:

left = Sequential()

left.add(...)

left.add(...)

right = Sequential()

right.add(...)

right.add(...)

model = Sequential()

model.add(Merge([left, right]))

model.add(...)

So I don't think we can assume outputs of left and right as tensors and use it in merge. I actually like this change as it removes the confusion between Merge and merge.

Oh, I see, I was writing via email and missed it.

I actually like this change as it removes the confusion between Merge and merge.

Absolutely agreed.


@keunwoochoi Thanks for that. Using the functional API was indeed the way to go.

Can you please confirm that the Keras 1.2.2 code

from keras.engine import merge

m = merge([init, x], mode='sum')

is equivalent to this Keras 2.0.2 code:

from keras.layers import add

m = add([init, x])

@apacha Yes, they are equivalent.

Where is Merge in keras 2?

The Merge layer is deprecated and will be removed after 08/2017. Use instead layers from keras.layers.merge, e.g. add, concatenate, etc.
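As a rough migration aid for the deprecation notice above, the old mode strings can be mapped to the Keras 2 function names. This is a sketch under assumptions: the 'sum' to add pairing is confirmed in this thread, the other pairings are my reading of the Keras 2 merge-layer docs, and keras2_name is a hypothetical helper, not part of Keras. The old 'cos' mode and callable (lambda) modes are left out because they have no one-word counterpart.

```python
# Assumed mapping from Keras 1 `mode` strings to Keras 2 function names.
MODE_TO_FUNCTION = {
    "sum": "add",
    "mul": "multiply",
    "ave": "average",
    "max": "maximum",
    "concat": "concatenate",
    "dot": "dot",
}


def keras2_name(mode):
    """Hypothetical helper: return the Keras 2 function name for an old mode."""
    try:
        return MODE_TO_FUNCTION[mode]
    except KeyError:
        raise ValueError("no direct Keras 2 counterpart recorded for mode %r" % mode)


for old_mode in ("sum", "concat", "mul"):
    print("merge(..., mode=%r)  ->  %s(...)" % (old_mode, keras2_name(old_mode)))
```

Each function also has a capitalised layer class (Add, Concatenate, and so on), as later comments in this thread show.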

left = Sequential()
left.add(...)
left.add(...)
right = Sequential()
right.add(...)
right.add(...)
model = Sequential()
model.add(Merge([left, right]))
model.add(...)

How does one do this under Keras 2?

I've tried:

model = Sequential()

model.add(Dot(axes=1)([left, right]))

but I get:

Layer dot_1 was called with an input that isn't a symbolic tensor. Received type:

. Full input: [ , ]. All inputs to the layer should be tensors.

I see above here some comments alluding to this, but I'm not clear what the recommended approach is now.

Any guidance or examples would be appreciated.

@nlothian maybe this helps:

is_keras_tensor

>>> from keras import backend as K
>>> np_var = numpy.array([1, 2])
>>> K.is_keras_tensor(np_var) # A numpy array is not a symbolic tensor.

ValueError

>>> k_var = tf.placeholder('float32', shape=(1,1))

>>> K.is_keras_tensor(k_var) # A variable created directly from tensorflow/theano is not a Keras tensor.

False

>>> keras_var = K.variable(np_var)

>>> K.is_keras_tensor(keras_var) # A variable created with the keras backend is a Keras tensor.

True

>>> keras_placeholder = K.placeholder(shape=(2, 4, 5))

>>> K.is_keras_tensor(keras_placeholder) # A placeholder is a Keras tensor.

True

this is wrong

model.add(Dot(axes=1)([left, right]))

I don't understand from this line of code what you were trying to add to the Sequential model.

model.add(Dot(axes=1)([left, right]))

is my attempt to do the same as

model.add(Merge([left, right]))

Check out documentation about merge layers in Keras 2.0.

@keunwoochoi Thanks, I've spent a lot of time on that page!

I'd note that the documentation for Dot says

Layer that computes a dot product between samples in two tensors.

and I understand that is the error I'm getting because I'm trying to merge layers, not tensors.

So how do I merge two Sequential layers in Keras 2?

I'd like to do exactly the example @parag2489 posted at https://github.com/fchollet/keras/issues/3921#issuecomment-289237130

I'm kind of getting the impression that isn't possible anymore? If someone could confirm that it would be useful.

I've made an attempt at doing the equivalent using the functional API, but it'd be easier to get this working if possible.

Alright, I didn't know that. I've used it without any confusion so far, but I think that's because I was relying on Model, not Sequential. I have no idea how it is supposed to work when the input is a list of two Sequential models, but could you try with the Model API? Say,

from keras.layers.merge import Dot, Add

left_input = Input(shape)

left = something()(left_input)

left = some_other(left)

right_input = Input(shape)

right = something()(right_input)

right = some_other(right)

model = Sequential()

model.add(Dot()([left, right])) # or Add(), or whatever.

model.add(...)

Or build the rest of the model on tensors as well, since left and right already are tensors.

EDIT: Update Merge()

EDIT AGAIN: no Merge but Dot().

@fchollet I think the documentation still lacks a crystal-clear explanation of what a tensor is. The merge layers documentation says "It takes as input a list of tensors", but people still seem to get a bit confused.

It would also be good to have examples for those layers. I can work on examples, but I think the getting-started part could go into more detail. Speaking of which, it would probably be really good to have separate menus for 1. how to get started USING Keras and 2. how to get started DEVELOPING with Keras (e.g., customising layers, callbacks, etc.). Those still seem to lack a comprehensive explanation; no one complains about Keras's user experience, only about the developing or debugging experience.

@keunwoochoi I'm looking at doing that now. It's not an easy conversion at all though.

Or, keep the sequential model but then add input and then output_left = model_left(input_left)?


Hi all! I read this long thread and still do not understand the final solution: how do I merge two Sequential layers in Keras 2? Please help with an example.

I am also having the same issue. How do I replace the line

merged = merge([avged, maxed])

in Keras 2.0.5?

There doesn't seem to be a way to concatenate 2 sequential layers anymore...

According to @keunwoochoi it is possible, but it may just be a different solution with the same result.

I expect to get a good example from a "Contributor", because I did not find this information in the Keras documentation.

@tiru1930

>>> from keras.layers.merge import Concatenate

>>> merged = Concatenate()([avged, maxed])

@vladT0 @wtesler I think the code up there would work. The idea is to consider Sequential models as layers and build a Functional model using them.

@keunwoochoi

Thank you. I tried the Concatenate option, but it didn't work: it gave a dimension mismatch error. So I tried the code below, and it worked for me:

add_layer = layers.Add()

merged=add_layer([avged, maxed])

I used Add because the legacy merge function defaults to mode='sum', so in the old API

merged = merge([avged, maxed])

is the same as

merged = merge([avged, maxed], mode='sum')

This should probably get fixed before 08/17.


@keunwoochoi

I hope this code will work, but how to import Merge function ( with capital M )?

https://github.com/fchollet/keras/issues/3921#issuecomment-308597601

Keras 1.2.2:

from keras.models import Sequential

from keras.layers.core import Dense, Dropout, Activation, Reshape, Merge

from keras.layers.embeddings import Embedding

models = []

model_1 = Sequential()

model_1.add(Embedding(10, 6, input_length=1))

model_1.add(Reshape(target_shape=(6,)))

models.append(model_1)

model_2 = Sequential()

model_2.add(Embedding(3, 2, input_length=1))

model_2.add(Reshape(target_shape=(2,)))

models.append(model_2)

..........

self.model = Sequential()

self.model.add(Merge(models, mode='concat'))

self.model.add(Dropout(0.01))

..........

How do I implement this in Keras 2.0.5?

Could you please post a working example here? It would be useful for all developers, especially bearing in mind this warning:

The Merge layer is deprecated and will be removed after 08/2017. Use instead layers from keras.layers.merge, e.g. add, concatenate, etc.

Thanks in advance.

I have this code which compiles and I think works. I'd point out that I don't use _Merge_ itself, but instead the _dot_ method, which I think does the equivalent in the old API.

Regarding documentation: yes please! Specifically examples using each API.

I'm still not entirely sure why there are two APIs and when you should choose one vs the other.

input1 = Input(shape=(2, ), dtype = 'int32', name = 'input_layer1')

#input2 = Input(shape=(1, ), dtype = 'int32', name = 'input_layer2')

model_word = Embedding(input_dim=max_features, output_dim=dim_proj, embeddings_initializer="uniform", name="word_embedding")(input1)

model_context = Embedding(input_dim=max_features, output_dim=dim_proj, embeddings_initializer="uniform", name="context_embedding")(input1)

m = dot([model_word, model_context], axes=1)

m = Flatten()(m)

predictions = Dense(1, activation='sigmoid', name="dense")(m)

model = Model(inputs=input1, outputs=predictions)

model.compile(loss='mse', optimizer='rmsprop')

In my opinion, the syntax in Keras 1.2.2 was absolutely clear and nice. I expect the new version to be better, but I cannot understand why the team could not give us even one clear example with Merge and Sequential. It is beyond my understanding. If it is impossible, just say so and show the replacement source code.

When they removed Merge in version 2, I hope they had something in mind that would improve Keras!

@vladT0 I updated the code above.

I think the new API was designed for consistency with other layers in general.

# the new Add(), Dot() and also all the other layers

output_tensor = Layer()([input_tensor1, input_tensor2])

# ONLY for the old merge layer

output_tensor = Layer([input_tensor1, input_tensor2])

I'd say the problem is more about the documentation than the API itself.

@vladT0

self.model = Sequential()

self.model.add(Merge(models, mode='concat'))

Is this code even supposed to work on keras 1.2? Like how? Sequential models do not take more than one input. The single and the only input's shape should be specified when you initiate Sequential(). Your code seems like a model that takes TWO inputs, then different embeddings applied, and then merged. Such a structure is only available with Functional API.

@keunwoochoi

self.model = Sequential()

self.model.add(Merge(models, mode='concat'))

Yes, this code is perfectly working in Keras 1.2.2 and I have working application with final ( expected ) result. If you would like we can make a conf. call with you and I can show :)

@keunwoochoi Your example with Dot throws:

str(inputs) + '. All inputs to the layer '

ValueError: Layer dot_1 was called with an input that isn't a symbolic tensor. Received type:

and yes, I need to merge 2 layers, not tensors.

Okay, but even if it worked in the previous version, it is definitely a structure that should be implemented with the functional API. I don't understand how a Merge layer can work well in a Sequential model. In Sequential models, the one and only current output is the input for the newly added layer. So how would a Merge layer take two inputs? It is very ambiguous, and as a user I wouldn't be confident about how the code would work.

So my strong opinion is to convert the code to the functional API, because your structure seems to be what it's designed for.

By the way, #7169 will add two examples in Add and add. It would be straightforward to learn how to use other merge layers with them.

@keunwoochoi

So my strong opinion is to convert the code to the functional API, because your structure seems to be what it's designed for.

Could you please help to convert it to the code with Functional API ? I am not sure I understand how it is working.

Layer (type) Output Shape Param # Connected to

====================================================================================================

embedding_1 (Embedding) (None, 1, 6) 42

____________________________________________________________________________________________________

reshape_1 (Reshape) (None, 6) 0

____________________________________________________________________________________________________

embedding_2 (Embedding) (None, 1, 2) 6

____________________________________________________________________________________________________

reshape_2 (Reshape) (None, 2) 0

____________________________________________________________________________________________________

embedding_3 (Embedding) (None, 1, 6) 72

____________________________________________________________________________________________________

reshape_3 (Reshape) (None, 6) 0

____________________________________________________________________________________________________

embedding_4 (Embedding) (None, 1, 10) 310

____________________________________________________________________________________________________

reshape_4 (Reshape) (None, 10) 0

____________________________________________________________________________________________________

embedding_5 (Embedding) (None, 1, 6) 42

____________________________________________________________________________________________________

reshape_5 (Reshape) (None, 6) 0

____________________________________________________________________________________________________

embedding_6 (Embedding) (None, 1, 6) 54

____________________________________________________________________________________________________

reshape_6 (Reshape) (None, 6) 0

____________________________________________________________________________________________________

embedding_8 (Embedding) (None, 1, 50) 122800

____________________________________________________________________________________________________

reshape_8 (Reshape) (None, 50) 0

____________________________________________________________________________________________________

dense_1 (Dense) (None, 1) 2

____________________________________________________________________________________________________

dense_2 (Dense) (None, 1) 2

____________________________________________________________________________________________________

embedding_9 (Embedding) (None, 1, 50) 258300

____________________________________________________________________________________________________

reshape_9 (Reshape) (None, 50) 0

____________________________________________________________________________________________________

embedding_10 (Embedding) (None, 1, 50) 248500

____________________________________________________________________________________________________

reshape_10 (Reshape) (None, 50) 0

____________________________________________________________________________________________________

dropout_1 (Dropout) (None, 188) 0 merge_1[0][0]

____________________________________________________________________________________________________

dense_3 (Dense) (None, 1000) 189000 dropout_1[0][0]

____________________________________________________________________________________________________

activation_1 (Activation) (None, 1000) 0 dense_3[0][0]

____________________________________________________________________________________________________

dense_4 (Dense) (None, 500) 500500 activation_1[0][0]

____________________________________________________________________________________________________

activation_2 (Activation) (None, 500) 0 dense_4[0][0]

____________________________________________________________________________________________________

dense_5 (Dense) (None, 1) 501 activation_2[0][0]

____________________________________________________________________________________________________

activation_3 (Activation) (None, 1) 0 dense_5[0][0]

====================================================================================================
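The numbers in the summary above can be cross-checked with a little arithmetic, which also shows what the concatenating merge does to the shapes. This is a sketch under assumptions: an Embedding holds vocabulary_size * width weights, a Dense layer holds n_in * n_out + n_out, and dense_1 and dense_2 are assumed to feed the merge (inferred only because the widths then add up to the 188 shown for dropout_1).

```python
# (parameter count, output width) for each Embedding row in the summary.
embeddings = [(42, 6), (6, 2), (72, 6), (310, 10), (42, 6),
              (54, 6), (122800, 50), (258300, 50), (248500, 50)]
dense_branch_widths = [1, 1]  # dense_1 and dense_2 (assumed merge inputs)

# Vocabulary size of each Embedding, recovered from its parameter count.
vocab_sizes = [params // width for params, width in embeddings]

# Concatenation simply adds up the widths of all merged branches.
merged_width = sum(width for _, width in embeddings) + sum(dense_branch_widths)


def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


print(vocab_sizes)
print(merged_width)
print(dense_params(merged_width, 1000), dense_params(1000, 500), dense_params(500, 1))
```

The merged width and the three Dense parameter counts reproduce the summary rows for dropout_1, dense_3, dense_4 and dense_5.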

Sure, but perhaps after you read the API doc? Because it is actually quite straightforward.


I have read the API doc and tried to convert two embedding layers. I will post the old source code and the new one so you can check whether I am on the right track.

Keras 1.2.2:

model_1 = Sequential()

model_1.add(Embedding(7, 30, input_length=1))

model_1.add(Reshape(target_shape=(30,)))

models.append(model_1)

model_2 = Sequential()

model_2.add(Embedding(3, 2, input_length=1))

model_2.add(Reshape(target_shape=(2,)))

models.append(model_2)

Keras 2.0.5:

model_1 = Input(shape=(7, ), name = 'model_1')

model_1 = Embedding(7,6, input_length=1)(model_1)

model_1 = Reshape(target_shape=(6,))(model_1)

model_2 = Input(shape=(3, ), name = 'model_2')

model_2 = Embedding(3,2, input_length=1)(model_2)

model_2 = Reshape(target_shape=(2,))(model_2)

I assume each Embedding layer will be a separate input here; correct me if I am wrong. In the end I want to merge all the Embedding layers into one, as you saw in my source code.

@keunwoochoi It seems the functional API version is not working. I have done something wrong, and I think the main problem is defining the input shape, especially batch_size (2D tensor with shape: (batch_size, sequence_length)), for the Embedding layer. It works with the Sequential model but not with the functional API. Please help me fix the Input statement.

Edit: It seems I found the solution. The Input was changed to

x0 = Input(shape=(1,), name = 'model_1')

model_1 = Embedding(7,6, input_length=1)(x0)

and it is working now.
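A plain-Python sketch of why Input(shape=(1,)) is the shape that works here, under the assumption that an embedding is just a table lookup on integer indices: the 7 is the vocabulary size (the number of table rows), while the input carries one index per sample. The helpers embed and shape are hypothetical and only illustrate the shapes.

```python
def embed(indices_batch, table):
    """Look up each integer index in `table`; result is (batch, length, width)."""
    return [[table[i] for i in sample] for sample in indices_batch]


def shape(nested):
    """Shape of a regular nested list, e.g. [[0], [3]] -> (2, 1)."""
    dims = []
    while isinstance(nested, list):
        dims.append(len(nested))
        nested = nested[0]
    return tuple(dims)


vocab_size, width = 7, 6  # as in Embedding(7, 6, input_length=1)
table = [[float(row)] * width for row in range(vocab_size)]

batch = [[0], [3], [6]]                        # (3, 1): one index per sample
embedded = embed(batch, table)                 # (3, 1, 6)
reshaped = [sample[0] for sample in embedded]  # (3, 6), like Reshape((6,))

print(shape(batch), shape(embedded), shape(reshaped))
```

Declaring Input(shape=(7,)) would instead describe seven indices per sample, which contradicts input_length=1.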

For those having trouble still due to the documentation not being updated, I can confirm "merged = Concatenate()([avged, maxed])" worked on merging layers (not tensors)

I gave up on getting support or help from the Keras development team and finally found the solution myself, but I lost a lot of time, and the documentation is very bad. I agree. We have no direct access to the highly skilled people on the development team, and clearly they need their time for further development.

I understand your frustration. But it's an open-source project and overall things are explained; it's just that there are corner cases in many places that the documentation does not cover at the moment. Now that you are able to help others, could you clarify what the exact problem was, as well as the solution you found? You can open a PR with the suggestion; otherwise I'll do it, and we can discuss it here first.

My task was to rewrite code from Keras 1.2.2 to Keras 2.0.5. My conclusion, please correct me if I am wrong: the Sequential model no longer works with Merge layers, so we have to use the functional API.

Keras 1.2.2

models = []

model_1 = Sequential()

model_1.add(Embedding(7, n1, input_length=1))

model_1.add(Reshape(target_shape=(n1,)))

models.append(model_1)

.................

model_11 = Sequential()

model_11.add(Embedding(422, n2, input_length=1))

model_11.add(Reshape(target_shape=(n2,)))

models.append(model_11)

self.model = Sequential()

self.model.add(Merge(models, mode='concat'))

..............

Keras 2.0.5

models = []

i0 = Input(shape=(1,), name = 'model_1')

model_1 = Embedding(7,6, input_length=1)(i0)

model_1 = Reshape(target_shape=(6,))(model_1)

models.append(model_1)

...............................

i11 = Input(shape=(1, ), name = 'model_11')

model_11 = Embedding(4970,50, input_length=1)(i11)

model_11 = Reshape(target_shape=(50,))(model_11)

models.append(model_11)

.................

merged = Concatenate()(models)

x = Dropout(0.015)(merged)

.................

Use keras.layers.concatenate (and similar like add, etc.) to merge and output tensors.

@farizrahman4u I think everyone seeks a simple answer to the following question: _"Is there any possible way to add a merge layer into Sequential models?"_

I fully agree with @vladT0's opinion (https://github.com/fchollet/keras/issues/3921#issuecomment-328270944): there is no longer any workaround for merging layers in a Sequential model; it seems the development team considered merging an advanced feature for Sequential models... Neither concat nor Concatenate can do the job with Sequential layers as inputs, so everyone has to turn to the functional API to build such networks in Keras 2.0.6. ...

Hi everyone. This seems to be the most visited issue here. Let me clear this up once and for all.

Keras 0.x

Merge - A layer used in early versions of Keras for combining inputs from 2 or more Sequential models; it was also used internally in the old Graph container. The layer took models as arguments, not tensors:

model1 = Sequential()

model1.add(...)

model2 = Sequential()

model2.add(...)

model3 = Sequential()

model3.add(Merge([model1, model2], mode='sum'))

model3.add(...)

model3.compile(...)

model3.fit(...)

If you wanted a custom merge mode, you could pass a lambda as the mode argument.

This syntax is now very very deprecated.

Keras 1.x

merge - In Keras 1.0, the functional API was introduced, and part of it was the merge function. It is simply a functional wrapper over a Merge object, and it operates on tensors, NOT models.

input1 = Input((10,))

x1 = Dense(10)(input1)

input2 = Input((10,))

x2 = Dense(10)(input2)

y = merge([x1, x2], 'sum')

Keras 2.x

In Keras 2+, instead of having a single Merge layer and merge function with a mode argument for toggling between modes, we have separate layers (and corresponding functions) for each mode. You may use the layer or the wrapper function; I personally prefer the latter.

e.g:

input1 = Input((10,))

x1 = Dense(10)(input1)

input2 = Input((10,))

x2 = Dense(10)(input2)

y = add([x1, x2]) # other modes: multiply, concatenate, dot

# Alternatively, using a layer object:

y = Add()([x1, x2]) # other layers: Multiply, Concatenate, Dot

If you want a custom merge mode, you could either write a custom layer that inherits from keras.layers._Merge (see code for existing merge layers and functions in keras/layers/merge.py), or use a Lambda layer:

def weighted_sum(X):

x1, x2 = X

return 0.2 * x1 + 0.8 * x2

y = Lambda(weighted_sum, output_shape=(None, dim))([x1, x2]) # output_shape argument not required for TF backend
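To see what the custom merge mode above computes, the same weighted_sum function can be run on plain numbers. This is only an arithmetic sketch: the input values are made up, and weighted_sum_vectors is a hypothetical helper that applies the function element by element, which is what happens to tensors of matching shape.

```python
def weighted_sum(X):
    # Same body as the function passed to Lambda in the comment above.
    x1, x2 = X
    return 0.2 * x1 + 0.8 * x2


def weighted_sum_vectors(v1, v2):
    """Hypothetical helper: apply weighted_sum to each pair of elements."""
    return [weighted_sum((a, b)) for a, b in zip(v1, v2)]


branch_1 = [10.0, 0.0, 5.0]
branch_2 = [5.0, 10.0, 5.0]
mixed = weighted_sum_vectors(branch_1, branch_2)
print(mixed)
```

Because the two weights add up to 1, positions where both branches agree are left unchanged.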

Converting old Keras 0.x code with Sequential + Merge to Keras 2:

First off, Sequential is "kinda" deprecated, but I still prefer it when there are large stacks of layers.

This is the corresponding Keras 2 code (still using Sequential) for the 0.x code up top in this comment:

model1 = Sequential()

model1.add(...)

model2 = Sequential()

model2.add(...)

x = add([model1.output, model2.output])

model3 = Sequential()

model3.add(...)

model3_output = model3(x)

model = Model([model1.input, model2.input], model3_output) # wrap everything up in a functional

model.compile(...)

model.fit(...)

Hope this helps.

@farizrahman4u Your last code snippet uses deprecated syntax for the Sequential + Merge section, doesn't it? That Merge operation shows a deprecation message. And that legacy API causes models to be deserialized improperly so you can't actually save the models and re-use them.

As best I can tell, Sequential models are simply not supported and anybody using Sequential models with the old Merge functionality needs to re-write their code using the functional API. That kinda sucks.

@seinberg That was a copy pasta error. My bad. Fixed.

I built one project using the (Merge, merge) layers and another using shared layers. The problem is that I can't find any documentation that explains how merge or shared layers work, the way convolution or max-pooling layers are explained.

I would be grateful if anyone could direct me to, or suggest, a paper that helps me understand how these layers work.

Any further update on this? The documentation seems to imply the lower case versions (ex. add() instead Add()) are for the functional API. But the example of using Add() use the functional API, not the Sequential. So I am a bit confused.

The documentation seems to imply the lower case versions (ex. add() instead Add()) are for the functional API.

Yes, I wrote the documentation examples but that part was @fchollet's suggestion, so I agree that add() > Add().

But the example of using Add() use the functional API, not the Sequential. So I am a bit confused.

Is it possible to use merge layers in Sequential models? (then how could it be Sequential?) I might be wrong, but at least that's how I understood and that's why both of them are using Functional API.

[EDIT] OK, as in @farizrahman4u's example, it can be used as the first layer of a Sequential model. Probably worth updating the documentation with @farizrahman4u's example? I'm not following this issue anymore, but if someone could open a PR that would be great.

Thanks keunwoochoi. Your explanation makes good sense. I guess the best thing is to use Add in layer-based scenarios and add() in functional ones. At least that is my understanding after reading the sources.

@farizrahman4u thanks for your explanation contrasting the different API choices. I bit the bullet and rewrote my existing model using the functional API because of the deserialization problem of Merge. Sadly I can report that with the identical network, my training is slowed down materially. No other code changes were made. Is there anything obvious that comes to mind?

The Sequential model, built as in your "Keras 0.x" example, is much faster than the functional one built as in your "Keras 2.x" example.

Old:

INFO 2018-02-07 13:32:01,933 task Training...

INFO 2018-02-07 13:32:45,615 task begin epoch 0

INFO 2018-02-07 13:32:53,890 task begin epoch 1

INFO 2018-02-07 13:32:54,763 task begin epoch 2

INFO 2018-02-07 13:32:55,580 task Done training.

New:

INFO 2018-02-07 13:38:50,433 task Training...

INFO 2018-02-07 13:41:54,559 task begin epoch 0

INFO 2018-02-07 13:43:14,763 task begin epoch 1

INFO 2018-02-07 13:43:15,918 task begin epoch 2

INFO 2018-02-07 13:43:16,991 task Done training.
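To put numbers on the slowdown, the gaps between the log timestamps above can be computed directly. This sketch uses only the timestamps as posted; the labels "setup" (from "Training..." to the start of epoch 0) and "first epoch" (from the start of epoch 0 to the start of epoch 1) are my own names for those intervals.

```python
from datetime import datetime

FMT = "%Y-%m-%d %H:%M:%S,%f"


def seconds_between(start, end):
    """Elapsed seconds between two log timestamps."""
    return (datetime.strptime(end, FMT) - datetime.strptime(start, FMT)).total_seconds()


# Timestamps copied from the two logs: Training..., begin epoch 0, begin epoch 1.
old = ("2018-02-07 13:32:01,933", "2018-02-07 13:32:45,615", "2018-02-07 13:32:53,890")
new = ("2018-02-07 13:38:50,433", "2018-02-07 13:41:54,559", "2018-02-07 13:43:14,763")

old_setup, old_first_epoch = seconds_between(old[0], old[1]), seconds_between(old[1], old[2])
new_setup, new_first_epoch = seconds_between(new[0], new[1]), seconds_between(new[1], new[2])

print("setup:       %.3f s -> %.3f s" % (old_setup, new_setup))
print("first epoch: %.3f s -> %.3f s" % (old_first_epoch, new_first_epoch))
```

By this reading most of the difference sits before training starts and in the first epoch; the later epochs differ by well under a second in both logs.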

@farizrahman4u Thanks for the explanation. I'm trying to convert my old 0.x code to 2.x. I was using three Sequential layers: LSTM, attention, and a combination layer. The combination layer does the pairwise multiplication. I tried to follow your suggestions, but it seems that I missed something. I got this error: cannot obtain value for tensor Tensor("sequential_1_input:0", shape=(?, 200), dtype=float32) at layer "sequential_1_input".

Any suggestions? Thanks.

//Build LSTM layer...

LSTM_layer = Sequential()

LSTM_layer.add(Embedding(MAX_NB_WORDS, HIDDEN_EMBEDDING_DIM,

input_length=MAX_LENGTH, embeddings_initializer='uniform'))

LSTM_layer.add(LSTM(HIDDEN_EMBEDDING_DIM, dropout=0.2, recurrent_dropout=0.2, return_sequences=True))

//Build a layer using LSTM outputs

attention_layer = Sequential()

attention_layer.add(LSTM_layer)

attention_layer.add(Attention())

attention_layer.add(RepeatVector(HIDDEN_EMBEDDING_DIM))

attention_layer.add(Permute((2, 1)))

// I used to do layer_all.add(multiply([LSTM_layer, attention_layer])) for multiply

x = multiply([LSTM_layer.get_output_at(0), attention_layer.get_output_at(0)])

layer_all = Sequential()

layer_all .add(Lambda(lambda x: K.sum(x, axis=-2), input_shape=(200,64)))

layer_all .add(Dropout(0.5))

layer_all .add(Dense(1, activation='sigmoid'))

layer_all_output = layer_all (x)

model = Model(LSTM_layer.get_input_at(0), layer_all_output)

I'm trying to update a ResNet model to Keras 2.0 and I was curious how you would update the line:

x = merge([x, input_tensor], mode='sum', name=merge_name)

to use add?

x = Add()([x, input_tensor])

seems fine, but it won't allow me to use the name argument. Is there something i should be doing instead?

Does this not work?

x = Add(name='merge_name')([x, input_tensor])

I actually got it figured out! I think at some point the allowed characters in the tensorflow naming scheme changed. The name was trying to use a '+' which wasn't actually valid.
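For anyone hitting the same naming problem, a quick pre-check of layer names can help. The pattern below is an assumption: it is modelled on the op-name rule I believe TensorFlow 1.x applied (letters, digits, '.', '_', '-' and '/', with a restricted first character), so please verify it against your TensorFlow version. The function names and the example name are hypothetical.

```python
import re

# Assumed rule, modelled on TensorFlow 1.x op names; not taken from this thread.
VALID_NAME = re.compile(r"^[A-Za-z0-9.][A-Za-z0-9_.\-/]*$")


def is_valid_layer_name(name):
    """True if `name` matches the assumed naming rule."""
    return VALID_NAME.match(name) is not None


def sanitize_layer_name(name):
    """Replace disallowed characters with '_' and fix a bad first character."""
    cleaned = re.sub(r"[^A-Za-z0-9_.\-/]", "_", name)
    if not cleaned or not re.match(r"[A-Za-z0-9.]", cleaned[0]):
        cleaned = "x" + cleaned
    return cleaned


bad_name = "res2a+branch"  # made-up example containing '+'
print(is_valid_layer_name(bad_name))
print(sanitize_layer_name(bad_name))
```

The cleaned string can then be passed as the name argument, as in Add(name=...).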

This might be useful on how to do merging using Keras 2.

https://machinelearningmastery.com/keras-functional-api-deep-learning/

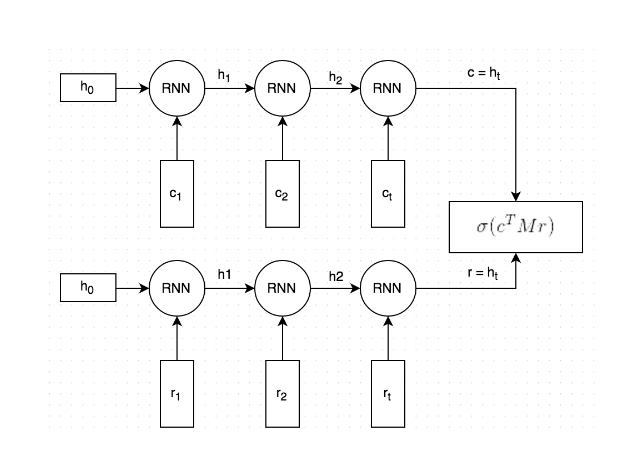

Can someone please guide me on how to implement this model in Keras? I am confused about how to combine the outputs of both LSTMs, and especially how to compute sigmoid(CMR).
