Joss-reviews: [REVIEW]: MiraPy: Python Package for Deep Learning in Astronomy

Submitting author: @swapsha96 (Swapnil Sharma)

Repository: https://github.com/mirapy-org/mirapy

Version: v0.1.0

Editor: @mbobra

Reviewers: @DanielLenz, @mbobra

Archive: Pending

Status

Status badge code:

HTML: <a href="http://joss.theoj.org/papers/ff93bd7cf84685c479c74a5c84d12637"><img src="http://joss.theoj.org/papers/ff93bd7cf84685c479c74a5c84d12637/status.svg"></a>

Markdown: [![status](http://joss.theoj.org/papers/ff93bd7cf84685c479c74a5c84d12637/status.svg)](http://joss.theoj.org/papers/ff93bd7cf84685c479c74a5c84d12637)

Reviewers and authors:

Please avoid lengthy details of difficulties in the review thread. Instead, please create a new issue in the target repository and link to those issues (especially acceptance-blockers) by leaving comments in the review thread below. (For completists: if the target issue tracker is also on GitHub, linking the review thread in the issue or vice versa will create corresponding breadcrumb trails in the link target.)

Reviewer instructions & questions

@mbobra & @DanielLenz, please carry out your review in this issue by updating the checklist below. If you cannot edit the checklist please:

- Make sure you're logged in to your GitHub account

- Be sure to accept the invite at this URL: https://github.com/openjournals/joss-reviews/invitations

The reviewer guidelines are available here: https://joss.readthedocs.io/en/latest/reviewer_guidelines.html. For any questions or concerns, please let @mbobra know.

✨ Please try and complete your review in the next two weeks ✨

Review checklist for @mbobra

Conflict of interest

- [x] As the reviewer I confirm that I have read the JOSS conflict of interest policy and that there are no conflicts of interest for me to review this work.

Code of Conduct

- [x] I confirm that I read and will adhere to the JOSS code of conduct.

General checks

- [x] Repository: Is the source code for this software available at the repository url?

- [x] License: Does the repository contain a plain-text LICENSE file with the contents of an OSI approved software license?

- [x] Version: Does the release version given match the GitHub release (v0.1.0)?

- [x] Authorship: Has the submitting author (@swapsha96) made major contributions to the software? Does the full list of paper authors seem appropriate and complete?

Functionality

- [x] Installation: Does installation proceed as outlined in the documentation?

- [ ] Functionality: Have the functional claims of the software been confirmed?

- [ ] Performance: If there are any performance claims of the software, have they been confirmed? (If there are no claims, please check off this item.)

Documentation

- [ ] A statement of need: Do the authors clearly state what problems the software is designed to solve and who the target audience is?

- [ ] Installation instructions: Is there a clearly-stated list of dependencies? Ideally these should be handled with an automated package management solution.

- [ ] Example usage: Do the authors include examples of how to use the software (ideally to solve real-world analysis problems).

- [ ] Functionality documentation: Is the core functionality of the software documented to a satisfactory level (e.g., API method documentation)?

- [ ] Automated tests: Are there automated tests or manual steps described so that the function of the software can be verified?

- [ ] Community guidelines: Are there clear guidelines for third parties wishing to 1) Contribute to the software 2) Report issues or problems with the software 3) Seek support

Software paper

- [x] Authors: Does the paper.md file include a list of authors with their affiliations?

- [ ] A statement of need: Do the authors clearly state what problems the software is designed to solve and who the target audience is?

- [ ] References: Do all archival references that should have a DOI list one (e.g., papers, datasets, software)?

Review checklist for @DanielLenz

Conflict of interest

- [x] As the reviewer I confirm that I have read the JOSS conflict of interest policy and that there are no conflicts of interest for me to review this work.

Code of Conduct

- [x] I confirm that I read and will adhere to the JOSS code of conduct.

General checks

- [x] Repository: Is the source code for this software available at the repository url?

- [x] License: Does the repository contain a plain-text LICENSE file with the contents of an OSI approved software license?

- [x] Version: Does the release version given match the GitHub release (v0.1.0)?

- [x] Authorship: Has the submitting author (@swapsha96) made major contributions to the software? Does the full list of paper authors seem appropriate and complete?

Functionality

- [x] Installation: Does installation proceed as outlined in the documentation?

- [x] Functionality: Have the functional claims of the software been confirmed?

- [x] Performance: If there are any performance claims of the software, have they been confirmed? (If there are no claims, please check off this item.)

Documentation

- [x] A statement of need: Do the authors clearly state what problems the software is designed to solve and who the target audience is?

- [x] Installation instructions: Is there a clearly-stated list of dependencies? Ideally these should be handled with an automated package management solution.

- [x] Example usage: Do the authors include examples of how to use the software (ideally to solve real-world analysis problems).

- [x] Functionality documentation: Is the core functionality of the software documented to a satisfactory level (e.g., API method documentation)?

- [x] Automated tests: Are there automated tests or manual steps described so that the function of the software can be verified?

- [x] Community guidelines: Are there clear guidelines for third parties wishing to 1) Contribute to the software 2) Report issues or problems with the software 3) Seek support

Software paper

- [x] Authors: Does the paper.md file include a list of authors with their affiliations?

- [x] A statement of need: Do the authors clearly state what problems the software is designed to solve and who the target audience is?

- [ ] References: Do all archival references that should have a DOI list one (e.g., papers, datasets, software)?


Hello human, I'm @whedon, a robot that can help you with some common editorial tasks. @wtgee, @DanielLenz it looks like you're currently assigned to review this paper :tada:.

:star: Important :star:

If you haven't already, you should seriously consider unsubscribing from GitHub notifications for this (https://github.com/openjournals/joss-reviews) repository. As a reviewer, you're probably currently watching this repository, which means that, with GitHub's default behaviour, you will receive notifications (emails) for all reviews 😿

To fix this do the following two things:

- Set yourself as 'Not watching' at https://github.com/openjournals/joss-reviews

- You may also like to change your default settings for watching repositories in your GitHub profile here: https://github.com/settings/notifications

For a list of things I can do to help you, just type:

@whedon commands

Attempting PDF compilation. Reticulating splines etc...

👋 @wtgee @DanielLenz Thanks for agreeing to review this submission! Whedon generated a checklist for you above. Feel free to ask me any questions -- I'm always happy to help.

@swapsha96 I have a couple comments as editor.

- Can you please clarify how this submission differs from AstroML?

- Can you please cite Keras in your paper?

- Can you please document the Jupyter notebooks in your tutorial section (at the moment they contain mostly code and no Markdown cells)? And write a short summary of each notebook and put that information in the tutorial section of the MiraPy Read The Docs site?

- Can you please document the functions? E.g. I don't know how AtlasVarStarClassifier differs from the native Keras classifiers, and I don't know which input parameters (and in what form) to pass into this function.

Please let me know if you want more clarification or any help with this.

@mbobra Thank you for your input! I have made the changes to the best of my understanding. Please let me know what other changes I need to make.

AstroML is built on top of scikit-learn, which has design constraints that make it impossible to extend to deep learning techniques such as classification of large sequence data (e.g. light curves), CNNs, and encoder-decoder networks. Importantly, it does not support GPUs, which are very important for large matrix multiplications, processing large astronomical datasets, etc. Since a large amount of data is collected every day, we decided to create a new package that takes care of that. We are developing it in such a way that astronomers can not only use MiraPy's existing features but also extend its applications to their own fields of interest.

I cited Keras in the paper. Thanks, I missed that.

I have added Markdown cells to all tutorial notebooks. I have also written a summary of each notebook in the docs, as per your suggestion.

Tutorial repository

Tutorial section in doc

- We had written the documentation in the __init__ function instead of putting it under the class name, which is why the documentation didn't show it. Apologies for that. I have fixed the problem now.

AtlasVarStarClassifier doc
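For readers hitting the same documentation gap, a hedged sketch of why the docstring placement matters: Sphinx autodoc, with its default `autoclass_content = "class"` setting, renders only the docstring written directly under the `class` line, so parameters documented only inside `__init__` do not appear on the class page. The classes below (`DocInInit`, `ExampleClassifier`) and their parameters are hypothetical and are not MiraPy's actual AtlasVarStarClassifier code.

```python
"""Sketch: where a class docstring must live for Sphinx autodoc to show it.

DocInInit and ExampleClassifier are hypothetical illustrations only.
"""


class DocInInit:
    # No class-level docstring: with autodoc's default
    # autoclass_content = "class", the __init__ text below is not rendered.
    def __init__(self, hidden_units=32):
        """Build the model.

        :param hidden_units: number of units in the hidden layer.
        """
        self.hidden_units = hidden_units


class ExampleClassifier:
    """Hypothetical light-curve classifier.

    :param hidden_units: number of units in the hidden layer.
    :param activation: name of the activation function, e.g. ``"relu"``.
    """

    def __init__(self, hidden_units=32, activation="relu"):
        self.hidden_units = hidden_units
        self.activation = activation


if __name__ == "__main__":
    # The class-level __doc__ is what autodoc uses by default.
    print(DocInInit.__doc__)  # prints None
    print(ExampleClassifier.__doc__.splitlines()[0])
```

An alternative to moving the text is setting `autoclass_content = "both"` in the Sphinx conf.py, which concatenates the class and `__init__` docstrings.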

@whedon generate pdf

Attempting PDF compilation. Reticulating splines etc...

I've added a few points that should be completed before we can proceed with the submission.

The project is in great shape and I'm sure the authors could address these points quickly. However, I'm not sure if the scope of the project is sufficient: it's mostly tailored towards a very specific set of tasks, and is essentially a shallow wrapper around Keras. @mbobra, could you comment and provide JOSS's policy on that?

This software is written around specific examples, but it can be used to apply deep learning techniques to various other problems, depending on the use case. For example, we experimented with astronomical image reconstruction on just 39 images of some Messier objects, but it can be extended to images of galaxies from the SDSS catalog and to images captured by the future James Webb Space Telescope. In the tutorials, we demonstrated classification of variable stars using light curves from the OGLE dataset, but the model can be applied to the ATLAS variable star dataset and to datasets from future projects.

MiraPy is in its initial stage and requires substantial improvements and feedback. I hope to make this package more general in the future.

@mbobra @wtgee @DanielLenz The issues are now closed. What else do I need to look into?

@DanielLenz that is a good question.

We generally do not consider software that serves solely as a wrapper, unless it adds some additional functionality. If the submission does add something unique or different that the underlying software does not do, then I think it could be in scope. Another criterion we sometimes apply is: would/should this software be cited if used in a paper? Or would the underlying software be the only thing that contributes significantly to the results?

I used this software to beat state-of-the-art results from different algorithms for image reconstruction of astronomical images (see the tutorials). This software has a CNN model that improves the per-class accuracy for pulsars from 78% to 91%. MiraPy can be used for transfer learning in the classification of the ATLAS variable star catalog, which will be released in the future. We have a curve fitting module (in an initial stage) which uses autograd for faster gradient computation. These are just a few examples. We will be bringing more features and improvements in the future. Do you need more examples like these?
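The thread does not define "per-class accuracy". A common reading, assumed here, is per-class recall: the fraction of samples whose true label is a given class that the model labels correctly. This matters for rare classes such as pulsars, where overall accuracy can look high while most pulsars are missed. The function name and the toy labels below are hypothetical and are not MiraPy code or MiraPy's pulsar data.

```python
from collections import Counter


def per_class_accuracy(y_true, y_pred):
    """Return, for each true class, the fraction of its samples predicted correctly.

    Assumes "per-class accuracy" means per-class recall.
    """
    totals = Counter(y_true)
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: correct[label] / totals[label] for label in totals}


if __name__ == "__main__":
    # Toy labels, purely illustrative.
    y_true = ["pulsar", "pulsar", "pulsar", "pulsar", "noise", "noise"]
    y_pred = ["pulsar", "pulsar", "pulsar", "noise", "noise", "noise"]
    print(per_class_accuracy(y_true, y_pred))  # {'pulsar': 0.75, 'noise': 1.0}
```

Reporting the value per class, rather than one overall accuracy, is what exposes an improvement on the minority class.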

Thanks for the discussion, I'm curious to see what @wtgee thinks.

In the meantime, could you add references to all the Python libraries that you're using (not only in mirapy itself, but also in the tutorial)? Examples are sklearn, matplotlib, and numpy.

Furthermore, please add references (and DOIs, if they exist) to the data sets which you use in the tutorials.

Lastly, it would be great to have a new release (github & PyPI) after all the changes at some point before we wrap up the review.

@wtgee Please let me know if you need any help with your review!

@whedon generate pdf

Attempting PDF compilation. Reticulating splines etc...

@whedon generate pdf

Attempting PDF compilation. Reticulating splines etc...

@whedon generate pdf

Attempting PDF compilation. Reticulating splines etc...

Thanks for adding the DOIs for the data sets!

I think it would be appropriate to also cite the different python packages in the paper, many of them have accompanying publications or other bibtex items (e.g. for sklearn).

@whedon remind @wtgee in one hour

Reminder set for @wtgee in one hour

:wave: @wtgee, please update us on how your review is going.

@whedon generate pdf

Attempting PDF compilation. Reticulating splines etc...

@whedon generate pdf

Attempting PDF compilation. Reticulating splines etc...

@mbobra @DanielLenz @whedon I've added citations of different Python packages in the paper.

I see one check is left in Daniel's list. So what's next?

👋@openjournals/joss-eics, @wtgee is no longer available to review this submission. Should I assign another reviewer or move forward with only one review of the submission?

@mbobra - I think we have two options here: 1) you do a review of the submission yourself, 2) we find a new reviewer.

Either is fine with me.

It's been a week. What's going to happen next?

@swapsha96 I am trying to find you a new reviewer. If I can't do that in the next couple days, I will review it myself. I'm really sorry about this delay -- I know you want to get this published sooner than later. I did not anticipate that a reviewer would drop out. Please bear with me while we get through this.

I understand that and I just wanted to know the status. Thanks for telling me.

@whedon remove @wtgee as reviewer

OK, @wtgee is no longer a reviewer

@whedon add @mbobra as reviewer

OK, @mbobra is now a reviewer

@swapsha96 I'm a reviewer for this submission now. I will start reviewing this week, but I probably won't finish until next week. I'm really sorry about the delay.

No problem. Thanks for letting me know.

👋 @swapsha96 I'm looking through the General Checks, which asks the following question:

Has the submitting author (@swapsha96) made major contributions to the software? Does the full list of paper authors seem appropriate and complete?

It looks like @akhilsinghal1234 made most of the contributions to the software -- I only see six commits by you (all on the JOSS paper) to the master branch. Do you agree?

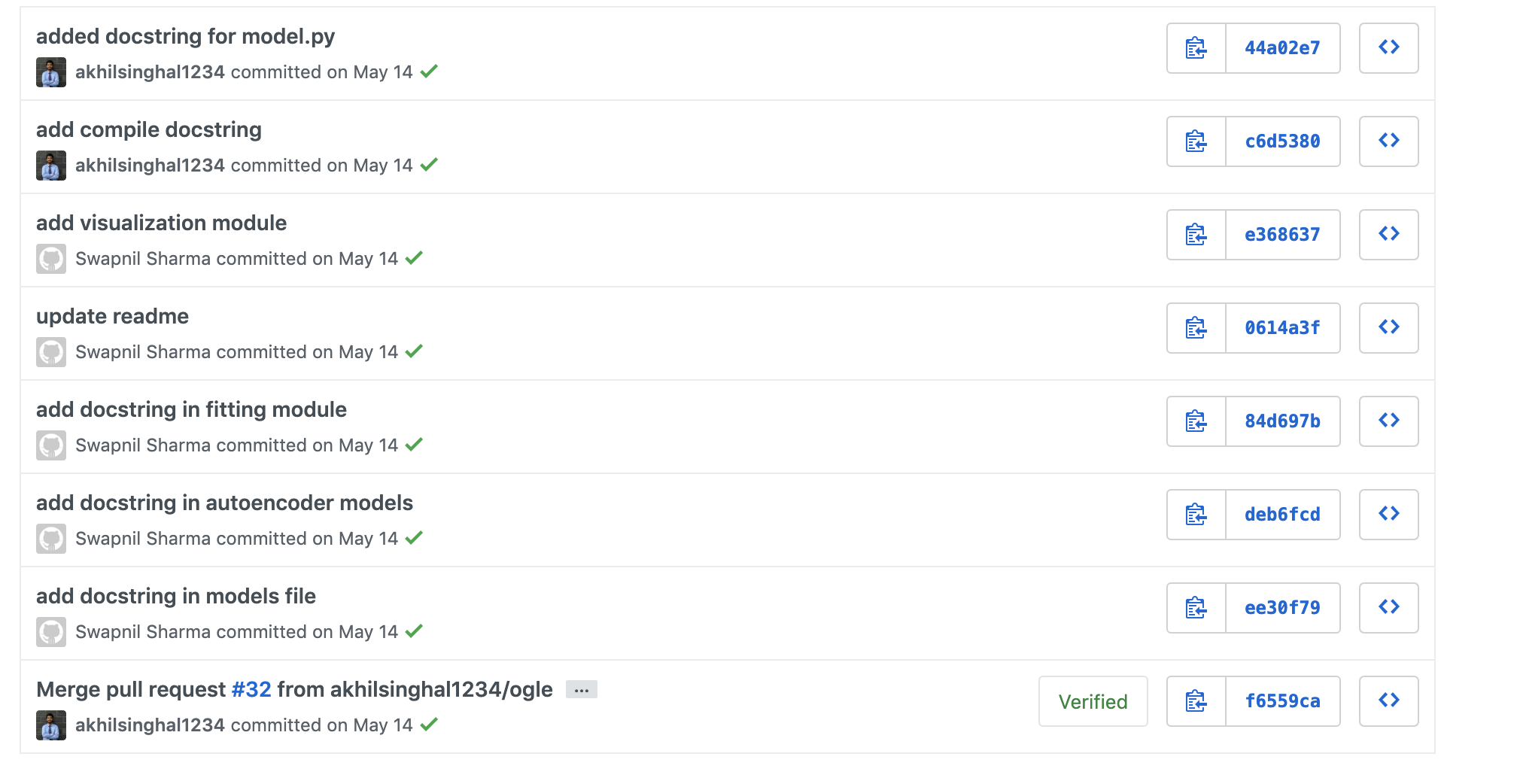

No! I don't know why the total number of commits is 205 but it says he made 45 commits and I made 6. I started this project, and GitHub says I wrote only 150 lines. WTH! 😆 Does anyone know why many of my commits are not counted in the contributor section? (Screenshot attached.)

(I can't believe I sound so arrogant here 😅)

I see. The contributor section counts commits by GitHub users, which are @swapsha96 and @akhilsinghal1234. It looks like there were other commits under the name Swapnil Sharma, which is not associated with a GitHub account, so those commits must have been made via some other mechanism.

Is that a problem that I need to address in some way?

This may have happened due to a local Git user/email configuration that didn't match the GitHub account—it looks strange at first glance, but I don't think this is a major issue, since it's clear upon closer inspection that @swapsha96 is a major contributor. Good catch though, @mbobra!

@swapsha96 I spent the afternoon playing with MiraPy. I agree with @DanielLenz that it is essentially a shallow wrapper around Keras. Therefore, this does not meet our submission requirements (it falls under the 'thin wrapper' category).

However, I do think MiraPy provides excellent tutorials on how to use machine learning to accomplish the tasks outlined on the tutorials page. I would strongly recommend this for publication in JOSE.

cc @openjournals/joss-eics

I didn't know about JOSE. Thanks for letting me know. Please close this issue then.

Thanks, @swapsha96. Please let me know if you want any help with a submission to JOSE. I'm happy to help. Good luck!

Also thank you to @DanielLenz for reviewing -- we really appreciate your time and effort.

I have withdrawn this submission—thanks all.