JavaScript: Why use prefer-destructuring on arrays?

Hello :)

Take this example

const test = {};

const something = [1, 2, 3];

test.value = something[0]; // triggers error

I guess

[test.value] = something;

fixes it, but it is just unreadable.

I am curious: why force this rule on arrays?

All 28 comments

Arrow function syntax and object destructuring are also "unreadable" until you get used to them :-)

I believe ljharb would say that accessing a specific element inside an array is a "code smell". In general, when you need to do array operations, you are going to be performing them on the entire contents of the array. If you're using an array that you need to access by individual element, you might be using the wrong data structure.

Indeed; an array, conceptually, is an atomic list of things - indexing into it, as opposed to operating on the whole list, is a smell.

Alternatively, the array might be being used as a tuple - in which case, it has a structure, and “destructuring” it is the appropriate semantic.

Can you provide a more concrete use case, with actual code?
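The list-versus-tuple distinction can be sketched roughly as follows. This is only an illustration; `prices`, `total`, `point`, `x`, and `y` are made-up names, not code from anyone in this thread.

```javascript
// A list: every element means the same thing, so operate on the whole array.
const prices = [5, 10, 20]; // hypothetical data
const total = prices.reduce((sum, price) => sum + price, 0);

// A tuple: each position has its own meaning, so destructure it into names.
const point = [3, 4]; // hypothetical [x, y] pair
const [x, y] = point;

console.log(total, x, y);
```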

I also don't like forcing destructuring of arrays, not for any specific reason; it just doesn't feel right to me, or at least not always.

But yeah, @ericblade and @ljharb are right. When you're pulling out a few items, it's good to give them names (with destructuring, the mind usually follows a logical path, which makes things easier); if you only use one item, it doesn't make much sense (probably?).

I'm not sure, i'm 50/50 here :D

What should I write instead of exampleArr[7] = exampleArr[4]; to pass this rule? Thanks.

@asendro that line is mutating (something we discourage) and using hardcoded indexes (something we discourage), so I’d need more context to understand why you’re doing either.

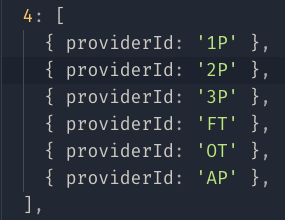

I have an object with numbers as keys, whose values are arrays of objects. Because some keys share the same value, I don't want to repeat the same code several times.

const exampleArr = {
  4: [
    { providerId: '1P' },
    { providerId: '2P' },
    { providerId: '3P' },
    { providerId: 'FT' },
    { providerId: 'OT' },
    { providerId: 'AP' },
  ],
};

exampleArr[7] = exampleArr[4];

In that case, it's an object, not an array - but i'd suggest:

const examples = [
  { providerId: '1P' },
  { providerId: '2P' },
  { providerId: '3P' },
  { providerId: 'FT' },
  { providerId: 'OT' },
  { providerId: 'AP' },
];

const exampleArr = {
  4: examples,
  7: examples,
};

I did it like that, but I thought there might be a different approach. Thanks anyway.

I do have a case that I find a bit odd to have prefer-destructuring suggested to me:

I have an object, say user, which is normalized in my storage. This means that user.address has a value of 1 instead of the actual address. I have a 'table' (not actually a table, but let's call it that) called addresses that's an object which has all the addresses - the key is the address ID, the value is the address value itself.

For testing purposes (in a test suite), I need to mock said user. I then need to manually denormalize the address to have it inside the user (because I'm testing a component that expects the user object to be denormalized already). If I do:

user.address = addresses[1];

I get the 'prefer-destructuring' error. I end up having to do:

[, user.address] = addresses;

which looks odd to me. Destructuring makes sense for the first item or for items in a sequence, starting from a given point, but for a single specific index that's not 0, I feel like it complicates the code. Should I be doing something differently for this case?

@lucabezerra This is definitely a tricky case. What I'd expect, though, is that you'd have standalone and pure normalize/denormalize functions (which could be separately tested), and that you'd be able to use these functions in your tests on your mock data, to avoid needing to work with the storage-only normalized structure (just like how in a relational database, once the data is extracted, you'd generally strive to avoid dealing with the normalizations). I agree that in this case, since you're using the ID as a key, and not an index, and since addresses (while implemented as an array) is really being used as an object/Map, that destructuring doesn't make sense.

In general, reaching for a specific index in an array is itself a code smell, since an array should be treated either as an atomic list of things, or as a tuple (where you'd destructure).

However, there's not really a good way to detect this case without forcing you to rewrite things, so it seems appropriate to use an eslint override comment here absent code changes.
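For reference, an inline override for this rule could look like the sketch below. The `addresses` and `user` values are placeholder data made up for the example; only the `eslint-disable-next-line` directive and the rule name come from ESLint itself.

```javascript
const addresses = ['first address', 'second address']; // hypothetical data
const user = {};

// The directive applies only to the next line, so the exception stays visible and local.
// eslint-disable-next-line prefer-destructuring
user.address = addresses[1];

console.log(user.address);
```

If a project would rather opt out for arrays altogether, ESLint's `prefer-destructuring` rule also accepts options along the lines of `['error', { array: false, object: true }]`; whether that is appropriate depends on how closely you want to follow the style guide.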

@ljharb First of all, thanks for the super quick reply!

So, yeah, that's the approach I went with - using the _eslint-disable_ comment. I was wondering if it'd make sense/be worth the trouble having the linter check if the index is different from 0 to throw the error. While reaching for a specific index is a code smell, as you said, I think reaching for an index that's not 0 most of the time points to a very specific use case which the linter probably doesn't understand.

In any case, just to clarify, addresses is not being used as an object/Map in my example implementation, the confusion is probably due to me using the same number to talk about both _keys_ and _indexes_. The addresses array would look something like this:

const addresses = [
  { id: 32, street: 'Broadway Ave.', number: 1132, city: 'New York' },
  { id: 1, street: 'Dream Boulevard', number: 54, city: 'Random City' },
  // ...
];

Here the address with id=1 just so happens to sit at index 1 of the array. Bad example on my part, haha.

But to complement, if it was possible to do something like (obviously not with that syntax, as it conflicts with something else):

[ user.address[1] ] = addresses;

these kinds of use cases wouldn't be an issue. In any case, I understand that this is probably too specific and doesn't affect enough people to become an actual concern. Just wanted to give my 2 cents on a scenario where it happens :)

@lucabezerra The error is desired when reaching into any index, not just 0, so I don't think that would make sense (and we'd have to convince eslint to make the change in their own rule anyways). People do this all the time for cases that are better left to destructuring, or atomic list operations.

In your case, i'd make addresses a Map of ID -> data, rather than an array - but as an array, i'd do addresses.find(x => x.id === 1).
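Both suggestions might be sketched like this. The records mirror the shape described earlier in the thread; `found`, `addressesById`, `user`, and `denormalizedUser` are names invented for the illustration.

```javascript
// Hypothetical address records, shaped like the ones described in the thread.
const addresses = [
  { id: 32, street: 'Broadway Ave.', number: 1132, city: 'New York' },
  { id: 1, street: 'Dream Boulevard', number: 54, city: 'Random City' },
];

// Option 1: keep the array and look the record up by its id, not its position.
const found = addresses.find((address) => address.id === 1);

// Option 2: index the records by id once, then look them up directly.
const addressesById = new Map(addresses.map((address) => [address.id, address]));

// Normalized user: `address` holds an id rather than the address itself.
const user = { name: 'Test User', address: 1 };
const denormalizedUser = { ...user, address: addressesById.get(user.address) };

console.log(found.street, denormalizedUser.address.city);
```

Neither form depends on where the record happens to sit in the array, so no index access (and no lint override) is needed.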

@ljharb Got it, makes sense! Thanks once again for the attention! :)

Here's an example why forcing destructuring on arrays of n length is quite silly:

const match = url.match(/^(.+):\/\/(.+):(\d+)\/?/);
// match[0]: result
// match[1]: protocol
// match[2]: hostname
// match[3]: port

// Get port
const port = match[3];

// with destructuring
const [, , , port] = match; // wth

Of course, this example is quite trivial. Why would I use capturing parenthesis if I wasn't going to use that value? I could just do this: const [, protocol, hostname, port] = match;

But sometimes you receive an array you didn't create, and you only need item at index 7, for example. The syntax starts to look a bit ludicrous. I support requiring destructuring arrays of length one, but not more than that.

@micchickenburger what you'd want to do with modern JS is use named capture groups, and if you only want the port, you'd want to make the other groups non-capturing - hence, you'd do const [, port] = url.match(/^(?:.+):\/\/(?:.+):(\d+)\/?/);.
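A small sketch of both ideas, assuming a runtime that supports named capture groups (ES2018). The `url` value is a made-up input, and the group names `protocol`, `hostname`, and `port` are chosen for the example.

```javascript
const url = 'https://example.com:8080/'; // hypothetical input

// Non-capturing groups: only the port is captured, so it sits at index 1.
// Note: match() returns null when nothing matches, which would make this throw.
const [, port] = url.match(/^(?:.+):\/\/(?:.+):(\d+)\/?/);

// Named capture groups: pick values by name instead of by position.
const { groups } = url.match(/^(?<protocol>.+):\/\/(?<hostname>.+):(?<port>\d+)\/?/);
const { protocol, hostname } = groups;

console.log(protocol, hostname, port);
```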

@ljharb: ... you'd want to make the other groups non-capturing - hence, you'd do ...

Agree.

But,

@micchickenburger: But sometimes you receive an array you didn't create, and you only need item at index 7 for example.

is a good point too. Something like this const [,,,,,,,foo] = res might look ridiculous. That's why I like ignore comments, it makes things explicitly clear.

@micchickenburger: I support requiring destructuring arrays of length one, but not more than that.

Yup, makes sense if we can configure it. But I'm for <= 3 length, meaning require destructuring for arrays with a length of 3 or less, but not more than 3.

I think in those rare cases where you’re forced to deviate from the best practice, an inline eslint override comment is appropriate to indicate you’re willfully violating the style guide.

It may not be that rare, but yea I just added that to my comment.

If it’s common, I’d suggest writing an abstraction around it so you don’t have to inflict an unfortunate api on your entire codebase :-)
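One way such an abstraction might look, as a sketch only: a single adapter owns the awkward positional access and hands back named values. `res`, `toNamedResult`, and `foo` are hypothetical names, and the eight-element array stands in for a result you did not create.

```javascript
// Hypothetical positional result from an API we do not control.
const res = ['a', 'b', 'c', 'd', 'e', 'f', 'g', 'h'];

// The adapter is the only place that knows the value lives at index 7.
function toNamedResult(raw) {
  const [, , , , , , , foo] = raw; // seven holes, then the item at index 7
  return { foo };
}

// Callers work with names, not positions.
const { foo } = toNamedResult(res);

console.log(foo);
```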

I just wanted to share this if anyone wasn't aware, helps with the readability.

keys is an array of length 5

const { 4: key } = theme.breakpoints.keys;

@Motoxpro i'd suggest using const [,,,,key] = theme.breakpoints.keys. An array is not, conceptually, an object.

@Motoxpro yup, it's pretty cool, I used it few times.

@ljharb yea, but @Motoxpro's definitely looks better, instead of counting 4 commas... :D

The problem there is using an array to store “not an atomic list of things”; not that getting the item at index 4 looks ugly.
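For comparison, the two patterns discussed here read the same element. The `keys` array below is a made-up stand-in for `theme.breakpoints.keys`, and the variable names are invented for the sketch.

```javascript
const keys = ['xs', 'sm', 'md', 'lg', 'xl']; // hypothetical array of length 5

// Object pattern with a numeric key: reads the property named "4".
const { 4: viaObjectPattern } = keys;

// Array pattern with four holes: iterates the array and takes the item at index 4.
const [, , , , viaArrayPattern] = keys;

console.log(viaObjectPattern, viaArrayPattern);
```

The difference is in what the syntax says about the data: the object pattern treats the array as a bag of properties, while the array pattern treats it as an ordered sequence.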

I think one of the big reasons to access an array by individual indices is parsing strings into structured data. It reflects the nature of the task: at first we have characters that make no sense, and we gradually turn them into named values.

We can't use named capture groups; they're still at an early stage. And we might split and then access by indices.

@germansokolov13 you can use https://npmjs.com/string.prototype.matchall ?

@ljharb Introduce an external dependency in order to solve a lint problem? I'm not sure.

@germansokolov13 a) dependencies are a good thing; b) it's built into the language, that's just the polyfill in case you support environments without it; c) no, because splitting and accessing by indices seems like a messy and hacky way to do what you want :-)
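A minimal sketch of parsing with `String.prototype.matchAll`, assuming a runtime (or the polyfill) that provides it. The input string and the pattern are invented for the example, and no named capture groups are used.

```javascript
const text = 'http://a.example:80 https://b.example:443'; // hypothetical input

// matchAll requires the global flag; each match is an array-like result.
const pattern = /\w+:\/\/[^:\s]+:(\d+)/g;

// Destructure each match in the mapping function instead of indexing into it.
const ports = Array.from(text.matchAll(pattern), ([, port]) => port);

console.log(ports);
```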
