InstaPy: Quota Supervisor Bug: Overshooting Quota Supervisor limits

My peak_follows limit is clearly 50 per hour, but the script successfully followed 67 users in 58.34 minutes. What is going on?

    session.set_quota_supervisor(enabled=True,
                                 sleep_after=["likes", "comments_d", "follows", "unfollows", "server_calls_h"],
                                 sleepyhead=True,
                                 stochastic_flow=True,
                                 notify_me=False,
                                 peak_likes=(50, 450),
                                 peak_comments=(50, 400),
                                 peak_follows=(50, 850),
                                 peak_unfollows=(50, 700),
                                 peak_server_calls=(15000, None))

    INFO [2019-05-14 11:42:28] [ishanduta2007] Sessional Live Report:
    |> LIKED 31 images | ALREADY LIKED: 0
    |> COMMENTED on 19 images
    |> FOLLOWED 67 users | ALREADY FOLLOWED: 0
    |> UNFOLLOWED 0 users
    |> LIKED 0 comments
    |> REPLIED to 0 comments
    |> INAPPROPRIATE images: 0
    |> NOT VALID users: 10

    On session start was FOLLOWING 5173 users & had 1818 FOLLOWERS

    [Session lasted 58.34 minutes]

@uluQulu please take a look.


I got softblocked again today.

I tagged @uluQulu and @timgrossmann, but if anyone else with a good understanding of the QS code can help, please do so with priority.

Hey @ishandutta2007

Current time 👉🏼 11:42:28

Lasted 👉🏼 58.34 minutes (about 58 minutes 20 seconds)

👆🏼 Started at 👉🏼 about 10:44:08

According to those records, your session spanned the two clock hours below:

1st hour - from 10:44:08 to 10:59:59

2nd hour - from 11:00:00 to 11:42:28

Rule: QS supervises on an hourly basis.

According to that rule and your hourly follow peak [which is 50], it CAN follow up to 50 users in each of those two clock hours, i.e. 50 + 50 = 100 users in total.
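The hour-by-hour reasoning above can be checked with a short sketch. This is not QS code: `clock_hour_segments` is a hypothetical helper, and the start time is derived from the logged end time and the 58.34-minute duration, read as decimal minutes.

```python
from datetime import datetime, timedelta


def clock_hour_segments(start, end):
    """Split [start, end] into pieces that each lie inside one clock hour."""
    segments = []
    cursor = start
    while cursor < end:
        # The next boundary is the top of the following clock hour.
        next_boundary = cursor.replace(minute=0, second=0, microsecond=0) + timedelta(hours=1)
        segments.append((cursor, min(next_boundary, end)))
        cursor = next_boundary
    return segments


end = datetime(2019, 5, 14, 11, 42, 28)      # timestamp of the live report
start = end - timedelta(minutes=58.34)       # "Session lasted 58.34 minutes"
segments = clock_hour_segments(start, end)

peak_follows_hourly = 50                     # first value of peak_follows
max_follows = peak_follows_hourly * len(segments)

for seg_start, seg_end in segments:
    print(seg_start.time(), "->", seg_end.time())
print("follows allowed across the session:", max_follows)
```

Because the session touches two clock hours, a per-clock-hour peak of 50 permits up to 100 follows, so 67 follows is within the configured limits.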

Why is it sort of confusing?

- There are 2 types of timing for this kind of application.

1. Static timing

2. Dynamic timing

(Not sure such a categorization exists. I'm just trying to explain how I think of it xD)

Static timing is:

- "1 day" means a new day starts every day after 23:59:59 (a 24-hour clock day).

- "1 hour" means a new hour starts after each 60 minutes and 0 seconds (counting from 00:00:00).

E.g. at 16:50, the last static hour is the time between 16:00 and 16:50.

Dynamic timing is:

- "1 day" is the last 24 hours, reevaluated at request time.

- "1 hour" is the last 60 minutes, reevaluated at request time.

- And so on for minutes, seconds, etc. It's simply reevaluation at request time.

E.g. at 16:50, the last dynamic hour is the time between 15:50 and 16:50.

QS fully uses static timing.
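As a minimal sketch of the two definitions (the function names and the sample timestamps are made up for illustration and are not part of QS), the start of the "current hour" at 16:50 can be computed both ways:

```python
from datetime import datetime, timedelta


def static_hour_start(now):
    # Static timing: the current hour starts at the top of the clock hour.
    return now.replace(minute=0, second=0, microsecond=0)


def dynamic_hour_start(now):
    # Dynamic timing: the current hour is the 60 minutes before the request.
    return now - timedelta(hours=1)


def count_since(stamps, window_start):
    # Number of recorded actions at or after the start of the window.
    return sum(1 for stamp in stamps if stamp >= window_start)


now = datetime(2019, 5, 14, 16, 50)
# Hypothetical follow timestamps around the 16:00 boundary.
follows = [
    datetime(2019, 5, 14, 15, 55),
    datetime(2019, 5, 14, 15, 58),
    datetime(2019, 5, 14, 16, 10),
    datetime(2019, 5, 14, 16, 40),
]

static_count = count_since(follows, static_hour_start(now))    # window 16:00-16:50
dynamic_count = count_since(follows, dynamic_hour_start(now))  # window 15:50-16:50
print(static_count, dynamic_count)
```

The same action history yields a lower count under static timing, because actions made just before the clock-hour boundary no longer count once the new hour begins.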

I wanted to use dynamic timing from the start, but then thought it might confuse people, which is why I used static timing for ease of understanding [in the initial release].

Also, dynamic timing would have required many more lines of code to implement than static timing.

Most modern systems are starting to use dynamic timing because it is more effective in many ways. E.g. the current Bing image search has "Past year", "Past month", "Past week" and "Past 24 hours" categories, which all rely on dynamic timing.

So, Ishan, what can you do to meet your expectation with the existing static timing?

- I have been asked that question a few times before by the community and my answer has not changed: just start (or schedule) your activity flow on static hours, so that a session spanning two clock hours does not produce results that are unexpected [from your point of view].
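One way to follow that advice is to wait until the top of the next clock hour before starting the session. The helper below is a hypothetical sketch, not an InstaPy function:

```python
from datetime import datetime, timedelta


def seconds_until_next_hour(now):
    """Seconds remaining until the next clock hour begins."""
    next_hour = now.replace(minute=0, second=0, microsecond=0) + timedelta(hours=1)
    return (next_hour - now).total_seconds()


# A session launched at 10:43:54 would wait 16 minutes and 6 seconds.
wait = seconds_until_next_hour(datetime(2019, 5, 14, 10, 43, 54))
print(wait)

# In a real script one might then do (left commented out so the sketch does not block):
# import time
# time.sleep(seconds_until_next_hour(datetime.now()))
# ... start the InstaPy session here ...
```

Started this way, a run of under an hour stays inside a single static hour and the hourly peak applies to the whole run.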

In the end, the title "Quota Supervisor Bug: Overshooting Quota Supervisor limits" is incorrect and conveys no truth 🙂

Note: This is based on the knowledge I have from the time I was in the project. Those rules may no longer apply if someone has since altered the logic.

BTW, do you have any idea whether IG uses static or dynamic timing in their features? I strongly believe it's static, because dynamic timing is much more costly, which matters a lot for a service with billions of active users, while static timing requires nothing special.

Be well,

Shahriyar 😊

Thanks @uluQulu for explaining in detail. I wasn't aware of QS's implementation; I mentioned that at the very beginning. But is there any evidence that Instagram might be using static timing internally? The reason I feel they might be using dynamic timing is that at times when I set the QS follow limit to 50 or 55 I got soft-blocked, which forced me to reduce it to 40.

You're welcome, @ishandutta2007

In the PR for QS I wrote up all of the design details, or you can get them with a little code reading.

So, what matters for your concern is IG's timing policy, mainly for controlling action quotas.

And the only place they have written about it [officially] is the API endpoints documentation, and it only says "zx actions per hour". They don't say whether that is static or dynamic time.

Also, they don't say "last 60 mins", "last hour" or "past hour"; they say per hour.

And they don't say "last 24 hours", "last day" or "past day"; they say per day.

As the IG API and its documentation are quite old, they might be using dynamic time in the core by now.

If you do some research, you may find something.

Timing is a very confusing topic; it has very diverse options in each scenario.

So, one should change that mechanism only after fully verifying the target's timing policy.

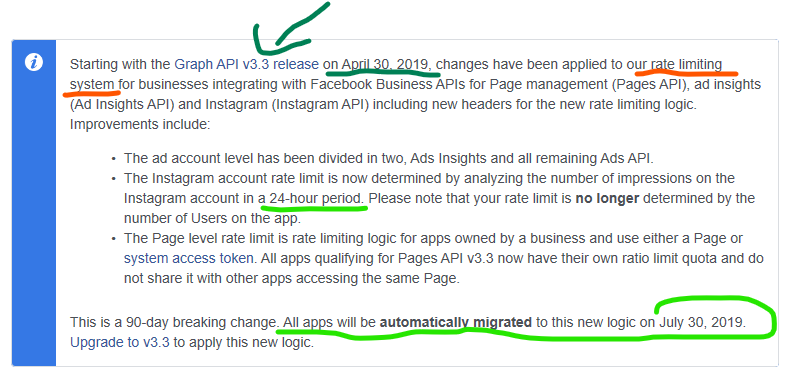

After writing the above, I ran a fresh search on Bing and found out something interesting 😋

A few things have happened:

- What was previously the IG API is now called the IG Platform API.

- The IG Platform API is being deprecated, and the deprecation is being accelerated to "_continuously improve IG users' privacy and security_".

- There is a new thing called "the new IG Graph API".

- The IG Graph API is just the Facebook IG API.

- The IG Graph API cannot be used to access non-Business or non-Creator IG accounts. "If you are building apps for non-Business or non-Creator Instagram users, use the IG Platform API instead."

So, the IG Graph API is the future and the IG Platform API is ~dead.

The IG Graph API may lack many features of the IG Platform API because of so-called privacy concerns and so on.

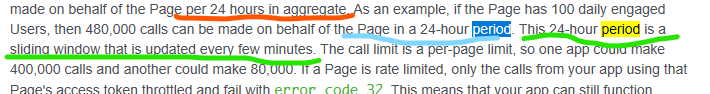

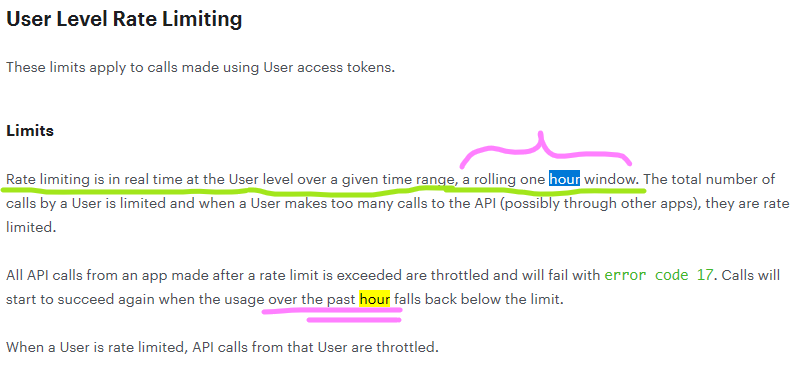

In the end, I checked out the rate limits of the new IG Graph API and it says something 👇🏼

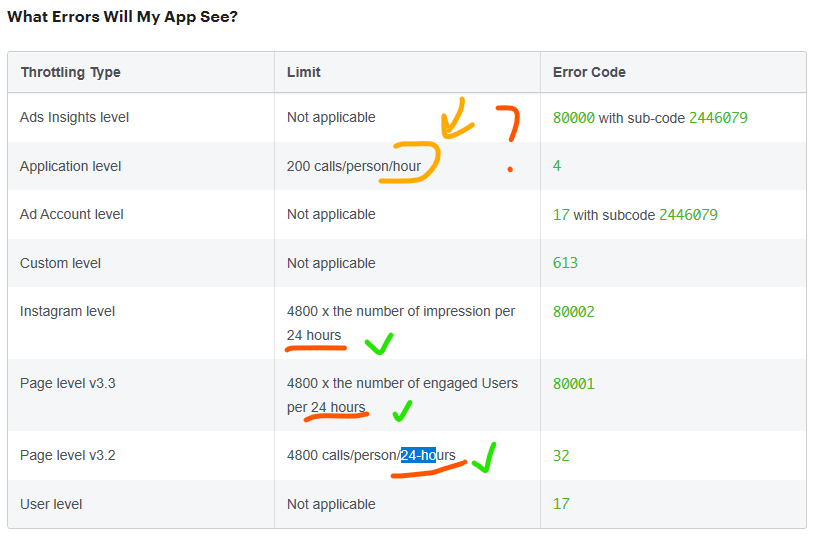

And they do explain what a 24-hour period is 🥳 here 👇🏼

And then there is this 👇🏼

As you can see, they no longer say "per day"; instead they say "per 24 hours". That means the IG rate limits use dynamic timing for daily supervision.

But on an hourly basis, it's written as "/hour" (per hour) instead of the expected "per 60 minutes" period, which makes me think dynamic timing is mixed with static timing.

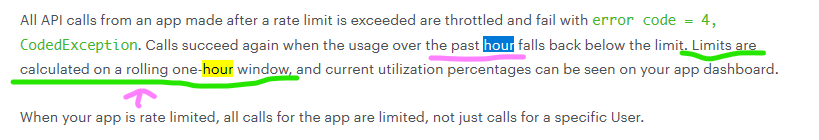

But wow, another search answered the question above 👇🏼

The hour is also dynamically timed and they call it a "rolling one-hour window" 😅

Also this one 👇🏼

In the end, given those facts from the new IG Graph API documentation, QS should be changed to work with dynamic timing.
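A rolling one-hour window can be sketched with a queue of timestamps. This is only an illustration of the idea under simple assumptions; `RollingWindowCounter` is a hypothetical class and does not reflect how QS or Instagram actually implement it.

```python
from collections import deque
from datetime import datetime, timedelta


class RollingWindowCounter:
    """Allow at most `peak` actions within any trailing `window`."""

    def __init__(self, peak, window=timedelta(hours=1)):
        self.peak = peak
        self.window = window
        self._stamps = deque()

    def _evict(self, now):
        # Drop actions that have slid out of the trailing window.
        while self._stamps and self._stamps[0] <= now - self.window:
            self._stamps.popleft()

    def allowed(self, now):
        self._evict(now)
        return len(self._stamps) < self.peak

    def record(self, now):
        self._stamps.append(now)


counter = RollingWindowCounter(peak=50)
first = datetime(2019, 5, 14, 10, 44, 0)
for i in range(50):                       # 50 follows, one every 10 seconds
    counter.record(first + timedelta(seconds=10 * i))

# Static timing would reset at 11:00, but the rolling window still holds 50 actions.
blocked_at_1105 = not counter.allowed(datetime(2019, 5, 14, 11, 5))
# By 11:53 every recorded follow is more than 60 minutes old.
free_at_1153 = counter.allowed(datetime(2019, 5, 14, 11, 53))
print(blocked_at_1105, free_at_1153)
```

With this approach the limit is evaluated against the last 60 minutes at request time, so crossing a clock-hour boundary never grants a fresh allowance.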

What do you think?

Be well,

Shahriyar 😊

Another question that might be trivial, but I need clarification. For the static timing calculation, how do you know what Instagram's server time zone is? If you calculate it from the InstaPy user's local time, the user might be in a time zone whose offset is not a whole number of hours. For example, if Instagram calculates in UTC and I am running on a server at UTC+5:30, then I am always shifted by 30 minutes, right?
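The 30-minute shift in that example can be illustrated with the standard library. Whether Instagram actually counts in UTC is an assumption made only for this sketch.

```python
from datetime import datetime, timedelta, timezone

# Fixed-offset zone for UTC+5:30, as in the example above.
LOCAL = timezone(timedelta(hours=5, minutes=30))

# A clock-hour boundary as seen on the local machine...
local_boundary = datetime(2019, 5, 14, 11, 0, 0, tzinfo=LOCAL)
# ...falls in the middle of a clock hour when expressed in UTC.
as_utc = local_boundary.astimezone(timezone.utc)

print(local_boundary.isoformat(), "->", as_utc.isoformat())
print("minutes past the UTC hour:", as_utc.minute)
```

So a static hourly counter aligned to local clock hours would straddle two UTC clock hours on such a machine.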

It's a nontrivial question for sure.

I have really not thought about it much before.

With a time shift, even a plain 1:00:00 hour can produce an unexpected situation.

Let's put everything on the table,

Option 1: IG does the calculations on the application end.

Option 2: IG does the quota calcs on the server side.

You need stored quota data to calculate quota consumption.

Well, it is surely stored on the server side, cos imagine you open and log into your IG account on a new PC which has no idea of the previous data.

So, it stores the quota data on the server side and gets it via API calls when needed.

Well, now it needs to make the calculations. That can be done locally or on the server side.

Locally, it can be done with JS.

Or, even when done on the server side, it can get the local time of the account and do the calculations based on that.

After all that, the question is which one they choose: local or server time?

Uhm, IG is a huge company and it would be pretty ridiculous for them to force-calculate the quota of all users worldwide in one standard server time.

E.g., imagine they have a user in Baku who is ~11 hours apart from the servers (e.g. in California).

That user is active at completely different times, and if they chose server time for his quota calc, that would be very unfair.

And in practice, it really is very easy to get the local time of the user and sum up some values.

And Quota Supervisor gets the local time of the user's machine and works off that.

But what is the point of all that brainstorming?

According to my previous post,

IG may have used static timing in the past, but now they are most likely using dynamic timing, and even if they are not, I am very sure they will in the near future.

Why?

- Cos static timing was possibly part of the IG Platform API, which will be deprecated according to the official statements. And the newer IG Graph API fully uses dynamic timing.

Be well,

Shahriyar 😊

> IG may have used static timing in the past, but now they are most likely using dynamic timing, and even if they are not, I am very sure they will in the near future.

Cool, so are you spending any bandwidth on migrating QS to dynamic timing as well? In case you don't have free time at the moment, let's put it on the table with @timgrossmann to see who has the bandwidth to do this fix. QS is very important; I got soft-blocked a couple of times. To be safe, I have now reduced the limit from 55 to 40.

Alright, I will extend QS to support both static and dynamic timing.

A new timing str param will be added to the QS settings, taking one of 2 optional strings:

timing=["static", "dynamic"]

(static being the default one for now)

It could take a couple of hours, let's go SQL!

Hey @timgrossmann, I'll open a PR soon for QS maintenance ⚒

Apart from all the timing issues, @ishandutta2007, there could be other reasons affecting your scenario.

But in any case, QS should have had dynamic timing from the very start. It is the way of the future. With that change, static timing will remain as before and operate fine, whilst dynamic timing will also be offered.

Be well,

Shahriyar 😊

@uluQulu Thank you for everything you are doing for this project. I got a follow block as well :( despite using QS. Is adding dynamic timing still on your roadmap? As you guys have been discussing, IG might be using some form of sliding window instead of static timing.

Thank you very much @thealgor 🙌🏼

Yes, after I said I would implement it, I made the core changes and then realized how huge its impact would be.

The thing is, with static timing there is only 1 row of record per active hour.

But with effective/standard dynamic timing it's sort of 😯😯😯😯😯😯😯..

Why? Cos for dynamic timing I propose to record every single action as a new row of data, which means that in 1 hour a casual user CAN have, say, 100 likes + 100 follows + 100 unfollows + 20 comments + 500 web address navigations + 100 scroll requests + a few others = 😧😧😧too many rows of data to be dumped to the SQLite DB😧😧😧

For that reason, I stopped implementing it right away last time and decided to think it over.

In reality, it is not a problem: 100k rows of the standard new data stream take up only 2172 kilobytes.

And I will read the latest dynamic data only at startup (storing it in a local variable), so it won't read those hundreds of thousands of rows in every SQL query.
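The one-row-per-action idea could look roughly like the sketch below. The table name, column names and helper functions are assumptions for illustration only; they are not the schema InstaPy uses.

```python
import sqlite3
from datetime import datetime, timedelta

conn = sqlite3.connect(":memory:")  # in-memory DB just for the demonstration
conn.execute("CREATE TABLE actions (kind TEXT NOT NULL, ts TEXT NOT NULL)")
conn.execute("CREATE INDEX idx_actions_kind_ts ON actions (kind, ts)")


def record_action(conn, kind, when):
    # Fixed-width ISO 8601 strings sort in chronological order.
    conn.execute(
        "INSERT INTO actions (kind, ts) VALUES (?, ?)",
        (kind, when.isoformat(timespec="seconds")),
    )


def count_since(conn, kind, since):
    # Count actions of one kind recorded at or after `since`.
    row = conn.execute(
        "SELECT COUNT(*) FROM actions WHERE kind = ? AND ts >= ?",
        (kind, since.isoformat(timespec="seconds")),
    ).fetchone()
    return row[0]


day = datetime(2019, 5, 14)
for hour, minute in [(10, 30), (10, 50), (11, 10), (11, 40)]:
    record_action(conn, "follow", day.replace(hour=hour, minute=minute))

now = day.replace(hour=11, minute=42, second=28)
rolling_count = count_since(conn, "follow", now - timedelta(hours=1))    # last 60 minutes
static_count = count_since(conn, "follow", now.replace(minute=0, second=0))  # since 11:00
print(rolling_count, static_count)
```

With an index on the action kind and timestamp, counting the last 60 minutes stays a cheap range query even when the table holds many rows.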

So, the problem is [mainly] its visual hugeness, and I am thinking about how to maybe simplify it whilst preserving the strength of the implementation.

If you have ideas, please let me know along the way.

I think I will finish it in my very first free time and open a PR to be reviewed in detail.

Also, was I not aware of this before? - Yeah, it's the same logic I have had since the first day I wrote QS, but when it came to practice, it looked much more wondrous 😅

Be well 😊

Shahriyar

@uluQulu I realised I need to configure half-hourly limits. How can I do that?

@ishandutta2007

That's what will be achievable once dynamic timing is supported.

After that you can supervise per any time interval you wish - e.g. per 10 min was the idea I had in mind from early on.

Then you will just put 30 mins, and boom. It's done.

I have some other work to do, but I will finish it soon and share. İnşallah 🙌🏼

Hi!

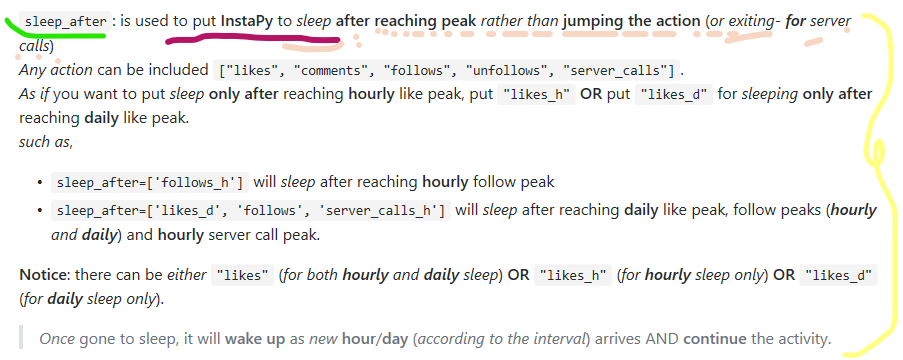

Is it possible to set a sleep time/delay once the QS limit is reached? For example: "once it reaches the limit and the QS is 'on', the program sleeps for an hour and a half".

Thanks :)

@panchoma

We've done that too 😋

It wakes up precisely at the next hour/day as set or, if you enable the `sleepyhead` parameter, it will wake up a bit late by a random amount each time.

Do you want it to sleep much longer than the remaining time? - If so, just increase the percentage in the `extra_sleep_percent` variable and it will have the chance of waking up as much later as you set.

By default that is between 100 & 140 percent.
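As a rough sketch of that behaviour (the function below is hypothetical and only reuses the names `sleepyhead`, `extra_sleep_percent` and `remaining_seconds` from this comment; the actual QS code may differ):

```python
import random


def sleep_seconds(remaining_seconds, sleepyhead=False, extra_sleep_percent=(100, 140)):
    """Return how long to sleep after a peak is reached.

    Without sleepyhead the wake-up is exactly at the next hour/day.
    With sleepyhead the sleep is stretched to a random 100-140 % of that.
    """
    if not sleepyhead:
        return remaining_seconds
    low, high = extra_sleep_percent
    return remaining_seconds * random.uniform(low, high) / 100


exact = sleep_seconds(1000)                      # wakes up right on time
stretched = sleep_seconds(1000, sleepyhead=True)  # wakes up up to 40 % later
print(exact, stretched)
```

Raising the upper percentage widens the range of possible late wake-ups, which is the adjustment suggested above.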

Or do you want to set a precise wake-up time rather than one based on the time remaining until the next day/hour?

Then just put the time delay you wish into the `return remaining_seconds` expression.

E.g. `return 3600` will mean it will ridiculously sleep 3600 seconds every time a quota peak that is set to sleep after is reached..

If you have any idea about making it more generic (and why), please share your thoughts.

Have a good time,

Shahriyar 🖐🏼


@uluQulu

Hi Shahriyar!! I apologize for the delay in my answer, I hadn't had the time to review your reply until now. Thank you very much for it.

I have chosen option 3 from your examples. Every time the program reaches the peak it will sleep for 3900 sec (65 min). It isn't the definitive solution of moving from static to dynamic timing as Instagram proposes, but this way I can make sure that in any one hour it won't perform more actions than the QS limit. For example, the program starts to follow at 8 am, by 8:20 am it has made 30 follows, reaches its peak and goes to sleep. Instead of waking again at 9 am and making 30 follows in the first 20 minutes (from 8:20 to 9:20 there would be 40 follows in 60 min), with this modification it will wake up at 9:25 and so will never exceed 30 follows per hour.
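The effect of a fixed 65-minute sleep can be checked with a small simulation. The batch timings below are hypothetical and chosen to show the worst case for static wake-ups; `max_in_rolling_window` and `batch` are illustrative helpers, not QS functions.

```python
from datetime import datetime, timedelta


def max_in_rolling_window(stamps, window=timedelta(hours=1)):
    """Largest number of timestamps inside any trailing window of that length."""
    stamps = sorted(stamps)
    best = 0
    left = 0
    for right, current in enumerate(stamps):
        # Advance the left edge until only stamps newer than (current - window) remain.
        while stamps[left] <= current - window:
            left += 1
        best = max(best, right - left + 1)
    return best


def batch(start, count, spacing=timedelta(seconds=40)):
    # `count` actions, evenly spaced, beginning at `start`.
    return [start + i * spacing for i in range(count)]


day = datetime(2019, 5, 14)
first = batch(day.replace(hour=8, minute=40), 30)        # peak reached at 8:59:20

# Static wake-up: the next batch begins right at the next clock hour.
static_wakeup = first + batch(day.replace(hour=9), 30)

# Fixed sleep: the next batch begins 65 minutes after the peak was reached.
fixed_sleep = first + batch(first[-1] + timedelta(minutes=65), 30)

print(max_in_rolling_window(static_wakeup))  # both batches fit in one 60-minute window
print(max_in_rolling_window(fixed_sleep))    # batches are more than 60 minutes apart
```

Because consecutive batches are separated by more than 60 minutes, no 60-minute window can contain actions from two batches, so the rolling count never exceeds the peak.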

I'm not entirely sure if this is correct, but I will try to see how it works.

Greetings and many thanks!

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

If this problem still occurs, please open a new issue

@uluQulu Sorry if I am out of date, but I am curious where we are on this. Is the changed logic in 0.6.0?

hi @ishandutta2007

No, it's still unimplemented. I will try to look at it today or tomorrow.

It is a really urgent change that must be done first.

I will request your views on the PR containing its code. I think we can make it as optimal as possible.

Do you mean you have already posted a PR? Which one is it?

Not yet, @ishandutta2007. I will mention you when I open a PR 💯

