Ingress-nginx: Experiencing issues with ingress controller versions 0.18.0/0.19.0

Is this a BUG REPORT or FEATURE REQUEST? (choose one):

BUG REPORT

NGINX Ingress controller version:

0.18.0/0.19.0

No issues with version 0.17.1.

Kubernetes version (use kubectl version):

1.10.5

Environment:

- Cloud provider or hardware configuration: AWS

- OS (e.g. from /etc/os-release):

- Kernel (e.g. `uname -a`):

- Install tools: kops

- Others:

What happened:

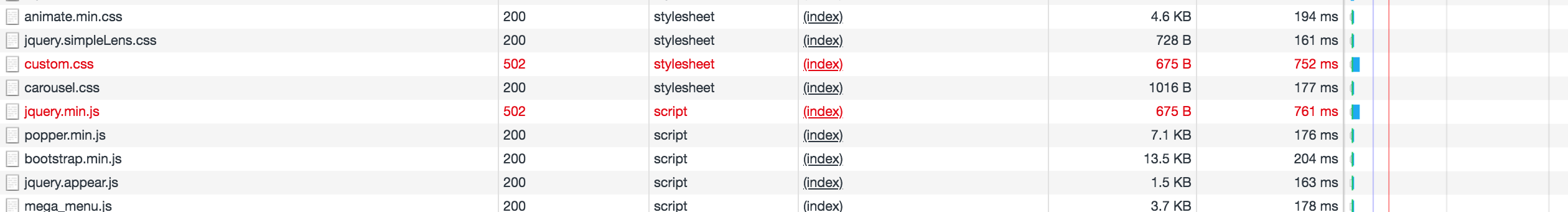

When I updated ingress-nginx to version 0.18.0/0.19.0, I started receiving 502 Bad Gateway errors in the browser console in Chrome, Safari, and Firefox. The site would load, but logos were missing or the CSS would not download.

For testing, I also exposed my nginx deployment directly through a Service of type LoadBalancer and didn't experience any issues.

What you expected to happen:

The site to load properly

How to reproduce it (as minimally and precisely as possible):

Anything else we need to know:

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: nginx
  annotations:
    kubernetes.io/ingress.class: "nginx"
    nginx.ingress.kubernetes.io/proxy-body-size: "20m"
spec:
  tls:
  - hosts:
    - REDACTED
    secretName: REDACTED
  rules:
  - host: REDACTED
    http:
      paths:
      - path: /
        backend:
          servicePort: 80
          serviceName: nginx
```

Could you try v0.19 or v0.18 with `--enable-dynamic-configuration=false` and see whether you can reproduce it?
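For reference, here is a minimal sketch of where that flag would go, assuming the controller runs as a Deployment similar to the project's standard manifests. The container name and image tag are assumptions; only `--enable-dynamic-configuration=false` comes from this thread.

```yaml
# Sketch only: excerpt of the ingress-nginx controller Deployment's pod template.
# Container name and image are assumptions; keep whatever your install already uses.
spec:
  template:
    spec:
      containers:
      - name: nginx-ingress-controller
        image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.19.0
        args:
        - /nginx-ingress-controller
        # ...keep the other args your install already passes...
        # Turn off Lua-based dynamic reconfiguration, so endpoint changes are
        # applied by regenerating nginx.conf and reloading nginx instead.
        - --enable-dynamic-configuration=false
```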

That worked! Thanks a lot. I tested both v0.18 and v0.19.

@JordanP, is there a specific reason you think this could be related to dynamic configuration? I cannot think of one. At this point I'd switch it back on and see whether the 502s recur :)

@ElvinEfendi, I also don't know why dynamic configuration would cause this. I did test it before posting, and when I re-enabled dynamic configuration the issue came back.

I also enabled dynamic configuration on v0.17.1, and the 502 errors appear there too.

@dannyvargas23, can you reproduce it consistently? It would be useful if you could post logs from around the time the 502s happen. Before doing that, please set the nginx log level to `info` (using the `error-log-level` ConfigMap option).
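A minimal sketch of that ConfigMap change. The name `nginx-configuration` and namespace `ingress-nginx` are assumptions taken from the standard manifests; use whichever ConfigMap your controller's `--configmap` flag points at.

```yaml
# Sketch: the ConfigMap the controller reads via its --configmap flag.
# Name and namespace are assumptions; match them to your deployment.
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
data:
  # Raise nginx's error_log level from the default (notice) to info.
  error-log-level: "info"
```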

It would be useful to see your generated Nginx configuration from the pod as well.

Also, do you see any pattern? Does it always happen, or only intermittently? Does it correlate with app deploys or ingress-nginx deploys?

@ElvinEfendi, truth is I just had a big issue in prod yesterday after upgrading to 0.19 with dynamic-conf=true. 0.18 with dynamic-conf=false had been running fine for two to three weeks. In my case, it looked like traffic was sent to non-existent pods (i.e. non-existent endpoints), which caused 502 errors. We are heavy users of autoscaling, and the errors started to show up when the number of nodes decreased. It's as if the ingress controller didn't realize that the nodes had gone down and that the pods on them no longer existed.

I am going to investigate some more, of course.

@JordanP, are you configuring https://kubernetes.github.io/ingress-nginx/user-guide/nginx-configuration/configmap/#proxy-next-upstream? By default only `error` and `timeout` are configured (you need to decide whether you want retries on `http_502`, `http_503`, and `http_504`).
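A sketch of opting into those retries through the same ConfigMap. As above, the ConfigMap name and namespace are assumptions; the value list is the one mentioned in the comment.

```yaml
# Sketch: enable retries on 502/503/504 in addition to the defaults.
# Name and namespace are assumptions; match them to your --configmap flag.
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
data:
  # Default is "error timeout". Adding the http_* conditions makes nginx pass
  # the request to the next upstream server when it receives one of those codes.
  proxy-next-upstream: "error timeout http_502 http_503 http_504"
```

Retries like this can hide stale endpoints rather than fix them. Also note that nginx itself does not retry non-idempotent requests (e.g. POST) on the next upstream unless `non_idempotent` is listed as well.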

Nope, I did not change this config. For more than 10 minutes, traffic was sent not to unhealthy pods but to pods that no longer existed. I am trying to reproduce it.

@JordanP, do you observe this when you scale down the ingress-nginx replicas or the backend app replicas?

What are the related Nginx errors (connect, timeout, etc.) for a given request that results in a 502?

Do you see errors such as "Dynamic reconfiguration failed"?

I am doing some more tests right now. This happened when the backend app replicas went down. I think I'll be able to reproduce it and don't want to bug you too much about it. Let me get back to you with clean and complete logs with `error-log-level: "info"` when the issue arises.

So I tried a lot of different things in my staging environment, but none of them retriggered it. I guess I'll eventually have to give it another try in production, which I am not looking forward to, but I don't see any other way.

@ElvinEfendi, this happens all the time: all I have to do is change the flag to enable dynamic configuration, and the 502 errors start again. I also forgot to mention that I tested with plain HTTP and don't experience the issue there. I will provide the log information later today.

@dannyvargas23, looking at the screenshot you provided in the issue description, it does not happen all the time. Or maybe you mean that random 502s happen all the time, but your screenshot clearly shows some requests succeeding. When you rerun the requests to the failing endpoints, do you get a 502 again, consistently? Is it a different endpoint every time? Or do you only get 502s for certain endpoints?

> I also forgot to mention I tested with plain HTTP and don't experience the issue.

Unfortunately, without logs or a reproducible example I cannot provide any help. I could speculate, but that won't be useful.

So I gave it another try in prod today and reproduced the issue. I can confirm that this happens when the number of upstream servers scales down. The issue started when the number of replicas (actually a DaemonSet) of MicroService1 went from 4 to 3. At that time I had 5 replicas of the NGINX Ingress Controller. About 1 min 30 s after the downscale, 3 of those replicas printed the usual:

```
I0920 18:56:16.204718 8 controller.go:169] Changes handled by the dynamic configuration, skipping backend reload.
I0920 18:56:16.212321 8 controller.go:204] Dynamic reconfiguration succeeded.
```

During that 1 min 30 s window, I got a bunch of these errors in the log:

```
upstream timed out (110: Connection timed out) while connecting to upstream, ... HTTP/1.1", upstream: "http://10.132.0.50:2222/...
```

I am fine with those errors. They come from the way we downscale: we basically kill the node, so K8s takes some time to realize that the node and all its pods are gone.

But the 2 other Nginx replicas never picked up the change and never reloaded or reconfigured, so they kept sending traffic to upstream servers that no longer existed, even 10 minutes after the downscale.

I don't have more logs because when this happens, 1,000 lines per second get printed to stdout, and I configured Stackdriver (Google) not to save those logs because Stackdriver is damn expensive.

This looks like a race condition (only 2 of the 5 Nginx controllers had the issue).

My next step will be to narrow the issue down, because so far I have only upgraded from 0.18 without dynamic conf straight to 0.19 with dynamic conf, changing both at once. I'll try to pinpoint whether it's related to 0.19 or to dynamic conf.

Yeah, so I can't test 0.19 without dynamic conf, because the logs quickly fill up with `monitor.lua:71: call(): omiting metrics for the request, current batch is full while logging request`.

Just to add a bit,

I also have issues with 0.18.0+. After running for some time, the health checks start failing and the controllers are restarted. It also seems that it does not update its configuration properly. I get mysterious 504s quite a lot, or other 5xx errors. After a controller restart, everything is back to normal for a short time.

I think I get a 504 if I kill the underlying pod for a service (which leads to a new pod being created).

P.S. Actually, it seems 0.17.1 also gives me 504s. I think the last good image was 0.16.1; I did not have any issues with it.

Should this issue hold back the deprecation of the ability to disable dynamic configuration in 0.21.0?

I'm starting to have some doubts about whether this is related to dynamic configuration.

Closing. Please update to 0.21.0, and reopen if the issue persists after the upgrade.

I have a similar issue with 0.21.0 installed via Helm. I'll provide more info shortly.

I also had the "Dynamic reconfiguration failed" issue with version 0.21.0 on Azure.
