Incubator-mxnet: asnumpy() on cpu is 80 times slower than gpu

mxnet 1.7.0 latest cu101 and cu101mkl aws p3.2x instance.

code pasted below to reproduce:

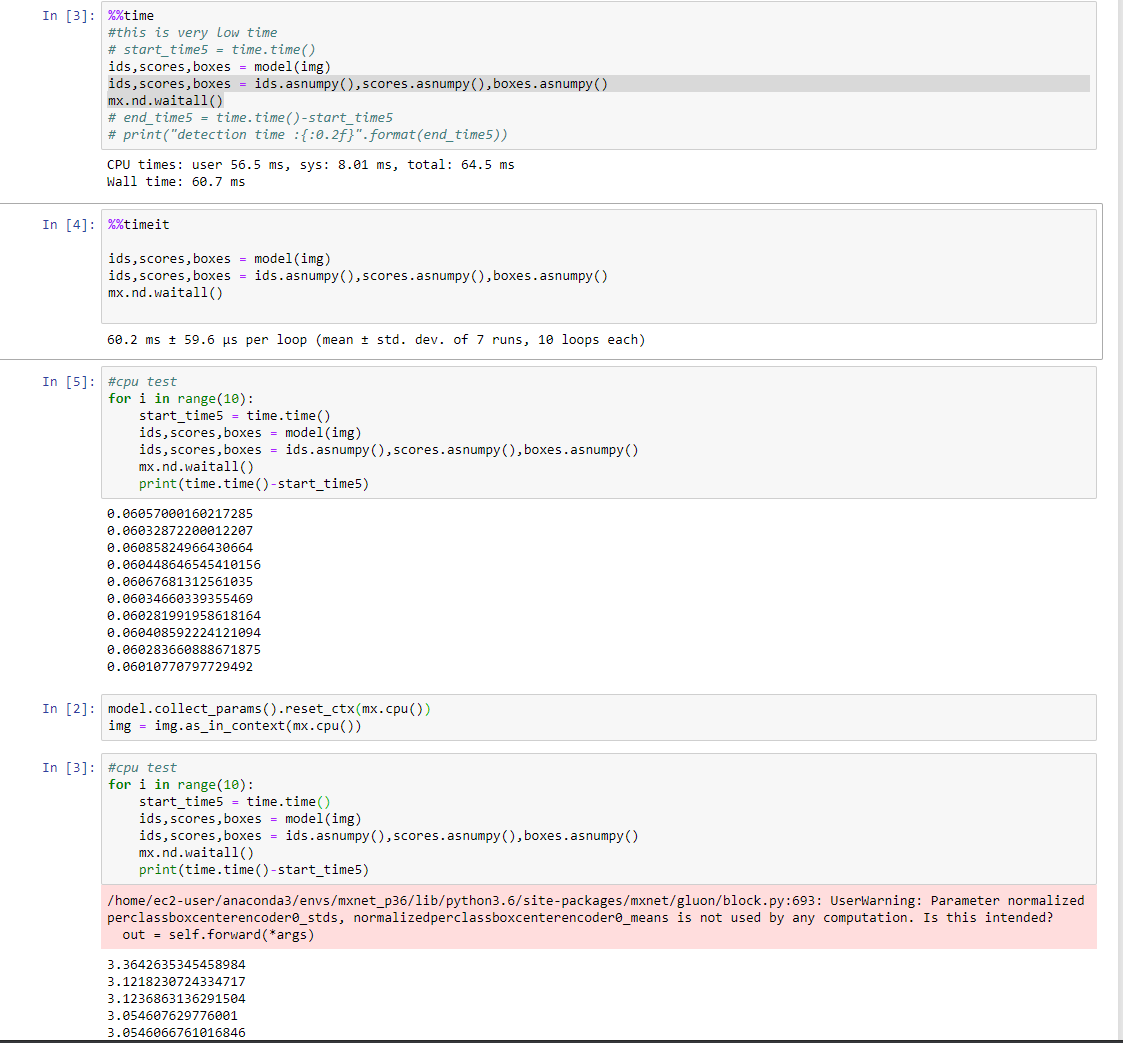

my results :

from mxnet.gluon.model_zoo.vision import get_model

from gluoncv.data.transforms.presets.ssd import transform_test

import mxnet as mx

import numpy as np

import gluoncv

from mxnet.gluon.data.vision import transforms

import cv2

import time

from matplotlib.pyplot import imshow

import glob

categories = ['Person','non-Moving']

# model = self.load_model(best=True)

model = gluoncv.model_zoo.get_model('faster_rcnn_resnet50_v1b_voc')

#print(model)

model.load_parameters('/home/ec2-user/SageMaker/PICV/Segmentation Job/OD/params/faster_rcnn_finalparams.params')

img = mx.random.randn(1,3,608,608,ctx = mx.gpu())

model.collect_params().reset_ctx(mx.gpu())

model.hybridize()

#img,image = transform_test(mx.nd.array(img),short=420, max_size=640)

#warmup

for i in range(10):

ids,scores,boxes = model(img)

ids,scores,boxes = ids.asnumpy(),scores.asnumpy(),boxes.asnumpy()

mx.nd.waitall()

%%time

#this is very low time

# start_time5 = time.time()

ids,scores,boxes = model(img)

ids,scores,boxes = ids.asnumpy(),scores.asnumpy(),boxes.asnumpy()

mx.nd.waitall()

# end_time5 = time.time()-start_time5

# print("detection time :{:0.2f}".format(end_time5))

%%timeit

ids,scores,boxes = model(img)

ids,scores,boxes = ids.asnumpy(),scores.asnumpy(),boxes.asnumpy()

mx.nd.waitall()

#cpu test

for i in range(10):

start_time5 = time.time()

ids,scores,boxes = model(img)

ids,scores,boxes = ids.asnumpy(),scores.asnumpy(),boxes.asnumpy()

mx.nd.waitall()

print(time.time()-start_time5)

model.collect_params().reset_ctx(mx.cpu())

img = img.as_in_context(mx.cpu())

#cpu test

for i in range(10):

start_time5 = time.time()

ids,scores,boxes = model(img)

ids,scores,boxes = ids.asnumpy(),scores.asnumpy(),boxes.asnumpy()

mx.nd.waitall()

print(time.time()-start_time5)

>All comments

Cleaned up the code a bit:

import mxnet as mx

import numpy as np

import gluoncv

import time

# model = self.load_model(best=True)

model = gluoncv.model_zoo.get_model('faster_rcnn_resnet50_v1b_voc',pretrained=True)

img = mx.random.randn(1,3,608,608,ctx = mx.gpu())

model.collect_params().reset_ctx(mx.gpu())

model.hybridize(static_shape=True, static_alloc=True)

#warmup

print('gpu warmup')

for i in range(10):

ids,scores,boxes = model(img)

ids,scores,boxes = ids.asnumpy(),scores.asnumpy(),boxes.asnumpy()

mx.nd.waitall()

#gpu test

for i in range(10):

start_time5 = time.time()

ids,scores,boxes = model(img)

ids,scores,boxes = ids.asnumpy(),scores.asnumpy(),boxes.asnumpy()

mx.nd.waitall()

print('gpu: %4.3f seconds' % (time.time()-start_time5))

model.collect_params().reset_ctx(mx.cpu())

img = img.as_in_context(mx.cpu())

#warmup

print('cpu warmup')

for i in range(10):

ids,scores,boxes = model(img)

ids,scores,boxes = ids.asnumpy(),scores.asnumpy(),boxes.asnumpy()

mx.nd.waitall()

#cpu test

for i in range(10):

start_time5 = time.time()

ids,scores,boxes = model(img)

ids,scores,boxes = ids.asnumpy(),scores.asnumpy(),boxes.asnumpy()

mx.nd.waitall()

print('cpu: %4.3f seconds' % (time.time()-start_time5))

Heres the output I get on P3.2xl with mxnet-cu102 (version 1.7.0):

gpu warmup

[01:37:43] src/operator/nn/./cudnn/./cudnn_algoreg-inl.h:97: Running performance tests to find the best convolution algorithm, this can take a while... (set the environment variable MXNET_CUDNN_AUTOTUNE_DEFAULT to 0 to disable)

gpu: 0.052 seconds

gpu: 0.052 seconds

gpu: 0.052 seconds

gpu: 0.052 seconds

gpu: 0.052 seconds

gpu: 0.052 seconds

gpu: 0.052 seconds

gpu: 0.052 seconds

gpu: 0.052 seconds

gpu: 0.052 seconds

cpu warmup

cpu: 2.827 seconds

cpu: 2.837 seconds

cpu: 2.829 seconds

cpu: 2.826 seconds

cpu: 2.828 seconds

cpu: 2.830 seconds

cpu: 2.826 seconds

cpu: 2.830 seconds

cpu: 2.832 seconds

cpu: 2.824 seconds

I think this performance is expected. This doesnt seem to be extraneous.

Was this page helpful?

0 / 5 - 0 ratings

Most helpful comment

Cleaned up the code a bit:

Heres the output I get on P3.2xl with mxnet-cu102 (version 1.7.0):

I think this performance is expected. This doesnt seem to be extraneous.