Incubator-mxnet: Release newer versions of mxnet-tensorrt on PyPI

The latest version of the TensorRT MXNet integration on PyPI is 1.3.0:

> pip search mxnet

...

mxnet-tensorrt-cu92 (1.3.0) - MXNet Python Package

mxnet-tensorrt-cu90 (1.3.0) - MXNet Python Package

Can this be updated to 1.4.1 and maybe nightly?

Thanks!

All 15 comments

@KellenSunderland you are listed as the owner of the package, could you work with @szha @zhreshold to transfer ownership of the package under the MXNet / MXNet CI ones so we can resume publishing MXNet-TensorRT builds?

@mxnet-label-bot add [pip]

Good callout @ThomasDelteil, I've added permissions to those packages. The 1.4 release didn't have many changes for TRT, so IMO there's not a large feature gap between 1.3 and 1.4 as far as TRT users are concerned.

For the 1.5 release we should do an update; there are a number of improvements that will be included. We'll want to carefully target a CUDA version though. In my testing, the most stable and highest-performing versions have been:

CUDA_VERSION 10.1.105

CUDNN_VERSION 7.6.0.64

TRT_VERSION 5.1.5

So we'd probably shoot for a cu101 release if possible (and cu100 if cu101 isn't targeted for 1.5).

Edit: cu110 -> cu101.

cu110 release

Did you mean cu101?

Right, sorry cu101.
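
For readers unfamiliar with the `cuXY` suffix convention discussed above (cu90, cu92, cu101), here is a minimal sketch of how a CUDA version string maps to a package suffix. The helper names `cuda_suffix` and `package_name` are hypothetical and written only for illustration. Only the cu90 and cu92 packages are confirmed to exist in this thread; a cu101 package name is an assumption about what a future release might be called.

```python
def cuda_suffix(cuda_version: str) -> str:
    """Return the 'cuXY' suffix for a CUDA version such as '10.1.105'.

    Hypothetical helper: it only concatenates the major and minor
    components, which is why '10.1' gives 'cu101' while 'cu110'
    would correspond to CUDA 11.0.
    """
    parts = cuda_version.strip().split(".")
    if len(parts) < 2 or not (parts[0].isdigit() and parts[1].isdigit()):
        raise ValueError(f"expected 'major.minor[.patch]', got {cuda_version!r}")
    major, minor = parts[0], parts[1]
    return f"cu{major}{minor}"


def package_name(cuda_version: str, base: str = "mxnet-tensorrt") -> str:
    """Build a package name following the naming pattern seen on PyPI.

    The result is only a name; it does not check that the package exists.
    """
    return f"{base}-{cuda_suffix(cuda_version)}"


if __name__ == "__main__":
    print(package_name("9.2"))       # mxnet-tensorrt-cu92
    print(package_name("10.1.105"))  # mxnet-tensorrt-cu101
```

This also shows why the cu110 / cu101 mix-up matters: the two suffixes denote different CUDA releases (11.0 versus 10.1).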

Thanks @KellenSunderland! I think there are a number of MXNet optimizations and new features that TensorRT users might benefit from, regardless of changes to the TensorRT features themselves. So I think we should publish this build for every release, for a few CUDA/CUDNN/TensorRT combinations. A Jetson one would be great too.

Hi, any updates to this? I need to use CUDA 10.0+ w/ TensorRT (running Jetson Nano) and there is no ready python package. Compiling from source crashes due to MSHADOW.

Sorry. Haven't been able to get TensorRT integration working with Jetsons thus far.

Any follow-ups?

^ Switched to TensorFlow, although someone on the NVIDIA forum claims to have been able to build MXNet from scratch with the Tensor_RT flag:

Any update on this issue? I am using CUDA 10 with TensorRT. Does MXNet support TensorRT on CUDA 10.0 or 10.1 so far? I can only find a way to use TensorRT with MXNet on CUDA 9. Any advice is appreciated.

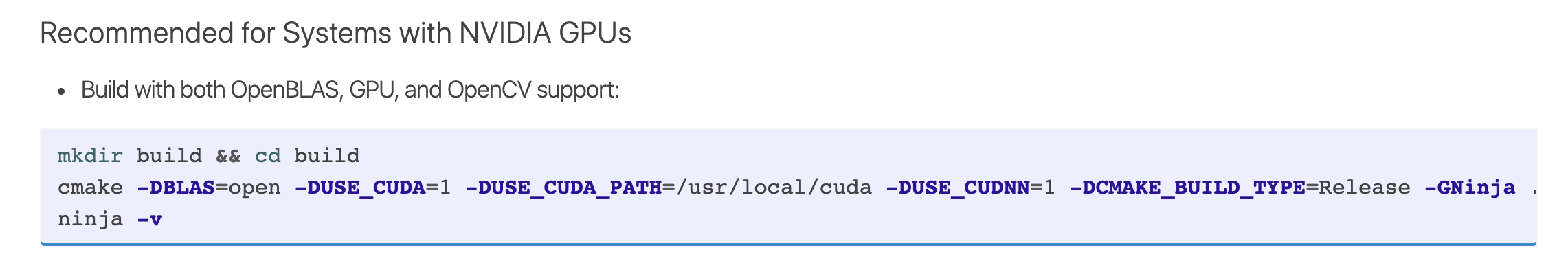

@weidai0903 we plan to add better support in binary releases such as TRT as part of the existing continuous delivery pipelines. For now, would you be able to build it from source? A tutorial can be found at https://mxnet.apache.org/api/python/docs/tutorials/performance/backend/tensorrt/tensorrt

@KellenSunderland what would the added binary size be if we were to include TRT support in the release?

@ptrendx does NVIDIA allow redistributing TRT? What's the license?

@szha In the tutorial you provided, the link to "recommended instructions for building MXNet for NVIDIA GPUs" does not work anymore. Would you please provide another address?

@weidai0903 indeed, the anchor point is outdated and lost. You can find the instruction in one of the usage examples: