Incubator-mxnet: [R] use of mx.io.arrayiter completely crashes R environment

Running the code below causes the entire R environment to terminate. This code used to work on older versions of MXNet (before 1.2.0). The crash seems to be related to mx.io.arrayiter.

Environment info (Required)

R version 3.5.0 (2018-04-23)
Platform: x86_64-w64-mingw32/x64 (64-bit)
Running under: Windows >= 8 x64 (build 9200)

Matrix products: default

locale:
[1] LC_COLLATE=English_United States.1252 LC_CTYPE=English_United States.1252
[3] LC_MONETARY=English_United States.1252 LC_NUMERIC=C
[5] LC_TIME=English_United States.1252

attached base packages:
[1] stats graphics grDevices utils datasets methods base

other attached packages:
[1] mxnet_1.3.0

loaded via a namespace (and not attached):
[1] Rcpp_0.12.16 pillar_1.2.2 compiler_3.5.0 RColorBrewer_1.1-2 influenceR_0.1.0 plyr_1.8.4
[7] bindr_0.1.1 viridis_0.5.1 tools_3.5.0 digest_0.6.15 jsonlite_1.5 viridisLite_0.3.0
[13] tibble_1.4.2 gtable_0.2.0 rgexf_0.15.3 pkgconfig_2.0.1 rlang_0.2.0 igraph_1.2.1
[19] rstudioapi_0.7 yaml_2.1.19 bindrcpp_0.2.2 gridExtra_2.3 downloader_0.4 DiagrammeR_1.0.0
[25] dplyr_0.7.4 stringr_1.3.1 htmlwidgets_1.2 hms_0.4.2 grid_3.5.0 glue_1.2.0
[31] R6_2.2.2 Rook_1.1-1 XML_3.98-1.11 readr_1.1.1 purrr_0.2.4 tidyr_0.8.0
[37] ggplot2_2.2.1 magrittr_1.5 codetools_0.2-15 scales_0.5.0 htmltools_0.3.6 assertthat_0.2.0
[43] colorspace_1.3-2 brew_1.0-6 stringi_1.2.2 visNetwork_2.0.3 lazyeval_0.2.1 munsell_0.4.3

Build info (Required if built from source)

https://github.com/apache/incubator-mxnet/tree/master/R-package

cran <- getOption("repos")
cran["dmlc"] <- "https://apache-mxnet.s3-accelerate.dualstack.amazonaws.com/R/CRAN/"
options(repos = cran)
install.packages("mxnet")

Error Message:

R environment crashes.

Minimum reproducible example

library(mxnet)

data.A = read.csv("./matty_inv/A.csv", header = FALSE)
data.A.2 = read.csv("./matty_inv/A_2.csv", header = FALSE)
data.A <- as.matrix(data.A)
data.A.2 <- as.matrix(data.A.2)
dim(data.A) <- c(3,3,1,10)
dim(data.A.2) <- c(3,3,1,10)

# batch_size and n.rounds are used below but were not defined in the report; assumed values
batch_size <- 1
n.rounds <- 10

train_iter = mx.io.arrayiter(data = data.A,
                             label = data.A.2,
                             batch.size = batch_size)

data <- mx.symbol.Variable('data')
label <- mx.symbol.Variable('label')
conv_1 <- mx.symbol.Convolution(data= data, kernel = c(1,1), num_filter = 4, name="conv_1")
conv_act_1 <- mx.symbol.Activation(data= conv_1, act_type = "relu", name="conv_act_1")
flat <- mx.symbol.flatten(data = conv_act_1, name="flatten")
fcl_1 <- mx.symbol.FullyConnected(data = flat, num_hidden = 9, name="fc_1")
fcl_2 <- mx.symbol.reshape(fcl_1, shape=c(3,3, 1, batch_size))
NN_Model <- mx.symbol.LinearRegressionOutput(data=fcl_2 , label=label, name="lro")

mx.set.seed(99)
autoencoder <- mx.model.FeedForward.create(
  NN_Model, X=train_iter, initializer = mx.init.uniform(0.01),
  ctx=mx.cpu(), num.round=n.rounds, array.batch.size=batch_size,
  learning.rate=8e-3, array.layout = "rowmajor",
  eval.metric = mx.metric.rmse, optimizer = "adam",
  verbose = TRUE)
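The reshape in this network hard-codes `batch_size`, so the iterator's `batch.size` has to agree with it. A base-R sketch of the shape arithmetic layer by layer (no MXNet required; `conv_out()` is a hypothetical helper, shapes are written in the (width, height, channels, batch) order the MXNet R package appears to use, and the convolution is assumed to use the default stride 1 and no padding):

```r
# Hypothetical helper: output length of one spatial dimension of a convolution
conv_out <- function(n, kernel, stride = 1, pad = 0) (n + 2 * pad - kernel) %/% stride + 1

batch_size <- 1  # assumed, to match batch.size = 1 in the iterator

# Shapes in (width, height, channels, batch) order
in_shape   <- c(3, 3, 1, batch_size)                            # data and label
conv_shape <- c(conv_out(3, 1), conv_out(3, 1), 4, batch_size)  # conv_1, num_filter = 4
flat_shape <- c(prod(conv_shape[1:3]), batch_size)              # flatten: 3 * 3 * 4 = 36
fc_shape   <- c(9, batch_size)                                  # fc_1, num_hidden = 9
out_shape  <- c(3, 3, 1, batch_size)                            # reshape target

prod(fc_shape) == prod(out_shape)  # reshape keeps the element count: TRUE
identical(out_shape, in_shape)     # output has the label's shape: TRUE
```

If the iterator were built with a different `batch.size` than the value baked into `shape`, the reshaped output would no longer line up with the batches the iterator delivers, so both should come from the same variable.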


Can you specify where the syntax is wrong? Also, I think it would be better practice for MXNet to throw an error instead of crashing the R environment.

@mxnet-label-bot [R, Bug, Data-loading]

@some-guy1 can you please share a small snapshot of the two data files ./matty_inv/A.csv and ./matty_inv/A_2.csv?

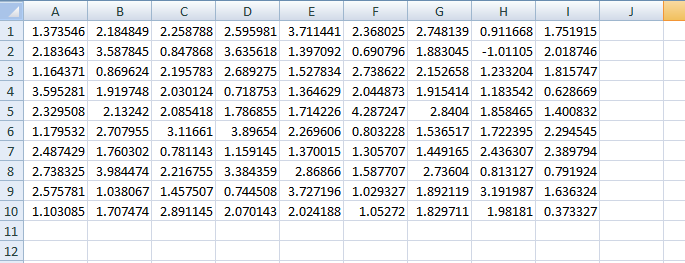

The csv files are identical:

I can confirm that running

train_iter$reset()
train_iter$iter.next()
train_iter$value()

returns the expected result:

$`data`
, , 1, 1

         [,1]     [,2]     [,3]
[1,] 1.373546 3.595281 2.487429
[2,] 2.183643 2.329508 2.738325
[3,] 1.164371 1.179532 2.575781

$label
, , 1, 1

         [,1]     [,2]     [,3]
[1,] 1.373546 3.595281 2.487429
[2,] 2.183643 2.329508 2.738325
[3,] 1.164371 1.179532 2.575781
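The `, , 1, 1` headers show that the iterator treats the last dimension as the sample axis, and `dim<-` in the reproducer fills that 4-D array from the matrix's column-major storage without transposing anything. A base-R sketch of both points (no MXNet required; `m` is a stand-in for the CSV contents, and `batch_at()` is a hypothetical helper that only mimics the slicing, not `mx.io.arrayiter`'s actual code, ignoring shuffling and partial final batches):

```r
# Stand-in for as.matrix(read.csv(...)): 90 values, 10 rows x 9 columns (assumed layout)
m <- matrix(seq_len(90), nrow = 10, ncol = 9)
x <- m
dim(x) <- c(3, 3, 1, 10)  # same call as in the reproducer; no transposition happens

# Hypothetical helper: batch b of size n, sliced along the last (sample) dimension
batch_at <- function(x, b, n) {
  idx <- ((b - 1) * n + 1):(b * n)
  x[, , , idx, drop = FALSE]
}

first <- batch_at(x, 1, 1)
dim(first)  # 3 3 1 1, which R prints with the ", , 1, 1" header seen above

# Sample 1 is the first 9 stored values of m, filled column by column
all.equal(first[, , 1, 1], matrix(as.vector(m)[1:9], nrow = 3))  # TRUE

dim(batch_at(x, 2, 5))  # 3 3 1 5: samples 6 to 10
```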

So I have no idea why the entire R environment crashes when I start training. MXNet reports that training has started, then everything crashes.

I think the issue comes from the evaluation metric. The code below includes a modified RMSE metric that flattens the prediction and label arrays. I think it's a bug that the eval metric fails when the predictions are not flat; I'll open a PR to get it fixed.

library(mxnet)

data.A <- mx.nd.random.normal(shape = c(3,3,1,10))
data.A.2 <- mx.nd.random.normal(shape = c(3,3,1,10))
batch_size <- 5

train_iter = mx.io.arrayiter(data = as.array(data.A),
                             label = as.array(data.A.2),
                             batch.size = batch_size)

data <- mx.symbol.Variable('data')
label <- mx.symbol.Variable('label')
conv_1 <- mx.symbol.Convolution(data= data, kernel = c(1,1), num_filter = 4, name="conv_1")
conv_act_1 <- mx.symbol.Activation(data= conv_1, act_type = "relu", name="conv_act_1")
flat <- mx.symbol.flatten(data = conv_act_1, name="flatten")
fcl_1 <- mx.symbol.FullyConnected(data = flat, num_hidden = 9, name="fc_1")
fcl_2 <- mx.symbol.reshape(fcl_1, shape=c(3, 3, 1, batch_size))
NN_Model <- mx.symbol.LinearRegressionOutput(data=fcl_2 , label=label, name="lro")

# Check the output shapes before training
fcl_2$infer.shape(list(data = c(3,3,1,batch_size)))
NN_Model$infer.shape(list(data = c(3,3,1,batch_size)))

# RMSE metric that flattens pred and label before comparing them
mx.metric.rmse <- mx.metric.custom("rmse", function(label, pred) {
  pred <- mx.nd.reshape(pred, shape = -1)
  label <- mx.nd.reshape(label, shape = -1)
  res <- mx.nd.sqrt(mx.nd.mean(mx.nd.square(label-pred)))
  return(as.array(res))
})

mx.set.seed(99)
autoencoder <- mx.model.FeedForward.create(
  NN_Model,
  X = train_iter,
  initializer = mx.init.uniform(0.01),
  ctx=mx.cpu(),
  num.round=5,
  eval.metric = mx.metric.rmse,
  optimizer = mx.opt.create("sgd"),
  verbose = TRUE)
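To sanity-check the number the custom metric reports, the same quantity (RMSE over every element, whatever the array shape) can be computed in plain R on arrays pulled out with `as.array()`. A minimal sketch, with a hypothetical `rmse_flat()` and made-up constant arrays standing in for real labels and predictions:

```r
# Hypothetical reference: RMSE over all elements, flattening both arrays first
rmse_flat <- function(y_true, y_pred) {
  stopifnot(length(y_true) == length(y_pred))
  sqrt(mean((as.vector(y_true) - as.vector(y_pred))^2))
}

# Made-up batch shaped like the network output: 3 x 3 x 1 x batch_size (5)
y_true <- array(1, dim = c(3, 3, 1, 5))
y_pred <- array(3, dim = c(3, 3, 1, 5))
rmse_flat(y_true, y_pred)  # every element is off by 2, so the result is 2
```

The names `y_true` and `y_pred` are chosen so the sketch does not overwrite the `label` symbol defined in the script.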

Thank you for the explanation!

@jeremiedb Were you able to open a PR for this?

If yes, can you link the PR to this issue so that it can be tracked?

@piyushghai Thanks for the reminder, I just opened the PR.

@some-guy1 The PR fixing this got merged. Can you verify and close this issue if the bug is fixed?

Thanks!

@sandeep-krishnamurthy The related PR is merged. The new R-package will include this fix when we release the new binaries of R along with the next MXNet release.

Please close this issue.

@some-guy1 Please feel free to re-open if closed in error. In case you want to instantly verify the fix, you can follow the instructions to build the R-package from source here : https://mxnet.incubator.apache.org/install/index.html?platform=Linux&language=R&processor=CPU
