Home: Searching in PMUI with Static / Sleet package sources causes OutOfMemoryException

Details about Problem

While using the PM UI and searching for a package, I received multiple 'System.OutOfMemoryException'. Discussing with @loic-sharma and @rrelyea, it seems we may need to discuss an approach to handling sleet / static sources in a better way.

Related past discussion: https://github.com/NuGet/Home/issues/7031

JSON Performance discussion: https://github.com/NuGetPackageExplorer/NuGetPackageExplorer/issues/804

NuGet product used: VS UI

VS version (if appropriate): 16.3.0 Preview 3.0

Worked before? If so, with which NuGet version: Related to new static sources.

Detailed repro steps so we can see the same problem

Create a new ASP.NET Core Web Application.

Drop a new NuGet.Config file in the solution directory with the following sources:

<packageSources>

<clear />

<add key="dotnet-core" value="https://dotnetfeed.blob.core.windows.net/dotnet-core/index.json" />

<add key="extensions" value="https://dotnetfeed.blob.core.windows.net/aspnet-extensions/index.json" />

<add key="entityframeworkcore" value="https://dotnetfeed.blob.core.windows.net/aspnet-entityframeworkcore/index.json" />

<add key="entityframework6" value="https://dotnetfeed.blob.core.windows.net/aspnet-entityframework6/index.json" />

<add key="aspnetcore-tooling" value="https://dotnetfeed.blob.core.windows.net/aspnet-aspnetcore-tooling/index.json" />

<add key="aspnetcore" value="https://dotnetfeed.blob.core.windows.net/aspnet-aspnetcore/index.json" />

<add key="aspnet-blazor" value="https://dotnetfeed.blob.core.windows.net/aspnet-blazor/index.json" />

<add key="NuGet.org" value="https://api.nuget.org/v3/index.json" />

</packageSources>

<disabledPackageSources>

<clear />

</disabledPackageSources>

- Open the

Manage NuGet PackagesUI - Search for various packages. It should take 3-5 attempts to recreate the problem. Example: Microsoft.EntityFramework.X", where X is changed to various characters.

- In the Output window, with "Show output from: Package Manager", you'll see exceptions similar to the following (could be 1 or more of these):

[aspnetcore] Exception of type 'System.OutOfMemoryException' was thrown. [dotnet-core] Exception of type 'System.OutOfMemoryException' was thrown. [dotnet-core] Exception of type 'System.OutOfMemoryException' was thrown. [dotnet-core] Exception of type 'System.OutOfMemoryException' was thrown. [dotnet-core] Exception of type 'System.OutOfMemoryException' was thrown. [aspnetcore] Exception of type 'System.OutOfMemoryException' was thrown.

All 18 comments

A simple approach spoken about before would be a new protocol just for package IDs. Listed state is a question.

https://github.com/NuGet/NuGetGallery/issues/3878

Do we know from telemetry how frequent this OOM is?

We should also consider optimizing our protocol libraries a bit. There are some low hanging fruits, like @304NotModified's fix: https://github.com/NuGetPackageExplorer/NuGetPackageExplorer/issues/804#issuecomment-520614388

Yeah that's the best approach! I did some investigating with Ashish ages ago and found out just how bad keeping JObject around (when you don't have to) is.

I looked into the code a bit. The RawSearchResourceV3 seems to only used by PackageSearchResourceV3 so it would be pretty easy to change the implementation to skip JObject entirely (or add an overload if we're concerned about breaking changes in this area).

Does anyone know why we even have the extra level of abstraction introduced by RawSearchResourceV3? Why not just make PackageSearchResourceV3 do the HTTP calls and deserialize right to models and skip JToken/JObject/... entirely?

This JObject shenanigans has been itching the back of my mind so I prototyped an implementation that does not break APIs... ish. Basically, I introduce an internal overload that client uses and leaves the JObject one as-is: https://github.com/NuGet/NuGet.Client/tree/dev-jver-nojobj

I ran some benchmarks on it with search take values 0, 100, 200, ..., 1000. This is not as much data as Sleet (which is unbounded take) but it should demonstrate the trajectory.

If we just deserialize right to List<PackageSearchMetadata> (no JObject in between) and leave the rest of the code the same, things run a lot faster.

BenchmarkDotNet=v0.11.5, OS=Windows 10.0.18362

Intel Core i7 CPU 920 2.67GHz (Nehalem), 1 CPU, 8 logical and 4 physical cores

[Host] : .NET Framework 4.7.2 (CLR 4.0.30319.42000), 32bit LegacyJIT-v4.8.4010.0

DefaultJob : .NET Framework 4.7.2 (CLR 4.0.30319.42000), 32bit LegacyJIT-v4.8.4010.0

Method | Take | Mean (us) | Mean (New / Old) | Allocated (KB) | Allocated (New / Old)

------ | ---- | ------------ | ---------------- | -------------- | ---------------------

Old | 100 | 116811 | - | 15449 | -

New | 100 | 62799 | 54% | 11245 | 73%

Old | 200 | 198516 | - | 28699 | -

New | 200 | 119027 | 60% | 21023 | 73%

Old | 300 | 316804 | - | 43561 | -

New | 300 | 177408 | 56% | 32502 | 75%

Old | 400 | 497178 | - | 60927 | -

New | 400 | 259972 | 52% | 44462 | 73%

Old | 500 | 637152 | - | 76293 | -

New | 500 | 332440 | 52% | 55076 | 72%

Old | 600 | 790286 | - | 96292 | -

New | 600 | 393473 | 50% | 71387 | 74%

Old | 700 | 912083 | - | 109487 | -

New | 700 | 451150 | 49% | 80330 | 73%

Old | 800 | 990259 | - | 117465 | -

New | 800 | 494789 | 50% | 85825 | 73%

Old | 900 | 1080035 | - | 128105 | -

New | 900 | 544532 | 50% | 93144 | 73%

Old | 1000 | 1191269 | - | 137723 | -

New | 1000 | 609990 | 51% | 99813 | 72%

The allocation and runtime can be improved even more by:

- Reconsider the necessity of buffering the entire response into memory. Intuitively some buffer would be good but buffering the whole response in unnecessary for a streaming deserializer like

JsonTextReader+StreamReader. Source:StreamExtensions.cs - Don't clone

PackageSearchMetadataintoClonedPackageSearchMetadata. Source:PackageSearchResourceV3.cs

All of these things can be changed as hidden implementation details of PackageSearchResourceV3 and RawSearchResourceV3. No need to break existing users.

Note that my current test implementation _does_ have some breaking behavior changes as demonstrated by some unit tests I added. It fails when the data property in the response is not an array or is a heterogeneous array. The existing implementation is more permissive. This could existing behavior be matched by switching to an incremental parsing method involving JsonTextReader.Read() etc.

I just folded an equivalent scenario into this issue: https://github.com/NuGet/Home/issues/8557.

It's not out of memory, but it takes forever :)

First of all this nuget server (dotnetfeed.blob) is rogue ignoring our skip, take parameters, always returns everything it has regardless of query parameters. So that response is always more than 100MB.

- So after more investigation I found main problem is when trying to read more than 100MB in http stream application it overflows memory constraint during json parsing. It seems there is limit around 200MB limit, don't know where it's set for now. 100MB read stream buffer + 100MB json parse >=200MB triggers exception. My solution would be we put hard stop maybe around 20MB then stop reading http stream then only process first 20MB, otherwise some rogue server can always cause this kind of problem simply returning 1TB json data due to some fault on server side. Since having hard stop also bring other issues how to trim and close json stream properly. I'm working on different ideas how to do it.

- Also we need to improve Json parsing performance but it's 2ndary problem compare to previous one. It also can be done 2-3 different ways, so I need to check which one is most performant after first problem is tackled.

First of all this nuget server (dotnetfeed.blob) is rogue ignoring our skip, take parameters, always returns everything it has regardless of query parameters. So that response is always more than 100MB.

You are correct. That feed is backed by Sleet, which is a static feed, meaning it won't apply any search logic, see code;

https://github.com/emgarten/Sleet/blob/9905ed916bc404e5cae48ce48aa931e0e0c9e17c/src/SleetLib/Services/Search.cs#L13

cc @emgarten

@erdembayar

Alternatively, you maybe just try to read the number of entries we expect, so just parse take number of entries.

@nkolev92 How do we read exact number of entries from raw stream?

As I see current response is stream? It's kind of tricky, if someone skip 10000 items then take 20 item. Unless you go through all first 10000 items we don't know where that 20 items we want starts. We can't jump to position so always have to read memory stream whether we like it or not.

Maybe we can do real time processing as reading raw stream. But problem is I have to deal with unicode(non-english) text descriptions which can be complicated. Of course it can be done just don't want to go down most complicated path for now. Even then we have to have some kind of hard stop, can't read stream 10 min to find result.

For now most straight forward solution would be just put hard stop if stream goes above 20MB then try process.

As I see current response is stream? It's kind of tricky, if someone skip 10000 items then take 20 item. Unless you go through all first 10000 items we don't know where that 20 items we want starts. We can't jump to position so always have to read memory stream whether we like it or not.

Maybe we can do real time processing as reading raw stream. But problem is I have to deal with unicode(non-english) text descriptions which can be complicated. Of course it can be done just don't want to go down most complicated path for now. Even then we have some kind of hard stop, can't read stream 10 min to find result.

For now most straight forward solution would be just put hard stop if stream goes above 20MB then try process.

skip & take are server params.

The client has no way to validate that the server correctly applied the skip.

But we can validate the take.

You can use JsonReader instead of converting to JObject which needs the complete response.

cc @zivkan for ideas as I think he's looked into this before.

Alternatively, you maybe just try to read the number of entries we expect, so just parse take number of entries.

@nkolev92 Yes, we real time json processing as we read http stream but might have to deal with international text(like japanese, arabic) make sure it's parsed correctly. Because 1 japanese letter can consists of more than 1 byte so during stream reading we might read only first byte of character but rest is coming with next read then have to make sure we're not breaking text. To prevent such problem I need more test data with international texts. Do you know any nuget package uses japanese, arabic text in description?

I'm start implementing benchmarking on my local branch to test if there are really performance difference for different implementations.

Also I'm going to write manual parsing instead of using any library, maybe it could be much faster. Only benchmarking will tell us which one is faster.

I don't think you need read byte by byte, you can use a JsonReader to parse incrementally.

The search response is json, it's something along the lines of:

{

"@context": {

"@vocab": "http://schema.nuget.org/schema#",

"@base": "https://api.nuget.org/v3/registration5-gz-semver2/"

},

"totalHits": 15488,

"data": []

You can use the fact that the response needs to be json: https://azuresearch-usnc.nuget.org/query?q=nuget.common&skip=0&take=5&prerelease=true&semVerLevel=2.0.0.

Also I'm going to write manual parsing instead of using any library, maybe it could be much faster. Only benchmarking will tell us which one is faster.

I'd caution against this.

You can use JsonReader instead of converting to JObject which needs the complete response.

cc @zivkan for ideas as I think he's looked into this before.

What I wanted to implement, if I had time to work on this myself, was to get rid of JObject completely out of the codepath. Use JsonReader to be read the stream without buffering the whole thing in memory, and implement a custom converter for the search response that simply stops reading once the expected number of responses are read.

Last year I started writing little benchmarks to compare Newtonsoft.Json vs System.Text.Json for nuget workloads: https://github.com/zivkan/Benchmarks/tree/master/JsonParsingBenchmark

I didn't implement stopping reading after x results were deserialized, but I did write custom converts, which could help with inspiration on how to do more efficient json deserialization.

My proposal means that reading static feeds, such as sleet's, will be very broken (the same 25 results will be returned every search, never getting other packages in the feed), but given that the search service is supposed to be dynamic, I consider this a "bug" in the server. Given this server is static, then the server is "broken" by design, and I don't think NuGet client should be responsible for working around the server's technical limitations.

My proposal means that reading static feeds, such as sleet's, will be very broken (the same 25 results will be returned every search, never getting other packages in the feed), but given that the search service is supposed to be dynamic, I consider this a "bug" in the server. Given this server is static, then the server is "broken" by design, and I don't think NuGet client should be responsible for working around the server's technical limitations.

I agree. :)

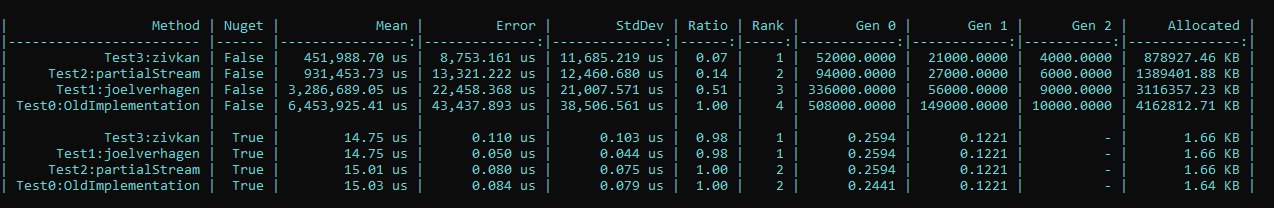

I looked through all approaches here and tried to put them in same project see how they fare against each other. Please note: It's reading from 2 local json files (First one is 141 MB large blob, 2nd one is 184k small one) so reading from network overhead is not included here. I believe we may need to work next time on how to make faster on initial overhead to make search.

Definitely we have winner, I'll retain approach inspired by @zivkan and remove others. :)

As you can see for large input speed gain was 14 times faster than previous solution and memory footprint is 5 times less according to benchmark.

But we don't gain that much on small inputs, cost of initialize overhead will weigh over it, there are almost same.

I created pull request today. https://github.com/NuGet/NuGet.Client/pull/3406

Please do review when you have time. @zivkan @nkolev92

Thanks @erdembayar.

Excited you've made some great progress.

@zivkan @nkolev92 I addressed code review comments. Can you review again?

Most helpful comment

I looked through all approaches here and tried to put them in same project see how they fare against each other. Please note: It's reading from 2 local json files (First one is 141 MB large blob, 2nd one is 184k small one) so reading from network overhead is not included here. I believe we may need to work next time on how to make faster on initial overhead to make search.

Definitely we have winner, I'll retain approach inspired by @zivkan and remove others. :)

As you can see for large input speed gain was 14 times faster than previous solution and memory footprint is 5 times less according to benchmark.

But we don't gain that much on small inputs, cost of initialize overhead will weigh over it, there are almost same.