Graphql-engine: Memory usage accumulating over time until crash / restart

Since installing Hasura beta 9 (along with other updates to our system), we have seen memory usage increase gradually over time. We are hosted on Heroku, and until yesterday the daily dyno restart may have been saving us.

Yesterday, however, we had enough heavy queries to max out our memory during the day, and a manual restart was required. Heavy queries do seem to be responsible for the behaviour.

Today, we are already at 3 times our normal memory consumption.

Please advise what to do!

This seems related to issue #2794.


We are seeing the same behavior after upgrading from beta-6 to beta-10. We are running Docker on Portainer. The memory seems to grow until the containers crash.

Can you check if this issue persists after setting the env variable HASURA_GRAPHQL_QUERY_PLAN_CACHE_SIZE to a value like 100? You'll have to upgrade to v1.0.0-rc.1.
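As we understand it, `HASURA_GRAPHQL_QUERY_PLAN_CACHE_SIZE` caps how many query plans the engine keeps in memory. The Python sketch below is a conceptual illustration only, not Hasura's implementation: a size-bounded LRU cache whose entry count, and therefore the memory it holds, cannot exceed a fixed limit however many distinct queries arrive. The class name `BoundedLRUCache` is hypothetical.

```python
from collections import OrderedDict
from typing import Generic, Hashable, Optional, TypeVar

K = TypeVar("K", bound=Hashable)
V = TypeVar("V")


class BoundedLRUCache(Generic[K, V]):
    """Hypothetical cache that keeps at most `max_size` entries (LRU eviction)."""

    def __init__(self, max_size: int) -> None:
        if max_size < 1:
            raise ValueError("max_size must be at least 1")
        self.max_size = max_size
        self._entries: "OrderedDict[K, V]" = OrderedDict()

    def get(self, key: K) -> Optional[V]:
        if key not in self._entries:
            return None
        # Mark the entry as most recently used.
        self._entries.move_to_end(key)
        return self._entries[key]

    def put(self, key: K, value: V) -> None:
        if key in self._entries:
            self._entries.move_to_end(key)
        self._entries[key] = value
        # Evict the least recently used entries once the cap is exceeded,
        # so the cache cannot grow without bound.
        while len(self._entries) > self.max_size:
            self._entries.popitem(last=False)

    def __len__(self) -> int:
        return len(self._entries)
```

With `max_size=100`, inserting 1,000 distinct query strings leaves only the 100 most recently used entries. If memory keeps growing with such a cap in place, the growth is likely coming from somewhere other than a cache of this kind.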

I'm seeing the same issue at the moment in a single-node k3s cluster (with 8GB RAM). It's a service we just set up, and it hasn't seen much traffic yet. Until now I ran a single replica without a memory limit, because I didn't think I needed to worry.

Today we are seeing some traffic (2 to 3 requests per second, mostly mutations) because it's the time of the year for this service, and it took about 3 hours for the pod to consume all of the node's memory. It happened again after I restarted everything.

Now I've scaled the service up to 4 replicas and set the memory limit to 1200Mi, and the OOM killer kills a pod roughly every 15 minutes. There's no service disruption, since Kubernetes handles this fine, but I'm wondering what the cause could be. I'm not ruling out stupidity on my part; quite possibly I overlooked something.

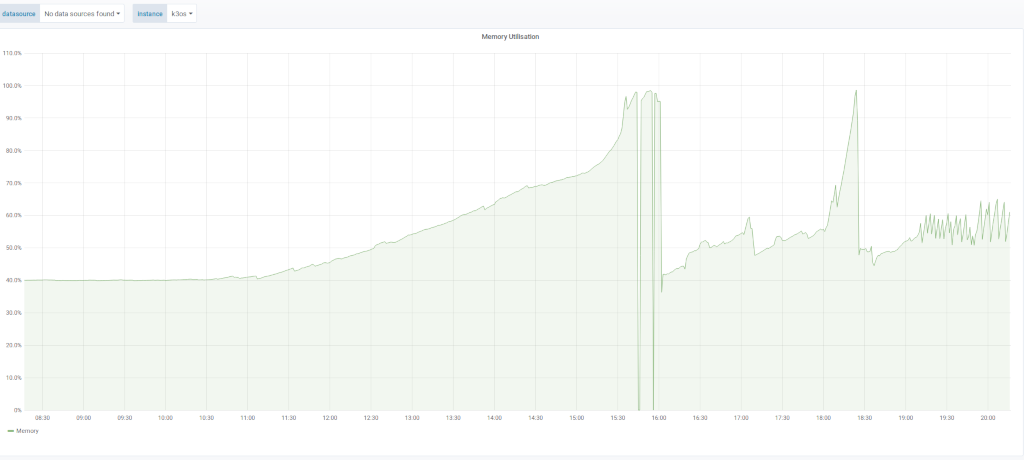

Here's what the memory consumption of the node in question looked like (2 times eating all the nodes memory, and the spikes at the end indicate the OOMKiller kicking in):
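To get numbers rather than a graph when reproducing this, one option on Linux is to sample the resident memory of the graphql-engine process from `/proc/<pid>/status` and check whether the baseline keeps rising. This is a hypothetical helper sketch: the function names, the 4-sample minimum and the 1.5x threshold are assumptions, and inside a container you need the PID of the engine process as seen from wherever the script runs.

```python
import time
from pathlib import Path
from typing import List


def parse_vm_rss_kib(status_text: str) -> int:
    """Extract the resident set size (VmRSS, in kB) from /proc/<pid>/status text."""
    for line in status_text.splitlines():
        if line.startswith("VmRSS:"):
            # The line looks like "VmRSS:\t  123456 kB".
            return int(line.split()[1])
    raise ValueError("no VmRSS line found")


def sample_rss_kib(pid: int, samples: int, interval_s: float) -> List[int]:
    """Read the RSS of `pid` `samples` times, `interval_s` seconds apart (Linux only)."""
    readings = []
    for i in range(samples):
        status = Path(f"/proc/{pid}/status").read_text()
        readings.append(parse_vm_rss_kib(status))
        if i < samples - 1:
            time.sleep(interval_s)
    return readings


def baseline_is_rising(readings: List[int], min_growth_ratio: float = 1.5) -> bool:
    """Heuristic: the lowest reading in the second half of the window is at least
    `min_growth_ratio` times the lowest reading in the first half. Comparing minima
    instead of single samples ignores short-lived spikes (e.g. between GC cycles)."""
    if len(readings) < 4:
        return False
    half = len(readings) // 2
    return min(readings[half:]) >= min(readings[:half]) * min_growth_ratio
```

For example, `baseline_is_rising(sample_rss_kib(pid, samples=60, interval_s=60))` would watch one process for about an hour.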

I tried the HASURA_GRAPHQL_QUERY_PLAN_CACHE_SIZE setting (to 100), as suggested above, but that didn't seem to have any impact.

@makkus thanks for the information. Can you confirm the version of graphql-engine you're using?

@jberryman, sure, I'm using this Docker image: `hasura/graphql-engine:v1.0.0.cli-migrations`

I'm planning to set up a test case to reproduce this under lab conditions, but haven't had the time yet.

@woba-nwa @steelerc69 @makkus

Do you mind providing a bit more info to help us reproduce this?

- are you doing mostly queries or mutations? (makkus mentions mutations)

- are you using subscription queries?

- are you using the http or websockets transport?

- do you keep long running console sessions open?

Thanks!

Hiya, sorry it took me a while. I still intend to create a small test setup to have better data, but didn't get around to it yet. Here's what I remember from when I had the problem:

* are you doing mostly queries or mutations? (makkus mentions mutations)

Mostly mutations. We had about 15 people scanning barcodes at the same time, and every scanned barcode was saved (separately) into a Postgres table via a Python proxy web service, which in turn talked to Hasura. I reckon there were about 1 to 2 scan events per second on average.

* are you using subscription queries?

Yes. While the barcode scans were going on, there were about 2 separate subscription clients monitoring the progress via a web interface.

* are you using the http or websockets transport?

WebSockets for the subscriptions.

* do you keep long running console sessions open?

Don't think I did at the time.
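The workload described above (mutations at one to two per second, sent from a Python service) could be approximated with a small load generator for a lab reproduction. This sketch uses only the Python standard library and posts to Hasura's `/v1/graphql` endpoint; the `scans` table, its `barcode` column, the rate and the optional admin-secret header are assumptions to adapt. It does not reproduce the two WebSocket subscription clients, which would need a separate client.

```python
import json
import time
import urllib.request
from typing import Callable, Dict, Optional

# Hypothetical table "scans" with a "barcode" column; Hasura generates an
# insert_<table> mutation for each tracked table.
MUTATION = """
mutation InsertScan($barcode: String!) {
  insert_scans(objects: [{barcode: $barcode}]) {
    affected_rows
  }
}
"""


def build_payload(barcode: str) -> Dict[str, object]:
    """Build a GraphQL request body for one scan event."""
    return {"query": MUTATION, "variables": {"barcode": barcode}}


def post_graphql(url: str, payload: Dict[str, object],
                 admin_secret: Optional[str] = None) -> dict:
    """POST one GraphQL request and return the decoded JSON response."""
    headers = {"Content-Type": "application/json"}
    if admin_secret:
        headers["x-hasura-admin-secret"] = admin_secret
    request = urllib.request.Request(
        url, data=json.dumps(payload).encode("utf-8"), headers=headers, method="POST"
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


def run_load(send: Callable[[Dict[str, object]], object], rate_per_s: float,
             total: int, sleep: Callable[[float], None] = time.sleep) -> int:
    """Send `total` mutations, pausing 1/rate_per_s seconds after each one."""
    interval = 1.0 / rate_per_s
    for i in range(total):
        send(build_payload(f"BARCODE-{i:06d}"))
        sleep(interval)
    return total
```

For example, `run_load(lambda p: post_graphql("http://localhost:8080/v1/graphql", p), rate_per_s=2, total=7200)` sends 7,200 inserts, which takes at least an hour at that rate. The `sleep` parameter is injectable so the pacing can be checked without actually waiting.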

@woba-nwa @makkus @steelerc69 We've released v1.1.1 which should fix this, can you please try it out and let us know?

@0x777 Sorry, I just saw this notification. We are currently up to date with the latest releases of the engine and are NOT seeing this behavior like we were before. Thanks!