Go: sync: concurrent map iteration and map write on Map error

Please answer these questions before submitting your issue. Thanks!

What version of Go are you using (go version)?

go version go1.10 darwin/amd64

Does this issue reproduce with the latest release?

Yes

What operating system and processor architecture are you using (go env)?

go version go1.10 darwin/amd64

ZShens-MacBook-Pro:network2 zshen$ go env

GOARCH="amd64"

GOBIN=""

GOCACHE="/Users/zshen/Library/Caches/go-build"

GOEXE=""

GOHOSTARCH="amd64"

GOHOSTOS="darwin"

GOOS="darwin"

GOPATH="/Users/zshen/Workspace/go"

GORACE=""

GOROOT="/usr/local/Cellar/go/1.10/libexec"

GOTMPDIR=""

GOTOOLDIR="/usr/local/Cellar/go/1.10/libexec/pkg/tool/darwin_amd64"

GCCGO="gccgo"

CC="clang"

CXX="clang++"

CGO_ENABLED="1"

CGO_CFLAGS="-g -O2"

CGO_CPPFLAGS=""

CGO_CXXFLAGS="-g -O2"

CGO_FFLAGS="-g -O2"

CGO_LDFLAGS="-g -O2"

PKG_CONFIG="pkg-config"

GOGCCFLAGS="-fPIC -m64 -pthread -fno-caret-diagnostics -Qunused-arguments -fmessage-length=0 -fdebug-prefix-map=/var/folders/k4/tn3jnym94dq51ghd4krhvgfr0000gn/T/go-build339950240=/tmp/go-build -gno-record-gcc-switches -fno-common"

What did you do?

Modify and read sync.Map concurrently

What did you expect to see?

Work fine

What did you see instead?

fatal error: concurrent map iteration and map write

goroutine 223 [running]:

runtime.throw(0x153d054, 0x26)

/usr/local/Cellar/go/1.10/libexec/src/runtime/panic.go:619 +0x81 fp=0xc420643750 sp=0xc420643730 pc=0x102cf71

runtime.mapiternext(0xc420643870)

/usr/local/Cellar/go/1.10/libexec/src/runtime/hashmap.go:747 +0x55c fp=0xc4206437e0 sp=0xc420643750 pc=0x100aeec

runtime.mapiterinit(0x1489e60, 0xc4202dee70, 0xc420643870)

/usr/local/Cellar/go/1.10/libexec/src/runtime/hashmap.go:737 +0x1f1 fp=0xc420643808 sp=0xc4206437e0 pc=0x100a8a1

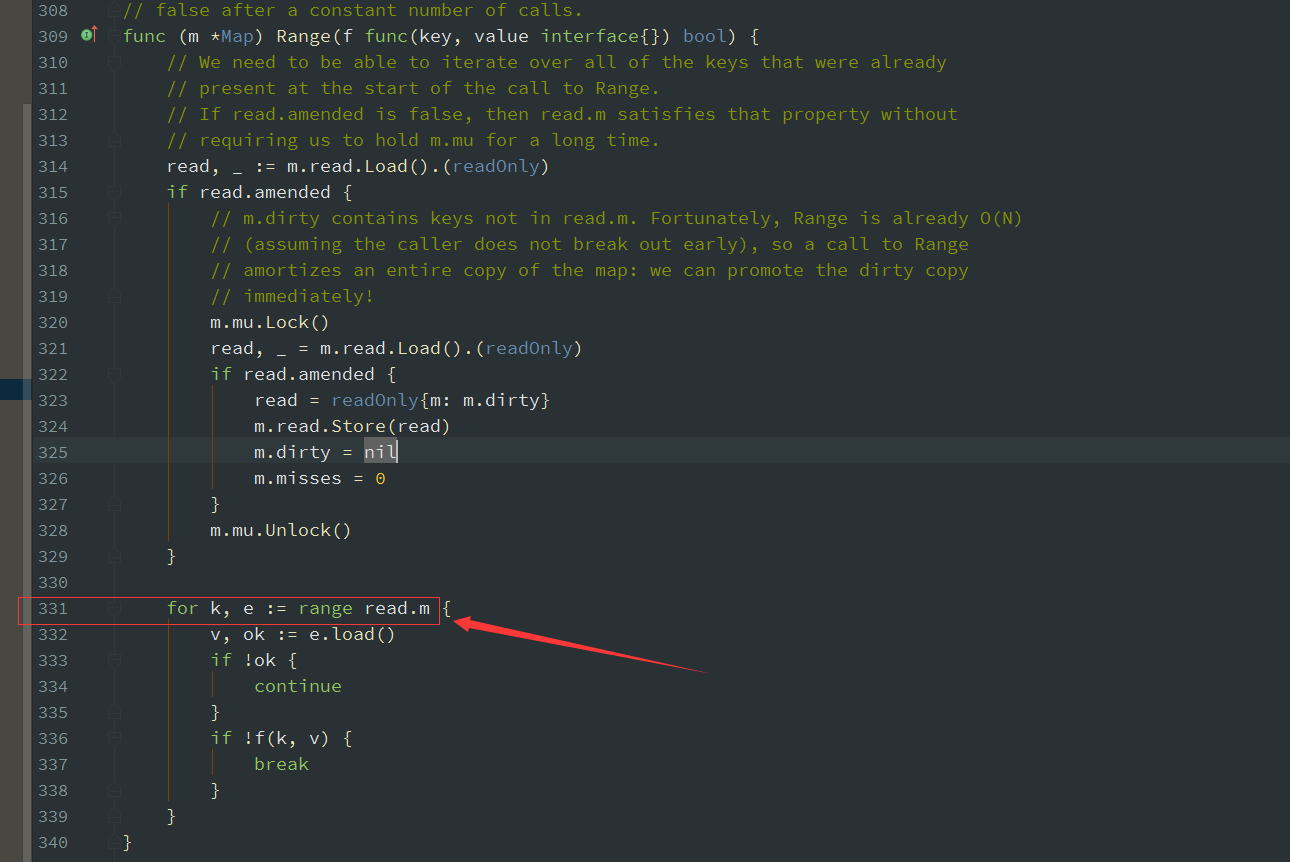

sync.(*Map).Range(0xc420371590, 0xc420643908)

/usr/local/Cellar/go/1.10/libexec/src/sync/map.go:332 +0xce fp=0xc4206438e0 sp=0xc420643808 pc=0x10626ee

All 28 comments

Please provide some way that we can reproduce this issue.

Missing reproducer aside, the stack trace seems to indicate the error really originates from within sync.Map code itself.

Alerting @bcmills too

@davecheney sorry, as it's used in a private project, I could share the details on how to reproduce it.

@zjshen14 how often does it occur in your project?

If I provide a hypothetical close reproducer, could you please help me provide edits to help get the reproducer to cause the concurrent read+write fatality? How is this for a start? https://play.golang.org/p/Odxo5TIUL4S or inlined.

package main

import (

"context"

"sync"

"time"

)

func main() {

m := new(sync.Map)

key := "foo"

ctx, cancel := context.WithCancel(context.Background())

defer cancel()

tick := time.NewTicker(1e3)

// Writer routine

go func() {

i := 0

for {

m.Store(key, i)

i += 1

select {

case <-tick.C:

// Continue

case <-ctx.Done():

return

}

}

}()

// Delete routine

go func() {

i := 0

for {

m.Delete(key)

i += 1

select {

case <-tick.C:

// Continue

case <-ctx.Done():

return

}

}

}()

// Get routine for unexpungement

go func() {

i := 0

for {

m.Load(key)

i += 1

select {

case <-tick.C:

// Continue

case <-ctx.Done():

return

}

}

}()

for i := 0; i < 1e9; i++ {

m.Range(func(key, value interface{}) bool {

return true

})

}

}

This looks like memory corruption. Have you tried running your program under the race detector? See https://blog.golang.org/race-detector .

Even if it is a bug in sync.Map (which is possible, but I hope unlikely), the race detector should make it much easier to diagnose.

I met similar problems too, when use sync.(*Map).Range, panic occurs,

fatal error:concurrent map iteration and map write

and i use Go Race Detector, find no warning

@barryqiu can you please open a new issue following the issue template. Thank you.

@barryqiu, can you provide an example program that reproduces the crash? Without either that or a race detector report, we don't have much to go on.

This is coming out in production environments, occasionally, hard to reproduces

If your test coverage is it able to provoke the issue, are you able to build a version of your application with the race detector enabled and deploy it to a percentage of servers In your fleet.

statck as follows

`runtime.throw(0x93ee2f, 0x26)

/data/barry_dev/go/src/runtime/panic.go:605 +0x95 fp=0xc4390d0a10 sp=0xc4390d09f0 pc=0x42ed95

runtime.mapiternext(0xc4390d0b10)

/data/barry_dev/go/src/runtime/hashmap.go:778 +0x6f1 fp=0xc4390d0aa8 sp=0xc4390d0a10 pc=0x40d441

sync.(*Map).Range(0xc44840ff50, 0xc4390d0bd8)

/data/barry_dev/go/src/sync/map.go:331 +0xf8 fp=0xc4390d0b80 sp=0xc4390d0aa8 pc=0x474a58`

If your test coverage is it able to provoke the issue, are you able to build a version of your application with the race detector enabled and deploy it to a percentage of servers In your fleet.

I will try

Can you please post the entire stack panic message including the entire set of stack traces.

one goroutine Store value in the sync.Map and another goroutine Range the sync.Map, and I got the panic

Without a way to reproduce this issue locally I wrote up a quick stress test of sync.Map to try to provoke a panic.

https://play.golang.org/p/PVpk6SvnLzq

So far I have not been able to.

@barryqiu @zjshen14 i'm sorry you are having issues, but without an assertion that your code bases are free of data races, or a code sample that reproduces the issue, it is hard to make progress on resolving this issue.

This is not a regression introduced in Go 1.10, because the stack trace posted by @barryqiu is produced by Go 1.9.

I read the code of

sync.Mapand I think this can't be a bug in it.

@barryqiu @zjshen14 Are you sure your code doesn't copy the sync.Map after first use?

I can reproduce the identical stack trace if I copy sync.Map.

The code is

package main

import (

"math/rand"

"sync"

)

func main() {

var m sync.Map

for i := 0; i < 64; i++ {

key := rand.Intn(128)

m.Store(key, key)

}

n := m

go func() {

for {

key := rand.Intn(128)

m.Store(key, key)

}

}()

for {

n.Range(func(key, value interface{}) bool {

return key == value

})

}

}

This code also produces a clear race report,

Daves-MBP(~/src) % go run -race syncmap2.go

==================

WARNING: DATA RACE

Write at 0x00c000096060 by goroutine 5:

runtime.mapassign()

/Users/dfc/go/src/runtime/map.go:505 +0x0

sync.(*Map).Store()

/Users/dfc/go/src/sync/map.go:160 +0x3a2

main.main.func1()

/Users/dfc/src/syncmap2.go:19 +0xc8

Previous read at 0x00c000096060 by main goroutine:

runtime.mapiterinit()

/Users/dfc/go/src/runtime/map.go:691 +0x0

sync.(*Map).Range()

/Users/dfc/go/src/sync/map.go:332 +0xc2

main.main()

/Users/dfc/src/syncmap2.go:23 +0x1ec

Goroutine 5 (running) created at:

main.main()

/Users/dfc/src/syncmap2.go:16 +0x1d2

==================

fatal error: concurrent map iteration and map write

goroutine 1 [running]:

runtime.throw(0x10a4a1a, 0x26)

/Users/dfc/go/src/runtime/panic.go:616 +0x81 fp=0xc000067da0

sp=0xc000067d80 pc=0x104fad1

runtime.mapiternext(0xc000067ea8)

/Users/dfc/go/src/runtime/map.go:747 +0x753 fp=0xc000067e50

sp=0xc000067da0 pc=0x1035a73

sync.(*Map).Range(0xc000096090, 0x10a5a08)

/Users/dfc/go/src/sync/map.go:332 +0xd3 fp=0xc000067f18

sp=0xc000067e50 pc=0x107eca3

main.main()

/Users/dfc/src/syncmap2.go:23 +0x1ed fp=0xc000067f88

sp=0xc000067f18 pc=0x108150d

runtime.main()

/Users/dfc/go/src/runtime/proc.go:198 +0x1d0 fp=0xc000067fe0

sp=0xc000067f88 pc=0x1051450

runtime.goexit()

/Users/dfc/go/src/runtime/asm_amd64.s:2361 +0x1 fp=0xc000067fe8

sp=0xc000067fe0 pc=0x1078221

goroutine 18 [runnable]:

sync.(*Map).Store(0xc000096030, 0x108c620, 0xc0000a8020, 0x108c620,

0xc0000a8028)

/Users/dfc/go/src/sync/map.go:160 +0x3a3

main.main.func1(0xc000096030)

/Users/dfc/src/syncmap2.go:19 +0xc9

created by main.main

/Users/dfc/src/syncmap2.go:16 +0x1d3

exit status 2

On 9 March 2018 at 19:50, Wèi Cōngruì notifications@github.com wrote:

>

1.

This is not regression introduced in Go 1.10, because the stack trace

posted by @barryqiu https://github.com/barryqiu is produced by Go

1.9.

2.I read the code of sync.Map and I think this can't be a bug in it.

@barryqiu https://github.com/barryqiu @zjshen14

https://github.com/zjshen14 Are you sure your code doesn't copy the

sync.Map after first use?

I can reproduce the identical stack trace if I copy sync.Map.

The code ispackage main

import (

"math/rand"

"sync"

)

func main() {

var m sync.Mapfor i := 0; i < 64; i++ {

key := rand.Intn(128)

m.Store(key, key)

}

n := m

go func() {

for {

key := rand.Intn(128)

m.Store(key, key)

}

}()

for {

n.Range(func(key, value interface{}) bool {

return key == value

})

}

}—

You are receiving this because you were mentioned.

Reply to this email directly, view it on GitHub

https://github.com/golang/go/issues/24112#issuecomment-371751213, or mute

the thread

https://github.com/notifications/unsubscribe-auth/AAAcA0_Afs63EIb0e32PhJS7Je8oz-e9ks5tckJjgaJpZM4SSMuN

.

@crvv u r right, I copy the sync.map, I have this panicn in both golang 1.10 and golang 1.9. so why the copy will lead to this panic occasionally?

The document says "A Map must not be copied after first use"

https://golang.org/pkg/sync/#Map

Actually, everything in sync and sync/atomic must not be copied after first use.

After copying the sync.Map, two sync.Maps are holding the same map.

Two goroutines can access this map without protection of one mutex.

Looks like we can close here. Thanks @crvv for the investigative work : )

I'm glad you found the problem.

I want to point out that the race detector would have spotted this problem

immediately. It concerns me that your tests could not spot this, which

means they may not be testing the code you run in production. Similarly,

building a single race enabled version of your application and deploying it

would have immediately shown the problem as @crvv's example showed.

On 9 March 2018 at 22:06, Alberto Donizetti notifications@github.com

wrote:

Looks like we can close here. Thanks @crvv https://github.com/crvv for

the investigative work : )—

You are receiving this because you were mentioned.

Reply to this email directly, view it on GitHub

https://github.com/golang/go/issues/24112#issuecomment-371783312, or mute

the thread

https://github.com/notifications/unsubscribe-auth/AAAcA552lyc4C5FQqnbKktJyia0zm_V5ks5tcmJEgaJpZM4SSMuN

.

Would some sort of copy detection help here? Have the sync.Map store the address on first use and clearly panic if it changes?

Would some sort of copy detection help here?

The vet copylocks check already catches it (https://golang.org/cl/59690). I do not know why the copylocks check was not enabled by default as part of #18085.

It's commented out in a TODO(@rsc) here:

It's nice that we now run go vet automatically, but I really, really wish it was more obvious in the release notes that not all checks are enabled by default

https://golang.org/doc/go1.10#introduction

The mention here is not enough: apparently many people don't read the entire document, as I've discovered myself by finding this issue resident in living codebases.

copylock has false positive. I think this is the reason.

https://github.com/golang/go/issues/13675

Most helpful comment

This is not a regression introduced in Go 1.10, because the stack trace posted by @barryqiu is produced by Go 1.9.

I read the code of

sync.Mapand I think this can't be a bug in it.@barryqiu @zjshen14 Are you sure your code doesn't copy the

sync.Mapafter first use?I can reproduce the identical stack trace if I copy

sync.Map.The code is