Go-ethereum: What is the upper bound of "imported new state entries"?

System information

Geth version: 1.6.5

OS & Version: Windows 7 x64

geth Command: geth --fast --cache 8192

Expected behaviour

Geth should start in full mode.

Actual behaviour

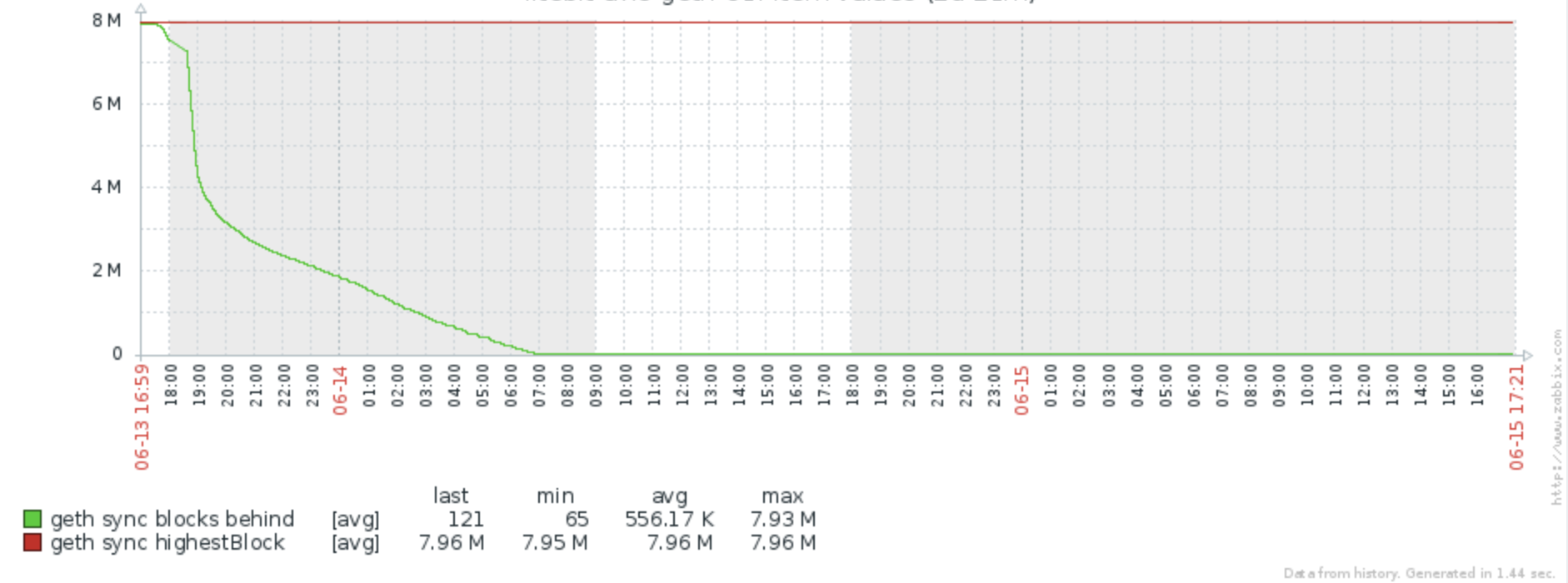

After nearing the current block, geth continuously logs "Imported new state entries".

Steps to reproduce the behaviour

It has been running for 10 days.

Geth console info

eth.blockNumber

6

eth.syncing

{

currentBlock: 3890742,

highestBlock: 3890893,

knownStates: 17124512,

pulledStates: 17105895,

startingBlock: 3890340

}

Backtrace

INFO [06-18|10:10:31] Imported new state entries count=384 elapsed=22.001ms processed=17118951 pending=24263

INFO [06-18|10:10:32] Imported new state entries count=384 elapsed=33.001ms processed=17119335 pending=23819

INFO [06-18|10:10:33] Imported new state entries count=384 elapsed=111.006ms processed=17119719 pending=23875

INFO [06-18|10:10:34] Imported new state entries count=384 elapsed=131.007ms processed=17120103 pending=23855

INFO [06-18|10:10:35] Imported new state entries count=384 elapsed=116.006ms processed=17120487 pending=23978

INFO [06-18|10:10:36] Imported new state entries count=384 elapsed=134.007ms processed=17120871 pending=24186

INFO [06-18|10:10:38] Imported new state entries count=384 elapsed=305.017ms processed=17121255 pending=27727

INFO [06-18|10:10:42] Imported new state entries count=384 elapsed=448.025ms processed=17121639 pending=33614

INFO [06-18|10:10:46] Imported new state entries count=384 elapsed=441.025ms processed=17122023 pending=39642

INFO [06-18|10:10:48] Imported new state entries count=384 elapsed=44.002ms processed=17122407 pending=39170

INFO [06-18|10:10:52] Imported new state entries count=384 elapsed=427.024ms processed=17122791 pending=45142

INFO [06-18|10:10:55] Imported new state entries count=384 elapsed=473.027ms processed=17123175 pending=51166

INFO [06-18|10:10:58] Imported new state entries count=384 elapsed=448.025ms processed=17123559 pending=57128

INFO [06-18|10:11:01] Imported new state entries count=384 elapsed=444.025ms processed=17123943 pending=63129

INFO [06-18|10:11:04] Imported new state entries count=384 elapsed=441.025ms processed=17124327 pending=69173

INFO [06-18|10:11:04] Imported new state entries count=1 elapsed=0s processed=17124328 pending=69172

INFO [06-18|10:11:07] Imported new state entries count=384 elapsed=442.025ms processed=17124712 pending=75182

INFO [06-18|10:11:10] Imported new state entries count=384 elapsed=470.026ms processed=17125096 pending=81186

INFO [06-18|10:11:11] Imported new state entries count=384 elapsed=335.019ms processed=17125480 pending=81736

INFO [06-18|10:11:14] Imported new state entries count=384 elapsed=440.025ms processed=17125864 pending=87718

INFO [06-18|10:11:15] Imported new state entries count=384 elapsed=140.008ms processed=17126248 pending=87812

INFO [06-18|10:11:16] Imported new state entries count=384 elapsed=31.001ms processed=17126632 pending=87226

INFO [06-18|10:11:18] Imported new state entries count=384 elapsed=88.005ms processed=17127016 pending=87040

INFO [06-18|10:11:19] Imported new state entries count=384 elapsed=39.002ms processed=17127400 pending=86803

INFO [06-18|10:11:20] Imported new state entries count=384 elapsed=36.002ms processed=17127784 pending=86585

INFO [06-18|10:11:23] Imported new state entries count=1 elapsed=0s processed=17127785 pending=86272

INFO [06-18|10:11:23] Imported new state entries count=384 elapsed=1.610s processed=17128169 pending=86271

INFO [06-18|10:11:25] Imported new state entries count=384 elapsed=143.008ms processed=17128553 pending=87792

INFO [06-18|10:11:28] Imported new state entries count=384 elapsed=183.010ms processed=17128937 pending=90117

INFO [06-18|10:11:28] Imported new state entries count=1 elapsed=1ms processed=17128938 pending=90120

INFO [06-18|10:11:28] Imported new state entries count=1 elapsed=0s processed=17128939 pending=90118

INFO [06-18|10:11:29] Imported new state entries count=384 elapsed=102.005ms processed=17129323 pending=90022

INFO [06-18|10:11:30] Imported new state entries count=384 elapsed=184.010ms processed=17129707 pending=92320

INFO [06-18|10:11:32] Imported new state entries count=384 elapsed=185.010ms processed=17130091 pending=94665

INFO [06-18|10:11:34] Imported new state entries count=384 elapsed=187.010ms processed=17130475 pending=97053

INFO [06-18|10:11:36] Imported new state entries count=384 elapsed=194.011ms processed=17130859 pending=99550

INFO [06-18|10:11:38] Imported new state entries count=384 elapsed=183.010ms processed=17131243 pending=101954

INFO [06-18|10:11:40] Imported new state entries count=384 elapsed=202.011ms processed=17131627 pending=104395

INFO [06-18|10:11:42] Imported new state entries count=384 elapsed=196.011ms processed=17132011 pending=106904

INFO [06-18|10:11:44] Imported new state entries count=384 elapsed=186.010ms processed=17132395 pending=109176

INFO [06-18|10:11:47] Imported new state entries count=384 elapsed=184.010ms processed=17132779 pending=111554

INFO [06-18|10:11:47] Imported new state entries count=2 elapsed=184.010ms processed=17132781 pending=111554

INFO [06-18|10:11:48] Imported new state entries count=384 elapsed=34.002ms processed=17133165 pending=110760

INFO [06-18|10:11:50] Imported new state entries count=384 elapsed=193.011ms processed=17133549 pending=113172
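
To read a log excerpt like the one above without eyeballing every line, a small script can pull out the counters. This is only a sketch: the helper name `parse_state_line` is made up for illustration, and it assumes the `key=value` layout seen in these lines stays the same.

```python
import re

# Matches key=value pairs such as count=384 or processed=17118951.
PAIR = re.compile(r"(\w+)=(\S+)")

def parse_state_line(line):
    """Return the integer counters from one 'Imported new state entries' line, or None."""
    if "Imported new state entries" not in line:
        return None
    fields = dict(PAIR.findall(line))
    # Keep only plain integers; elapsed=22.001ms and similar are dropped.
    return {key: int(value) for key, value in fields.items() if value.isdigit()}

# First and last lines of the backtrace above.
sample = [
    "INFO [06-18|10:10:31] Imported new state entries count=384 elapsed=22.001ms processed=17118951 pending=24263",
    "INFO [06-18|10:11:50] Imported new state entries count=384 elapsed=193.011ms processed=17133549 pending=113172",
]
first, last = (parse_state_line(line) for line in sample)
print("processed grew by", last["processed"] - first["processed"])
print("pending grew by", last["pending"] - first["pending"])
```

In this excerpt the pending queue grows faster than the processed count, which is why the import looks unbounded from the outside.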

All 223 comments

Yes .. same exact effect

Command is: geth --syncmode=fast --cache=4096 console

Please try geth v1.6.6.

in my case v1.6.6 does not fix it

same here, described detailed status here:

https://github.com/ethereum/go-ethereum/issues/14571

The same for me for --testnet (ropsten) on Mac OS. The geth version is 1.6.6.

I'm running:

geth --testnet --syncmode "fast" --rpc --rpcapi db,eth,net,web3,personal --cache=1024 --rpcport 8545 --rpcaddr 127.0.0.1 --rpccorsdomain "*" --bootnodes "enode://20c9ad97c081d63397d7b685a412227a40e23c8bdc6688c6f37e97cfbc22d2b4d1db1510d8f61e6a8866ad7f0e17c02b14182d37ea7c3c8b9c2683aeb6b733a1@52.169.14.227:30303,enode://6ce05930c72abc632c58e2e4324f7c7ea478cec0ed4fa2528982cf34483094e9cbc9216e7aa349691242576d552a2a56aaeae426c5303ded677ce455ba1acd9d@13.84.180.240:30303"

and when it comes near to latest blocks (at https://ropsten.etherscan.io/) it continuously "imports new state entries". If I restart the cmd above it fetches most recent blocks but never reach latest ones.

same here... the geth is 1.6.6, running geth --testnet --fast --cache=1024

I am in the same state after a week of using geth --fast --cache=1024. Does anyone know what I should do now?

Same situation on testnet. OSX, geth 1.6.7-stable-ab5646c5. Started with --fast and --cache 1500

What I have gathered about this geth fast-mode problem:

- You need a quad-core processor with 4 GB or more of RAM

- You need an SSD instead of an HDD

- Your internet connection must be reliable and at least 2 Mbps.

If all of the above are met, then you should try geth. In full mode you will most probably be synced in a week or two at most. In fast mode it depends on your luck: you will most probably be synced in 2-3 days, or never.

Encountering similar issues. Geth --fast sync does not sync, let alone fast.

Using 1.6.7-stable on Ubuntu, and it gets within 100 blocks and then endlessly imports state entries.

@clowestab did you ever get it to sync?

Having same issue. Also using ubuntu, and geth endlessly syncs.

I am having the same issue. Has anyone been able to solve this problem?

Same problem here. I started geth with a cache of 1024 and fast sync three days ago and, since reaching the last block number one day ago, it has never left the "Imported new state entries" phase.

During this "state entries" phase, however, my balance changed from 0 to the correct value and the reported block number changed from 0 to a number near the highestBlock value.

This is geth version on my ubuntu machine:

Geth

Version: 1.6.7-stable

Git Commit: ab5646c532292b51e319f290afccf6a44f874372

Architecture: amd64

Protocol Versions: [63 62]

Network Id: 1

Go Version: go1.8.1

Operating System: linux

GOPATH=

GOROOT=/usr/lib/go-1.8

and this is the result now every half second:

...

INFO [09-08|10:05:34] Imported new state entries count=11 flushed=7 elapsed=216.807ms processed=25170515 pending=1852 retry=1 duplicate=5293 unexpected=6261

INFO [09-08|10:05:34] Imported new state entries count=2 flushed=5 elapsed=521.989µs processed=25170517 pending=1847 retry=0 duplicate=5293 unexpected=6261

INFO [09-08|10:05:34] Imported new state entries count=7 flushed=1 elapsed=263.738ms processed=25170524 pending=1862 retry=2 duplicate=5293 unexpected=6261

INFO [09-08|10:05:34] Imported new state entries count=2 flushed=4 elapsed=6.934ms processed=25170526 pending=1859 retry=0 duplicate=5293 unexpected=6261

INFO [09-08|10:05:34] Imported new state entries count=1 flushed=0 elapsed=27.828ms processed=25170527 pending=1861 retry=1 duplicate=5293 unexpected=6261

INFO [09-08|10:05:34] Imported new state entries count=5 flushed=5 elapsed=19.440ms processed=25170532 pending=1860 retry=0 duplicate=5293 unexpected=6261

...

this is the result of eth.syncing and other geth tools:

eth.syncing

{

currentBlock: 4249131,

highestBlock: 4250814,

knownStates: 25172364,

pulledStates: 25170517,

startingBlock: 0

}

net.peerCount

25

eth.blockNumber

4244762

The ether balance of my wallet is not 0; it is reported correctly and is up to date with the last transaction, made one day ago.

How long is this state supposed to last?

One more piece of information: my .ethereum folder is currently 41 GB, maybe a little too large for a proper fast sync.

I think never.

UPDATE: I stopped geth with CTRL-D and reopened it. Now it seems that the "Imported new state entries" phase has ended and geth is working correctly, importing only new blocks.

It seems the problem is that fast sync continues to download states forever and is not aware that the blockchain is already in a correct, consistent state.

So, until the issue is solved, this is my advice:

- Start geth fast sync

- Wait until eth.syncing reports a currentBlock near the highestBlock

- After that, run eth.blockNumber every few hours. At first it will probably return 0, but keep waiting.

- When eth.blockNumber returns a value different from 0 and near the currentBlock value, close geth (with CTRL-D or the "exit" command, so it can shut down cleanly) and wait until the program has exited and your operating system shell comes back

- Reopen geth with the fast option. You will see the warning "Blockchain not empty, fast sync disabled". This is the correct behavior; it is telling you that fast sync has finished.

- Now the "Imported new state entries" messages disappear and you just see messages like this every few seconds:

INFO [09-08|21:12:06] Imported new chain segment blocks=1 txs=131 mgas=5.379 elapsed=13.855s mgasps=0.388 number=4251422 hash=697652…d85ce8

Now your problem is solved, and geth probably avoids downloading a lot of states, which also reduces the disk space it takes.

This applies until the issue is fixed and geth becomes aware of when the blockchain is correctly synced.

This is my experience; maybe it works, maybe not. For me it worked.

Hope this helps,

Marco
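
The restart rule in the procedure above can be written down as a small check. This is a sketch of the commenter's rule of thumb, not geth behavior: the function name `ready_to_restart` and the `max_gap` threshold for "near" are assumptions made for illustration.

```python
def ready_to_restart(block_number, syncing, max_gap=10_000):
    """Decide whether geth can be closed and reopened, per the steps above.

    block_number: the value returned by eth.blockNumber
    syncing:      the object returned by eth.syncing (as a dict), or False
    max_gap:      assumed meaning of "near", in blocks; not a geth setting
    """
    if block_number == 0:
        return False  # state download not finished yet, keep waiting
    if syncing is False:
        return True   # node no longer reports a sync in progress
    return syncing["currentBlock"] - block_number <= max_gap

# Values posted in this thread.
print(ready_to_restart(4244762, {"currentBlock": 4249131, "highestBlock": 4250814}))
print(ready_to_restart(6, {"currentBlock": 3890742, "highestBlock": 3890893}))
```

The second call uses the original report, where eth.blockNumber was stuck at 6: the value is non-zero but nowhere near currentBlock, so the rule says to keep waiting.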

Congrats, but I ran it for more than 8 hours and eth.blockNumber always showed 6. I had to switch to Parity to sync the blockchain.

Edit: Fast sync works only on the first run. On subsequent runs you have to run in full mode, or geth automatically gives the warning "Blockchain not empty, fast sync disabled" and continues in full mode.

I think you just have to wait until eth.blockNumber shows a number near currentBlock before closing geth and starting it again.

I forgot to say that you should remove old blockchain data before starting the procedure I described.

Yes, fast sync can be used only with an empty blockchain and only the first time.

The command to clear the whole blockchain is "geth removedb", run in the operating system shell; it removes everything that has been downloaded before.

After that you can start a fast sync again from an empty blockchain and follow the procedure from my previous post, hoping it works.

I'm not a geth developer, I just use it, so I can't solve the problem or tell you what it does internally, why the command returns "6", or what you have to do. But it seems that it downloads a lot of states and, when it finds the head state, it is able to build the full blockchain. For me this happened when eth.syncing showed a "knownStates" near 20,000,000, but it can happen earlier or later.

During my test, after fast sync finished downloading all the block headers, it took more than 24 additional hours to get eth.blockNumber = 4244762. I run geth on a server with 100 Mb/s of bandwidth.

While it showed "0" I let it do its work, and after 24 hours the command returned 4244762. I didn't run the command in between, so I don't know whether it returns other numbers before reaching the last block.

I have never used Parity, but it seems good and uses less disk space than geth, so it is worth a try.

Maybe some geth dev can make things more clear.

We believe this is fixed on the master branch. Fast sync takes a while (especially with the mainnet), but will terminate eventually.

@brennino

My eth.blockNumber shows 0 after several days of syncing. I am wondering whether the fast sync will fail if I stop and restart the sync process partway through several times.

@vincentvc fast sync in geth only works when the database is empty, thus you get one chance to fast sync and then it will be a full sync after that _[STATED IN ERROR THE FOLLOWING- THIS IS INCORRECT AS POINTED OUT BY FJL: thus yes if you stop and restart anytime before that first fast sync finishes you won't do a fast sync from that point.]_ My experience with the scenario listed here was twofold. I used the latest build off of main and I made sure I had my database on an SSD. It doesn't seem like the SSD vs HDD thing would matter so much, but in my experience, until I put it on the SSD I could never get that first sync to finish. That's not to say it is true for everyone, it's just my experience.

I'm having the same problem: state entries are imported continuously. I'm currently trying what @brennino suggests and will come back with the result later. Currently 350k states processed.

Here is some info:

> eth.syncing

{

currentBlock: 4269853,

highestBlock: 4270000,

knownStates: 357664,

pulledStates: 348163,

startingBlock: 4268019

}

net.peerCount

10

eth.blockNumber

0

update : almost 24h later here's the number

blockNumber : 0

eth.syncing

{

currentBlock: 4270728,

highestBlock: 4270793,

knownStates: 6879452,

pulledStates: 6875584,

startingBlock: 4268019

}

State import is still going; I'll check back tomorrow...

Hi @skarn01, I don't know if this happens only to me, but when I start fast sync with an empty blockchain, startingBlock always shows 0. You can see it in the eth.syncing output from my previous post, which I repeat here:

eth.syncing

{

currentBlock: 4249131,

highestBlock: 4250814,

knownStates: 25172364,

pulledStates: 25170517,

startingBlock: 0

}

Maybe something went wrong during fast sync, or you closed geth before eth.blockNumber showed a number near the last block. Blockchain sync is really time consuming, and you must not stop fast sync until it finishes or until eth.blockNumber != 0 and is near highestBlock.

What can I say to help you... it seems you are not starting fast sync from an empty blockchain, so if I were you I would start again from the beginning.

If you don't want to start again (and I can understand you, I went crazy for days before getting any results...) you have these options:

1 - If you just want to make a transaction because ether is falling in price and you are in a panic, you can use light sync, make your transaction and, once you have stopped praying and shouting in your room for a price peak, calmly try to sync your blockchain with fast sync again.

2 - Wait two more days with your current situation and see if something changes.

About option 1, I think light sync is not an experimental feature any more (but maybe someone else can confirm), and I succeeded in making a transaction with a light-synced blockchain without problems.

If you want to come back to option 2 later, you can close geth (since your current state is already corrupted) and rename your blockchain directory .ethereum/geth. If instead you are OK with clearing your current blockchain, just run geth removedb in the operating system shell.

After that, to start light sync (different from fast sync!), I started geth with the command:

geth --light --cache=1024

and waited just 2 or 3 hours to download a 600 MB light blockchain. After that you can make your transaction. Again, this is just my experience, shared to help people; I take no responsibility if you lose your ether.

Hope it helps

Marco

Hi @brennino, you're right, it is strange that my starting block is not 0, as I haven't stopped the geth daemon...

What I want to do is develop my own services using the chain (I got experience creating an ICO for my boss, and now I have some interest in the technology ^^), so I'm not worried about money; there is currently none in there, ahah.

I'll try the --light option after geth removedb. Does --light still let me work with the chain and see full blocks after the first sync?

Thank you and I'll come back with update on my situation.

I tried the --light option to check whether it is experimental or not. I used Geth 1.7.0 and the output said that light mode is an experimental feature.

fast sync in geth only works when the database is empty thus you get one chance to fast sync and then it will be the full sync after that thus yes if you stop and restart anytime before that first fast sync finishes you won't do a fast sync from that point.

That's not quite true. Fast sync will continue after restart.

The original issue should be solved in the 1.7.0 release. If you fast sync with the release, it should stop eventually.

@fjl is right: in v1.7.0 fast mode continues from the last receipt after a restart. I have started a sync with geth 1.7.0 from scratch. This is the current system:

Hardware: i5 2400, 16 GB, HDD

(deliberately using an HDD instead of an SSD to check the results)

Software: Windows x64, geth 1.7.0

Network: 512 kbps FTTH

Command: geth --syncmode "fast" --cache 1024

Let's see what happens.

Edit: geth is fully synced, but the minimum network speed should be 1 Mbps. For the first 20 days it was running on 512 kbps, which was not enough. So I increased the network speed to 1 Mbps and in 8 days it synced from block 2.1 million to the latest block. I am closing this issue because the initial problem of unbounded importing of state entries is resolved in version 1.7.0. @nabeelamjad and @fjl are correct in their approach and information.

@sonulrk any update? I have the same problem as you. Current state:

eth.syncing

{

currentBlock: 4282458,

highestBlock: 4282683,

knownStates: 18160211,

pulledStates: 18122951,

startingBlock: 0

}

Running for about 3 hours now

I'm experiencing a very similar problem using Geth 1.7 and Mist 0.9.0 on Win10 Home.

However, for me eth.syncing returns false and blockNumber returns 0.

I started running Geth 1.7 yesterday afternoon after doing removedb and it completed sometime this morning, but the app (Mist) wouldn't run.

I'm a newbie just chipping in (not a comprehensive post, but if the problem persists I'll post more).

Seeing the same problem on Geth 1.7.0

> eth.blockNumber

0

> eth.syncing

{

currentBlock: 4285965,

highestBlock: 4286240,

knownStates: 512791,

pulledStates: 506078,

startingBlock: 4273567

}

--light works well, but I want a fast sync so that I can get full blocks, so I tried another time from scratch and geth got killed at block 3.8M, I don't know why. I'm going to upgrade to 1.7.0 and post what happens on my next try...

Update: same problem. Now syncing says false, blockNumber says 0, and I've got 13.5M states processed and still going. I'll post feedback later...

Using geth 1.7.0.

Update 2: I've just been able to sync without crashing (I got some out-of-memory errors, so I closed everything else while it was syncing) but it still can't finish syncing... this one never stopped, but the starting block doesn't show 0...

eth.syncing

{

currentBlock: 4312845,

highestBlock: 4313035,

knownStates: 12114361,

pulledStates: 12101760,

startingBlock: 4312790

}

eth.blockNumber

0

I'm going to resign myself to using light mode for now, in the hope that everything I need will be there.

To give you feedback:

I updated to geth 1.7.0, adjusted my settings so there were no memory errors (I had too many things on my server and closed some of them) and followed @brennino's procedure.

After a while it was near completion (about 100-200 blocks away) and started to import states only. eth.syncing stopped showing startingBlock: 0 and sometimes restarted syncing the last hundred or so blocks needed. At about 28M states processed, it stopped importing states and started saying "new chain segment". So I let it go until it finally got the last block (sync sometimes stopped temporarily and took a few minutes to notice that it was behind before it started syncing again, switching eth.syncing from stats to false and back...). At the last block, once eth.syncing was saying false and blockNumber was still going up and showing the same block number as Etherscan, I stopped geth and restarted it.

Now everything seems fine. It took about 2-3 days to do a fast sync: approximately half a day to one day for the first sync, one day to import states, and some hours to finish syncing.

Now I hope my computer won't blow up for the next few months. I don't want to start syncing again, ahahah.

Good luck to everyone else

this eventually worked, but it was painful for sure.

Maybe you can try the light mode on the next time you need to start it from scratch!

https://github.com/ethereum/mist/issues/3097 🎉 and discussion here: https://github.com/ethereum/go-ethereum/issues/15001

I was away for a while and decided to do a fast sync to save some disk space by starting fresh, but ran pretty much in the same issue as described in this thread, here's what I did to solve it (using Geth 1.7.0):

Ensure you have an empty data directory; otherwise run your usual geth command followed by removedb once to remove your DB.

- Run geth --cache 2048 --datadir "D:/my/data/dir" (remove the --datadir argument if you want the default directory; the fast flag wasn't required in 1.7 as it is assumed automatically).

- When the blockchain is nearing the highestBlock (about 100-150 blocks away) it will start importing a lot of state entries. Please let these complete; as of today this will be around 31 million state entries (they go really fast).

- Once all state entries are completed it will automatically start dropping stale peers. When this happens, close geth entirely.

- Reopen geth using geth --syncmode "full" --cache 2048 --datadir "D:/my/data/dir" (syncmode is probably irrelevant).

- Let it run for a while. It will reindex the chain and eventually auto-disable fast mode and switch to full mode. This stage took about 10 minutes for me.

Using the above settings, an SSD and a 220 Mbps download speed, it took me roughly 1.5 hours to synchronize the blockchain from 0% to 99%, then the state entries took a good 3-4 hours before they finally finished. I think the key point is to let the state entries run to completion; don't cut it off early. There are over 30 million state entries, so it will take a while. Total blockchain size: ~26 GB from 0 to block 4351952 using the above steps.

I realize there's a light mode available, but it just doesn't cut it for me. I've tried it and it is very slow when submitting transactions (doesn't get picked up for a while or not at all when looping through 10-20 sendTransaction calls at once using web3, whereas the fast/full client picks them up instantly and I can see them on Etherscan).

Hope this helps.

I suppose it is a wise choice you turn your geth syncmode to "full" mode.

Just completed an import (--syncmode "fast" --cache=2048). "Imported new chain segment" went up to 14.7M in the process. Took about 6 hours. 38.4GB on disk.

Sigh, all that is required is a simple explanation (from the team) of what 'fast' is doing. I was puzzled by what happened as well (and by all those weird values when importing states, like ignored and duplicate; what are they?).

It seems what fast sync really does is:

- download block headers all the way to almost the latest block (4.7M as of Dec 15 2017)

- download all states (but with no verification) all the way to the latest block (or maybe close to it)

- since each block can contain multiple transactions(?), the number of state entries, which is effectively a database transaction log, can be much larger; I think 48M as of Dec 15 2017

- 'processed=' refers to the state records during the state import stage. So you have to wait until this reaches 48M (in my case), and it can be used as a gauge to guess when it will be done

Is this guess/interpretation correct?

still not working

Did it ever finish?

I can't get it to work either. I went into the geth directory and manually deleted the chain store folder using Windows Explorer. I then ran the command: "%APPDATA%\Ethereum Wallet\binaries\Geth\unpacked\geth.exe" geth --syncmode light

And all I currently see in CMD is just "imported new block headers" and "imported new block entries". Is this working correctly?

you would need to wait till you see import new state entries up until 50M(at least) before it starts the 'normal' full block sync for the remaining thousand or so.

Given today's size, expect at least 2-3 days. Parity may be faster but never had an opportunity to do that to completion.

for light mode you should see the number to about 4.8M(as of today) and it is basically done

try 'geth attach' then 'eth.syncing' to check for the status
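
The same status check can be scripted over JSON-RPC instead of typed into the console. The sketch below only builds the request body and decodes a reply, so it runs without a node. `eth_syncing` is the standard JSON-RPC method; the helper names are made up here, and the sample reply is an assumption built from numbers posted in this thread (the RPC encodes quantities as hex strings).

```python
import json

def syncing_request(request_id=1):
    """Build the JSON-RPC body for the eth_syncing call."""
    return json.dumps(
        {"jsonrpc": "2.0", "method": "eth_syncing", "params": [], "id": request_id}
    )

def decode_syncing(result):
    """Convert hex-string fields to ints; the node answers False when not syncing."""
    if result is False:
        return None
    return {key: int(value, 16) for key, value in result.items()}

# Hypothetical reply, hex-encoded from eth.syncing values quoted in this thread.
sample = {
    "currentBlock": hex(4815023),
    "highestBlock": hex(4815321),
    "knownStates": hex(36384431),
    "pulledStates": hex(36381874),
}
status = decode_syncing(sample)
print(syncing_request())
print(status["highestBlock"] - status["currentBlock"], "blocks behind")
print(status["knownStates"] - status["pulledStates"], "known state entries not yet pulled")
```

Sending the request body to a node started with the RPC endpoint enabled is left out on purpose, since the address and port depend on how geth was launched.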

will update if it syncs

{

currentBlock: 4815023,

highestBlock: 4815321,

knownStates: 36384431,

pulledStates: 36381874,

startingBlock: 4810860

still have a while, the latest state is at least 50M and your's is only 36M

Yup. I ran Geth on Windows a few months ago and have let the node run since, but am migrating the node to a Linux machine. I keep seeing people asking this question repeatedly so if it does sync at ~50m I'll update and let everyone know it does actually work lol

I just created a new node in 'fast' starting yesterday and it is almost done now. again just some reference point for those who may need it:

As of Dec 28 2017:

latest block was 4.8M, transaction state about 58M.

it took me 26 hours or so to have it sync up and it is in the final stage(already switched to full node equivalent as that is the design of fast sync), about 300 block left

the average disk usage is about 10-20MBps during the importing state stage and about 1-2Mbps in terms of network bandwidth

I had to kill it once as it stalled for about an hour during the early state import stage, but it knew where to pick up again (so it is still a sort of fast sync even after the restart). I think this is an improvement in 1.7.3.

the total disk space used is 49GB

hope this help others in the future

edit:

and it is fully sync now so this is now a node I can use for submitting transaction etc. about 5 minutes more when I first created this comment

I left geth 1.7.3 running for about 15 hours

geth --rpc --syncmode "fast" --cache 3096 --maxpeers 50

I was about 500 blocks away from highest block and knownStates was ~49M

geth stalled for about 20 min, so I restarted. knownStates reset to 0 and is now going extremely slow. But disk usage is the same as before restarting.

Windows 10

SSD

16GB RAM

100Mbit connection

so it would be at least 10M state to import. these sync always depend on what peer you have connected to. it was pretty bad yesterday but after restart, it proceeded with much higher rate. I was expecting at least another 2 days based on what I saw yesterday but some how, it got quicker over the night, no idea why.

geth 1.8

still having this issue.

after reboot geth shows this error

WARN [01-05|02:54:25] Ancestor below allowance peer=2586937320363579 number=4015416 hash=abc963…9b3dc5 allowance=4765509

WARN [01-05|02:54:25] Synchronisation failed, dropping peer peer=2586937320363579 err="retrieved ancestor is invalid"

now fast sync is dropping peers and not syncing. ~100 blocks to finish but it never gets synced.

this is the nature of p2p networking, you are at the mercy of the peers and network condition. usually when you wait enough, it would work. ctrl-c and restart is fine too(which hopefully can get you a better peer). another alternative is find a good peer and add it manually.

Is there a place to see the current max knownStates?

not simple. it basically depends on the transactions. at this moment do a rough estimate which is 15-20 per block. so may be 70-80M(close to 6M block now). but going forward it will be just more per block since they up the gas limit over time.
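
As a rough cross-check of the "entries per block" idea, the data points people posted in this thread can be divided out. These are the commenters' approximate figures, not measurements, and the result is a cumulative average over the whole chain rather than a per-block count; the function name is made up for illustration.

```python
def entries_per_block(states, blocks):
    """Cumulative average of state entries per block at a given chain height."""
    return states / blocks

# (label, block height, state entries) as reported by commenters above.
reports = [
    ("block 4351952", 4_351_952, 31_000_000),
    ("Dec 15 2017", 4_700_000, 48_000_000),
    ("Dec 28 2017", 4_800_000, 58_000_000),
]
ratios = [entries_per_block(states, blocks) for _, blocks, states in reports]
for (label, _, _), ratio in zip(reports, ratios):
    print(f"{label}: about {ratio:.1f} state entries per block")
```

On these figures the average keeps rising as the chain grows, which fits the point that there is no fixed number to wait for.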

So I am at about 8 hours syncing on 57 Mbps with 16GB DDR4 / SSD / 1080Ti / 8700K and geth --fast --cache=4024. My eth.syncing says:

currentBlock: 4900069,

highestBlock: 4900328,

knownStates: 51148480,

pulledStates: 51137757,

startingBlock: 0

currentBlock hasn't moved for hours. 14 peers, eth.blockNumber 0, just cycling through new state entries:

INFO [01-13|10:00:32] Imported new state entries count=803 elapsed=3.654ms processed=51095193 pending=8217 retry=0 duplicate=4005 unexpected=12212

INFO [01-13|10:00:33] Imported new state entries count=834 elapsed=1.759ms processed=51096027 pending=13439 retry=0 duplicate=4005 unexpected=12212

Is this normal? Where can I find a breakdown of those values and their behavior? What's the point of 'pending' if it is constantly changing... ?

it is normal and if it is constantly changing(processed=51096027 ), you are good. just wait it will finish then switched to normal mode
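
One way to confirm that processed= is really moving is to compute a rate from two log lines. A minimal sketch, using the two lines and the eth.syncing values posted just above; the function name is made up, and it assumes both lines are from the same day and at least one second apart.

```python
from datetime import datetime

def import_rate(stamp0, processed0, stamp1, processed1):
    """State entries imported per second between two log lines.

    Stamps use the log's "MM-DD|HH:MM:SS" form. Only the time part is
    compared, so both lines must be from the same day, and the stamps
    must differ (otherwise this divides by zero).
    """
    t0 = datetime.strptime(stamp0.split("|")[1], "%H:%M:%S")
    t1 = datetime.strptime(stamp1.split("|")[1], "%H:%M:%S")
    return (processed1 - processed0) / (t1 - t0).total_seconds()

rate = import_rate("01-13|10:00:32", 51095193, "01-13|10:00:33", 51096027)
backlog = 51148480 - 51137757  # knownStates - pulledStates from eth.syncing
print(f"{rate:.0f} entries/s, {backlog} known entries not yet pulled")
```

The backlog is only a lower bound on the remaining work, because knownStates keeps growing as more of the state trie is discovered, so it cannot be turned into a reliable finish time.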

Appreciate your time, thank you very much.

Do you recommend exploring Parity? I see it recommended over Geth but I've only personally been exposed through it through negative news from hacks and am wary.

The contracts Parity wrote have bugs, but Parity the program itself is fine. However, one thing I noticed is that it seems to use a lot of CPU in the initial sync phase. I don't know how it behaves during the normal stage. It does have the advantage of constantly pruning, which keeps storage space in check. geth needs lots of space after it enters the normal stage, about 100-200 GB a month at the current rate. I need to have a standby machine to do a 'fast' sync periodically, as fast sync is the only way to 'compress'.

I pulled the plug on my sync, going to look into Parity. Can't justify the space and effort to keep a real full node if this is what it looks like. The machine would have to be running constantly, right? Constantly syncing, and at some point probably not even being able to keep up? Are there known metrics for the network growing faster than D/L speeds can sync?

I thought I would have a HD that I booted to and got up to speed any time I wanted to work with ETH but it doesn't seem like that's the case with an actual full node without pruning or warp or some such optimisation.

Thanks again for your time, appreciate your info.

I don't feel secure using MEW, my Ledger has more on it than I am comfortable having in one place and they are back-ordered for new units, so I thought having an official client would be the next best thing... since Mist doesn't seem to fit my needs, Parity is pretty close to being official so I guess I'm headed there.

Is my logic sound?

if all that you care is the wallet address, you don't even need the node. just generate the wallet address, and send whatever coin to that address and be done with it. Basically treat this as 'I don't use it much' address. When you do need to pull things out, do it on an island machine(including a VM with no external network connection), sign the transaction the send the raw transaction using MEW or whatever to any node(infura whatever).

Hi Guys,

i used this to check my current block which needs to sync

currentBlock: 4506167,

highestBlock: 4934648,

knownStates: 866589,

pulledStates: 848856,

startingBlock: 4426024

}

I use this to mine

geth --datadir ./.ethereum/devnet/ --dev --mine --minerthreads 4 --etherbase 0

I use this to connect the running client to the miner

geth --datadir ./.ethereum/devnet --dev attach ipc:./.ethereum/devnet/geth.ipc

Welcome to the Geth JavaScript console!

instance: Geth/v1.7.2-stable-1db4ecdc/linux-amd64/go1.9

coinbase: 0x2........................

at block: 330038 (Fri, 19 Jan 2018 13:40:44 CET)

datadir: /home/smartcontract/.ethereum/devnet

modules: admin:1.0 debug:1.0 eth:1.0 miner:1.0 net:1.0 personal:1.0 rpc:1.0 shh:1.0 txpool:1.0 web3:1.0

web3.eth.syncing

false

web3.fromWei(eth.getBalance(eth.coinbase),"ether")

1445287.5

web3.eth.blockNumber

330039

When I want to send a transaction I run the following in that console

eth.sendTransaction({from:eth.coinbase, to:eth.accounts[1], value: web3.toWei(0.05, "ether")})

Please unlock account d1ade25ccd3d550a7eb532ac759cac7be09c2719.

Passphrase:

Account is now unlocked for this session.

'0xeeb66b211e7d9be55232ed70c2ebb1bcc5d5fd9ed01d876fac5cff45b5bf8bf4'

In the end I do not see the transaction on Etherscan or in the wallet that I am sending to. The balance also remains the same.

HOW CAN THIS BE HAPPENING OMG:)

Background: my chaindata was not synced, so I did a removedb on geth and am importing all the blocks again, since the database was corrupted with messages such as "Failing peer messages". Ethereum really still needs to improve.

I'm syncing because when I tried deploying with truffle, I got network state unknown. Reading around I found I needed to have eth.blockNumber be a positive integer. Currently it's 0 and my state is syncing.

> eth.syncing

{

currentBlock: 5186010,

highestBlock: 5186166,

knownStates: 32389802,

pulledStates: 32378516,

startingBlock: 5185518

}

When can I expect this to finish? What are these states?

At this point (9 March 2018) it takes almost 8 days to sync the Ethereum blockchain with a setup like so:

i7 processor, 8 cores, 64GB RAM, 100Mbps Internet connection, SSD drive - ideally with RAID

The fastest way to sync is to start clean by removing your existing database:

geth removedb

and then doing a fast sync with a large cache size (e.g., 8 GB if you have enough RAM, such as 64 GB):

geth --syncmode "fast" --cache 8192 console

After you get close to the current block, geth automatically switches to full mode and continues on. So there is no need to stop and restart.

There are 3 distinct stages in the sync process:

- Importing blocks (see Etherchain.org, 5,269,000+, 10+ hours)

- Importing state entries (96M+, 1+ days)

- Importing chain segments (same # as blocks, 6+ days), for example:

INFO [03-10|14:07:44] Imported new chain segment blocks=139 txs=776 mgas=430.595 elapsed=8.039s mgasps=53.560 number=1854160 hash=9d134e…e0adc0 cache=15.85mB

Your chaindata folder will be 220+ GB after you have fully sync'ed.

You can tell that you are fully sync'ed by getting chain segment messages of only 1 block, which is identical to the current block number:

INFO [03-16|21:23:04] Imported new chain segment blocks=1 txs=341 mgas=7.990 elapsed=435.942ms mgasps=18.329 number=5269405 hash=939ae8…96e825 cache=143.76mB

Good luck!

See this issue for a detailed description of what is going on under the hood - https://github.com/ethereum/go-ethereum/issues/15001#issuecomment-370732526

@quantumproducer:

See @tinschel 's comment above and also see https://github.com/ethereum/go-ethereum/issues/16147#issuecomment-371878483

@tinschel you said:

You can tell that you are fully sync'ed by getting chain segment messages of only 1 block, which is identical to the current block number

Wouldn't it be super nice if eth.syncing would return false only when this point is reached? And give some sort of human understandable numbers back detailing the progress it is making, until it gets there?

I see so many questions on this point, users are totally confused by eth.syncing's behaviour.
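For what it's worth, here is a minimal sketch of the kind of human-readable summary being asked for. It only uses the fields that eth.syncing already returns in this thread; `summarizeSync` is a hypothetical helper name, not part of geth or web3. Note that statesPending is just knownStates minus pulledStates at that moment, so it is not an upper bound: knownStates keeps growing as more of the state trie is discovered.

```javascript
// Hypothetical helper (not a geth/web3 API): turn an eth.syncing result
// into a few readable numbers.
// `syncing` is either false or an object with the fields shown in this thread.
function summarizeSync(syncing) {
  if (syncing === false) {
    return { syncing: false, blocksBehind: 0, statesPending: 0 };
  }
  return {
    syncing: true,
    // Distance between the local chain and the best block seen on the network.
    blocksBehind: syncing.highestBlock - syncing.currentBlock,
    // State entries known about but not yet downloaded (grows during sync).
    statesPending: syncing.knownStates - syncing.pulledStates
  };
}

// Values copied from an eth.syncing output posted earlier in this thread.
var syncSummary = summarizeSync({
  currentBlock: 5186010,
  highestBlock: 5186166,
  knownStates: 32389802,
  pulledStates: 32378516,
  startingBlock: 5185518
});
console.log(syncSummary);
```

With that sample it reports 156 blocks behind and 11286 states pending, which still says nothing about how many states remain undiscovered.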

it sure would be, @cies !

This is the dumbest shit ever.

I synced and took some measurements: http://www.freekpaans.nl/2018/04/anatomy-geth-fast-sync/

@tinschel I don't see a lot of time being taken by "Importing chain segments". In fact it only seems to be doing that for "new" blocks. Any idea?

That is awesome analysis @FreekPaans... I pondered back in December 2017 about whether it is to do with a particular method called requestTTL... https://github.com/ethereum/mist/issues/2749#issuecomment-354282608

Basically you can be stalled waiting for one of the peers to be giving you the information you need.

Also, parts of the network may be starved of blocks or the trie state that you are seeking. Effectively, if you are not connected to enough peers with the latest data, then you will be waiting on the "network" to respond with the information you need in order to continue with the chain validation.

There was some good analysis I found here:

- https://github.com/ethereum/go-ethereum/issues/15824

- https://gist.github.com/holiman/5ec5d518c0ebe602872e37bd5775f0ef

Hope this helps... I'm yet to figure out the exact reason why it stalls.

Yes, I also have the feeling it's due to blocks/state trie data not being available at the peers I'm connected to, but no clue how I'd verify that. I'm doing a full sync now, has been fetching just the blocks for over 11hrs as we speak, still about 30% to go.

@FreekPaans: I'm surprised your first single-block “Imported new chain segment” log message came about so fast. At what point in your analysis did you see the importing of chain segments starting? For me the importing of chain segments lasted 6 days before my eth.syncing actually showed false.

Maybe your higher speed had to do with the increased number of peers (25) that you were seeing. I did not unblock port 30303 and although I also used --maxpeers 25 (which is the default) I only ever saw 8 peers max.

@tinschel yes, with 30303 closed it stuck on about 8 peers with me as well. Not familiar with the P2P protocol so don't know why.

The first Imported new chain segment came when the state trie finished importing, and only 4 of those entries had a block count > 1.

Took me about 1-2 hours to fully sync Rinkeby using geth --rinkeby (2,057,556 blocks and about 10,760,209 state entries).

I now also completed a geth full sync: http://www.freekpaans.nl/2018/04/anatomy-of-a-geth-full-sync/. tl;dr: took a little over 9 days, vs under 8 hours for fast sync.

@FreekPaans, yes, the 9 days are more in line with my sync; I didn't realize that your original sync didn't go all the way; if you only do fast sync, others are not able to use you as a node for blockchain synchronization; so if everyone just used fast sync, the blockchain couldn't exist.

@tinschel

That sounds very bad for Ethereum. Its size grows really fast (I think in the range of 100 GB a month) and not many people have that much space for a full sync. How can it scale for the intended usage (rule the world)?

@garyng2000, that's a little bit of an exaggeration; I've been running the full sync now for a month and it has only grown from 220GB to 241GB today

@tinschel

Would that be affected by when you started (the recent price drop can have an effect on the number of transactions)? I remember we had a 'fast sync' node that was growing really fast, but I am not managing it so I'm not sure how large it is now. But even using your figure, it is still growing at an alarming rate. Basically, it's not something for a typical 'personal' node, and it breaks the intended large P2P nature. Even for mining, we are seeing that most of it is done by very few pools, so it's basically centralized in a sense.

if you only do fast sync, others are not able to use you as a node for blockchain synchronization; so if everyone just used fast sync, the blockchain couldn't exist.

Nope, that's incorrect. A fast-synced node helps out, it's got a lot of state others can download. A les-client otoh is maybe what you were thinking about.

@garyng2000, no effect on the start date. it's possible that different months have more transactions and state and therefore the blockchain size grows more rapidly during those months. There is no difference between the block chain size in fast sync mode and full mode because after fast sync completes, it automatically switches to full mode. In other words, fast sync may get you to the end of the blocks very quickly, but you won't have the full blockchain with all state until you complete the full sync.

So fast sync is good if all you want to do is maintain a wallet or start mining. Full sync is necessary so that you can become a full node within the Ethereum blockchain.

That is strange; I just asked the person maintaining our node. The last fast sync (from scratch) was about a month ago and it ended at about 60 GB (all updated state downloaded), and now it is already 140 GB. Not sure what is going on there. From an operational perspective, though, I see no difference between full and fast (which is just a trimmed full): we can still do all operations, unlike light, which is basically useless for our usage.

I am experiencing the same issue when using geth --fast --cache 1048.

Imported new state entries

eth.syncing

{

currentBlock: 5535921,

highestBlock: 5536021,

knownStates: 274,

pulledStates: 273,

startingBlock: 5535921

}

This is driving me crazy. One week to sync! 😳

Read, tried! And nothing helps

If you use a hard disk geth will not work. You need SSD, or switch to parity for syncing.

Just give some information:

I started the syncing from May 18, It just ended minutes ago.

BlockNumber: 5740903

pulledStates: 191247645

@TooBug Hello,

Do you get "false" when you type this command: eth.syncing

Can you give me the knownStates/pulledStates numbers you are at?

@Othman21 Oth

Yes, When it's finished, eth.syncing returns false.

The number is given above. KnownStates is quite similar to pulledStates.

@TooBug Can you tell me the exact processed number that you see right now ?

For example, I'm at processed=193996209

FWIW I was able to get geth to fully sync by waiting until eth.blockNumber is near the numbers in eth.syncing and then restarting geth. I was able to do this at ~160m states. After restarting geth, it took about 20 min to catch up to the blockchain and now eth.syncing is false and the only output now is 'imported new chain segment' every time a new block is found.

Here is an output of mine taken on 16JUN2018 at 6:18am PDT:

>eth.syncing

{

currentBlock: 5799102,

highestBlock: 5799188,

knownStates: 96279752,

pulledStates: 96279752,

startingBlock: 5799001

}

@tinschel here is an output of mine taken on 19JUN2018 at 7:32am PDT:

> eth.syncing

{

currentBlock: 5815191,

highestBlock: 5815294,

knownStates: 198143487,

pulledStates: 198143486,

startingBlock: 5815181

}

Just to clarify... fastsync is now default... and I may quit and restart geth with fast enabled?

Anyway I'm currently at:

> eth.syncing

{

currentBlock: 5827681,

highestBlock: 5827747,

knownStates: 96988858,

pulledStates: 96988508,

startingBlock: 5824114

}

> eth.blockNumber

0

@tinschel @Othman21 How is this possible? 100% more state entries in 3 days?

@Othman21 This is nuts! 29 days and 200m state entries? Crazy...

@codepushr yes you can, i run this command :

./geth --cache=25000 --rpc --maxpeers 25 --fast

I have not finished syncing yet, today it will be 29 days

eth.syncing

{

currentBlock: 5829510,

highestBlock: 5829582,

knownStates: 203714648,

pulledStates: 203714648,

startingBlock: 5829510

}

eth.blockNumber

0

@codepushr i know

I'm looking for someone who has finished syncing to tell me the current knownStates that he has to get an idea if I'm far or near.

It's so bad that there is no mechanism to determine the absolute progress...

@codepushr : yes, fast sync is enabled by default so you don't have to specify it on command line, BUT once you start sync'ing and you restart geth, it will automatically DISABLE fast sync; so best not to restart

@tinschel Really? So even when restarting with --fast or --syncmode=fast it will boot up in full sync and I would need to remove the db and restart from scratch to get a fast sync?

I'm fairly certain that it is not the case... you are in fast sync until you hit the pivot point. Then eth.blockNumber will be a positive value (not zero). From that point onwards, you are in full sync mode.

Here are the details of the pivot implementation: https://github.com/ethereum/go-ethereum/pull/14460 and https://github.com/ethereum/go-ethereum/commit/0042f13d47700987e93e413be549b312e81854ac
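The rule of thumb described above can be sketched as a small check. This is only an illustration under assumptions: `classifySyncPhase` is a hypothetical name, and it relies solely on the behaviour reported in this thread (eth.blockNumber stays 0 until the pivot is reached, and eth.syncing returns false once the node is caught up). As far as I know eth.syncing is also false before syncing has started, so the result for that case is a guess, not a guarantee.

```javascript
// Hypothetical classifier (not a geth API) based on the description above.
// `syncing` is the value of eth.syncing, `blockNumber` is eth.blockNumber.
function classifySyncPhase(syncing, blockNumber) {
  if (syncing === false) {
    // false alone is ambiguous, so also look at the local head block.
    return blockNumber > 0 ? "not syncing (probably caught up)" : "not syncing (not started?)";
  }
  if (blockNumber === 0) {
    // Head is still unset: the state download has not reached the pivot yet.
    return "fast sync: before pivot";
  }
  // Head is set while still syncing: remaining blocks are imported in full mode.
  return "past pivot: importing blocks in full mode";
}

// Values taken from reports in this thread.
console.log(classifySyncPhase({ currentBlock: 5829510, highestBlock: 5829582 }, 0));
console.log(classifySyncPhase(false, 330039));
```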

I would just not bother specifying --fast on command line or removing your DB and restarting in the hope that it will be faster. just run geth without the option and keep going! If you have to restart geth don't specify the option. geth will use fast by default if it can. otherwise it will give you a message that fast is disabled.

@codepushr @tinschel any news ?

eth.blockNumber

0

eth.syncing

{

currentBlock: 5852375,

highestBlock: 5852457,

knownStates: 213724149,

pulledStates: 213706822,

startingBlock: 5852248

}

@Othman21 Currently at 121m states... I'll need another week to catch up lol.

you unfortunately don't see the known states anymore once synchronization is complete. But my currentBlock is at 5853416 as of 25JUN2018 1218 Pacific Time.

Is there anyone who has finished syncing who can tell us their current knownStates?

eth.syncing

{

currentBlock: 5865970,

highestBlock: 5866080,

knownStates: 218653449,

pulledStates: 218633252,

startingBlock: 5865761

}

eth.blockNumber

0

here is where I'm currently at after a 1 hour break:

> eth.syncing

{

currentBlock: 5866670,

highestBlock: 5866837,

knownStates: 96279752,

pulledStates: 96279752,

startingBlock: 5866459

}

@tinschel are you already synced and just paused it for an hour to demonstrate? if so, how come your states are less than 1/2 of @Othman21 's?

I don't think pulledStates can be used for progress indication, since I just reconnected a node after a while and that one didn't get beyond 115m states.

@codepushr yes I'm sync'ed and just paused for an hour. that's when I sent the latest eth.syncing. the states are a mystery to me. like @FreekPaans said, they are not a good indicator for sync completion.

@FreekPaans did you finish the sync ?

I have not finished syncing yet, today it will be 37 days.

eth.syncing

{

currentBlock: 5876059,

highestBlock: 5876129,

knownStates: 223140030,

pulledStates: 223136789,

startingBlock: 5875977

}

eth.blockNumber

0

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/simfs 524288000 126645968 397642032 25% /

@codepushr run it in background

sudo nano /etc/systemd/system/eth.service

[Unit]

Description=eth for Pool

After=network-online.target

[Service]

ExecStart=/home/eth-geth/build/bin/geth --cache=25000 --rpc --maxpeers 25 --fast

Restart=always

RestartSec=3

User=root

[Install]

WantedBy=multi-user.target

sudo systemctl enable eth

sudo systemctl start eth

sudo systemctl status eth

@othman21 yes, did you see my blogposts?

http://www.freekpaans.nl/2018/04/anatomy-geth-fast-sync/

http://www.freekpaans.nl/2018/04/anatomy-of-a-geth-full-sync/

This time I synced from a node that was about 1 month stale, took 10 days.

@FreekPaans

Yes, I saw them. My geth only gets the latest block and sometimes some knownStates, and it's very "slow"

I just changed my command line like yours

geth --maxpeers 25 --cache 25000 --verbosity 4 --syncmode full

Can you please post a screenshot of the lines that appear in your terminal so I can get an idea?

You have a vps more powerful than mine (x3) lol

@Othman21 see attached. This is when I restart a node that was last fully synced a couple of hours ago.

geth-detail.log

I have 2 servers to sync. A for a week, B for 2 weeks. Here are their states:

Machine A:

> eth.syncing

{

currentBlock: 6358077,

highestBlock: 6358143,

knownStates: 167673098,

pulledStates: 167673097,

startingBlock: 6357998

}

> eth.blockNumber

0

> net.peerCount

21

Machine B:

> eth.syncing

{

currentBlock: 6358040,

highestBlock: 6358157,

knownStates: 208173888,

pulledStates: 208173888,

startingBlock: 6358040

}

> eth.blockNumber

0

> net.peerCount

2

I succeeded before; as I recall, the "state entries" log ended at 150 million.

Now the state entries are past 160 million and 200 million. I wonder when it will end...

@dreamingodd Do you have any updates (e.g. at which number the states were finishing)?

@dreamingodd , I've been sync'ed for several months now; when I restart geth, it has to re-sync just the last few blocks. Here is what I get when I do that today (9/28/2018 at 11:41am PT):

> eth.syncing

{

currentBlock: 6416641,

highestBlock: 6416662,

knownStates: 96279752,

pulledStates: 96279752,

startingBlock: 6416641

}

What's the current upper bound of --testnet in fast sync mode? It's been almost a week, 8hrs per day:

{

currentBlock: 4479565,

highestBlock: 4479638,

knownStates: 54592672,

pulledStates: 54572219,

startingBlock: 4474887

}

I recently completed a fast sync, starting from scratch. My node processed 254,811,206 state entries before finishing. Here was the last Imported new state entries line:

INFO [12-07|09:41:58.173] Imported new state entries count=325 elapsed=9.931ms processed=254811206 pending=0 retry=0 duplicate=14306 unexpected=44810

And here is some context around that log line:

INFO [12-07|09:41:52.982] Imported new state entries count=499 elapsed=7.055ms processed=254810260 pending=771 retry=0 duplicate=14306 unexpected=44810

INFO [12-07|09:41:57.012] Imported new state entries count=621 elapsed=4.587ms processed=254810881 pending=796 retry=0 duplicate=14306 unexpected=44810

INFO [12-07|09:41:58.173] Imported new state entries count=325 elapsed=9.931ms processed=254811206 pending=0 retry=0 duplicate=14306 unexpected=44810

INFO [12-07|09:41:58.177] Imported new block receipts count=1 elapsed=3.971ms number=6841800 hash=c4db3b…040b25 age=27m9s size=116.08kB

INFO [12-07|09:41:58.178] Committed new head block number=6841800 hash=c4db3b…040b25

INFO [12-07|09:42:07.044] Imported new chain segment blocks=10 txs=927 mgas=65.008 elapsed=8.864s mgasps=7.334 number=6841810 hash=2698fa…cebe66 age=25m46s cache=7.55mB

INFO [12-07|09:42:15.582] Imported new chain segment blocks=12 txs=1384 mgas=88.370 elapsed=8.537s mgasps=10.351 number=6841822 hash=a9064c…432e78 age=23m32s cache=17.34mB

INFO [12-07|09:42:24.527] Imported new chain segment blocks=12 txs=1440 mgas=74.632 elapsed=8.944s mgasps=8.344 number=6841834 hash=c80849…f12f03 age=20m21s cache=27.75mB

INFO [12-07|09:42:32.561] Imported new chain segment blocks=14 txs=1246 mgas=83.007 elapsed=8.034s mgasps=10.331 number=6841848 hash=2cbbfe…d173c0 age=18m19s cache=37.18mB

INFO [12-07|09:42:41.012] Imported new chain segment blocks=14 txs=1655 mgas=80.438 elapsed=8.451s mgasps=9.518 number=6841862 hash=5d3bc2…bd7289 age=14m19s cache=47.72mB

INFO [12-07|09:42:49.356] Imported new chain segment blocks=10 txs=1557 mgas=63.153 elapsed=8.344s mgasps=7.569 number=6841872 hash=1c11a8…ba7cac age=11m44s cache=56.52mB

INFO [12-07|09:42:57.637] Imported new chain segment blocks=15 txs=1616 mgas=85.097 elapsed=8.280s mgasps=10.276 number=6841887 hash=1ac03b…622d3e age=7m53s cache=67.46mB

INFO [12-07|09:43:06.485] Imported new chain segment blocks=13 txs=1657 mgas=79.934 elapsed=8.847s mgasps=9.034 number=6841900 hash=2300d2…e3076a age=4m38s cache=77.99mB

INFO [12-07|09:43:10.533] Imported new chain segment blocks=8 txs=793 mgas=32.020 elapsed=4.048s mgasps=7.909 number=6841908 hash=8dd97c…cb2afe age=3m9s cache=82.81mB

INFO [12-07|09:43:10.535] Imported new block headers count=1 elapsed=1m2.045s number=6841909 hash=8bb9a3…27c074 age=3m4s

INFO [12-07|09:43:10.544] Imported new block headers count=1 elapsed=8.759ms number=6841910 hash=d3f22b…1d80b0 age=2m24s

INFO [12-07|09:43:10.554] Imported new block headers count=2 elapsed=10.131ms number=6841912 hash=67b75c…918ac2 age=1m10s

WARN [12-07|09:43:10.610] Discarded bad propagated block number=6841904 hash=b7deda…28b3dc

INFO [12-07|09:43:10.683] Imported new block headers count=1 elapsed=7.534ms number=6841913 hash=58f0f9…a9a201

INFO [12-07|09:43:11.590] Imported new chain segment blocks=1 txs=165 mgas=7.985 elapsed=808.053ms mgasps=9.882 number=6841909 hash=8bb9a3…27c074 age=3m5s cache=83.99mB

INFO [12-07|09:43:14.869] Imported new chain segment blocks=4 txs=755 mgas=29.935 elapsed=3.278s mgasps=9.131 number=6841913 hash=58f0f9…a9a201 cache=88.67mB

INFO [12-07|09:43:14.869] Fast sync complete, auto disabling

INFO [12-07|09:43:36.504] Imported new chain segment blocks=1 txs=48 mgas=1.493 elapsed=198.174ms mgasps=7.535 number=6841914 hash=1be461…c61103 cache=88.98mB

INFO [12-07|09:44:16.096] Imported new chain segment blocks=1 txs=192 mgas=7.996 elapsed=558.982ms mgasps=14.304 number=6841915 hash=e9f46b…989562 cache=90.27mB
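If you want to pull numbers like processed and pending out of a saved log, a small parser is enough. The sketch below assumes the line layout seen in this thread (level, bracketed timestamp, message, then key=value pairs); `parseGethLogLine` is a hypothetical helper, and the format is not guaranteed to be stable across geth versions.

```javascript
// Hypothetical helper: split a geth log line of the form
//   LEVEL [timestamp] Message text key=value key=value ...
// into its parts. Returns null if the line does not match that shape.
function parseGethLogLine(line) {
  var match = /^(\w+)\s+\[([^\]]+)\]\s+(.*)$/.exec(line);
  if (!match) {
    return null;
  }
  var rest = match[3];
  var fields = {};
  var pairRe = /(\w+)=(\S+)/g;
  var firstKeyIndex = rest.length;
  var pair;
  while ((pair = pairRe.exec(rest)) !== null) {
    // Remember where the first key=value starts; the message ends there.
    if (pair.index < firstKeyIndex) {
      firstKeyIndex = pair.index;
    }
    fields[pair[1]] = pair[2]; // values are kept as strings
  }
  return {
    level: match[1],
    time: match[2],
    message: rest.slice(0, firstKeyIndex).trim(),
    fields: fields
  };
}

// The last state-entries line quoted above.
var lastStateLine = "INFO [12-07|09:41:58.173] Imported new state entries count=325 elapsed=9.931ms processed=254811206 pending=0 retry=0 duplicate=14306 unexpected=44810";
var parsedStateLine = parseGethLogLine(lastStateLine);
console.log(parsedStateLine.message, Number(parsedStateLine.fields.processed), parsedStateLine.fields.pending);
```

In the log above, pending=0 on an "Imported new state entries" line was immediately followed by the pivot block being committed, so watching for that pair of fields is one way to spot the end of the state download in your own logs.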

@jeremyschlatter what kind of system do you have and how long did it take to sync?

And should this issue be re-opened? It seems like people are still getting "stuck" during a fast sync waiting for states and confused because of the lack of a progress bar. I understand building a progress bar for this in a decentralized and secure way is not simple (or maybe even possible), but then we need better messaging.

I did one recently, about same number of states, took 5 days on a 4 core/8 gb + SSD

@WyseNynja ~4 days on an n1-standard-4 + SSD on Google Cloud Platform

I have a 12 core 32GB ram machine and after ~2 days or so I'm at 163mm state entries done which appears to be ~64% of the way through so yeah about 4 days (pretty sure it gets slower the further it progresses) on my machine. Gave it cache=7000
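The ~64% figure is consistent with dividing by the 254,811,206 final count reported a few comments up. A minimal sketch of that arithmetic follows; `progressAgainstReference` is a hypothetical name, and the reference total is only as good as the report it comes from, since the number of states keeps growing over time.

```javascript
// Hypothetical helper: fraction of state entries done, relative to a final
// count reported by someone who finished recently. Capped at 1 because the
// reference may already be out of date.
function progressAgainstReference(processed, referenceTotal) {
  return Math.min(1, processed / referenceTotal);
}

// 163 million processed vs. the 254,811,206 total reported earlier in this thread.
var stateFraction = progressAgainstReference(163e6, 254811206);
console.log((stateFraction * 100).toFixed(0) + "%");
```

A linear extrapolation from this fraction is likely to be optimistic, given the remark above that the rate seems to drop the further the sync progresses.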

Just finished fast sync on AWS EC2 i3.xlarge (with attached NVMe SSD).

It took about 17 hours, and the final eth.syncing.pulledStates was about 252307641.

Interesting, I am currently at pulledStates: 261648186.

For those interested: I just finished a sync to the testnet (Ropsten) in about 23 hours on an n1-standard-4 Google Cloud VM with an SSD (100GB), default configuration has 4 vCPUs and 15 GB memory. I ran geth with --cache=4096. Pulled states was 80M+ shortly before finishing.

@kaythxbye have you finally sync'd?

{

currentBlock: 6897129,

highestBlock: 6897235,

knownStates: 266058499,

pulledStates: 266043876,

startingBlock: 6897063

}

This node has been syncing for 2+ weeks. Recently upgraded to v1.8.20-stable-24d727b6

It took me around 3 days and 4 hours to synchronize a ETH Geth fast node:

Settings: --syncmode fast --gcmode full --cache 4096

Start: 2018-12-15 10:00 UTC

End: 2018-12-18 14:08 UTC

State entries: 260172278

Disk usage: 149G

Hardware: Virtual machine, 4 cores, 8 GB memory, 300 GB SSD

As of today, it took about a week to finally sync.

Testnet (Ropsten)

- Disk usage: about 40 GB.

- knownStates: 92 million, more or less.

- Hardware: AWS cloud machine, 2 processors, 2 GB memory, 100 GB SSD.

Just finished syncing mainnet at block 7089163. Total time about 36 hours on a quad core with 32GB RAM, fast connection, 256GB SSD. Disk usage ~154GB.

Final "Imported new state entries": 268473324

INFO [01-18|21:27:10.217] Imported new state entries count=1081 elapsed=12.427ms processed=268473324 pending=0 retry=0 duplicate=24770 unexpected=101202

February 6 2019

sync took 5 days

2 vCPU

8 GB RAM

SSD drive

disk usage: 160G

highestBlock: 7178900

knownStates: 276777218

HARDWARE:

HP ProBook470 G2/windows7

Core i5-5200 @ 2.2Ghz

8GB

Samsung T5 USB 3.0 SSD 250G

Geth running on a virtual machine:

ubuntu server on Vmware player

3 processor 3GB System memory

2GB geth cache option

default fast sync option

It took about 1 week

block 7191540

knownStates are about 283500000

disk usage : 167G

Just finished now, took around 4 days on a Laptop with

- Intel Core i5-8250U

- Samsung MZ-V7E500BW 970 EVO.

Disk Usage: 162G

processed=284332222 pending=0 retry=0 duplicate=11504 unexpected=67689

My statistics:

2019-05-06 01:00:32 avg: 1827 max: 1938 min: 1378 states/s remain: 136604075 states 4 peers eta@ 20:46:28.165828

2019-05-06 01:00:37 avg: 1864 max: 1938 min: 1378 states/s remain: 136595500 states 3 peers eta@ 20:21:14.951050

2019-05-06 01:00:42 avg: 1791 max: 1938 min: 1378 states/s remain: 136583359 states 3 peers eta@ 21:11:16.481006

2019-05-06 01:00:48 avg: 1742 max: 1938 min: 1378 states/s remain: 136580287 states 3 peers eta@ 21:46:35.797305

2019-05-06 01:00:53 avg: 1721 max: 1938 min: 1378 states/s remain: 136575694 states 3 peers eta@ 22:03:01.154434

2019-05-06 01:00:58 avg: 1682 max: 1938 min: 1378 states/s remain: 136569043 states 4 peers eta@ 22:33:15.402442

2019-05-06 01:01:03 avg: 1698 max: 1938 min: 1378 states/s remain: 136564293 states 3 peers eta@ 22:20:27.458747

PS. I wrote a tiny Python script to monitor the process. It's here: https://github.com/hayorov/ethereum-sync-mertics
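For anyone who wants the same kind of ETA without the script, the arithmetic is just remaining states divided by the average rate. This is a sketch under that assumption only: `formatEta` is a hypothetical name, and how the linked script derives its `remain` value is not shown in this thread.

```javascript
// Hypothetical helper: estimate time left as remaining / rate and format it
// as H:MM:SS. Returns "unknown" when the rate is zero, negative, or NaN.
function formatEta(remainingStates, statesPerSecond) {
  if (!(statesPerSecond > 0)) {
    return "unknown";
  }
  var totalSeconds = Math.floor(remainingStates / statesPerSecond);
  var hours = Math.floor(totalSeconds / 3600);
  var minutes = Math.floor((totalSeconds % 3600) / 60);
  var seconds = totalSeconds % 60;
  function pad(n) {
    return (n < 10 ? "0" : "") + n;
  }
  return hours + ":" + pad(minutes) + ":" + pad(seconds);
}

// First line of the statistics above: remain=136604075, avg=1827 states/s.
console.log(formatEta(136604075, 1827));
```

For the first statistics line this gives 20:46:09, close to the 20:46:28 shown there; the small gap is presumably because the displayed average is rounded.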

Would be really useful if this would be trackable somewhere in geth or via some online tool like Etherscan. The latest number of states that I had before syncing completed was 314.8m state entries. I was dumping an output every 15 minutes to the console while syncing (because when you're synced up you cannot read that number anymore):

setInterval(function(){console.log(web3.eth.syncing.knownStates/1000000);}, 15*60*1000)
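A variation on the same idea, as a rough sketch: instead of only printing, remember the last knownStates value so it can still be read after eth.syncing has turned false. `makeKnownStatesTracker` is a hypothetical helper, written in plain ES5-style JavaScript; wiring it to web3.eth.syncing in the console (shown in the comment) mirrors the snippet above and is untested.

```javascript
// Hypothetical helper: keeps the most recent knownStates value seen, so the
// number survives the moment eth.syncing switches from an object to false.
function makeKnownStatesTracker() {
  var last = null;
  return {
    // Pass in the current value of eth.syncing; returns the last known count.
    record: function (syncing) {
      if (syncing && typeof syncing.knownStates === "number") {
        last = syncing.knownStates;
      }
      return last;
    },
    last: function () {
      return last;
    }
  };
}

// In the geth console this might be driven like the snippet above (untested):
//   var tracker = makeKnownStatesTracker();
//   setInterval(function(){ tracker.record(web3.eth.syncing); }, 15*60*1000)
// Stand-alone demonstration with made-up sample values:
var demoTracker = makeKnownStatesTracker();
demoTracker.record({ knownStates: 314800000, pulledStates: 314799000 });
demoTracker.record(false); // sync finished: the stored value is kept
console.log(demoTracker.last() / 1000000);
```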

322.14m states on may 9th

INFO [05-09|12:10:17.599] UTC - new state entries processed=322150741

~1 day for blockchain. ~5 days for states - 206 GB on disk

Just completed another one, took a little under 48 hours.

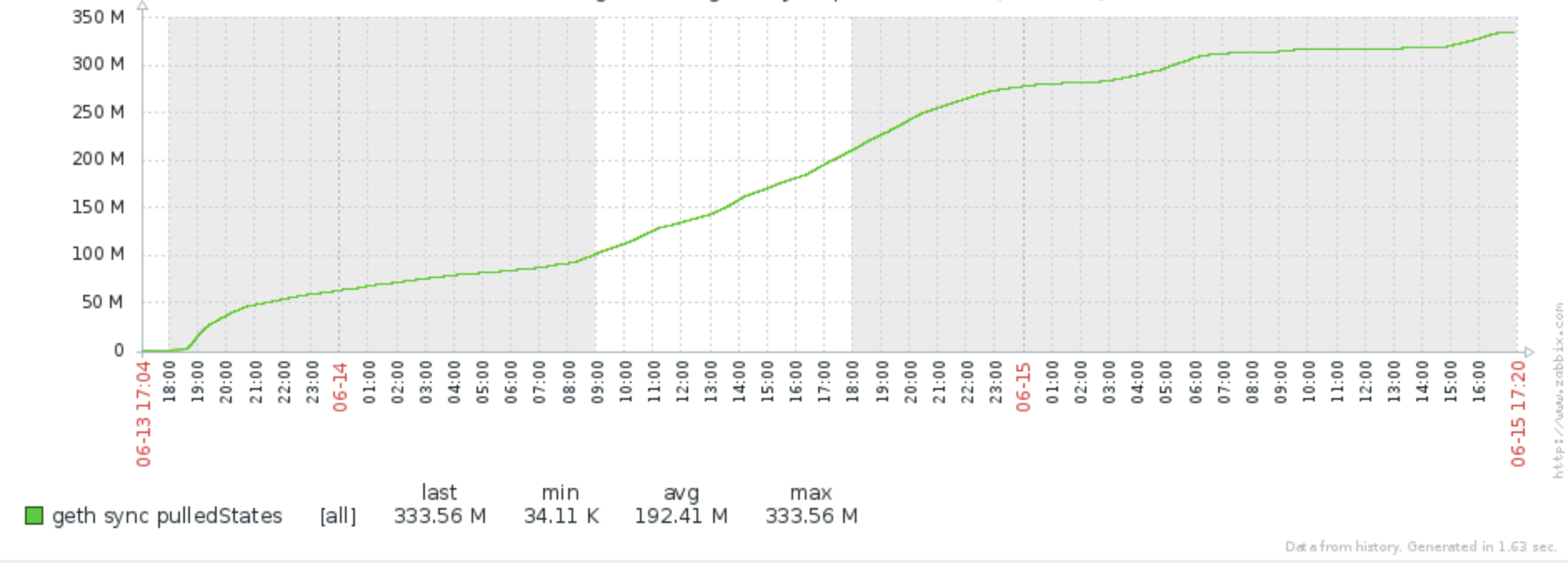

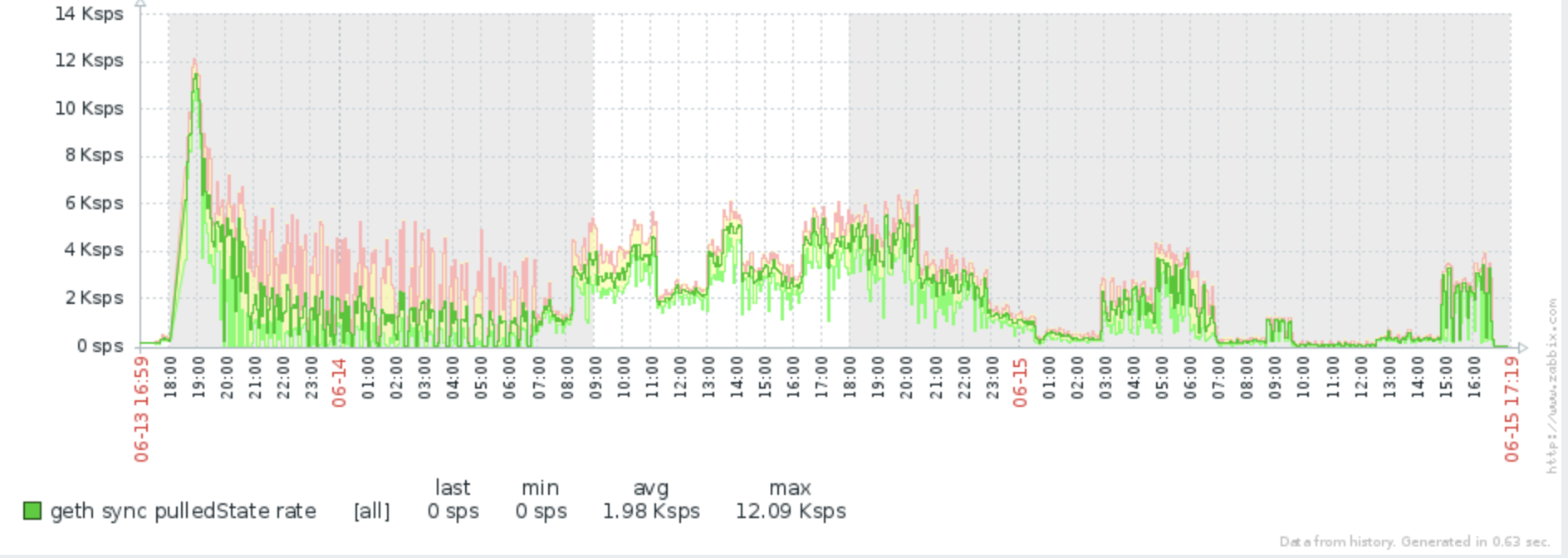

Geth 1.8.27

AWS t2.xlarge (4 cores, 16gb)

States 333564669 @ block 7963639

What's interesting is that at some point the states "rate" gets really low:

I suspect this is due to certain parts of the state trie not being available at my peers. Might be that restarting (or some other way of getting new peers) would help in those cases.

There are now more than 362,778,222 states.

INFO [07-23|18:30:58.161] Imported new block headers count=1 elapsed=4.196ms number=8208527 hash=6891ea…0a52cb age=1m2s

INFO [07-23|18:31:22.870] Imported new state entries count=484 elapsed=4.748ms processed=362778222 pending=2760 retry=0 duplicate=1583 unexpected=9221

It would be really nice if there were some way to know how much longer this is going to take.

Hi @WyseNynja, how long did it take you to sync 362,778,222 states?

It's the 14th day and I am only at 260873292 :(

Started the node fresh on July 11. The blocks synced fast, but states are going slow. The server is busy doing other things, but this is longer than I expected. It’s still syncing now.

I also started on July 12, but it's way too slow. Only 269325649 entries synced so far.

Can you help me with the configuration you are using?

Sounds like you're on an HDD instead of SSD

yes. I started with HDD

It can't sync on an HDD - it's too slow

For those looking just for the number:

13 Aug 2019: 400.695.865 states

Long way to go.

I think your sync speed for state entries and blocks has to do with your memory. I have a 614000 cache and I'm at 30000000 state entries after a few hours, around block 5800000,

and the state entries are flying by.

4 days 15 hours for me. Running Ryzen 1700X with SSD and cable modem

24794 pts/2 Sl+ 896:58 build/bin/geth -rpc

[ron@Bertha bin]$ ps -p 24794 -o etime

ELAPSED

4-15:50:16

Need one of the eth blockchain services to list state entries along with the blockchain size data etc, welp until then:

2019-11-25: 405,923,438

Just finished fast sync after 5 days 12 hours. This thread here helped me knowing what to expect, so here are my stats.

2019-12-04

Hardware: Raspberry Pi 4 Model B/4GB, SSD attached via USB3, broadband internet.

Geth version: 1.9.8

Datadir size: 186 GB

Highest block: 9,048,250

Number of imported state entries: 441,013,364

12-05-2019 418,072,677 state entries.

12-25-2019

Geth version: 1.9.9

Highest block: 9160798

Known states: 475519190

There really should be an API for this.

Jan 17 2020

Known states: 432,391,041

Not sure why that is smaller than the one above.

INFO [01-17|18:39:47.580] Imported new state entries count=433 elapsed=2.721ms processed=432391041 pending=0 retry=0 duplicate=71137 unexpected=326170

INFO [01-17|18:39:47.590] Imported new block receipts count=1 elapsed=7.889ms number=9300316 hash=7e5fbf…197524 age=24m54s size=54.71KiB

INFO [01-17|18:39:47.593] Committed new head block number=9300316 hash=7e5fbf…197524

INFO [01-17|18:39:47.624] Deallocated fast sync bloom items=431854683 errorrate=0.001

Nice, updated https://github.com/hayorov/ethereum-sync-metrics

On a system with 4 cores, 16 GB RAM, 320 GB SSD it took around 36 hours to sync in 'fast' syncmode. Once the syncing was done, the system was adjusted to use 2 cores and 10GB RAM and the same disk. This seems to work well.

Ditto on the ~36 hrs, Known states was 431,372,690 @ block height ~9361043

Is it not possible to extract this information from a node that is already synced?

Can this be reopened? If I open a new issue requesting an API to retrieve the current number of states in the trie after the node is done syncing will it be closed summarily?

Still syncing on known states 436141638 and block height 9362396

15 Feb 2020

446266045

INFO [02-15|22:49:59.641] Imported new state entries count=484 elapsed=11.987ms processed=446266045 pending=0 retry=0 duplicate=50314 unexpected=640943

17 Mar 2020

466184476

INFO [03-17|02:20:26.688] Imported new state entries count=343 elapsed=16.813ms processed=466184476 pending=0 retry=0 duplicate=157290 unexpected=584684

25.03.2020

I am not sure when my blockchain finished downloading, but I guess that I had to fetch at most 475000000 state entries.

INFO [04-11|22:56:13.547] Imported new block receipts count=1 elapsed=999.7µs number=9852885 hash=45231e…244fc9 age=20m32s size=9.53KiB

INFO [04-11|22:56:13.566] Committed new head block number=9852885 hash=45231e…244fc9

INFO [04-11|22:56:13.618] Deallocated fast sync bloom **items=480155136** errorrate=0.001

items=480155136

My problems with syncing for 2 weeks were relying on HDD and double-NAT. But after adding an SSD read-only cache to my NFS, and after today opening the ports on my outer NAT while still relying on UPnP for my inner router I got it synced.

My final number of processed state entries was 487040102.

INFO [04-14|19:48:25.498] Imported new state entries count=271 elapsed=2.687ms processed=487040102 pending=0 retry=0 duplicate=17264 unexpected=61660

INFO [04-14|19:48:25.556] Deallocated fast sync bloom items=486016461 errorrate=0.001

Updated bound:

INFO [05-10|23:51:14.924] Imported new state entries count=147 elapsed=7.432ms processed=504700513 pending=0 retry=0 duplicate=1115 unexpected=4208

INFO [05-10|23:51:14.968] Imported new block receipts count=1 elapsed=37.733ms number=10040872 hash=27a688…d5f8f4 age=28m12s size=23.45KiB

INFO [05-10|23:51:14.978] Committed new head block number=10040872 hash=27a688…d5f8f4

INFO [05-10|23:51:14.982] Deallocated fast sync bloom items=504344987 errorrate=0.202

INFO [05-10|23:51:25.223] Imported new chain segment blocks=3 txs=407 mgas=29.972 elapsed=10.224s mgasps=2.932 number=10040875 hash=4cb642…c3d687 age=27m45s dirty=3.67MiB

I.e. 504344987 state entries at 10 May 2020. For fast sync this is about 240GB of data stored on the SSD.
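For anyone collecting these numbers from their own logs, here is a minimal sketch of pulling the final count out of the `Deallocated fast sync bloom` line. It assumes the `key=value` log layout shown in this thread; the function names are made up for illustration and are not part of geth.

```python
import re

# Matches key=value pairs such as processed=504700513 or hash="70eb79…2712a6".
_FIELD = re.compile(r'(\w+)=("[^"]*"|\S+)')


def parse_geth_fields(line):
    """Return the key=value fields of one geth log line as a dict of strings."""
    return {key: value.strip('"') for key, value in _FIELD.findall(line)}


def final_state_count(lines):
    """Return items= from the last 'Deallocated fast sync bloom' line, or None.

    Assumption: that line is only logged once state sync has finished,
    as in the logs pasted in this thread.
    """
    count = None
    for line in lines:
        if "Deallocated fast sync bloom" in line:
            fields = parse_geth_fields(line)
            if "items" in fields:
                count = int(fields["items"])
    return count
```

Feeding it the saved console output (for example `final_state_count(open("geth.log"))`, where `geth.log` is a hypothetical file name) returns the integer to report here.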

Using a RPi v4, 4GB RAM, externally powered Samsung T5 1TB SSD to sync. Pro tip for Raspberry Pi users: use Ubuntu Server 64bit. Raspbian currently does not natively use 64bit. You can manually "allow" this via some kernel settings, but I still ran into out-of-memory issues. I might have done something wrong there. However, on Ubuntu I have not had a single out-of-memory error since I started syncing. Note that I allocated 8GB of swap on the SSD and am using --cache=512 as the Geth parameter. Also make sure your 30303 port is open, so external nodes can connect (and you can find peers!).

Sadly, I have not written down when I started syncing. However, I know it took less than a week. It is somewhere between 5-6 days I think.

11 June 2020

537342779

INFO [06-11|20:02:41.758] Imported new state entries count=452 elapsed=6.587ms processed=537342779 pending=0 retry=0 duplicate=11612 unexpected=28764

Times are in GMT +0. Started about 1 week ago.

Ropsten testnet

INFO [06-19|13:24:06.907] Deallocated fast sync bloom items=245025020 errorrate=0.001

Chaindata Storage - 39.6GB

Ancient Storage - 36.8GB

INFO [06-21|07:57:24.932] Deallocated fast sync bloom items=536929173 errorrate=0.001

Chaindata Storage - 100.2GB

Ancient Storage - 133.2GB

21 June 2020

574408341

INFO [06-21|17:06:28.769] Imported new state entries count=435 elapsed=3.950ms processed=574408341 pending=0 retry=0 duplicate=268 unexpected=578

I think someone has a problem with the state. This won't scale much more.

10 Aug 2020

571739565

INFO [08-09|23:26:03.932] Imported new state entries count=56 elapsed=2.469ms processed=571739565 pending=0 retry=0 duplicate=13914 unexpected=49760

INFO [08-09|23:26:03.939] Imported new block receipts count=1 elapsed=6.060ms number=10626424 hash="70eb79…2712a6" age=23m46s size=67.10KiB

INFO [08-09|23:26:03.941] Committed new head block number=10626424 hash="70eb79…2712a6"

It was quite a hassle; during sync it often got stuck here:

WARN [08-08|00:09:42.076] Dropping unsynced node during fast sync id=541791c3ce2e53df conn=dyndial addr=198.204.254.10:3030

But after a restart it syncs normally again. My guess is that peers apply rate limiting: after a certain amount of data has been synced, the peer starts refusing requests.

So I wrote a shell script that monitors the sync log. When the log grows too little within a certain period of time, the script assumes the node is stuck, automatically kills the sync process, and starts it again.

In this way, it took about 2 days to fully synchronize, and the SSD space used was 249G.
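The script itself was not posted, so the following is only a rough sketch of the idea (restart when the log stops growing), written in Python rather than shell. The window, the polling interval, the growth threshold and the `restart` callback are all assumptions to tune for your own setup.

```python
import os
import time


def is_stalled(size_samples, min_growth_bytes):
    """True if the log grew by less than min_growth_bytes across the samples.

    size_samples holds log file sizes in bytes, oldest first.
    """
    if len(size_samples) < 2:
        return False
    return size_samples[-1] - size_samples[0] < min_growth_bytes


def watch(log_path, restart, window_s=600, interval_s=60, min_growth_bytes=1024):
    """Poll the log size forever and call restart() when growth is too small.

    restart is a caller-supplied function that kills and relaunches geth;
    how it does that is deliberately left out of this sketch.
    """
    keep = window_s // interval_s + 1
    samples = []
    while True:
        samples.append(os.path.getsize(log_path))
        samples = samples[-keep:]
        if len(samples) == keep and is_stalled(samples, min_growth_bytes):
            restart()
            samples = []  # start a fresh window after the restart
        time.sleep(interval_s)
```

Note that a rotated or truncated log shows up as negative growth and would also trigger a restart, so the log should only ever be appended to while this runs.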

572083659 August 9th 2020

INFO [08-09|16:37:34.615] Imported new state entries count=1606 elapsed=20.127ms processed=579048027 pending=0 retry=0 duplicate=0 unexpected=0

INFO [08-09|16:37:34.618] Imported new block receipts count=1 elapsed=1.940ms number=10626719 hash="444831…2b96ff" age=28m10s size=87.93KiB

INFO [08-09|16:37:34.621] Committed new head block number=10626719 hash="444831…2b96ff"

INFO [08-09|16:37:34.644] Deallocated fast sync bloom items=572083659 errorrate=0.001

@wellttllew @supenghai

Hey how long did it take for a full sync?

Imported new state entries count=384 elapsed=4.84µs processed=665994734

My state entries have been downloading for about a week.

@wellttllew

I would like to know your server configuration.

My server configuration is: ten-core processor, 32M memory, 20M bandwidth. The fast sync took about 48 hours.

When reporting sync times, please include the type of disk as well. That can make a huge difference. HDD < SATA SSD < NVME SSD

INFO [08-18|22:42:47.091] Deallocated fast sync bloom items=581136598 errorrate=0.173

Size ~271 GB

Hey @jochem-brouwer is 581136598 your final state entries?

Because for me it is already higher than yours and it is still continuing.

Imported new state entries count=412 elapsed=8.904ms processed=673602785 pending=8320 retry=0 duplicate=0 unexpected=30

I am going on 9 days now for a full "fast"-sync, fast i9 comp, fiber internet with SSD & cache =2048....

Is that a SATA SSD? Or an NVME?

SATA, still it's a very quick unit.

> @wellttllew
>
> I would like to know your server configuration.
>
> My server configuration is: ten-core processor, 32M memory, 20M bandwidth. The fast sync took about 48 hours.

About 3 days, with an AWS Lightsail $80 plan.

4Cores, 16GB, 320GB SSD.

Fast sync took about 10 hours on Amazon i3.xlarge instance (4 vCPUs, 30GB RAM, NVMe hard drive).

575809113 state entries as of 2020-08-25.

I used the following flags:

--cache=4096 (essential for speed improvement, but requires more RAM)

--maxpeers=50

The final .ethereum directory size is about 253 GB.

geth version: 1.9.20-unstable-7b5107b7-20200824

What is the size of the db directory after a full sync? I haven't done this for a while (didn't have the freezer feature back then). TIA.

full sizes without anything moved out

mainnet = 402GB

testnet = 96GB

I started a fast sync and it has been running for 3 days.

On the first day all the blocks were synced, but it is still syncing states and is now really slow!

Here is the syncing result:

{

"currentBlock": 10735343,

"highestBlock": 10735450,

"knownStates": 584833661,

"pulledStates": 584754308,

"startingBlock": 10734477

}
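To read those numbers at a glance, here is a small sketch that turns an `eth.syncing` result into the remaining gaps. The field names are the ones in the output above; the helper name and its return keys are invented for illustration. One caveat: `knownStates` keeps growing as more of the state trie is discovered, so the fraction is a snapshot, not a completion percentage.

```python
def sync_progress(syncing):
    """Summarize an eth.syncing result given as a dict."""
    known = syncing["knownStates"]
    pulled = syncing["pulledStates"]
    return {
        # Blocks between the local head and the highest block seen.
        "blocks_behind": syncing["highestBlock"] - syncing["currentBlock"],
        # State entries discovered but not yet downloaded.
        "states_pending": known - pulled,
        # Share of currently known entries already pulled (snapshot only).
        "states_pulled_fraction": pulled / known if known else 0.0,
    }


result = sync_progress({
    "currentBlock": 10735343,
    "highestBlock": 10735450,
    "knownStates": 584833661,
    "pulledStates": 584754308,
    "startingBlock": 10734477,
})
print(result["blocks_behind"], result["states_pending"])
```

For the values above this gives 107 blocks behind and 79353 state entries pending, which is in the same range as the `pending=` field in the log lines.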

Here is the console output:

INFO [08-26|12:13:23.330] Imported new state entries count=384 elapsed=6.877ms processed=584758628 pending=77408 retry=0 duplicate=1090 unexpected=4261

INFO [08-26|12:13:27.602] Imported new state entries count=636 elapsed=7.330ms processed=584759264 pending=77425 retry=0 duplicate=1090 unexpected=4261

INFO [08-26|12:13:32.088] Imported new block headers count=2 elapsed=11.847ms number=10735455 hash="71d3b7…0e55db"

INFO [08-26|12:13:32.300] Downloader queue stats receiptTasks=0 blockTasks=0 itemSize=193.43KiB throttle=339

INFO [08-26|12:13:33.786] Imported new state entries count=680 elapsed=7.854ms processed=584759944 pending=77018 retry=0 duplicate=1090 unexpected=4261

INFO [08-26|12:13:40.582] Imported new state entries count=618 elapsed=10.019ms processed=584760562 pending=76876 retry=0 duplicate=1090 unexpected=4261

INFO [08-26|12:13:46.097] Imported new state entries count=768 elapsed=14.378ms processed=584761330 pending=76011 retry=0 duplicate=1090 unexpected=4261