Framework: ErrorException: Warning: Error while sending STMT_PREPARE packet

- Laravel Version: Laravel Framework Lumen (5.8.8) (Laravel Components 5.8.*)

- PHP Version: PHP 7.2.19-0ubuntu0.18.04.1

- Database Driver & Version: mysql Ver 14.14 Distrib 5.7.26

Description:

Queue jobs is losing connection to database and throw exception

ErrorException: Warning: Error while sending STMT_PREPARE packet

Queue command (managed by supervisord) artisan queue:work --queue=children --sleep=3 --tries=3 --daemon

The error is not related to large data sent to database, it seems to be it's related to some how data connection is lost with database

My error comes from a simple query

$child = Child::find($child_id);

Let me know if you need any further information

PS: No change in mysql nor php default settings.

Steps To Reproduce:

Sometime below steps will work

1- Make sure queue is running

2- Restart database (/etc/init.d/mysql restart)

3- Send a job to the queue

4- You will get this error

5- Send another job to the queue (no error)

All 61 comments

Small clarification, my job will just call a model that will process the data

<?php

namespace App\Jobs;

use Illuminate\Support\Facades\DB;

use App\Models\Child;

class childjob extends Job {

public $tries = 3;

public $timeout = 1800;

public $child_id;

public function __construct($child_id)

{

$this->child_id = $child_id;

}

public function handle(){

Child::setsomething($this->child_id);

return true;

}

}

and inside Child Model:

public static function setsomething($child_id){

$child = Child::find($child_id);

}

Hey there,

Can you first please try one of the support channels below? If you can actually identify this as a bug, feel free to report back and I'll gladly help you out and re-open this issue.

Also: please post this on the Lumen repo if you decide to repost. Thanks!

Thanks!

Many users are reporting similar experiences on Laracasts

Also in stackoverflow

And most of the cases its laravel not lumen

On Sun, Jun 30, 2019 at 13:25 decowboy notifications@github.com wrote:

Many users are reporting similar experiences on Laracasts

—

You are receiving this because you authored the thread.

Reply to this email directly, view it on GitHub

https://github.com/laravel/framework/issues/28920?email_source=notifications&email_token=AAHJ3YIHWUDFAD2EZM2PX7DP5CC3NA5CNFSM4H2XQDTKYY3PNVWWK3TUL52HS4DFVREXG43VMVBW63LNMVXHJKTDN5WW2ZLOORPWSZGODY4JMVI#issuecomment-507024981,

or mute the thread

https://github.com/notifications/unsubscribe-auth/AAHJ3YLOMPMBI2FGAWJDWS3P5CC3NANCNFSM4H2XQDTA

.>

echo "Hello World :)"

Could this be because the database service is getting a new PID for whatever reason and that the old one is still loaded since horizon is a long running process?

Since i have updated my application i also get this error regularly.

Code has been changed by over 400 commits, find the culprit in this is not really easy.

Only big thing changed on the server is from mysql -> mariadb.

Our problem indeed also happens on MariaDB, not sure about mysql.

I'll re-open this to have a look at a later time. Feel free to provide more info on the matter in the meantime.

What is can see so far:

Happens on different random jobs.

Happens around 1 of 1000 jobs, could be complete random.

Examples:

ErrorException[internal] in unserialize warning

Warning: Error while sending STMT_PREPARE packet. PID=26143

variable_representation | O:28:"App\Jobs\FetchProofscopeData":8:{s:15:"*project_file";O:45:"Illuminate\Contracts\Database\ModelIdentifier":4:{s:5:"class";s:15:"App\ProjectFile";s:2:"id";i:154404;s:9:"relations";a:1:{i:0;s:7:"Project";}s:10:"connection";N;}s:6:"*job";N;s:10:"connection";N;s:5:"queue";s:13:"project-files";s:15:"chainConnection";N;s:10:"chainQueue";N;s:5:"delay";N;s:7:"chained";a:2:{i:0;s:367:"O:34:"App\Jobs\FetchProjectFileThumbnail":8:{s:15:"*project_file";O:45:"Illuminate\Contracts\Database\ModelIdentifier":4:{s:5:"class";s:15:"App\ProjectFile";s:2:"id";i:154404;s:9:"relations";a:1:{i:0;s:7:"Project";}s:10:"connection";N;}s:6:"*job";N;s:10:"connection";N;s:5:"queue";N;s:15:"chainConnection";N;s:10:"chainQueue";N;s:5:"delay";N;s:7:"chained";a:0:{}}";i:1;s:364:"O:31:"App\Jobs\ForceRenderProjectFile":8:{s:15:"*project_file";O:45:"Illuminate\Contracts\Database\ModelIdentifier":4:{s:5:"class";s:15:"App\ProjectFile";s:2:"id";i:154404;s:9:"relations";a:1:{i:0;s:7:"Project";}s:10:"connection";N;}s:6:"*job";N;s:10:

-- | --

ErrorException/app/Jobs/InsertSystemLog.php in App\Jobs\InsertSystemLog::handle warning

Warning: Error while sending STMT_PREPARE packet. PID=26147

/app/Jobs/InsertSystemLog.php in App\Jobs\InsertSystemLog::handle at line 100

$log->save();

}

}

FYI

Not sure if this is has anything to do with this error, but I'm using https://github.com/GeneaLabs/laravel-model-caching to cache my queries

let me know if you want me to upload any log to the issue

Since i have updated my application i also get this error regularly.

Code has been changed by over 400 commits, find the culprit in this is not really easy.

Only big thing changed on the server is from mysql -> mariadb.

Same here, I started to get this error after lumen upgrade

Some jobs will also be marked as failed in horizon, most of them go trough.

Getting the same issue. Never had this issue on Laravel 5.6 but after updating to 5.7 this now happens daily. It looks like jobs are not actually failing since I have no items in the failed_jobs table, yet Sentry reports 80 issues. We are working with MySQL so not mariadb related

All these issues seem to be related:

- PDO::prepare(): MySQL server has gone away

- E_WARNING: Error while sending STMT_PREPARE packet. PID=10

I have the exact same error on Laravel 5.8 with Mysql 5.6 and I thought it has to do with Telescope or horizon, but apparently, it is more common than I thought.

Same issue from April 28: https://github.com/laravel/horizon/issues/583

Adding mine for context:

Laravel 5.8.28

mysql Ver 15.1 Distrib 10.2.25-MariaDB and 10.1.36-MariaDB

Happens with and without telescope installed.

I'm seeing both the STMT_PREPARE and MySQL server has gone away errors.

Seems like this PR probably causes this but it's actually quite logic. If you're in a transaction and lose connection, there's no recovery from that.

Are all of you using transactions?

@driesvints In my case i just use Model::create()

Seems a change that was already in 5.1 i think it started happening for me between 5.7/5.8 reverted a couple version but doesn't seem to fix it. I think it is somewhere in a horizon update.

I’m not using any transaction

I doubt this error related to losing connection, i have my code hosted with

my database and no errors in MySQL logs

This error is very random for me, in one day I get like 10 errors, next few

day no error at all

PS: my app is not production so it’s used only by me (server not overloaded)

On Fri, Jul 12, 2019 at 17:01 Dries Vints notifications@github.com wrote:

Seems like this PR https://github.com/laravel/framework/pull/12929

probably causes this but it's actually quite logic. If you're in a

transaction and lose connection, there's no recovery from that.Are all of you using transactions?

—

You are receiving this because you authored the thread.

Reply to this email directly, view it on GitHub

https://github.com/laravel/framework/issues/28920?email_source=notifications&email_token=AAHJ3YNVJRGYVQ4QOMXG3OLP7CFDNA5CNFSM4H2XQDTKYY3PNVWWK3TUL52HS4DFVREXG43VMVBW63LNMVXHJKTDN5WW2ZLOORPWSZGODZZ2ZCQ#issuecomment-510897290,

or mute the thread

https://github.com/notifications/unsubscribe-auth/AAHJ3YPGHQGOWTDRNBOO5QLP7CFDNANCNFSM4H2XQDTA

.>

echo "Hello World :)"

@driesvints No transactions.

It seems it has to do with Horizon/Telescope.

Although Telescope is fully disabled.

Below you can find some of our Sentry logs:

Our telescope_monitoring table is completely empty, too, so it shouldn't have to do with that select query.

@driesvints 3 separate listeners are failing in our application and none of them have database-transactions. It might indeed be a horizon update, we started to notice it after a composer update. We don't use telescope so horizon seems most likely.

Im not using horizon nor telescope and face this issue

I’m passing Eloquent model to the queued job constructor, maybe this is the

issue

Any one doing the same thing ?

On Fri, Jul 12, 2019 at 17:38 jlmmns notifications@github.com wrote:

@driesvints https://github.com/driesvints No transactions.

It seems it has to do with Horizon/Telescope.

Although Telescope is fully disabled.Below you can find some of our Sentry logs:

[image: Screen Shot 2019-07-12 at 9 35 07 AM]

https://user-images.githubusercontent.com/3619890/61136110-9d1de380-a488-11e9-8be5-bbb4e502d071.png[image: Screen Shot 2019-07-12 at 9 34 16 AM]

https://user-images.githubusercontent.com/3619890/61136129-a5761e80-a488-11e9-8c71-00d86a2c7beb.png—

You are receiving this because you authored the thread.

Reply to this email directly, view it on GitHub

https://github.com/laravel/framework/issues/28920?email_source=notifications&email_token=AAHJ3YNWIWKDS74BKT4B64DP7CJODA5CNFSM4H2XQDTKYY3PNVWWK3TUL52HS4DFVREXG43VMVBW63LNMVXHJKTDN5WW2ZLOORPWSZGODZZ6CXA#issuecomment-510910812,

or mute the thread

https://github.com/notifications/unsubscribe-auth/AAHJ3YMC4TABMGIQY6PKDHDP7CJODANCNFSM4H2XQDTA

.>

echo "Hello World :)"

I do not use telescope so i don't think the problem lies there, no transactions too

I too am facing this issue. I am not using horizon or telescope. I do not have any jobs that are shown as failed in my DB but I do get the notifications a few times a day. It fails randomly on any job in the program. I have very little traffic on the server. I have been trying to figure it out for the past few days now.

I only receive the "Error while sending STMT_PREPARE packet. PID=*"

I have not received the "MYSQL server has gone away."

Laravel 5.8.28

MYSQL version: 5.7.26

The server is deployed using forge. My worker settings:

connection = redis

queue = default

timeout = 0

processes = 1

tries = 3

I am going to bump up the processes number and see if that helps and then I'll report back

It seems like everybody with this problem is using Sentry? Is this correct or are some people here using other services?

@Christophvh Sentry is just for receiving/displaying errors. The actual error has already before sent to Sentry.

I’m using Sentry but I configured it as a log channel

On Mon, Jul 15, 2019 at 13:40 Robert Fridzema notifications@github.com

wrote:

@Christophvh https://github.com/Christophvh Sentry is just for

receiving/displaying errors. The actual error has already before sent to

Sentry.—

You are receiving this because you authored the thread.

Reply to this email directly, view it on GitHub

https://github.com/laravel/framework/issues/28920?email_source=notifications&email_token=AAHJ3YLNJC6N2NM7UBVJMXDP7RHZZA5CNFSM4H2XQDTKYY3PNVWWK3TUL52HS4DFVREXG43VMVBW63LNMVXHJKTDN5WW2ZLOORPWSZGODZ5J7GI#issuecomment-511352729,

or mute the thread

https://github.com/notifications/unsubscribe-auth/AAHJ3YLRDZZHODFD67WBZMLP7RHZZANCNFSM4H2XQDTA

.>

echo "Hello World :)"

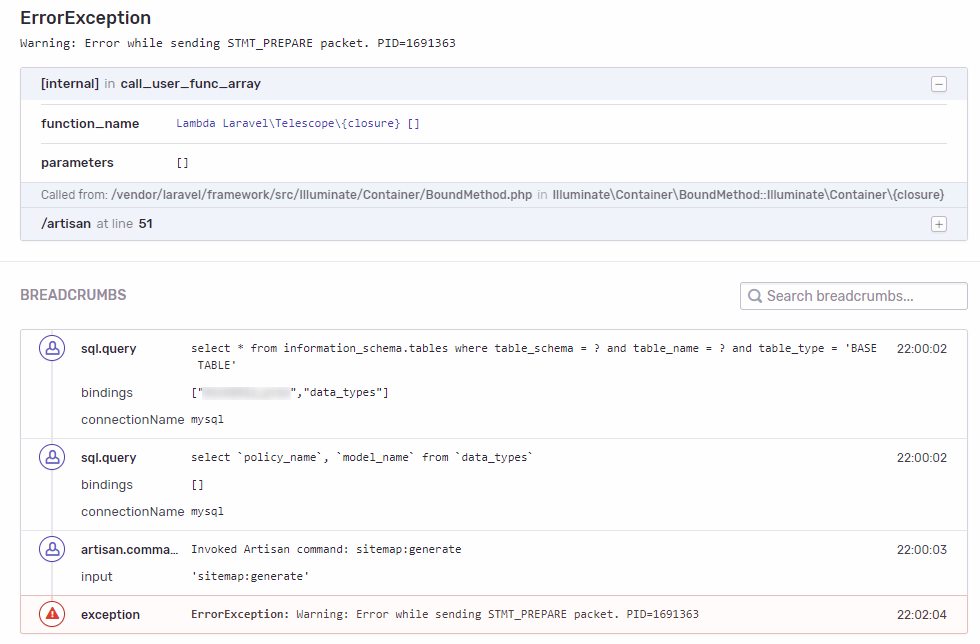

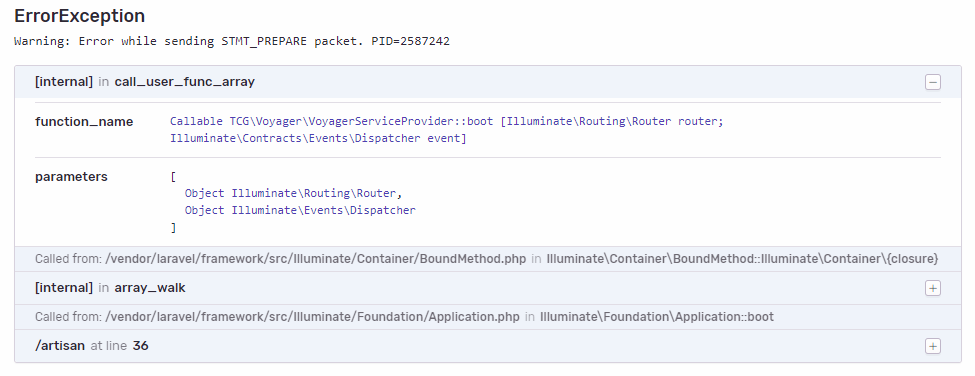

I'm also having this issue with Telescope AND Voyager: It seems to happen with artisan commands, one of wich is very long (sitemap:generate from spatie's package on a 1500 pages website)

Screenshot 1

Screenshot 2

EDIT:

I don't use queues at all.

Hey everyone, It has been a few days since I added more workers to my system and there has been no errors reported!

My advice right now would be to try upping the number of workers you have and see if that helps

Cheers!

@dark9911 how many workers?

I have had it with 1 and also with 40, so i don't think it has anything to do with the amount of workers

Same issue here. It only seems to occur on queue workers, not on the application itself. Seems related to the long running processes. Haven't got Telescope nor Horizon.

Php: 7.3.7

Database: 10.3.15-MariaDB-1:10.3.15+maria~bionic

Laravel: v5.8.28

Edit:

Indeed: also using sentry-laravel 1.1.0

@michaelnguyen547 how many workers?

i set it to 10 workers.

@koenhoeijmakers :(

Just got hit with one today :(

@koenhoeijmakers is right. The error has nothing to do with the amount of workers.

Sentry says it failed making a request to grab user_settings from MYSQL during a job call.

ErrorException: Warning: Error while sending STMT_PREPARE packet. PID=27771

ErrorException: Warning: PDO::prepare(): MySQL server has gone away.

What we do know:

- Database choice (mysql, mariaDB, etc.) does not matter so its not the database type.

- The number of workers does not solve the problem either.

- Horizon and Telescope are not the issue. (some people are using them others are not)

- The error does not create a record in the failed_job table.

I'm with @Christophvh on the question about Sentry.

The error itself is pretty normal for long running jobs, but it supposed to be caught by the framework and transformed to a QueryException (which in turn triggers the re-connection to the database), so it should never reach Sentry in its native form.

But it does. (Maybe even before the framework catches and handles it? That would explain why there's no failed jobs)

So the question remains: do we all use Sentry?

I do: "sentry/sentry-laravel": "^1.0.0",

And also updated it to 1.0 the same time we did the 5.8 update.

@sebestenyb I think we are all using sentry. Sounds like we know where the problem lies now. I guess we should notify sentry next?

So the question remains: do we all use Sentry?

I do use Sentry. And I don't recall seeing the issue reported in HoneyBadger, which I also use.

I am using "sentry/sentry-laravel": "1.1.0", but at the time of updating all packages is also updated this, and wonder if the cause is in this package version. @sebestenyb Are you also receiving this errors 1.0.0?

I'm with @Christophvh on the question about Sentry.

The error itself is pretty normal for long running jobs, but it supposed to be caught by the framework and transformed to a QueryException (which in turn triggers the re-connection to the database), so it should never reach Sentry in its native form.

But it does. (Maybe even before the framework catches and handles it? That would explain why there's no failed jobs)So the question remains: do we all use Sentry?

I do:

"sentry/sentry-laravel": "^1.0.0",

And also updated it to 1.0 the same time we did the 5.8 update.

Perhaps parent::report($exception); should be placed at the beginning of the report method, rather than at the end of it? I don't see the error appear in my logs either.

my composer.json:

"sentry/sentry-laravel": "^1.0",

and composer show | grep sentry:

sentry/sdk 2.0.3 This is a metapackage shipping sentry/sentry wit...

sentry/sentry 2.1.1 A PHP SDK for Sentry (http://sentry.io)

sentry/sentry-laravel 1.1.0 Laravel SDK for Sentry (https://sentry.io)

So the question remains: do we all use Sentry?

It may come from Sentry: On a project, I'm using both Sentry and Bugsnag, but this error only appears in Sentry.

NB: It happened both in Sentry 1.0.2 and 1.1.0

Happening to our app too. It's happening in a queued job and am using this sentry

sentry/sdk 2.0.3 This is a metapackage shipping sentry/sentry wit...

sentry/sentry 2.1.1 A PHP SDK for Sentry (http://sentry.io)

sentry/sentry-laravel 1.1.0 Laravel SDK for Sentry (https://sentry.io)

Along with Laravel Framework 5.8.27

No issues in Laravel 5.7.

Anyone know a workaround?

Same issue on our app. We are using queued jobs and sentry

sentry/sdk 2.0.3 This is a metapackage shipping sentry/sentry wit...

sentry/sentry 2.1.1 A PHP SDK for Sentry (http://sentry.io)

sentry/sentry-laravel 1.0.1 Laravel SDK for Sentry (https://sentry.io)

Laravel Framework 5.8.28

As replied by a dev from Sentry in the sentry-laravel issue

Interesting. We would have to dig where the warning is emitted from since it might not be silenced and thus will be caught by Sentry which is correct.

Since 2.x of the base SDK (and 1.x of Laravel) "errors" (like warnings and notices) are captured much better which might be the reason of the uptick of the errors. We also now capture every "error" by default which you can change using the error_types option.

To reiterate what is said in the other issues, this is a warning and Laravel recovers from this gracefully so it's mere informational that this warning is emitted, it does not crash your apps or jobs these warnings were always emitted but not always captured.

For reference:

Our problem indeed also happens on MariaDB, not sure about mysql.

I'm experiencing the issue on MySQL.

Happens around 1 of 1000 jobs, could be complete random.

Same for me.

I'm using https://github.com/GeneaLabs/laravel-model-caching to cache my queries

I'm not using any form of model caching

It seems it has to do with Horizon/Telescope.

I'm also using Horizon but not Telescope.

Are all of you using transactions?

No transactions in my jobs either.

It seems like everybody with this problem is using Sentry?

I'm also using Sentry.

The warnings seem to have stopped for me after I've changed my retry_after and timeout according to the docs

The

--timeoutvalue should always be at least several seconds shorter than yourretry_afterconfiguration value. This will ensure that a worker processing a given job is always killed before the job is retried. If your--timeoutoption is longer than yourretry_afterconfiguration value, your jobs may be processed twice.

My timeout was 600 seconds in horizon but the retry_after was still the same (in the config/queue.php it's set at 90), changing the retry_after to 630 seconds fixed it :)

I'm going to close this as this seems to be Sentry specific and there really isn't an issue except from the warning. Thanks to everyone who helped investigate this 👍

My apologies if it is frowned upon to comment on a closed issue, but I'd like to quickly reply to the solution suggested by @koenhoeijmakers

The warnings seem to have stopped for me after I've changed my retry_after and timeout according to the docs

My configuration already had the retry_after set significantly higher than the timeout before this issue starting popping up, so (at least in my case) the two are not related.

Anyone have a solution for this? It's still an issue to at least v5.8.32

Same here on 5.8.32

@markdza @Mult1Hunter you can find the issue here https://github.com/getsentry/sentry-laravel/issues/254#issuecomment-512715265 seems like nothing to do with laravel itself.

Thanks. Looks like we are stuck with this warning in sentry then. Don't want to silence all warnings.

I ended up adding this method to Exceptions/Handler.php:

PHP

public function shouldReport(Exception $e)

{

// check if sentry is trying to falsely report 'Warning: Error while sending STMT_PREPARE packet. PID=XX'

if (strpos($e->getMessage(), 'STMT_PREPARE packet') !== 'false') {

return parent::shouldReport($e);

}

}

I ended up adding this method to Exceptions/Handler.php:

public function shouldReport(Exception $e) { // check if sentry is trying to falsely report 'Warning: Error while sending STMT_PREPARE packet. PID=XX' if (strpos($e->getMessage(), 'STMT_PREPARE packet') !== 'false') { return parent::shouldReport($e); } }```

public function shouldReport(Exception $e)

{

// check if sentry is trying to falsely report 'Warning: Error while sending STMT_PREPARE packet. PID=XX'

if (strpos($e->getMessage(), 'STMT_PREPARE packet') === false) {

return parent::shouldReport($e);

}

}

@mrahmadt What happens if the error message does NOT contain 'STMT_PREPARE packet'?

In your case it's undefined / not explicit, so I rewrote your code as follows:

public function shouldReport(Exception $e)

{

// check if sentry is trying to falsely report 'Warning: Error while sending STMT_PREPARE packet. PID=XX'

// see https://github.com/laravel/framework/issues/28920

if (strpos($e->getMessage(), 'STMT_PREPARE packet') !== false) {

return false;

}

return parent::shouldReport($e);

}

I continue obtaining the error even though apply the code from last comment

I continue obtaining the error even though apply the code from last comment

I assume the Laravel logger is capturing it and logging it as opposed to the Exception Handler.

I also have the same issue.

Laravel 6.5.0

No sentry used.

More > 1000 Jobs

Queue limit to 1 process.

Horizon timeout set to 3600.

ErrorException: Error while sending STMT_PREPARE packet. PID=25026

/home/forge/ggwp/vendor/laravel/framework/src/Illuminate/Database/Connection.php:327

Our problem indeed also happens on MariaDB, not sure about mysql.

It happens on mysql too

so the best solution is to discard error in handler?

I had the same problem with my laravel v6.18.0.

And the error stopped coming after update sentry/sentry-laravel library from v1.5.0 to v1.8.0 by command composer update sentry/sentry-laravel.

I continue obtaining the error even though apply the code from last comment

It also should help here, if the decision from comment above (excluding error by Error Handler) doesn't work.

Most helpful comment

As replied by a dev from Sentry in the sentry-laravel issue