Framework: Queues are failing when primary cache goes offline

Setup

- Laravel Version: 5.5.32

- PHP Version: 7.1

- Database Driver & Version: Aurora MySQL 5.6.10a

- Cache Driver & Version: AWS Elasticache Redis

- Queue Driver & Version: Beanstalkd, "pda/pheanstalk v3.1.0"

Description:

Hi, we're running high load (10,000 requests/sec) Laravel server with MySQL DB, Redis cache and Beanstalkd queues.

Everything is dockerized using laradock docker-compose.

Our app running queues as: "php artisan queue:work", which is essential part of our architecture. On the other hand, our Redis cache is not mandatory and we could live without it if it goes down.

So the main issue here is, if our Redis Cache goes offline for maintenance or any other reason, all the queues are crashing with error:

In AbstractConnection.php line 155:

queue-worker-default_1 |

queue-worker-default_1 | Error while reading line from the server. [tcp://redis-track:6380]

The problem is very similar to what was described here: https://github.com/laravel/framework/issues/19458

Also from Laravel docs, we figured:

"The queue uses the cache to store restart signals, so you should verify a cache driver is properly configured for your application before using this feature."

Bottom line/Possible Resolution:

Currently I don't see a way to specify the cache driver or failover in queue.php.

Is there any way in Laravel to use a secondary cache driver specifically used for queues? Or at least have a failover to our secondary cache automatically without crashing the queues?

All 14 comments

Probably we should support a failover cache driver. I would be open to PRs in this area if someone wants to contribute! :heart:

Any reason not to use Redis Queues? You could then setup a High Availability Redis cluster?

How is that going to solve the issue when redis is the bottleneck and not beanstalkd?

Because you can configure high availability clusters in Redis? And therefore scale as needed?

I'm not against the original proposal - just giving an intrim option...

And therefore scale as needed?

The problem with Error while reading line from the server. is that it's not exclusively due to redis server going down. In my own application I'm seeing a cache key got corrupted and Predis throws Predis\Connection\ConnectionException with Error while reading line from the server. while at the same time accessing another key return correct value without a problem.

So is the answer allowing the queue system to continue indefintely while the cache is down (if configured)?

i.e. the only reason queues need cache (by the framework) is for checking if a queue needs to be restarted. If the cache goes away (for any reason) - then the queue can carry on as normal?

The right question here is how should should we handle failover Cache::get() in general. Queue may have a specific ways to handle failover.

In my case it isn't related to queue at all and I brought this up with @taylorotwell. Which is why he suggested that.

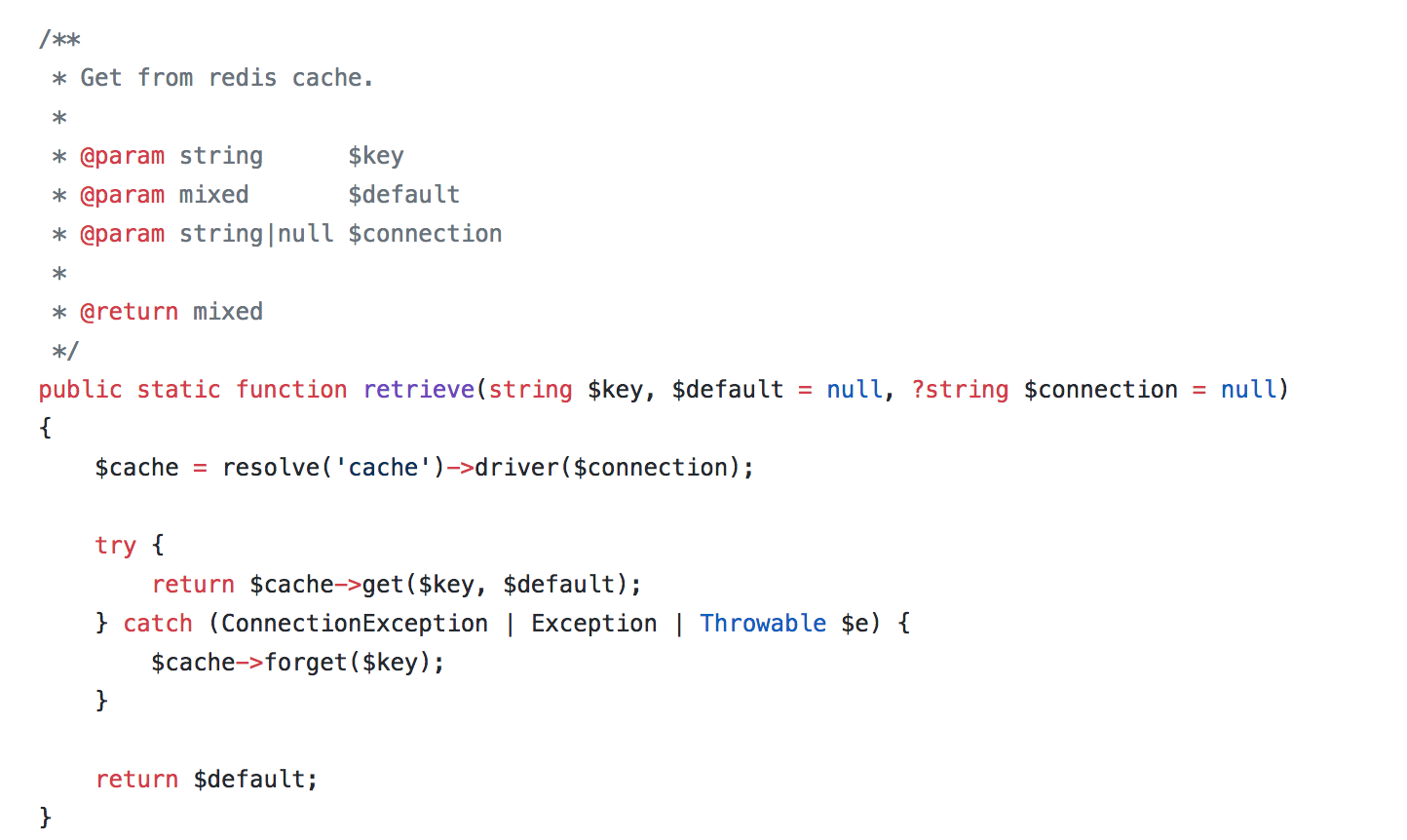

Right now to be safe I need to do a try and catch when using Cache::get().

The right question here is how should should we handle failover Cache::get() in general

Whatever the answer - it'll have to be configurable.

There would be instances where a failed cache stored would be reason to not continue letting the application run.

For example - if you had heavy database caching - a failed cache would let all queries hit the DB, and might cause it to come down unexpectedly. I know specific instances I would rather the front end application go offline than the DB being pulled down with it.

@laurencei We already have high availability Redis Cluster, it's our primary cache driver and it currently uses around 50GB of cache memory. The issue is, AWS informed us about a maintenance which will take our Redis down for more than 1 hour due to big data migration from one Redis to another.

Our app is configured to have a fallback to secondary cache or database in that case. But due to failing queues it makes our entire application unusable, which in my opinion should not be happening.

I think the best option here is having queues with automatic failover to secondary cache in Laravel.

Or at least let us point to a different Cache driver that will be used for queues specifically.

Somethink like this would be helpful:

````

config/cache.php

'default' => env('CACHE_DRIVER', 'redis'),

config/queue.php

'default' => env('QUEUE_DRIVER', 'beanstalkd'),

'queue_cache_driver' => env('QUEUE_CACHE_DRIVER', 'memcached'), # or anything different than primary cache driver

````

We're only talking about the illuminate:queue:restart key correct? If that's the only key, maybe we can ditch the whole cache dependency, instead of a dedicated cache store for a single key.

Replacing the Cache usage with something else just moves the point of failure. The queue workers still need to be informed of a restart across threads and across servers through a shared channel, which will be subject to failures. If you configure a separate cache driver for the queue to use then that is subject to failure too.

There's a problem with silently failing over. If I'm running two workers on different servers and one server cant reach the shared cache resource but the other can, then I'll have one worker looking for it's restart signals in the main cache and the other looking in the failover cache. I suppose a workaround to this would be to broadcast the restart signal on the main cache _and_ any failover caches?

I'm all for making Laravel run better in HA environments, but what we're talking about here is a _cache missing_ scenario not a _cache miss_. I would expect that to cause failure and wouldn't consider the cache to be an optional piece of infrastructure. Is it the frameworks responsibility to failover to a new cache driver here? Isn't that down the redis ha/infrastructure layer to deal with keeping our cache online? Consider as well that some cache drivers support tags and some don't so failing over could cause compatibility problems.

Instead of trying to setup some kind of high availability cache failover solution, you might be able to avoid using the cache at all for worker restarts.

If you are on PHP 7.1+ and have the pcntl extension installed the worker listens for a SIGTERM signal and will gracefully restart the same way it does when you call queue:restart. If you are using supervisord you can use supervisorctl restart to send the stop signal and then restart the process. If the worker is able to run at all than you will be able to send a signal, so it's not likely to fail 😁.

Right now it's pretty difficult to override the cache driver the worker uses (it's set here so you can't override it with a container binding). I've just been setting the default cache driver to array so the worker gets that instead of a redis connection. But the idea is, make sure the cache the worker gets is the array driver so it doesn't try to use the real cache.

I may have discovered something (or perhaps not). Anyways i will report!

(It is not cluster nor hight load related! Just fresh laravel project that uses queue(s) with redis.)

This problem seems to accure only when using Redis from cli including tinker:

php artisan tinker

>>> Redis::set('sdfsdfsdf', microtime()); var_dump(Redis::get('sdfsdfsdf'));

Predis/Connection/ConnectionException with message 'Error while reading line from the server. [tcp://127.1.19.91:6379]'

When i run this Redis command pair in web request, i never get the error. Only tinker. Queues fail as well. And it manifests it self totally randomly. Can anybody else reproduce this?

/**

* Execute the job.

*

* @return void

*/

public function handle()

{

\Log::info('second '.$this->type.' '.$this->ip);

}

Sometimes it fails, needs a retry, and sometimes it does not.

php72-cgi

Redis server v=3.2.12 sha=00000000:0 malloc=jemalloc-4.0.3 bits=64 build=1486d93367f39e5a

EDIT:

@driesvints this might be why horizon workers are failing

if redis.conf has timeout > 0, then server will kill the connection after timout is exceeded. default timeout for redis-server is 0

so my understanding is, that when queue worker is spawned, predis will make the connection and just sit idle or do the work. but it does not close connection after job is finished.

and then at some point redis-server will close the connection (timeout exceeded) and next predis request will fail.

i did lot of testing and for now it seems that timeout cannot be anything else besides 0 for cli. web requests are different story, cause with each request predis will create a new connection. it has nothing to do with laravel:

<?php

require_once 'vendor/autoload.php';

$client = new Predis\Client([

'scheme' => 'tcp',

'host' => 'xxx',

'password' => 'xxx',

'port' => 6379,

]);

function execute($client, $delay = 0) {

$delay = $delay === 600 ? 0 : $delay + 1;

sleep($delay);

try {

$client->set('test', microtime());

$client->get('test');

} catch (Exception $e) {

$delay = 0;

file_put_contents('log.txt', $delay.': ('.date('Y-m-d G:i:s').') '.$e->getMessage()."\n", FILE_APPEND);

}

execute($client, $delay);

}

execute($client);

the script above fails also with Error while reading line from the server. error

is it expected behaviour?

This is a feature request for a cache failover system and not really an existing bug in any current code.

Most helpful comment

Replacing the Cache usage with something else just moves the point of failure. The queue workers still need to be informed of a restart across threads and across servers through a shared channel, which will be subject to failures. If you configure a separate cache driver for the queue to use then that is subject to failure too.

There's a problem with silently failing over. If I'm running two workers on different servers and one server cant reach the shared cache resource but the other can, then I'll have one worker looking for it's restart signals in the main cache and the other looking in the failover cache. I suppose a workaround to this would be to broadcast the restart signal on the main cache _and_ any failover caches?

I'm all for making Laravel run better in HA environments, but what we're talking about here is a _cache missing_ scenario not a _cache miss_. I would expect that to cause failure and wouldn't consider the cache to be an optional piece of infrastructure. Is it the frameworks responsibility to failover to a new cache driver here? Isn't that down the redis ha/infrastructure layer to deal with keeping our cache online? Consider as well that some cache drivers support tags and some don't so failing over could cause compatibility problems.