Envoy: Postgres Statistics Envoy Network Filter

Postgres Statistics Envoy Network Filter

Background

It is becoming more and more important to have detailed insights into the performance of PostgreSQL databases. In particular, query performance is a hot topic. But it is not easy or feasible on all situations to get metrics about query performance.

The usual way is to either:

Log all queries by setting parameters like log_min_duration_statement to

0or log_statement tomodorall. This approach may create a logging storm, which can significantly affect the workload. Plus it still requires parsing the logs, which is a complicated task (as log entries are complex and can be multi-line).Use Postgres extensions like pg_stat_statements. They capture query performance, but only on an aggregated fashion, and reading them requires querying Posgres (pull mode), versus metrics being pushed to the monitoring system. Plus the performed aggregation makes it very difficult to compute relevant performance metrics such as query latency percentiles. And it relies on the availability of the extension, and the user has to explicitly install it --it is not enabled by default, and turning it on requires a database restart.

Design Goals

This issue describes the goals to develop a PostgreSQL protocol filter for the Envoy. Envoy proxy network filters allows to observe and/or manipulate TCP traffic passing through the proxy. This work references #2861 and has some common goals with the initial design document associated with that issue.

The main goal of this project is to add network observability to PostgreSQL. By decoding the PostgreSQL wire protocol within the Envoy filter, it would be possible to extract relevant information about the activity and the performance of a Postgres server, like number of queries per unit of time, types of queries and the performance of those queries.

With respect to the #2861 design document, only item 5 is considered for this work.

An additional goal is to be able to capture an existing workload (i.e., the exact queries that have happened over a designed interval of time) and store it. A separate tool must be able to reproduce this workload and run it against the same or other servers, at a pre-defined speed (1x or less or more). This would be similar to how pgreplay works. Workload capture may or may not be something as simple as a text file with the timestamp and full text of all executed queries within the time range. This feature is lower priority than the statistics, and is subject to further design considerations.

Non-goals

To manipulate (change, inject or supress) data of the Postgres protocol. Traffic is only to be inspected and decoded.

To introduce connection pooling mechanisms. Traffic should just pass through and be inspected. There could be upstream connection poolers before the Postgres server, and this is OK and expected on most scenarios.

Support query routing, i.e., the ability to distinguish write queries (state affecting queries) that must be send to the master; and read-only queries (idempotent) that may be sent to either the master or (probably preferred) to the replicas.

Provide metrics that are already provided by other usual monitoring tools, unless it is really trivial to implement them.

Future improvements to this work may include goals such as the above. But for now, it is aimed for a quick win by providing observability to Postgres, and not having to dig into much more significant efforts that are not "read-only" from a traffic perspective.

Design

Implement the functionality as an Envoy Network Filter Extension. Support for only the PostgreSQL wire protocol version v3 will be provided.

A phased, iterative approach is a must. We need to be able to deliver "quick wins", already providing basic observability. Current status is that there's only bytes sent/received metrics. First steps before implementation starts is to develop a roadmap and detail which versions to produce, each of which adding more functionality over the previous one. And developing production quality for each version, before moving on to implementing additional functionality on the next one.

Statistics

The network filter has to be able to export metrics to other external systems (like Prometheus) in the same way that Envoy supports. As such, it should be able to create, and document, which metrics are exported, what the expected frequency of those metrics will be, and their data type.

This design does not detail all the exact statistics that are to be captured by this filter. The exact definition of the metrics exported should be provided as an augmentation to this issue or as separate issues that will become part of the filter documentation. Nonetheless, general guidance is provided here:

Statistics about connections and usage, in a similar way to what PgBouncer provides with the

SHOW STATScommand. These will be provided for completeness, and for scenarios where no connection pool is present.Second-granularity (ideally) information about operations (per second) performed at the database level, including total queries and drill-down of INSERTs, UPDATEs, DELETEs, DDL operations and possibly others.

Individual (per-query) tracking of query performance. A maximum number of queries will be tracked (configuration parameter). Query hashing --essentially "removing" parameter values from the query--, like the one performed in

pg_stat_statemetns, will be needed. Ideally, the latency (execution time, from the proxy's point of view) would be recorded. There might be configuration filters to select which types of queries are recorded and if there would be a minimum query duration to be recorded (it should be possible to set it to 0). There are two usage patterns that need to be distinguished:- Logging all queries for query replay (see section below).

- Exporting metrics to Prometheus. In this case, we can't export high volumes of detailed information about individual query performance. A maximum of a small number of queries (say 10 by default) will be tracked. The user may be able to specify the (parametrized) queries that wants to be tracked. For those queries, per-query and aggregated latency figures will be computed, as detailed below.

Per-query statistics

The goal is that the performance of each individual query is recorded. It is unfeasible to export this metrics volume to Prometheus. But they can be aggregated over short periods of time (ideally user configurable) like 10 seconds or 1 minute. For this aggregation, metrics similar to those of pg_stat_statements should be provided, like total execution time, number of times the query has been executed, mean time per query and standard deviation.

Aggregated percentiles

Per query (only for the tracked queries, like above) percentiles are computed over an observation period of time, which could be long (e.g. 10 mins or 1 hour). After this observation time, counters need to be rest. Ideally, the metrics to report are the 50, 95, 99 and 999 percentiles, per query, over the specified aggregation period. It might be tricky to compute this over long periods of time, due to the need to store all the runtime performance of every query, plus the overhead to compute the metrics. If possible, a library may be used such as HdrHistogram.

This may turn into a difficult feature to implement. As such, it may be one of the latest goals to be delivered.

Workload replay

Workload replay would give Postgres users the ability to generate real-life workload benchmarks with almost no effort. This, in turn, enables to accurately predict the performance of configuration improvements and new application deployments, among possibly others. It would be a very welcome feature.

As of today, this basically implies logging all queries to disk on the same database server. This may obviously create an explosion of log traffic that may severely affect performance on a busy server. This is to be avoided at all costs.

If, however, traffic is captured at a separate host or process space from the Postgres server, and possibly at a more efficient level, it could be very useful, and would enable workload replay. Please note that this functionality may also leverage Envoy's traffic mirroring capabilities (see for example Redis Proxy traffic shadowing) to offload the protocol decoding and query logging to a separate Envoy instance, lowering the load on the Envoy-master server pair.

It is not a goal of this work to implement a tool for workload replay. Actually, pgreplay may be used for this.

Configuration

Envoy configuration should be extended to include additional parameters specific for this network filter. These configuration values should allow an user to:

Decide what metrics will be collected/exported and which ones will be ignored. It must be possible to ignore all metrics, and performance degradation should be negligible, ideally zero. Some default values should be provided that would collect meaningful statistics, without significantly impacting performance.

Decide about the volume and frequency of the metrics (on those metrics that makes sense).

Performance degradation

Note that all features described here are for a read-only filter. It should not introduce a noticeable performance degradation, even on heavy traffic scenarios. Its development must prioritize performance impact over features or advanced functionality.

Benchmarking should be performed to assess fulfilment of the above performance goals --which are subject to future revision.

References

From Envoy's #2861 issue design document:

- tcp_proxy: A TCP proxy as a network filter extension.

- redis_proxy: A Redis proxy as a network filter extension.

- mongo_proxy: A MongoDB proxy as a network filter extension.

All 12 comments

@ahachete great write up! This sounds awesome to me and I know a lot of people would be interested in seeing this land. Let us know what we can do to help!

Nice idea!

@mattklein123 hi, I'm working on this issue sponsored by OnGres and I implemented a "very very" first WIP patch based on MySQL Filter.

But after learning more about Envoy internals I figure out we can start implement it as an Extension (like https://github.com/envoyproxy/envoy-filter-example) instead of touch the core and need to wait for PR and release cycles.

I really don't know if it make senses for this community, but on PostgreSQL community we try to start new features as an extension before to touch the core. Ofc there are some features we'll need to touch the core but I think this one we don't need.

So due to my limited C++ and Envoy internals knowledge I need some help with some problems (probably dumb problem) building my extension:

INFO: Found 1 target...

INFO: From ProtoGenValidateCcGenerate postgres_filter.pb.h:

postgres_filter.proto: warning: Import validate/validate.proto but not used.

ERROR: /ongres/gitlab.com/labs/envoy-postgres-filter/BUILD:21:1: C++ compilation of rule '//:pkg_cc_proto' failed (Exit 1) gcc failed: error executing command /usr/bin/gcc -U_FORTIFY_SOURCE -fstack-protector -Wall -Wunused-but-set-parameter -Wno-free-nonheap-object -fno-omit-

frame-pointer '-std=c++0x' -MD -MF ... (remaining 39 argument(s) skipped)

Use --sandbox_debug to see verbose messages from the sandbox

In file included from bazel-out/k8-fastbuild/bin/postgres_filter.pb.validate.cc:5:0:

bazel-out/k8-fastbuild/bin/postgres_filter.pb.validate.h:23:47: error: 'PostgreSQLProxy' in namespace 'postgres::proxy' does not name a type

extern bool Validate(const ::postgres::proxy::PostgreSQLProxy& m, pgv::ValidationMsg* err);

^~~~~~~~~~~~~~~

bazel-out/k8-fastbuild/bin/postgres_filter.pb.validate.cc:18:35: error: 'PostgreSQLProxy' is not a member of 'postgres::proxy'

pgv::Validator<::postgres::proxy::PostgreSQLProxy> validator___postgres__proxy__PostgreSQLProxy(static_cast<bool(*)(const ::postgres::proxy::PostgreSQLProxy&, pgv::ValidationMsg*)>(::postgres::proxy::Validate));

^~~~~~~~~~~~~~~

bazel-out/k8-fastbuild/bin/postgres_filter.pb.validate.cc:18:35: note: suggested alternative:

In file included from bazel-out/k8-fastbuild/bin/postgres_filter.pb.validate.h:14:0,

from bazel-out/k8-fastbuild/bin/postgres_filter.pb.validate.cc:5:

bazel-out/k8-fastbuild/bin/postgres_filter.pb.h:70:7: note: 'postgres_proxy::PostgreSQLProxy'

class PostgreSQLProxy :

^~~~~~~~~~~~~~~

bazel-out/k8-fastbuild/bin/postgres_filter.pb.validate.cc:18:35: error: 'PostgreSQLProxy' is not a member of 'postgres::proxy'

pgv::Validator<::postgres::proxy::PostgreSQLProxy> validator___postgres__proxy__PostgreSQLProxy(static_cast<bool(*)(const ::postgres::proxy::PostgreSQLProxy&, pgv::ValidationMsg*)>(::postgres::proxy::Validate));

^~~~~~~~~~~~~~~

bazel-out/k8-fastbuild/bin/postgres_filter.pb.validate.cc:18:35: note: suggested alternative:

In file included from bazel-out/k8-fastbuild/bin/postgres_filter.pb.validate.h:14:0,

from bazel-out/k8-fastbuild/bin/postgres_filter.pb.validate.cc:5:

bazel-out/k8-fastbuild/bin/postgres_filter.pb.h:70:7: note: 'postgres_proxy::PostgreSQLProxy'

class PostgreSQLProxy :

^~~~~~~~~~~~~~~

bazel-out/k8-fastbuild/bin/postgres_filter.pb.validate.cc:18:50: error: template argument 1 is invalid

pgv::Validator<::postgres::proxy::PostgreSQLProxy> validator___postgres__proxy__PostgreSQLProxy(static_cast<bool(*)(const ::postgres::proxy::PostgreSQLProxy&, pgv::ValidationMsg*)>(::postgres::proxy::Validate));

^

bazel-out/k8-fastbuild/bin/postgres_filter.pb.validate.cc:18:142: error: 'PostgreSQLProxy' in namespace 'postgres::proxy' does not name a type

pgv::Validator<::postgres::proxy::PostgreSQLProxy> validator___postgres__proxy__PostgreSQLProxy(static_cast<bool(*)(const ::postgres::proxy::PostgreSQLProxy&, pgv::ValidationMsg*)>(::postgres::proxy::Validate));

^~~~~~~~~~~~~~~

bazel-out/k8-fastbuild/bin/postgres_filter.pb.validate.cc:18:97: error: invalid conversion from 'bool (*)(const int&, pgv::ValidationMsg*) {aka bool (*)(const int&, std::__cxx11::basic_string<char>*)}' to 'int' [-fpermissive]

pgv::Validator<::postgres::proxy::PostgreSQLProxy> validator___postgres__proxy__PostgreSQLProxy(static_cast<bool(*)(const ::postgres::proxy::PostgreSQLProxy&, pgv::ValidationMsg*)>(::postgres::proxy::Validate));

^~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

bazel-out/k8-fastbuild/bin/postgres_filter.pb.validate.cc:48:40: error: 'PostgreSQLProxy' in namespace 'postgres::proxy' does not name a type

bool Validate(const ::postgres::proxy::PostgreSQLProxy& m, pgv::ValidationMsg* err) {

^~~~~~~~~~~~~~~

bazel-out/k8-fastbuild/bin/postgres_filter.pb.validate.cc: In function 'bool postgres::proxy::Validate(const int&, pgv::ValidationMsg*)':

bazel-out/k8-fastbuild/bin/postgres_filter.pb.validate.cc:61:25: error: request for member 'stat_prefix' in 'm', which is of non-class type 'const int'

const auto length = m.stat_prefix().size();

^~~~~~~~~~~

Target //:envoy failed to build

Use --verbose_failures to see the command lines of failed build steps.

INFO: Elapsed time: 739.162s, Critical Path: 24.58s

INFO: 204 processes: 204 linux-sandbox.

FAILED: Build did NOT complete successfully

I shared by broken code at: https://github.com/fabriziomello/envoy-postgres-filter

Can you or someone else help me with this basic issue?

Thanks in advance!

@fabriziomello if it is OK, I can collaborate with you. I'll try to push a PR to your repo today.

@fabriziomello if it is OK, I can collaborate with you. I'll try to push a PR to your repo today.

Thank you so much!

Hi @ahachete, @dio and @mattklein123,

After some time since last discussion now we have a very first version of a working PostgreSQL Network Filter. We know it lacks of documentation, automated tests, code refactoring and other things I didn't realize yet.

Here is the code I implemented after @dio help me (thanks a lot man) and created a separated repo to us start to work on this: https://github.com/envoy-postgres/postgresql-proxy/pull/3

Despite that I want to know what we need to do on this code to send a PR to the EnvoyProxy?

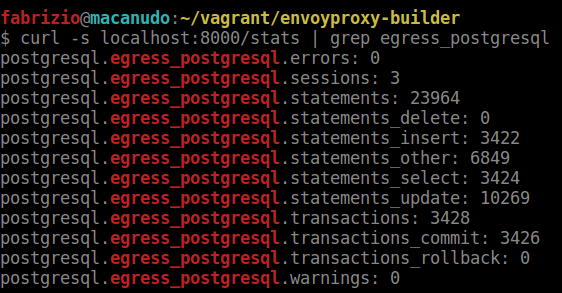

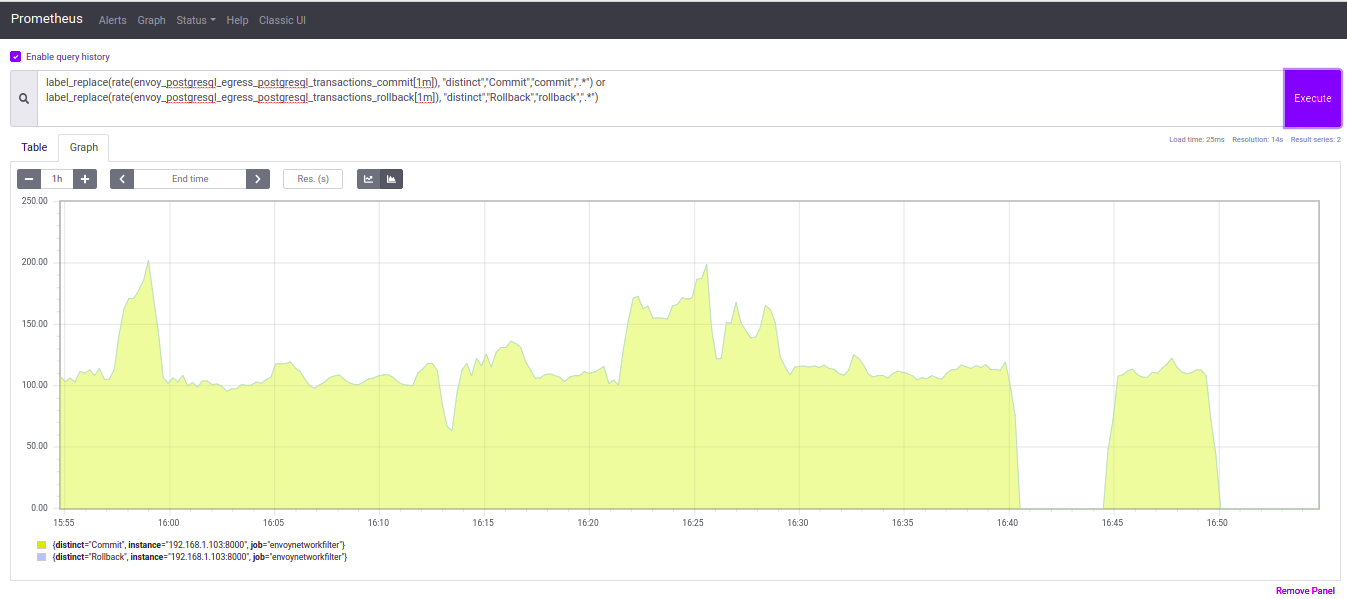

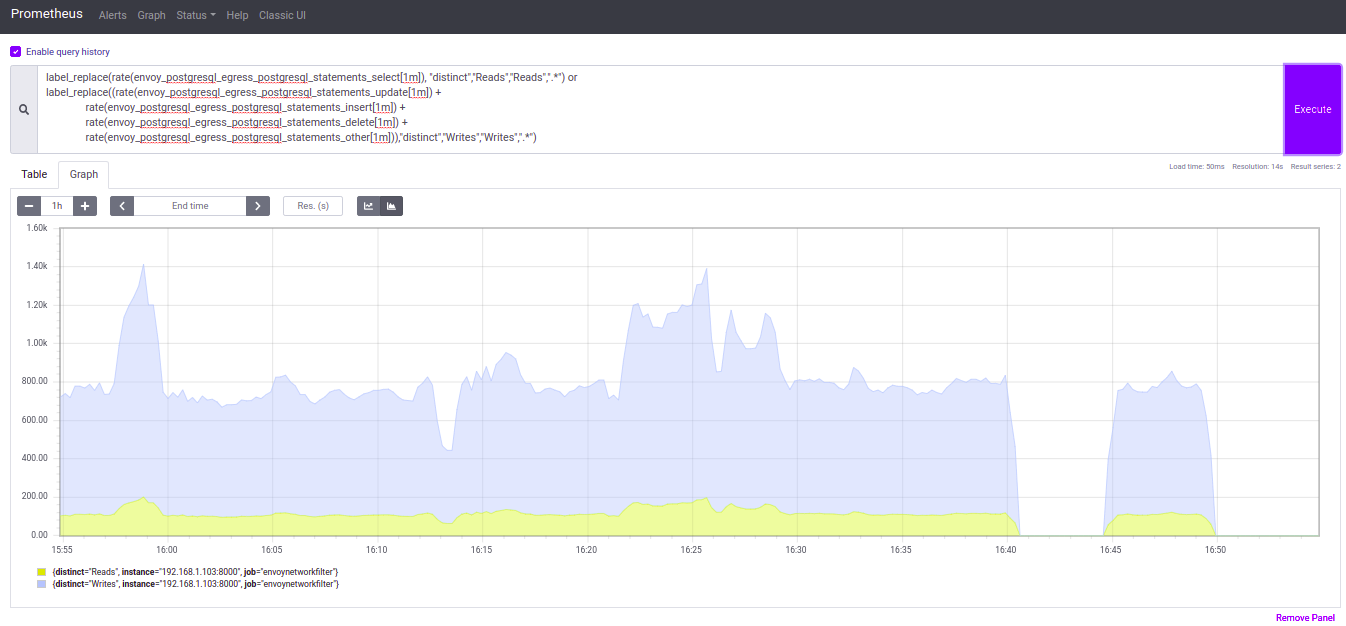

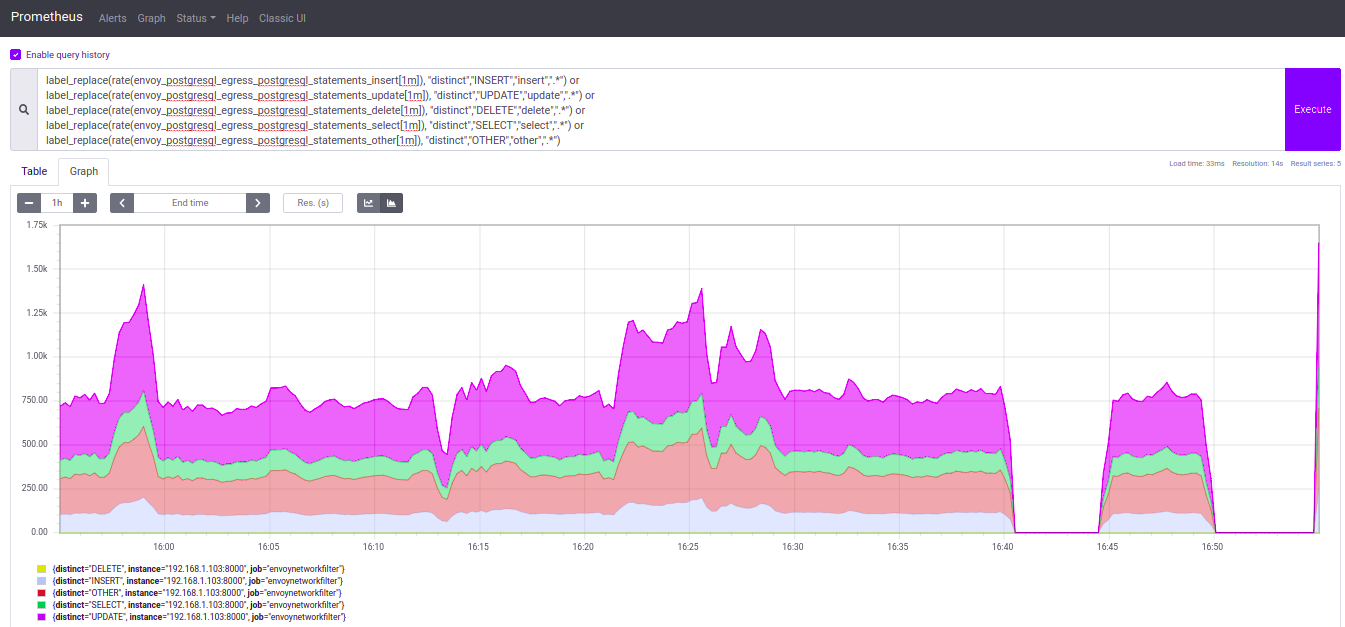

Some screenshots about the results we have now:

- Filtered metrics

- Graph of Commits and Rollbacks rate

- Graph of Writes and Reads rate

- Graphs of Statements rate (drilling down to statements types)

Neat! I think the next step here is to find a sponsor for your filter which I'm guessing will be @dio? If so, @dio can work with you on a plan to start the upstreaming process.

@mattklein123 yes I'll sponsor this. I'll work with @fabriziomello to make it happen. However, I think we need to have a cleaned up design doc to make sure we have reference (it probably can be just transferring this issue into a proper doc though).

Hi @dio As the one who wrote the original design doc (above, as the description of this issue), let me know what would you need. I'd be happy to collaborate on this one, if needed.

Other than that, we're also eager to know about the code, what it would take it to be in shape for potential inclusion in envoy :) So far we have performed limited testing, but the functionality is great --at least for us, from the Postgres operational perspective.

Thanks!

Hi @dio I'm planning return work on it from next week. IMHO maintain a document outside of GH is counterproductive once in this issue we have a very good and detailed reference already. But if it is the community way will do it.

Anyway I have some comments before:

We already have some detailed design on this issue and a first WIP version here: https://github.com/envoy-postgres/postgresql-proxy/issues/1

IMHO we can use this issue as an epic and create more small issues based on this to improve step by step.

I need help to review the code of the first version on other repo to see what I need to work/rework to polish the patch to become acceptable to introduce it to the core. I know we lack of automated tests (will have a more careful look next week) and documentation

Maybe we can start working on the other repo first because there we're not strongly tied to main project. Anyway we plan to ship this first code into our project called StackGres (https://stackgres.io) as it due to our release schedule.

So in parallel we can work into improvements to become this extension part of Envoy and in a near future release StackGres just with Envoy without use the fork.

What's your thoughts?

@fabriziomello sorry for the late reply, I've been sidetracked by other things.

- Cool, yeah, I'll review that.

- I understand that, however, the norm here is to have that in google docs or something so we can put granular comments, but I don't feel strongly about it.

- Sure, I'll do the review. and prepare a PR structure for you.

Thanks for the hard work!

@dio thanks and now sorry for my late reply.

Just created a Design Documentation on GDocs.

/cc @ahachete

Most helpful comment

Hi @ahachete, @dio and @mattklein123,

After some time since last discussion now we have a very first version of a working PostgreSQL Network Filter. We know it lacks of documentation, automated tests, code refactoring and other things I didn't realize yet.

Here is the code I implemented after @dio help me (thanks a lot man) and created a separated repo to us start to work on this: https://github.com/envoy-postgres/postgresql-proxy/pull/3

Despite that I want to know what we need to do on this code to send a PR to the EnvoyProxy?

Some screenshots about the results we have now: