Envoy: difference between --service-cluster and 'local_cluster_name'

Reading through the docs, they seem to be the same to me.

A quick grep of the code shows that local_cluster_name is only used for zone aware routing, and --service-cluster for everything else.

So why are there two configuration options?

BTW: should we emphasize that there should be only one kind of service behind each ingress s2s (service-to-service) instance? It's implied by lots of features, but I can't find it in the docs.


> So why are there two configuration options?

This is unfortunate and, frankly, is due to internal Lyft infrastructure design. Basically, --service-cluster and local_cluster_name are not necessarily the same. --service-cluster is abstract, can be set to anything, and is used in various places. local_cluster_name must match the SDS/CDS cluster name. I think in new infrastructure deployments these are going to be the same, but not always. We will try to make the docs better in this area.
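To make the distinction concrete, here is a minimal Python sketch, assuming Envoy's v3 bootstrap layout as I understand it: `--service-cluster` sets (or overrides) `node.cluster`, while `local_cluster_name` lives under `cluster_manager` and has to name a real cluster. The host id `host-1` and the cluster names `payments`, `payments_sds` and `users` are made up, and the dict is trimmed far below a real bootstrap.

```python
from typing import Any, Dict, List


def build_bootstrap(service_cluster: str, local_cluster_name: str,
                    cluster_names: List[str]) -> Dict[str, Any]:
    """Return a trimmed, bootstrap-shaped dict (far from a complete Envoy config)."""
    return {
        # node.cluster: the free-form label that --service-cluster sets/overrides.
        "node": {"id": "host-1", "cluster": service_cluster},
        # local_cluster_name: must refer to an actual cluster definition.
        "cluster_manager": {"local_cluster_name": local_cluster_name},
        "static_resources": {"clusters": [{"name": n} for n in cluster_names]},
    }


def check_names(bootstrap: Dict[str, Any]) -> List[str]:
    """Return human-readable problems; an empty list means the names look consistent."""
    problems: List[str] = []
    known = {c["name"] for c in bootstrap["static_resources"]["clusters"]}
    local = bootstrap["cluster_manager"].get("local_cluster_name")
    # This sketch only checks against statically defined clusters.
    if local and local not in known:
        problems.append(f"local_cluster_name {local!r} is not a defined cluster")
    # The service cluster label is never matched against cluster definitions.
    if not bootstrap["node"].get("cluster"):
        problems.append("node.cluster (--service-cluster) is empty")
    return problems
```

`check_names(build_bootstrap("payments", "payments_sds", ["payments_sds", "users"]))` returns an empty list even though the two names differ, while passing `"payments"` as the local cluster name with only `payments_sds` defined yields one problem. That is the asymmetry described above: one name is a label, the other is a reference.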

> BTW: should we emphasize that there should be only one kind of service behind each ingress s2s (service-to-service) instance? It's implied by lots of features, but I can't find it in the docs.

What do you mean?

Thanks for the quick response.

We are considering sharing a single Envoy per host across multiple different services on that host, but reading through the docs, it seems lots of features depend on --service-cluster.

Since there are multiple different services:

1) Is this supported? If so, how should I name my Envoy?

2) Would it be better to use one Envoy per service on the same host, which means multiple Envoys per host?

Can you give me an example where --service-cluster and local_cluster_name really need to be different?

Below is my understanding:

For my front Envoy, I can just name it "front-envoy" for both settings.

For the s2s Envoy on each host:

- if there is only one service, I can name both settings after that service;

- if there are multiple services, I can either

  - name both settings after one of the services, or

  - choose some generic name for all the services.

Both approaches seem weird, and some features may not work properly.

I can't see how letting --service-cluster and local_cluster_name differ would help.

Or is running multiple services behind one s2s instance simply not the ideal deployment model?

I'm sorry but I don't think I can give you any detailed guidance. Names are just ... names. You can arrange things however you like. In terms of one Envoy for multiple services on a host, there is nothing about this that inherently won't work.

If you want to use zone local routing, you might want to use a synthetic name for the group of services on each host, e.g. "envoy_service_group_a", along with registration in discovery for this group, so that Envoy knows how many hosts there are for the service group. I would ignore zone aware routing until you get things working, though.
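Why the group must be registered in discovery becomes clearer from the rule Envoy's zone aware routing docs describe: for the local zone, compare the share of upstream hosts there with the share of the local (originating) cluster's hosts there. Below is a simplified Python sketch of that rule. It ignores the minimum cluster size, panic thresholds and runtime settings, and the zone names and host counts are invented. `local_cluster` stands for the per-zone host counts of the synthetic group, which Envoy can only learn from discovery.

```python
from typing import Dict


def zone_shares(hosts_per_zone: Dict[str, int]) -> Dict[str, float]:
    """Fraction of a cluster's hosts in each zone (empty dict if there are no hosts)."""
    total = sum(hosts_per_zone.values())
    if total == 0:
        return {}
    return {zone: count / total for zone, count in hosts_per_zone.items()}


def local_zone_fraction(local_zone: str,
                        local_cluster: Dict[str, int],
                        upstream_cluster: Dict[str, int]) -> float:
    """Share of requests kept in local_zone under the simplified rule."""
    # Share of the originating group's hosts that sit in our zone.
    local_pct = zone_shares(local_cluster).get(local_zone, 0.0)
    # Share of the upstream service's hosts that sit in our zone.
    upstream_pct = zone_shares(upstream_cluster).get(local_zone, 0.0)
    if upstream_pct >= local_pct:
        # Enough upstream capacity in our zone: route everything locally.
        return 1.0
    # Otherwise keep only the proportion the local zone can absorb;
    # the remainder would be spread across the other zones.
    return upstream_pct / local_pct
```

For a group with 4 hosts in zone `a` and 4 in `b` calling an upstream with 2 hosts in `a` and 6 in `b`, `local_zone_fraction("a", {"a": 4, "b": 4}, {"a": 2, "b": 6})` returns 0.5. Without the group's own host counts there is nothing to put on the `local_pct` side of the comparison.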

Thanks for your advice.

Sorry for not making my concerns clearer.

When we run multiple different services behind one Envoy instance, I guess we must give our shared Envoy some generic name.

By different services, I mean a different set of services on each host: some hosts may have A, B, C; some may have C, D, E; and so on.

What concerns me is whether any feature will not work optimally when deployed as multiple services per Envoy.

From the docs:

--service-cluster

(optional) Defines the local service cluster name where Envoy is running. Though optional, it should be set if any of the following features are used: statsd, health check cluster verification, runtime override directory, user agent addition, HTTP global rate limiting, CDS, and HTTP tracing.

For the features that use --service-cluster, if I use a generic cluster name, I may get the following behaviour:

- statsd

  may not get per-service stats, at least not out of the box

- health check cluster verification

  if we give our shared Envoy a generic name, this feature can't be used

- runtime override directory

  not affected

- user agent addition

  not helpful if we want to know the exact service

- HTTP global rate limiting

  can't rate limit per service, unless we use descriptor key/value pairs

- CDS/SDS

  name each service cluster after its service for CDS/SDS, and expose the shared Envoy's ip:port in each of them.

  A single ip:port entry then appears in multiple cluster definitions, and I am not sure whether this would cause problems for connection management or health checks. Would one failed service cause the health check for the entire shared Envoy to fail?

- HTTP tracing

  the originating/downstream cluster will show the generic cluster name, which may not be helpful.
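For the HTTP global rate limiting item, a small Python simulation (not Envoy code) of two rate limit action types shows why descriptor key/value pairs are the way out. As I understand Envoy's rate limit docs, the `source_cluster` action appends `("source_cluster", <local service cluster>)`, taken from `--service-cluster`, and `request_headers` appends `(descriptor_key, header value)`. The `x-service-name` header and the `service` key are hypothetical, and each calling application would have to set that header itself, since a shared Envoy has no other way to tell its local services apart.

```python
from typing import Dict, List, Tuple

Descriptor = List[Tuple[str, str]]


def build_descriptor(actions: List[Dict[str, str]], service_cluster: str,
                     headers: Dict[str, str]) -> Descriptor:
    """Simulate the descriptor entries produced by a list of rate limit actions."""
    entries: Descriptor = []
    for action in actions:
        if action["type"] == "source_cluster":
            # Same value for every local service behind a shared Envoy.
            entries.append(("source_cluster", service_cluster))
        elif action["type"] == "request_headers":
            value = headers.get(action["header_name"])
            if value is None:
                # Assumption: a missing header means no descriptor is produced.
                return []
            entries.append((action["descriptor_key"], value))
        else:
            raise ValueError(f"unsupported action in this sketch: {action['type']}")
    return entries


ACTIONS = [
    {"type": "source_cluster"},
    # Hypothetical header the calling service sets on its own requests.
    {"type": "request_headers", "header_name": "x-service-name",
     "descriptor_key": "service"},
]
```

With a shared Envoy named `shared_envoy`, `build_descriptor(ACTIONS, "shared_envoy", {"x-service-name": "A"})` gives `[("source_cluster", "shared_envoy"), ("service", "A")]`. The `source_cluster` entry is identical for services A and B, so only the header-derived entry lets a rate limit rule tell them apart.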

As for zone aware routing as you suggested: if each host runs a different set of services, the service-group naming scheme may not work optimally either.
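To see why the group naming scheme gets awkward when each host runs a different mix, here is a small Python sketch that derives one synthetic group per distinct service set. The `envoy_service_group_` prefix follows the example name suggested earlier in the thread; joining sorted service names with `_` is a made-up convention. Each resulting group would need its own registration in discovery, and the number of groups grows with the number of distinct mixes.

```python
from typing import Dict, FrozenSet, List


def group_hosts(host_services: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Map a synthetic group name to the hosts running exactly that service set."""
    by_set: Dict[FrozenSet[str], List[str]] = {}
    for host, services in host_services.items():
        # Hosts with the same set of services (in any order) share a group.
        by_set.setdefault(frozenset(services), []).append(host)
    groups: Dict[str, List[str]] = {}
    for service_set, hosts in by_set.items():
        # Hypothetical naming convention: sorted service names joined by "_".
        name = "envoy_service_group_" + "_".join(sorted(service_set))
        groups[name] = sorted(hosts)
    return groups
```

With hosts `h1` (A, B, C), `h2` (C, D, E) and `h3` (C, B, A), this yields two groups, `envoy_service_group_A_B_C` with `h1` and `h3`, and `envoy_service_group_C_D_E` with `h2`. Service C ends up split across both, so no single group name describes "the hosts running C".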

BTW, what's your Envoy setup for s2s?

Are you using one Envoy per kind of service, or sharing an Envoy across multiple services with a fixed set of services per host group?

At Lyft we have one Envoy per service, and a dedicated Edge Envoy front/middle proxy layer. In general I think there are no major blockers for having Envoy work with multiple local services. I would suggest working through building a config and seeing how it goes. Going to close this out.

As far as tracing is concerned, I don't think it is possible (or makes sense) to have one Envoy proxy for many services. The Zipkin trace info depends on this --service-cluster value pretty heavily.

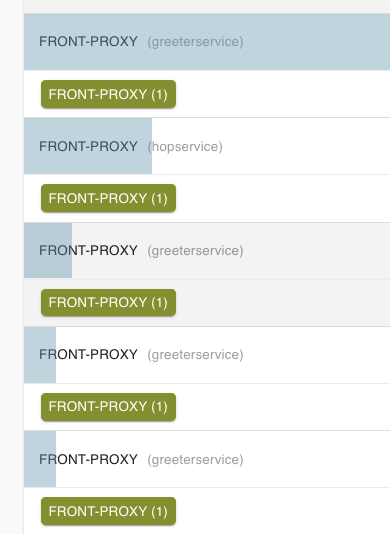

You'll see only one tag on the trace info: only "front-proxy" appears as the cluster name, even though there are many service hops in my example. 😞