Egg: nodejs-ClusterClientNoResponseError-client-no-response.md

- Node Version: 6.9.4 npm 4.1.1

- Egg Version: 0.12.0

- Plugin Name:

- Plugin Version:

- Platform: centos

egg.js部署在alpha环境下,发现时常出现卡的现象,首次卡差不多在5-20秒之间。查看日志记录如下:

- egg-web.log

2017-02-27 10:40:19,002 INFO 25022 [tcp-base] the connection: 127.0.0.1:43504 is closed by other side

2017-02-27 10:40:19,004 WARN 25022 [ClusterClient#Watcher] follower closed, and try to init it again

2017-02-27 10:40:19,017 INFO 25022 [ClusterClient#Watcher] failed to seize port 43504, and this is follower client.

2017-02-27 10:40:19,020 INFO 25022 [Follower#Watcher] register to channel: Watcher success

2017-02-27 10:41:40,605 INFO 25022 [tcp-base] the connection: 127.0.0.1:43504 is closed by other side

2017-02-27 10:41:40,606 WARN 25022 [ClusterClient#Watcher] follower closed, and try to init it again

2017-02-27 10:41:40,611 INFO 25022 [ClusterClient#Watcher] failed to seize port 43504, and this is follower client.

2017-02-27 10:41:40,613 INFO 25022 [Follower#Watcher] register to channel: Watcher success

2017-02-27 10:43:05,202 INFO 25022 [tcp-base] the connection: 127.0.0.1:43504 is closed by other side

2017-02-27 10:43:05,204 WARN 25022 [ClusterClient#Watcher] follower closed, and try to init it again

2017-02-27 10:43:05,216 INFO 25022 [ClusterClient#Watcher] failed to seize port 43504, and this is follower client.

2017-02-27 10:43:05,219 INFO 25022 [Follower#Watcher] register to channel: Watcher success

2017-02-27 10:44:36,837 INFO 25022 [tcp-base] the connection: 127.0.0.1:43504 is closed by other side

2017-02-27 10:44:36,838 WARN 25022 [ClusterClient#Watcher] follower closed, and try to init it again

2017-02-27 10:44:36,845 INFO 25022 [ClusterClient#Watcher] failed to seize port 43504, and this is follower client.

2017-02-27 10:44:36,847 INFO 25022 [Follower#Watcher] register to channel: Watcher success

egg-agent.log

2017-02-27 10:27:44,949 INFO 25012 [Leader#Watcher] socket connected, port: 52294

2017-02-27 10:29:12,108 INFO 25012 [Leader#Watcher] socket connected, port: 52332

2017-02-27 10:30:35,432 INFO 25012 [Leader#Watcher] socket connected, port: 52398

2017-02-27 10:32:01,630 INFO 25012 [Leader#Watcher] socket connected, port: 52584

2017-02-27 10:33:23,592 INFO 25012 [Leader#Watcher] socket connected, port: 52618

2017-02-27 10:34:47,405 INFO 25012 [Leader#Watcher] socket connected, port: 52628

2017-02-27 10:36:10,506 INFO 25012 [Leader#Watcher] socket connected, port: 52680

2017-02-27 10:37:32,651 INFO 25012 [Leader#Watcher] socket connected, port: 52690

2017-02-27 10:38:55,057 INFO 25012 [Leader#Watcher] socket connected, port: 52744

2017-02-27 10:40:19,019 INFO 25012 [Leader#Watcher] socket connected, port: 52784

2017-02-27 10:41:40,612 INFO 25012 [Leader#Watcher] socket connected, port: 53028

2017-02-27 10:43:05,218 INFO 25012 [Leader#Watcher] socket connected, port: 53096

2017-02-27 10:44:36,846 INFO 25012 [Leader#Watcher] socket connected, port: 53196

common-error.log

2017-02-27 00:01:25,445 ERROR 25012 nodejs.ClusterClientNoResponseError: client no response in 12397ms, maybe the connection is close on other side.

at Timeout.Leader._heartbeatTimer.setInterval [as _onTimeout] (/pathxx/node_modules/cluster-client/lib/leader.js:59:23)

at ontimeout (timers.js:365:14)

at tryOnTimeout (timers.js:237:5)

at Timer.listOnTimeout (timers.js:207:5)

name: 'ClusterClientNoResponseError'

pid: 25012

hostname: host-192-168-51-80

2017-02-27 00:02:45,501 ERROR 25012 nodejs.ClusterClientNoResponseError: client no response in 17120ms, maybe the connection is close on other side.

at Timeout.Leader._heartbeatTimer.setInterval [as _onTimeout] (/pathxx/node_modules/cluster-client/lib/leader.js:59:23)

at ontimeout (timers.js:365:14)

at tryOnTimeout (timers.js:237:5)

at Timer.listOnTimeout (timers.js:207:5)

name: 'ClusterClientNoResponseError'

pid: 25012

hostname: host-192-168-51-80

此日志文件记录内容最多

web.log

此日志文件为空

启动命令为

nohup node index.js > np-show20170220.log 2>&1 &

All 62 comments

是不是 app worker 挂了,看看 common-error 最上方的错误?

看起来是 app worker 长时间没有发送心跳,先看看 common.log 里还有没有其他的错,或者直接附上 common.log

有同样的问题

2017-03-05 16:41:21,813 ERROR 45645 nodejs.ClusterClientNoResponseError: client no response in 6429325ms, maybe the connection is close on other side.

at Timeout.Leader._heartbeatTimer.setInterval [as _onTimeout] (/Users/mingzhang/projects/xxx/node_modules/cluster-client/lib/leader.js:59:23)

at ontimeout (timers.js:365:14)

at tryOnTimeout (timers.js:237:5)

at Timer.listOnTimeout (timers.js:207:5)

name: 'ClusterClientNoResponseError'

pid: 45645

hostname: Mings-MBP.local

// common-error.log

// 第一行至末尾全是这样的错误日志

2017-03-06 08:50:52,928 ERROR 5864 nodejs.ClusterClientNoResponseError: client no response in 13916ms, maybe the connection is close on other side.

at Timeout.Leader._heartbeatTimer.setInterval [as _onTimeout] (/root/code/node_modules/cluster-client/lib/leader.js:59:23)

at ontimeout (timers.js:365:14)

at tryOnTimeout (timers.js:237:5)

at Timer.listOnTimeout (timers.js:207:5)

name: 'ClusterClientNoResponseError'

pid: 5864

hostname: host-192-168-51-80

2017-03-06 09:02:02,989 ERROR 5864 nodejs.ClusterClientNoResponseError: client no response in 10135ms, maybe the connection is close on other side.

at Timeout.Leader._heartbeatTimer.setInterval [as _onTimeout] (/root/code/node_modules/cluster-client/lib/leader.js:59:23)

at ontimeout (timers.js:365:14)

at tryOnTimeout (timers.js:237:5)

at Timer.listOnTimeout (timers.js:207:5)

name: 'ClusterClientNoResponseError'

pid: 5864

hostname: host-192-168-51-80

// egg-web.log

2017-03-06 08:47:37,250 INFO 5874 [egg:logger] init all loggers with options: {"dir":"./logs/node-show","encoding":"utf8","env":"prod","level":"INFO","consoleLevel":"INFO","outputJSON":false,"buffer":true,"appLogName":"node-show-web.log","coreLogName":"egg-web.log","agentLogName":"egg-agent.log","errorLogName":"common-error.log","coreLogger":{},"type":"application"}

2017-03-06 08:47:37,690 INFO 5874 [egg-schedule]: register schedule egg-schedule:/root/node-show/node_modules/egg-logrotator/app/schedule/clean_log.js

2017-03-06 08:47:37,691 INFO 5874 [egg-schedule]: register schedule egg-schedule:/root/node-show/node_modules/egg-logrotator/app/schedule/rotate_by_file.js

2017-03-06 08:47:37,288 INFO 5880 [egg:logger] init all loggers with options: {"dir":"./logs/node-show","encoding":"utf8","env":"prod","level":"INFO","consoleLevel":"INFO","outputJSON":false,"buffer":true,"appLogName":"node-show-web.log","coreLogName":"egg-web.log","agentLogName":"egg-agent.log","errorLogName":"common-error.log","coreLogger":{},"type":"application"}

2017-03-06 08:47:37,727 INFO 5880 [egg-schedule]: register schedule egg-schedule:/root/node-show/node_modules/egg-logrotator/app/schedule/clean_log.js

2017-03-06 08:47:37,727 INFO 5880 [egg-schedule]: register schedule egg-schedule:/root/node-show/node_modules/egg-logrotator/app/schedule/rotate_by_file.js

2017-03-06 08:47:37,504 INFO 5886 [egg:logger] init all loggers with options: {"dir":"./logs/node-show","encoding":"utf8","env":"prod","level":"INFO","consoleLevel":"INFO","outputJSON":false,"buffer":true,"appLogName":"node-show-web.log","coreLogName":"egg-web.log","agentLogName":"egg-agent.log","errorLogName":"common-error.log","coreLogger":{},"type":"application"}

2017-03-06 08:47:37,861 INFO 5886 [egg-schedule]: register schedule egg-schedule:/root/node-show/node_modules/egg-logrotator/app/schedule/clean_log.js

2017-03-06 08:47:37,862 INFO 5886 [egg-schedule]: register schedule egg-schedule:/root/node-show/node_modules/egg-logrotator/app/schedule/rotate_by_file.js

2017-03-06 08:47:37,646 INFO 5904 [egg:logger] init all loggers with options: {"dir":"./logs/node-show","encoding":"utf8","env":"prod","level":"INFO","consoleLevel":"INFO","outputJSON":false,"buffer":true,"appLogName":"node-show-web.log","coreLogName":"egg-web.log","agentLogName":"egg-agent.log","errorLogName":"common-error.log","coreLogger":{},"type":"application"}

2017-03-06 08:47:38,005 INFO 5904 [egg-schedule]: register schedule egg-schedule:/root/node-show/node_modules/egg-logrotator/app/schedule/clean_log.js

2017-03-06 08:47:38,006 INFO 5904 [egg-schedule]: register schedule egg-schedule:/root/node-show/node_modules/egg-logrotator/app/schedule/rotate_by_file.js

2017-03-06 08:47:37,578 INFO 5898 [egg:logger] init all loggers with options: {"dir":"./logs/node-show","encoding":"utf8","env":"prod","level":"INFO","consoleLevel":"INFO","outputJSON":false,"buffer":true,"appLogName":"node-show-web.log","coreLogName":"egg-web.log","agentLogName":"egg-agent.log","errorLogName":"common-error.log","coreLogger":{},"type":"application"}

2017-03-06 08:47:38,014 INFO 5898 [egg-schedule]: register schedule egg-schedule:/root/node-show/node_modules/egg-logrotator/app/schedule/clean_log.js

2017-03-06 08:47:38,014 INFO 5898 [egg-schedule]: register schedule egg-schedule:/root/node-show/node_modules/egg-logrotator/app/schedule/rotate_by_file.js

2017-03-06 08:47:37,627 INFO 5892 [egg:logger] init all loggers with options: {"dir":"./logs/node-show","encoding":"utf8","env":"prod","level":"INFO","consoleLevel":"INFO","outputJSON":false,"buffer":true,"appLogName":"node-show-web.log","coreLogName":"egg-web.log","agentLogName":"egg-agent.log","errorLogName":"common-error.log","coreLogger":{},"type":"application"}

2017-03-06 08:47:37,986 INFO 5892 [egg-schedule]: register schedule egg-schedule:/root/node-show/node_modules/egg-logrotator/app/schedule/clean_log.js

2017-03-06 08:47:37,986 INFO 5892 [egg-schedule]: register schedule egg-schedule:/root/node-show/node_modules/egg-logrotator/app/schedule/rotate_by_file.js

2017-03-06 08:47:37,678 INFO 5916 [egg:logger] init all loggers with options: {"dir":"./logs/node-show","encoding":"utf8","env":"prod","level":"INFO","consoleLevel":"INFO","outputJSON":false,"buffer":true,"appLogName":"node-show-web.log","coreLogName":"egg-web.log","agentLogName":"egg-agent.log","errorLogName":"common-error.log","coreLogger":{},"type":"application"}

2017-03-06 08:47:38,115 INFO 5916 [egg-schedule]: register schedule egg-schedule:/root/node-show/node_modules/egg-logrotator/app/schedule/clean_log.js

2017-03-06 08:47:38,115 INFO 5916 [egg-schedule]: register schedule egg-schedule:/root/node-show/node_modules/egg-logrotator/app/schedule/rotate_by_file.js

2017-03-06 08:47:37,778 INFO 5910 [egg:logger] init all loggers with options: {"dir":"./logs/node-show","encoding":"utf8","env":"prod","level":"INFO","consoleLevel":"INFO","outputJSON":false,"buffer":true,"appLogName":"node-show-web.log","coreLogName":"egg-web.log","agentLogName":"egg-agent.log","errorLogName":"common-error.log","coreLogger":{},"type":"application"}

2017-03-06 08:47:38,136 INFO 5910 [egg-schedule]: register schedule egg-schedule:/root/node-show/node_modules/egg-logrotator/app/schedule/clean_log.js

2017-03-06 08:47:38,136 INFO 5910 [egg-schedule]: register schedule egg-schedule:/root/node-show/node_modules/egg-logrotator/app/schedule/rotate_by_file.js

2017-03-06 08:47:38,607 INFO 5874 [ClusterClient#Watcher] failed to seize port 36931, and this is follower client.

2017-03-06 08:47:38,629 INFO 5874 [Follower#Watcher] register to channel: Watcher success

2017-03-06 08:47:38,629 INFO 5874 [egg-watcher:app] watcher start success

2017-03-06 08:47:38,640 INFO 5880 [ClusterClient#Watcher] failed to seize port 36931, and this is follower client.

2017-03-06 08:47:38,704 INFO 5880 [Follower#Watcher] register to channel: Watcher success

2017-03-06 08:47:38,704 INFO 5880 [egg-watcher:app] watcher start success

2017-03-06 08:47:38,780 INFO 5886 [ClusterClient#Watcher] failed to seize port 36931, and this is follower client.

2017-03-06 08:47:38,845 INFO 5886 [Follower#Watcher] register to channel: Watcher success

2017-03-06 08:47:38,846 INFO 5886 [egg-watcher:app] watcher start success

2017-03-06 08:47:38,945 INFO 5904 [ClusterClient#Watcher] failed to seize port 36931, and this is follower client.

2017-03-06 08:47:38,959 INFO 5904 [Follower#Watcher] register to channel: Watcher success

2017-03-06 08:47:38,959 INFO 5904 [egg-watcher:app] watcher start success

2017-03-06 08:47:38,971 INFO 5898 [ClusterClient#Watcher] failed to seize port 36931, and this is follower client.

2017-03-06 08:47:38,995 INFO 5898 [Follower#Watcher] register to channel: Watcher success

2017-03-06 08:47:38,996 INFO 5898 [egg-watcher:app] watcher start success

2017-03-06 08:47:39,020 INFO 5892 [ClusterClient#Watcher] failed to seize port 36931, and this is follower client.

2017-03-06 08:47:39,026 INFO 5892 [Follower#Watcher] register to channel: Watcher success

2017-03-06 08:47:39,026 INFO 5892 [egg-watcher:app] watcher start success

2017-03-06 08:47:39,156 INFO 5916 [ClusterClient#Watcher] failed to seize port 36931, and this is follower client.

2017-03-06 08:47:39,161 INFO 5916 [Follower#Watcher] register to channel: Watcher success

2017-03-06 08:47:39,162 INFO 5916 [egg-watcher:app] watcher start success

2017-03-06 08:47:39,207 INFO 5910 [ClusterClient#Watcher] failed to seize port 36931, and this is follower client.

2017-03-06 08:47:39,214 INFO 5910 [Follower#Watcher] register to channel: Watcher success

2017-03-06 08:47:39,215 INFO 5910 [egg-watcher:app] watcher start success

2017-03-06 08:51:01,006 INFO 5898 [tcp-base] the connection: 127.0.0.1:36931 is closed by other side

2017-03-06 08:51:01,007 WARN 5898 [ClusterClient#Watcher] follower closed, and try to init it again

2017-03-06 08:51:01,013 INFO 5898 [ClusterClient#Watcher] failed to seize port 36931, and this is follower client.

2017-03-06 08:51:01,016 INFO 5898 [Follower#Watcher] register to channel: Watcher success

2017-03-06 09:02:03,029 INFO 5898 [tcp-base] the connection: 127.0.0.1:36931 is closed by other side

2017-03-06 09:02:03,030 WARN 5898 [ClusterClient#Watcher] follower closed, and try to init it again

2017-03-06 09:02:03,033 INFO 5898 [ClusterClient#Watcher] failed to seize port 36931, and this is follower client.

2017-03-06 09:02:03,037 INFO 5898 [Follower#Watcher] register to channel: Watcher success

2017-03-06 09:23:03,108 INFO 5904 [tcp-base] the connection: 127.0.0.1:36931 is closed by other side

2017-03-06 09:23:03,111 WARN 5904 [ClusterClient#Watcher] follower closed, and try to init it again

// egg-agent.log

2017-03-06 08:47:32,474 INFO 5864 [egg:logger] init all loggers with options: {"dir":"./logs/node-show","encoding":"utf8","env":"prod","level":"INFO","consoleLevel":"INFO","outputJSON":false,"buffer":true,"appLogName":"node-show-web.log","coreLogName":"egg-web.log","agentLogName":"egg-agent.log","errorLogName":"common-error.log","coreLogger":{},"type":"agent"}

2017-03-06 08:47:32,890 INFO 5864 [ClusterClient#Watcher] has seized port 36931, and this is leader client.

2017-03-06 08:47:32,891 INFO 5864 [egg-watcher:agent] watcher start success

2017-03-06 08:47:32,892 WARN 5864 [egg-watcher] defaultEventSource watcher will NOT take effect

2017-03-06 08:47:38,613 INFO 5864 [Leader#Watcher] socket connected, port: 50950

2017-03-06 08:47:38,643 INFO 5864 [Leader#Watcher] socket connected, port: 50952

2017-03-06 08:47:38,782 INFO 5864 [Leader#Watcher] socket connected, port: 50956

2017-03-06 08:47:38,947 INFO 5864 [Leader#Watcher] socket connected, port: 50962

2017-03-06 08:47:38,973 INFO 5864 [Leader#Watcher] socket connected, port: 50964

2017-03-06 08:47:39,022 INFO 5864 [Leader#Watcher] socket connected, port: 50968

2017-03-06 08:47:39,158 INFO 5864 [Leader#Watcher] socket connected, port: 50972

2017-03-06 08:47:39,209 INFO 5864 [Leader#Watcher] socket connected, port: 50976

2017-03-06 08:51:01,015 INFO 5864 [Leader#Watcher] socket connected, port: 51208

2017-03-06 09:02:03,036 INFO 5864 [Leader#Watcher] socket connected, port: 51618

2017-03-06 09:23:03,124 INFO 5864 [Leader#Watcher] socket connected, port: 51698

2017-03-06 09:25:53,222 INFO 5864 [Leader#Watcher] socket connected, port: 51800

2017-03-06 09:25:53,307 INFO 5864 [Leader#Watcher] socket connected, port: 51804

2017-03-06 10:07:13,335 INFO 5864 [Leader#Watcher] socket connected, port: 52348

2017-03-06 10:11:31,801 INFO 5864 [Leader#Watcher] socket connected, port: 52352

2017-03-06 10:22:19,713 INFO 5864 [Leader#Watcher] socket connected, port: 52690

2017-03-06 10:22:23,816 INFO 5864 [Leader#Watcher] socket connected, port: 52694

2017-03-06 10:22:25,286 INFO 5864 [Leader#Watcher] socket connected, port: 52704

2017-03-06 10:22:25,758 INFO 5864 [Leader#Watcher] socket connected, port: 52710

2017-03-06 10:22:46,149 INFO 5864 [Leader#Watcher] socket connected, port: 52726

2017-03-06 10:23:45,593 INFO 5864 [Leader#Watcher] socket connected, port: 52768

2017-03-06 10:23:45,736 INFO 5864 [Leader#Watcher] socket connected, port: 52772

2017-03-06 10:47:53,608 INFO 5864 [Leader#Watcher] socket connected, port: 53198

2017-03-06 10:56:53,568 INFO 5864 [Leader#Watcher] socket connected, port: 53280

2017-03-06 11:07:53,667 INFO 5864 [Leader#Watcher] socket connected, port: 53686

2017-03-06 11:15:33,603 INFO 5864 [Leader#Watcher] socket connected, port: 53894

2017-03-06 11:20:24,595 INFO 5864 [Leader#Watcher] socket connected, port: 53932

2017-03-06 11:24:33,756 INFO 5864 [Leader#Watcher] socket connected, port: 53964

2017-03-06 11:38:03,791 INFO 5864 [Leader#Watcher] socket connected, port: 54100

2017-03-06 11:45:34,065 INFO 5864 [Leader#Watcher] socket connected, port: 54104

2017-03-06 12:07:03,895 INFO 5864 [Leader#Watcher] socket connected, port: 54402

2017-03-06 12:11:43,934 INFO 5864 [Leader#Watcher] socket connected, port: 54542

2017-03-06 12:11:44,087 INFO 5864 [Leader#Watcher] socket connected, port: 54546

2017-03-06 12:18:14,151 INFO 5864 [Leader#Watcher] socket connected, port: 54550

2017-03-06 12:18:43,922 INFO 5864 [Leader#Watcher] socket connected, port: 54554

2017-03-06 12:39:54,240 INFO 5864 [Leader#Watcher] socket connected, port: 54714

2017-03-06 12:55:24,149 INFO 5864 [Leader#Watcher] socket connected, port: 54718

2017-03-06 13:04:14,215 INFO 5864 [Leader#Watcher] socket connected, port: 54750

2017-03-06 13:08:04,477 INFO 5864 [Leader#Watcher] socket connected, port: 54782

2017-03-06 13:08:54,233 INFO 5864 [Leader#Watcher] socket connected, port: 54796

2017-03-06 13:12:54,284 INFO 5864 [Leader#Watcher] socket connected, port: 54800

2017-03-06 13:30:14,378 INFO 5864 [Leader#Watcher] socket connected, port: 54992

2017-03-06 13:32:34,256 INFO 5864 [Leader#Watcher] socket connected, port: 54996

2017-03-06 13:43:24,410 INFO 5864 [Leader#Watcher] socket connected, port: 55000

2017-03-06 13:48:04,481 INFO 5864 [Leader#Watcher] socket connected, port: 55008

2017-03-06 13:49:04,697 INFO 5864 [Leader#Watcher] socket connected, port: 55012

2017-03-06 13:52:24,389 INFO 5864 [Leader#Watcher] socket connected, port: 55018

2017-03-06 13:56:44,528 INFO 5864 [Leader#Watcher] socket connected, port: 55022

2017-03-06 14:10:14,619 INFO 5864 [Leader#Watcher] socket connected, port: 55028

2017-03-06 14:10:14,630 INFO 5864 [Leader#Watcher] socket connected, port: 55032

2017-03-06 14:10:44,566 INFO 5864 [Leader#Watcher] socket connected, port: 55036

2017-03-06 14:12:34,607 INFO 5864 [Leader#Watcher] socket connected, port: 55040

2017-03-06 14:15:34,931 INFO 5864 [Leader#Watcher] socket connected, port: 55044

2017-03-06 14:21:38,377 INFO 8175 [egg:logger] init all loggers with options: {"dir":"./logs/node-show","encoding":"utf8","env":"prod","level":"INFO","consoleLevel":"INFO","outputJSON":false,"buffer":true,"appLogName":"node-show-web.log","coreLogName":"egg-web.log","agentLogName":"egg-agent.log","errorLogName":"common-error.log","coreLogger":{},"type":"agent"}

2017-03-06 14:21:38,495 INFO 8175 [ClusterClient#Watcher] has seized port 34509, and this is leader client.

2017-03-06 14:21:38,496 INFO 8175 [egg-watcher:agent] watcher start success

2017-03-06 14:21:38,497 WARN 8175 [egg-watcher] defaultEventSource watcher will NOT take effect

2017-03-06 14:21:43,862 INFO 8175 [Leader#Watcher] socket connected, port: 59136

2017-03-06 14:21:43,875 INFO 8175 [Leader#Watcher] socket connected, port: 59134

2017-03-06 14:21:43,988 INFO 8175 [Leader#Watcher] socket connected, port: 59144

2017-03-06 14:21:44,054 INFO 8175 [Leader#Watcher] socket connected, port: 59150

2017-03-06 14:21:44,134 INFO 8175 [Leader#Watcher] socket connected, port: 59152

@popomore @gxcsoccer 是不是cluster watcher 有问题?

感觉像 centos 下连接被断开了.

@atian25 本地开发环境在 ubuntu16.10 下也同样出现上面的 ClusterClientNoResponseError 错误,有时导致nodejs响应比较慢。

@itsky365 把日志上传一下吧 最好能有代码

@itsky365 试试把本地 node_modules 删除重现安装,看是否还有问题

// index.js

require('egg').startCluster({

workers: 8, // 多加的

baseDir: __dirname,

port: process.env.PORT || 7001, // default to 7001

});

// app/controller

也是日常的http处理逻辑

删除了node_modules目录,npm install 后运行再看看日志。

除非用了 ccap 生成的验证码 有冲突?

如果还有问题 ps aux | grep node 把结果贴下

$ ps aux | grep node 结果如下,启动了2个node项目,一个work采用eggjs开发,一个code采用vue2 webpack开发

$ ps aux | grep node

root 2900 0.1 0.0 16332 2684 ? Ss 08:58 0:03 /sbin/mount.ntfs /dev/sda3 /media/zhuxh/12A62C8FA62C74FF -o rw,nodev,nosuid,uid=1000,gid=1000,uhelper=udisks2

root 2904 0.0 0.0 16224 2292 ? Ss 08:58 0:00 /sbin/mount.ntfs /dev/sda2 /media/zhuxh/系统保留 -o rw,nodev,nosuid,uid=1000,gid=1000,uhelper=udisks2

zhuxh 7103 0.0 0.0 4504 776 pts/8 S+ 09:25 0:00 sh -c node index.js

zhuxh 7104 0.0 0.2 891360 35872 pts/8 Sl+ 09:25 0:00 node index.js

zhuxh 7110 0.0 0.2 1138880 42964 pts/8 Sl+ 09:25 0:00 /home/zhuxh/local/node-v6.x/bin/node --debug-port=5856 /home/zhuxh/work/node_modules/egg-cluster/lib/agent_worker.js {"framework":"/home/zhuxh/work/node_modules/egg","baseDir":"/home/zhuxh/work","port":7001,"workers":8,"plugins":null,"https":false,"key":"","cert":"","customEgg":"/home/zhuxh/work/node_modules/egg","clusterPort":34647}

zhuxh 7120 0.0 0.2 942520 43032 pts/8 Sl+ 09:25 0:00 /home/zhuxh/local/node-v6.x/bin/node /home/zhuxh/work/node_modules/egg-cluster/lib/app_worker.js {"framework":"/home/zhuxh/work/node_modules/egg","baseDir":"/home/zhuxh/work","port":7001,"workers":8,"plugins":null,"https":false,"key":"","cert":"","customEgg":"/home/zhuxh/work/node_modules/egg","clusterPort":34647}

zhuxh 7126 0.0 0.2 943568 43524 pts/8 Sl+ 09:25 0:00 /home/zhuxh/local/node-v6.x/bin/node /home/zhuxh/work/node_modules/egg-cluster/lib/app_worker.js {"framework":"/home/zhuxh/work/node_modules/egg","baseDir":"/home/zhuxh/work","port":7001,"workers":8,"plugins":null,"https":false,"key":"","cert":"","customEgg":"/home/zhuxh/work/node_modules/egg","clusterPort":34647}

zhuxh 7127 0.0 0.2 943580 43788 pts/8 Sl+ 09:25 0:00 /home/zhuxh/local/node-v6.x/bin/node /home/zhuxh/work/node_modules/egg-cluster/lib/app_worker.js {"framework":"/home/zhuxh/work/node_modules/egg","baseDir":"/home/zhuxh/work","port":7001,"workers":8,"plugins":null,"https":false,"key":"","cert":"","customEgg":"/home/zhuxh/work/node_modules/egg","clusterPort":34647}

zhuxh 7138 0.0 0.2 943552 43316 pts/8 Sl+ 09:25 0:00 /home/zhuxh/local/node-v6.x/bin/node /home/zhuxh/work/node_modules/egg-cluster/lib/app_worker.js {"framework":"/home/zhuxh/work/node_modules/egg","baseDir":"/home/zhuxh/work","port":7001,"workers":8,"plugins":null,"https":false,"key":"","cert":"","customEgg":"/home/zhuxh/work/node_modules/egg","clusterPort":34647}

zhuxh 7139 0.0 0.2 942544 42584 pts/8 Sl+ 09:25 0:00 /home/zhuxh/local/node-v6.x/bin/node /home/zhuxh/work/node_modules/egg-cluster/lib/app_worker.js {"framework":"/home/zhuxh/work/node_modules/egg","baseDir":"/home/zhuxh/work","port":7001,"workers":8,"plugins":null,"https":false,"key":"","cert":"","customEgg":"/home/zhuxh/work/node_modules/egg","clusterPort":34647}

zhuxh 7145 0.0 0.2 942760 44876 pts/8 Sl+ 09:25 0:00 /home/zhuxh/local/node-v6.x/bin/node /home/zhuxh/work/node_modules/egg-cluster/lib/app_worker.js {"framework":"/home/zhuxh/work/node_modules/egg","baseDir":"/home/zhuxh/work","port":7001,"workers":8,"plugins":null,"https":false,"key":"","cert":"","customEgg":"/home/zhuxh/work/node_modules/egg","clusterPort":34647}

zhuxh 7156 0.0 0.3 1017396 49932 pts/8 Sl+ 09:25 0:00 /home/zhuxh/local/node-v6.x/bin/node /home/zhuxh/work/node_modules/egg-cluster/lib/app_worker.js {"framework":"/home/zhuxh/work/node_modules/egg","baseDir":"/home/zhuxh/work","port":7001,"workers":8,"plugins":null,"https":false,"key":"","cert":"","customEgg":"/home/zhuxh/work/node_modules/egg","clusterPort":34647}

zhuxh 7157 0.0 0.2 942552 44776 pts/8 Sl+ 09:25 0:00 /home/zhuxh/local/node-v6.x/bin/node /home/zhuxh/work/node_modules/egg-cluster/lib/app_worker.js {"framework":"/home/zhuxh/work/node_modules/egg","baseDir":"/home/zhuxh/work","port":7001,"workers":8,"plugins":null,"https":false,"key":"","cert":"","customEgg":"/home/zhuxh/work/node_modules/egg","clusterPort":34647}

zhuxh 7481 0.0 0.1 875440 25692 pts/4 Sl+ 09:29 0:00 node /home/zhuxh/code/node_modules/.bin/cross-env NODE_ENV=development webpack-dev-server --port 7000 --open --inline --hot

zhuxh 7487 2.0 3.0 1659592 498004 pts/4 Sl+ 09:29 0:15 node /home/zhuxh/code/node_modules/.bin/webpack-dev-server --port 7000 --open --inline --hot

zhuxh 7980 0.0 0.0 15992 972 pts/7 S+ 09:41 0:00 grep --color=auto node

@itsky365 还有问题?

@gxcsoccer alpha 上刚刚部署了 node_modules 下,观看一天 common-error.log 才知道结果呢

@itsky365 我大概知道原因了,今天我会更新一版

2017-03-07 18:30:43,217 ERROR 20965 nodejs.ClusterClientNoResponseError: client no response in 10158ms, maybe the connection is close on other side.

at Timeout.Leader._heartbeatTimer.setInterval [as _onTimeout] (/root/np-node/node_modules/cluster-client/lib/leader.js:59:23)

at ontimeout (timers.js:365:14)

at tryOnTimeout (timers.js:237:5)

at Timer.listOnTimeout (timers.js:207:5)

name: 'ClusterClientNoResponseError'

pid: 20965

hostname: host-192-168-51-80

2017-03-07 18:42:03,280 ERROR 20965 nodejs.ClusterClientNoResponseError: client no response in 10027ms, maybe the connection is close on other side.

at Timeout.Leader._heartbeatTimer.setInterval [as _onTimeout] (/root/np-node/node_modules/cluster-client/lib/leader.js:59:23)

at ontimeout (timers.js:365:14)

at tryOnTimeout (timers.js:237:5)

at Timer.listOnTimeout (timers.js:207:5)

name: 'ClusterClientNoResponseError'

pid: 20965

hostname: host-192-168-51-80

2017-03-07 19:00:03,385 ERROR 20965 nodejs.ClusterClientNoResponseError: client no response in 10158ms, maybe the connection is close on other side.

at Timeout.Leader._heartbeatTimer.setInterval [as _onTimeout] (/root/np-node/node_modules/cluster-client/lib/leader.js:59:23)

at ontimeout (timers.js:365:14)

at tryOnTimeout (timers.js:237:5)

at Timer.listOnTimeout (timers.js:207:5)

name: 'ClusterClientNoResponseError'

pid: 20965

hostname: host-192-168-51-80

alpha上运行的结果

@itsky365 最迟明天发新版本

@itsky365 重新安装部署一下(删掉 node_modules,重新 npm i)

@itsky365 怎么样?

@atian25

[root@host-192-168-1-1 code]# cat common-error.log

2017-03-14 13:28:13,655 ERROR 28134 nodejs.ClusterClientNoResponseError: client no response in 31752ms exceeding maxIdleTime 30000ms, maybe the connection is close on other side.

at Timeout.Leader._heartbeatTimer.setInterval [as _onTimeout] (/root/code/node_modules/.1.5.1@cluster-client/lib/leader.js:63:23)

at ontimeout (timers.js:365:14)

at tryOnTimeout (timers.js:237:5)

at Timer.listOnTimeout (timers.js:207:5)

name: 'ClusterClientNoResponseError'

pid: 28134

hostname: host-192-168-1-1

现在还报这样的错误,只是比 egg@rc-2 、[email protected] 少些

@atian25 看了下 cluster-client/lib/leader.js 代码,写了一个心跳包的定时器。egg.js 必须要用 cluster-client吗?

cluster-client 是高级用法。

这块要 @gxcsoccer

@gxcsoccer @atian25 升级到 [email protected] 还是报 ClusterClientNoResponseError 错误,能解决吗?

@itsky365 帖一下最新的日志呢?

@itsky365 加微信 gxcsoccer

client no response in 31752ms exceeding maxIdleTime 30000ms, maybe the connection is close on other side.

这个日志看起来确实是心跳超时了

@gxcsoccer 这个问题是不是修复了?

@gxcsoccer 关了

依然还有这个问题。

2018-01-29 21:38:42,969 WARN 56094 [ClusterClient:Watcher] follower closed, and try to init it again

2018-01-29 21:38:42,970 ERROR 56093 nodejs.ClusterClientNoResponseError: client no response in 129863ms exceeding maxIdleTime 60000ms, maybe the connection is close on other side.

at Timeout.Leader._heartbeatTimer.setInterval [as _onTimeout] (/Users/dang/projects/open-webhook/node_modules/cluster-client/lib/leader.js:73:23)

at ontimeout (timers.js:475:11)

at tryOnTimeout (timers.js:310:5)

at Timer.listOnTimeout (timers.js:270:5)

name: 'ClusterClientNoResponseError'

pid: 56093

hostname: dang-3.local

我也遇到这个问题

egg版本是:

"dependencies": {

"egg": "^2.0.0",

"egg-kafka": "^1.2.6",

"egg-kafka-java-bridge": "^1.0.2",

"egg-mongoose": "^2.1.1",

"egg-scripts": "^2.1.0"

}

偶发

2018-02-10 08:41:34,018 ERROR 52798 nodejs.ClusterClientNoResponseError: client no response in 3562922ms exceeding maxIdleTime 60000ms, maybe the connection is close on other side.

at Timeout.Leader._heartbeatTimer.setInterval [as _onTimeout] (/Users/Cui/Project/369/chess-admin-server/node_modules/cluster-client/lib/leader.js:73:23)

at ontimeout (timers.js:458:11)

at tryOnTimeout (timers.js:296:5)

at Timer.listOnTimeout (timers.js:259:5)

name: 'ClusterClientNoResponseError'

pid: 52798

hostname: Cuis-MacBook-Pro

[1]

2018-02-10 08:41:34,018 WARN 54232 [ClusterClient:Watcher] follower closed, and try to init it again

2018-02-10 08:47:03,422 WARN 54232 [TCPBase] socket is closed by other side while there were still unhandled data in the socket buffer

2018-02-10 08:47:03,425 WARN 54232 [ClusterClient:Watcher] follower closed, and try to init it again

2018-02-10 08:47:03,430 ERROR 52798 nodejs.ClusterClientNoResponseError: client no response in 329392ms exceeding maxIdleTime 60000ms, maybe the connection is close on other side.

at Timeout.Leader._heartbeatTimer.setInterval [as _onTimeout] (/Users/Cui/Project/369/chess-admin-server/node_modules/cluster-client/lib/leader.js:73:23)

at ontimeout (timers.js:458:11)

at tryOnTimeout (timers.js:296:5)

at Timer.listOnTimeout (timers.js:259:5)

name: 'ClusterClientNoResponseError'

pid: 52798

hostname: Cuis-MacBook-Pro

依然存在这个问题,我的egg版本是:

"egg": "^2.3.0",

"egg-cors": "^2.0.0",

"egg-jwt": "^2.2.1",

"egg-lru": "^1.0.1",

"egg-mongo-native": "^3.0.0",

"egg-mongoose": "^2.1.1",

"egg-oss": "^1.1.0",

"egg-passport": "^2.0.1",

"egg-scripts": "^2.5.0",

"egg-validate": "^1.0.0",

+1

偶发 +1

是否电脑休眠了一段时间?

@fengmk2 应该没有,还没部署,待生产后继续反馈

@superlbr

能否重现

@gxcsoccer 让我研究下哈

agent 是否做了耗时的 job? 我的心跳是 20s 一次,检查连接是否在 60s 内都没有流量(若是就会报错,断开连接),正常 idle 的时间不可能超过 80s,但是日志显示为 3562922ms(将近一个小时)

2018-02-10 08:41:34,018 ERROR 52798 nodejs.ClusterClientNoResponseError: client no response in

3562922ms exceeding maxIdleTime 60000ms, maybe the connection is close on other side.

笔记本休眠确实有😓

休眠那是必然的... 你的网卡断了但程序还在跑

@gxcsoccer 这种异常情况,判断一下在 local 环境,不打印错误日志好了。

线上服务器也有这问题,根本没法工作

+1 影响日志查找,但又好像不影响 Egg 运行

- 场景:

开发环境下,使用egg-logrotater分割日志文件。配置成每小时分割一次。

我在17:30左右启动了服务,本来需要等到18:00,就会分割日志。

但我想着,通过修改本机电脑的时间为17:59:00,来加快测试。

结果就报错了。

如下:

2018-04-26 17:58:04,449 ERROR 55124 nodejs.ClusterClientNoResponseError: client no response in 634937ms exceeding maxIdleTime 60000ms, maybe the connection is close on other side.

at Timeout.Leader._heartbeatTimer.setInterval [as _onTimeout] (work/node_modules/[email protected]@cluster-client/lib/leader.js:74:23)

at ontimeout (timers.js:475:11)

at tryOnTimeout (timers.js:310:5)

at Timer.listOnTimeout (timers.js:270:5)

name: 'ClusterClientNoResponseError'

pid: 55124

其实,是因为egg-logrotator源码里有存活期的判断

源码如下:

node_modules/[email protected]@cluster-client/lib/leader.js中69~79行,

this._heartbeatTimer = setInterval(() => {

const now = Date.now();

for (const conn of this._connections.values()) {

const dur = now - conn.lastActiveTime;

if (dur > maxIdleTime) {

const err = new Error(`client no response in ${dur}ms exceeding maxIdleTime ${maxIdleTime}ms, maybe the connection is close on other side.`);

err.name = 'ClusterClientNoResponseError';

conn.close(err);

}

}

}, heartbeatInterval);

判断了存活时间。

- 解决办法:

为了测试日志分割,不能等一个小时吧,那不是傻吗?

我就先把本机时间修改为17:57,然后立马启动服务,

这样大概等3分钟,也就是在16:00时,日志文件就成功分割了。

common-error.log (服务用的新的空log文件)

common-error.log.2018-04-26-17 (分割出来的老log文件)

还是有这么问题,看了这么多次的回复,还是不知道怎么解决! @gxcsoccer @atian25 @popomore

@gxcsoccer 我这边必现的。 "egg": "^2.7.1"

@itsky365 请问老哥,这块最终解决了么?

可以在报错前,再调一次服务,看看是不是存活。若此时还不存活且超时,就报错。而不是只判断超时。

还没有解决吗

检查你们的代码,应该是某段逻辑耗尽了 CPU,检查是不是有重 CPU 操作,或者 sync 等同步操作。

必要时用 alinode 分析下。

检查你们的代码,应该是某段逻辑耗尽了 CPU,检查是不是有重 CPU 操作,或者 sync 等同步操作。

必要时用 alinode 分析下。

前段时间找了半天才发现问题,因为同事在模板引擎不小心写了死循环导致的,谢谢大佬解答

@fhj857676433 可以描述一下是什么样的死循环吗?

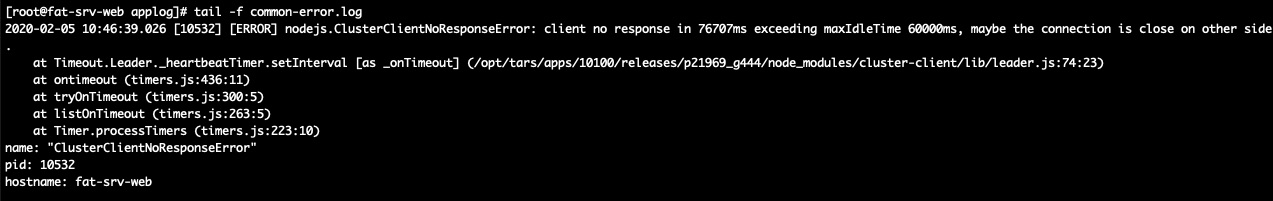

我这边服务器上部署了4个egg应用, 运行一段时间后集体报错:

2020-02-12 15:00:59.690 [32048] [ERROR] nodejs.ClusterClientNoResponseError: client no response in 76721ms exceeding maxIdleTime 60000ms, maybe the connection is close on other side.

at Timeout.Leader._heartbeatTimer.setInterval [as _onTimeout] (/opt/tars/apps/10353/releases/p22097_g523/node_modules/cluster-client/lib/leader.js:77:23)

at ontimeout (timers.js:436:11)

at tryOnTimeout (timers.js:300:5)

at listOnTimeout (timers.js:263:5)

at Timer.processTimers (timers.js:223:10)

name: "ClusterClientNoResponseError"

pid: 32048

hostname: fat-srv-web

@fhj857676433 可以描述一下是什么样的死循环吗?

前端写的 类似 while(true)一样的逻辑 判断写错了

可以检查下自己的代码 有没有长时间运行的那种

@fhj857676433 我这边场景比较奇怪, 同样的代码部署在三台服务器, 仅在一台服务器报错, 其它两台正常

代码中有轮询Java接口的定时任务, 如果这里报错的话, 是否会抛出 ClusterClientNoResponseError 错误?

const Subscription = require('egg').Subscription;

const apollo = require('../../lib/apollo');

const path = require('path');

class Update extends Subscription {

static get schedule() {

return {

interval: process.env.APOLLO_PERIOD ?

process.env.APOLLO_PERIOD + 's' : '30s',

type: 'worker',

};

}

// subscribe 是真正定时任务执行时被运行的函数

async subscribe() {

const apolloConfig = require(path.join(this.app.config.baseDir, 'config/apollo.js'));

const result = await apollo.remoteConfigServiceSikpCache(apolloConfig);

if (result && result.status === 304) {

this.app.coreLogger.info('apollo 没有更新');

} else {

apollo.createEnvFile(result);

apollo.setEnv();

this.app.coreLogger.info(`apollo 更新完成${new Date()}`);

}

}

}

module.exports = Update;

代码中有轮询Java接口的定时任务, 如果这里报错的话, 是否会抛出

ClusterClientNoResponseError错误?const Subscription = require('egg').Subscription; const apollo = require('../../lib/apollo'); const path = require('path'); class Update extends Subscription { static get schedule() { return { interval: process.env.APOLLO_PERIOD ? process.env.APOLLO_PERIOD + 's' : '30s', type: 'worker', }; } // subscribe 是真正定时任务执行时被运行的函数 async subscribe() { const apolloConfig = require(path.join(this.app.config.baseDir, 'config/apollo.js')); const result = await apollo.remoteConfigServiceSikpCache(apolloConfig); if (result && result.status === 304) { this.app.coreLogger.info('apollo 没有更新'); } else { apollo.createEnvFile(result); apollo.setEnv(); this.app.coreLogger.info(`apollo 更新完成${new Date()}`); } } } module.exports = Update;

有这个可能 加个超时的处理吧

@fhj857676433 感谢, 我会再检查一下

Most helpful comment

依然还有这个问题。