Dxvk: Games crash on Nvidia due to memory allocation failures

For some reason it looks like DXVK's device memory allocation strategy does not work reliably on Nvidia GPUs. This leads to game crashes with the characteristic DxvkMemoryAllocator: Memory allocation failed error in the log files.

This issue has been reported in the following games:

#1099 (Bloodstained: Ritual of the Night)

#1087 (World of Warcraft)

If you run into this problem, please do not open a new issue. Instead, post a comment here, including the full DXVK logs, your hardware and driver information, and information about the game you're having problems with.

Update: Please check https://github.com/doitsujin/dxvk/issues/1100#issuecomment-509484527 for further information on how to get useful debugging info.

Update 2: Please also see https://github.com/doitsujin/dxvk/issues/1100#issuecomment-515083534.

Update 3: Please update to driver version 440.59.


The same error with World of Tanks.

Hardw.: 6700k 16GB GTX 780 3GB

With or without __GL_SHADER_DISK_CACHE_PATH=~/.nv set, the Nvidia driver doesn't create a cache. Maybe the issue is here?

GPU drivers: 418.52.10 or 430.26

@Sandok4n

post a comment here, including the full DXVK logs

The shader cache should have nothing to do with memory allocation issues.

Ok. I'll try to reproduce this error, but when it appeared I downgraded the kernel, DXVK and Wine, and the problem was the same. Only one thing was not changed: the NV drivers (only the new version was available in the AUR). The problem has been there for about two weeks.

The shader cache should have nothing to do with memory allocation issues.

Maybe not, but as i have pointed out in another thread "dirty shader cache", it seems to me i have fewer crashes with a fresh .nv cache (delete the GLCache folder AND the WoW/_Retail_/Cache folder). If i keep clearing it regularly there are fewer crashes, but more stuttering at the start. Crashing while zoning COULD perhaps mean something weird happens when DXVK shader compilation is done?

I assume that the shader compilation business with WoW goes something in the lines of:

WoW (Cache .WDB) -> DXVK -> .nv (driver cache)? Could the WoW cache folder contain some weird shaders that DXVK uses too much memory to compile/read somehow?

https://github.com/Joshua-Ashton/d9vk/issues/170 - possibly connected.

From my observations, crashes are more often if "free" host memory is low. But IMHO app should use "available" memory.

https://gist.github.com/pchome/fb43b3752b878501757bdad571473a4e - mem data during such crash (from D9VK issue 170).

_#103 - I was happy with this fix, some "heavy" games were able to use my whole VRAM, then RAM, swap, ... and still be alive :smile: . Or REISUB sysrq sometimes.

Because of current issue I definitely want more "magically created RAM"._

Test cache behaviour:

- drop whole caches (not recommended):

sync && echo 3 | sudo tee /proc/sys/vm/drop_caches

more free ram, longer game sessions.

- fill caches:

search/copy/... large amount of files

less free ram, shorter game sessions.

p.s. 418.52.10
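To make the "free" versus "available" distinction above concrete, here is a minimal Python sketch that reads both values from `/proc/meminfo`. The helper names `parse_meminfo` and `free_and_available_mib` are made up for illustration; nothing here is what DXVK or the driver does internally. `MemAvailable` is the kernel's own estimate and includes page cache that can be reclaimed, which is why it can be much larger than `MemFree` after the cache-filling test.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into {field name: integer value}."""
    fields = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue  # not a "Name: value" line
        parts = rest.split()
        if parts and parts[0].isdigit():
            fields[name.strip()] = int(parts[0])
    return fields


def free_and_available_mib(text):
    """Return (MemFree, MemAvailable) converted from kB to MiB."""
    info = parse_meminfo(text)
    return info["MemFree"] // 1024, info["MemAvailable"] // 1024


if __name__ == "__main__":
    try:
        with open("/proc/meminfo") as f:
            free, avail = free_and_available_mib(f.read())
        print(f"free: {free} MiB, available: {avail} MiB")
    except (OSError, KeyError):
        print("MemFree/MemAvailable could not be read on this system")
```

Running it once before and once after the `find ... cat` cache-filling command should show "free" dropping while "available" stays roughly constant.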

If you can grab /proc/slabinfo or slabtop output that would be helpful. As is the output from grep . /proc/sys/vm/* preferably before and after though that understandably might be hard.

You could for example bump /proc/sys/vm/swappiness as a test, it would tell the kernel to be more active in freeing memory. Your gist doesn't show any swap at all which is odd.

From my observations, crashes are more often if "free" host memory is low. But IMHO app should use "available" memory.

The average application doesn't even know or care about how much RAM you have at all.

Someone on the VKx discord found that if VRAM is full, vkAllocateMemory fails even on a memory type that is not device local. This would also explain why #1099 crashes even though memory utilization is very low. This does include VRAM allocated by other applications (window manager, browser, ...), which DXVK has no control over.

@doitsujin

From my observations, crashes are more often if "free" host memory is low. But IMHO app should use "available" memory.

The average application doesn't even know or care about how much RAM you have at all.

Yes, it was not a technical description.

@h1z1

Your gist doesn't show any swap at all which is odd.

swap:512MiB, swappiness:10, swap in my system used only as "fallback", it rarely filled and used as indicator "be ready". Also it's zram.

Well, the Superposition test still does the trick: I am able to reproduce the issue running the "1080p" profile. It quits immediately when VRAM gets filled. The "720p" profile is fine with ~1200/1300MB used/allocated.

I installed 418.49.04, the lowest (IIRC) driver version for my current kernel (5.0.21), and was able to fill the whole VRAM (1900+) and have ~2700/2800MB used/allocated during the benchmark. Well, it's a freshly booted system, so I'm going to stay on the 418.49.04 driver for a while and perform more tests later, to be sure.

This is also an issue with Borderlands GOTY Enhanced. It seems to occur when loading new map areas or the title sequence. Once I have loaded successfully into a map, it does not happen until I have been playing for around 15-20 minutes. For example, right after loading in, traveling between separate map areas (loading sequence) does not produce a crash no matter how many times you travel. But trying to load a new area after ~10 minutes crashes the game.

Regarding https://github.com/doitsujin/dxvk/issues/1100#issuecomment-503645510, Clearing an already built cache makes the game crash on launch with the same errors nearly every single time until the 3rd or 4th launch. Very strange.

At first I thought this was an issue with Reshade, however it appears that this happens less often with Reshade active. Perhaps this is just placebo.

d3d11.log (note: I removed a few thousand lines of compiling shader outputs, above paste limit)

Specs:

i7-4770 GTX 980 Ti

Kernel: 5.1.11-arch

Driver: 430.26.0

DXVK: 1.2.2

Wine: ge-protonified-4.10 (tested Proton 4.2-7 & Wine 4.9 Staging)

Cheers (side question: Is this a recent development? I've never noticed this with any other games before, although previous DXVK versions have the same error)

@telans @Rugaliz Can you test setting the environment variable __GL_AllowPTEFallbackToSysmem=1?

Note that performance will most likely be poor, but this should hopefully work around the crashes.

Is Borderlands a 32-bit game? In that case your issue is most likely something else, on Proton you can try PROTON_FORCE_LARGE_ADDRESS_AWARE=1. Some wine builds in Lutris may also support this (it would be WINE_LARGE_ADDRESS_AWARE=1 there).

The Enhanced version (remastered/released a couple months ago) I'm playing is 64bit, the remastered versions are also updated to DX11 from DX9.

update: ge-wine does support WINE_LARGE_ADDRESS_AWARE, but this didn't change anything.

From my observations, crashes are more often if "free" host memory is low. But IMHO app should use "available" memory.

The average application doesn't even know or care about how much RAM you have at all.

I had the error in D9VK on my system with 32 GB on a 2080 Ti. Both RAM and VRAM were barely 25% used when I got this error. It has nothing to do with availability.

Also interesting is that I can hit the error with BL2 in a couple of minutes, but I've been playing Bloodstained Ritual of the Night for much longer without a problem. Could it be something new that is not included in Proton yet? The errors are also relatively new to D9VK (as in, builds older than Monday 10 June were fine).

From my observations, crashes are more often if "free" host memory is low. But IMHO app should use "available" memory.

The average application doesn't even know or care about how much RAM you have at all.

I had the error in D9VK on my system with 32 GB on a 2080 Ti. Both RAM and VRAM were barely 25% used when I got this error. It has nothing to do with availability.

The couple of times i have actually had any monitoring up while this crash happened with World of Warcraft and DXVK, the dxvk HUD had a bump in allocated up around 3.6GB-4GB, and nVidia SMI was barely 2GB'ish. This is with RTX2070 8GB card.

So yeah, it does not really seem to be ACTUAL resource starvation, but some imaginary problem possibly from the driver perhaps.

I placed __GL_AllowPTEFallbackToSysmem=1 in Lutris as @telans did.

Bloodstained still crashes after moving a few screens.

performance is pretty much the same though

Could it be something new that is not included in Proton yet? The errors are also relatively new to D9VK (as in, builds older than Monday 10 June were fine).

There have been no memory allocation changes at all for several months. Only 138dde6c3d4458a1d262093b93773b6a90090c40 (from today) changes things a bit, but most likely won't affect this issue at all.

I also somehow doubt that this can be fixed within DXVK since it's the vkAllocateMemory calls that are failing for no apparent reason, no matter which memory type we're trying to allocate from.

There have been no memory allocation changes at all for several months. Only 138dde6 (from today) changes things a bit, but most likely won't affect this issue at all.

Yeah, just tried it and still crashed unfortunately.

New lines in log:

err: DxvkMemoryAllocator: Memory allocation failed

Size: 53660160

Alignment: 256

Mem flags: 0x7

Mem types: 0x681

err: Heap 0: 1319 MB allocated, 1181 MB used, 6144 MB available

err: Heap 1: 857 MB allocated, 766 MB used, 5935 MB available
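For readers trying to interpret the hex values in these log lines, here is a small Python sketch that decodes them. It assumes that "Mem flags" holds the requested `VkMemoryPropertyFlags` and that "Mem types" is a bitmask of the memory type indices the resource may use; that reading of the log fields is an assumption, not something confirmed in this thread. The bit values themselves are the ones the Vulkan specification defines for `VkMemoryPropertyFlagBits`. The function names are illustrative only.

```python
# Bit values of VkMemoryPropertyFlagBits as defined by the Vulkan specification.
MEMORY_PROPERTY_BITS = {
    0x01: "DEVICE_LOCAL",
    0x02: "HOST_VISIBLE",
    0x04: "HOST_COHERENT",
    0x08: "HOST_CACHED",
    0x10: "LAZILY_ALLOCATED",
}


def decode_property_flags(flags):
    """Return the names of all property bits set in `flags`."""
    return [name for bit, name in MEMORY_PROPERTY_BITS.items() if flags & bit]


def decode_type_mask(mask):
    """Return the memory type indices whose bit is set in `mask`."""
    return [index for index in range(32) if mask & (1 << index)]


if __name__ == "__main__":
    print("Mem flags 0x7   ->", decode_property_flags(0x7))
    print("Mem types 0x681 ->", decode_type_mask(0x681))
```

Under that assumption, the failing request above asks for memory that is device local, host visible and host coherent at the same time, and it may be placed in memory types 0, 7, 9 or 10.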

I also somehow doubt that this can be fixed within DXVK since it's the vkAllocateMemory calls that are failing for no apparent reason, no matter which memory type we're trying to allocate from.

As mentioned, I haven't actually run into this one myself with DXVK in Proton 4.2-7. But assuming that D9VK still shares the same memory allocation code, something changed in the last 10 days that made it highly sensitive. Maybe there is a hint there.

There are a few people with Proton having similar crashing issues: https://www.protondb.com/app/729040

Well, found a little snippet to allocate VRAM via CUDA.

https://devtalk.nvidia.com/default/topic/726765/need-a-little-tool-to-adjust-the-vram-size/

    #include <stdio.h>
    #include <cuda_runtime.h>

    int main(int argc, char *argv[])
    {
        unsigned long long mem_size = 0;
        void *gpu_mem = NULL;
        cudaError_t err;

        // get amount of memory to allocate in MB, default to 256
        if (argc < 2 || sscanf(argv[1], " %llu", &mem_size) != 1) {
            mem_size = 256;
        }
        mem_size *= 1024 * 1024; // convert MB to bytes

        // allocate GPU memory
        err = cudaMalloc(&gpu_mem, mem_size);
        if (err != cudaSuccess) {
            printf("Error, could not allocate %llu bytes.\n", mem_size);
            return 1;
        }

        // wait for a key press
        printf("Press return to exit...\n");
        getchar();

        // free GPU memory and exit
        cudaFree(gpu_mem);
        return 0;
    }

Needs cuda-dev-kit from nVidia (or distro). Compile with:

nvcc gpufill.cu -o gpufill

That way you can allocate and "spend" vram without actually spending it.. What happened if i spend 6GB vram, was that WoW started as normal, and did not crash even tho after running around a bit and zoning++ vram was topped out at 7.9GB+ on my 8GB card. Did not crash, not notice any huge issues, but did not test more than maybe 10-15 minutes.

However, when i used "gpufill" (./gpufill 7000) to occupy 7GB of vram BEFORE starting WoW, something was clearly pushed to system ram instead, cos the performance was horrible. But i still did not crash from that.

Screenshot:

Closing "gpufill" by pressing enter did release 7GB of vram according to nVidia-smi, but there was no change in WoW performance. This at least indicates that data moved from vram to system ram does not "transfer" back to actual vram even if vram is freed later. That may well be intended tho, but from what i gather even this experiment did not immediately crash WoW, so the crashing might not REALLY be actual memory allocation problems due to memory starvation.

The "shared memory" thing between vram<->sysram probably does not work the same way that swap does i guess? Ie. in a memory starving situation things gets put to swap on disk, but once memory gets freed, it does not continue to be used from swap. I have no clue what is supposed to happen in a situation like that tho?

Will do some more testing with this, and with the latest https://github.com/doitsujin/dxvk/commit/138dde6c3d4458a1d262093b93773b6a90090c40

https://github.com/doitsujin/dxvk/commit/138dde6c3d4458a1d262093b93773b6a90090c40

seems an improvement so far.

Doing the same test as above with 7GB memory allocated with "gpufill", WoW loaded and had a lot higher fps, although some stuttering and framespikes.. closing "gpufill" to release 7GB vram brought the frametimes down, and fps up. Fairly playable, but i noticed GPU load was still 90%+ vs if normally where i was standing it usually is 45-50% with 30+ more fps.

So for the little testing i did, https://github.com/doitsujin/dxvk/commit/138dde6c3d4458a1d262093b93773b6a90090c40 did help on performance when in a out of vram situation.

EDIT: Clearing the .nv/GLCache folder and WoW/_retail_/Cache folder brought back the same "issues" as https://github.com/doitsujin/dxvk/issues/1100#issuecomment-504068676 it seems..

One other thing i noticed was nVidia-smi seemed to indicate less vram usage from WoW. Is this due to "reusing chunks" so that "actual" vram is not so much?

Since i am an incredibly slow learner, and a n00b.. Let me just ask this to TRY to get my head around this "allocated" thing.

The Cuda app i posted above "allocates" vram from "actual" vram. If i have 7800MB free vram, i can allocate 7800MB, but if i try to allocate 7900MB i get "Error, could not.."

So, when i open eg. firefox, it uses (according to nVidia SMI) 79MB. When i play WoW at my current resolution/settings, the app uses 1880'ish MB. This does not vary much, but may vary with spell effects, and possibly when changing "worlds" (ref. expansions and different texture details and whatnot).

Simple math according again to nVidia SMI, 1880 (wow) + 79 (firefox) = 1959mb. This means i can allocate 6GB (well.. i could allocate 5960MB with the cuda app).

Reading from DXVK HUD, the "allocation" is 4500+ MB. What is this "allocation", and is this "unlimited"? Is the allocation limited by vram + system ram? (in my case 8 + 16 = 24GB)

From the little tests i have done, it is atleast clear that the "allocated" and "used" listed on dxvk hud does not in any way limit me allocating vram with the cuda app, or starting chrome or whatnot. The only thing that actually spew an error message is if i try to use the cuda app to allocate > available vram.

What i don't know is what is supposed to happen with this "dxvk allocation" if physical vram is full. From the tests it SEEMS as if it will happily use system ram (as i guess this is the intended function). The "allocation" and "used" do not change, but WoW (according to nVidia SMI) uses less physical vram if the game is started in a vram starved situation vs. not.

What was rather clear tho, is that it can seem as if once any actual data (textures and whatnot) is put in the system ram, it stays there for some reason. The tests with really starved vram makes the GPU usage 99%, and fps.. a LOT less even after i kill the cuda app, even if i then get 5GB free physical vram.

Would it not be ideal if allocation blocks could be freed or moved to vram once vram is free? Or is that not a feature available to vulkan.. or perhaps a driver thing that things dont get "transfered"?

Would it not be ideal if allocation blocks could be freed or moved to vram once vram is free?

Indeed, but that would require recreating all Vulkan resources that are in system memory, as well as all views for those resources. This is an absolute nightmare, and I have no plans to do that.

DXVK can let the driver do the paging so that it doesn't have to recreate any resources, however that only works on drivers which support VK_EXT_memory_priority and allow over-subscribing the device-local memory heap. On Linux, this currently only works on AMD and possibly Intel drivers.

SveSop, have you tried completely disabling GLCache with __GL_SHADER_DISK_CACHE=0?

DXVK can let the driver do the paging so that it doesn't have to recreate any resources, however that only works on drivers which support VK_EXT_memory_priority and allow over-subscribing the device-local memory heap. On Linux, this currently only works on AMD and possibly Intel drivers.

Since this extension IS available for Windows and nVidia, hopefully this COULD be a thing for Linux as well.

IF this happens, would this help in situations like this? Cos to me it kinda seems like somewhat of a drawback if resources ever get put in system ram and never moved back. I wonder if this is somewhat related to what i have tried to describe before - After playing a while (2-3hours +), the performance is worse (less fps) standing at the same spot, but restarting the game will gain back the same performance i had earlier.

Maybe over time some stuff gets bumped to sysmem due to the "allocated memory" actually allocating memory outside of vram and decides to put some shit there? Cos as i have kinda proven above - allocation does not seem to have anything AT ALL to do with available vram.

Is it up to the driver not to mess this up? If i have 2GB physical vram, and DXVK allocates 4.5GB, it is feasible to think 2.5GB of that is allocated in system ram, but if i have 8GB vram, it "should" be allocated in vram... but that does not seem to be the way things actually works i guess. Can one blame the driver for putting stuff "where it seems fit", assuming VK_EXT_memory_priority extension is not available?

So I assume there aren't any possible ways to temporarily fix this? (aside from reinstalling Windows...) I'm at a point in the game where I can't progress because it always crashes when loading a section of the last available mission, which is a bummer.

Let me know if there are any settings you'd like me to try, logs etc. I'd like to help resolve this if possible, but I'm not very familiar with code.

Total number of allocations can be limited, not only their size.

The limit is something like 4 billion on Nvidia's desktop driver.

That said, even if it was 4096 I'd be surprised if DXVK ran into the issue, the memory allocator is designed to only do a few hundred allocations at most.

I had the same problem with Fallout4 + Proton 4.2-7 + GTX960. The PC froze every 30-40 min, however the problem got fixed after disabling TRANSPARENT_HUGEPAGES. Try putting transparent_hugepage=never into the linux kernel options (grub.cfg).

PC crash or game crash with memory allocation errors?

Doesn't this slightly decrease performance with it off?

I had the same problem with Fallout4 + Proton 4.2-7 + GTX960. The PC froze every 30-40 min, however the problem got fixed after disabling TRANSPARENT_HUGEPAGES. Try putting transparent_hugepage=never into the linux kernel options (grub.cfg).

Can't say I'm surprised, I used to use automatic huge page for tmpfs (with huge=within_size) and it frequently led to my PC fully freezing (randomly) when doing things like building software on tmpfs (took me a while to realize it was the problem). That made me lose faith in the thing and I disabled huge pages completely. The idea behind it isn't bad though, but I'd rather stay away for a while (could be fixed though, I know huge pages are actively being worked on). Transparent huge pages is however a default on a lot of distributions, I'd assume it "usually" works fine, but wine and games perhaps lead to more unusual use-cases.

This doesn't sound like it's related to this issue though.

@ionenwks

No problems for me w/ transparent huge pages enabled.

$ zgrep TRANSPARENT_HUGE /proc/config.gz

CONFIG_HAVE_ARCH_TRANSPARENT_HUGEPAGE=y

CONFIG_HAVE_ARCH_TRANSPARENT_HUGEPAGE_PUD=y

CONFIG_TRANSPARENT_HUGEPAGE=y

CONFIG_TRANSPARENT_HUGEPAGE_ALWAYS=y

# CONFIG_TRANSPARENT_HUGEPAGE_MADVISE is not set

CONFIG_TRANSPARENT_HUGE_PAGECACHE=y

Also, I prefer "huge" ccache on regular hard drive, rather than tmpfs for building software.

Off-topic TL;DR

Even on my ancient PC everything builds very quickly (updates and subsequent rebuilds). I also use emerge --exclude="package/name" ... to control build times, and I usually doing (re)builds during my rest/sleep times.

Well, this PC got configured over time, I can even build/load/etc. when doing other things -- no freezing, no glitches, even w/o any kernel "interactivity" patches. If there is still any free RAM/Swap, and even then I can do SysRqs. No hard locks ever happen.

So, my usual workflow is switching between workspaces where different things are running and only limitation is the free RAM (my swap in tmpfs, x16 times smaller then the whole ram size :) ). Sometimes there's a game running in background, utilizing ~100% CPU/GPU, and I don't lose DE interactivity while doing other things in the mean time.

\ ~FYI

Maybe I'll check if transparent_hugepage=never changes anything, on next reboot.

Off-topic TL;DR

Maybe I'll check if transparent_hugepage=never changes anything, on next reboot.

You can just echo never >/sys/kernel/mm/transparent_hugepage/enabled

However, I did that + kernel param and it seemed to help for 20-30 minutes, but then I crashed again. Not sure if that's luck or not.
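If you want to confirm which transparent hugepage mode is actually active after the `echo` or the kernel parameter, the sysfs file lists all modes and puts the active one in square brackets (for example `always madvise [never]`). A minimal Python sketch for reading it follows; the function name `active_thp_mode` is just an illustrative helper.

```python
import re

THP_PATH = "/sys/kernel/mm/transparent_hugepage/enabled"


def active_thp_mode(text):
    """Return the bracketed (active) mode from text like 'always [madvise] never'."""
    match = re.search(r"\[(\w+)\]", text)
    return match.group(1) if match else None


if __name__ == "__main__":
    try:
        with open(THP_PATH) as f:
            print("transparent_hugepage:", active_thp_mode(f.read()))
    except OSError:
        print("transparent hugepage setting is not exposed on this system")
```

Posting this value together with the crash logs would make it easier to tell whether hugepages were really off for a given test run.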

I have been able to mitigate the issue as @doitsujin suggested by having everything i don't need closed. So basically i just have desktop environment running (Gnome here) plus lutris and the game. Anything else opened and sooner or later Bloodstained eventually crashes.

I placed __GL_AllowPTEFallbackToSysmem=1 in Lutris as @telans did.

Bloodstained still crashes after moving a few screens.

performance is pretty much the same though

@Rugaliz you tried that out with the 418.74 driver, correct? Would you be able to try the Vulkan Developer Beta 418.52.10 driver?

You won't need to use the __GL_AllowPTEFallbackToSysmem environment variable with that driver. Let me know if that works without you needing to close your other applications.

I placed __GL_AllowPTEFallbackToSysmem=1 in Lutris as @telans did.

Bloodstained still crashes after moving a few screens.

performance is pretty much the same though

@Rugaliz you tried that out with the 418.74 driver, correct? Would you be able to try the Vulkan Developer Beta 418.52.10 driver?

You won't need to use the __GL_AllowPTEFallbackToSysmem environment variable with that driver. Let me know if that works without you needing to close your other applications.

Unrelated, but do you know when changes in that branch will make it up to the mainstream drivers? There's a few other changes/fixes there that are quite useful for DXVK/D9VK.

I've been playing around with the simple code from SveSop above and can replicate crashing of it, problem is it's been random. What window managers are being used? One thing I've noticed with newer drivers is kwin randomly causing corruption in anything GPU related like mpv while X swallows a lot of GPU memory. Example

    | NVIDIA-SMI 430.14       Driver Version: 430.14       CUDA Version: 10.2     |
    |-------------------------------+----------------------+----------------------+
    | GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
    |===============================+======================+======================|
    |   0  GeForce GTX 108...  Off  | 00000000:43:00.0  On |                  N/A |
    |  9%   51C    P0    87W / 280W |   9859MiB / 11178MiB |      4%      Default |
    +-------------------------------+----------------------+----------------------+

    +-----------------------------------------------------------------------------+
    | Processes:                                                       GPU Memory |
    |  GPU       PID   Type   Process name                             Usage      |
    |=============================================================================|
    |    0     11517      G   X                                           8217MiB |
    |    0     38149      G   kwin                                         467MiB |
    |    0     68423      G   mpv                                           10MiB |
    |    0     89658      G   ...quest-channel-token=4620640961200869647    65MiB |
    |    0     98390      G   ...quest-channel-token=4771647170898914487   963MiB |
    +-----------------------------------------------------------------------------+

89658 and 98390 are Discord, doing what I have no idea. Point is it's quite possible to have rather large resource swings and quickly. Kwin

I placed __GL_AllowPTEFallbackToSysmem=1 in Lutris as @telans did.

Bloodstained still crashes after moving a few screens.

performance is pretty much the same though

@Rugaliz you tried that out with the 418.74 driver, correct? Would you be able to try the Vulkan Developer Beta 418.52.10 driver?

You won't need to use the __GL_AllowPTEFallbackToSysmem environment variable with that driver. Let me know if that works without you needing to close your other applications.

Doesn't change anything for me going from 430.26 to 418.52.10

I have reproduced errors:

WorldOfTanks_d3d11.log

WorldOfTanks_dxgi.log

DE: XFCE, memory allocation during start game ~95MB, unfortunately i didn't catch memory use during crash.

And here is full run log with all start params:

run.log

I was experiencing exactly the same memory allocation errors with Final Fantasy XIII and D9VK (it was impossible to load a savegame). The setting which helped was d3d9.evictManagedOnUnlock = True in dxvk.conf. Maybe DXVK needs something similar ?

D3D11 has no concept of managed memory, so the D9VK option does not apply here.

Also it's quite likely that you are simply running out of 32-bit address space in Final Fantasy XIII, I sometimes have that problem even with wined3d and d9vk makes it even worse.

I'm running into some issues reproducing this locally with 430.26. Could someone who has a fairly consistent repro try today's DXVK release? It looks like @doitsujin added some extra logging for failed allocations that might help provide a better picture of what's going on.

I've tried with _Bloodstained: Ritual of the Night_ and _World of Tanks_ and I'm running latest DXVK (from Git) against Proton 4.2-8

@liam-middlebrook Borderlands GOTY Enhanced debug logs with dxvk built from afe2b487a62cc62246926e11723a0277ecc42aca:

I can't see anything different from my previous logs unfortunately

@liam-middlebrook

I'm not sure my logs are relevant, but just in case: dxvk-superposition-1280x720-crash.zip

All data collected while the crash dialog window is up (while application still running).

Unigine Superposition benchmark with higher quality textures (-textures_quality 2), just to fill the whole VRAM (2GB).

RAM: ~300MB free (~5GB in caches/buffers) before the test.

After cleaning caches I was able to launch the benchmark with the same params, DXVK HUD reports ~2700/2800MB used/allocated memory.

RAM: ~6GB free before the test / ~2GB free during the test

_I kind of understand and can accept such behaviour, but ... it looks like "something" just checks free mem, but not trying to allocate it. In opposite case more free RAM should be pushed out of caches by the system ... Well, I'm not good in technical details (and English)._

@pchome

_In opposite case more free RAM should be pushed out of caches by the system ... Well, I'm not good in technical details (and English)._

This is kind of what i have been trying to ask aswell. I do not really know how vram<->systemram (shared system ram) kinda interlink when it comes to this. I kinda have a idea how this works with system memory and swap tho, and what i believe there is that the system will move "less used shit" to swap when you get in a low system memory state (kernel tunable, but even so).

If you THEN close whatever ram hungry app and start accessing apps that have memory on swap, this will be moved back to free system memory again. Might not happen immediately (and probably kernel tunable too), but you wont end up in a situation where everything is running like a -386 cos its constantly reading from swapfile, with 12GB unused physical memory.

As i said a few posts up, it might NOT be intended to work this way when it comes to vram and shared-system ram and whatnot for graphics related apps... but if it IS intended that free vram means "move data from system ram -> vram" (like it happens with swap), this does clearly not happen with DXVK.

Is it a "system" thing? "Driver" thing? "Vulkan" thing? "Intended behavior" thing? :)

Googled an example how to quickly fill cache : find . -type f -exec cat {} + > /dev/null

So, just run watch free -m in the one terminal and the "find" command in another, then stop the "find" command when "free" mem will be small enough. Then try to use DXVK.

This should help systems w/ "a lot of" RAM quicker reach the behaviour described above.

ref: Experiments and fun with the Linux disk cache

_p.s. the command just "reads" files from current directory into /dev/null, even if it looks scary._

Here are my logs from Frostpunk which is constantly crashing in ~20 minutes:

Frostpunk_dxgi.log

Frostpunk_d3d11.log

Kernel: 4.19.49-1-MANJARO

Proton 4.2-9

CPU: AMD Ryzen 5 1600 Six-Core Processor

GPGPU: NVIDIA Corporation GeForce GTX 960 (2GB)

Driver: 4.6.0 NVIDIA 430.14

RAM: 16053 MB

Game: VALKYRIE DRIVE -BHIKKHUNI- (steam id 550080)

Game randomly crashes during loading with (err: DxvkMemoryAllocator: Memory allocation failed)

I looked into logs and decided that it's better to go with this problem directly to dxvk issues.

Proton log: steam-550080.log

VD_BHIKKHUNI_dxgi.log

VD_BHIKKHUNI_d3d11.log

Tell me if I missed something.

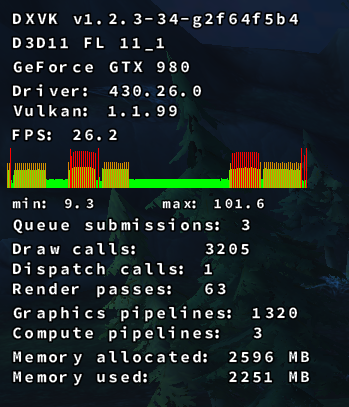

I get a pretty guaranteed crash now when loading into World of Warcraft. Saw you added more debugging info in the latest commits so I did a build from master in hopes that I could be helpful in locating the issue.

Kernel: 5.1.15-arch1-1-ARCH

Cpu: AMD Ryzen 7 2700X

Gpu: GeForce GTX 980

Driver: 430.26.0

Vulkan: 1.1.99

Wine version: ge-protonified-4.10-x86_64

I set all the debugging environment variables I could see in the readme. Tell me if I'm missing anything, I'm fairly certain that I can reproduce the crash by logging in to the same character.

info: Memory Heap[0]:

info: Size: 4096 MiB

info: Flags: 0x1

info: Memory Type[7]: Property Flags = 0x1

info: Memory Type[8]: Property Flags = 0x1

info: Memory Heap[1]:

info: Size: 12025 MiB

info: Flags: 0x0

err: DxvkMemoryAllocator: Memory allocation failed

Size: 13824

Alignment: 256

Mem flags: 0x7

Mem types: 0x681

err: Heap 0: 405 MB allocated, 309 MB used, 406 MB allocated (driver), 1189 MB available (driver), 4096 MB total

err: Heap 1: 128 MB allocated, 127 MB used, 132 MB allocated (driver), 12025 MB available (driver), 12025 MB total

So, how does one interpret this data? 1189 MB available vram and 12025 MB available system ram, and still "Memory allocation failed"?

Very small allocation with 405MB tho, so that does not seem right i guess. I tend to easily top atleast 4GB allocated, but depending on settings, you might have 3'ish GB "allocated".

Also, why is 128MB allocated from "Heap 1"? (System memory) in the first place? Is the driver responsible for this, or is the numbers not right when it comes to this log (hence my question about only 405MB allocated from vram).

Perhaps more to it than this?

EDIT: I wonder about the "size" of 13824? Is this 1189MB + 12025MB + 406MB + 132MB = 13752MB? How come this is CLOSE to the value? Free + allocated = total?

EDIT: I wonder about the "size" of 13824?

That's the number of bytes it was trying to allocate (so ~13kB). The actual allocation from the driver however will be 64MB.

The log may be a bit confusing because the "available" value corresponds to a budget, not an amount of memory that is still free to allocate. The difference is that the budget includes allocations that were already made.

Looking at SveSop's log:

err: Heap 0: 405 MB allocated, 309 MB used, 406 MB allocated (driver), 1189 MB available (driver), 4096 MB total

That suggests that there is 1189-406 = 783MB of free memory on the GPU, yet a 64MB allocation is failing. This makes little sense, unless the memory budget was queried after some application consuming a lot of VRAM was killed.
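The budget arithmetic above can be applied to any heap line in the newer log format with a few lines of Python. This is only a sketch: the regular expression is written against the exact log lines quoted in this thread, and `remaining_budget_mb` is an illustrative helper name, not part of DXVK.

```python
import re

# Matches the extended heap lines quoted in this thread, e.g.
# "err: Heap 0: 405 MB allocated, 309 MB used, 406 MB allocated (driver), ..."
HEAP_LINE = re.compile(
    r"Heap (?P<heap>\d+): (?P<allocated>\d+) MB allocated, (?P<used>\d+) MB used, "
    r"(?P<driver>\d+) MB allocated \(driver\), "
    r"(?P<budget>\d+) MB available \(driver\), (?P<total>\d+) MB total"
)


def remaining_budget_mb(line):
    """Return (heap index, budget minus driver-reported allocation) or None.

    The "available" value is a budget that already includes this process's
    own allocations, so what is left is budget - allocated (driver).
    """
    m = HEAP_LINE.search(line)
    if m is None:
        return None
    return int(m["heap"]), int(m["budget"]) - int(m["driver"])


if __name__ == "__main__":
    line = ("err: Heap 0: 405 MB allocated, 309 MB used, 406 MB allocated (driver), "
            "1189 MB available (driver), 4096 MB total")
    print(remaining_budget_mb(line))
```

Older logs that lack the "(driver)" fields do not match the pattern and return `None`, since the budget cannot be derived from them.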

It could be a fragmentation issue. 4096 MB = 64 blocks of 64 MB. Imagine that 32 MB is allocated in every 64 MB block: we then have 2048 MB free, but we can't allocate a single 64 MB block.
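The scenario can be sketched as a toy model in Python. This assumes VRAM behaves like one flat, linear address space with no remapping, which may well not be how the driver actually works; the helper `largest_free_run` is hypothetical.

```python
def largest_free_run(vram):
    """Length (in MB) of the longest run of consecutive free megabytes."""
    best = run = 0
    for used in vram:
        run = 0 if used else run + 1
        best = max(best, run)
    return best

# 4096 MB modelled as 64 slots of 64 MB, one list entry per megabyte.
# In every slot the first 32 MB are in use (True) and the last 32 MB are free (False).
vram = []
for _ in range(64):
    vram += [True] * 32 + [False] * 32

total_free = vram.count(False)
print(total_free)              # 2048
print(largest_free_run(vram))  # 32
```

Half of the memory is free in this model, yet the largest contiguous hole is 32 MB, so a 64 MB request could not be placed.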

:thinking: I want an UKSM + auto compaction for VRAM.

3b1376b2feba0bed66fd3581766bf8c357a33ecc increases the chunk size to 128 MB (from 64), please test if this changes anything. Here's a build:

dxvk-master.tar.gz

Unfortunately, Total War: Rome II continues to crash on extreme settings at the beginning of a new game:

`sun_direction

Imposter quality 3 (size 2048, step 0.05): 156Mb

sun_direction

d3d call failed (0x80004001) : unspecified

d3d call failed (0x80004001) : unspecified

d3d call failed (0x80004001) : unspecified

d3d call failed (0x80004001) : unspecified

d3d call failed (0x80004001) : unspecified

err: DxvkMemoryAllocator: Memory allocation failed

Size: 251658240

Alignment: 256

Mem flags: 0x7

Mem types: 0x681

err: Heap 0: 2096 MB allocated, 1883 MB used, 2136 MB allocated (driver), 10755 MB available (driver), 11264 MB total

err: Heap 1: 1140 MB allocated, 908 MB used, 1144 MB allocated (driver), 24062 MB available (driver), 24062 MB total

terminate called after throwing an instance of 'dxvk::DxvkError'

abnormal program termination

`

Rome2_d3d11.log

Rome2_dxgi.log

@ahuillet

Looking at SveSop's log:

err: Heap 0: 405 MB allocated, 309 MB used, 406 MB allocated (driver), 1189 MB available (driver), 4096 MB total

That suggests that there is 1189-406 = 783MB of free memory on the GPU, yet a 64MB allocation is failing. This makes little sense, unless the memory budget was queried after some application consuming a lot of VRAM was killed.

Just to clarify, it is not my log, but a snippet from the log JonasKnarbakk posted above. Anyway, the error messages and questions are mostly the same.

@lieff

It could be a fragmentation issue. 4096 MB = 64 blocks of 64 MB. Imagine that 32 MB is allocated in every 64 MB block: we then have 2048 MB free, but we can't allocate a single 64 MB block.

So this raises the question of why it needs to be like this. It is perhaps rather complicated to "defragment" those blocks, and this might not even be feasible, I guess. Then what about "Memory Heap[1]" (system memory): would it not start allocating blocks from sysmem (like I suspect is happening over time)? So in a 8GB vram/16GB sysram situation, IS it really possible to use 24GB of allocations for a game that is using tops of <2GB vram?

If the latter is the case, this SURELY has to be something not intentional.

Looking at the DXVK HUD, the allocations when playing e.g. World of Warcraft are around 4GB, and I don't think I have really seen >5GB. What would be the worst case scenario then with a total of 24GB combined RAM? You should have 384 blocks of 64MB in that situation (now, with the latest build, 192 blocks of 128MB).

I am struggling a bit to figure out how this "allocation" is actually tracked in the system. Is there a way to view "system allocation" per app and a total value some place in Linux? pmap -x pid? Could someone clever please pop in with a copy&paste line that gives human-readable output to "confirm" the allocation of DXVK (outside of the HUD)?

So in a 8GB vram/16GB sysram situation, IS it really possible to use 24GB of allocations for a game that is using tops of <2GB vram?

As you can see, there is something like (total RAM)-25% available ...

Fragmentation doesn't sound like a very probable scenario here, but the best thing to focus on at this point is to obtain a consistent reproduction with a minimal set of variables.

Once this is available, it becomes possible to debug inside the driver to find out the origin of the memory allocation failure.

I'm also curious to see the contents of dmesg after someone reproduces the problem, as well as the output of nvidia-smi.

@doitsujin

Would it be possible (and does it make sense) to create a "self test" that tries to allocate a requested amount of memory from all available heaps?

For example, dxvk.selfTest = 2048 in dxvk.conf would try to allocate 2GB of VRAM and RAM, and then either continue to execute or exit if the allocation failed. Or something like that.

@pchome what is that supposed to accomplish and how is that in any way a clean solution to the problem? Why 2048 MB? Why should every user have to enter an arbitrary number into the configuration file?

@ahuillet

I'm also curious to see the contents of dmesg after someone reproduces the problem, as well as the output of nvidia-smi.

The "reproduce" part is the problem, but the times this HAS happened, there was nothing special listed in nvidia-smi. The last time this happened I had nvidia-smi up listing loads and RAM usage, and I had 6GB of VRAM free. Nothing was "chugging" VRAM, but there might be some nvidia-smi options available that can give more details? I just run nvidia-smi --loop-ms=500 to refresh the stats when I check something.

@doitsujin

how is that in any way a clean solution to the problem?

Not a solution, just for testing.

Why 2048 MB?

Just an example.

Why should every user have to enter an arbitrary number into the configuration file?

Just for testing, e.g. after fail I could pick "allocated" err: Heap 0: 2096 MB allocated, ... and could pass this or bigger value to self-test, just to check if it will fail again. For example.

Just a suggestion. Forget about this.

So I tried logging in with the same build as I have given logs for earlier (3b128179ab): crash

Tried the dxvk-master build from doitsujin (3b1376b2fe): crash

Checked out the newest commit (2f64f5b4e7): Loaded into the game this time, though the fps is really low compared to what it was before this crash issue started occurring.

3b1376b increases the chunk size to 128 MB (from 64), please test if this changes anything. Here's a build:

Before I go to bed, I just want to ask a couple of quick questions that you will probably think are so stupid that you wonder how I am even able to log into my Linux account, let alone a webpage like GitHub.

In a "suspected low memory situation", how does logic dictate "Hey, let's increase the allocation from 64 -> 128 MB so it uses more memory"? Or was it just a test to see if people crashed more often when that happens?

What does

constexpr VkDeviceSize MinChunkCount = 16;

indicate? Other than that, when I lowered that number to 2, I had (of course) less memory allocated. Is it for better performance, since doing the allocation uses more resources than placing whatever shaders in an already allocated chunk?

I did some tests in WoW (was not able to crash the game tho, but did not test for hours ofc). I logged in and zoned around a couple of places.

Test 1: 128 MB max chunk size, 2 chunks - 3152 MB allocated / 2503 MB used

Test 2: 32 MB max chunk size, 2 chunks - 2847 MB allocated / 2447 MB used

Test 3: 16 MB max chunk size, 2 chunks - 2804 MB allocated / 2463 MB used

I understand this might not be a problem for THIS game (WoW), but does having low max chunk size cause problems in games with large textures and such?

And lastly - the wording indicates "MaxChunkSize", but is it? Or should it be "ChunkSize", since I think you said someplace, more or less, that "the chunks are 64MB" (well, with this patch I guess 128MB)?

Again, sorry for not understanding obvious elementary things, but i am positive and try to learn :)

Having a larger chunk size may reduce external fragmentation, which appears to be a bit of a problem on some drivers. On the other hand you'll see increased internal fragmentation, but also reduced queue submission overhead on some other drivers.

It's a tradeoff, and both 128 MB and 64 MB look like reasonable picks; we've had 32 MB in the past but that turned out to be too small, especially with games happily filling up 6-8 GB of VRAM these days.

What does

constexpr VkDeviceSize MinChunkCount = 16;

indicate?

It basically caps the chunk size for very small heaps, so that we can at least allocate 16 different chunks. This is important on AMD GPUs, where there's a 256MB device-local+host-visible heap which gets used a lot by DXVK, and also for integrated GPUs, which tend to have small amounts of dedicated memory (768 MB on my Kaveri notebook).
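As a rough illustration of that cap, here is a Python sketch. It is not DXVK's actual code: the halving loop, the function name `pick_chunk_size` and the constants are assumptions chosen so that at least 16 chunks always fit into a heap.

```python
MIB = 1024 * 1024
MAX_CHUNK_SIZE = 128 * MIB  # chunk size mentioned in this thread after the change
MIN_CHUNK_COUNT = 16        # mirrors the MinChunkCount constant quoted above

def pick_chunk_size(heap_size):
    """Halve the chunk size until MIN_CHUNK_COUNT chunks fit into the heap (assumed policy)."""
    chunk = MAX_CHUNK_SIZE
    while chunk * MIN_CHUNK_COUNT > heap_size:
        chunk //= 2
    return chunk

for heap_mib in (256, 768, 4096):
    print(heap_mib, pick_chunk_size(heap_mib * MIB) // MIB)
```

Under these assumptions a 256 MB heap ends up with 16 MB chunks, a 768 MB heap with 32 MB chunks, and a 4096 MB heap keeps the full 128 MB chunk size.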

Possibly related?

Unfortunately I know of no way to see -WHAT- is allocated, so I don't have much to contribute as to why. I have however run into two crashes this week alone where I should have had GPU memory yet allocations were failing. I've been able to duplicate entirely random allocation failures using the cuda script above. The card itself would take up to a few minutes to respond to nvidia-smi, though it showed very little GPU use.

I was seeing this in SteamVR too.

Thu Jun 27 2019 15:15:35.527841 - CVulkanVRRenderer::CreateTexture - Unable to allocate memory

Thu Jun 27 2019 15:15:35.527875 - Failed to create new shared image: format=37 dimensions=1912x2124

Thu Jun 27 2019 15:15:35.656027 - CVulkanVRRenderer::CreateTexture - Unable to allocate memory

Thu Jun 27 2019 15:15:35.656104 - Failed to create new shared image: format=37 dimensions=1912x2124

Thu Jun 27 2019 15:15:35.800281 - CVulkanVRRenderer::CreateTexture - Unable to allocate memory

Thu Jun 27 2019 15:15:35.800309 - Failed to create new shared image: format=37 dimensions=1912x2124

Thu Jun 27 2019 15:15:35.805583 - CVulkanVRRenderer::CreateTexture - Unable to allocate memory

Having a larger chunk size may reduce external fragmentation, which appears to be a bit of a problem on some drivers. On the other hand you'll see increased internal fragmentation, but also reduced queue submission overhead on some other drivers.

It's a tradeoff, and both 128 MB and 64 MB look like reasonable picks; we've had 32 MB in the past but that turned out to be too small, especially with games happily filling up 6-8 GB of VRAM these days.

Yeah, probably what i kinda thought of sorts. Would it be a feasible idea to have this as a "game tunable" tho? With todays values as "default", and dxvk.conf tweakable if it is found to be of benefit for certain games/drivers? This might not be the issues with the memory allocation AT ALL tho, and if it is basically no benefit of tuning this for anyone its fine as it is. I am going to do some more testing just in case anyway, but so far no definite result.

What does

constexpr VkDeviceSize MinChunkCount = 16;

indicate?

It basically caps the chunk size for very small heaps, so that we can at least allocate 16 different chunks. This is important on AMD GPUs, where there's a 256MB device-local+host-visible heap which gets used a lot by DXVK, and also for integrated GPUs, which tend to have small amounts of dedicated memory (768 MB on my Kaveri notebook).

Hmm.. I dont really have a proper grasp of heap, chunk and blocks, but i thought it kinda could be like this:

Heap 0: Device memory (vram)

Heap 1: Some mix between vram and sysram?

Chunk: A "portion" of heap memory "set aside" (Ie. 128MB currently)

Block: Portion of memory containing image/data - must reside inside a "Chunk".

Something like this? And if so, why would "Min"ChunkCount have anything to do with "size"? The word indicates that it is the minimum number of chunks created, and in the current case 16x128MB = 2048MB. My limited understanding then says to me that 2048MB of Heap 0 (VRAM) memory is "set aside" (allocated), and one has 16 pieces of 128MB "portions" to put various amounts and sizes of "blocks" of data inside. E.g. 20 images of 10MB each would fill 120MB of one "chunk", then 80MB of another "chunk". This leaves the first chunk a wee bit fragmented, since you cannot fit another image inside it.
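The packing example in that paragraph can be checked with a small first-fit sketch in Python. This is only an illustration of the arithmetic: `pack_first_fit` is a made-up helper, not the allocator DXVK uses.

```python
def pack_first_fit(sizes, chunk_size):
    """Place each block into the first chunk with enough room; return used MB per chunk."""
    chunks = []
    for size in sizes:
        for i, used in enumerate(chunks):
            if used + size <= chunk_size:
                chunks[i] += size
                break
        else:
            # No existing chunk has room, so a new chunk is opened for this block.
            chunks.append(size)
    return chunks

used = pack_first_fit([10] * 20, 128)
print(used)                       # [120, 80]
print([128 - u for u in used])    # [8, 48]
```

Twelve 10 MB images fill 120 MB of the first 128 MB chunk, leaving 8 MB of slack that no further 10 MB image fits into, and the remaining eight images take 80 MB of a second chunk.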

Probably completely bunkus and im not understanding this AT ALL tho, so sorry for that.

I can only assume its a costly affair to move a block from one chunk to another, and it should be avoided since it would ultimately eat resources doing that? I guess blocks gets freed once the image/shader whatever leaves the queue? But is freeing a chunk also a costly affair?

What i am aiming at is the reason why chunks gets created but never released. Is this just "common practice"? (Cos for a system comparison, this would really be considered a memory leak hehe).

I found something interesting with WoW, I think. At least I can't directly explain it.

With latest commit i seem to have just shy of 2.8GB memory allocated after zoning a bit back and forth to various worlds. Now setting dxgi.nvapiHack = False doing exactly the same will allocate around 3.1-3.2GB. Why is that?

The game detects i have switched "adapters", cos when i change between true/false a dialog box will pop up when logging in saying something in the line of "Hardware changed. Reload default settings?". I select NO, so that i use the same settings tho.

Why would memory allocation change by 3-400MB by doing this? Internal WoW tweaks that will use different set of textures if i have a nVidia adapter?

The nvapi calls being made by WoW when you have set to nVidia adapter (nvapiHack=false) is the call to what i believe is the "profile" stuff of nvapi. The same functions as this: https://github.com/doitsujin/dxvk/issues/853#issuecomment-507770326

Maybe some hidden graphic setting only usable if WoW detects a nVidia adapter?

PS. Just had a memory allocation crash when i logged in while i was testing this false/true options back and forth for some reason. Relogging worked fine.. Totally random :(

This seems to happen with Path of Exile too, I can't even log into a character as it is and get bombarded with allocation failures:

PathOfExile_x64_d3d11.log

PathOfExile_x64_dxgi.log

But then one user in the forums found out that passing the game a few launch flags (--waitforpreload --gc 2 --noasync) made it not crash anymore for him. I was able to reproduce his results and I think just --noasync is enough to stop it from crashing. The wiki describes this flag as follows: "Do not preload art assets on startup and disable background loading threads. Completely disable the asynchronous loading changes introduced in version 2.3.0." Perhaps that helps shed some light on the issue?

Edit: after some more thorough testing it seems even with those flags crashes are still possible, just significantly rarer

My specs are:

GeForce GT740, 1GB GDDR5, 430.14 drivers

Ryzen 5 1600

16 GB DDR4

kernel 5.1.8-1-MANJARO

wine staging 4.11

DXVK from https://github.com/doitsujin/dxvk/commit/c631953ab6852fb63c91dbc3385b482db2d09240

@SveSop

Now setting dxgi.nvapiHack = False doing exactly the same will allocate around 3.1-3.2GB. Why is that?

- nvapi depends on wined3d, which was created w/ opengl in mind. So, some functions may initialize additional structures ... Just diff your logs w/ WINEDEBUG="+loaddll,+nvapi,+wined3d,+opengl32" or so, to see what actually going on.

- Some engines picks base configuration profile based on CPU/GPU model (e.g. UE3 BaseCompat.ini)

- Some games using different shaders/settings for different GPUs

@pchome well first of all, the nvapi hack doesn't have anything to do with nvapi directly, it literally just reports Nvidia GPUs as an RX 480.

But yeah, if there are differences in memory usage / performance, that's on the game.

@doitsujin

well first of all, the nvapi hack doesn't have anything to do with nvapi directly, it literally just reports Nvidia GPUs as an RX 480.

Yes, but as in the case of UE4, when the hack is disabled, the game can then decide to use nvapi

- which will report GPU as "GTX 999" or so (if requested)

- which will obtain GPU memory size from wined3d (if requested)

That's what I mean.

@SveSop likely uses his own nvapi implementation, so things differ even more.

Yes, but my point is that not all games do that and that this isn't necessarily what's causing the WoW weirdness.

@pchome

That's what I mean.

@SveSop likely uses his own nvapi implementation, so things differ even more.

Yes, when doing testing i do. The only two called functions is as i said what i believe to be "getting profiles" from the nvidia gaming profiles you have in Windows, but since the actual call addresses for nvapi is under some NDA of sorts (i guess you need a nvidia dev account to get it, and i don't) i cannot directly verify that these calls that are just "NULL terminated" in staging nvapi actually IS those calls. (But i posted what i know in the other post i linked).

What those functions actually do is kinda beside the point.. i just wanted to mention that WoW probably uses some differences in quality/textures whatever between AMD and nVidia.

I started on a lengthy post last night, but was so tired i scrapped it. So i thought a bit more about this, and would like to ask a (dumb i guess) question.

When allocating memory, does this allocation happen in system ram, and then transfer whatever is needed to the vram? And if so, is this "by design", so that you ALWAYS will need to have a potential available systemram = vram to not get this problem in ALL vulkan applications?

Example: I have a vulkan app that display a image on-screen of exactly 8GB (yeah, i have a 40K screen or whatever resolution.. just for argument). Does this mean that the vulkan app will allocate 8GB of system memory, put that image there and THEN transfer it to vram to display on my uber high-res monitor?

I was experimenting with creating a ramdisk yesterday, and filling it with a file to the point of going VERY low on system RAM, and lo and behold, when DXVK did some allocations in a low system memory situation, it crashed rather than waiting for the system to pop stuff over to swap. Is there a "timing issue" here, where the DXVK memory allocator just times out too fast and throws this error, rather than waiting for the system to catch up?

This COULD explain why this would seem random at times, because in whatever occurrence makes the system sluggish (could be whatever really, just something that makes the "system" busy enough that the DXVK memory allocation would HAVE to wait), even though you have available system RAM, if the DXVK memory allocation has to wait x nanoseconds it throws this error. Could this be the case?

@doitsujin

WoW weirdness

Such "weirdness" is pretty common, especially for d3d9 games and D9VK. Games use mixed APIs at the same time (Vulkan, OpenGL, ...(?)), which causes issues very similar to those described above.

I have faced similar issues before (GTA IV, Batman AA, almost every "nvapi hack OFF" case, etc.).

https://github.com/Joshua-Ashton/d9vk/issues/90#issuecomment-494609218

https://github.com/Joshua-Ashton/d9vk/issues/90#issuecomment-495169048 (sorted +loaddll log )

Can't say what exactly is going on in WoW w/o WINEDEBUG tracing.

EDIT: Also, the GL and Vulkan driver parts are involved at the same time for the same application, if that matters.

I was having the same issue while playing (failing to play) WoW. After trying a lot of things (wine and dxvk versions, disabling nv hack) the following worked. I've been playing 10 minutes and WoW does not crash. It was crashing within minutes of launching the game or while loading.

Following were set in dxvk.conf

dxgi.customDeviceId = 11c6

dxgi.customVendorId = 10de

d3d11.maxFeatureLevel = 11_0

My system is:

Dxvk: 1.2.3

D3D11 FL 11_0

Driver: Nvidia 430.26.0 (GeForce GTX 650 Ti)

Vulkan: 1.1.99

Manjaro: 5.1.15-1-MANJARO

Edit: Crashed again. I noticed that Memory Allocated was 1128 MB (my card is 1024 MB) and when Memory used surpassed 1024 MB game crashed, but when it doesn't surpass the GPU memory limit game is okay.

I'll try to set dxgi.maxDeviceMemory = 1024 MB.

@panabar

I also can play for hours on end... after randomly hitting a "crash streak" of dxvk.. then it magically just works for a good while.

This randomly occurs even with loads of free vram/system ram. Keep playing while it works tho :)

BTW, once I accidentally figured out that disabling -fipa-pta GCC flag makes The Witcher 3 randomly crash w/ Memory Allocation error. _(I use -fipa-pta as system-wide compiler flag by default)_

https://github.com/doitsujin/dxvk/issues/798#issuecomment-444207165

Still too many "variables", and it was a while ago, but it may be worth checking.

-fipa-pta short description

EDIT:

Also, may be worth to check DXVK w/ clang-tidy again

Just random output for *bugprone* clang-tidy check:

../src/d3d11/../dxvk/dxvk_buffer.h:250:30: warning: loop variable has narrower type 'uint32_t' (aka 'unsigned int') than iteration's upper bound 'VkDeviceSize' (aka 'unsigned long') [bugprone-too-small-loop-variable]

for (uint32_t i = 0; i < m_physSliceCount; i++) {

BTW, once I accidentally figured out that disabling -fipa-pta GCC flag makes The Witcher 3 randomly crash w/ Memory Allocation error. _(I use -fipa-pta as system-wide compiler flag by default)_

#798 (comment)

No, it doesn't. I compiled DXVK with -fipa-pta for some time now, and the crashes remain. It's totally dependent on VRAM and system RAM usage. The memory allocation error may occur even when enough memory is free for the allocation. It's probably some memory fragmentation issue when the driver needs memory mapped contiguously and the system cannot currently provide that. My uneducated guess is this is why Linux huge pages make the problem much more prominent.

The best chance is to close down all applications/windows that make extensive use of render surface compositing via the GPU, that is most browsers. It can also help to disable compositing in your window manager.

So it's more likely you also accidentally closed some windows while you did your tests, or managed to accidentally optimize memory usage of those apps.

-fipa-pta does nothing that would change memory consumption or allocation behavior of a program (tho it may theoretically reduce the pressure on stack usage). You just recompiled stuff, that may have kicked in the kernel memory defragmenter, and the result was a more stable game. Or a combination of all the above.

Usually memory fragmentation isn't an issue... The CPU page tables will show allocated memory as a linear block of addressable space. But as soon as hardware is involved which needs to DMA such memory, it may need contiguous memory to do its job. And there's no layer of page tables involved to simulate linear address space. It has to be physically contiguous. The same goes for memory to be allocated within VRAM. I guess this is the underlying problem here.

I think this is also when an issue called "alloc stall" kicks in which can lead to severe stutter during gaming: It's the kernel rearranging and moving memory around to make space for the allocation request.

I'm not sure how to fix this - but using bigger chunks, as DXVK introduced in the latest commits, may improve this, though OTOH it leads to higher memory usage with more unused slack space. It could reduce the pressure of finding contiguous blocks immediately, because larger chunks of contiguous memory may have already been allocated, and the memory needed is more likely to fit into an existing chunk.

But this is only my uneducated guess, I'm not really sure how the NVIDIA driver (which is involved in my case) works here, and neither what's demanded from the kernel when doing such allocations.

I think this is also when an issue called "alloc stall" kicks in which can lead to severe stutter during gaming: It's the kernel rearranging and moving memory around to make space for the allocation request.

Since the memory allocation problem seems kind of random, and you cannot directly (at least not in an easy manner) verify that this thing happens, could there (as I kind of asked before) be a timing issue? Because if it were this, launching a game after using the system for a long time with multiple apps opening/closing would make this happen a LOT more often, but in my case I have not been able to verify this.

I sometimes have multiple browsers up, videos, compiling stuff in the background++ without issues, and other times i can experience this crash from a freshly booted system that ONLY have the game running.

Question: How long does DXVK "wait on the system" before crashing with a memory allocation error?

I'm not sure how to fix this - but using bigger chunks, as DXVK introduced in the latest commits, may improve this, though OTOH it leads to higher memory usage with more unused slack space. It could reduce the pressure of finding contiguous blocks immediately, because larger chunks of contiguous memory may have already been allocated, and the memory needed is more likely to fit into an existing chunk.

Well, the same could be said about using smaller allocations, as finding smaller chunks of contiguous memory would be easier too.. so i dunno.

Question: When does the allocation happen? "When it is needed"? How about some sort of "predictive allocation" where you would always have X amounts of allocations "ahead of what is currently needed" sort of thing? This would ofc lead to even more memory usage tho...

I would appreciate if you guys could stay even remotely on topic with your discussions, although I guess it's too late for this thread anyway.

Question: How long does DXVK "wait on the system" before crashing with a memory allocation error?

It doesn't wait at all; why would it? If a memory allocation fails, why would we expect it to succeed after an arbitrary amount of time if nothing else changes?

I would appreciate if you guys could stay even remotely on topic with your discussions, although I guess it's too late for this thread anyway.

I took a random gander up the thread, and i dont really understand why comments about memory allocation and theories and tests that have been done to try to figure out a persistent way to crash the game is NOT on topic for this? Talking about and trying to understand HOW memory allocation happens is IMO "on topic" to the topic of "Games crash on Nvidia due to memory allocation failures".

Question: How long does DXVK "wait on the system" before crashing with a memory allocation error?

It doesn't wait at all; why would it? If a memory allocation fails, why would we expect it to succeed after an arbitrary amount of time if nothing else changes?

I find it hard to believe that allocation while having 10GB of 16GB+2GB(swap) available memory will be so fragmented that the system is completely unable to provide a 128MB continuous block of memory. For other programs in memory you have "page faults" and other mechanics that sort this out. Is there no such thing in Vulkan/DXVK?

Does Vulkan memory allocation not follow regular system memory allocation with page faults and whatnot? https://scoutapm.com/blog/understanding-page-faults-and-memory-swap-in-outs-when-should-you-worry

Is there no such thing in Vulkan/DXVK?

No, since it's kernel-level stuff. It's not the application's job to sort that out, and you simply cannot do it because you have no control over it. Likewise, DXVK has no control whatsoever over physical memory fragmentation, and no control over allocations made by other applications at all.

Talking about and trying to understand HOW memory allocation happens is IMO "on topic" to the topic of "Games crash on Nvidia due to memory allocation failures".

No, technically it isn't, since by asking those questions you're not contributing anything to the solution of the problem and also aren't giving any additional relevant information, but whatever.

For some additional logging information could people try to add the following kernel module option to nvidia.ko:

NVreg_ResmanDebugLevel=0

You can add this option with modprobe via the command-line at module-load time, or by creating a modprobe configuration file. Here's a sample command-line for loading the nvidia.ko module with this option:

modprobe nvidia NVreg_ResmanDebugLevel=0

You can verify that this option is set by running the following command:

grep ResmanDebugLevel /proc/driver/nvidia/params
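For anyone who prefers to script that check, here is a minimal Python sketch. It assumes the params file consists of "Name: value" lines, as in the grep output posted later in this thread; the helper names are made up for illustration.

```python
def resman_debug_level(params_text):
    """Return the ResmanDebugLevel value found in the params text, or None if absent."""
    for line in params_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "ResmanDebugLevel":
            return int(value.strip())
    return None

def read_params(path="/proc/driver/nvidia/params"):
    """Read the driver parameter file (only exists while nvidia.ko is loaded)."""
    with open(path) as handle:
        return handle.read()

# Sample text in the shape reported in this thread; on a real system use read_params().
sample = "ResmanDebugLevel: 4294967295\n"
level = resman_debug_level(sample)
print("option applied" if level == 0 else f"option not applied (value {level})")
```

A value other than 0 suggests the module option was not picked up when nvidia.ko was loaded.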

Note: The kernel module must be unloaded before running modprobe via the command-line in order for this option to be set. If you run modprobe when the module is already loaded it will return an exit code of 0 and not present any warning messages indicating that no change has taken place.

This will help us track information at the system memory page allocation level, and will be extremely verbose. If you enable this option you'll want to be mindful of your physical storage device usage, and disable this option after you've gotten a reproduction.

This will log to dmesg, so in addition to the normal d3d11 and dxgi logs, please send us an nvidia-bug-report.log.gz file, which can be generated using the nvidia-bug-report.sh script (normally placed in /usr/bin). If you're unable to attach the bug report log to this GitHub thread, please send an email to linux-bugs [at] nvidia.com and put "DXVK Memory Crash" in the Subject field.

Leaving it here, since it's not in the original post – it seems that I originally had the same issue for Escape from Tarkov in #873 a while ago.

Thanks for investigating!

I had a couple people reach out to me with questions about modprobe so here's some much simpler instructions on how to setup kernel module options with modprobe.d where you can just reboot your machine to apply the changes.

As root (or with sudo) create a new configuration file in /etc/modprobe.d/, for simplicity you can call this nvidia.conf. Then add the following line to the new file:

options nvidia NVreg_ResmanDebugLevel=0

You can use # to create comments in your modprobe.d configuration files, for more information checkout the man page for modprobe.d(5).

Once you've done that, just reboot and the next time nvidia.ko is loaded, the verbose kernel logging will be enabled.

@liam-middlebrook I have just tried your suggestion of creating the nvidia.conf file and rebooted. It appears that the Nvidia driver does not load when the nvidia.conf is present. I checked that I had not made any typos or anything.

When I removed this file and restarted everything functioned as normal again? Any ideas?

@liam-middlebrook I also had a go at loading a modprobe.d/ conf file.

It appears to not have worked? After reboot I checked and get this:

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

tim@tim-MS-7B17:$ tail /etc/modprobe.d/nvidia.conf

. Temporarily change debug level

options nvidia NVreg_ResmanDebugLevel=0

tim@tim-MS-7B17:$ grep ResmanDebugLevel /proc/driver/nvidia/params

ResmanDebugLevel: 4294967295

tim@tim-MS-7B17:$ cd /etc/modprobe.d/

tim@tim-MS-7B17:/etc/modprobe.d$ ls -l

total 48

-rw-r--r-- 1 root root 2507 Jul 30 2015 alsa-base.conf

-rw-r--r-- 1 root root 154 Jan 7 2019 amd64-microcode-blacklist.conf

-rw-r--r-- 1 root root 325 Apr 12 04:23 blacklist-ath_pci.conf

-rw-r--r-- 1 root root 1518 Apr 12 04:23 blacklist.conf

-rw-r--r-- 1 root root 210 Apr 12 04:23 blacklist-firewire.conf

-rw-r--r-- 1 root root 677 Apr 12 04:23 blacklist-framebuffer.conf

-rw-r--r-- 1 root root 156 Jul 30 2015 blacklist-modem.conf

lrwxrwxrwx 1 root root 41 Jul 1 07:22 blacklist-oss.conf -> /lib/linux-sound-base/noOSS.modprobe.conf

-rw-r--r-- 1 root root 583 Apr 12 04:23 blacklist-rare-network.conf

-rw-r--r-- 1 root root 127 Aug 6 2018 dkms.conf

-rw-r--r-- 1 root root 154 Mar 16 20:07 intel-microcode-blacklist.conf

-rw-r--r-- 1 root root 347 Apr 12 04:23 iwlwifi.conf

-rw-r--r-- 1 root root 73 Jul 10 11:26 nvidia.conf

tim@tim-MS-7B17:/etc/modprobe.d$

+++++++++++++++++++++++++++++++++++++

I'm also having this issue sporadically while playing Escape From Tarkov.

Once I get this set, I'll email you my log files.

-Tim

I had a couple people reach out to me with questions about modprobe so here's some much simpler instructions on how to setup kernel module options with modprobe.d where you can just reboot your machine to apply the changes.

As root (or with sudo) create a new configuration file in /etc/modprobe.d/, for simplicity you can call this nvidia.conf. Then add the following line to the new file:

options nvidia NVreg_ResmanDebugLevel=0

You can use # to create comments in your modprobe.d configuration files, for more information checkout the man page for modprobe.d(5).

Once you've done that, just reboot and the next time nvidia.ko is loaded, the verbose kernel logging will be enabled.

You forgot an important step: if they have an initramfs, they have to rebuild it, e.g. on Ubuntu with sudo update-initramfs -u
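To check after a reboot whether the option was actually picked up, the value reported in /proc/driver/nvidia/params can be compared with the one set in nvidia.conf. Below is a minimal Python sketch, assuming the file uses the `Name: value` layout seen in the grep output earlier in this thread. The helper name `resman_debug_level` is made up for illustration.

```python
import re
from pathlib import Path

# Path taken from the grep command shown earlier in this thread.
PARAMS_PATH = Path("/proc/driver/nvidia/params")


def resman_debug_level(params_text):
    """Return the ResmanDebugLevel value found in params_text, or None if absent."""
    match = re.search(r"^ResmanDebugLevel:\s*(\d+)\s*$", params_text, re.MULTILINE)
    return int(match.group(1)) if match else None


if __name__ == "__main__":
    if PARAMS_PATH.exists():
        level = resman_debug_level(PARAMS_PATH.read_text())
        # 0 is the value requested in nvidia.conf; any other value suggests the
        # option was not applied (for example because of a stale initramfs).
        status = "applied" if level == 0 else "not applied"
        print(f"ResmanDebugLevel = {level} ({status})")
    else:
        print("NVIDIA kernel module does not appear to be loaded")
```

With the output posted above (ResmanDebugLevel: 4294967295) this would report "not applied", which is consistent with the missing initramfs rebuild.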

Got a new game, but in conjunction with D9VK: Sonic & Allstars Racing Transformed (on Steam).

The game runs fine with WineD3D, but performance isn't that great. With D9VK, on the other hand, it crashes immediately.

I tested to change the driver from 430.26 to 390.xx: no change.

I tested 3 different kernel versions (linux419, linux51, linux52): When I changed from linux52 to linux419 I was able to get to the main menu, but a couple seconds later it crashed - I couldn't reproduce the behavior since then.

I'm going to try to generate the nvidia bug report as described above and will attach it when it's ready.

EDIT: Wasn't able to verify if the changes were applied and the needed data is logged, but hopefully it worked:

nvidia-bug-report.log

I was able to get in-game again on this try for whatever reason and it crashed again about 30 seconds after launch. Let me know if this is of any use.

btw I had to tab out of the game and kill the process.

EDIT2: Updated d3d9 and steam log files

System information

GPU: GTX 1060 6GB

Driver: 430.26

Wine version: Proton 4.2-9

D9VK version: Latest Master / Version 0.13 (tested both)

more system infos: https://gist.github.com/MadByteDE/e3977871207310f9f9acb035e8c1257c

Log files

d3d9.log: https://gist.github.com/MadByteDE/61c0104da87c615bedf6224a0b6c8e9b

Steam.log: https://gist.github.com/MadByteDE/883324eafac62b57fb1e68ffc2f5604f

Same problem with Elite Dangerous Horizons

GPU: GTX 1050 Ti 4GB

Driver: 430.26

Wine version: tkg-4.2-x86_64

DXVK version: 1.2.3

Even though this bug is believed to be related to Nvidia, I have noticed that I get the same issue on my laptop, which has an Intel integrated GPU.

My little nephew plays beamng and it often crashes. I decided to check the dxvk logs and found the above. It is 100% repeatable when trying to load certain scenarios. The laptop is quite old and very under spec for such a demanding game but on lowest graphics settings it can run reasonably well with DXVK.

Maybe it isn't just an Nvidia problem?

Hmm, I did some more reading from this bug and there is a comment from doitsujin that suggests that 64MB will try to be allocated even if it is only a small amount of VRAM required (just under 4MB in this instance).

If that is correct then there is a good chance that the log above is not the same issue and that it is actually a genuine memory allocation issue due to insufficient spare VRAM.

@7oxicshadow

Hmm, I did some more reading from this bug and there is a comment from doitsujin that suggests that 64MB will try to be allocated even if it is only a small amount of VRAM required (just under 4MB in this instance).

Afaik 128MB is now the smallest "chunk size" that is being allocated ref: https://github.com/doitsujin/dxvk/commit/3b1376b2feba0bed66fd3581766bf8c357a33ecc

It is unclear to me what VkDeviceSize MinChunkCount = 16; means tho, but from reading it directly it CAN be read as "the minimum number of chunks allocated", and if 128MB is the chunksize, 128x16=2048MB. But it does NOT seem to actually do that tho, so i am a bit unsure what this actually does.

Still i think you would be hard pressed running anything DXVK with less than 2GB vram without performance issues.

If you compile DXVK yourself, you can experiment with the size in the commit above. Eg constexpr VkDeviceSize MaxChunkSize = 32 << 20; or something to see if it changes stuff.

Had some time to test with NVreg_ResmanDebugLevel, the logs are certainly verbose though still not clear what is happening / worth reporting here? The allocations randomly fail and at different amounts. At least on 1080 TIs, allocation on lower 1G is substantially faster.

Can also confirm the entire GPU still becomes unstable around 6G.

It is unclear to me what VkDeviceSize MinChunkCount = 16; means tho, but from reading it directly it CAN be read as "the minimum number of chunks allocated", and if 128MB is the chunksize, 128x16=2048MB.

It is not unclear if you'd actually look at the code in memory.cpp: If your heap is too small to fit at least 16 chunks, it will fall back to a smaller chunk size instead. This is to provide at least 16 chunk types (because there's different types of memory allocated from chunks - but it will use only one type of memory allocations per chunk). That's why I said previously that bigger chunks may reduce the problem: It's likely that all types of memory will be allocated very early during game initialization, and later on there's a higher chance of no additional chunk being needed for a specific small allocation type. But it will also increase the chance of failing to allocate a chunk later if it is needed because the system may struggle with finding contiguous memory for it (external vs. internal fragmentation).

If your heap is too small (below 2048 MB), it will instead allocate just smaller chunks (heapSize divided by 16). @doitsujin I wonder: Are there any alignment constraints? Because let's say we have some uncommon heap size of 1024+512=1536M, and divide that by 16, it would allocate 96M chunks. This is not a power of 2. Does this matter?

Does this matter?

No, doesn't matter. In fact, there are sometimes dedicated memory allocations only for one single resource (most of the time, render targets), which have arbitrary sizes. This is allowed in Vulkan and works fine in practice.
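For reference, the chunk-size rule discussed a few comments up can be written out as a small calculation. This is only a sketch of the rule as it was described in this thread (128 MB maximum chunk size, at least 16 chunks per heap), not a copy of the code in dxvk_memory.cpp. The function name `pick_chunk_size` is an assumption made for illustration.

```python
MIB = 1 << 20
MAX_CHUNK_SIZE = 128 * MIB   # largest chunk size mentioned in this thread
MIN_CHUNK_COUNT = 16         # a heap should be able to hold at least this many chunks


def pick_chunk_size(heap_size):
    """Chunk size in bytes for a heap of heap_size bytes, per the rule described above."""
    return min(MAX_CHUNK_SIZE, heap_size // MIN_CHUNK_COUNT)


if __name__ == "__main__":
    for heap_mib in (8192, 2048, 1536, 1024):
        chunk = pick_chunk_size(heap_mib * MIB)
        print(f"{heap_mib} MiB heap -> {chunk // MIB} MiB chunks")
```

Under these assumptions a 1536 MiB heap ends up with 96 MiB chunks, matching the example in the question, and anything from 2048 MiB upwards stays at 128 MiB.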

@kakra

Thank you for the explanation that i as a non-coder could use to understand without having to "learn" how to "read" memory.cpp.

I have been testing various stuff to try to "force" this problem to happen, but have so far not come up with a reproducible result. Yesterday i used the "gpufill" proggy (cuda app i posted above in this thread someplace) to fill 7GB of vram + i used a ramdisk to fill 14/16GB system ram, and i was able to run the "Monster Hunter Online benchmark" program without a memory allocation error. This should really not be possible, but the system was happily filling my swap before coming to an almost dead halt (graphics freeze, X freeze++) but i was able to kill some tasks via telnet and do a clean reboot.

This means that everything worked "as intended" by using system memory > vram and when running low on systemram swap started. Still within needed allocations.

Now tests like these i did by creating a "hopeless situation" before running the app, and thus the allocation happens probably from system ram instead of vram. Am i right in assessing allocation "probing" what type of memory to use for allocation https://github.com/doitsujin/dxvk/blob/master/src/dxvk/dxvk_memory.cpp#L182-L206 happens every time a new chunk is to be allocated?

Eg. Let's say an app has allocated 3840MB on my 4GB adapter (+there are some 100'ish MB in use by system and whatnot), and then needs another 128MB block, will it then spew a memory allocation error, or would it allocate 128MB from VK_MEMORY_PROPERTY_DEVICE_LOCAL_BIT rather than VK_MEMORY_HEAP_DEVICE_LOCAL_BIT? (And subsequently could there be a problem with the driver figuring out when and what those heaps have available?)

@SveSop I'm not sure about the architecture of Vulkan itself but from the code it looks like there are allocation types that are allowed to fall back to sysmem, and there are some that depend on vram and cannot fall back. This is a trade-off between speed and space because the GPU is much slower at accessing sysmem via PCIe than accessing its local memory. There's no swapping involved here, DXVK (or Vulkan on its behalf) will not swap memory dynamically from vram to sysmem to optimize access patterns or make space for more device-local-only memory.

So, if it needs another device-local chunk and the driver cannot provide that, it will return a memory allocation error. Vulkan has a whole lot of allocation flags that could be combined to different configurations. You may also want to look at the Vulkan docs for more info. It is quite complicated because it also honors things like memory caches etc.

Looking at the code you mentioned, there may be a concept of required flags and optional flags (referring memory that needs no device-local memory).
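The fallback idea described above can be illustrated with a toy model. The Python sketch below is a simulation only: it does not call Vulkan, the two-heap setup is invented, and the `allow_sysmem_fallback` parameter is a hypothetical stand-in for "this allocation type is allowed to live in system memory". It is not the logic from dxvk_memory.cpp.

```python
MIB = 1 << 20
DEVICE_LOCAL = 0x1   # value of VK_MEMORY_PROPERTY_DEVICE_LOCAL_BIT
HOST_VISIBLE = 0x2   # value of VK_MEMORY_PROPERTY_HOST_VISIBLE_BIT


class Heap:
    """Toy heap that only tracks how many bytes are still free."""

    def __init__(self, free_bytes):
        self.free_bytes = free_bytes


def try_alloc(mem_types, size, flags):
    """Return the index of the first memory type that has all flags and enough free space."""
    for index, (type_flags, heap) in enumerate(mem_types):
        if (type_flags & flags) == flags and heap.free_bytes >= size:
            heap.free_bytes -= size
            return index
    return None


def alloc(mem_types, size, flags, allow_sysmem_fallback):
    """Allocate with the requested flags, optionally retrying without DEVICE_LOCAL."""
    index = try_alloc(mem_types, size, flags)
    if index is None and allow_sysmem_fallback and flags & DEVICE_LOCAL:
        # Retry without the device-local requirement, i.e. accept system memory.
        index = try_alloc(mem_types, size, flags & ~DEVICE_LOCAL)
    if index is None:
        raise MemoryError("Memory allocation failed")
    return index


def make_types():
    """Invented setup: nearly full VRAM (type 0) and plenty of system memory (type 1)."""
    return [(DEVICE_LOCAL, Heap(64 * MIB)), (HOST_VISIBLE, Heap(1024 * MIB))]


if __name__ == "__main__":
    print("with fallback: memory type", alloc(make_types(), 128 * MIB, DEVICE_LOCAL, True))
    try:
        alloc(make_types(), 128 * MIB, DEVICE_LOCAL, False)
    except MemoryError as err:
        print("without fallback:", err)
```

In this model a 128 MiB request against the nearly full VRAM heap lands in system memory when the fallback is allowed, and fails with an allocation error when it is not.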

But going more into depth is probably not adding to the issue, you may want to start a thread somewhere else or open a new discussion thread.

@kakra

But going more into depth is probably not adding to the issue, you may want to start a thread somewhere else or open a new discussion thread.

Yeah, i know. Both you and Philip keep saying this is highly off-topic, so i guess i should just stop doing any tests. Conclusion: Everything is working as expected, and if someone do not feel so, learn to be a vulkan guru and make your own DXVK version :)

Hopefully someone with half a brain wont waste time asking stupid questions (like me) and figure this out (if there is a problem) and post a nice PR, while i come to my senses and do something else.

Thanks for the info tho.

Yeah, i know. Both you and Philip keep saying this is highly off-topic, so i guess i should just stop doing any tests. Conclusion: Everything is working as expected, and if someone do not feel so, learn to be a vulkan guru and make your own DXVK version :)

That's really not how you should understand this. Try imagining the developer's position: If this has a lot of noise it becomes useless as an issue to properly work on or follow up on. No question is stupid. Maybe just start a new thread about testing memory allocation behavior and reference this thread here. Anyone interested can follow the link that will be referenced here. And if we come up with some useful results, it can be mentioned here. I'd happily join such a separate discussion thread but I'm not very keen on adding more noise to this thread. Testing different scenarios without some well-founded technical conclusion doesn't really help in the thread. Such development of test results should be done in another thread. Can you agree?

Given I'm seeing the allocations fail completely outside DXVK or wine, are we chasing the wrong thing here?

For reference I've tested with 2x eVGA FT3 1080TI's, 86.02.39.00.90 BIOS on both. If there was a way to enable more logging without needing to unload the module (seriously Nvidia?), I'd test on other hardware. I'd imagine NVIDIA has a lab of machines they can validate with.

Updating: It's worth noting some braindead syslog replacements will throttle and outright discard messages, leading to lovely things like

Jul 14 03:16:53 localhost rsyslogd: imjournal: 197974 messages lost due to rate-limiting

Jul 14 03:26:54 localhost rsyslogd: imjournal: 181846 messages lost due to rate-limiting

Jul 14 03:36:55 localhost rsyslogd: imjournal: 194669 messages lost due to rate-limiting

Jul 14 03:46:56 localhost rsyslogd: imjournal: 106647 messages lost due to rate-limiting

...

Jul 15 17:10:40 localhost rsyslogd: imjournal: 26029 messages lost due to rate-limiting

Jul 15 17:20:41 localhost rsyslogd: imjournal: 1495171 messages lost due to rate-limiting

Jul 15 17:30:42 localhost rsyslogd: imjournal: 578974 messages lost due to rate-limiting

Perhaps it would be better _not_ to use syslog for this and heed the warning from Nvidia regarding verbosilly^Wverbosity. It is interesting those events happen at almost 10-minute intervals. The 1.5 million was during a crash, which makes this entire exercise pointless.
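To get a feel for how much is being dropped, the rate-limiting notices themselves can be totalled. A small Python sketch, assuming the message format is exactly as in the lines pasted above. Only the seven lines shown are included, since the ones elided by "..." are not available. The function name `total_lost` is made up for illustration.

```python
import re

LOST_RE = re.compile(r"imjournal: (\d+) messages lost due to rate-limiting")

# The seven notices quoted in this comment (the elided ones are not included).
SAMPLE = """\
Jul 14 03:16:53 localhost rsyslogd: imjournal: 197974 messages lost due to rate-limiting
Jul 14 03:26:54 localhost rsyslogd: imjournal: 181846 messages lost due to rate-limiting
Jul 14 03:36:55 localhost rsyslogd: imjournal: 194669 messages lost due to rate-limiting
Jul 14 03:46:56 localhost rsyslogd: imjournal: 106647 messages lost due to rate-limiting
Jul 15 17:10:40 localhost rsyslogd: imjournal: 26029 messages lost due to rate-limiting
Jul 15 17:20:41 localhost rsyslogd: imjournal: 1495171 messages lost due to rate-limiting
Jul 15 17:30:42 localhost rsyslogd: imjournal: 578974 messages lost due to rate-limiting
""".splitlines()


def total_lost(log_lines):
    """Sum the message counts from all rate-limiting notices in log_lines."""
    total = 0
    for line in log_lines:
        match = LOST_RE.search(line)
        if match:
            total += int(match.group(1))
    return total


if __name__ == "__main__":
    print("messages lost in the quoted lines:", total_lost(SAMPLE))
```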

If you use D9VK and try to play Ghostbusters: The Video Game it will crash 100% of the time just as it tries to render the first frame in game (menus and fmv work fine).

info: D3D9: Setting display mode: 1920x1080@60

err: DxvkMemoryAllocator: Memory allocation failed

Size: 16384

Alignment: 256

Mem flags: 0xe

Mem types: 0x681

err: Heap 0: 584 MB allocated, 443 MB used, 594 MB allocated (driver), 7621 MB available (driver), 8192 MB total

err: Heap 1: 456 MB allocated, 455 MB used, 511 MB allocated (driver), 24080 MB available (driver), 24080 MB total

This is using Proton with the proton.py script updated to add support for d3d9.dll. All other DX9 games appear to work well in Proton.
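The hex values in the error can be decoded by hand. The Python sketch below assumes that "Mem flags" is a VkMemoryPropertyFlags bitmask and that "Mem types" is a bitmask of acceptable memory type indices. That reading matches the Vulkan naming but is not confirmed anywhere in this thread. The bit values come from the Vulkan specification; the helper names are made up.

```python
# Bit values of VkMemoryPropertyFlagBits from the Vulkan specification.
MEMORY_PROPERTY_FLAGS = {
    0x01: "DEVICE_LOCAL",
    0x02: "HOST_VISIBLE",
    0x04: "HOST_COHERENT",
    0x08: "HOST_CACHED",
    0x10: "LAZILY_ALLOCATED",
    0x20: "PROTECTED",
}


def decode_flags(value):
    """Names of the memory property bits set in value, lowest bit first."""
    return [name for bit, name in MEMORY_PROPERTY_FLAGS.items() if value & bit]


def set_bits(mask):
    """Indices of the bits set in a 32-bit mask."""
    return [i for i in range(32) if mask & (1 << i)]


if __name__ == "__main__":
    print("Mem flags 0xe   ->", decode_flags(0xE))
    print("Mem types 0x681 ->", set_bits(0x681))
```

Read this way, 0xe asks for host-visible, host-coherent, host-cached memory without the device-local bit, and 0x681 allows memory types 0, 7, 9 and 10.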

Did you try setting the video memory size manually? This helped me with Star Wars: The Force Unleashed II and D9VK. I set VRAM in Wine to 4096 and it helps. Otherwise the game crashes every minute or two with the memory allocation error.

My laptop has an Nvidia 730M with only 1024 MiB of VRAM. I play A Hat in Time with D9VK and it immediately crashes with "Memory allocation failed" in Proton's log.