Dvc: pull: --jobs not honored for imported data

DVC pull is not honoring --jobs or -j

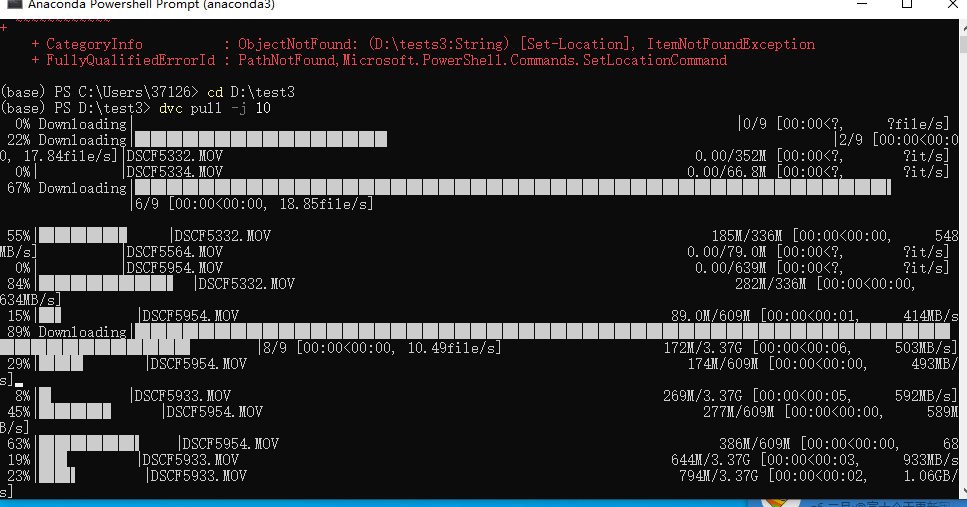

After a git clone of an existing repository that includes an imported DVC resource, on Windows, dvc pull does not honor the --jobs or -j flag.

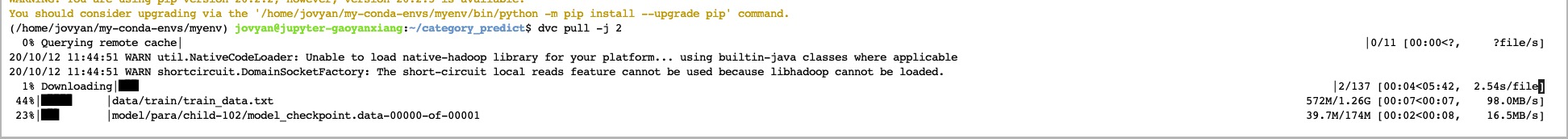

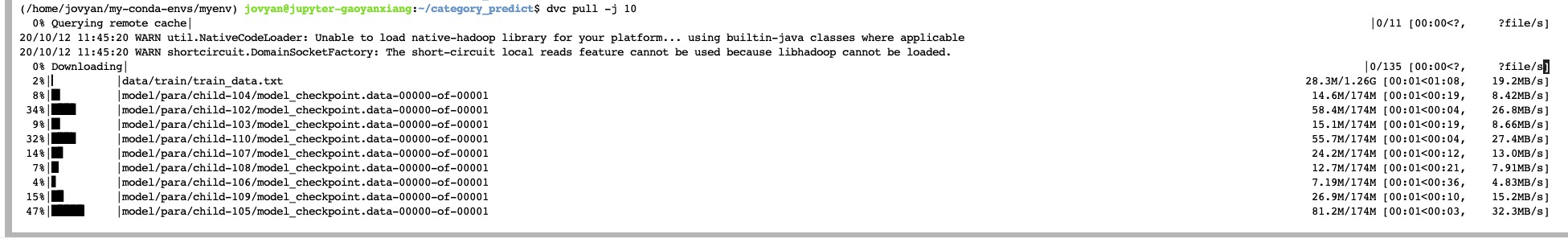

dvc pull --jobs 2

(30 or 40 downloads.....)

Nothing special in the repo's .dvc/config

$ cat .dvc/config

[cache]

type = "symlink,copy"

shared = group

There is no .dvc/config.local for the repo.

The remote resource that we are pulling from has this .dvc/config:

[core]

remote = origin

[cache]

type = "symlink,copy"

shared = group

['remote "origin"']

url = s3://dvc-bucket/datasets/foo

endpointurl = https://endpoint.com

profile = my_profile

Please provide information about your setup

Output of dvc version:

Windows: from installer

1.8.1

All 10 comments

-j, --jobs - parallelism level for DVC to download data from remote storage. Using more jobs may improve the overall transfer speed.

It seems this isn't configurable in the config file. It could be a new feature, and I think it ought to be a local config option, since it depends on both the local machine and the remote storage.

I was able to work around this by first building a local cache from the data repo (where pull -j works) and then running pull on the imported resource (where -j doesn't work). The 48 concurrent requests on large files caused timeouts on the S3 resource, leading to multiple failed pulls. Repeating the pull fetched incrementally more of the data each time, but I eventually gave up and went with the two-step process of creating the local cache from the data repo first.

-j, --jobs - parallelism level for DVC to download data from remote storage. Using more jobs may improve the overall transfer speed.

It seems this isn't configurable in the config file. It could be a new feature, and I think it ought to be a local config option, since it depends on both the local machine and the remote storage.

@efiop

Oh sorry, this was my own thought and is not about this issue. I'll create a new ticket for it later.

@wdixon

So in your case dvc pull creates more connections than the -j setting allows, which means the flag didn't work. Besides this, does it also cause other problems, such as files failing to download? Could you please give more details to help us reproduce it?

Reproducing seems straightforward. If you have a remote .dvc resource committed to a repo:

git clone http://github.com/repo_with_imported_dvc_resource.git

cd repo_with_imported_dvc_resource

dvc pull -j <limit>

Assuming no shared cache is configured, you will see that it fetches the remote resources but does not limit the number of concurrent downloads to what the -j flag specifies.

This repo happened to have several hundred large files (~1 GB each), which led to timeouts and failures with so many concurrent downloads (I think it defaults to 4 × number of cores streams, which is really not practical).

But the large files are irrelevant to reproducing the problem itself: more concurrent downloads than the -j limit specifies.
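
To make the expected behavior concrete, here is a minimal Python sketch of what a jobs limit should guarantee: the number of downloads in flight never exceeds the limit. This is not DVC's code. `peak_concurrency` and `fake_download` are hypothetical names, the sleep stands in for a network transfer, and the "4 × number of cores" default is only the guess from the comment above, not confirmed behavior.

```python
import os
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def peak_concurrency(num_files, jobs):
    """Run num_files fake downloads with at most `jobs` workers.

    Returns the highest number of downloads that were in flight at once.
    """
    lock = threading.Lock()
    active = 0
    peak = 0

    def fake_download(_):
        nonlocal active, peak
        with lock:
            active += 1
            peak = max(peak, active)
        time.sleep(0.01)  # stand-in for the actual network transfer
        with lock:
            active -= 1

    # max_workers is the cap that a -j style option is expected to control.
    with ThreadPoolExecutor(max_workers=jobs) as pool:
        list(pool.map(fake_download, range(num_files)))
    return peak


# Default guessed in the thread (an assumption, not verified against DVC).
guessed_default_jobs = 4 * (os.cpu_count() or 1)
```

With a working limit, `peak_concurrency(40, 2)` can never return more than 2. The report above describes the opposite: the observed number of concurrent downloads exceeds the value passed to -j.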

It seems OK on Linux with an HDFS remote in version 1.8.1. I'll try it on Windows later.

I'm sorry, I cannot reproduce it on the Windows platform with a local cache. I don't have an S3 server available right now.

Just to be clear - the issue I face is when the dvc resource is imported from another repo. A dvc pull for resources directly within your repo does obey the jobs limit.

I set up a DVC data repo and a DVC training repo. The training repo defines a dvc import for a subset of the data repo. After a fresh git clone of the training repo, a dvc pull of the training repo's DVC data resource doesn't honor the -j or --jobs flag.

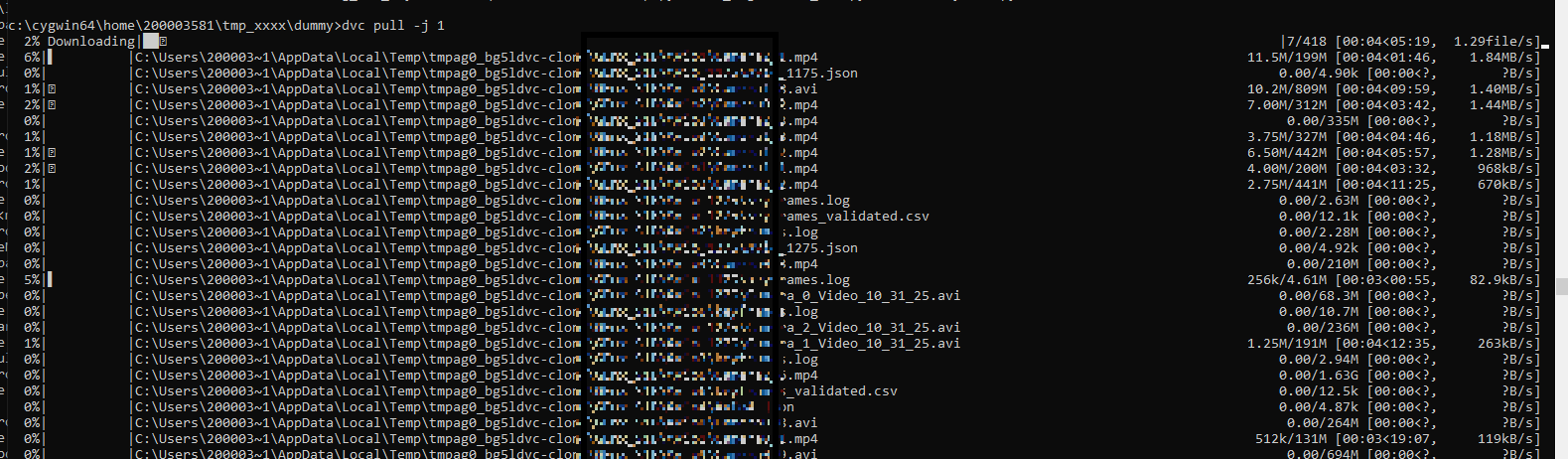

Here is what I get when I clone a repo with the remote resource.

You will see I limit it to 1 job, but it kicks off multiple downloads.

If the resource is within the repo (not imported from another), the limit works.

@wdixon

You are right, thanks for your report. I think it is a bug.

It seems this is because, although we pass the jobs argument to fetch_external in

https://github.com/iterative/dvc/blob/68220cbea07fd445901f7f124d900676d2d536e1/dvc/repo/fetch.py#L70

it has no effect:

https://github.com/iterative/dvc/blob/68220cbea07fd445901f7f124d900676d2d536e1/dvc/external_repo.py#L149-L176
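
The kind of bug described here can be sketched in a few lines of Python. This only illustrates the general pattern (an argument accepted by a wrapper but never forwarded) and is not the actual DVC code at the links above. `download_all`, `fetch_external_buggy`, `fetch_external_fixed`, and `DEFAULT_JOBS` are all hypothetical names and values.

```python
DEFAULT_JOBS = 16  # hypothetical default, stands in for whatever the library picks


def download_all(paths, jobs=None):
    """Hypothetical low-level fetch; returns the worker count it would use."""
    return jobs if jobs is not None else DEFAULT_JOBS


def fetch_external_buggy(paths, jobs=None):
    # `jobs` is accepted here but never passed on, so the default applies.
    return download_all(paths)


def fetch_external_fixed(paths, jobs=None):
    # Forwarding the argument lets the caller's limit take effect.
    return download_all(paths, jobs=jobs)
```

In this sketch, `fetch_external_buggy(["a"], jobs=1)` still uses 16 workers, while `fetch_external_fixed(["a"], jobs=1)` uses 1, which matches the symptom of -j 1 kicking off multiple downloads for imported data only.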
