DVC: Support for providing a certificate for S3 connections that require SSL

Please provide information about your setup

DVC version: 0.57.0

Platform and method of installation: Linux, pip install

Problem:

When you use DVC to push data to a remote S3 bucket that requires SSL verification, you can run into issues getting DVC to use a certificate stored on your computer. Even if the AWS CLI is configured properly and points to your certificate bundle, DVC is not aware of it. For example, a correctly set up ~/.aws/config file might look like this:

[default]
output = json
region = us-east-1
ca_bundle = /path/to/cert/cert.crt

With this configuration, aws --endpoint=https://endpoint.url.com --profile=myprofile s3 commands work just fine without any SSL errors.

However, dvc push still fails with SSL errors because it does not pick up the location of your certificate file.

Temporary Solution:

DVC (or its dependencies) does correctly pick up the certificate file from the AWS_CA_BUNDLE environment variable. That is, in the terminal session where you plan to run DVC, you can define:

export AWS_CA_BUNDLE=/path/to/cert/cert.crt

And DVC should be able to properly push data to the remote S3 bucket without any SSL errors.
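Per the boto3 configuration guide, environment variables take precedence over the shared config file, which is consistent with AWS_CA_BUNDLE working even when the profile setting apparently does not. Below is a minimal sketch of that lookup order for the CA bundle. effective_ca_bundle is a hypothetical helper (not part of DVC or boto3), and it deliberately ignores an explicit verify= argument passed to the client, which would override both sources.

```python
from typing import Mapping, Optional


def effective_ca_bundle(
    environ: Mapping[str, str], profile_ca_bundle: Optional[str]
) -> Optional[str]:
    """Return the CA bundle path a boto3-style client would be expected to use.

    Hypothetical helper: the AWS_CA_BUNDLE environment variable wins over the
    ca_bundle value read from the active profile in ~/.aws/config.
    """
    env_value = environ.get("AWS_CA_BUNDLE")
    if env_value is not None:
        return env_value
    return profile_ca_bundle


# Env var set: it overrides whatever the profile says.
print(effective_ca_bundle({"AWS_CA_BUNDLE": "/path/to/cert/cert.crt"}, None))
# Env var unset: fall back to the profile's ca_bundle (or None).
print(effective_ca_bundle({}, "/path/to/cert/cert.crt"))
```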

This problem and the temporary solution were discussed and troubleshot in the Discord help space:

Discord conversation link


Looks like we need to do the same thing awscli does:

1) get ca_bundle from config: https://github.com/aws/aws-cli/blob/2.0.0dev0/awscli/customizations/globalargs.py#L79

2) set it for the client: https://github.com/aws/aws-cli/blob/2.0.0dev0/awscli/clidriver.py#L713
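A minimal sketch of the same two steps on the DVC side, assuming the S3 client is created through boto3's Session.client(), which accepts endpoint_url, use_ssl and verify keyword arguments. build_s3_client_kwargs is a hypothetical helper. The ca_bundle argument stands for the value read from the profile in step 1, and it is passed as verify in step 2.

```python
from typing import Any, Dict, Optional


def build_s3_client_kwargs(
    endpoint_url: Optional[str],
    use_ssl: bool = True,
    ca_bundle: Optional[str] = None,
) -> Dict[str, Any]:
    """Collect keyword arguments for session.client("s3", **kwargs).

    Hypothetical helper: `verify` is only passed when a CA bundle is known,
    so boto3/botocore keep their default certificate handling otherwise.
    """
    kwargs: Dict[str, Any] = {"endpoint_url": endpoint_url, "use_ssl": use_ssl}
    if ca_bundle:
        # A string value for `verify` is treated as a path to a CA bundle.
        kwargs["verify"] = ca_bundle
    return kwargs


print(build_s3_client_kwargs("https://endpoint.url.com", ca_bundle="/path/to/cert/cert.crt"))
```

Usage would look like boto3.session.Session(profile_name="myprofile").client("s3", **build_s3_client_kwargs(...)). Setting verify only when a bundle is known leaves the default behavior unchanged for setups that do not need a custom certificate.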

I wasn't able to replicate this :slightly_frowning_face:

It looks like boto3 already loads the ca_bundle for me.

A small example with min.io:

# Set up certs for minio
mkcert -install
mkdir -p ~/.minio/certs
cd ~/.minio/certs
mkcert -cert-file public.crt -key-file private.key localhost

# Start minio
mkdir -p /tmp/minio/bucket
MINIO_ACCESS_KEY=changeme \
MINIO_SECRET_KEY=changeme \
minio server --compat /tmp/minio

# Configure AWS CLI
aws configure --profile=custom
# AWS Access Key ID: changeme
# AWS Secret Access Key: changeme
aws configure set profile.custom.ca_bundle "$(mkcert -CAROOT)/rootCA.pem"

# Test CLI
aws --profile=custom --endpoint='https://localhost:9000' s3 ls

# Test DVC
mkdir /tmp/example
cd /tmp/example
dvc init --no-scm
dvc remote add s3 s3://bucket
dvc remote modify s3 profile custom
dvc remote modify s3 endpointurl https://localhost:9000
dvc run -o foo "echo foo > foo"
dvc push -r s3

If I remove the ca_bundle entry from the ~/.aws/config file, I get the expected error:

ERROR: unexpected error - SSL validation failed for https://localhost:9000/bucket/d3/b07384d113edec49eaa6238ad5ff00 [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: unable to get local issuer certificate (_ssl.c:1076)

@pfigliozzi, did you set the correct profile with dvc remote modify?

I am pretty sure I did. Here are the relevant files for DVC and AWS:

(base) pfigl@server [.../GitHub/drove] $ cat .dvc/config
['remote "drove"']
url = s3://poc-analytics/drove
profile = nonprod
endpointurl = https://my.endpoint.com
[cache]
s3 = drove
[core]
remote = drove

(drove) pfigl@server [.../GitHub/drove] $ cat ~/.aws/config
[default]
output = json
region = us-east-1
ca_bundle = /path/to/cert/my_cert.crt

(drove) pfigl@server [.../GitHub/drove] $ cat ~/.aws/credentials
[default]
aws_access_key_id = key_1
aws_secret_access_key = secret_key_1
[nonprod]
aws_access_key_id = key_2
aws_secret_access_key = secret_key_2

My .dvc/config properly points to my nonprod profile and retrieves the correct access/secret keys. I thought that DVC would use the default profile to get the cert file.

Following my original post, I set AWS_CA_BUNDLE in my .bashrc to point to my cert file. Below you can see that dvc push fails SSL verification when this variable is not set. (Please ignore the 502 Bad Gateway error; that is a problem with my company's S3 setup, not with DVC. Please focus on the SSL error from the second dvc push command.)

(base) pfigl@server [.../GitHub/drove] $ echo $AWS_CA_BUNDLE
/path/to/cert/my_cert.crt
(base) pfigl@server [.../GitHub/drove] $ dvc push
Preparing to upload data to 's3://poc-analytics/drove'
Preparing to collect status from s3://poc-analytics/drove
Collecting information from local cache...
100%|█████████████████████████████████████████████████████████████████████████████████████████████| 11/11 [00:00<00:00, 1201.02md5/s]
Collecting information from remote cache...
36%|██████████████████████████████████▉ | 4/11 [04:43<08:16, 70.98s/md5]
ERROR: unexpected error - An error occurred (502) when calling the ListObjectsV2 operation (reached max retries: 4): Bad Gateway
Having any troubles?. Hit us up at https://dvc.org/support, we are always happy to help!
(base) pfigl@server [.../GitHub/drove] $ unset AWS_CA_BUNDLE
(base) pfigl@server [.../GitHub/drove] $ dvc push
Preparing to upload data to 's3://poc-analytics/drove'
Preparing to collect status from s3://poc-analytics/drove
Collecting information from local cache...
100%|█████████████████████████████████████████████████████████████████████████████████████████████| 11/11 [00:00<00:00, 1145.56md5/s]
Collecting information from remote cache...
0%| | 0/11 [00:13<?, ?md5/s]
ERROR: unexpected error - SSL validation failed for https://my.endpoint.com/poc-analytics?list-type=2&prefix=drove%2F86%2F5b1c2ff4e47ecf189f8168a761ed4b&encoding-type=url [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: self signed certificate in certificate chain (_ssl.c:1056)

Testing the AWS CLI after unsetting AWS_CA_BUNDLE:

(base) pfigl@server [.../GitHub/drove] $ aws --profile=nonprod --endpoint='https://my.endpoint.com' s3 ls
[Displays lots of Buckets]

@pfigliozzi, I notice that your ca_bundle is under [default] instead of [nonprod] in your .aws/config file.

From the CLI docs:

The information in the default profile is used any time you run an AWS CLI command that doesn't explicitly specify a profile to use.

Could you try moving the ca_bundle to [nonprod]?
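One detail worth keeping in mind here: the AWS CLI documentation names non-default profiles in ~/.aws/config with a [profile NAME] header, while ~/.aws/credentials uses a bare [NAME]. Below is a minimal sketch, using only configparser, of looking up a profile's ca_bundle under that documented convention. profile_ca_bundle is a hypothetical helper and a simplification of botocore's own parsing (it does not merge in the credentials file or any other setting).

```python
import configparser
from typing import Optional


def profile_ca_bundle(config_text: str, profile: str) -> Optional[str]:
    """Return ca_bundle for `profile` from the text of an ~/.aws/config file.

    Assumes the documented AWS convention: the default profile is [default]
    and every other profile is [profile NAME]; a bare [NAME] section is not
    looked at for a named profile.
    """
    parser = configparser.ConfigParser()
    parser.read_string(config_text)
    section = "default" if profile == "default" else f"profile {profile}"
    if not parser.has_section(section):
        return None
    return parser.get(section, "ca_bundle", fallback=None)


EXAMPLE_CONFIG = """
[default]
region = us-east-1
ca_bundle = /path/to/cert/my_cert.crt

[profile nonprod]
region = us-east-1
ca_bundle = /path/to/cert/my_cert.crt
"""

print(profile_ca_bundle(EXAMPLE_CONFIG, "nonprod"))  # /path/to/cert/my_cert.crt
```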

I created a new profile in .aws/config titled [nonprod], which is a copy of my [default] one. I still get the SSL error.

(base) pfigl@server [.../GitHub/drove] $ echo $AWS_CA_BUNDLE
/path/to/cert/my_cert.crt
(base) pfigl@server [.../GitHub/drove] $ unset AWS_CA_BUNDLE
(base) pfigl@server [.../GitHub/drove] $ cat ~/.aws/config
[default]
output = json
region = us-east-1
ca_bundle = /path/to/cert/my_cert.crt
[nonprod]
output = json
region = us-east-1
ca_bundle = /path/to/cert/my_cert.crt
(base) pfigl@server [.../GitHub/drove] $ cat .dvc/config
['remote "drove"']
url = s3://poc-analytics/drove
profile = nonprod
endpointurl = https://my.endpoint.com
[cache]
s3 = drove
[core]
remote = drove
(base) pfigl@server [.../GitHub/drove] $ dvc push
Preparing to upload data to 's3://poc-analytics/drove'
Preparing to collect status from s3://poc-analytics/drove
Collecting information from local cache...
100%|█████████████████████████████████████████████████████████████████████████████████████████████| 11/11 [00:00<00:00, 1174.28md5/s]
Collecting information from remote cache...
0%| | 0/11 [00:11<?, ?md5/s]
ERROR: unexpected error - SSL validation failed for https://my.endpoint.com/poc-analytics?list-type=2&prefix=drove%2F86%2F5b1c2ff4e47ecf189f8168a761ed4b&encoding-type=url [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: self signed certificate in certificate chain (_ssl.c:1056)

thanks, @pfigliozzi !

Let me investigate further, then :slightly_smiling_face:

@pfigliozzi, my apologies, I didn't notice that the error was not about DVC being unable to read the SSL certificate.

The error you're experiencing is the following:

[SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: self signed certificate in certificate chain (_ssl.c:1056)

It is related to SSL verification: https://www.python.org/dev/peps/pep-0476/

And to the certificate being self-signed: http://gagravarr.org/writing/openssl-certs/others.shtml#selfsigned-openssl

We could support disabling SSL verification; what do you think, @efiop? https://boto3.amazonaws.com/v1/documentation/api/latest/reference/core/session.html

@mroutis

my apologies, I didn't notice that the error was not about DVC being unable to read the SSL certificate.

Why does it work with AWS_CA_BUNDLE, then? The SSL verification is probably falling back to the certificate bundle that boto3 ships with, which it uses by default because it is not seeing the one from the config.

We could support disabling SSL verification; what do you think, @efiop?

We do support that already via use_ssl config option.

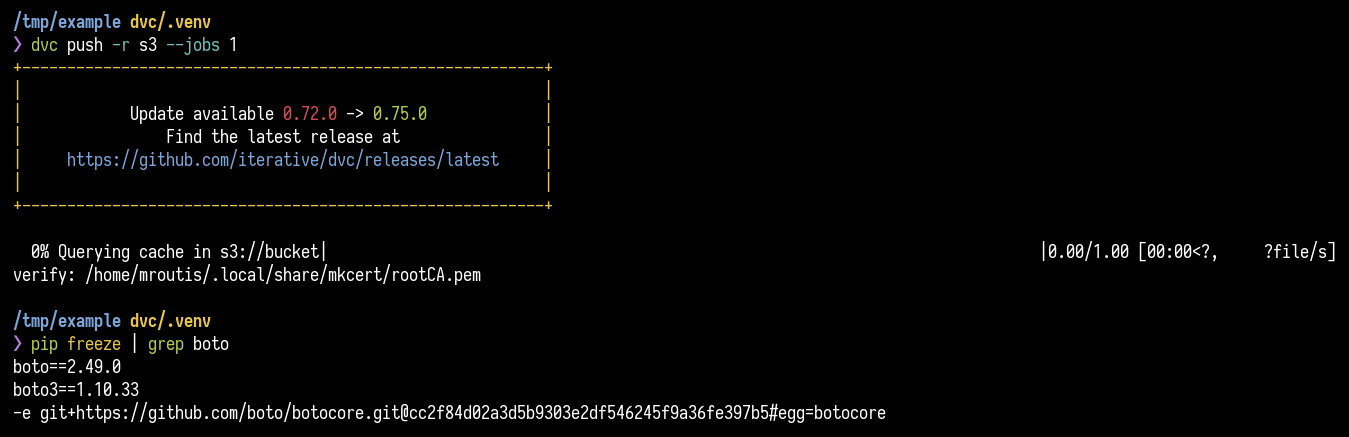

@pfigliozzi Btw, could you show pip freeze | grep boto, please?

We do support that already via use_ssl config option.

@efiop, use_ssl=False is different from verify=False. The former does not use SSL at all, while the latter still uses SSL but skips certificate verification, i.e. it _trusts_ any certificate.

Why does it work with AWS_CA_BUNDLE, then? The SSL verification is probably falling back to the certificate bundle that boto3 ships with, which it uses by default because it is not seeing the one from the config.

Yep, good catch. So, do you think that DVC isn't picking up the ca_bundle from the config?

What do you think about the example I shared, @efiop , maybe I'm missing something there :thinking:

@efiop, use_ssl=False is different from verify=False. The former does not use SSL at all, while the latter still uses SSL but skips certificate verification, i.e. it trusts any certificate.

Great point! Totally forgot about it!

Yep, good catch. So, do you think that DVC isn't picking up the ca_bundle from the config?

I have no idea so far, tbh. From the boto3 docs it does seem like it should pick it up; not sure what's wrong there. Maybe a typo somewhere or something? Btw, @pfigliozzi, does

(base) pfigl@server [.../GitHub/drove] $ aws --profile=nonprod --endpoint='https://my.endpoint.com' s3 ls

work without AWS_CA_BUNDLE in the env?

I guess @pfigliozzi could verify the certificate with some OpenSSL black magic.

openssl verify -verbose -x509_strict -CAfile <ca.pem> <server_cert.pem>

In my example it would be openssl verify -verbose -x509_strict -CAfile "$(mkcert -CAROOT)/rootCA.pem" ~/.minio/certs/public.crt
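Where openssl or mkcert are not at hand, a rough Python alternative is to attempt a TLS handshake against the live endpoint with and without the CA bundle (this checks the server, not a certificate file, so it is not identical to openssl verify). A minimal sketch using only the standard ssl and socket modules; check_tls is a hypothetical helper.

```python
import socket
import ssl
from typing import Optional, Tuple


def check_tls(
    host: str, port: int = 443, cafile: Optional[str] = None, timeout: float = 10.0
) -> Tuple[bool, str]:
    """Try a TLS handshake with host:port, verifying against `cafile`.

    With cafile=None the system default CA store is used, so comparing both
    runs shows whether the given bundle is what makes verification pass.
    A missing cafile raises FileNotFoundError before any connection is made.
    """
    context = ssl.create_default_context(cafile=cafile)
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            with context.wrap_socket(sock, server_hostname=host) as tls:
                return True, f"verified, protocol {tls.version()}"
    except ssl.SSLCertVerificationError as exc:
        return False, f"certificate verification failed: {exc.verify_message}"
    except OSError as exc:
        # Covers DNS failures, refused connections, timeouts and other SSL errors.
        return False, f"connection failed: {exc}"
```

For example, compare check_tls("my.endpoint.com", 443, "/path/to/cert/my_cert.crt") with check_tls("my.endpoint.com", 443), using the placeholder host and path from this thread. If only the first succeeds, that would suggest the bundle itself is fine and the problem lies in how it reaches boto3.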

Sorry for not responding sooner. Here is the requested information.

@efiop Here are the results of pip freeze:

(base) pfigl@server [/home/pfigl] $ pip freeze | grep boto
boto==2.49.0
boto3==1.9.234
botocore==1.12.237

@efiop Even after unsetting AWS_CA_BUNDLE, the s3 ls command still works:

(base) pfigl@server [.../GitHub/drove] $ unset AWS_CA_BUNDLE
(base) pfigl@server [.../GitHub/drove] $ echo $AWS_CA_BUNDLE

(base) pfigl@server [.../GitHub/drove] $ aws --profile=nonprod --endpoint='https://my.endpoint.com' s3 ls
[ LIST OF BUCKETS]

@mroutis I am having trouble running the openssl verify command. I only know about the .crt file my company provides at /path/to/cert/my_cert.crt. I don't know where the .pem file is and the mkcert command doesn't work on the server I am working on. Is there any other way I can verify the certificate?
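On the question of the .crt file: that extension is often just PEM text under a different name, but it can also be binary DER, and Python's ssl module (which boto3 ultimately relies on) expects a PEM file when given a cafile path. A minimal sketch to check which format the file is and how many certificates it holds; describe_cert_file and describe_cert_bytes are hypothetical helpers.

```python
from pathlib import Path

PEM_BEGIN = b"-----BEGIN CERTIFICATE-----"


def describe_cert_bytes(data: bytes) -> str:
    """Classify certificate file contents as a PEM bundle or likely DER."""
    count = data.count(PEM_BEGIN)
    if count:
        return f"PEM with {count} certificate(s)"
    # A DER-encoded certificate is an ASN.1 SEQUENCE, whose first byte is 0x30.
    if data[:1] == b"\x30":
        return "probably DER (binary); convert to PEM before using it as a CA bundle"
    return "unrecognized format"


def describe_cert_file(path: str) -> str:
    """Read a certificate file from disk and describe its format."""
    return describe_cert_bytes(Path(path).read_bytes())
```

For example, describe_cert_file("/path/to/cert/my_cert.crt"). If it reports DER, openssl x509 -inform der -in my_cert.crt -out my_cert.pem converts it. If it reports PEM with several certificates, the file is already a bundle containing the chain.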

Even when unsetting the AWS_CA_BUNDLE the s3 ls command still works:

@pfigliozzi And while doing that, do you have ca_bundle for nonprod profile in your aws config? If so, could you remove it, leaving it only in default section, just to check if that works in your original setup.

The boto versions look alright, as far as I can see.

Hi @efiop, I re-ran the aws s3 command after unsetting AWS_CA_BUNDLE and removing the ca_bundle from my "nonprod" profile and leaving it only in the "default" profile in my .aws/config. The aws s3 ls command still produced a list of buckets on the endpoint, see the commands below:

(base) pfigl@server [.../GitHub/drove] $ echo $AWS_CA_BUNDLE
/path/to/cert/my_cert.crt
(base) pfigl@server [.../GitHub/drove] $ unset AWS_CA_BUNDLE
(base) pfigl@server [.../GitHub/drove] $ echo $AWS_CA_BUNDLE

(base) pfigl@server [.../GitHub/drove] $ cat ~/.aws/config
[default]
output = json
region = us-east-1
ca_bundle = /path/to/cert/my_cert.crt
[nonprod]
output = json
region = us-east-1
ca_bundle = /path/to/cert/my_cert.crt
(base) pfigl@server [.../GitHub/drove] $ vim ~/.aws/config
(base) pfigl@server [.../GitHub/drove] $ cat ~/.aws/config
[default]
output = json
region = us-east-1
ca_bundle = /path/to/cert/my_cert.crt
[nonprod]
output = json
region = us-east-1
(base) pfigl@server [.../GitHub/drove] $ aws --profile=nonprod --endpoint='https://my.endpoint.com' s3 ls
[ LIST OF BUCKETS ]

@pfigliozzi Thanks!

@mroutis Please take a look at the code that I've linked in my first comment. If boto is able to read those by default, I wonder why awscli is doing that manually. Most likely it is for a good reason, and if it is so, we need to do the same.

@efiop, all right, I'll take a look.

@pfigliozzi, thanks a lot for the information! :slightly_smiling_face:

@efiop, aws-cli is probably reading the config file itself because it provides the --ca-bundle flag on its commands.

The boto3 team states in their docs that boto3 supports reading ca_bundle from the AWS config file:

https://boto3.amazonaws.com/v1/documentation/api/latest/guide/configuration.html#configuration-file

I can confirm it does.

https://github.com/boto/botocore/blob/develop/botocore/session.py#L798-L799

I added a print on that line, ran my example, and then I got: verify: /home/mroutis/.local/share/mkcert/rootCA.pem

Not sure what's going on :thinking:

Maybe I'm missing something, do you have any ideas or further comments, @efiop ?

The botocore version that I'm using is 1.13.34, I'll try the same example with checking out the version @pfigliozzi is using.

Same results with version 1.12.237 https://github.com/iterative/dvc/issues/2558#issuecomment-563317124

cc2f84d0 (HEAD -> develop, tag: 1.12.237)

@pfigliozzi, could you update your DVC installation?

One more iteration, this time, using everything from DVC 0.57.0.

mkdir /tmp/example
cd /tmp/example
python3.7 -m venv .venv
source .venv/bin/activate
pip install dvc[s3]==0.57.0
pip uninstall botocore
pip install -e 'git+https://github.com/boto/[email protected]#egg=botocore'
sed -i '803 a \ print("verify:", verify)' .venv/src/botocore/botocore/session.py
dvc init --no-scm
dvc remote add s3 s3://bucket
dvc remote modify s3 profile custom
dvc remote modify s3 endpointurl https://localhost:9000
dvc run -o foo "echo foo > foo"
dvc push -r s3 --jobs 1

I don't get why it is not working for @pfigliozzi, mine returns the following:

verify: /home/mroutis/.local/share/mkcert/rootCA.pem

Then if I change it to something else:

aws configure set profile.custom.ca_bundle "/tmp/something-else"

No need to tamper with botocore:

We can see that the session loads the config correctly and passes the certificate to the HTTP session, using the following:

@cached_property
def s3(self):
    session = boto3.session.Session(
        profile_name=self.profile, region_name=self.region
    )
    client = session.client(
        "s3", endpoint_url=self.endpoint_url, use_ssl=self.use_ssl
    )
    # Debug output: the profiles botocore loaded for this session
    print("profile_map:", session._session._profile_map)
    # Debug output: the `verify` value (True, False or a CA bundle path) used by the HTTP session
    print("verify:", client._endpoint.http_session._verify)
    return client

Turns out the verify keyword comes from the HTTPAdapter in requests: https://github.com/psf/requests/blob/eedd67462819f8dbf8c1c32e77f9070606605231/requests/adapters.py#L403-L405
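The verify value follows the convention used by requests' HTTPAdapter: False disables certificate checks (the connection is still encrypted, unlike use_ssl=False), True uses a default CA store, and a string is a path to a CA bundle. A sketch of that mapping onto Python's ssl module; context_for_verify is hypothetical and is not how botocore or urllib3 actually build their SSL contexts internally.

```python
import ssl
from typing import Union


def context_for_verify(verify: Union[bool, str]) -> ssl.SSLContext:
    """Build an SSLContext matching a requests/botocore-style `verify` value."""
    if verify is False:
        context = ssl.create_default_context()
        # check_hostname must be turned off before verify_mode can be CERT_NONE.
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
        return context
    if verify is True:
        # System default trust store.
        return ssl.create_default_context()
    # Any string is treated as a path to a PEM CA bundle.
    return ssl.create_default_context(cafile=verify)
```

With the ca_bundle from this thread, context_for_verify("/path/to/cert/my_cert.crt") would trust exactly the certificates in that file, which is what the printed verify: path above indicates botocore is doing.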

@pfigliozzi, could you use https://www.ssllabs.com/ssltest/ to test whether SSL is configured correctly on your endpoint?

Great research @mroutis! Thank you! @pfigliozzi Looks like we are not able to reproduce this right now. Let's try one last sanity check (please make sure that PROFILE, ENDPOINT and BUCKET are set correctly):

import os
import boto3

# sanity checks to ensure that nothing is messing around with us
assert not os.getenv("AWS_PROFILE")
assert not os.getenv("AWS_CA_BUNDLE")

# SET THESE VARS WITH YOUR VALUES
PROFILE = "nonprod"
ENDPOINT = "https://my.endpoint.com"
BUCKET = "poc-analytics"

session = boto3.session.Session(profile_name=PROFILE)
client = session.client("s3", endpoint_url=ENDPOINT)
client.list_objects_v2(Bucket=BUCKET, MaxKeys=1)

If this works, then the problem is somewhere on the DVC side. If it doesn't, it is either a boto bug (which we've seemingly ruled out already through our tests and by analyzing the boto3/botocore code) or a misconfiguration in your particular workspace (e.g. some config mess or just a typo somewhere).

Maybe the certifi or the OpenSSL version is not the correct one? https://github.com/aws/aws-cli/issues/1499

@mroutis I just upgraded DVC to the latest version (0.75.0) and I still get the SSL error.

@mroutis I tried using https://www.ssllabs.com/ssltest/ to test https://my.endpoint.com and it is "Unable to resolve the domain name". This endpoint is one that my company stood up, perhaps it is only accessible through our company's intranet, meaning the ssltest cannot find it?

@efiop I created a script, test_dvc.py, from the code you shared. Running it results in an SSL error:

(base) pfigl@server [/home/pfigl] $ python test_dvc.py
Traceback (most recent call last):
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/urllib3/connectionpool.py", line 600, in urlopen
    chunked=chunked)
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/urllib3/connectionpool.py", line 343, in _make_request
    self._validate_conn(conn)
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/urllib3/connectionpool.py", line 839, in _validate_conn
    conn.connect()
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/urllib3/connection.py", line 344, in connect
    ssl_context=context)
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/urllib3/util/ssl_.py", line 347, in ssl_wrap_socket
    return context.wrap_socket(sock, server_hostname=server_hostname)
  File "/home/pfigl/anaconda3/lib/python3.7/ssl.py", line 412, in wrap_socket
    session=session
  File "/home/pfigl/anaconda3/lib/python3.7/ssl.py", line 853, in _create
    self.do_handshake()
  File "/home/pfigl/anaconda3/lib/python3.7/ssl.py", line 1117, in do_handshake
    self._sslobj.do_handshake()
ssl.SSLCertVerificationError: [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: self signed certificate in certificate chain (_ssl.c:1056)

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/botocore/httpsession.py", line 262, in send
    chunked=self._chunked(request.headers),
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/urllib3/connectionpool.py", line 638, in urlopen
    _stacktrace=sys.exc_info()[2])
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/urllib3/util/retry.py", line 344, in increment
    raise six.reraise(type(error), error, _stacktrace)
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/urllib3/packages/six.py", line 685, in reraise
    raise value.with_traceback(tb)
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/urllib3/connectionpool.py", line 600, in urlopen
    chunked=chunked)
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/urllib3/connectionpool.py", line 343, in _make_request
    self._validate_conn(conn)
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/urllib3/connectionpool.py", line 839, in _validate_conn
    conn.connect()
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/urllib3/connection.py", line 344, in connect
    ssl_context=context)
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/urllib3/util/ssl_.py", line 347, in ssl_wrap_socket
    return context.wrap_socket(sock, server_hostname=server_hostname)
  File "/home/pfigl/anaconda3/lib/python3.7/ssl.py", line 412, in wrap_socket
    session=session
  File "/home/pfigl/anaconda3/lib/python3.7/ssl.py", line 853, in _create
    self.do_handshake()
  File "/home/pfigl/anaconda3/lib/python3.7/ssl.py", line 1117, in do_handshake
    self._sslobj.do_handshake()
urllib3.exceptions.SSLError: [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: self signed certificate in certificate chain (_ssl.c:1056)

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "test_dvc.py", line 15, in <module>
    client.list_objects_v2(Bucket=BUCKET, MaxKeys=1)
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/botocore/client.py", line 357, in _api_call
    return self._make_api_call(operation_name, kwargs)
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/botocore/client.py", line 648, in _make_api_call
    operation_model, request_dict, request_context)
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/botocore/client.py", line 667, in _make_request
    return self._endpoint.make_request(operation_model, request_dict)
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/botocore/endpoint.py", line 102, in make_request
    return self._send_request(request_dict, operation_model)
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/botocore/endpoint.py", line 137, in _send_request
    success_response, exception):
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/botocore/endpoint.py", line 231, in _needs_retry
    caught_exception=caught_exception, request_dict=request_dict)
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/botocore/hooks.py", line 356, in emit
    return self._emitter.emit(aliased_event_name, **kwargs)
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/botocore/hooks.py", line 228, in emit
    return self._emit(event_name, kwargs)
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/botocore/hooks.py", line 211, in _emit
    response = handler(**kwargs)
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/botocore/retryhandler.py", line 183, in __call__
    if self._checker(attempts, response, caught_exception):
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/botocore/retryhandler.py", line 251, in __call__
    caught_exception)
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/botocore/retryhandler.py", line 277, in _should_retry
    return self._checker(attempt_number, response, caught_exception)
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/botocore/retryhandler.py", line 317, in __call__
    caught_exception)
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/botocore/retryhandler.py", line 223, in __call__
    attempt_number, caught_exception)
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/botocore/retryhandler.py", line 359, in _check_caught_exception
    raise caught_exception
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/botocore/endpoint.py", line 200, in _do_get_response
    http_response = self._send(request)
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/botocore/endpoint.py", line 244, in _send
    return self.http_session.send(request)
  File "/home/pfigl/anaconda3/lib/python3.7/site-packages/botocore/httpsession.py", line 280, in send
    raise SSLError(endpoint_url=request.url, error=e)
botocore.exceptions.SSLError: SSL validation failed for https://my.endpoint.com/poc-analytics?list-type=2&max-keys=1&encoding-type=url [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: self signed certificate in certificate chain (_ssl.c:1056)

@efiop Considering that this failed, perhaps it is an issue with some configuration on my machine. If so, I have no idea what I did to misconfigure my system, haha. I appreciate all the time you have put into debugging this!

@pfigliozzi Thanks! 🙂 Looking at https://boto3.amazonaws.com/v1/documentation/api/latest/guide/configuration.html, I would double-check these sources (since they are lower in priority than the environment variable, which clearly works fine):

* Shared credential file (~/.aws/credentials)

* AWS config file (~/.aws/config)

* Assume Role provider

* Boto2 config file (/etc/boto.cfg and ~/.boto)

* Instance metadata service on an Amazon EC2 instance that has an IAM role configured.
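A small sketch for checking which of the file-based sources in the list above actually exist on a given machine. aws_config_candidates is a hypothetical helper. It only covers files (the assume-role provider and EC2 instance metadata are not files), and it assumes that the AWS_SHARED_CREDENTIALS_FILE and AWS_CONFIG_FILE environment variables, which boto3 honors, replace the default ~/.aws paths when set.

```python
import os
from pathlib import Path
from typing import List, Mapping, Tuple


def aws_config_candidates(environ: Mapping[str, str], home: str) -> List[Tuple[str, bool]]:
    """List the config files from the checklist and whether each one exists.

    Order: shared credentials file, AWS config file, then the boto2 files.
    """
    home_path = Path(home)
    paths = [
        environ.get("AWS_SHARED_CREDENTIALS_FILE", str(home_path / ".aws" / "credentials")),
        environ.get("AWS_CONFIG_FILE", str(home_path / ".aws" / "config")),
        "/etc/boto.cfg",
        str(home_path / ".boto"),
    ]
    return [(p, os.path.exists(os.path.expanduser(p))) for p in paths]


for path, exists in aws_config_candidates(os.environ, str(Path.home())):
    print(f"{'found  ' if exists else 'missing'} {path}")
```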

We'll close this ticket for now, as we are currently unable to reproduce it or pinpoint what specifically went wrong. Please let us know if you will run into this on another machine/different setup (e.g. maybe a colleague of yours would run into the same issue) and we'll take another look, maybe the new details will help debugging it. Thanks for the feedback! 🙂
