Deno: await Deno.stdout.write doesn't resolve when called in rapid succession

Came across this when using the https://github.com/jakobhellermann/deno-progressbar library to provide feedback on a series of tasks.

Example script that reproduces the issue:

const exampleSource = ["one", "two"];
const encoder = new TextEncoder();

const exampleProcesses = exampleSource.map(async (value) => {
  const text = `${value}`;
  await Deno.stdout.write(encoder.encode(text));
});

await Promise.all([
  ...exampleProcesses,
  // not needed to reproduce, this is just to show the promise unresolved status in output
  new Promise((_, reject) => {
    setTimeout(() => {
      console.log("\n---\nfailures");
      exampleProcesses.forEach(console.log);
      reject();
    }, 1000);
  }),
]);

console.log("never reached");

Output of the example script:

deno run test.ts
Check file:///Users/lukewoollard/work/deno-figma/test.ts
onetwo
---
failures
Promise { <pending> } 0 [ Promise { <pending> }, Promise { undefined } ]
Promise { undefined } 1 [ Promise { <pending> }, Promise { undefined } ]
error: Uncaught undefined
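The timer in the script above exists only to reveal which promises are still pending. A reusable version of that check could look like the sketch below. `probe` is a hypothetical helper name, not something from Deno or the progress bar library, and "pending" here only means "did not settle within the given time".

```typescript
// Hypothetical helper (not from the original report): reports whether a
// promise settles (fulfils or rejects) within `ms` milliseconds.
async function probe(
  p: Promise<unknown>,
  ms: number,
): Promise<"settled" | "pending"> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<"pending">((resolve) => {
    timer = setTimeout(() => resolve("pending"), ms);
  });
  try {
    // Whichever finishes first wins: the promise under test or the timer.
    return await Promise.race([
      p.then(() => "settled" as const, () => "settled" as const),
      timeout,
    ]);
  } finally {
    // Always clear the timer so it does not keep the event loop alive.
    clearTimeout(timer);
  }
}
```

In the reproduction this could be called as `await probe(exampleProcesses[0], 1000)` for each entry instead of logging the raw promise objects.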

Worked around this locally by vendoring that code and replacing the use of await Deno.stdout.write with Deno.stdout.writeSync.
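A minimal sketch of that workaround, for anyone who wants to apply it outside the vendored library. `writeAllSync` and `printSync` are hypothetical helper names, not part of Deno or the library. The writer is passed in as a parameter so the caller can supply `Deno.stdout`, which exposes `writeSync`.

```typescript
// Structural type for anything with a synchronous write, e.g. Deno.stdout.
interface SyncWriter {
  writeSync(p: Uint8Array): number;
}

// Hypothetical helper: write the whole buffer synchronously. A single
// writeSync call may accept fewer bytes than it was given, so loop until
// everything has been written.
function writeAllSync(writer: SyncWriter, data: Uint8Array): number {
  let written = 0;
  while (written < data.length) {
    const n = writer.writeSync(data.subarray(written));
    if (n <= 0) {
      throw new Error("writeSync made no progress");
    }
    written += n;
  }
  return written;
}

const syncEncoder = new TextEncoder();

// Hypothetical helper: encode a string and write it synchronously.
function printSync(writer: SyncWriter, text: string): number {
  return writeAllSync(writer, syncEncoder.encode(text));
}
```

With this in place, `await Deno.stdout.write(encoder.encode(text))` becomes `printSync(Deno.stdout, text)`. The trade-off is that each call blocks the event loop until the bytes are handed to stdout, which is usually acceptable for short progress output.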

Related issue: https://github.com/denoland/deno/issues/5515


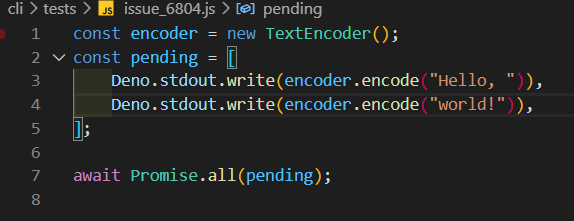

Stripped down example:

const encoder = new TextEncoder();

const pending = [
  Deno.stdout.write(encoder.encode("Hello, ")),
  Deno.stdout.write(encoder.encode("world!")),
];

await Promise.all(pending);

Seems like a deadlock is occurring. It's not sound to let writes/reads race on the same fd, but the result should be a race, not a deadlock.
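If the hang is triggered by overlapping writes on the same fd, one user-side mitigation is to queue writes so that only one is in flight at a time. The sketch below shows that idea. `serializeWrites` is a hypothetical helper name, and whether this actually avoids the deadlock on an affected Deno build is an assumption that has not been verified here.

```typescript
// Structural type for anything with an async write, e.g. Deno.stdout.
interface AsyncWriter {
  write(p: Uint8Array): Promise<number>;
}

// Hypothetical helper: returns a write function that chains every call
// behind the previous one, so at most one write is in flight at a time.
function serializeWrites(
  writer: AsyncWriter,
): (p: Uint8Array) => Promise<number> {
  let tail: Promise<unknown> = Promise.resolve();
  return (p: Uint8Array): Promise<number> => {
    const result = tail.then(() => writer.write(p));
    // Keep the queue usable even if this particular write rejects.
    tail = result.catch(() => undefined);
    return result;
  };
}
```

Usage would be `const write = serializeWrites(Deno.stdout);` followed by `await Promise.all([write(a), write(b)])`. Callers still get one promise per write, but the underlying calls run strictly one after another in call order.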

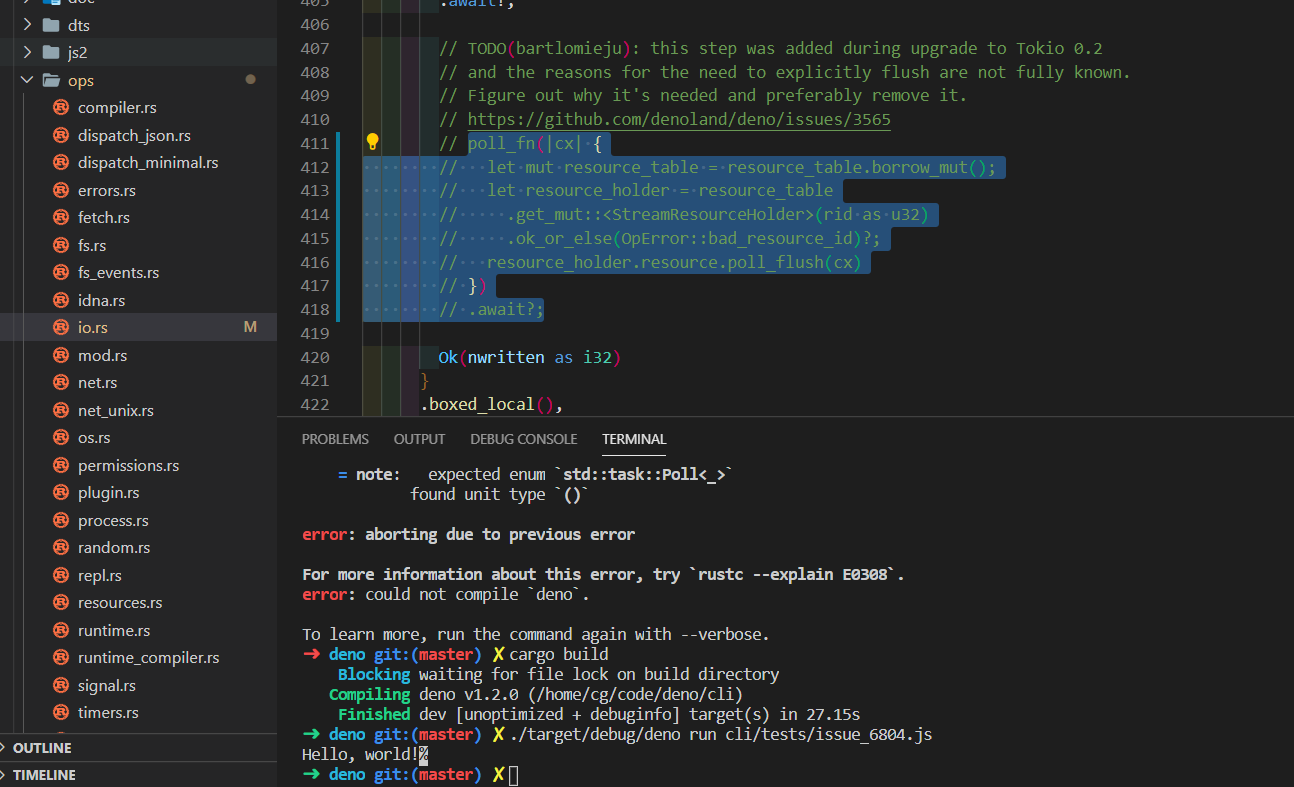

From what I've gathered so far this was introduced by https://github.com/denoland/deno/issues/3565.

Removing the flush fixes this and works locally but breaks the CI 🤔

Before I put another bandaid on top of the bandaid... did anyone ever work out the details of why we need this flush?

cc @bartlomieju @piscisaureus

@caspervonb not really. I landed a tokio upgrade just yesterday. Does this problem persist?

> Does this problem persist?

Unfortunately yes @bartlomieju, this is reproducible with b573bbe4471c3872f96e1d7b9d1d1a2b39ff4cf1 as well.

> From what I've gathered so far this was introduced by #3565.
>
> Removing the flush fixes this and works locally but breaks the CI 🤔
>
> Before I put another bandaid on top of the bandaid... did anyone ever work out the details of why we need this flush?
>
> cc @bartlomieju @piscisaureus

Same here. Removing it makes it work again.

Edit:

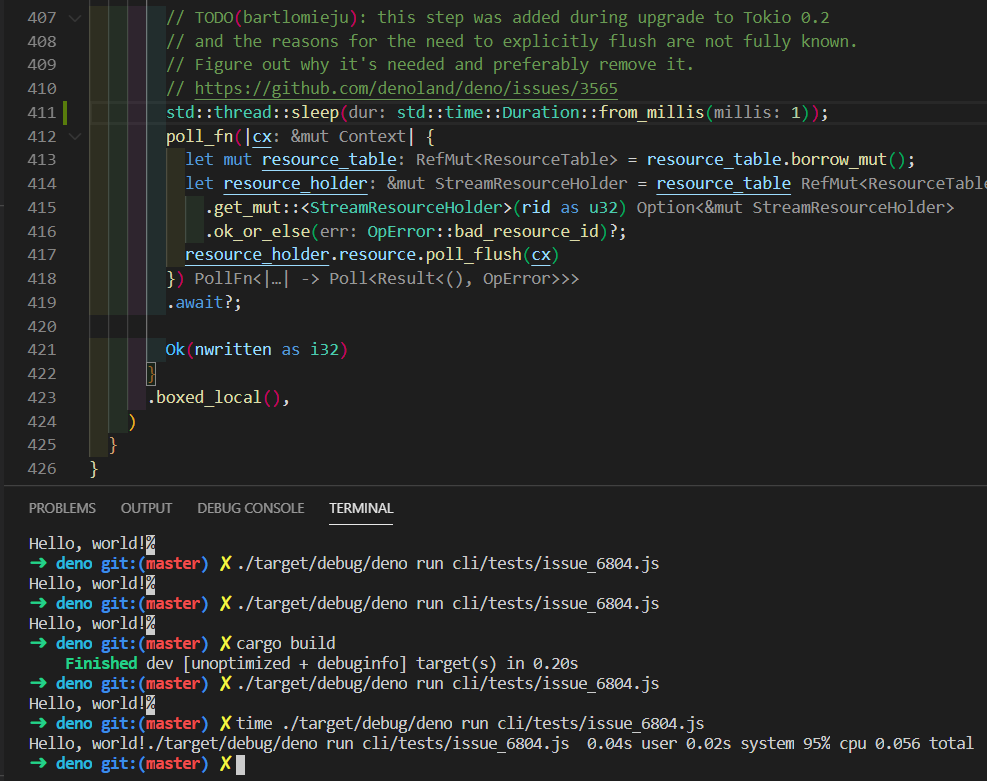

Adding a sleep also works.

Edit #2:

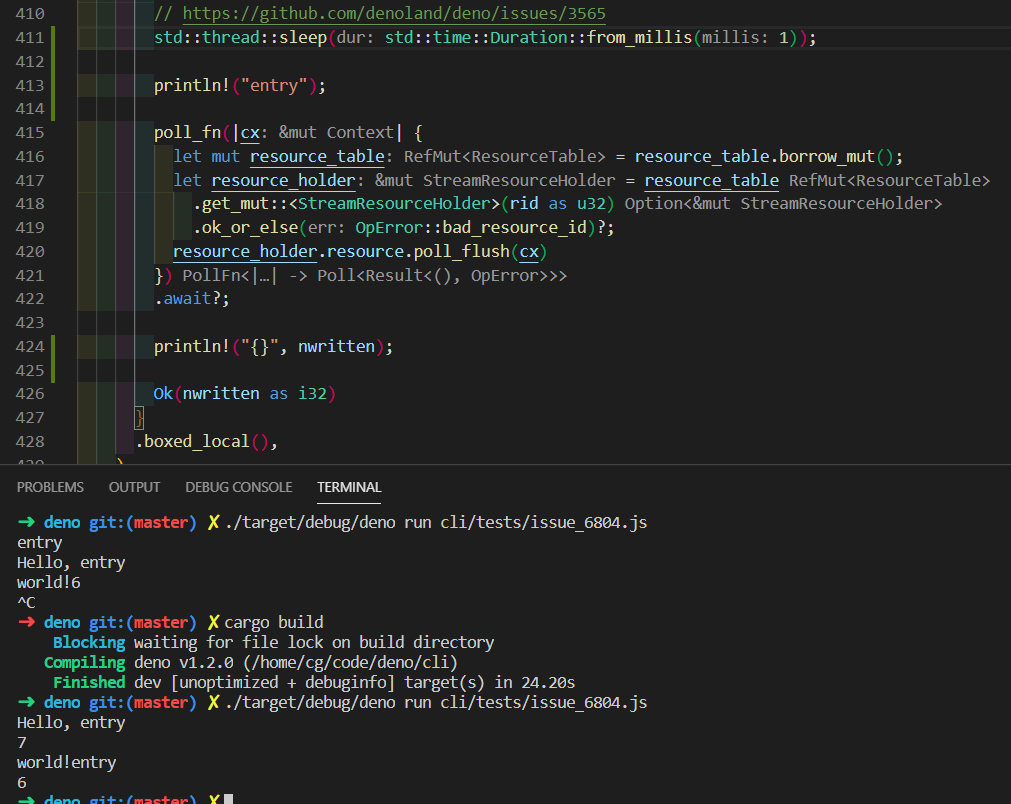

As the screenshot above shows, the first run is without the sleep and the second run is with it. I think something must be wrong with that poll_flush: in the first run, the println!("{}", nwritten); corresponding to 'hello, ' did not run, so it must have been blocked by poll_flush.

> I think something must be wrong with that poll_flush: in the first run, the println!("{}", nwritten); corresponding to 'hello, ' did not run, so it must have been blocked by poll_flush.

Pretty sure this would deadlock even with just two pairs of poll_write and await in succession.