Darknet: Implemented weighted-multi_input-[shortcut] layer with weights-normalization

Implemented weighted-multi_input-[shortcut] layer with weights-normalization, added:

New [shortcut] can:

- take more than 2 input layers for adding: from = -2, -3 (and -1 by default)
- multiply by weights:
  - the different input layers: weights_type=per_feature (per_layer)
  - or the different input layers and channels: weights_type=per_channel
- normalize weights by using: avg_relu or softmax

The simplest example: yolov3-tiny_3l_shortcut_multilayer_per_feature_softmax.cfg.txt

- Fusion-layer for BiFPN for EfficientDet network: https://github.com/AlexeyAB/darknet/issues/4346

[shortcut]

from= -2, -3, 6 # means: -1, -2, -3, 6 - relative/absolute indexes of input layers

weights_type= per_feature # none (default), per_feature, per_channel

weights_normalizion= relu # none (default), relu, softmax

activation= linear # linear (default), leaky, relu, logistic, swish, mish, ...

- Original residual-connection:

[shortcut]

from= -5 # means: -1, -5 - relative indexes of input layers

activation= linear
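The `from=` convention in the two cfg snippets above (negative values are relative, non-negative values are absolute, and -1 is always added implicitly) can be illustrated with a small sketch. The helper name `resolve_shortcut_inputs` and the range check are assumptions made for illustration; this is not Darknet's parser.

```python
def resolve_shortcut_inputs(from_value, layer_index):
    """Return absolute indices of all inputs of a [shortcut] at `layer_index`.

    Assumed convention (taken from the cfg comments above): negative entries
    are relative to the current layer, non-negative entries are absolute, and
    the previous layer (-1) is always an implicit first input.
    """
    entries = [int(tok) for tok in from_value.split(",") if tok.strip()]
    with_default = [-1] + entries  # -1 is set by default
    resolved = []
    for idx in with_default:
        absolute = layer_index + idx if idx < 0 else idx
        if not 0 <= absolute < layer_index:
            raise ValueError(f"input {idx} resolves outside 0..{layer_index - 1}")
        resolved.append(absolute)
    return resolved


# A [shortcut] placed at (hypothetical) layer 20:
print(resolve_shortcut_inputs("-2, -3, 6", layer_index=20))  # [19, 18, 17, 6]
print(resolve_shortcut_inputs("-5", layer_index=20))         # [19, 15]
```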

https://arxiv.org/abs/1911.09070v1



@AlexeyAB @WongKinYiu I made a network that has 3 BiFPN blocks with P3~P5; please take a look to be sure,

darknet53-bifpn3.cfg.txt

but even with the input size set to 320*320 and subdivisions=32 I get this error:

CUDA Error Prev: an illegal memory access was encountered

CUDA Error Prev: an illegal memory access was encountered: Resource temporarily unavailable

darknet: ./src/utils.c:297: error: Assertion `0` failed.

Aborted (core dumped)

@Kyuuki93 Try to use the latest commit. I set

batch=64

subdivisions=16

width=320

height=320

and trained your cfg-file for 100 iterations successfully.

@Kyuuki93

There is a bug in your cfg:

use weights_type=per_feature instead of weights_type=per_feture

@Kyuuki93

Also you should not specify -1 layer, since it is set by default for all [shortcut] layers.

Just use

[shortcut]

from=61

weights_type=per_feature

weights_normalizion=relu

activation=leaky

instead of

[shortcut]

from=-1,61

weights_type=per_feature

weights_normalizion=relu

activation=leaky
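Both problems pointed out above (a misspelled option value such as `per_feture`, and an explicit `-1` in `from=`) could be caught before training with a small pre-flight check. The sketch below is hypothetical: the function name and the table of allowed values are assumptions taken from the cfg comments in this thread, and the option key keeps the thread's spelling `weights_normalizion`.

```python
# Allowed values as listed in the cfg comments of this thread (assumption).
ALLOWED = {
    "weights_type": {"none", "per_feature", "per_channel"},
    "weights_normalizion": {"none", "relu", "softmax"},  # key spelled as in this thread
}


def check_shortcut_options(options):
    """Return a list of human-readable problems found in one [shortcut] section."""
    problems = []
    for key, allowed in ALLOWED.items():
        value = options.get(key, "none").strip()
        if value not in allowed:
            problems.append(f"{key}={value} is not one of {sorted(allowed)}")
    sources = [tok.strip() for tok in options.get("from", "").split(",") if tok.strip()]
    if "-1" in sources:
        problems.append("from= lists -1, which is already an implicit input")
    return problems


for problem in check_shortcut_options({"from": "-1,61", "weights_type": "per_feture"}):
    print(problem)
```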

@AlexeyAB cuda error was lead by weights_type=per_feture, my mistake.

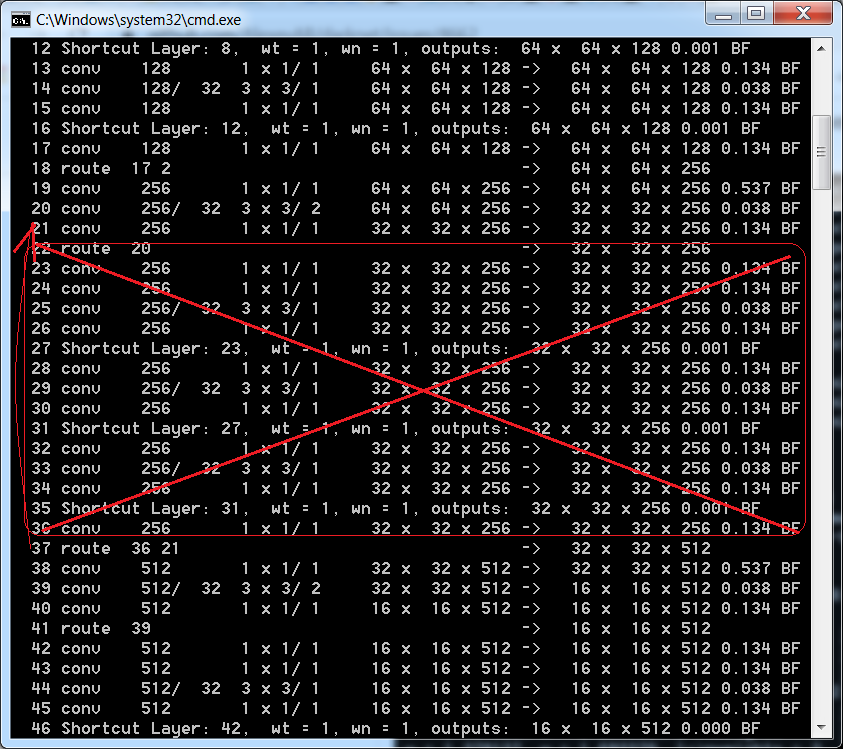

Modified .cfg is here darknet53-bifpn3.cfg.txt which look like this

Comparison on my dataset: all cases use the same training settings and MS COCO detector pre-trained weights; only the backbone differs.

| Model | [email protected] | [email protected] | precision(.7) | recall(.7) | inference time (416x416) |
| --- | --- | --- | --- | --- | --- |
| yolov3 | 91.79% | 63.09% | 0.95 | 0.71 | 13.25ms |
| csresnext50-panet | 92.80% | 64.16% | 0.96 | 0.67 | 15.61ms |
| darknet53-bifpn3(P3-5) | 91.74% | 63.48% | 0.95 | 0.71 | 15.25ms |

All networks have an SPP layer, inference time was tested on an RTX 2080Ti, and the BiFPN blocks use

weights_type=per_feature

weights_normalizion=relu

darknet53-bifpn3-spp got very similar performance to yolov3-spp. I think the reason could be:

- the ability of BiFPN*3(P3-5) is very close to that of the FPN in yolov3-spp
- my dataset is too small to show the difference between the two FPNs

The options for the next step could be:

- darknet53-bifpn*N(P3-5)

- darknet53-bifpn*N(P2-5)

- darknet53-bifpn*N(P3-7)

- csresnext50-panet-bifpn*N(Px-Px)

but recently my machines have been occupied by other tasks; I will try further experiments when the GPUs are free

@Kyuuki93

Yes,

- Try to use more than 3xP

- Also try to use 3-5 BiFPN blocks

since BiFPN is not very expensive

@Kyuuki93 @WongKinYiu I just fixed weights_normalizion=softmax for [shortcut] layer. https://github.com/AlexeyAB/darknet/commit/14172d42b68cf9c81ca1150020475f3e79c82fab#diff-0c461530f46c81f7013a6eaec297ebcfR135

weights_normalizion=relu remains the same as earlier.

@AlexeyAB

Start re-train ASFF models now.

@WongKinYiu

[shortcut] doesn't affect ASFF.

And [shortcut] weights_normalizion=softmax even doesn't affect BiFPN.

- default ASFF uses [scale_channels] scale_wh=1
- default BiFPN uses [shortcut] weights_normalizion=relu

@Kyuuki93 @WongKinYiu

Also I don't know which result of BiFPN (weights_normalizion=softmax) will be better, with max_val=0 or without it, i.e. between these 2 lines in these 2 places:

- https://github.com/AlexeyAB/darknet/blob/14172d42b68cf9c81ca1150020475f3e79c82fab/src/blas_kernels.cu#L705-L706

- https://github.com/AlexeyAB/darknet/blob/14172d42b68cf9c81ca1150020475f3e79c82fab/src/blas_kernels.cu#L781-L782

So you can test both cases on two small datasets.

@Kyuuki93 Hi,

Do you get any progress in BiFPN and BiFPN+ASFF?

Do you get any progress in BiFPN and BiFPN+ASFF?

Sorry, can't work these days because of the new year and the new virus in China ...

@Kyuuki93 @AlexeyAB I'm interested in implementing a BiFPN head on top of darknet in https://github.com/ultralytics/yolov3 using https://github.com/AlexeyAB/darknet/files/4048909/darknet53-bifpn3.cfg.txt and benchmarking on COCO.

EfficientDet paper https://arxiv.org/pdf/1911.09070.pdf mentions 3 summation methods: "scalar (per-feature), a vector (per-channel), or a multi-dimensional tensor (per-pixel)". It seems they select scalar/per-feature for their implementation. Then I assume to add multiple 4D tensors, say of shape 16x256x13x13, would we have 2 scalar weights if done 'per_feature', and 2 vector weights of shape 1x256x1x1 if done 'per_channel'?

Also, I'm surprised softmax on the weights imparts such a slowdown (1.3X) in their paper, have you guys also observed this?

@glenn-jocher

Then I assume to add multiple 4D tensors, say of shape 16x256x13x13, would we have 2 scalar weights if done 'per_feature', and 2 vector weights of shape 1x256x1x1 if done 'per_channel'?

Yes.

- per_feature (per input layer) - 1 float value for each input layer

- per_channel - 1 float value per each channel from each layer
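As a quick illustration of the two bullets above, the number of learnable fusion weights per weighted [shortcut] can be counted as follows. The function name is made up for this sketch; the counts follow directly from "1 float per input layer" and "1 float per channel from each layer".

```python
def shortcut_weight_count(weights_type, n_inputs, channels):
    """Number of learnable fusion weights for one weighted [shortcut] layer."""
    if weights_type == "none":
        return 0
    if weights_type == "per_feature":
        return n_inputs             # one scalar per input layer
    if weights_type == "per_channel":
        return n_inputs * channels  # one scalar per channel of each input layer
    raise ValueError(f"unknown weights_type: {weights_type}")


# Two inputs of shape 16x256x13x13, as in the question above.
print(shortcut_weight_count("per_feature", n_inputs=2, channels=256))  # 2
print(shortcut_weight_count("per_channel", n_inputs=2, channels=256))  # 512
```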

Also, I'm surprised softmax on the weights imparts such a slowdown (1.3X) in their paper, have you guys also observed this?

Only for training. And only for weighted-shortcut-layer.

As result:

- for training ~1-10%

- for detection == 0% (since normalization can be done during initialization) https://github.com/AlexeyAB/darknet/blob/653eceb1a72641cf978734dc15257f8273f1ac94/src/network.c#L1112-L1162
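The reason detection costs nothing extra can be sketched in a few lines: once training is finished the raw weights are constants, so they can be normalized a single time when the model is loaded. The class below is a hypothetical pure-Python illustration (flat lists instead of tensors), not the code in network.c.

```python
import math


def softmax(raw):
    """Numerically stable softmax over a list of floats."""
    peak = max(raw)
    exps = [math.exp(v - peak) for v in raw]
    total = sum(exps)
    return [e / total for e in exps]


class FusedShortcut:
    """Hypothetical inference-time shortcut: weights are normalized once."""

    def __init__(self, raw_weights):
        # Done at load time, so forward() never recomputes the softmax.
        self.weights = softmax(raw_weights)

    def forward(self, inputs):
        # inputs: one flat list of activations per input layer
        return [sum(w * x[i] for w, x in zip(self.weights, inputs))
                for i in range(len(inputs[0]))]


layer = FusedShortcut([0.0, 0.0])                # equal raw weights -> 0.5 each
print(layer.forward([[1.0, 2.0], [3.0, 4.0]]))   # [2.0, 3.0]
```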

Did you write a paper for Mosaic data augmentation?

@AlexeyAB ah I see. Actually no, I haven't had time to write a mosaic paper. It's too bad, because the results are pretty clear that it helps significantly.

Another thing I was wondering is I see in the BiFPN cfg only the head used weighted shortcuts, while the darknet53 backbone does not. If it helps the head then it may help the backbone as well no? Though to keep expectations in check, the EfficientDet paper only shows a pretty small +0.45 mAP bump from weighted vs non-weighted head.

One difference though is that currently the regular non-weighted shortcut layers effectively have weights=1 that sum to 2, 3 etc depending on the number of inputs. Isn't this a bit strange then that the head must be constrained to sum the weights to 1?

@glenn-jocher

If it helps the head then it may help the backbone as well no?

I have the same thoughts.

We can replace each shortcut with a weighted-shortcut. May be it will get another +0.5 AP.

Isn't this a bit strange then that the head must be constrained to sum the weights to 1?

Batch-normalization tries to do the same: it moves most values to roughly the range [0 - 1], which increases mAP, speeds up the training, makes training more stable...

https://medium.com/@ilango100/batch-normalization-speed-up-neural-network-training-245e39a62f85

@AlexeyAB ah of course, the BN after the Conv2d() following a shortcut will do this automatically, it completely slipped my mind. Good example. Ok, then I've taken @Kyuuki93's cfg, touched it up a bit (fixed the 'normalization' typo, reverted it to yolov3-spp.cfg default anchors and 80-class configuration, and implemented weighted shortcuts for all shortcut layers.)

I will experiment with a few different weighting techniques using my typical 27 epoch coco results and post the results later on this week hopefully. I suppose the tests should be:

- yolov3-spp.cfg results (default)

- yolov3-spp.cfg (all shortcuts = weighted shortcuts)

- darknet53-bifpn3.cfg (all shortcuts in backbone and head = weighted shortcuts)

@glenn-jocher Also try to use this cfg-file that is made more similar to https://github.com/xuannianz/EfficientDet/blob/ccc795781fa173b32a6785765c8a7105ba702d0b/model.py

- csresnext50-bifpn-optimal.cfg.txt

(or try to use BiFPN-head from this cfg-file with darknet53 backbone)

Just for fair comparison, set all parameters in [net] and [yolo] to the same values in all 4 models.

@AlexeyAB thanks for the cfg! I've been having some problems with the route layers on these csresnext50 cfgs, maybe I can just copy the BiFPN part and attach it to darknet53, ah but the shortcut layer numbers will be different... ok maybe I'll just try and use it directly. Let's see...

@glenn-jocher Yes, you should change route layers and the first from= in weighted [shortcut] layers. Tomorrow I will attach csdarknet53-bifpn-optimal.cfg

@AlexeyAB @glenn-jocher

I will get some free gpus in about 2~4 days.

If you want to try weighted shortcut in backbone, i can train it on imagenet.

@WongKinYiu @glenn-jocher

Yes, it will be nice.

Try to train 2 Classifiers with weighted-shortcut layers:

1. csdarknet53-ws.cfg.txt (relu)
2. csresnext50-ws.cfg.txt (relu)
3. csresnext50-ws-mi2.cfg.txt (softmax) - preferably for training (multi-input weighted shortcut-layers with softmax normalization)

Which are similar to https://github.com/WongKinYiu/CrossStagePartialNetworks/blob/master/imagenet/results.md with mosaic, cutmix, mish, label smooth

Try to train these 2 models 416x416 with my BiFPN module which is more like a module by reference: https://github.com/xuannianz/EfficientDet/blob/ccc795781fa173b32a6785765c8a7105ba702d0b/model.py

For comparison with: https://github.com/ultralytics/yolov3/issues/698#issuecomment-587378576

Can you add AP | AP50 | AP75 for CSPResNeXt-50 optimal without any features?

What is the Generic in this table?

Also what is the difference between RFB and RFBN? https://github.com/WongKinYiu/CrossStagePartialNetworks/blob/master/coco/results.md#cspresnext-50-optimal

- And why RFB degrades AP? Is it because RFB without batch-normalization, while RFBN with one?

Model | Size | fps | AP | AP50 | AP75 | APS | APM | APL | cfg | weight
-- | -- | -- | -- | -- | -- | -- | -- | -- | -- | --
PANet-(SPP, CIoU) | 512×512 | 44 | 42.4 | 64.4 | 45.9 | 23.2 | 45.5 | 55.3 | - | -
PANet-(SPP, RFB, CIoU) | 512×512 | - | 41.8 | 62.7 | 45.1 | 22.7 | 44.3 | 55.0 | - | -

Can you add AP | AP50 | AP75 for CSPResNeXt-50 optimal without any features?

still training.

What is the Generic in this table?

it means apply learning rate, momentum... which is searched by generic algorithm. https://github.com/ultralytics/yolov3/issues/392

Also what is the difference between RFB and RFBN? https://github.com/WongKinYiu/CrossStagePartialNetworks/blob/master/coco/results.md#cspresnext-50-optimal

And why RFB degrades AP? Is it because RFB without batch-normalization, while RFBN with one?

yes.

What is the Generic in this table?

it means apply learning rate, momentum... which is searched by generic algorithm. https://github.com/ultralytics/yolov3/issues/392

So it is about the optimal Hyperparameters that are obtained by the Genetic algorithm.

yes

@AlexeyAB @WongKinYiu ah yes, this is a "Genetic Algorithm", not "Generic". The current hyperparameters I found with it are tuned for yolov3-spp.cfg though, and it's unclear to me how well they generalize to other architectures/datasets. This could be one of the reasons why yolov3-spp trains well on ultralytics/yolov3 in comparison to others like csresnext-panet etc.

I completed the 3 runs (27 COCO epochs). Weighted shortcut only helped very slightly, and BiFPN actually worsened my results slightly. In general all 3 were extremely similar.

ID | Name | mAP@0.5 | mAP@0.5:0.95 | Comments
-- | -- | -- | -- | --
64 | yolov3-spp.cfg | 50.2 | 30.9 | baseline 63M
65 | yolov3-spp.cfg | 50.3 | 31.0 | weighted shortcuts 63M
66 | darknet53-bifpn3.cfg | 49.7 | 30.8 | weighted shortcuts 70M
67 | csdarknet53-bifpn-optimal.cfg | | | results pending 53M

I created a PyTorch weighted shortcut layer for this test. I did not normalize my shortcut weights with softmax or the "fast fusion" method from the paper, instead I used a simple sigmoid method to address the possible instability. I don't know if this difference affects things significantly or not. I suppose I should have just done a strict softmax. I can do that in the future.

```python
import torch
import torch.nn as nn


class weightedFeatureFusion(nn.Module):  # weighted sum of 2 or more layers https://arxiv.org/abs/1911.09070
    def __init__(self, layers):
        super(weightedFeatureFusion, self).__init__()
        self.n = len(layers) + 1  # number of layers
        self.layers = layers  # layer indices
        self.w = torch.nn.Parameter(torch.zeros(self.n))  # layer weights

    def forward(self, x, outputs):
        w = torch.sigmoid(self.w) * (2 / self.n)  # sigmoid weights (0-1)
        if self.n == 2:
            return x * w[0] + outputs[self.layers[0]] * w[1]
        elif self.n == 3:
            return x * w[0] + outputs[self.layers[0]] * w[1] + outputs[self.layers[1]] * w[2]
        else:
            raise ValueError('weightedFeatureFusion() supports up to 3 layer inputs, %g attempted' % self.n)
```
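To make the difference between the sigmoid scheme used in this module and the "fast fusion" normalization concrete, here is a framework-free sketch. The function names and the raw weight values are invented for illustration; the fast-fusion formula (ReLU followed by division by the sum plus a small epsilon, 1e-4) is the one described in the EfficientDet paper.

```python
import math


def sigmoid_weights(raw):
    """Scheme used in the module above: sigmoid(w) * (2 / n)."""
    n = len(raw)
    return [(1.0 / (1.0 + math.exp(-v))) * (2.0 / n) for v in raw]


def fast_fusion_weights(raw, eps=1e-4):
    """Fast normalized fusion: relu(w) / (sum(relu(w)) + eps)."""
    clipped = [max(v, 0.0) for v in raw]
    total = sum(clipped) + eps
    return [c / total for c in clipped]


raw = [0.8, -1.1]  # made-up raw weights after some training
for scheme in (sigmoid_weights, fast_fusion_weights):
    weights = scheme(raw)
    print(scheme.__name__, weights, "sum =", sum(weights))
```

With zero-initialized raw weights the sigmoid scheme gives every input a weight of 1/n, so the weights sum to 1 at the start; after training nothing constrains the sum, whereas fast fusion keeps it just below 1 whenever at least one raw weight is positive.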

UPDATE: @AlexeyAB I will run csdarknet53-bifpn-optimal.cfg to compare with above. I can't run csresnext50-bifpn-optimal.cfg yet due to the resnext bug with the grouped convolutions in my repo, but I'll try to get that sorted out this week or next week as well.

I looked at the actual fusion weights for yolov3-spp.cfg using the above process, and nothing seems out of the ordinary. They don't quite sum to 1 but not sure if it really matters.

```python
for x in list(model.parameters()):
    if len(x) == 2:
        print(torch.sigmoid(x).detach())
```

tensor([0.68042, 0.24355])

tensor([0.43275, 0.43802])

tensor([0.63490, 0.33800])

tensor([0.38976, 0.61641])

tensor([0.45976, 0.52418])

tensor([0.49271, 0.47769])

tensor([0.48430, 0.46104])

tensor([0.48992, 0.43543])

tensor([0.48655, 0.41508])

tensor([0.47568, 0.38939])

tensor([0.42831, 0.35085])

tensor([0.40784, 0.60700])

tensor([0.45018, 0.53021])

tensor([0.46398, 0.47831])

tensor([0.48237, 0.43581])

tensor([0.49701, 0.41102])

tensor([0.49047, 0.40242])

tensor([0.47470, 0.38543])

tensor([0.47063, 0.34986])

tensor([0.42593, 0.57570])

tensor([0.46498, 0.51103])

tensor([0.49873, 0.47298])

tensor([0.53441, 0.46724])

@AlexeyAB I tried csdarknet53-bifpn-optimal.cfg.txt, but I'm getting the same error related to the channel sizes as I saw in resnext. I don't think this a bug, I think I simply need to update my code per https://github.com/ultralytics/yolov3/issues/698#issuecomment-570427687, which I still haven't done yet.

Also there is a typo in 'weights_normalizion'. I also saw the new model is reduced in size, 53M params vs I think 63M for yolov3-spp.cfg, which is more in line with the efficientdet paper, as the old darknet53-bifpn cfg I used actually increased the parameter count.

UPDATE: I realized the error message is actually about the shortcut layers. When shortcutting layers -1 to 85 I get these shapes:

-1, torch.Size([16, 1024, 26, 26])

85, torch.Size([16, 512, 26, 26])

This error is coming from line 835 in your cfg:

@glenn-jocher

Also there is a typo in 'weights_normalizion'.

What do you mean?

I did not normalize my shortcut weights with softmax or the "fast fusion" method from the paper, instead I used a simple sigmoid method to address the possible instability.

In ASFF the Softmax works much better than ReLU/Logistic, so maybe we can try to train weights_normalizion=softmax in BiFPN too, since it doesn't affect inference speed

https://github.com/AlexeyAB/darknet/issues/4382#issuecomment-564823931

But logically, softmax should give a greater advantage to ASFF than to BiFPN (since in ASFF the multipliers are different for each inference and the sum of multipliers is different every time, so they should be normalized dynamically). So this is not a priority.

Weighted shortcut only helped very slightly

Since the new shortcut-layer is not only weighted but also multi-input, we can fuse all shortcut outputs with the same resolution before each subsampling.

I will try to make such a model and compare FPS.

I also saw the new model is reduced in size, 53M params vs I think 63M for yolov3-spp.cfg

I will run csdarknet53-bifpn-optimal.cfg to compare with above. I can't run csresnext50-bifpn-optimal.cfg yet due to the resnext bug with the grouped convolutions in my repo, but I'll try to get that sorted out this week or next week as well.

I used grouped-conv in the csdarknet53-bifpn-optimal.cfg model.

UPDATE: I realized the error message is actually about the shortcut layers. When shortcutting layers -1 to 85 I get these shapes:

-1, torch.Size([16, 1024, 26, 26])

85, torch.Size([16, 512, 26, 26])

This error is coming from line 835 in your cfg:

This is a PRN (partial residual connections) when two tensors with different number of channels are added elementwise.

@WongKinYiu

About my suggestion:

Since new shortcut-layer not only weighted, but also multi-input, then we can fuse all shortcut outputs with the same resolution

I added csresnext50-ws-mi2.cfg.txt - preferably for training (multi-input weighted shortcut-layers with softmax normalization)

There: https://github.com/AlexeyAB/darknet/issues/4662#issuecomment-587490873

So may be better to train:

csresnext50-ws.cfg.txt

and

csresnext50-ws-mi2.cfg.txt

without 1. csdarknet53-ws.cfg.txt if you don't have enough GPUs.

On RTX 2070:

- csresnext50.cfg.txt - 68.0 FPS
- csresnext50-ws.cfg.txt - 67.5 FPS
- csresnext50-ws-mi2.cfg.txt - 67.5 FPS

@AlexeyAB

OK.

This is a PRN (partial residual connections) when two tensors with different number of channels are added elementwise.

@AlexeyAB ah I see, PRN. Output always matches input shape. Ok perfect, if I can fix this then cspresnext and your new darknet53 cfgs will both work. For the 3 cases:

- Case A larger 'from' should be maxpooled to fit? Or perhaps downsampled using nearest value? Or we could also simply slice the feature from 0 to the same size and input (we lose some data this way).

- Case B @WongKinYiu already explained this to me, the solution is to zero pad (all zeros on the right side, following the existing features) the smaller 'from' feature to match the larger one right?

- Case C is already handled correctly.

@glenn-jocher

Case A larger 'from' should be maxpooled to fit? Or perhaps downsampled using nearest value? Or we could also simply slice the feature from 0 to the same size and input (we lose some data this way).

There is no maxpool/downsampling. Just take the first C channels.

Or we could also simply slice the feature from 0 to the same size and input (we lose some data this way).

Yes.

@AlexeyAB @WongKinYiu I've updated my shortcut layer now to correctly handle all new situations: weighted and non-weighted, from smaller features (by zero pad right) and from larger features (by slicing), for any number of input layers.
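The channel rule agreed on above (the output keeps the shape of the main input, a larger `from` feature is sliced to its first C channels, a smaller one behaves as if zero-padded on the right) can be shown with a toy example. This is an unweighted sketch with one value per channel; the function name is hypothetical and spatial dimensions are omitted.

```python
def add_with_channel_fit(main, other):
    """Add `other` to `main`; the output keeps the channel count of `main`.

    Each argument is a list with one value per channel (spatial dims omitted).
    """
    out = list(main)
    usable = min(len(main), len(other))  # extra channels of `other` are dropped
    for c in range(usable):
        out[c] += other[c]
    return out  # channels of `main` beyond `usable` stay unchanged (zero-pad case)


print(add_with_channel_fit([1, 1, 1, 1], [10, 20]))    # smaller from: [11, 21, 1, 1]
print(add_with_channel_fit([1, 1], [10, 20, 30, 40]))  # larger from:  [11, 21]
```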

I should be able to train the 2 new cfg files now. Do these parameter and layer counts seem correct?

- yolov3-spp.cfg (for reference): 225 layers, 6.29987e+07 parameters

- csdarknet53-bifpn-optimal.cfg: 338 layers, 4.99935e+07 parameters

- csresnext50-bifpn-optimal.cfg: 299 layers, 4.25729e+07 parameters

@glenn-jocher great!

I noticed that the shortcut zeropad operations in my implementation are slower (about 100X slower on CPU) than the slicing operations. The times (seconds) below are for 1000 shortcut operations for darknet53-bifpn (for different shortcut layers). I tried a different method: instead of padding the smaller 'from' feature, I added the 'from' feature to a sliced input, which should be mathematically equivalent. I didn't see any GPU speedup though, so this looks like it's not a primary cause of slowdown with these new models, but the change did help reduce GPU RAM usage a bit.

zeropad 0.2164621353149414

zeropad 0.6748669147491455

zeropad 2.6399528980255127

slice 0.0072019100189208984

slice 0.006873130798339844

slice 0.006844043731689453

slice 0.006783962249755859

slice 0.007230997085571289

zeropad 0.1068871021270752

zeropad 0.6746640205383301

slice 0.0068128108978271484

zeropad 0.1016230583190918

The new models are training now, should be done in a day or two. Unfortunately they are quite a bit slower than yolov3-spp, about 2.5X slower (22 min epoch vs 50-60 min epoch) with multi-scale. My command is here.

python3 train.py --data coco2014.data --img-size 416 608 --epochs 27 --batch 12 --accum 6 --weights '' --device 0 --cfg csdarknet53-bifpn-optimal.cfg --nosave --name 67 --multi

Do these parameter and layer counts seem correct?

Yes.

@AlexeyAB I'm seeing poor results here after 15 epochs for csresnext50-bifpn-optimal.cfg (results67 below), so I've cancelled the training.

You have the anchor order for both csresnext50-bifpn-optimal.cfg and csdarknet53-bifpn-optimal.cfg the same as yolov3-spp.cfg, with mask=0,1,2 for the last output layer. Perhaps this order should be reversed as in darknet53-bifpn3.cfg?

I've also separately confirmed that the majority of the slowdown is due to the grouped convolutions. When I set groups=1 for all convolutions in csdarknet53-bifpn-optimal.cfg my parameter count increases (from 50M to 57M), but the training speed improves from 51min/epoch to 31min/epoch.
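The parameter side of this trade-off follows from the standard weight count of a grouped 2-D convolution, k × k × (C_in / groups) × C_out. The sketch below only illustrates that formula for an arbitrary 256-to-256 3x3 layer (bias and batch-norm terms excluded, function name invented); it says nothing about speed, which, as reported above, can move in the opposite direction.

```python
def conv_weight_count(c_in, c_out, kernel, groups=1):
    """Weights in a 2-D convolution (bias and batch-norm terms excluded)."""
    if c_in % groups or c_out % groups:
        raise ValueError("channels must be divisible by groups")
    return (c_in // groups) * c_out * kernel * kernel


for g in (1, 2, 8, 32):
    print(f"groups={g}: {conv_weight_count(256, 256, 3, groups=g)} weights")
```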

ID | Name | mAP@0.5 | mAP@0.5:0.95 | Comments
-- | -- | -- | -- | --
64 | yolov3-spp.cfg | 50.2 | 30.9 | baseline 63M
65 | yolov3-spp.cfg | 50.3 | 31.0 | weighted shortcuts 63M
66 | darknet53-bifpn3.cfg | 49.7 | 30.8 | weighted shortcuts 70M
67 | csdarknet53-bifpn-optimal.cfg | - | - | cancelled 50M
69 | csdarknet53-bifpn-optimal.cfg | 41.5 | 23.8 | groups=1 57M
70 | csresnext50-bifpn-optimal.cfg | 44.0 | 25.9 | groups=1 94M

@glenn-jocher

I'm seeing poor results here after 15 epochs for csresnext50-bifpn-optimal.cfg (results67 below), so I've cancelled the training.

Is it better than darknet53-bifpn3.cfg ?

It would be nice to compare with darknet53-bifpn3.cfg so we will understand whether we are moving in the right direction.

What does it mean result64 - 67 on your charts?

I've also separately confirmed that the majority of the slowdown is due to the grouped convolutions. When I set groups=1 for all convolutions in csdarknet53-bifpn-optimal.cfg my parameter count increases (from 50M to 57M), but the training speed improves from 51min/epoch to 31min/epoch.

Try to train csresnext50-bifpn-optimal.cfg with groups=1

You have the anchor order for both csresnext50-bifpn-optimal.cfg and csdarknet53-bifpn-optimal.cfg the same as yolov3-spp.cfg, with mask=0,1,2 for the last output layer.

Yes.

Perhaps this order should be reversed as in darknet53-bifpn3.cfg?

No.

@AlexeyAB I've added a table to https://github.com/AlexeyAB/darknet/issues/4662#issuecomment-589316861 to explain the run numbers. darknet53-bifpn3.cfg is there as run 66.

Ok, yes I will re-run csdarknet53-bifpn-optimal.cfg with groups=1 for all convolutions, and I will try csresnext50-bifpn-optimal.cfg with groups=1 after that.

@glenn-jocher

Case A larger 'from' should be maxpooled to fit? Or perhaps downsampled using nearest value? Or we could also simply slice the feature from 0 to the same size and input (we lose some data this way).

We do not get all the information from the from=

But in the main path we don't lose any information.

So actually we don't lose any information.

I tried a different method: instead of padding the smaller 'from' feature, I added the 'from' feature to a sliced input, which should be mathematically equivalent.

You should not crop layer -1 otherwise you will lose information and the accuracy will drop dramatically.

Just note that A and B are not equivalent:

@AlexeyAB yes don't worry, I'm 99% sure I'm handling the new shortcuts correctly. The change I made was to Case B. Rather than zero-pad from as we first talked about, I add from to a sliced input. Only the sliced values in input are affected, the rest of input remains unchanged (same as adding zeros to it).

The original pad pseudocode would be:

output = input * weight0 + [from , zero_padding] * weight1

And the updated pseudocode that avoids padding looks like this, where ch is the number of from channels.

output = input * weight0

output[:, 0:ch] = output[:, 0:ch] + from * weight1

The actual code looks like this.

```python
# Fusion
nc = x.shape[1]  # input channels
for i in range(self.n - 1):
    a = outputs[self.layers[i]]  # feature to add
    ac = a.shape[1]  # feature channels
    dc = nc - ac  # delta channels

    # Adjust channels
    if dc > 0:  # slice input
        x[:, :ac] = x[:, :ac] + (a * w[i + 1] if self.weight else a)
    elif dc < 0:  # slice feature
        x = x + (a[:, :nc] * w[i + 1] if self.weight else a[:, :nc])
    else:  # same shape
        x = x + (a * w[i + 1] if self.weight else a)
```
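The claim that the padded and the sliced pseudocode variants give the same result can be checked on a toy example. The sketch below uses plain lists with one value per channel and assumes the `from` feature has no more channels than the input; the function names and numbers are made up.

```python
def fuse_by_padding(inp, frm, w0, w1):
    """output = input * weight0 + [from, zero_padding] * weight1"""
    padded = frm + [0.0] * (len(inp) - len(frm))
    return [x * w0 + p * w1 for x, p in zip(inp, padded)]


def fuse_by_slicing(inp, frm, w0, w1):
    """output = input * weight0; output[:ch] += from * weight1"""
    out = [x * w0 for x in inp]
    for c, f in enumerate(frm):
        out[c] = out[c] + f * w1
    return out


inp, frm = [1.0, 2.0, 3.0, 4.0], [10.0, 20.0]
assert fuse_by_padding(inp, frm, 0.5, 0.25) == fuse_by_slicing(inp, frm, 0.5, 0.25)
print(fuse_by_slicing(inp, frm, 0.5, 0.25))  # [3.0, 6.0, 1.5, 2.0]
```

Only the first `len(frm)` channels receive a contribution from `from`; the remaining channels are just the weighted input, which is exactly what adding zeros would produce.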

@AlexeyAB @WongKinYiu runs are all done. None of the updates helped the results unfortunately. The higher the groupings the fewer parameters, but also the worse the results apparently.

I only did 10% COCO training here, so it's possible full training might return different results, but typically 10% training results correlate fairly well with full training results. As a reference, run 64 achieves 50.2 mAP@0.5 at 10% of training (table below), and 61.[email protected] mAP with full training.

I'm pretty confused then. If the bifpn cfgs are correct, then we are unable to replicate the improvement in the literature, at least on the ultralytics repo. But with this darknet repo, grouped convolutions _do_ show improvement?

ID | Name | mAP@0.5 | mAP@0.5:0.95 | Comments
-- | -- | -- | -- | --
64 | yolov3-spp.cfg | 50.2 | 30.9 | baseline 63M
65 | yolov3-spp.cfg | 50.3 | 31.0 | weighted shortcuts 63M
66 | darknet53-bifpn3.cfg | 49.7 | 30.8 | weighted shortcuts 70M
67 | csdarknet53-bifpn-optimal.cfg | - | - | cancelled 50M
69 | csdarknet53-bifpn-optimal.cfg | 41.5 | 23.8 | groups=1 57M
70 | csresnext50-bifpn-optimal.cfg | 44.0 | 25.9 | groups=1 94M

@glenn-jocher Hello,

I started training them a few days ago; results will come after about 4 weeks.

@glenn-jocher @WongKinYiu

If the bifpn cfgs are correct, then we are unable to replicate the improvement in the literature, at least on the ultralytics repo.

Perhaps automatic differentiation in Ultralytics/Pytorch incorrectly performs back propagation for normalization in your weighted-shortcut layers.

I used the same gradient as in the ASFF: https://arxiv.org/pdf/1911.09516v2.pdf

https://github.com/AlexeyAB/darknet/blob/6fb817f68b471da21c04f8bd5ffef0d3c4d67c30/src/blas.c#L181-L187

Then it performs such back propagation:

https://github.com/AlexeyAB/darknet/blob/6fb817f68b471da21c04f8bd5ffef0d3c4d67c30/src/blas.c#L216-L217

I will also wait for the results of ASFF(softmax), which shows improvement for small custom datasets: https://github.com/AlexeyAB/darknet/issues/3874#issuecomment-561064425

(just this is strange that ASFF + RFB(batchnorm=0) shows the best AP75 accuracy, while RFB(batchnorm=1) degrades AP75)

But on MSCOCO the ASFF(softmax) leads to NaN: https://github.com/AlexeyAB/darknet/issues/3772#issuecomment-580518150

@AlexeyAB I forgot you were coding gradients yourself, that's very impressive!

Incorrect gradients can always be an issue. I've seen problems arise with in-place operations (pytorch can't backprop these properly), but I made sure to avoid these in my shortcut module, and I also tested the new setup with anomaly detection once to check, by setting torch.autograd.set_detect_anomaly(True) before running (no anomalies were found). But the new shortcut operations actually help a bit on yolov3-spp.cfg (see table below), so at least for _same shape weighted shortcut_ operations everything is going well.

ID | Name | mAP@0.5 | mAP@0.5:0.95 | Comments
-- | -- | -- | -- | --
64 | yolov3-spp.cfg | 50.2 | 30.9 | baseline 63M
65 | yolov3-spp.cfg | 50.3 | 31.0 | weighted shortcuts 63M

The main mAP decreases appear to come from groups>1 in the convolutions, but also from the new heads. I suppose a simple test would be for me to take yolov3-spp.cfg, and change all the convolutions to groups=2, groups=4, groups=8 and add these results to the table? That would isolate the effect of increased groups.

@WongKinYiu

I fixed gradient for BiFPN and weighted-shortcut.

So try to re-run training of these models with new code: https://github.com/AlexeyAB/darknet/issues/4662#issuecomment-587490873

@WongKinYiu

- 3 fixes for BiFPN / weighted-[shortcut]: https://github.com/AlexeyAB/darknet/commit/cfa40fe890d0fc7d100cfec2ee4976bf9838cb30 and https://github.com/AlexeyAB/darknet/commit/3cb9125b95a860694cfafa5b3408aa6123749e1e and https://github.com/AlexeyAB/darknet/commit/f6baa62c9b6151b9f615a1e56434d237553fd4af

@glenn-jocher Did you try to use ASFF on ultralytics/Pytorch? https://github.com/ruinmessi/ASFF

The latest fix accelerates the backpropagation of BiFPN.

@AlexeyAB no, I have not tried YOLOv3-ASFF. I went through the https://github.com/ruinmessi/ASFF repo but couldn't find a cfg. Do you have one?

@glenn-jocher

As in most PyTorch projects, the model is hardcoded in Python: https://github.com/ruinmessi/ASFF/blob/master/models/yolov3_asff.py

There is cfg file for Darknet with ASFF and RFB: yolov3-spp-asff-rfb.cfg.txt

More: https://github.com/AlexeyAB/darknet/issues/4382#issuecomment-567010280

@AlexeyAB

csresnext50-ws.cfg.txt: 78.7% top-1, 94.7% top-5.

@WongKinYiu Thanks! So this is lower than csresnext50-omega.cfg 79.8% | 95.2%

@AlexeyAB yes, but it seems you add some constraint of weighted shortcut in new version, maybe it would get better results.

@WongKinYiu Yes, may be. But the main difference, limiting weight_updates makes training more stable at the very beginning, then it has almost no effect.

Please, share weights-file of csresnext50-ws.cfg.txt, I will check the weights of shortcut-layers.

@AlexeyAB here you are.

@WongKinYiu

@AlexeyAB yes, but it seems you add some constraint of weighted shortcut in new version, maybe it would get better results.

About the bad accuracy of csresnext50-ws.cfg.txt

Yes, before the commits on Mar 14, 2020 https://github.com/AlexeyAB/darknet/commit/fb4da5ce5fe8339255a09974ed983fa44c3afbe9, instability and a too high delta at the very beginning of training spoiled the shortcut weights, so the [shortcut] layer-27 is disabled and doesn't work at all.

Only the CSP-connection saved the situation, otherwise the accuracy would be 0.

So with new Darknet code training should be much better.

So this part of code doesn't work at all.

It is interesting to see what the results will be for csresnext50-ws-mi2.cfg.txt

Is this model still training?

yes, currently 1173k iterations.

@AlexeyAB

csresnext50-ws-mi2.cfg.txt: 79.9 top-1, 95.3 top-5.

@WongKinYiu It seems this is the new top-result: https://github.com/WongKinYiu/CrossStagePartialNetworks/blob/master/imagenet/results.md

Can you share weights file? I will check weights of shortcut.

@AlexeyAB

of course, csresnext50-ws-mi2-omega_final.weights

@WongKinYiu I think we can combine csresnext50-ws-mi2 + csresnext50sub and train with the new repo.

@WongKinYiu

csresnext50-ws-mi2.cfg.txt: 79.9 top-1, 95.3 top-5.

Some of the weights are negative, but I just looked: this model uses softmax normalization, so negative values are not a problem for it.
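Why negative raw weights are harmless under softmax but not under a ReLU-based normalization can be seen from a short numeric sketch. The raw values are invented, the function names are hypothetical, and the ReLU variant follows the fast-fusion form (clip at zero, divide by the sum plus a small epsilon), which is an assumption about the normalization rather than a copy of Darknet's code.

```python
import math


def relu_normalize(raw, eps=1e-4):
    """Clip negatives to zero, then divide by the sum (plus eps)."""
    clipped = [max(v, 0.0) for v in raw]
    total = sum(clipped) + eps
    return [c / total for c in clipped]


def softmax_normalize(raw):
    """Numerically stable softmax: every output is strictly positive."""
    peak = max(raw)
    exps = [math.exp(v - peak) for v in raw]
    total = sum(exps)
    return [e / total for e in exps]


raw = [-0.7, -0.2, 0.4]        # made-up raw weights, two of them negative
print(relu_normalize(raw))     # the two negative inputs get weight 0.0
print(softmax_normalize(raw))  # every input keeps a positive weight
```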

@WongKinYiu

I created 2 new models:

- 40 FPS (618x618): csresnext50sub + csresnext50-ws-mi2 - csresnext50sub-mi2.cfg.txt
- 34 FPS (618x618): the same, just it uses route+maxout instead of weighted-shortcut - csresnext50sub-mo.cfg.txt

csdarknet53-ws: 65.6 top-1, 87.3 top-5.

@AlexeyAB

I created 2 new models:

- 40 FPS (618x618): csresnext50sub + csresnext50-ws-mi2 - csresnext50sub-mi2.cfg.txt
- 34 FPS (618x618): the same, just it uses route+maxout instead of weighted-shortcut - csresnext50sub-mo.cfg.txt

Do you mean train with width=608 and height=608?

@WongKinYiu No, train as usual 256x256.

608x608 it's just to know the final speed of the backbone of detector.

PS,

look at: https://github.com/AlexeyAB/darknet/issues/5079

OK, start training.

@AlexeyAB Hello,

i use 27 Feb repo for training bifpn model. 10a586140c99a905a504e6813b7cc629ff50ea42

but if i use latest code to test the performance, it gets totally wrong result.

is it because new repo use leaky relu instead of relu? d11caf486da34a562347db53c140900aef42140f

also, the inference speed of bifpn seems become very slow in latest repo.

update: csresnext50-bifpngamma: 512x512, 36.8/58.2/39.4.

@WongKinYiu Hi,

It seems - yes, this is the reason, if there are negative or very low weights. Try to set relu instead of lrelu temporarily for testing.

(also I found that I missed fix relu to lrelu in these places: https://github.com/AlexeyAB/darknet/commit/d6181c67cd72468a3b8c658586452a5c6fa8fcbf and https://github.com/AlexeyAB/darknet/commit/2614a231f06894c1a89de0023735deb846958343 )

update: csresnext50-bifpngamma: 512x512, 36.8/58.2/39.4.

Can you share cfg/weights, I will check negative weights and speed?

Also try to check the training time of lrelu and softmax. If the training speed is ~ the same, then it may be better to use softmax, since during inference (Detection) weights normalization will be applied once at the initialization step https://github.com/AlexeyAB/darknet/blob/2614a231f06894c1a89de0023735deb846958343/src/network.c#L1101-L1194

so detection will have the same speed for none vs relu vs softmax

For example, models csresnext50-ws-mi2.cfg.txt and csresnext50sub-mi2.cfg.txt from this topic use softmax weights normalization
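The point about normalization being applied once at initialization can be sketched in Python as follows. This is only an illustration under assumptions: `FusedShortcut` and `relu_normalize` are hypothetical names, and the real logic lives in the C code linked above.

```python
def relu_normalize(raw, eps=1e-4):
    """Clamp negatives to zero, then divide by the sum (eps avoids 0/0)."""
    pos = [max(w, 0.0) for w in raw]
    total = sum(pos) + eps
    return [p / total for p in pos]

class FusedShortcut:
    """Hypothetical weighted shortcut whose weights are normalized once."""

    def __init__(self, raw_weights, normalize=None):
        # Normalization runs here, at load time, not inside forward().
        self.weights = normalize(raw_weights) if normalize else list(raw_weights)

    def forward(self, inputs):
        # Same multiply-add per element whatever normalization was chosen.
        length = len(inputs[0])
        return [sum(w * x[i] for w, x in zip(self.weights, inputs))
                for i in range(length)]

inputs = [[4.0, 8.0], [0.0, 4.0]]            # two input layers, two values each
plain = FusedShortcut([1.0, 3.0])             # weights used as-is ("none")
fused = FusedShortcut([1.0, 3.0], relu_normalize)
print(plain.forward(inputs))
print(fused.forward(inputs))
```

Because `forward()` only ever sees ready-made weights, the per-inference cost does not depend on which normalization was used during training.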

@WongKinYiu

Yes, the BiFPN module in this weights-file is totally broken, since most of the weights are negative, i.e. they don't pass any information through weighted-[shortcut] layers.

But the network works because there are pass-through [route] layers before [yolo] layers.

Ways to solve this problem:

- for weights_normalizion=relu use the latest commit, which uses lrelu instead of relu, constrains deltas by [-1;+1] and you should add burnin_update=2 for each weighted-[shortcut] layer in cfg-file

- just use weights_normalizion=softmax
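A rough Python sketch of why plain relu normalization blocks a layer whose weights are all negative, and how a floor-style lrelu plus delta clipping avoids that. The exact form of lrelu in Darknet is not given in this thread, so the clamp to a small positive floor (0.001 here) is an assumption, as are all function names.

```python
def relu_normalize(raw, eps=1e-4):
    """relu(w) / (sum(relu(w)) + eps): negative weights become exactly 0."""
    pos = [max(w, 0.0) for w in raw]
    total = sum(pos) + eps
    return [p / total for p in pos]

def lrelu_normalize(raw, floor=0.001, eps=1e-4):
    """Assumed lrelu: clamp each weight to a small positive floor first."""
    pos = [w if w > floor else floor for w in raw]
    total = sum(pos) + eps
    return [p / total for p in pos]

def clip_delta(delta, limit=1.0):
    """Constrain a weight update to [-limit, +limit]."""
    return max(-limit, min(limit, delta))

raw = [-0.4, -0.9, -0.1]          # hypothetical "spoiled" shortcut weights
relu_w = relu_normalize(raw)      # every input is switched off
lrelu_w = lrelu_normalize(raw)    # every input still contributes
print(relu_w, lrelu_w, clip_delta(5.0))
```

With relu, the all-negative case yields zero weights and no signal passes; with the assumed floor, each input keeps roughly an equal share, so the layer can still recover during training.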

@WongKinYiu Hi,

Can you show ~ remaining time for models?

- efficientnet-lite3.cfg https://github.com/AlexeyAB/darknet/issues/4447#issuecomment-602907153

- BiFPN-ASFF csresnext50sub-spp-asff-bifpn-rfb-db.cfg.txt https://github.com/WongKinYiu/CrossStagePartialNetworks/issues/6#issuecomment-601886281

- csresnext50sub-mi2.cfg.txt https://github.com/AlexeyAB/darknet/issues/4662#issuecomment-601973917

- csresnext50sub-mo.cfg.txt https://github.com/AlexeyAB/darknet/issues/4662#issuecomment-601973917

- csdarknet53-omega-mi.cfg.txt https://github.com/AlexeyAB/darknet/issues/5117#issuecomment-605460850

- csdarknet53-omega-mi-db.cfg.txt https://github.com/AlexeyAB/darknet/issues/5117#issuecomment-605460850

@AlexeyAB

- efficientnet-lite3: ~470 (459k/1200k)

- csresnext50sub-spp-asff-bifpn-rfb-db: no info (21 March repo, 49k/550k)

- csresnext50sub-mi2: no info (21 March repo, 480k/1200k)

- csresnext50sub-mo: no info (21 March repo, 440k/1200k)

- csdarknet53-omega-mi: ~480 (194k/1200k)

- csdarknet53-omega-mi-db: ~500 (190k/1200k)

@WongKinYiu Thanks!

Can you check intermediate Top1/5 accuracy of models csdarknet53-omega-mi and csdarknet53-omega-mi-db ?

Depending on which model shows the higher accuracy, you can train one of these models (without or with DropBlock) - I implemented two Classification models with Input Pyramid (for fusing Spatial and Semantic information):

They may degrade the accuracy of the classifier, but should improve the accuracy of the detector.

Only -2.5% FPS.

https://arxiv.org/abs/1912.00632v2

The image pyramid is obtained by downsampling(linear interpolate) the input image into four levels with a factor of 2.

In these models: The image pyramid is obtained by downsampling(linear interpolate) the input image into five levels with a factor of 2.
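For reference, a minimal Python sketch of building such a five-level pyramid with a factor of 2. It uses a 2x2 mean as a stand-in for the downsampling step and counts the original image as the first level; both choices are assumptions for illustration, not what the cfg files or the paper necessarily do.

```python
def downsample2x(img):
    """Halve a 2D image (list of rows) by averaging each 2x2 block."""
    h, w = len(img), len(img[0])
    return [[(img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4.0
             for x in range(0, w - 1, 2)]
            for y in range(0, h - 1, 2)]

def build_pyramid(img, levels=5):
    """Return [img, img/2, img/4, ...] with `levels` entries in total."""
    pyramid = [img]
    for _ in range(levels - 1):
        pyramid.append(downsample2x(pyramid[-1]))
    return pyramid

# Small single-channel test image; a real input would be e.g. 256x256x3.
img = [[float(x + y) for x in range(32)] for y in range(32)]
pyramid = build_pyramid(img)
sizes = [(len(p), len(p[0])) for p in pyramid]
print(sizes)
```

Each level would then be injected into the backbone at the stage with the matching resolution.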

@AlexeyAB input pyramid looks very interesting. I just had an idea. Instead of downsampling by a linear interpolation, perhaps one could simply reshape the pyramid inputs by moving pixels around.

For example instead of downsampling (3,512,512) - > (3,256,256) we might be able to reshape (3,512,512) -> (12, 256, 256) with no information loss. I suppose the order would be (rgb,512,512) -> (rrrrggggbbbb,256,256), and it might generalize to any downsampling operation, perhaps in place of the maxpool layers for example in yolov3-tiny or the stride-2 convolutions in yolov3.

I'm not aware of any op that does this currently so it would have to be custom built somehow. What do you think?
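The reshape described here is usually called space-to-depth. A minimal pure-Python sketch, with hypothetical function names and a tiny 3x4x4 input instead of 3x512x512, shows the (rgb) -> (rrrrggggbbbb) channel order and that the operation is lossless because it can be inverted exactly:

```python
def space_to_depth(x, block=2):
    """x: nested list [C][H][W] -> [C*block*block][H//block][W//block]."""
    c, h, w = len(x), len(x[0]), len(x[0][0])
    assert h % block == 0 and w % block == 0
    out = []
    for ch in range(c):                 # keep each colour's planes adjacent
        for dy in range(block):
            for dx in range(block):
                out.append([[x[ch][y + dy][xx + dx]
                             for xx in range(0, w, block)]
                            for y in range(0, h, block)])
    return out

def depth_to_space(y, block=2):
    """Exact inverse of space_to_depth."""
    c_out = len(y) // (block * block)
    h, w = len(y[0]) * block, len(y[0][0]) * block
    x = [[[None] * w for _ in range(h)] for _ in range(c_out)]
    for idx, plane in enumerate(y):
        ch, rem = divmod(idx, block * block)
        dy, dx = divmod(rem, block)
        for i, row in enumerate(plane):
            for j, v in enumerate(row):
                x[ch][i * block + dy][j * block + dx] = v
    return x

# Tiny 3-channel 4x4 "image" with unique values per pixel.
img = [[[ch * 100 + r * 4 + c for c in range(4)] for r in range(4)]
       for ch in range(3)]
packed = space_to_depth(img)
shape = (len(packed), len(packed[0]), len(packed[0][0]))
restored = depth_to_space(packed)
print(shape)
```

The first four output planes all come from the first input channel, the next four from the second, and so on.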

@glenn-jocher

About losing information:

- It’s not definitely proven what is better: maxpool-stride=2, conv3x3-stride=2, reshape-stride=2, ... for Subsampling for Semantic information.

- reshape-stride=2 + conv1x1 is very similar to conv3x3-stride=2 - information is not lost in both cases due to 3x3 kernel-size. So we already use conv3x3-stride=2 in all our networks.

- in maxpool-stride=2 we lose some information, but to get coordinates we shouldn't lose spatial information, but to get objectness/classes we should lose spatial information to achieve shift-invariance. So in the csresnext50sub-mi2.cfg.txt we already use 2 branches with conv3x3-stride=2 and with maxpool-stride=2 https://github.com/AlexeyAB/darknet/issues/4662#issuecomment-601908931

Yes, there are 2 reshape layers in the Darknet:

[reorg] (pjreddie's version - it is broken https://github.com/opencv/opencv/pull/9705#discussion_r141268864 ) and new [reorg3d] (slightly fixed version - but I'm not sure that it is correct for all input parameters, it is better to implement it from scratch) that was used in Yolo v2

I think reshape is more suitable for Semantic information rather than for Spatial information, but you can check it by training 2 models with Input Pyramid using [local_avgpool] and [reorg3d].

Input Pyramid performs a different task than preserving information about details. The Input Pyramid is used to bring more Spatial information (even with local avgpool truncation) than is contained in Semantic information.

@AlexeyAB

csdarknet53-omega-mi: 240k epoch, 34.4 top-1, 60.9 top-5.

csdarknet53-omega-mi-db: 240k epoch, 32.3 top-1, 58.1 top-5.

@WongKinYiu If you want you can train csdarknet53-omega-mi-ip.cfg.txt

@AlexeyAB currently 24k epoch.

@WongKinYiu

csdarknet53-omega-mi: 240k epoch, 34.4 top-1, 60.9 top-5.

csdarknet53-omega-mi-db: 240k epoch, 32.3 top-1, 58.1 top-5.

Try to train this model csdarknet53-omega-mi-db (with DropBlock) from the beginning with the latest Darknet version, I fixed and checked DropBlock: https://github.com/AlexeyAB/darknet/commit/a9bae4f0326b4a841756d5b1a6ed37821f6a9467

Also try to stop training of csresnext50sub-spp-asff-bifpn-rfb-db.cfg.txt and resume it with the new code: https://github.com/WongKinYiu/CrossStagePartialNetworks/issues/6#issuecomment-601886281

@AlexeyAB that's a very good point about reshaping layers that the regression may be more sensitive to small positional changes of the data than the obj/cls. I've always been worried about the loss of small spatial information as the image downsizes, especially for the smallest objects in P3.

That's also a very good point that downsampling operations are all 3x3 kernels, so they overlap enough that not much information should be lost. I'll try to experiment a bit with injecting the input pyramid in different areas.

One interesting thing about the Samsung paper was that they used a 7x7 kernel for the first convolution layer. I see this does not significantly affect the parameter count nor the FLOPS, but I also see the mixnet/efficientnet guys do not do this (they have 3x3 on conv0 like dn53), and I'm sure they must have experimented with it.
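As a quick check of the parameter-count remark, here is a small Python sketch of the weight count of a first convolution layer. The 3 input channels and 32 filters are assumptions chosen to resemble a darknet53-style conv0, and `conv_params` is a hypothetical helper.

```python
def conv_params(kernel, c_in, c_out, bias=False):
    """Number of learnable weights in a standard 2D convolution."""
    return kernel * kernel * c_in * c_out + (c_out if bias else 0)

# Assumed first layer: RGB input, 32 filters, no bias.
p3 = conv_params(3, 3, 32)
p7 = conv_params(7, 3, 32)
print(p3, p7, p7 - p3)
```

The difference is a few thousand weights, which is small next to the millions of parameters in the rest of a backbone; the compute cost of the layer grows by the same k*k ratio multiplied by the output resolution.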

@glenn-jocher

One interesting thing about the Samsung paper was that they used a 7x7 kernel for the first convolution layer.

conv 5x5-7x7-9x9 should be used for stride=2 rather than for the 1st layer.

mixnet guys said - for layers with stride 2, a larger kernel can significantly improve the accuracy.: https://arxiv.org/pdf/1907.09595v3.pdf

As shown in the figure, large kernel size has different impact on different layers: for most of layers, the accuracy doesn’t change much, but for certain layers with stride 2, a larger kernel can significantly improve the accuracy. Notably, although MixConv3579 uses only half parameters and FLOPS than the vanilla DepthwiseConv9x9, our MixConv achieves similar or slightly better performance for most of the layers.

@AlexeyAB @glenn-jocher

Hello,

Reshape (reorg) layer is usually used in the models of depth prediction and semantic segmentation. They usually called the process of "reorg/reversed reorg" as "spatial to depth (channel)/depth (channel) to spatial" tensorflow layer. I have changed the downsampling/upsampling layers to reorg/reversed reorg of Elastic, and it got a little bit accuracy improvement.

Also, there is a paper in CVPR 2020 that uses this technique, for your reference: MuxConv.

@WongKinYiu

I have changed the downsampling/upsampling layers to reorg/reversed reorg of Elastic, and it got a little bit accuracy improvement.

Did you use [reorg] or [reorg3d]?

Since original [reorg] layer has a bug: https://github.com/opencv/opencv/pull/9705#discussion_r143136536

@AlexeyAB i used [reorg3d].

@AlexeyAB

CSPResNeXt-50 default BoF+MISH : top-1 = 79.8%, top-5 = 95.2%

csresnext50-ws.cfg.txt: top-1 = 78.7%, top-5 = 94.7% (negative weights; burnin_update=2 should be used, and maybe weights_normalizion=softmax) more about it

csresnext50-ws-mi2.cfg.txt : top-1 = 79.9%, top-5 = 95.3%

csresnext50morelayers.cfg.txt : top-1 = 79.4%, top-5 = 95.2% url

csresnext50sub.cfg.txt : top-1 = 79.5%, top-5 = 95.3% url

csresnext50sub-mi2.cfg.txt: top-1 = 79.4%, top-5 = 95.3%, weights

csresnext50sub-mo.cfg.txt: top-1 = 79.2%, top-5 = 95.1%, weights

CSPDarknet-53 default BoF+MISH : top-1 = 78.7%, top-5 = 94.8%

csdarknet53-ws.cfg.txt top-1 = 65.6%, top-5 = 87.3%

csdarknet53-omega-mi.cfg.txt: top-1 = 78.6%, top-5 = 94.7%, weights

csdarknet53-omega-mi-db.cfg.txt: top-1 = 78.4%, top-5 = 94.5%

csdarknet53-omega-mi-ip.cfg.txt: top-1 = 77.8%, top-5 = 94.3%

@WongKinYiu

Can you add Top1/Top5 accuracy and attach weights-file for this model

csdarknet53-ws.cfg.txt

https://github.com/AlexeyAB/darknet/issues/4498#issuecomment-592191368

cfg files: https://github.com/AlexeyAB/darknet/issues/4662#issuecomment-587490873

Most helpful comment

Comparison on my dataset, all cases with same training settings and used MS COCO detector pre-trained weights, only different in backbone

| Model | [email protected] | [email protected] | precision(.7) | recall(.7) | inference time (416x416) |
| --- | --- | --- | --- | --- | --- |
| yolov3 | 91.79% | 63.09% | 0.95 | 0.71 | 13.25ms |
| csresnext50-panet | 92.80% | 64.16% | 0.96 | 0.67 | 15.61ms |
| darknet53-bifpn3(P3-5) | 91.74% | 63.48% | 0.95 | 0.71 | 15.25ms |

All networks have an SPP-layer, inference time was tested on an RTX 2080Ti, BiFPN block use

darknet53-bifpn3-spp got very similar performance with yolov3-spp, I think the reason could be yolov3-spp the option of next step could be

but recently my machines were occupied by other tasks, I will try further experiments when the GPUs are free