Darknet: Crowdsource tagging for correcting mscoco | openimagesv5

https://github.com/AlexeyAB/darknet/issues/4033

Taking the above issue forward. We need to resolve the tagging part, and for that I recommend we crowdsource the work and divide the dataset into multiple parts. Each person can tag 10,000 images and share the txt files back. This would help everyone.

Thanks.

All 16 comments

I agree and can volunteer for this task.

I can also correct tags for 10,000 images.

Hmm. I'd be interested in using the updated tags once available!

@dexception

Please divide the dataset and make annotation files in batches of 10,000. Each of us can check and share the updated tags. Let's start with MSCOCO first.
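The batching proposed here could be sketched roughly as follows. This is only an illustration: the function name `split_into_batches` and the use of integer image IDs are assumptions, not something agreed in this thread.

```python
from typing import List, Sequence


def split_into_batches(image_ids: Sequence[int], batch_size: int = 10_000) -> List[List[int]]:
    """Split image IDs into consecutive batches of at most `batch_size`.

    IDs are sorted first so that every volunteer derives the same batches
    from the same dataset (hypothetical helper, not part of Darknet).
    """
    ordered = sorted(image_ids)
    return [ordered[i:i + batch_size] for i in range(0, len(ordered), batch_size)]


# Example: 25,000 images give two full batches and one partial batch.
batches = split_into_batches(range(25_000))
print([len(b) for b in batches])
```

Each batch can then be handed to one reviewer, and the corrected txt files merged back by image ID.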

Will be sharing the updated annotations for person, vehicles soon.

The rest of the classes will have to be updated by others.

How about using a reliable classifier: if it detects more than the ground truth, tag this image for review.

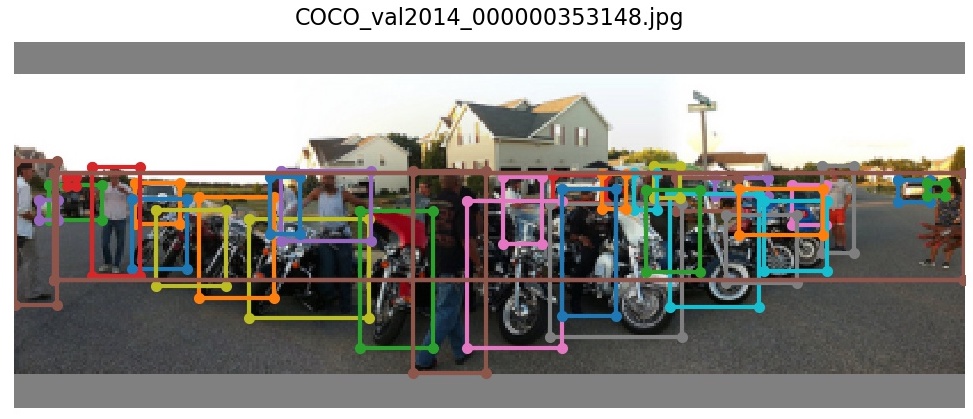

@holger-prause do you mean that for example we could run COCO through the most accurate object detection algorithm and then manually review images where more objects are found than in the original labelling?

We could do this iteratively so that we pick up missed objects.
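A minimal sketch of that review filter, assuming boxes are `(x1, y1, x2, y2)` tuples and that detections carry a confidence score. The names `iou` and `needs_review` and both thresholds are illustrative assumptions, not an existing tool.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def needs_review(
    ground_truth: List[Tuple[int, Box]],        # (class_id, box)
    detections: List[Tuple[int, float, Box]],   # (class_id, score, box)
    iou_thr: float = 0.5,                       # assumed matching threshold
    score_thr: float = 0.5,                     # assumed confidence threshold
) -> bool:
    """Return True if a confident detection has no same-class ground-truth match."""
    for cls, score, box in detections:
        if score < score_thr:
            continue
        matched = any(g_cls == cls and iou(box, g_box) >= iou_thr
                      for g_cls, g_box in ground_truth)
        if not matched:
            return True
    return False
```

Images for which `needs_review` returns True would go to a human, and the loop can be repeated after each round of corrections.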

Maybe checking for outliers in bbox size (by class) can achieve the same results faster for the first iterations.
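One possible way to do this per-class outlier check is with the median absolute deviation (MAD) of box areas. This is a sketch under assumptions: the `(class_id, width, height)` tuple layout, the function name `size_outliers`, and the cutoff `k` are all hypothetical choices.

```python
from collections import defaultdict
from statistics import median
from typing import List, Tuple


def size_outliers(annotations: List[Tuple[int, float, float]], k: float = 5.0) -> List[int]:
    """Return indices of boxes whose area is far from the class median.

    A box is flagged when |area - median| > k * MAD for its class.
    """
    by_class = defaultdict(list)
    for idx, (cls, width, height) in enumerate(annotations):
        by_class[cls].append((idx, width * height))

    flagged = []
    for items in by_class.values():
        areas = [area for _, area in items]
        med = median(areas)
        mad = median([abs(area - med) for area in areas])
        if mad == 0:
            continue  # all areas (nearly) identical, nothing to compare against
        flagged.extend(idx for idx, area in items if abs(area - med) > k * mad)
    return sorted(flagged)
```

Flagged boxes are only candidates for manual review, since a legitimately very large or very small object would also be picked up.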

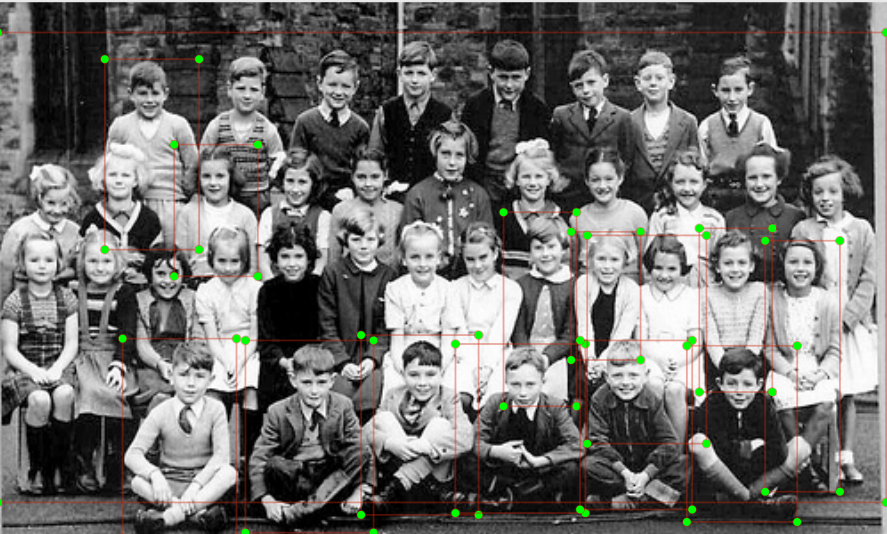

I have checked the COCO and Open Images datasets. This is one thing that cannot be done with automation. We would need multiple rounds of checking annotations. Small objects have been missed something like a thousand times. Men have been tagged as person in one image and as man in the next. Sometimes a partial hand has been tagged as person, and other times it is not tagged at all. Open Images is just horrible.

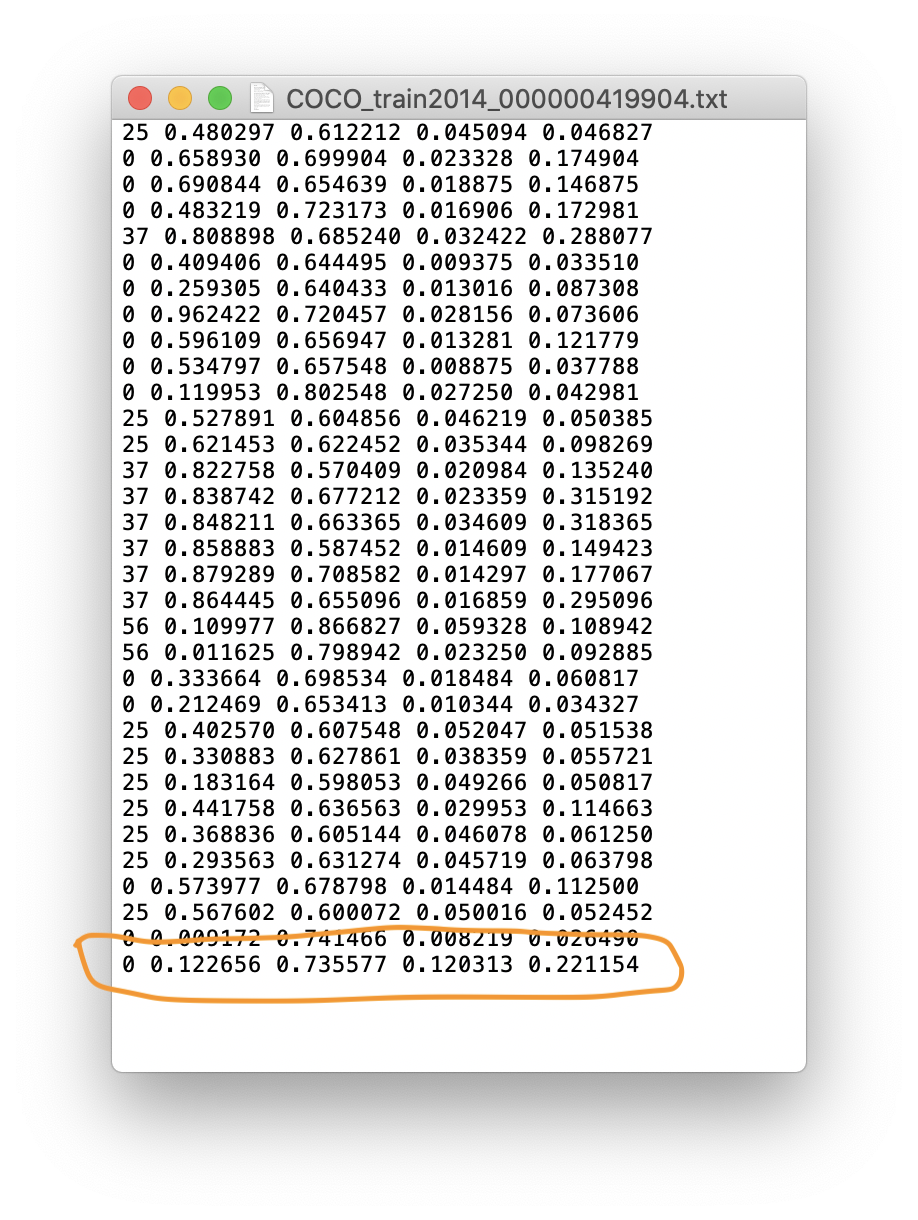

@jamessmith90 @holger-prause @dexception @xjohnxjohn @Ronales @FranciscoReveriano we discovered some False Positive labelling errors in the official COCO labels in 3 images (1 train, 2 val) with reproducible indicators: https://github.com/ultralytics/yolov3/issues/714

The indicators are:

- All FPs are class 0

- All are on last row

- Often they are duplicated or triplicated
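These indicators could be turned into a quick screening check. The sketch below assumes that "last row" refers to the final line of a YOLO-style label file (`class x y w h` per line) and that "duplicated" means an identical line appearing more than once. Both are interpretations, and `has_suspicious_tail` is a hypothetical helper name.

```python
from typing import List


def has_suspicious_tail(rows: List[str], suspect_class: int = 0) -> bool:
    """Return True if the last label row is class `suspect_class` and is repeated.

    `rows` are the text lines of one label file; blank lines are ignored.
    """
    rows = [r.strip() for r in rows if r.strip()]
    if not rows:
        return False
    last = rows[-1]
    if int(last.split()[0]) != suspect_class:
        return False
    # Duplicated or triplicated: the same row occurs at least twice.
    return rows.count(last) >= 2


labels = [
    "1 0.50 0.50 0.20 0.20",
    "0 0.10 0.10 0.05 0.05",
    "0 0.10 0.10 0.05 0.05",
]
print(has_suspicious_tail(labels))
```

A hit only marks the file for a manual look; it does not prove the label is wrong.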

60% of tagging is done. So this will take a while.

@dexception oh awesome! I’m happy to wait.

@dexception Nice!

> 60% of tagging is done. So this will take a while.

Is it 60 percent of 10,000 images or the entire dataset?

I have 2 data entry guys, and they are only working on tagging the person and vehicle categories. As I already mentioned, other people will have to do the remaining categories.

@dexception ah I see. Unfortunately, since mAP is the mean AP over the 80 classes, updating 2 classes will likely not have a significant impact. The largest change (if both classes went from, say, 50 to 100 AP) would be only about +1% mAP (2 × 50 / 80 = 1.25 points).
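The arithmetic behind that estimate, as a small sketch. The function name `map_gain` is made up for illustration, and the 50 to 100 AP figures are the hypothetical ones from the comment above, not measured results.

```python
def map_gain(num_classes: int, classes_changed: int, ap_before: float, ap_after: float) -> float:
    """Change in mAP (in AP points) when `classes_changed` classes move
    from `ap_before` to `ap_after` and all other classes stay the same."""
    return classes_changed * (ap_after - ap_before) / num_classes


# 2 of 80 COCO classes improving from 50 to 100 AP:
print(map_gain(num_classes=80, classes_changed=2, ap_before=50.0, ap_after=100.0))  # 1.25
```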

@glenn-jocher The val and test datasets are also horribly tagged.
