Darknet: Darknet mAP metric gives different results than other repos' implementations

Hi,

I trained a 3-class Tiny YOLO v3 model and then evaluated its mAP@0.5 using 'AUC' mode. I compared the result with two other repos that implement the official metrics (this and this one), but the results were unexpectedly different.

I obtained the same number of TP, FP (and total_true_positive obviously) but the mAP is different.

With your repo:

detections_count = 1469, unique_truth_count = 467

name = maize, ap = 94.02%, TP = 150, FP = 24

name = bean, ap = 91.14%, TP = 151, FP = 41

name = carrot, ap = 79.26%, TP = 112, FP = 51

With the first mentioned repo:

maize - mAP: 91.39 %, TP: 150, FP: 24, npos: 162

bean - mAP: 85.93 %, TP: 151, FP: 41, npos: 171

carrot - mAP: 74.80 %, TP: 112, FP: 51, npos: 134

npos = 467

With the second repo:

91.39% = maize AP, TP = 150, FP = 24

85.93% = bean AP, TP = 151, FP = 41

74.80% = carrot AP, TP = 112, FP = 51

mAP = 84.04%

All repos, including Darknet, use AUC (all-points interpolation). I'm a little confused because you stated that your repo uses the official PascalVOC metric (and the description of the map option, "-points 0 (AUC) for ImageNet, PascalVOC 2010-2012, your custom dataset", seems to confirm that you implement the PascalVOC metric).

Using 11-point interpolation, all repos give the same result for class 'maize' but different ones for the other classes.
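For reference, here is a minimal sketch of the two interpolation schemes being compared. It assumes the precision/recall points are already sorted by descending detection confidence; the function names are mine and are not taken from Darknet or from the other repos.

```python
def ap_all_points(recall, precision):
    """AP as the area under the interpolated precision-recall curve (AUC)."""
    r = [0.0] + list(recall) + [1.0]
    p = [0.0] + list(precision) + [0.0]
    # Replace each precision by the maximum precision found at any higher recall.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas; segments where recall does not change contribute 0.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def ap_11_points(recall, precision):
    """AP as the mean of the max precision at recall >= 0.0, 0.1, ..., 1.0."""
    total = 0.0
    for k in range(11):
        t = k / 10.0
        candidates = [p for r, p in zip(recall, precision) if r >= t]
        total += max(candidates) if candidates else 0.0
    return total / 11.0


if __name__ == "__main__":
    recall = [0.25, 1.0]
    precision = [1.0, 0.5]
    print(ap_all_points(recall, precision))
    print(ap_11_points(recall, precision))
```

Both functions only see the precision/recall points they are given, so anything that changes which detections feed the curve also changes the AP.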

All 7 comments

I noticed another strange behavior: detections_count should be equal to the sum of true positives and false positives over all classes.

In my previous post those values are different: detections_count = 1469 vs. TP + FP = 529.

Am I missing something obvious, or is there something wrong with the mAP computation?

@laclouis5 Hi

> I noted another strange behavior, detections_count must be equal to the sum of True Positive and False Positive for each classes.
>
> In my previous post those values are different: detections_count = 1469 vs. TP + FP = 529.

Can you show screenshot?

Do you use the latest version of Darknet?

Can you describe full way what do you do to get mAP with other repo? Do you run ./darknet detector valid ... ? Do you use the same iou_threshold=0.5 as in the Darknet by default? Do you calculate [email protected] or [email protected] ?...

> I obtained the same number of TP, FP (and total_true_positive obviously) but the mAP is different.

Do these repositories calculate TP, FP separately from mAP, i.e. by using different confidence thresholds?

Since my repo calculates

- mAP with confidence threshold = 0.005: https://github.com/AlexeyAB/darknet/blob/5a6afe96d3aa8aed19405577db7dba0ff173c848/src/detector.c#L721
- but TP, FP with confidence threshold = 0.25: https://github.com/AlexeyAB/darknet/blob/5a6afe96d3aa8aed19405577db7dba0ff173c848/src/detector.c#L1435 and https://github.com/AlexeyAB/darknet/blob/5a6afe96d3aa8aed19405577db7dba0ff173c848/src/detector.c#L868

> @laclouis5 Hi
>
> > I noted another strange behavior, detections_count must be equal to the sum of True Positive and False Positive for each classes.
> >
> > In my previous post those values are different: detections_count = 1469 vs. TP + FP = 529.
>
> Can you show screenshot?
>
> Do you use the latest version of Darknet?

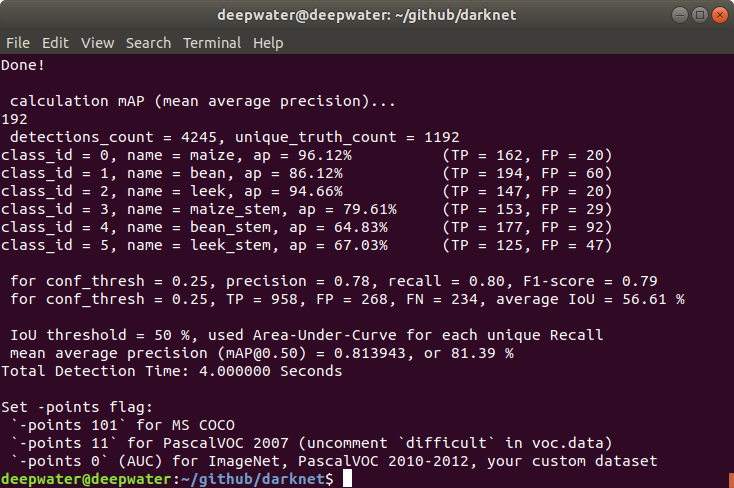

Yes, the screenshot is made with the latest version freshly pulled and built this morning (not the same classes and detector as in my previous post but same problem).

mAP computed on 191 images stored in data/val with the following command:

./darknet detector map results/yolov3-tiny_3l_6/obj.data results/yolov3-tiny_3l_6/yolov3-tiny_3l.cfg results/yolov3-tiny_3l_6/yolov3-tiny_3l_best.weights

Nothing in bad.list or in bad_label.list (I don't know why darknet shows 192 images processed).

> Can you describe full path what do you do to get mAP with other repo? Do you run ./darknet detector valid ... ? Do you use the same iou_threshold=0.5 as in the Darknet by default? Do you calculate [email protected] or [email protected] ?...

I use the performDetect() Python wrapper with a confidence threshold of 0.25 to obtain the detection files, then I compute TP/FP/mAP using the other repos with an IoU threshold of 0.5 (mAP@0.5).

This answers your following question: no, I use the same threshold (0.25) for both mAP and TP/FP.

> I obtained the same number of TP, FP (and total_true_positive obviously) but the mAP is different.
>
> Do these repositories calculate TP, FP separately from mAP, i.e. by using different confidence thresholds?
>
> Since my repo calculates
>
> - mAP with confidence threshold = 0.001: https://github.com/AlexeyAB/darknet/blob/5a6afe96d3aa8aed19405577db7dba0ff173c848/src/detector.c#L721
> - but TP, FP with confidence threshold = 0.25: https://github.com/AlexeyAB/darknet/blob/5a6afe96d3aa8aed19405577db7dba0ff173c848/src/detector.c#L1435 and https://github.com/AlexeyAB/darknet/blob/5a6afe96d3aa8aed19405577db7dba0ff173c848/src/detector.c#L868

This would explain everything! I thought the threshold on confidence was 0.25 both for TP/FP and mAP.

Maybe detections_count is a little bit misleading, as we don't know what it refers to (mAP or TP/FP) or at what threshold it was computed.

> In my previous post those values are different: detections_count = 1469 vs. TP + FP = 529.

detections_count = 1469 for confidence threshold = 0.005

while TP+FP=529 for confidence threshold = 0.25

> I use performDetect() Python wrapper with a confidence threshold of 0.25 in order to obtain detections files then I compute TP/FP/mAP using other repos with a IoU threshold of 0.5 (mAP@0.5).

You must calculate mAP for confidence threshold = 0.005
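To illustrate why the threshold matters, here is a small sketch on made-up data (the detection list and the function name are hypothetical, not Darknet code). Dropping low-confidence detections before building the precision-recall curve cuts off its high-recall tail, so the all-points AP can only stay the same or go down.

```python
def average_precision(detections, n_positives, min_confidence=0.0):
    """All-points AP from (confidence, is_true_positive) pairs.

    Detections below min_confidence are discarded before the curve is built.
    """
    kept = sorted(
        (d for d in detections if d[0] >= min_confidence),
        key=lambda d: d[0],
        reverse=True,
    )
    tp = fp = 0
    recall, precision = [], []
    for _, is_tp in kept:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recall.append(tp / n_positives)
        precision.append(tp / (tp + fp))
    r = [0.0] + recall + [1.0]
    p = [0.0] + precision + [0.0]
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


# Made-up detections for one class with 4 ground-truth boxes.
toy = [(0.9, True), (0.8, True), (0.6, False), (0.3, True), (0.1, True), (0.05, False)]

if __name__ == "__main__":
    print(average_precision(toy, 4, min_confidence=0.005))  # all detections kept
    print(average_precision(toy, 4, min_confidence=0.25))   # low-confidence tail dropped
```

On this toy input the AP at threshold 0.25 is lower than at 0.005, which is the same direction as the gap between Darknet and the other repos in the first post.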

Thanks, that's much better!

@laclouis5 @AlexeyAB, I am facing the same issue. Here are the steps I followed.

1) For calculating the mAP in darknet:

./alexy_repo/darknet/darknet detector map cfg/traffic_hanoi_docker.data /mnt/pruned_weights/yolov3-2/prune.cfg /mnt/pruned_weights/yolov3-2/prune_37100.weights -i 2

Results:

calculation mAP (mean average precision)...

2108

detections_count = 129823, unique_truth_count = 54449

class_id = 0, name = car, ap = 90.41% (TP = 31562, FP = 5770)

class_id = 1, name = bike, ap = 86.02% (TP = 5351, FP = 1708)

class_id = 2, name = truck, ap = 87.58% (TP = 1613, FP = 479)

class_id = 3, name = bus, ap = 88.94% (TP = 1841, FP = 472)

class_id = 4, name = auto, ap = 84.08% (TP = 935, FP = 269)

class_id = 5, name = pedestrian, ap = 67.91% (TP = 5320, FP = 2727)

for conf_thresh = 0.25, precision = 0.80, recall = 0.86, F1-score = 0.83

for conf_thresh = 0.25, TP = 46622, FP = 11425, FN = 7827, average IoU = 63.42 %

IoU threshold = 50 %, used Area-Under-Curve for each unique Recall

mean average precision (mAP@0.50) = 0.841559, or 84.16 %

Total Detection Time: 30.000000 Seconds

2) For calculating mAP using the repo https://github.com/Cartucho/mAP, I first run the Python wrapper with thresh set to 0.005:

results = darknet.detect(net=net, meta=meta, im=frame, thresh=0.005)

The results are saved as txt files (class, confidence, xmin, ymin, xmax, ymax) and fed as input to the third-party mAP calculator above. The results are extremely different.

39.15% = auto AP

67.26% = bike AP

52.20% = bus AP

89.89% = car AP

36.84% = pedestrian AP

60.68% = truck AP

mAP = 57.67%

Hope you can help me with this?

First, darknet's map function is a little bit misleading. In the output below:

detections_count = 129823, unique_truth_count = 54449

class_id = 0, name = car, ap = 90.41% (TP = 31562, FP = 5770)

class_id = 1, name = bike, ap = 86.02% (TP = 5351, FP = 1708)

...

detections_count and the APs are computed at conf_thresh = 0.005, while the TP and FP for each class are given for conf_thresh = 0.25. (unique_truth_count is simply the number of ground-truth boxes, so it does not depend on the threshold.)
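You can check this with plain arithmetic on the numbers from your own log. The per-class TP/FP add up to the conf_thresh = 0.25 summary line, not to detections_count:

```python
# Per-class (TP, FP) pairs and AP values copied from the darknet log above.
per_class = {
    "car": (31562, 5770),
    "bike": (5351, 1708),
    "truck": (1613, 479),
    "bus": (1841, 472),
    "auto": (935, 269),
    "pedestrian": (5320, 2727),
}
aps = [90.41, 86.02, 87.58, 88.94, 84.08, 67.91]
detections_count = 129823
unique_truth_count = 54449
fn = 7827  # from the "for conf_thresh = 0.25" summary line

tp = sum(t for t, _ in per_class.values())
fp = sum(f for _, f in per_class.values())
precision = tp / (tp + fp)
recall = tp / (tp + fn)
f1 = 2 * precision * recall / (precision + recall)
mean_ap = sum(aps) / len(aps)

if __name__ == "__main__":
    print("TP, FP:", tp, fp)
    print("TP + FP:", tp + fp, "vs detections_count:", detections_count)
    print("TP + FN equals unique_truth_count:", tp + fn == unique_truth_count)
    print("precision, recall, F1:", round(precision, 2), round(recall, 2), round(f1, 2))
    print("mean of per-class APs:", round(mean_ap, 2))
```

TP + FP comes to 58047, well below detections_count = 129823; the difference is the detections with confidence between 0.005 and 0.25.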

In your case, first make sure that your ground-truth and detection annotation files are both in absolute (xmin, ymin, xmax, ymax) format and that the class labels are the same in both. Helper functions are provided in the scripts/extra/ folder of Cartucho, and there is documentation.
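A frequent cause of a gap this large is the box format. The sketch below assumes the Python wrapper returns each detection as (label, confidence, (x_center, y_center, width, height)) in pixels, possibly with a bytes label; please verify that against your version of the wrapper. The helper name is hypothetical. It writes one line in the "class confidence left top right bottom" layout that Cartucho reads for detection results.

```python
def to_cartucho_line(label, confidence, box):
    """Convert one center-based detection to a corner-based text line.

    Assumption: box is (x_center, y_center, width, height) in absolute pixels.
    """
    cx, cy, w, h = box
    left = cx - w / 2.0
    top = cy - h / 2.0
    right = cx + w / 2.0
    bottom = cy + h / 2.0
    if isinstance(label, bytes):
        label = label.decode("utf-8")
    return "{} {:.6f} {} {} {} {}".format(
        label, confidence, round(left), round(top), round(right), round(bottom)
    )


if __name__ == "__main__":
    print(to_cartucho_line(b"car", 0.9, (100.0, 50.0, 40.0, 20.0)))
```

If the center-based values are written out unchanged as if they were corners, most boxes will fail the IoU test and the AP will collapse, as in your results.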

Also check that you get the same number of TP and FP with Cartucho as with darknet map.

Perform a sanity check by overlapping ground truth boxes and detection boxes on the same image.
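If you prefer a numeric check to a visual one, a small IoU helper does the same job. This is a sketch with boxes as (xmin, ymin, xmax, ymax) tuples and continuous coordinates; some evaluation tools add 1 pixel to widths and heights, so values can differ slightly from theirs.

```python
def iou(box_a, box_b):
    """Intersection over union of two (xmin, ymin, xmax, ymax) boxes."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    print(iou((0, 0, 10, 10), (5, 0, 15, 10)))
```

If a detection that obviously covers an object has an IoU near zero with its ground-truth box, the two files are most likely not in the same coordinate format.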