Darknet: EfficientNet | Implementation ?

All 214 comments

+1

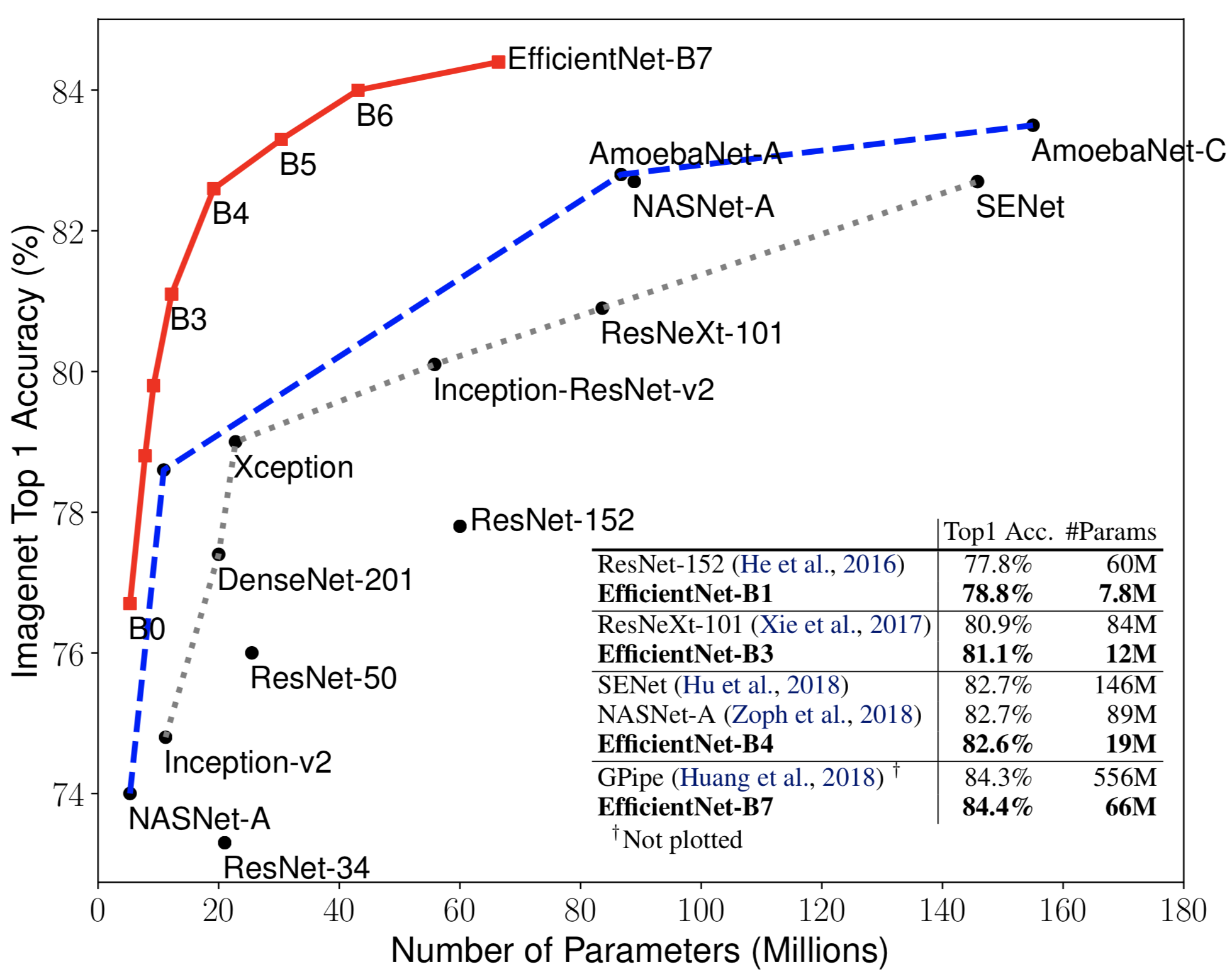

Paper: https://arxiv.org/abs/1905.11946v2

Classifier

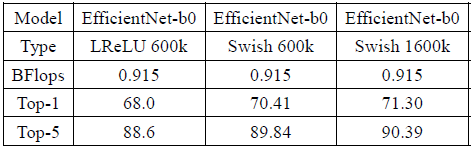

EfficientNet B0 (224x224) 0.9 BFLOPS - 0.45 B_FMA (16ms / RTX 2070), 4.9M params: efficientnet_b0.cfg.txt - Training 2.5 days

71.3% Top1 - 90.4% Top5 - accuracy weights file: https://drive.google.com/open?id=1nGdWz76A2EpNhWIfDeAI3hribboilux-

While (Official) EfficientNetB0 (224x224) 0.78 BFLOPS - 0.39 FMA, 5.3M params - that is trained by official code https://github.com/tensorflow/tpu/tree/master/models/official/efficientnet with batch size equals to 256 has lower accuracy: 70.0% Top1 and 88.9% Top5

Detector - 3.7 BFLOPs, 45.0 [email protected] on COCO test-dev.

cfg-file: enet-coco.cfg.txt

weights file: https://drive.google.com/open?id=1FlHeQjWEQVJt0ay1PVsiuuMzmtNyv36m

efficientnet-lite3-leaky.cfg: top-1 73.0%, top-5 92.4%. - change relu6 to leaky: activation=leaky https://github.com/AlexeyAB/darknet/blob/master/cfg/efficientnet-lite3.cfg

Classifiers: - Can be trained on ImageNet(ILSVRC2012) by using 4 x GPU 2080 TI:

EfficientNet B0 XNOR (224x224) 0.8 BFLOPS + 25 BOPS (18ms / RTX 2070): efficientnet_b0_xnor.cfg.txt - 5 days

EfficientNet B3 (288x288) 3.5 BFLOPS - 1.8 B_FMA (28ms/RTX 2070): efficientnet_b3.cfg.txt - 11 days

EfficientNet B3 (320x320) 4.3 BFLOPS - 2.2 B_FMA (30ms/RTX 2070): efficientnet_b3_320.cfg.txt - 14 days

EfficientNet B4 (384x384) 10.2 BFLOPS - 5.1 B_FMA (46ms/RTX 2070): efficientnet_b4.cfg.txt - 26 days

Training command:

./darknet classifier train cfg/imagenet1k_c.data cfg/efficientnet_b0.cfg -topk

Continue training:

./darknet classifier train cfg/imagenet1k_c.data cfg/efficientnet_b0.cfg backup/efficientnet_b0_last.weights -topk

Content of imagenet1k_c.data:

classes=1000

train = data/imagenet1k.train_c.list

valid = data/inet.val_c.list

backup = backup

labels = data/imagenet.labels.list

names = data/imagenet.shortnames.list

top=5

Dataset - each image in imagenet1k.train_c.list and inet.val_c.list has one of 1000 labels from imagenet.labels.list, for example n01440764

- imagenet.labels.list: https://github.com/AlexeyAB/darknet/blob/master/data/imagenet.labels.list

- imagenet.shortnames.list: https://github.com/AlexeyAB/darknet/blob/master/data/imagenet.shortnames.list

ILSVRC2012 training dataset - annotated images - 138 GB: https://github.com/AlexeyAB/darknet/blob/master/scripts/get_imagenet_train.sh

ILSVRC2012 validation dataset:

- images - 6.3 GB: http://www.image-net.org/challenges/LSVRC/2012/nnoupb/ILSVRC2012_img_val.tar

- annotations - 2.2 MB: http://www.image-net.org/challenges/LSVRC/2012/nnoupb/ILSVRC2012_bbox_val_v3.tgz

Set validation labels: https://github.com/AlexeyAB/darknet/blob/master/scripts/imagenet_label.sh

read: https://pjreddie.com/darknet/imagenet/

More: http://www.image-net.org/challenges/LSVRC/2012/nonpub-downloads

# (width_coefficient, depth_coefficient, resolution, dropout_rate)

'efficientnet-b0': (1.0, 1.0, 224, 0.2),

'efficientnet-b1': (1.0, 1.1, 240, 0.2),

'efficientnet-b2': (1.1, 1.2, 260, 0.3),

'efficientnet-b3': (1.2, 1.4, 300, 0.3),

'efficientnet-b4': (1.4, 1.8, 380, 0.4),

'efficientnet-b5': (1.6, 2.2, 456, 0.4),

'efficientnet-b6': (1.8, 2.6, 528, 0.5),

'efficientnet-b7': (2.0, 3.1, 600, 0.5),

CLICK ME - EfficientNet B0 model details

#alpha=1.2, beta=1.1, gamma=1.15

#d=pow(alpha, fi), w=pow(beta, fi), r=pow(gamma, fi)

#fi=0, d=1.0, w=1.0, r=1.0 - theoretically

# in practice: https://github.com/tensorflow/tpu/blob/05f7b15cdf0ae36bac84beb4aef0a09983ce8f66/models/official/efficientnet/efficientnet_builder.py#L28-L40

# 'efficientnet-b0': (1.0, 1.0, 224, 0.2):

# width=1.0, depth=1.0, resolution=224, dropout=0.2

BLOCKS 1 - 7:

'r1_k3_s11_e1_i32_o16_se0.25', 'r2_k3_s22_e6_i16_o24_se0.25',

'r2_k5_s22_e6_i24_o40_se0.25', 'r3_k3_s22_e6_i40_o80_se0.25',

'r3_k5_s11_e6_i80_o112_se0.25', 'r4_k5_s22_e6_i112_o192_se0.25',

'r1_k3_s11_e6_i192_o320_se0.25',

In details: https://github.com/tensorflow/tpu/blob/05f7b15cdf0ae36bac84beb4aef0a09983ce8f66/models/official/efficientnet/efficientnet_builder.py#L61-L69

BLOCK-1

# r1_k3_s11_e1_i32_o16_se0.25

# return efficientnet_model.BlockArgs(

# kernel_size=int(options['k']), kernel_size = 3

# num_repeat=int(options['r']), num_repeat = 1

# input_filters=int(options['i']), input_filters = 32

# output_filters=int(options['o']), output_filters = 16

# expand_ratio=int(options['e']), expand_ratio = 1

# id_skip=('noskip' not in block_string),

# se_ratio=float(options['se']) if 'se' in options else None, se_ratio = 0.25

# strides=[int(options['s'][0]), int(options['s'][1])]) strides = 1,1

BLOCK-2

# r2_k3_s22_e6_i16_o24_se0.25

# return efficientnet_model.BlockArgs(

# kernel_size=int(options['k']), kernel_size = 3

# num_repeat=int(options['r']), num_repeat = 2

# input_filters=int(options['i']), input_filters = 16

# output_filters=int(options['o']), output_filters = 24

# expand_ratio=int(options['e']), expand_ratio = 6

# id_skip=('noskip' not in block_string),

# se_ratio=float(options['se']) if 'se' in options else None, se_ratio = 0.25

# strides=[int(options['s'][0]), int(options['s'][1])]) strides = 2,2

BLOCK-3

# r2_k5_s22_e6_i24_o40_se0.25

# return efficientnet_model.BlockArgs(

# kernel_size=int(options['k']), kernel_size = 5

# num_repeat=int(options['r']), num_repeat = 2

# input_filters=int(options['i']), input_filters = 24

# output_filters=int(options['o']), output_filters = 40

# expand_ratio=int(options['e']), expand_ratio = 6

# id_skip=('noskip' not in block_string),

# se_ratio=float(options['se']) if 'se' in options else None, se_ratio = 0.25

# strides=[int(options['s'][0]), int(options['s'][1])]) strides = 2,2

BLOCK-4

# r3_k3_s22_e6_i40_o80_se0.25

# return efficientnet_model.BlockArgs(

# kernel_size=int(options['k']), kernel_size = 3

# num_repeat=int(options['r']), num_repeat = 3

# input_filters=int(options['i']), input_filters = 40

# output_filters=int(options['o']), output_filters = 80

# expand_ratio=int(options['e']), expand_ratio = 6

# id_skip=('noskip' not in block_string),

# se_ratio=float(options['se']) if 'se' in options else None, se_ratio = 0.25

# strides=[int(options['s'][0]), int(options['s'][1])]) strides = 2,2

BLOCK-5

# r3_k5_s11_e6_i80_o112_se0.25

# return efficientnet_model.BlockArgs(

# kernel_size=int(options['k']), kernel_size = 5

# num_repeat=int(options['r']), num_repeat = 3

# input_filters=int(options['i']), input_filters = 80

# output_filters=int(options['o']), output_filters = 112

# expand_ratio=int(options['e']), expand_ratio = 6

# id_skip=('noskip' not in block_string),

# se_ratio=float(options['se']) if 'se' in options else None, se_ratio = 0.25

# strides=[int(options['s'][0]), int(options['s'][1])]) strides = 1,1

BLOCK-6

# r4_k5_s22_e6_i112_o192_se0.25

# return efficientnet_model.BlockArgs(

# kernel_size=int(options['k']), kernel_size = 5

# num_repeat=int(options['r']), num_repeat = 4

# input_filters=int(options['i']), input_filters = 112

# output_filters=int(options['o']), output_filters = 192

# expand_ratio=int(options['e']), expand_ratio = 6

# id_skip=('noskip' not in block_string),

# se_ratio=float(options['se']) if 'se' in options else None, se_ratio = 0.25

# strides=[int(options['s'][0]), int(options['s'][1])]) strides = 2,2

BLOCK-7

# r1_k3_s11_e6_i192_o320_se0.25

# return efficientnet_model.BlockArgs(

# kernel_size=int(options['k']), kernel_size = 3

# num_repeat=int(options['r']), num_repeat = 1

# input_filters=int(options['i']), input_filters = 192

# output_filters=int(options['o']), output_filters = 320

# expand_ratio=int(options['e']), expand_ratio = 6

# id_skip=('noskip' not in block_string),

# se_ratio=float(options['se']) if 'se' in options else None, se_ratio = 0.25

# strides=[int(options['s'][0]), int(options['s'][1])]) strides = 1,1

CLICK ME - EfficientNet B3 model details

#alpha=1.2, beta=1.1, gamma=1.15

#d=pow(alpha, fi), w=pow(beta, fi), r=pow(gamma, fi)

#fi=3, d=1.73, w=1.33, r=1.52 - theoretically

# in practice: https://github.com/tensorflow/tpu/blob/05f7b15cdf0ae36bac84beb4aef0a09983ce8f66/models/official/efficientnet/efficientnet_builder.py#L28-L40

# 'efficientnet-b3': (1.2, 1.4, 300, 0.3):

# width=1.2, depth=1.4, resolution=300 (320), dropout=0.3

# https://github.com/tensorflow/tpu/blob/05f7b15cdf0ae36bac84beb4aef0a09983ce8f66/models/official/efficientnet/efficientnet_model.py#L120-L125

# repeats_new = int(math.ceil(depth * repeats)) ### ceil - Rounds x upward,

# https://github.com/tensorflow/tpu/blob/05f7b15cdf0ae36bac84beb4aef0a09983ce8f66/models/official/efficientnet/efficientnet_builder.py#L134-L137

# width_coefficient=width_coefficient,

# depth_coefficient=depth_coefficient,

# depth_divisor=8,

# min_depth=None)

#

# https://github.com/tensorflow/tpu/blob/05f7b15cdf0ae36bac84beb4aef0a09983ce8f66/models/official/efficientnet/efficientnet_model.py#L101-L117

# multiplier = width_coefficient = 1.2

# divisor = 8

# min_depth = none

# min_depth = divisor = 8

filters = filters * 1.2

new_filters = max(8, (int(filters + 4) // 8) * 8) ## //===floor in this case

if new_filters < 0.9 * filters: new_filters += 8

16 *1.2=19,2

new_filters = max(8, int(19,2+4)//8 * 8) = 16 (>=16)

24 *1.2=28,8

new_filters = max(8, int(28,8+4)//8 * 8) = 32 (>24)

32 *1.2=38,4

new_filters = max(8, int(38,4+4)//8 * 8) = 40 (>32)

40 *1.2=48

new_filters = max(8, int(48+4)//8 * 8) = 48 (>40)

80 *1.2=96

new_filters = max(8, int(96+4)//8 * 8) = 96 (>80)

112 *1.2=134,4

new_filters = max(8, int(134,4+4)//8 * 8) = 136 (>112)

192 *1.2=230,4

new_filters = max(8, int(230,4+4)//8 * 8) = 232 (>192)

320 *1.2=384

new_filters = max(8, int(384+4)//8 * 8) = 384 (>320)

8 *1.2=9,6

new_filters = max(8, int(9,6+4)//8 * 8) = 8 (==8)

64 *1.2=76,8

new_filters = max(8, int(76,8+4)//8 * 8) = 80 (>64)

96 *1.2=115,2

new_filters = max(8, int(115,2+4)//8 * 8) = 112 (>96)

144 *1.2=172,8

new_filters = max(8, int(172,8+4)//8 * 8) = 176 (>144)

384 *1.2=460,8

new_filters = max(8, int(460,8+4)//8 * 8) = 464 (>384)

576 *1.2=691,2

new_filters = max(8, int(691,2+4)//8 * 8) = 688 (>576)

960 *1.2=1152

new_filters = max(8, int(1152+4)//8 * 8) = 1152 (>960)

1280 *1.2=1536

new_filters = max(8, int(1536+4)//8 * 8) = 1536 (>1280)

BLOCKS 1 - 7: (for b0)

'r2_k3_s11_e1_i32_o16_se0.25', 'r4_k3_s22_e6_i16_o24_se0.25',

'r4_k5_s22_e6_i24_o40_se0.25', 'r6_k3_s22_e6_i40_o80_se0.25',

'r6_k5_s11_e6_i80_o112_se0.25', 'r8_k5_s22_e6_i112_o192_se0.25',

'r2_k3_s11_e6_i192_o320_se0.25',

In details: https://github.com/tensorflow/tpu/blob/05f7b15cdf0ae36bac84beb4aef0a09983ce8f66/models/official/efficientnet/efficientnet_builder.py#L61-L69

BLOCK-1

# r1_k3_s11_e1_i32_o16_se0.25

# return efficientnet_model.BlockArgs(

# kernel_size=int(options['k']), kernel_size = 3

# num_repeat=int(options['r']), num_repeat = 2 //1

# input_filters=int(options['i']), input_filters = 40 //32

# output_filters=int(options['o']), output_filters = 16 //16

# expand_ratio=int(options['e']), expand_ratio = 1

# id_skip=('noskip' not in block_string),

# se_ratio=float(options['se']) if 'se' in options else None, se_ratio = 0.25

# strides=[int(options['s'][0]), int(options['s'][1])]) strides = 1,1

BLOCK-2

# r2_k3_s22_e6_i16_o24_se0.25

# return efficientnet_model.BlockArgs(

# kernel_size=int(options['k']), kernel_size = 3

# num_repeat=int(options['r']), num_repeat = 3 //2

# input_filters=int(options['i']), input_filters = 16 //16

# output_filters=int(options['o']), output_filters = 32 //24

# expand_ratio=int(options['e']), expand_ratio = 6

# id_skip=('noskip' not in block_string),

# se_ratio=float(options['se']) if 'se' in options else None, se_ratio = 0.25

# strides=[int(options['s'][0]), int(options['s'][1])]) strides = 2,2

BLOCK-3

# r2_k5_s22_e6_i24_o40_se0.25

# return efficientnet_model.BlockArgs(

# kernel_size=int(options['k']), kernel_size = 5

# num_repeat=int(options['r']), num_repeat = 3 //2

# input_filters=int(options['i']), input_filters = 32 //24

# output_filters=int(options['o']), output_filters = 48 //40

# expand_ratio=int(options['e']), expand_ratio = 6

# id_skip=('noskip' not in block_string),

# se_ratio=float(options['se']) if 'se' in options else None, se_ratio = 0.25

# strides=[int(options['s'][0]), int(options['s'][1])]) strides = 2,2

BLOCK-4

# r3_k3_s22_e6_i40_o80_se0.25

# return efficientnet_model.BlockArgs(

# kernel_size=int(options['k']), kernel_size = 3

# num_repeat=int(options['r']), num_repeat = 5 //3

# input_filters=int(options['i']), input_filters = 48 //40

# output_filters=int(options['o']), output_filters = 96 //80

# expand_ratio=int(options['e']), expand_ratio = 6

# id_skip=('noskip' not in block_string),

# se_ratio=float(options['se']) if 'se' in options else None, se_ratio = 0.25

# strides=[int(options['s'][0]), int(options['s'][1])]) strides = 2,2

BLOCK-5

# r3_k5_s11_e6_i80_o112_se0.25

# return efficientnet_model.BlockArgs(

# kernel_size=int(options['k']), kernel_size = 5

# num_repeat=int(options['r']), num_repeat = 5 //3

# input_filters=int(options['i']), input_filters = 96 //80

# output_filters=int(options['o']), output_filters = 136 //112

# expand_ratio=int(options['e']), expand_ratio = 6

# id_skip=('noskip' not in block_string),

# se_ratio=float(options['se']) if 'se' in options else None, se_ratio = 0.25

# strides=[int(options['s'][0]), int(options['s'][1])]) strides = 1,1

BLOCK-6

# r4_k5_s22_e6_i112_o192_se0.25

# return efficientnet_model.BlockArgs(

# kernel_size=int(options['k']), kernel_size = 5

# num_repeat=int(options['r']), num_repeat = 6 //4

# input_filters=int(options['i']), input_filters = 136 //112

# output_filters=int(options['o']), output_filters = 232 //192

# expand_ratio=int(options['e']), expand_ratio = 6

# id_skip=('noskip' not in block_string),

# se_ratio=float(options['se']) if 'se' in options else None, se_ratio = 0.25

# strides=[int(options['s'][0]), int(options['s'][1])]) strides = 2,2

BLOCK-7

# r1_k3_s11_e6_i192_o320_se0.25

# return efficientnet_model.BlockArgs(

# kernel_size=int(options['k']), kernel_size = 3

# num_repeat=int(options['r']), num_repeat = 2 //1

# input_filters=int(options['i']), input_filters = 232 //192

# output_filters=int(options['o']), output_filters = 384 //320

# expand_ratio=int(options['e']), expand_ratio = 6

# id_skip=('noskip' not in block_string),

# se_ratio=float(options['se']) if 'se' in options else None, se_ratio = 0.25

# strides=[int(options['s'][0]), int(options['s'][1])]) strides = 1,1

CLICK ME - EfficientNet B4 model details

#alpha=1.2, beta=1.1, gamma=1.15

#d=pow(alpha, fi), w=pow(beta, fi), r=pow(gamma, fi)

#fi=4, d=2.07, w=1.46, r=1.75 - theoretically

# in practice: https://github.com/tensorflow/tpu/blob/05f7b15cdf0ae36bac84beb4aef0a09983ce8f66/models/official/efficientnet/efficientnet_builder.py#L28-L40

# efficientnet-b4': (1.4, 1.8, 380, 0.4):

# width=1.4, depth=1.8, resolution=380, dropout=0.4

# https://github.com/tensorflow/tpu/blob/05f7b15cdf0ae36bac84beb4aef0a09983ce8f66/models/official/efficientnet/efficientnet_model.py#L120-L125

# repeats_new = int(math.ceil(depth * repeats)) ### ceil - Rounds x upward,

# https://github.com/tensorflow/tpu/blob/05f7b15cdf0ae36bac84beb4aef0a09983ce8f66/models/official/efficientnet/efficientnet_builder.py#L134-L137

# width_coefficient=width_coefficient,

# depth_coefficient=depth_coefficient,

# depth_divisor=8,

# min_depth=None)

#

# https://github.com/tensorflow/tpu/blob/05f7b15cdf0ae36bac84beb4aef0a09983ce8f66/models/official/efficientnet/efficientnet_model.py#L101-L117

# multiplier = width_coefficient = 1.4

# divisor = 8

# min_depth = none

# min_depth = divisor = 8

filters = filters * 1.4

new_filters = max(8, (int(filters + 4) // 8) * 8) ## //===floor in this case

if new_filters < 0.9 * filters: new_filters += 8

16 *1.4=22.4

new_filters = max(8, int(22.4+4)//8 * 8) = 24 (>16)

24 *1.4=33.6

new_filters = max(8, int(33.6+4)//8 * 8) = 32 (>24)

32 *1.4=44.8

new_filters = max(8, int(44.8+4)//8 * 8) = 48 (>32)

40 *1.4=56

new_filters = max(8, int(56+4)//8 * 8) = 56 (>40)

80 *1.4=112

new_filters = max(8, int(112+4)//8 * 8) = 112 (>80)

112 *1.4=156,8

new_filters = max(8, int(156,8+4)//8 * 8) = 160 (>112)

192 *1.4=268,8

new_filters = max(8, int(268,8+4)//8 * 8) = 272 (>192)

320 *1.4=448

new_filters = max(8, int(448+4)//8 * 8) = 448 (>320)

8 *1.4=11,2

new_filters = max(8, int(11,2+4)//8 * 8) = 8 (==8)

64 *1.4=89,6

new_filters = max(8, int(89,6+4)//8 * 8) = 88 (>64)

96 *1.4=134,4

new_filters = max(8, int(134,4+4)//8 * 8) = 136 (>96)

144 *1.4=201,6

new_filters = max(8, int(201,6+4)//8 * 8) = 200 (>144)

384 *1.4=537,6

new_filters = max(8, int(537,6+4)//8 * 8) = 536 (>384)

576 *1.4=806,4

new_filters = max(8, int(806,4+4)//8 * 8) = 808 (>576)

960 *1.4=1344

new_filters = max(8, int(1344+4)//8 * 8) = 1344 (>960)

1280 *1.4=1792

new_filters = max(8, int(1792+4)//8 * 8) = 1792 (>1280)

BLOCKS 1 - 7:

'r2_k3_s11_e1_i32_o16_se0.25', 'r4_k3_s22_e6_i16_o24_se0.25',

'r4_k5_s22_e6_i24_o40_se0.25', 'r6_k3_s22_e6_i40_o80_se0.25',

'r6_k5_s11_e6_i80_o112_se0.25', 'r8_k5_s22_e6_i112_o192_se0.25',

'r2_k3_s11_e6_i192_o320_se0.25',

In details: https://github.com/tensorflow/tpu/blob/05f7b15cdf0ae36bac84beb4aef0a09983ce8f66/models/official/efficientnet/efficientnet_builder.py#L61-L69

BLOCK-1

# r1_k3_s11_e1_i32_o16_se0.25

# return efficientnet_model.BlockArgs(

# kernel_size=int(options['k']), kernel_size = 3

# num_repeat=int(options['r']), num_repeat = 2 //1

# input_filters=int(options['i']), input_filters = 48 //32

# output_filters=int(options['o']), output_filters = 24 //16

# expand_ratio=int(options['e']), expand_ratio = 1

# id_skip=('noskip' not in block_string),

# se_ratio=float(options['se']) if 'se' in options else None, se_ratio = 0.25

# strides=[int(options['s'][0]), int(options['s'][1])]) strides = 1,1

BLOCK-2

# r2_k3_s22_e6_i16_o24_se0.25

# return efficientnet_model.BlockArgs(

# kernel_size=int(options['k']), kernel_size = 3

# num_repeat=int(options['r']), num_repeat = 4 //2

# input_filters=int(options['i']), input_filters = 24 //16

# output_filters=int(options['o']), output_filters = 32 //24

# expand_ratio=int(options['e']), expand_ratio = 6

# id_skip=('noskip' not in block_string),

# se_ratio=float(options['se']) if 'se' in options else None, se_ratio = 0.25

# strides=[int(options['s'][0]), int(options['s'][1])]) strides = 2,2

BLOCK-3

# r2_k5_s22_e6_i24_o40_se0.25

# return efficientnet_model.BlockArgs(

# kernel_size=int(options['k']), kernel_size = 5

# num_repeat=int(options['r']), num_repeat = 4 //2

# input_filters=int(options['i']), input_filters = 32 //24

# output_filters=int(options['o']), output_filters = 56 //40

# expand_ratio=int(options['e']), expand_ratio = 6

# id_skip=('noskip' not in block_string),

# se_ratio=float(options['se']) if 'se' in options else None, se_ratio = 0.25

# strides=[int(options['s'][0]), int(options['s'][1])]) strides = 2,2

BLOCK-4

# r3_k3_s22_e6_i40_o80_se0.25

# return efficientnet_model.BlockArgs(

# kernel_size=int(options['k']), kernel_size = 3

# num_repeat=int(options['r']), num_repeat = 6 //3

# input_filters=int(options['i']), input_filters = 56 //40

# output_filters=int(options['o']), output_filters = 112 //80

# expand_ratio=int(options['e']), expand_ratio = 6

# id_skip=('noskip' not in block_string),

# se_ratio=float(options['se']) if 'se' in options else None, se_ratio = 0.25

# strides=[int(options['s'][0]), int(options['s'][1])]) strides = 2,2

BLOCK-5

# r3_k5_s11_e6_i80_o112_se0.25

# return efficientnet_model.BlockArgs(

# kernel_size=int(options['k']), kernel_size = 5

# num_repeat=int(options['r']), num_repeat = 6 //3

# input_filters=int(options['i']), input_filters = 112 //80

# output_filters=int(options['o']), output_filters = 160 //112

# expand_ratio=int(options['e']), expand_ratio = 6

# id_skip=('noskip' not in block_string),

# se_ratio=float(options['se']) if 'se' in options else None, se_ratio = 0.25

# strides=[int(options['s'][0]), int(options['s'][1])]) strides = 1,1

BLOCK-6

# r4_k5_s22_e6_i112_o192_se0.25

# return efficientnet_model.BlockArgs(

# kernel_size=int(options['k']), kernel_size = 5

# num_repeat=int(options['r']), num_repeat = 8 //4

# input_filters=int(options['i']), input_filters = 160 //112

# output_filters=int(options['o']), output_filters = 272 //192

# expand_ratio=int(options['e']), expand_ratio = 6

# id_skip=('noskip' not in block_string),

# se_ratio=float(options['se']) if 'se' in options else None, se_ratio = 0.25

# strides=[int(options['s'][0]), int(options['s'][1])]) strides = 2,2

BLOCK-7

# r1_k3_s11_e6_i192_o320_se0.25

# return efficientnet_model.BlockArgs(

# kernel_size=int(options['k']), kernel_size = 3

# num_repeat=int(options['r']), num_repeat = 2 //1

# input_filters=int(options['i']), input_filters = 272 //192

# output_filters=int(options['o']), output_filters = 448 //320

# expand_ratio=int(options['e']), expand_ratio = 6

# id_skip=('noskip' not in block_string),

# se_ratio=float(options['se']) if 'se' in options else None, se_ratio = 0.25

# strides=[int(options['s'][0]), int(options['s'][1])]) strides = 1,1

https://www.dlology.com/blog/transfer-learning-with-efficientnet/

https://github.com/zsef123/EfficientNets-PyTorch/tree/master/models

https://medium.com/@lessw/efficientnet-from-google-optimally-scaling-cnn-model-architectures-with-compound-scaling-e094d84d19d4

In other words, to scale up the CNN, the depth of layers should increase 20%, the width 10% and the image resolution 15% to keep things as efficient as possible while expanding the implementation and improving the CNN accuracy.

The MBConv block is nothing fancy but an Inverted Residual Block (used in MobileNetV2) with a Squeeze and Excite block injected sometimes.

MobileNetV2: Inverted Residuals and Linear Bottlenecks: https://arxiv.org/pdf/1801.04381v4.pdf

MobileNetV2 graph: http://ethereon.github.io/netscope/#/gist/d01b5b8783b4582a42fe07bd46243986

MobileNetV2 proto: https://github.com/shicai/MobileNet-Caffe/blob/master/mobilenet_v2_deploy.prototxt

MobileNetv2 Darknet-cfg: https://github.com/WePCf/darknet-mobilenet-v2/blob/master/mobilenet/test.cfg (should be trained from the begining, since the src/image.c and examples/classifier.c are modified in WePCf-repo, search for "mobilenet" to see what are changed)

|  |

|  |

|

|-|-|

| | |

MobileNet_v2:

EfficientNet_b0:

|  |

|  |

|

|-|-|

| | |

EfficientNet_b0: efficientnet_b0.cfg.txt - Accuracy: Top1 = 57.6%, Top5 = 81.2% - 150 000 iterations (something goes wrong)

Would like to share this link.

https://pypi.org/project/gluoncv2/

Interesting to see the imagenet-1k comparison chart.

Model | Top 1 Error | Top 5 Error | Params | Flops

DarkNet-53 | 21.41 | 5.56 | 41,609,928 | 7,133.86M

EfficientNet-B0b | 23.41 | 6.95 | 5,288,548 | 414.31M

With the difference of 2% in top 1 error with number of parameters are 1/8 and 1/17 less flops.

Would love to see the inference time and accuracy as object detection.

Also a tiny version wouldn't be bad after all.

This is like running yolov3-tiny with yolov3 accuracy.

@dexception

Have you ever seen a graphic representation of EfficientNet b1 - b7 models (other than b0), or their exact text description, like Caffe proto-files?

EfficientNet_b4: efficientnet_b4.cfg.txt

@AlexeyAB

Keras, Pytorch and Mxnet implementation is definitely there:

https://github.com/qubvel/efficientnet

https://github.com/lukemelas/EfficientNet-PyTorch

https://github.com/titu1994/keras-efficientnets

https://github.com/zsef123/EfficientNets-PyTorch

https://github.com/DableUTeeF/keras-efficientnet

https://github.com/qubvel/efficientnet

https://github.com/mnikitin/EfficientNet/blob/master/efficientnet_model.py

The code and research paper is different. But the code is correct.

https://github.com/tensorflow/tpu/issues/383

I don't think there is any caffe implementation as of yet.

Hello, I draw the model from Keras implementation: https://github.com/qubvel/efficientnet .

Here are b0 and b1.

CLICK ME - EfficientNet B0 and B1 model diagrams

I use the code:

`from efficientnet import EfficientNetB1

from keras.utils import plot_model

model = EfficientNetB1()

plot_model(model, to_file='EfficientNetB1.png')`

EfficientNet_b0: efficientnet_b0.cfg.txt - Accuracy: Top1 = 19.3%, Top5 = 40.6% (something goes wrong)

Maybe squeeze and excitation blocks are missing?

@WongKinYiu Thanks!

Can you also add model diagram for B4?

Maybe squeeze and excitation blocks are missing?

I think yes, there should be:

- squeeze and excitation blocks https://towardsdatascience.com/squeeze-and-excitation-networks-9ef5e71eacd7 and https://arxiv.org/abs/1709.01507v4 and https://github.com/hujie-frank/SENet

- dropout

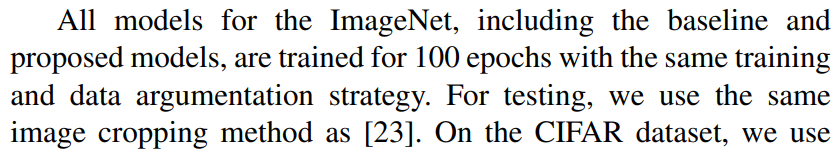

- batch=120 and should be trained at least 1 000 000 - 1 600 000 iterations

@dexception Thanks!

Model diagram for EfficientNets.

CLICK ME - EfficientNet B0 model diagram

CLICK ME - EfficientNet B1 model diagram

CLICK ME - EfficientNet B2 model diagram

CLICK ME - EfficientNet B3 model diagram

CLICK ME - EfficientNet B4 model diagram

CLICK ME - EfficientNet B5 model diagram

CLICK ME - EfficientNet B6 model diagram

CLICK ME - EfficientNet B7 model diagram

@WongKinYiu Thanks!

It seems now it looks like your diagram:

efficientnet_b0.cfg.txt

- top1 = 69.49%

- top5 = 89.44%

Should be used: should be trained at least 1.6 M iterations with learning_rate=0.256 policy=step scale=0.97 step=10000 (initial learning rate 0.256 that decays by 0.97 every 2.4 epochs) to achieve Top1 = 76.3%, Top5 = 93.2%

Trained weights-file, 500 000 iterations with batch=120: https://drive.google.com/open?id=1MvX0skcmg87T_jn8kDf2Oc6raIb56xq9

Just

- I use

[dropout]instead of DropConnect

On your diagrams Lambda is a [avgpool].

MBConv blocks includes:

- Squeeze-and-Excitation blocks (layers:

[avgpool]->[conv]->[conv]->[scale_channels]) - and

[dropout]-layer before each[shortcut]-residual layer

as it is done here: https://github.com/qubvel/efficientnet/blob/master/efficientnet/model.py - Swish activations

@AlexeyAB Good job! And thank you for sharing the cfg file.

I will also implement SNet of ThunderNet as backbone to compare with EfficientNet.

@WongKinYiu Yes, this is interesting that SNet+ThunderNet achieved the same accuracy 78.6% [email protected] as Yolo v2, but by using 2-stage-detector with 24 FPS on ARM CPU: https://paperswithcode.com/sota/object-detection-on-pascal-voc-2007

@AlexeyAB I also want to implement CEM (Context Enhancement Module) and SAM (Spatial Attention Module) of ThunderNet.

CEM + YOLOv3 got 41.2% [email protected] with 2.85 BFLOPs.

CEM + SAM + YOLOv3 got 42.0% [email protected] with 2.90 BFLOPs.

CEM:

SAM:

Results:

I'd be interested in running a trial with efficientnet and sharing the results - do you have a B6 or B7 version of the model? Do I use it in the same way as I would with any of the other cfg files? No need to manually calculate anchors and enter classes in the cfg?

Oh I see - efficientnet is a full Object Detector? But maybe the B7 model with a Yolo head... ?

@LukeAI

This is imagenet classification.

Ok so I realise that this is image classification - I have an image classification problem with 7 classes - if necessary I could resize all my images to 32x32 - how could I train/test on my dataset with the .cfg ?

@AlexeyAB

Nice work on EfficientNet.

If implemented successfully this would give the fastest training and inference time among all implementations.

@AlexeyAB

Since we are already discussing the newer models here

https://github.com/AlexeyAB/darknet/issues/3114

This issue should be merged with this.

Because eventually we will have yolo-head with EfficientNet once the niggles are sorted out.

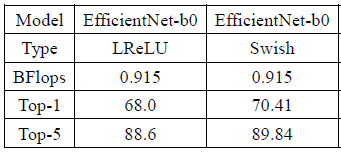

Will Swish be implemented in darknet soon?

which is based on RELU/RELU6?

Do you have scale_channels layer implement?3q

@WongKinYiu Thanks!

It seems now it looks like your diagram: efficientnet_b0.cfg.txt

- top1 = 68.04%

- top5 = 88.59%

Should be used: should be used Swish instead of leaky-ReLU, should be trained at least 1M iterations with

learning_rate=0.256 policy=step scale=0.97 step=10000(initial learning rate 0.256 that decays by 0.97 every 2.4 epochs)Trained weights-file, 378 000 iterations with batch=120: https://drive.google.com/open?id=1PWbM3en8mOqIbe9kIrEY-ljvvcmTR5AK

Just

- I use

[dropout]instead of DropConnect- I use

activation=leaky-relu (slope=0.1) instead of SwishOn your diagrams Lambda is a [avgpool].

MBConv blocks includes:

- Squeeze-and-Excitation blocks (layers:

[avgpool]->[conv]->[conv]->[scale_channels])- and

[dropout]-layer before each[shortcut]-residual layer

as it is done here: https://github.com/qubvel/efficientnet/blob/master/efficientnet/model.py

@AlexeyAB

Can you share other cfg files for EfficientNet ? I would like to give it a try.

@ChenCong7375 @beHappy666

Do you have scale_channels layer implement?3q

Yes.

Will Swish be implemented in darknet soon?

which is based on RELU/RELU6?

There are already implemented in the last commits:

Swish is based on sigmoid,

swish = x * sigmoid(x)

later I will addh-swish = x * ReLU6(x+3) / 6from MobileNet v3: https://github.com/AlexeyAB/darknet/issues/3494Squeeze-n-excitation blocks that is based on

[scale_channels]-layer

@dexception I will add b0, b4 and may be other models in 1-2 days. I just should test it.

It would be nice if you can train them about 1-1.5 million iterations (at least 100 epochs with batch=120).

@dexception I will try for sure.

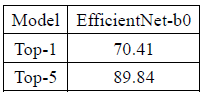

Just want to mention this ....so that we are on track:

EfficientNet B0 Stats:

Difference of 8.26% Top 1 Accuracy with the actual.

Difference of 4.61% Top 5 Accuracy with the actual.

Flops: 0.915 vs 0.39 with the actual. (2.34 Times)

https://github.com/AlexeyAB/darknet/files/3307881/efficientnet_b0.cfg.txt

@dexception

EfficientNet B0 Stats:

Difference of 8.26% Top 1 Accuracy with the actual.

Difference of 4.61% Top 5 Accuracy with the actual.

It is just because there wasn't used Siwsh-activation - I will add. And because it was trained 360 000 iterations instead of 1 600 000 iterations with another learning rate policy - I will change.

Flops: 0.915 vs 0.39 with the actual. (2.34 Times)

This is strange, since I used absolutely the same model. Also you can compare their Flops for ResNet50 or 101 with

ResNet50:

4.1 BFlopsshown in their paper Table 2: https://arxiv.org/pdf/1905.11946v2.pdfResNet50:

9.74 BFlopsshown in Joseph's site: https://pjreddie.com/darknet/imagenet/ResNet50:

10 BFlopsshown in Darknet

So it seems they calculate two operations (ADD+MUL) as one FMA-operation (which is used in CPUs, GPUs and probably in their TPUs): https://en.wikipedia.org/wiki/FMA_instruction_set

So we use correct model, just we calculate Flops in different ways, our approach is correct: https://en.wikipedia.org/wiki/FLOPS

https://github.com/AlexeyAB/darknet/blob/88cccfcad4f9591a429c1e71c88a42e0e81a5e80/src/convolutional_layer.c#L363

https://github.com/AlexeyAB/darknet/blob/88cccfcad4f9591a429c1e71c88a42e0e81a5e80/src/convolutional_layer.c#L550

Output of ResNet50:

@AlexeyAB

Thanks for the explanation. Learned alot from you.

My main objective is to use EfficientNet for Object Detection.

Can't wait to try it.

@AlexeyAB 3q

@dexception @beHappy666 @nseidl @WongKinYiu @LukeAI @mdv3101 @ChenCong7375

I added 4 cfg-files Classifier EfficientNets: B0, B3, B3_320, B4: https://github.com/AlexeyAB/darknet/issues/3380#issuecomment-501263052

(there are used: squeeze-n-excitation, swish-sigmoid, dropout, residual-connections, grouped-convolutionals)

To get the highest Top1/Top5 results, you should train it at least 1 600 000 iterations with batch=128.

Also I added EfficientNet B0 XNOR where are replaced Depth-wise-conv-layers by XNOR-conv-layers.

You can try to train it on ImageNet (ILSVRC2012), I wrote there how to do it: https://github.com/AlexeyAB/darknet/issues/3380#issuecomment-501263052

After you train one of them on ImageNet, it can be used as pre-trained weights-files for detection networks.

Then I will create a Detection network: EfficientNet-backend + TridentNet (or FPN as in Yolov3) + Yolo_Head

I will add GIoU, Mixup, Scale_xy, and may be new_PAN and Assisted Excitation of Activations, If I have time to make them: https://github.com/AlexeyAB/darknet/projects/1#card-22787888

Then you can train it on MS COCO and get state-of-art results.

Also you can try to train EfficientNet on Stylized-ImageNet + ImageNet and get state-of-art results:

@AlexeyAB

I have always hated the idea of putting names of the categories within the name of images.

For now i have no choice but to follow it. Eventually it would better to have a single csv file for classification rather than this.

@dexception This is not Darknet's idea.

This is done in default ILSVRC2012_img_train.tar (ImageNet).

Maybe in the future I will make an alternative with txt or csv file/files, but this is not a priority.

@AlexeyAB

Just started training for EfficientNet b0 model.

I have a 2080 TI(only one) machine.

batch=128

subdivision=4

I guess it is going to take a week.

@AlexeyAB I can train cifar with EfficientNet_b0 on my titanXp, I think there must be some error in my detection cfg. #3500

I am looking forward to your object-detection work on EfficientNet. Thank you very much.

@dexception here.

efficientnet_b0_cg.cfg.txt

I think maybe scale_channels_layer has cuda init problem.

When random parameter of yolo layer is set to 1, it will get CUDNN_STATUS_EXECUTION_FAILED error.

If disable cudnn, it will get illegal memory access error.

When random parameter of yolo layer is set to 0, anything is fine.

@AlexeyAB I can train cifar with EfficientNet_b0 on my titanXp, I think there must be some error in my detection cfg. #3500

I am looking forward to your object-detection work on EfficientNet. Thank you very much.

@ChenCong7375

Can you try and train on efficientNet-b3 model ?

https://github.com/AlexeyAB/darknet/files/3340717/efficientnet_b3.cfg.txt

@WongKinYiu

What init problem do you mean? https://github.com/AlexeyAB/darknet/blob/54e2d0b0e8909bc1da8a2d15113b4f2669ce2f4e/src/scale_channels_layer.c#L7-L40

When random parameter of yolo layer is set to 1, it will get CUDNN_STATUS_EXECUTION_FAILED error.

If disable cudnn, it will get illegal memory access error.When random parameter of yolo layer is set to 0, anything is fine.

Do you mean an error occurs during backpropagation from yolo-layer (training)?

As you can see it doesn't use cuDNN: https://github.com/AlexeyAB/darknet/blob/54e2d0b0e8909bc1da8a2d15113b4f2669ce2f4e/src/scale_channels_layer.c#L96-L116

To get correct error place, you should build Darknet with DEBUG=1

@WongKinYiu

What init problem do you mean?

When random parameter of yolo layer is set to 1, it will get CUDNN_STATUS_EXECUTION_FAILED error.

If disable cudnn, it will get illegal memory access error.

When random parameter of yolo layer is set to 0, anything is fine.Do you mean an error occurs during backpropagation from yolo-layer (training)?

As you can see it doesn't use cuDNN:

To get correct error place, you should build Darknet with

DEBUG=1

I am not sure add resize_scale_channels_layer to network.c is necessary or not.

Or maybe error occurs in other layer.

I get a fever now, I will check it using 'DEBUG=1' after I feel better.

@WongKinYiu

I am not sure add resize_scale_channels_layer to network.c is necessary or not.

Yes, it is there: https://github.com/AlexeyAB/darknet/blob/54e2d0b0e8909bc1da8a2d15113b4f2669ce2f4e/src/scale_channels_layer.c#L42-L58

@WongKinYiu

I am not sure add resize_scale_channels_layer to network.c is necessary or not.

Yes, it is there:

I mean here.

But even though I add resize_scale_channels_layer to network.c, the error still occurs.

I have no other idea why it will get error when set random=1 now.

@WongKinYiu I fixed it: https://github.com/AlexeyAB/darknet/commit/5a6afe96d3aa8aed19405577db7dba0ff173c848

I don't get an error if I set width=320 height=320 random=1

@WongKinYiu I fixed it: 5a6afe9

I don't get an error if I set width=320 height=320 random=1

Hello, previous I got error at w=h=416, after training 50~80 epochs.

I will try new repo after tomorrow, thank you.

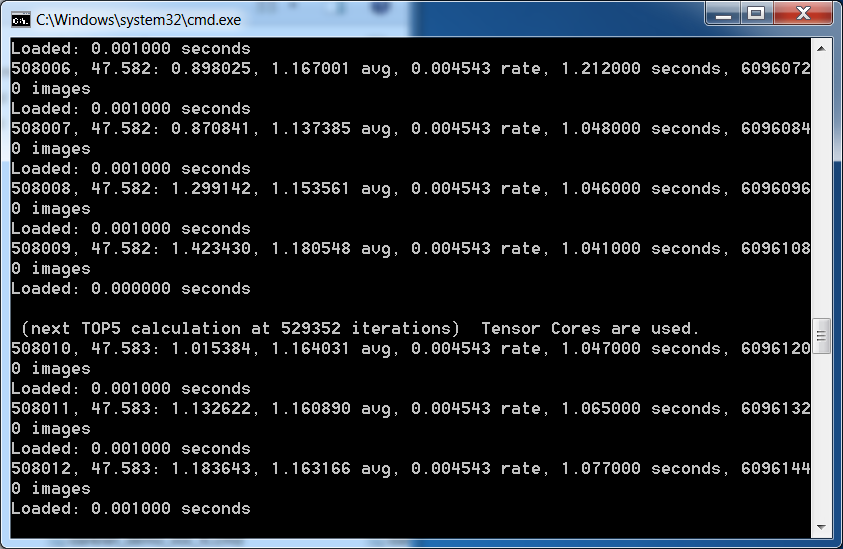

@AlexeyAB The iterations are moving too slowly. They are stopping after every 20-30 iterations.

Is this normal ?

@dexception

The iterations are moving too slowly. They are stopping after every 20-30 iterations.

Is this normal ?

With what message?

(next TOP5 calculation at 20011 iterations) Tensor Cores are disabled until the first 3000 iterations are reached.

(next TOP5 calculation at 20011 iterations) Tensor Cores are disabled until the first 3000 iterations are reached.

(next TOP5 calculation at 20011 iterations) Tensor Cores are disabled until the first 3000 iterations are reached.

(next TOP5 calculation at 20011 iterations) Tensor Cores are disabled until the first 3000 iterations are reached.

The iterations are moving too slowly. They are stopping after every 20-30 iterations.

Is this normal ?

Does the program crash completely after each 30 iterations or just pause for a while?

It pauses for a min and then this line is printed.

(next TOP5 calculation at 20011 iterations) Tensor Cores are disabled until the first 3000 iterations are reached.

@dexception This is very strange. I didn't meet this.

May be it calculates Top5 too often.

Try to set 1000 there: https://github.com/AlexeyAB/darknet/blame/master/src/classifier.c#L144

Is it possible to use efficientnet with yolov3 for object detection, with training in Darknet here?

@nseidl

After you train one of them on ImageNet, it can be used as pre-trained weights-files for detection networks.

Then I will create a Detection network: EfficientNet-backend + TridentNet (or FPN as in Yolov3) + Yolo_HeadI will add GIoU, Mixup, Scale_xy, and may be new_PAN and Assisted Excitation of Activations, If I have time to make them: https://github.com/AlexeyAB/darknet/projects/1#card-22787888

Then you can train it on MS COCO and get state-of-art results.

@dexception This is very strange. I didn't meet this.

May be it calculates Top5 too often.

Try to set 1000 there: https://github.com/AlexeyAB/darknet/blame/master/src/classifier.c#L144

Still Facing the same issue.

So exactly after 30 iterations it is pausing.

@dexception

What batch, subdivisions and GPU do you use?

Can you show GPU-VRAM usage during training?

What CUDA, cuDNN and OS do you use?

How many GPUs do you use?

What training command do you use?

Show your

obj.datafileDo you train on Local or Remote server?

What batch, subdivisions and GPU do you use?

Batch : 128

Subdivisions: 4

Can you show GPU-VRAM usage during training?

+-----------------------------------------------------------------------------+

| Processes: GPU Memory |

| GPU PID Type Process name Usage |

|=============================================================================|

| 0 17652 C ./darknet 6521MiB |

+-----------------------------------------------------------------------------+

index, timestamp, utilization.gpu [%], power.draw [W], temperature.gpu

0, 2019/07/05 09:42:29.860, 0 %, 217.12 W, 58

0, 2019/07/05 09:42:30.863, 68 %, 103.37 W, 55

0, 2019/07/05 09:42:31.864, 0 %, 215.44 W, 58

0, 2019/07/05 09:42:32.869, 52 %, 103.22 W, 56

0, 2019/07/05 09:42:33.870, 16 %, 116.11 W, 56

0, 2019/07/05 09:42:34.872, 38 %, 102.11 W, 56

0, 2019/07/05 09:42:35.873, 30 %, 101.66 W, 56

0, 2019/07/05 09:42:36.875, 18 %, 189.39 W, 57

0, 2019/07/05 09:42:37.876, 50 %, 101.23 W, 56

0, 2019/07/05 09:42:38.878, 6 %, 218.42 W, 59

0, 2019/07/05 09:42:39.879, 63 %, 102.96 W, 56

0, 2019/07/05 09:42:40.881, 0 %, 213.42 W, 59

0, 2019/07/05 09:42:41.882, 68 %, 102.96 W, 56

0, 2019/07/05 09:42:42.885, 0 %, 212.70 W, 60

0, 2019/07/05 09:42:43.887, 68 %, 103.08 W, 56

0, 2019/07/05 09:42:44.889, 0 %, 212.85 W, 60

0, 2019/07/05 09:42:45.890, 69 %, 103.03 W, 57

0, 2019/07/05 09:42:46.892, 0 %, 102.55 W, 56

What CUDA, cuDNN and OS do you use?

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2018 NVIDIA Corporation

Built on Sat_Aug_25_21:08:01_CDT_2018

Cuda compilation tools, release 10.0, V10.0.130

Cudnn:

cudnn-10.0-linux-x64-v7.5.0.56.tgz

How many GPUs do you use?

1 GPU i.e Nvidia RTX 2080 TI

What training command do you use?

nohup ./darknet classifier train cfg/imagenet1k.data cfg/efficientnet_b0.cfg /efficientnet_b0/backup/efficientnet_b0_last.weights -topk -dont_show &

Show your obj.data file

classes=1000

train = /opt/dataset/imagenet_2012/imagenet1k.train.list

valid = /opt/dataset/imagenet_2012/inet.val.list

backup = /opt/work/project_efficientnet/darknet/efficientnet_b0/backup/

labels = /opt/work/project_efficientnet/darknet/cfg/imagenet.labels.list

names = /opt/work/project_efficientnet/darknet/cfg/imagenet.shortnames.list

top=5

Do you train on Local or Remote server?

Remote Server via ssh

With the current speed i am only able to manage 50k interations daily. So training the entire dataset for EfficientNet B0 would take a month. So something is definitely wrong here.

@WongKinYiu I fixed it: 5a6afe9

I don't get an error if I set width=320 height=320 random=1

I still get same error after training 30~60 iterations.

I think maybe w & h of resize_scale_channels_layer is not correct.

In make_scale_channels_layer:

l.out_w = w2;

l.out_h = h2;

In resize_scale_channels_layer:

l->out_w = w;

l->out_h = h;

while w & h should be 1 in scale_channels_layer (assert(w == 1 & h == 1);).

But I don't know why it can run several iterations...

I am waiting for replace Darknet53 with Efficient in backbone of YOLO

@hitle451997

This is going to take a while.

@dexception

Maybe only the display slows down, but training goes without slowing down?

It seems that your HDD or CPU is a bottleneck, because Loading time higher than 0 sec.

Try to use SSD.

@AlexeyAB

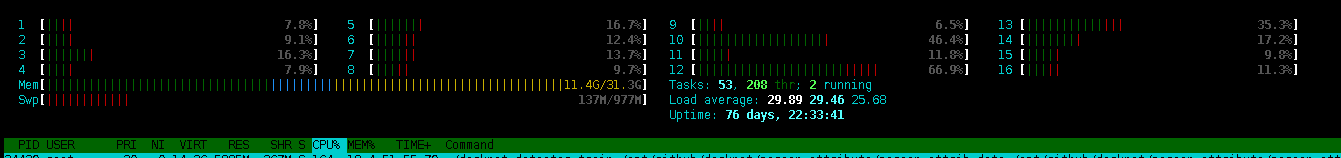

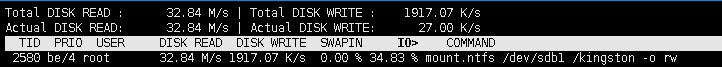

Htop

iotop

I think system is quite resourceful.

Xeon cpu with 16 cores should enough for training.

@dexception

So HDD is a bottleneck.

Load time should be ~0 sec - I use SSD Samsung EVO 850 Pro 1 TB:

@AlexeyAB

Let me put the data on SSD and start training again.

Will share you the details tomorrow.

@AlexeyAB

Read Speed has increased from : 4.88 M/s to 32.84 M/s

6.7 Times increase in speed. :-)

Now if somebody wants to train on Open Images Dataset. Imagine the horror of buying 18TB SSD.

@dexception About ~ $4K for 2 x 8 TB SSD: https://www.amazon.com/Intel-P4510-3-1x4-Solid-SSDPE2KX080T801/dp/B0782Q4CV9

@AlexeyAB

So if we train an EfficientNet-B0 model on OpenImages and then use it as pretrained weights, would it not give better accuracy on than a pretrained model that was trained on imagenet ? There must be some difference ? Right ? What world be that difference ? Would it better or worse ?

@dexception

ImageNet contains about 1.3 million images for Classification - 0.14 TB (140 GB).

Google Open Images dataset contains over 30 million images and 15 million bounding boxes for Detection - 18 TB (18 000 GB).

So yes, its better to train the model on OpenImages.

I just don't know should we immediately train the Detector, or do we need to train the Classifier for some time, and then use this pre-trained weights for the Detector training.

Do you have 18 TB SSD and want to train on OpenImages?

@AlexeyAB

Google Open Images dataset contains over 30 million images and 15 million bounding boxes for Detection - 18 TB (18 000 GB).

Is it necessary to take all classes? what about extracting some of them? I extracted 2 classes from openimage and size was about 20-30 GB

@dexception

- ImageNet contains about 1.3 million images for Classification - 0.14 TB (140 GB).

- Google Open Images dataset contains over 30 million images and 15 million bounding boxes for Detection - 18 TB (18 000 GB).

So yes, its better to train the model on OpenImages.

I just don't know should we immediately train the Detector, or do we need to train the Classifier for some time, and then use this pre-trained weights for the Detector training.Do you have 18 TB SSD and want to train on OpenImages?

Well the reason why i was asking question was that we are training our current models on darknet53 which is training on mscoco/imagenet ... so it would be better to use the pre trained weights from

https://pjreddie.com/media/files/yolov3-openimages.weights

This will prove the difference in terms of accuracy(if any) that we get. Then decide whether it is worth training any models on open-images.

If there is too much difference in accuracy then why not merge both datasets and then create pretrained models ? This is a topic that i have not seen discussed anywhere.

I don't have the hardware to train OpenImages right now. Even how time it would take to train such a big dataset ..couple of years on my RTX 2080TI. This is a job only TPU's can handle.

Lets have the EfficientNet-B0 model ready as a classifier first then work on object detection.

@dexception @AlexeyAB really interesting work, excited to see what comes out.

I have a couple of questions / comments:

- Is any eventual Object Detector that comes out of this project likely to be very low FPS, at least for the time being? (Like in this result that found efficientnet-B0 based network more than an order of magnitude slower than darknet-tiny using this repo.) https://github.com/AlexeyAB/darknet/issues/3580

- Would openimages be inappropriate for training a classifier like efficientnet given that it principally contains complex scenes with many different classes in, rather than just one class per scene like in imagenet?

@LukeAI

I am quite puzzled myself. It was last year since i opened the issue:

https://github.com/AlexeyAB/darknet/issues/1232

And since then i have evaluated more than 400 open source repositories and many many research papers. Many commercial implementations.

Apart from LBP for single class detection implementation from https://github.com/ShiqiYu/libfacedetection i haven't come across any open source implementation that is remotely close to the commercial offerings from various vendors. Yolov3-Tiny is still by far the best trade-off in terms of accuracy/speed/cost.

@AlexeyAB

Please correct me if i am wrong.

@LukeAI

Is any eventual Object Detector that comes out of this project likely to be very low FPS, at least for the time being? (Like in this result that found efficientnet-B0 based network more than an order of magnitude slower than darknet-tiny using this repo.) #3580

I don't unserstand, do you want to find very slow Detector? )

Would openimages be inappropriate for training a classifier like efficientnet given that it principally contains complex scenes with many different classes in, rather than just one class per scene like in imagenet?

I don't know.

I can propose, that it would be better to train Classifier EfficientNet on ImageNet (0.13 TB), and the train Detector-basedon-EfficientNet on OpenImages 18 TB.

@dexception

Did you train EfficientNetB0 for 1 M - 1.6 M iterations and what Top1/5 accuracy did you get?

Yes, Yolov3 and tiny are the best models, just we should make something like v4 with new features: SPP + PAN2 + GIoU + scales_x_y + Assisted Excitation of Activations + squeeze_n_excitation + swish_activation + corner_head.

Apart from LBP for single class detection implementation from https://github.com/ShiqiYu/libfacedetection i haven't come across any open source implementation that is remotely close to the commercial offerings from various vendors. Yolov3-Tiny is still by far the best trade-off in terms of accuracy/speed/cost.

Yes, old approaches LBP, DFM, HaarCascades... are fast but can detect only 1 object only from 1 side with ~the same exposure, tilt, rotation ....

In all object detection challengaes (PascalVOC, OpenImages, MS COCO, ImageNet, Kitty, Cityscapes,...) the winners are Deep Learning models in all places, all last 4 years.

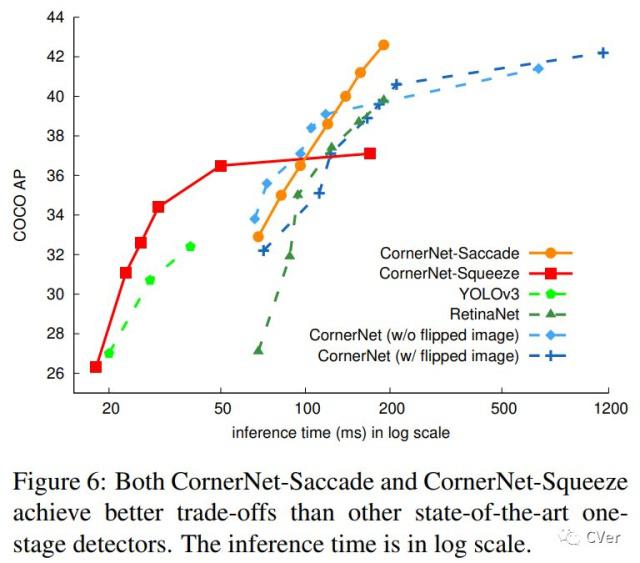

In terms of inference time, Yolov3-tiny and Yolov3 are the optimal models may be except CornerNet

(but we should compare Yolov3 + SPP + PAN2 + GIoU + scales_x_y + Assisted Excitation of Activations vs CornerNet, may be we should use [corner]-layer-head instead of [yolo]-layer-head):

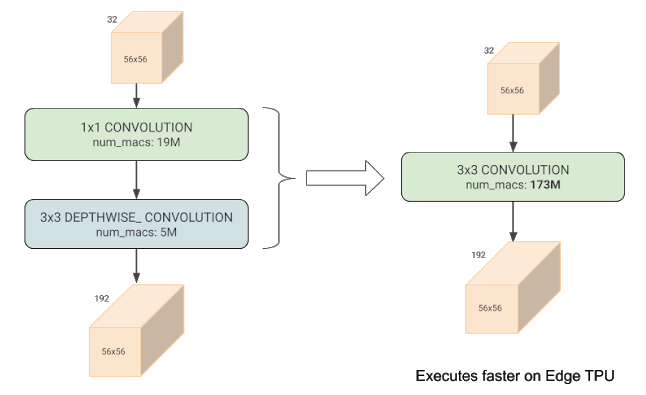

In terms of BFLOPS, the best model EfficientNet, but it isn't the fastest until there will be mainstream hardware devices with very fast depthwise-convolutional layer processing:

I don't unserstand, do you want to find very slow Detector? )

No, of course not... I'm just wondering if this efficientnet/yolo project has any near-future potential to become a high AP realtime OD algoirithm on GPUs.

I can propose, that it would be better to train Classifier EfficientNet on ImageNet (0.13 TB), and the train Detector-basedon-EfficientNet on OpenImages 18 TB.

This sounds very sensible. Just want to point out that 18 TB is the entire dataset. Only a subset of Openimages is actually annotated with bounding boxes and that weighs in at 561 GB uncompressed (see here

Yes, Yolov3 and tiny are the best models, just we should make something like v4 with new features: SPP + PAN2 + GIoU + scales_x_y + Assisted Excitation of Activations + squeeze_n_excitation + swish_activation + corner_head.

Sounds great. I want to contribute if I can. Even if that's just running experiments. My findings on the above points seem to indicate that SPP is unambiguously better (as everybody knows by now), PAN2 helps a little (although does slow things down - difficult to say if the trade is unambiguously worth it), Swish helps a little and is essentially no cost. Don't really know about the other stuff.

I want to train a Darknet53 network with swish activations on Imagenet so that we have a starting point of pre-trained weights to run other experiments from. Efficientnet looks like it has more long term potential but perhaps not on currently existing GPUs?

- Are there any other large single class datasets that you know of that I could throw in with Imagenet to get even more breadth and diversity?

- What resolution should I train at? PJ Reddie uses 448 x 448 with letterboxing in his highest performing darknet53 but presumably higher resolution would give better weights for training higher resolution ODs? I'm also unsure if I should be cropping, letterboxing or distorting. Would appreciate any thoughts?

I've just been checking out some of the human-verified image-level only images from openimages Here are the first five from the training set:

https://c7.staticflickr.com/6/5499/10245691204_98dce75b5a_o.jpg

https://farm1.staticflickr.com/5615/15335861457_ec2be7a54e_o.jpg

https://c7.staticflickr.com/8/7590/17048042861_97168daff8_o.jpg

https://farm5.staticflickr.com/5582/18233009494_029b52ca79_o.jpg

https://farm6.staticflickr.com/4126/5145819744_b4a7871064_o.jpg

Possibly we could download just those images that have only one kind of class label? And then train a classifier on imagenet, openimages, imagenet + openimages - and then train an OD on all three starting weights on some different dataset to see how much, if any, difference it makes?

@AlexeyAB

I was assigned other work and since i only have one GPU to work with i had to stop training and will resume once i am finished with new assignment. I guess we all know how software industry works. :-)

Its crazy sometimes.

I am going to share my real life practical experience with you and going to be quite blunt about it :-)

Your missing the point when i am talking this repo https://github.com/ShiqiYu/libfacedetection

The default opencv implementation of LBP is not that fast. There are huge performance improvements in his code. I am just wondering whether certain C optimizations can give a boost or not.

As long as we are using fully connected layers i doubt we will have any improvements in yolov3-tiny's inference time. Activation function also needs to be replaced. Please refer to 3.1 section of this paper:

Compact Convolutional Neural Network Cascade

https://arxiv.org/pdf/1508.01292

just we should make something like v4 with new features: SPP + PAN2 + GIoU + scales_x_y + Assisted Excitation of Activations + squeeze_n_excitation + swish_activation + corner_head.

As long as it doesn't increase the computations in the network and doesn't increase the cost of the overall machine.

Int8 Quantization

Couple of months back i did a comparison between TensorRT yolo quantization vs yolov2_light repo.

https://github.com/AlexeyAB/yolo2_light/issues/51

TensorRT quantization is way better but still the accuracy that they claim in the papers is not even close.

Please refer to this issue:

https://devtalk.nvidia.com/default/topic/1050874/tensorrt/int8-calibration-is-not-accurate-see-image-diff-with-and-without/post/5354732/#5354732

I guess yolov2_light's quantization needs a calibration table, the way Nvidia is doing it.

Improvements

I would love to have the option of having an 8 point coordinate(x1,y1,x2,y2,x3,y3,x4,y4).

Increasing possibilities of using it as a text detector and on satellite images.

Also for weapon detection this would help because when your tagging images often hand is major part of the 7 shape of the gun. So when your using it it real life if somebody is sitting with his hands folded, or shaking hands or holding a mobile phone or a cup there are false alerts since hand was part of the tagging. And daily we get about 50 false alerts in production from a single camera.

Cost

Imagine my horror when somebody says you need to monitor 2 security cameras on i5 without GPU. They want everything from Person Detection to a bunch of 10 other things. But i tell them i need a GPU and the cost goes up 4 times per camera.

So the point is that, this darknet repo is the best real time object detection repo but we are missing the above points and we should focus on keeping this repo the best real time object detection repo.

@LukeAI

In theory we all agree adding more data will increase the accuracy so its just a matter of getting hardware. So its a long term thing since none of us have access to huge machines.

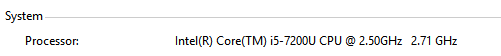

What is the inference time for efficientnet B0 on a high end cpu?

What is the inference time for efficientnet B0 on a high end cpu?

Took the latest clone of the repo 2 minutes ago.

Ran on my laptop with 2 GB 940 MX GPU memory

Ran this 10 times. The time is consistent.

GPU Time:

darknet.exe classifier predict cfg/imagenet1k.data cfg/efficientnet_b0.cfg efficientnet_b0_last.weights data/eagle.jpg

data/eagle.jpg: Predicted in 0.251000 seconds.

Darknet19

darknet.exe classifier predict cfg/imagenet1k.data cfg/darknet19.cfg darknet19.weights data/eagle.jpg

data/eagle.jpg: Predicted in 0.025000 seconds.

Darknet53

darknet.exe classifier predict cfg/imagenet1k.data cfg/darknet53.cfg darknet53.weights data/eagle.jpg

data/eagle.jpg: Predicted in 0.054000 seconds.

Thanks! What about if you run on CPU?

Processor used:

CPU Timings:

darknet_no_gpu.exe classifier predict cfg/imagenet1k.data cfg/efficientnet_b0.cfg efficientnet_b0_last.weights data/eagle.jpg

Used AVX

Used FMA & AVX2

data/eagle.jpg: Predicted in 0.216000 seconds.

Darknet19

darknet_no_gpu.exe classifier predict cfg/imagenet1k.data cfg/darknet19.cfg darknet19.weights data/eagle.jpg

Used AVX

Used FMA & AVX2

data/eagle.jpg: Predicted in 0.162000 seconds.

Darknet53

darknet_no_gpu.exe classifier predict cfg/imagenet1k.data cfg/darknet53.cfg darknet53.weights data/eagle.jpg

Used AVX

Used FMA & AVX2

data/eagle.jpg: Predicted in 0.494000 seconds.

@dexception

As long as we are using fully connected layers i doubt we will have any improvements in yolov3-tiny's inference time. Activation function also needs to be replaced. Please refer to 3.1 section of this paper:

Compact Convolutional Neural Network Cascade

https://arxiv.org/pdf/1508.01292

What do you mean?

Yolov3-tiny, Yolov3, Yolov2-tiny, Yolov2 - don't use fully connected layers.

As long as it doesn't increase the computations in the network and doesn't increase the cost of the overall machine.

GIoU + scales_x_y + Assisted Excitation of Activations + corner_head- don't increase inference timeSPP + PAN2 + squeeze_n_excitation + swish_activation- increase inference timy slightly, but much improves accuracy. There is no point in thinking only about accuracy or only execution time. You must be on the pareto optimality curve.

For example, just assuming, even if SPP + PAN2 greatly increases the execution time, but allows you to go to a more optimal Pareto-curve, then we can simply reduce the network resolution or the number of layers and we will get better accuracy with less execution time.

Couple of months back i did a comparison between TensorRT yolo quantization vs yolov2_light repo.

AlexeyAB/yolo2_light#51

TensorRT quantization is way better but still the accuracy that they claim in the papers is not even close.

Please refer to this issue:

https://devtalk.nvidia.com/default/topic/1050874/tensorrt/int8-calibration-is-not-accurate-see-image-diff-with-and-without/post/5354732/#5354732

I guess yolov2_light's quantization needs a calibration table, the way Nvidia is doing it.

What does it mean - better? Does it have higher mAP or less inference time, or both?

I do it based on this presentation by using KL_divergence with saturation http://on-demand.gputechconf.com/gtc/2017/presentation/s7310-8-bit-inference-with-tensorrt.pdf there is nothing about calibration table, what do you mean?

Currently OpenCV-dnn is the fastest module to run Yolov3 and Yolov3-tiny on CPU in real-time.

I am also working on implementing Yolo v3 on a chip Intel Myriad X (NCS2), so currently it can process Yolov3-tiny 20 FPS on Intel Atom CPU + Intel Myriad X (1 watt - 100$).

@LukeAI

I can try to implement Darknet53 with swish-activation + squeeze_n_excitation_blocks, so you can try to train it on ImageNet for 1 - 1.6 M iterations on ImageNet + Stylized ImageNet.

If I will have a time. You can just now try to train Darknet53 + swish-activation.

Are there any other large single class datasets that you know of that I could throw in with Imagenet to get even more breadth and diversity?

I would recommend you to train this Classifier on ImageNet + Stylized ImageNet - it gives + several % of Top1:

- how to create Stylized ImageNet: https://github.com/rgeirhos/Stylized-ImageNet

- just to know: https://github.com/rgeirhos/texture-vs-shape

Joseph Redmon trained Darknet53 on 256x256 resolution for 800K iterations https://github.com/pjreddie/darknet/blob/master/cfg/darknet53.cfg#L10-L11

And only then change network resolution to 448x448 and continue training for 100K iterations https://github.com/pjreddie/darknet/blob/master/cfg/darknet53_448.cfg#L10-L11

No, of course not... I'm just wondering if this efficientnet/yolo project has any near-future potential to become a high AP realtime OD algoirithm on GPUs.

Every two years, the performance of the GPU and the CPU increases, neurochips (Intel Myriad X) or built-in neurochips appear in smartphones. So with all these improvements, yes - Yolo-v3 will be the most accurate with the same speed and the fastest with the same accuracy of all universal detection algorithms.

@AlexeyAB

https://github.com/NVIDIA-AI-IOT/deepstream_reference_apps/blob/master/yolo/README.md

Once you do calibration on a dataset a calibration table is generated and this used for inference.

This dataset can be same to one one which you have trained your model.

Please go through the app and see the inference time which is quite similar to yolo2_light but way more accurate than yolo2_light repo. Infact nearly twice as more accurate in some cases.

Fully Connected Layers

I know yolov2, yolov3 doesn't use fully connected layers. Quote from the article :

the lack of fully-connected layer gives a 50% increase in speed of a forward propagation procedure.

I was referring to the approach in the article.

https://arxiv.org/pdf/1508.01292

Yolov4-Tiny [Future Release]

A faster and more accurate version of Yolov3-Tiny.

Please share a cfg file with darknet19 + swish-activation + squeeze_n_excitation_blocks

NCS2

I have also tried the Intel Neural Computing Stick V2. Can't reveal much about it. As it is still buggy.

So waiting for the next release.

@LukeAI

EfficientNet-B0

Given the inference time tested using cudnn 7.6.1(latest as of today). I understand that the FP16 implementation of depthwise-conv is different from fp32. So the inference time would be faster with CUDNN_HALF. Nvidia has promised to improve it in future on fp32.

Efficient-NetB0 will be faster than darknet53 on CPU. Almost twice as fast.

So it is not completely useless.

@dexception

Once you do calibration on a dataset a calibration table is generated and this used for inference.

This dataset can be same to one one which you have trained your model.

It seems it is something like this: https://github.com/AlexeyAB/yolo2_light/blob/master/bin/yolov3-tiny.cfg#L25

Please go through the app and see the inference time which is quite similar to yolo2_light but way more accurate than yolo2_light repo. Infact nearly twice as more accurate in some cases.

What mAP did you get in both cases?

What dataset did you use?

I have also tried the Intel Neural Computing Stick V2. Can't reveal much about it. As it is still buggy.

So waiting for the next release.

Yes, we are waiting for bug fixes.

They promise to fix several bugs and implement fast async implementation of Yolov3 on NCS2 by using OpenCV-dnn with OpenVINO backend: https://github.com/opencv/opencv/issues/15023#issuecomment-510845216

With --async=3 it should work with the highest FPS in new OpenCV and new OpenVINO libraries: https://github.com/opencv/opencv/pull/14516

@AlexeyAB

Dataset : photos from social media+google images

Yolov3-Tiny mAP: 80%

I did't compare the actual mAP from TensorRT with Yolov2_light after quantization but TensorRT one was better. The case with yolo2_light quantization is quite similar to the once i also discussed with you while comparing yolov3-tiny with yolov3-tiny-xnor.

https://github.com/AlexeyAB/darknet/issues/2605

We discussed that the bounding boxes are not accurate even though they might give decent [email protected]

TensorRT quantization.

TensorRT quantization is generated for a specific dataset that results in higher mAP. Can you share how you came up with the calibration in the cfg file ? Is there a way to generate it on a dataset and then copy paste it in the cfg file ?

Waiting for your inputs regarding:

- darknet19 + swish-activation + squeeze_n_excitation_blocks.

- Rotated Rectangle with 8 point coordinate(x1,y1,x2,y2,x3,y3,x4,y4) based yolov3-tiny, yolov3

@dexception

We discussed that the bounding boxes are not accurate even though they might give decent [email protected]

TensorRT quantization.

Without exact [email protected] values on the same dataset we can't say that one model is better than other, because one model can have higher mAP but can require lower confidence-threshold, so with the same

-thresh 0.25it will have lower TP, but with lower threshold-thresh 0.15it can have more TP.If you are worried about more accurate bboxes, you should compare [email protected] or @0.90 instead of @0.5

Also it is a bad practice - visual compariosn of the models just by few images. So mAP comparison is required on validation dataset with several thousand images.

TensorRT quantization is generated for a specific dataset that results in higher mAP. Can you share how you came up with the calibration in the cfg file ? Is there a way to generate it on a dataset and then copy paste it in the cfg file ?

./darknet detector calibrate obj.data tiny-yolo-voc.cfg tiny-yolo-voc.weights -input_calibration 100 it will calibrate the model on training dataset from obj.data file for the first 100 images.

and will create input_calibration.txt file with input_calibration = 15.7342, 4.41852, 9.17237, 9.70713, 13.1849, 14.9823, 15.1913, 8.62978, 15.7353, 15.6297, 15.6939, 15.4093, 15.8055, 16 params which you should copy paste to the [net] section in used cfg-file.

But default input_calibration params in yolov3.cfg/tiny seems works better. Just use lower threshold.

But I'm not sure that quantization before int8 after learning is generally a good idea. Perhaps it is required training int8. Or may be XNOR-net is better.

What is this calibration?

@dexception

EfficientNet-B0

Given the inference time tested using cudnn 7.6.1(latest as of today). I understand that the FP16 implementation of depthwise-conv is different from fp32. So the inference time would be faster with CUDNN_HALF. Nvidia has promised to improve it in future on fp32.

Efficient-NetB0 will be faster than darknet53 on CPU. Almost twice as fast.

So it is not completely useless.

@AlexeyAB

* **EfficientNet B0** (224x224) 0.9 BFLOPS - 0.45 B_FMA (16ms / RTX 2070): [efficientnet_b0.cfg.txt](https://github.com/AlexeyAB/darknet/files/3336187/efficientnet_b0.cfg.txt) - **2.5 days** * **EfficientNet B0 XNOR** (224x224) 0.8 BFLOPS + 25 BOPS (18ms / RTX 2070): [efficientnet_b0_xnor.cfg.txt](https://github.com/AlexeyAB/darknet/files/3342957/efficientnet_b0_xnor.cfg.txt) - **5 days** * **EfficientNet B3** (288x288) 3.5 BFLOPS - 1.8 B_FMA (28ms/RTX 2070): [efficientnet_b3.cfg.txt](https://github.com/AlexeyAB/darknet/files/3340717/efficientnet_b3.cfg.txt) - **11 days** * **EfficientNet B3** (320x320) 4.3 BFLOPS - 2.2 B_FMA (30ms/RTX 2070): [efficientnet_b3_320.cfg.txt](https://github.com/AlexeyAB/darknet/files/3340753/efficientnet_b3_320.cfg.txt) - **14 days** * **EfficientNet B4** (384x384) 10.2 BFLOPS - 5.1 B_FMA (46ms/RTX 2070): [efficientnet_b4.cfg.txt](https://github.com/AlexeyAB/darknet/files/3340718/efficientnet_b4.cfg.txt) - **26 days**

I'm a little confused! So efficientnet is really slow only if using fp32? Were these impressive inference times achieved using CUDNN_HALF ?

**Output from RTX 2080 TI**

Cuda 10

CUDNN 7.5.0

CUDA allocate done!

224 224

data/eagle.jpg: Predicted in 0.003562 seconds.

**Output from GTX 1050 TI**

CUDA : 9.0

CUDNN: 7.6.1

CUDA allocate done!

224 224

data/eagle.jpg: Predicted in 0.113574 seconds.

**Output from GTX 1060**

CUDA 10.0

CUDNN 7.5.0

CUDA allocate done!

224 224

data/eagle.jpg: Predicted in 0.129041 seconds.

@AlexeyAB I used your 500k model for efficientnet-b0 weights to resume training and after the weights were saved, ran the following command the mAP dropped.

./darknet classifier valid cfg/imagenet1k.data cfg/efficientnet_b0.cfg efficientnet_b0_last.weights

top 1: 0.317949, top 5: 0.533333

@AlexeyAB

Can you clarify what is going on ?

Because i have trained the model till 500k and there seems to be a huge difference your accuracy and my accuracy ? Is it because you have train a model continuously and should not resume in between ?

@dexception

I resumed training several times.

It is because I used other learning rate and policy. You should train 1.6M iterations.

@AlexeyAB

The model is in training mode. Its has reached 600k.

I will share the model when it reaches 1.6 million.

Hello,

I have checked the code of new operations, some bugs are listed below:

avgpool layer

Originally, avgpool layer is designed for using in the last layer of classifier.

If there is an avgpool layer, resize_network will break.

https://github.com/AlexeyAB/darknet/blob/master/src/network.c#L550scale_channels layer

scale_channels layer usually has size equal to 1x1xc, but it should resize the output equal to the size of "from" layer.

https://github.com/AlexeyAB/darknet/blob/master/src/scale_channels_layer.c#L42-L58

I think it should be similar to the resize function of route layer.

https://github.com/AlexeyAB/darknet/blob/master/src/route_layer.c#L39convolutional layer with swish activation function

swish activation function is seen as a special case in convolutional layer.some code I am not sure will have problem

scale_channels layer is not listed in get_layer_string function.

https://github.com/AlexeyAB/darknet/blob/master/src/network.c#L172-L223

And above bugs may get error when training a detector.

(CUDNN_STATUS_EXECUTION_FAILED, illegal memory access, illegal instruction was encountered...)

I have tried:

- CUDA 8 + TITAN X

- CUDA 8 + 1080 ti

- CUDA 9 + 1080 ti

- CUDA 9 + TITAN X (Pascal)

- CUDA 10 + 1080

- CUDA 10 + 2080 ti

- CUDA 10 + TITAN X (Pascal)

All of these get errors.

@AlexeyAB

Should i stop training ?

@dexception How many iterations and TopK do you get currently?

share my result.

@WongKinYiu How many iterations did you train?

@WongKinYiu How many iterations did you train?

600k only.

@WongKinYiu You should train 1M - 1.6M iterations

https://github.com/AlexeyAB/darknet/issues/3380#issuecomment-511189891

@WongKinYiu You should train 1M - 1.6M iterations

#3380 (comment)

Because currently I can not use EfficientNet_b0 as backbone of the detector, so I stop training.

(Training depthwise convolutional layer takes looooooooooooong time.)

Could you provide cfg file of EfficientNet_b0 with learning rate policy for 1.6M iterations?

I will get available GPU next Tuesday, then I can train it.

@WongKinYiu

EfficientNet_b0 with learning rate policy for 1.6M iterations: https://github.com/AlexeyAB/darknet/issues/3380#issuecomment-501263052

@WongKinYiu

EfficientNet_b0 with learning rate policy for 1.6M iterations: #3380 (comment)

got it, thank you.

@AlexeyAB

That is strange .. i am not getting the same accuracy as @WongKinYiu

@WongKinYiu

Can you share your cfg file which you trained for 600k iterations ?

My Results:

efficientnet_b0_729100.weights

Used https://github.com/AlexeyAB/darknet/files/3336187/efficientnet_b0.cfg.txt

top 1: 0.546160, top 5: 0.799100

@dexception

I use this cfg file.

https://github.com/AlexeyAB/darknet/issues/3380#issuecomment-503746180

@WongKinYiu

Got it ..there is a policy difference.

@AlexeyAB

Are you sure 1.6 million iterations are enough ?

Here is the link for the currently trained model:

Results:

efficientnet_b0_729100.weights

Used https://github.com/AlexeyAB/darknet/files/3336187/efficientnet_b0.cfg.txt

top 1: 0.546160, top 5: 0.799100

https://drive.google.com/open?id=1vYRmFjYgCMdt3f9XTebjf_kU9_qb-Z_Y

@dexception

Are you sure 1.6 million iterations are enough ?

I think yes. Just try to train 1.6m.

Although I can not train the detector contains conv+swish & squeeze-and-excitation layers with random=1,

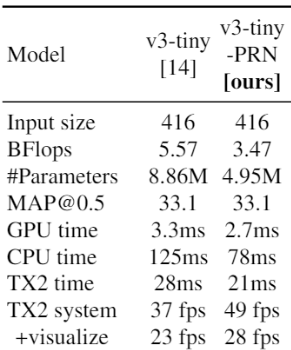

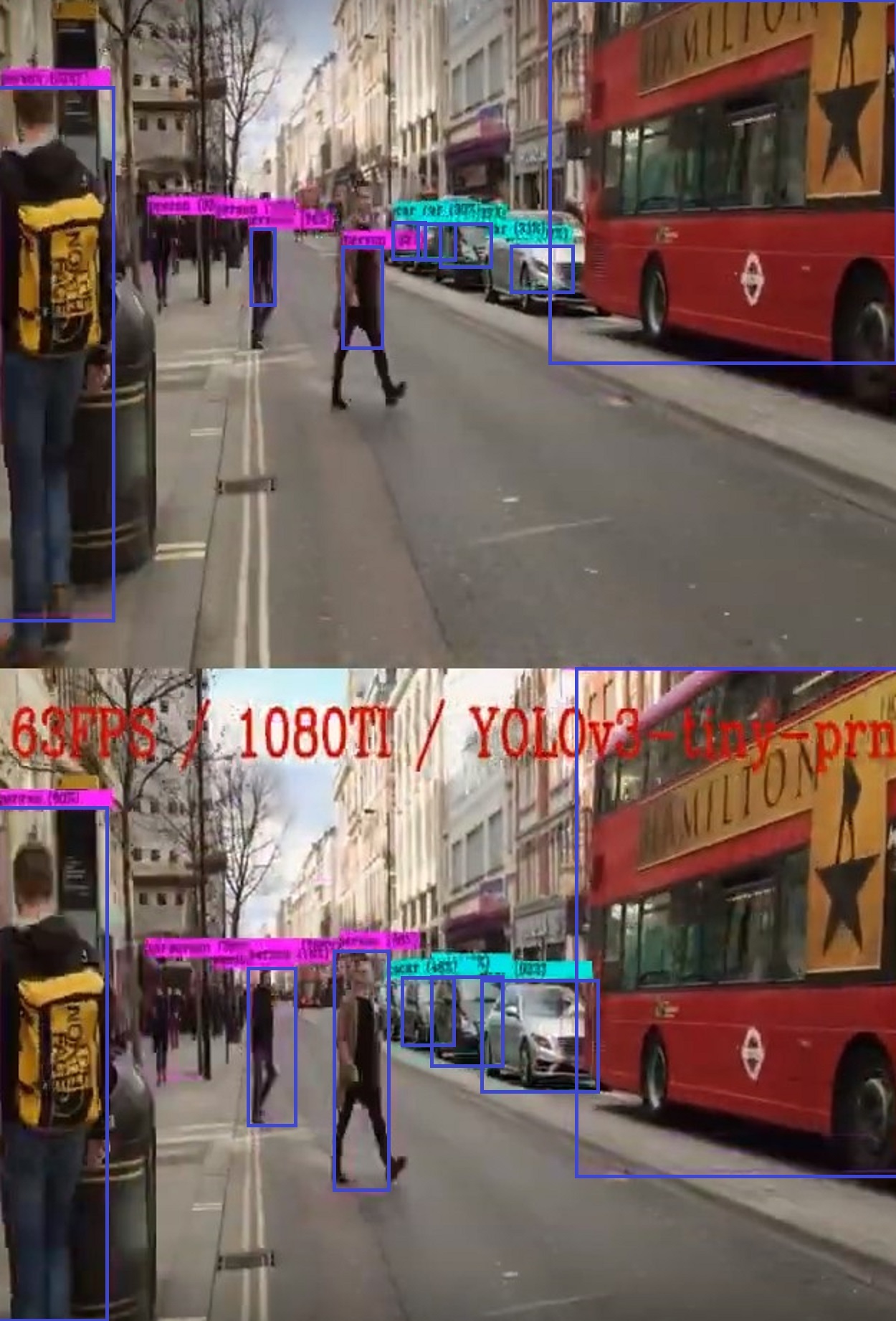

I get 42.2% [email protected] with 2.6 BFLOPs on COCO test-dev (random=0).

(YOLOv3-tiny get 33.1% [email protected] with 5.6 BFLOPs)

If the bugs are fixed, I think it may get better performance than CenterNet based methods.

@WongKinYiu Can you share your cfg-file for Detector (2.6 BFLOPS)?

Is it based on efficientnet_b0.cfg.txt ?

@WongKinYiu Can you share your cfg-file for Detector (2.6 BFLOPS)?

Is it based on efficientnet_b0.cfg.txt ?

No, it is not based on efficientnet_b0.

I found that the performance of efficientnet_b0 with leaky ReLU and swish activation function have large gap.

So I change the activation function of my proposed architecture.

I use image size=320*320 for testing, so the computation cost is 2.6 BFLOPs.

The network has more than 300 layers in cfg file.

Even though it only has 2.6 BFLOPs, its inference time is 2~3 times than YOLOv3-tiny on GPU. (~0.8 times on CPU)

The paper is under writing.

If you need cfg file, I can send it to you, but please do not share it before my paper is published.

If you don't need cfg file, I can share you my experiments for implementing state-of-the-art methods.

For example, https://github.com/AlexeyAB/darknet/issues/3380#issuecomment-503780307 .

@WongKinYiu Yes, please send me cfg-file: [email protected]

Did you change source coda of Darknet framework to implement CEM + SAM?

I found that the performance of efficientnet_b0 with leaky ReLU and swish activation function have large gap.

Did you train it 600k iterations?

@AlexeyAB

I do not change source code for the model which get 42.2% [email protected] with 2.6 BFLOPs.

But for supporting SAM module, yes.

I change the scale channels layer from 1 * 1 * c to w * h * c.

(I am not familiar to add new layer on darknet, so I modify existed layer.)

squeeze-and-excitation = avgpool + conv + scale_channels

SAM = conv + scale_maps

Yes, both of EfficientNet-b0 with leaky ReLU and swish activation function trained 600k iterations.

Here are the stat-of-the-art methods I have implemented.

(Some of them are borrowed from your cfg files.)

- Yolov3: An incremental improvement.

- Feature pyramid networks for object detection.

- Path aggregation network for instance segmentation.

- Sparsely Aggregated Convolutional Networks.

- Spatial pyramid pooling in deep convolutional networks for visual recognition.

- ThunderNet: Towards Real-time Generic Object Detection.

- Pelee: A real-time object detection system on mobile devices.

- Tiny-DSOD: Lightweight Object Detection for Resource Restricted Usage.

- Scale-Aware Trident Networks for Object Detection.

- DC-SPP-YOLO: Dense Connection and Spatial Pyramid Pooling Based YOLO for Object Detection.

- Generalized Intersection over Union: A Metric and A Loss for Bounding Box Regression.

- Bag of Freebies for Training Object Detection Neural Networks.

3, 4, 5, 6 make obvious improvement of mAP.

11 get impressive performance on [email protected]:0.95.

12 can not get good results on lightweight models.

@WongKinYiu would you consider sending me your detector .cfg ? I have access to an array of GPUs and I'd been happy to train on a few standard datasets and send you the results. I'd love to study the .cfg and I'd be happy to keep secret until you are ready to release. ezekiel.incorrigible at gmail.com

2.2 BFLOPs, 41.0 [email protected] on COCO test-dev.

@AlexeyAB

Any update ?

@WongKinYiu very exciting! did you do pretraining on imagenet for the feature extractor? do you have any pretrained weights?

@hwijune u can just replace all swish to leaky.

@LukeAI i use the imagenet pretrained model from https://github.com/AlexeyAB/darknet/issues/3380#issuecomment-503746180