Darknet: Implement Yolo-LSTM (~+4-9 AP) for detection on Video with high mAP and without blinking issues

Implement Yolo-LSTM detection network that will be trained on Video-frames for mAP increasing and solve blinking issues.

- https://arxiv.org/abs/1705.06368v3

- https://arxiv.org/abs/1506.04214v2

- Multi-object Tracking with Neural Gating Using Bilinear LSTM: https://web.engr.oregonstate.edu/~lif/1925.pdf

Think about - can we use Transformer (Vaswani et al., 2017) / GPT2 / BERT for frame-sequences instead of word-sequences https://d4mucfpksywv.cloudfront.net/better-language-models/language_models_are_unsupervised_multitask_learners.pdf and https://vk.com/away.php?to=https%3A%2F%2Farxiv.org%2Fpdf%2F1706.03762.pdf&cc_key=

Or can we use Transformer-XL https://arxiv.org/abs/1901.02860v2 or UNIVERSAL TRANSFORMERS https://arxiv.org/abs/1807.03819v3 for Long-time sequences?

All 387 comments

Yes, I was looking this similar thing a few weeks ago. You might find this paper interesting.

Looking Fast and Slow: Memory-Guided Mobile Video Object Detection

https://arxiv.org/abs/1903.10172

Mobile Video Object Detection with Temporally-Aware Feature Maps

https://arxiv.org/pdf/1711.06368.pdf

source code:

https://github.com/tensorflow/models/tree/master/research/lstm_object_detection

@i-chaochen Thanks! It's new interest state-of-art solution by using conv-LSTM not only for increasing accuracy, but also for speedup.

Did you understand what do they mean here?

Does it mean that there are two models:

- f0 (large model

320x320with depth1.4x) - f1 (small model

160x160with depth0.35x)

And states c will be updated only by using f0 model during both Training and Detection (while f1 will not update sates c)?

We also observe that one inherent weakness of the LSTM is its inability to completely preserve its state across updates in practice. The sigmoid activations of the input and forget gates rarely saturate completely, resulting in a slow state decay where long-term dependencies are gradually lost. When compounded over many steps, predictions using the f1 degrade unless f0 is rerun.

We propose a simple solution to this problem by simply skipping state updates when f1 is run, i.e. the output state from the last time f0 was run is always reused. This greatly improves the LSTM’s ability to propagate temporal information across long sequences, resulting in minimal loss of accuracy even when f1 is exclusively run for tens of steps.

@i-chaochen Thanks! It's new interest state-of-art solution by using conv-LSTM not only for increasing accuracy, but also for speedup.

Did you understand what do they mean here?

Does it mean that there are two models:

- f0 (large model

320x320with depth1.4x)- f1 (small model

160x160with depth0.35x)And states c will be updated only by using f0 model during both Training and Detection (while f1 will not update sates c)?

We also observe that one inherent weakness of the LSTM is its inability to completely preserve its state across updates in practice. The sigmoid activations of the input and forget gates rarely saturate completely, resulting in a slow state decay where long-term dependencies are gradually lost. When compounded over many steps, predictions using the f1 degrade unless f0 is rerun.

We propose a simple solution to this problem by simply skipping state updates when f1 is run, i.e. the output state from the last time f0 was run is always reused. This greatly improves the LSTM’s ability to propagate temporal information across long sequences, resulting in minimal loss of accuracy even when f1 is exclusively run for tens of steps.

Yes. You have a very sharp eye!

Based on their paper, f0 is for accuracy and f1 is for speed.

They use f0 occasionally for the updates of state, whilst f1 most of time for speed up the testing.

Thus, following this "simple" intuition, part of this paper contribution is to use "Reinforcement Learning" to learn an optimized interleaving policy for f0 and f1.

We can try to have this interleaving first.

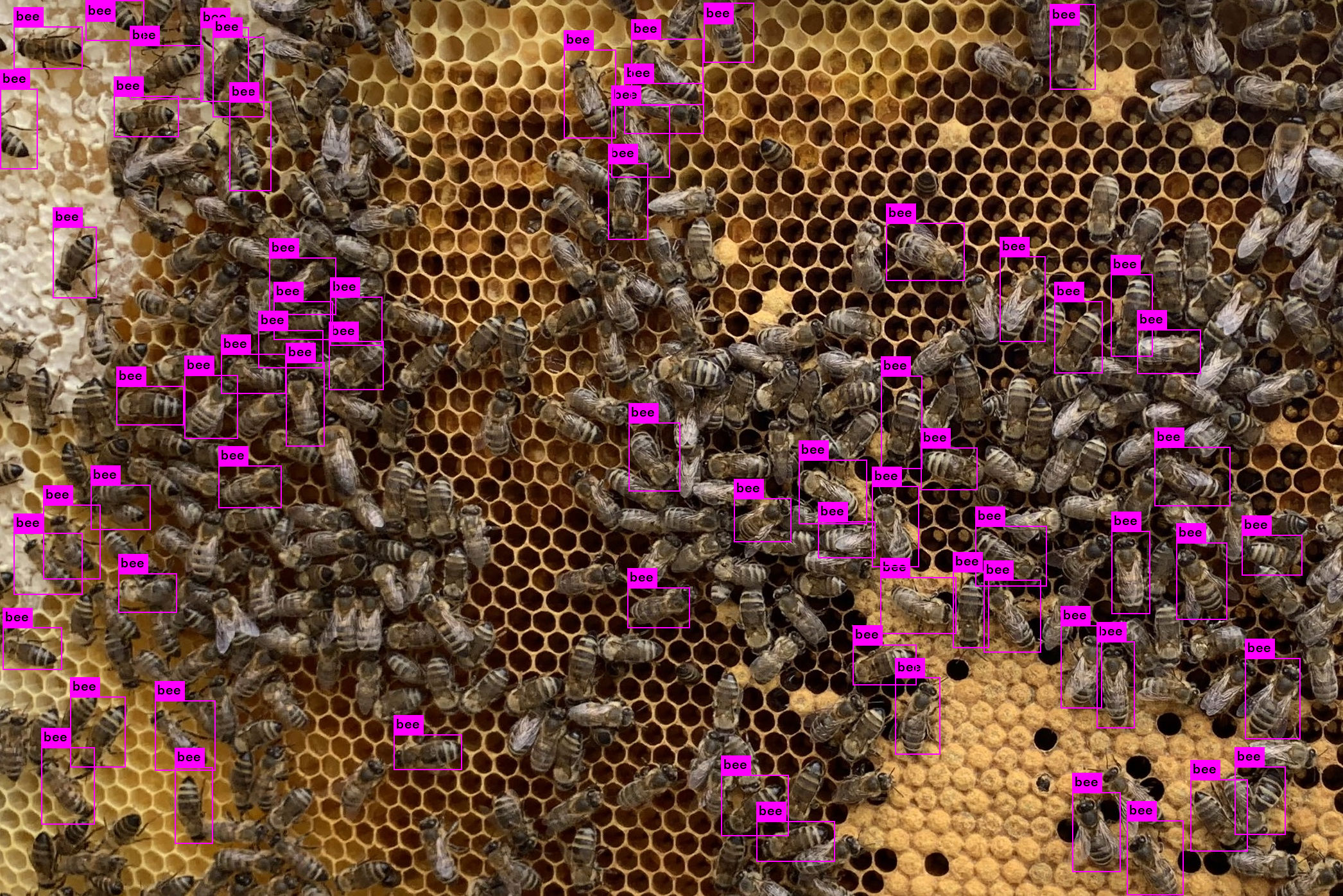

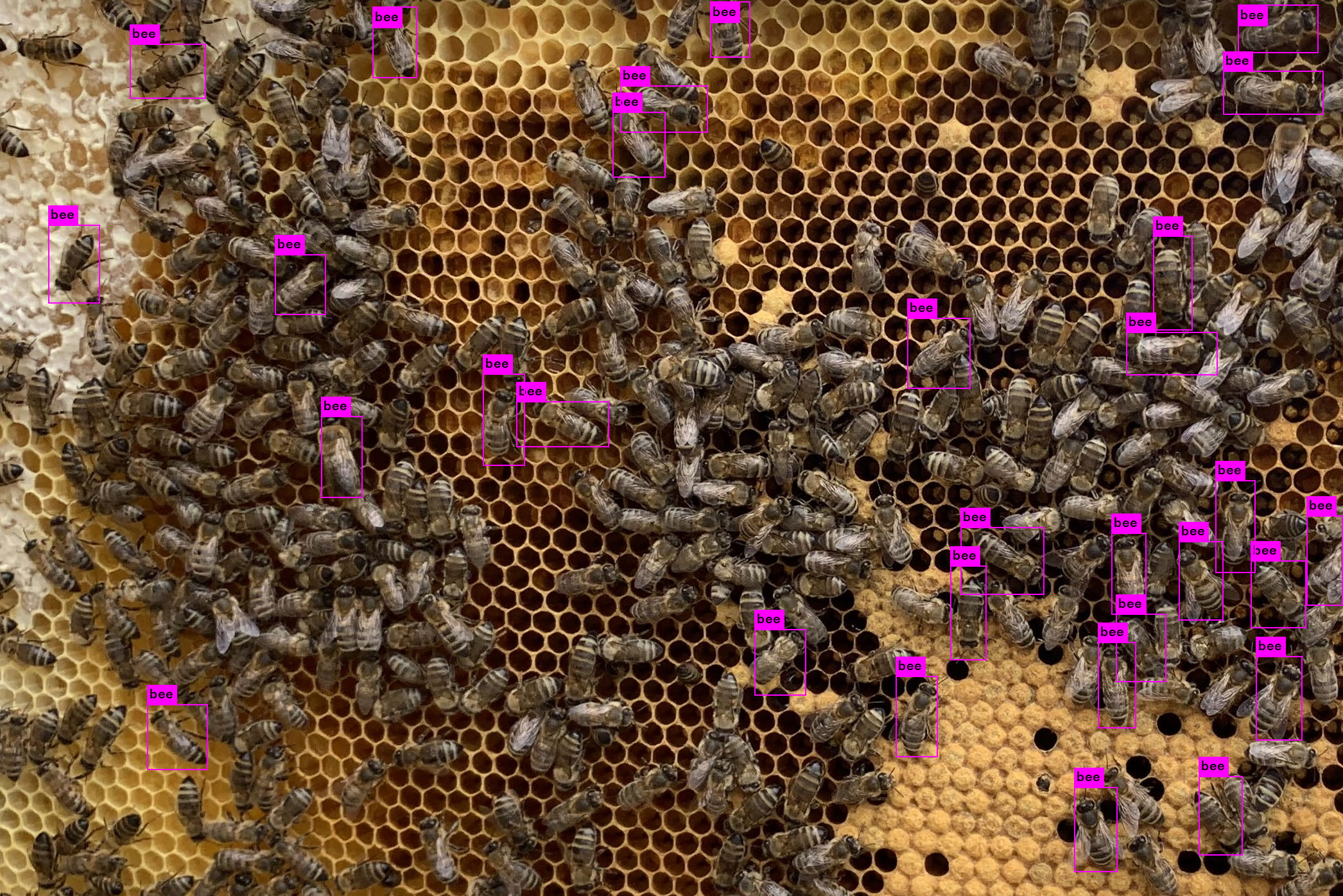

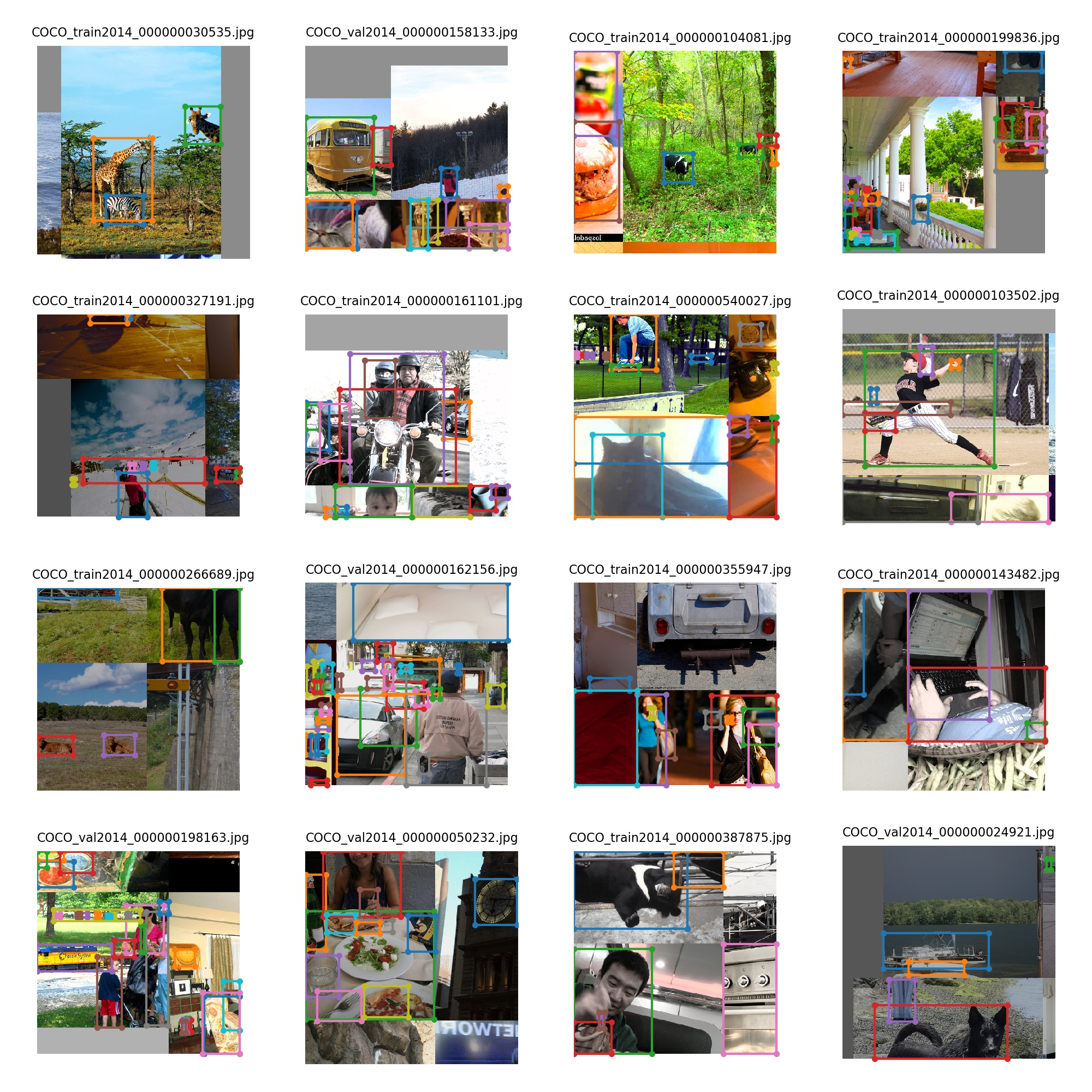

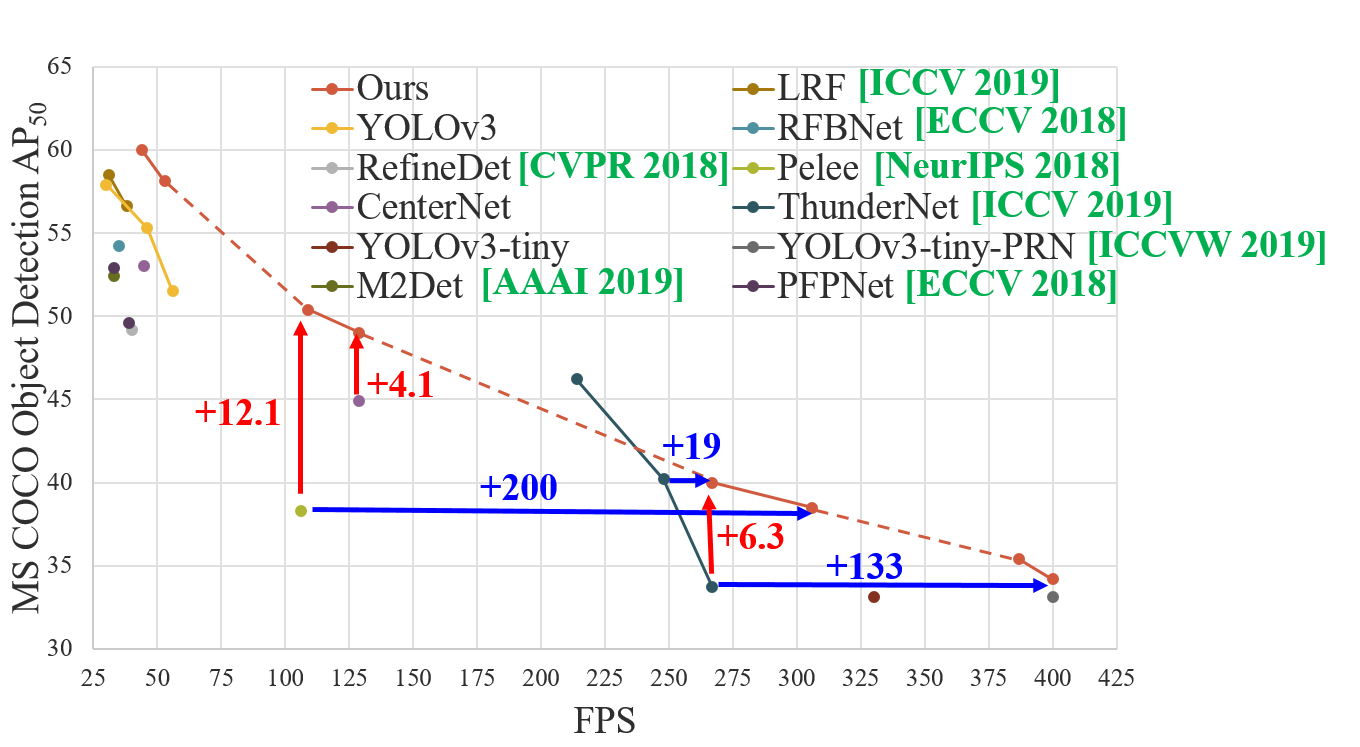

Comparison of different models on a very small custom dataset - 250 training and 250 validation images from video: https://drive.google.com/open?id=1QzXSCkl9wqr73GHFLIdJ2IIRMgP1OnXG

Validation video: https://drive.google.com/open?id=1rdxV1hYSQs6MNxBSIO9dNkAiBvb07aun

Ideas are based on:

LSTM object detection - model achieves state-of-the-art performance among mobile methods on the Imagenet VID 2015 dataset, while running at speeds of up to 70+ FPS on a Pixel 3 phone: https://arxiv.org/abs/1903.10172v1

PANet reaches the 1st place in the COCO 2017 Challenge Instance Segmentation task and the 2nd place in Object Detection task without large-batch training. It is also state-of-the-art on MVD and Cityscapes: https://arxiv.org/abs/1803.01534v4

There are implemented:

convolutional-LSTM models for Training and Detection on Video, without interleaving lightweight network - may be will be implemented later

PANet models -

_pan-networks - there is used[reorg3d]+[convolutional] size=1instead of Adaptive Feature Pooling (depth-maxpool) for the Path Aggregation - may be will be implemented later_pan2-networks - there is used maxpooling[maxpool] maxpool_depth=1 out_channels=64acrosss channels as in original PAN-paper, just previous layers are [convolutional] instead of [connected] for resizability

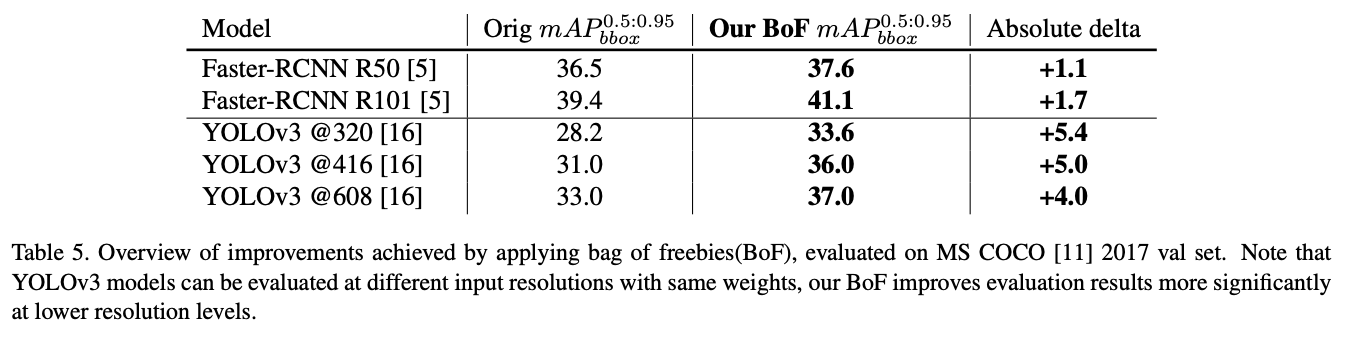

| Model (cfg & weights) network size = 544x544 | Training chart | Validation video | BFlops | Inference time RTX2070, ms | mAP, % |

|---|---|---|---|---|---|

| yolo_v3_spp_pan_lstm.cfg.txt (must be trained using frames from the video) | - | - | - | - | - |

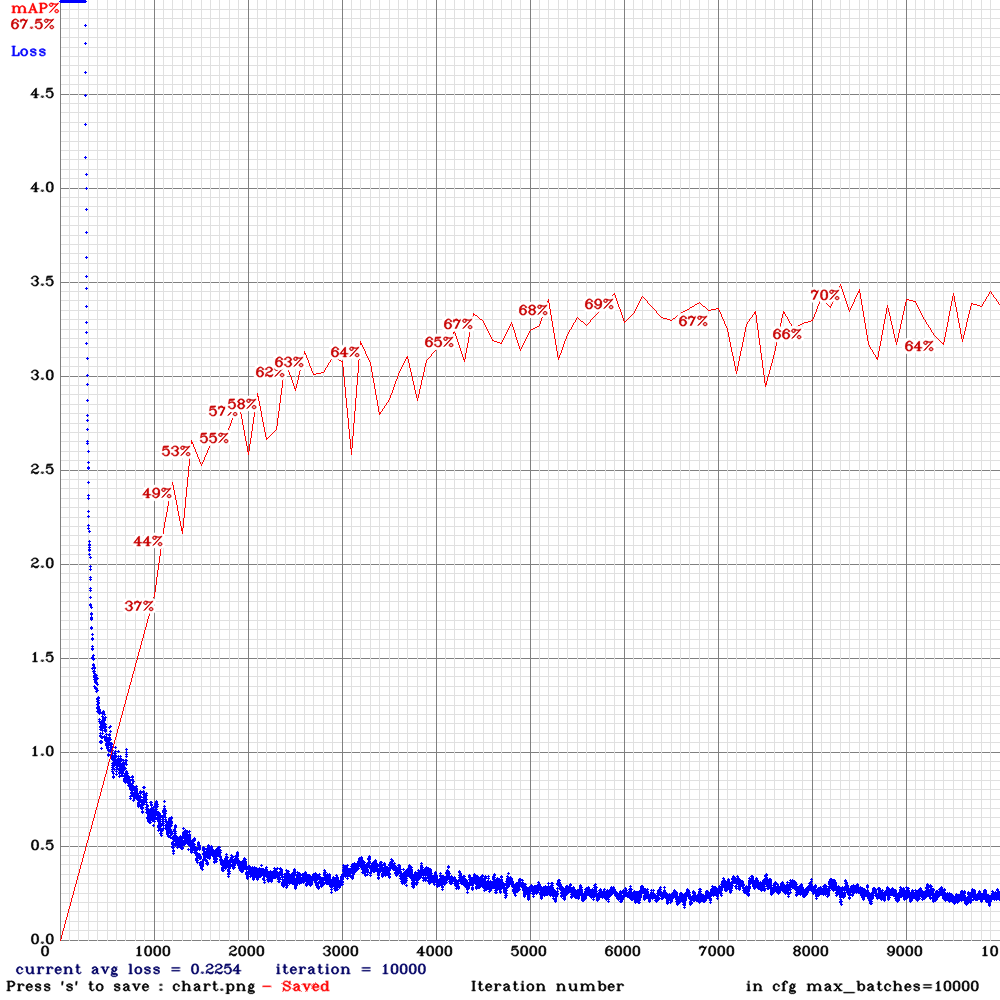

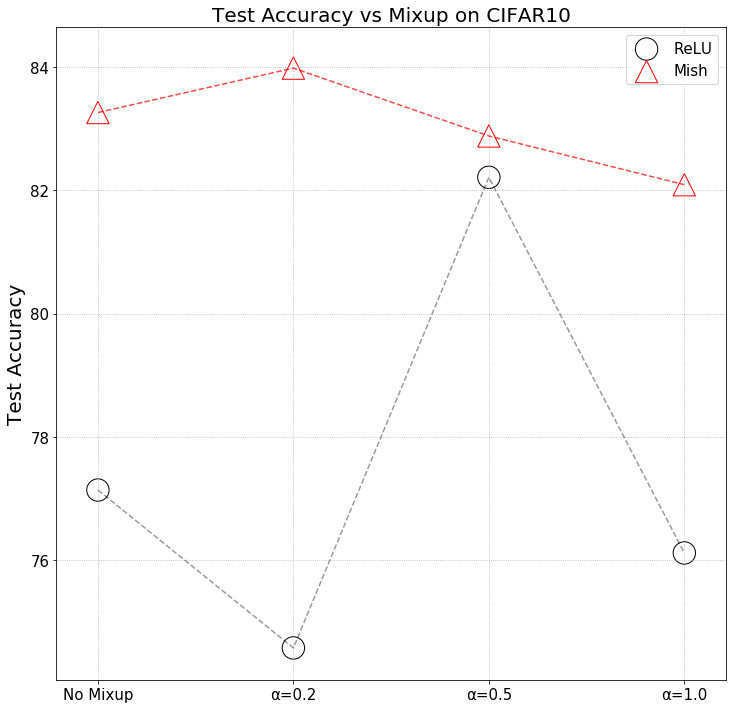

| yolo_v3_tiny_pan3.cfg.txt and weights-file Features: PAN3, AntiAliasing, Assisted Excitation, scale_x_y, Mixup, GIoU |  | video | 14 | 8.5 ms | 67.3% |

| video | 14 | 8.5 ms | 67.3% |

| yolo_v3_tiny_pan5 matrix_gaussian_GIoU aa_ae_mixup_new.cfg.txt and weights-file Features: MatrixNet, Gaussian-yolo + GIoU, PAN5, IoU_thresh, Deformation-block, Assisted Excitation, scale_x_y, Mixup, 512x512, use -thresh 0.6 |  | video | 30 | 31 ms | 64.6% |

| video | 30 | 31 ms | 64.6% |

| yolo_v3_tiny_pan3 aa_ae_mixup_scale_giou blur dropblock_mosaic.cfg.txt and weights-file |  | video | 14 | 8.5 ms | 63.51% |

| video | 14 | 8.5 ms | 63.51% |

| yolo_v3_spp_pan_scale.cfg.txt and weights-file |  | video | 137 | 33.8 ms | 60.4% |

| video | 137 | 33.8 ms | 60.4% |

| yolo_v3_spp_pan.cfg.txt and weights-file |  | video | 137 | 33.8 ms | 58.5% |

| video | 137 | 33.8 ms | 58.5% |

| yolo_v3_tiny_pan_lstm.cfg.txt and weights-file (must be trained using frames from the video) |  | video | 23 | 14.9 ms | 58.5% |

| video | 23 | 14.9 ms | 58.5% |

| tiny_v3_pan3_CenterNet_Gaus ae_mosaic_scale_iouthresh mosaic.txt and weights-file |  | video | 25 | 14.5 ms | 57.9% |

| video | 25 | 14.5 ms | 57.9% |

| yolo_v3_spp_lstm.cfg.txt and weights-file (must be trained using frames from the video) |  | video | 102 | 26.0 ms | 57.5% |

| video | 102 | 26.0 ms | 57.5% |

| yolo_v3_tiny_pan3 matrix_gaussian aa_ae_mixup.cfg.txt and weights-file |  | video | 13 | 19.0 ms | 57.2% |

| video | 13 | 19.0 ms | 57.2% |

| resnet152_trident.cfg.txt and weights-file train by using resnet152.201 pre-trained weights |  | video | 193 | 110ms | 56.6% |

| video | 193 | 110ms | 56.6% |

| yolo_v3_tiny_pan_mixup.cfg.txt and weights-file |  | video | 17 | 8.7 ms | 52.4% |

| video | 17 | 8.7 ms | 52.4% |

| yolo_v3_spp.cfg.txt and weights-file (common old model) |  | video | 112 | 23.5 ms | 51.8% |

| video | 112 | 23.5 ms | 51.8% |

| yolo_v3_tiny_lstm.cfg.txt and weights-file (must be trained using frames from the video) |  | video | 19 | 12.0 ms | 50.9% |

| video | 19 | 12.0 ms | 50.9% |

| yolo_v3_tiny_pan2.cfg.txt and weights-file |  | video | 14 | 7.0 ms | 50.6% |

| video | 14 | 7.0 ms | 50.6% |

| yolo_v3_tiny_pan.cfg.txt and weights-file |  | video | 17 | 8.7 ms | 49.7% |

| video | 17 | 8.7 ms | 49.7% |

| yolov3-tiny_3l.cfg.txt (common old model) and weights-file |  | video | 12 | 5.6 ms | 46.8% |

| video | 12 | 5.6 ms | 46.8% |

| yolo_v3_tiny_comparison.cfg.txt and weights-file (approximately the same conv-layers as conv+conv_lstm layers in yolo_v3_tiny_lstm.cfg) |  | video | 20 | 10.0 ms | 36.1% |

| video | 20 | 10.0 ms | 36.1% |

| yolo_v3_tiny.cfg.txt (common old model) and weights-file |  | video | 9 | 5.0 ms | 32.3% |

| video | 9 | 5.0 ms | 32.3% |

| | | | | - | - |

| | | | | - | - |

Great work! Thank you very much for sharing this result.

LSTM indeed improves results. I wonder have you evaluated the inference time with LSTM as well?

Thanks

How to train LSTM networks:

Use one of cfg-file with

LSTMin filenameUse pre-trained file

for Tiny: use

yolov3-tiny.conv.14that you can get from https://pjreddie.com/media/files/yolov3-tiny.weights by using command./darknet partial cfg/yolov3-tiny.cfg yolov3-tiny.weights yolov3-tiny.conv.14 14for Full: use http://pjreddie.com/media/files/darknet53.conv.74

You should train it on sequential frames from one or several videos:

./yolo_mark data/self_driving cap_video self_driving.mp4 1- it will grab each 1 frame from video (you can vary from 1 to 5)./yolo_mark data/self_driving data/self_driving.txt data/self_driving.names- to mark bboxes, even if at some point the object is invisible (occlused/obscured by another type of object)./darknet detector train data/self_driving.data yolo_v3_tiny_pan_lstm.cfg yolov3-tiny.conv.14 -map- to train the detector./darknet detector demo data/self_driving.data yolo_v3_tiny_pan_lstm.cfg backup/yolo_v3_tiny_pan_lstm_last.weights forward.avi- run detection

If you encounter CUDA Out of memeory error, then reduce the value time_steps= twice in your cfg-file.

The only conditions - the frames from the video must go sequentially in the train.txt file.

You should validate results on a separate Validation dataset, for example, divide your dataset into 2:

train.txt- first 80% of frames (80% from video1 + 80% from video 2, if you use frames from 2 videos)valid.txt- last 20% of frames (20% from video1 + 20% from video 2, if you use frames from 2 videos)

Or you can use, for example:

train.txt- frames from some 8 videosvalid.txt- frames from some 2 videos

LSTM:

@i-chaochen I added the inference time to the table. When I improve the inference time for LSTM-networks, I will change them.

@i-chaochen I added the inference time to the table. When I improve the inference time for LSTM-networks, I will change them.

Thanks for updates!

What do you mean the inference time for seconds? is for the whole video? How about the inference time for each frame or FPS?

@i-chaochen This is a millisecond, I fixed )

Interesting, it seems yolo_v3_spp_lstm has less BFLOPs(102) than yolo_v3_spp.cfg.txt (112), but it still slower...

@i-chaochen

I removed some overheads (for calling a lot of functions and reading / writing to GPU-RAM) - I replaced these several functions for: f, i, g, o, c https://github.com/AlexeyAB/darknet/blob/b9ea49af250a3eab3b8775efa53db0f0ff063357/src/conv_lstm_layer.c#L866-L869

to the one fast function add_3_arrays_activate(float *a1, float *a2, float *a3, size_t size, ACTIVATION a, float *dst);

Hi @AlexeyAB

I am trying to use yolo_v3_tiny_lstm.cfg to improve small object detection for videos .However I am getting the following error

14 Type not recognized: [conv_lstm]

Unused field: 'batch_normalize = 1'

Unused field: 'size = 3'

Unused field: 'pad = 1'

Unused field: 'output = 128'

Unused field: 'peephole = 0'

Unused field: 'activation = leaky'

15 Type not recognized: [conv_lstm]

Unused field: 'batch_normalize = 1'

Unused field: 'size = 3'

Unused field: 'pad = 1'

Unused field: 'output = 128'

Unused field: 'peephole = 0'

Unused field: 'activation = leaky'

Could you please advice me on this

Many thanks

@NickiBD For these models you must use the latest version of this repository: https://github.com/AlexeyAB/darknet

@AlexeyAB

Thanks alot for the help .I will update my repository .

@AlexeyAB hi, how did you run yolov3-tiny on the Pixel smart phone, could you give some tips? thanks very much.

Hi @AlexeyAB,

I have trained yolo_v3_tiny_lstm.cfg and I want to convert it to .h5 and then to .tflite for the smart phone . However ,I am getting Unsupported section header type: conv_lstm_0 and unsupported operation while converting . I really need to solve this issue .Could you please advice me on this.

Many thanks .

@NickiBD Hi,

Which repository and which script do you use for this conversion?

Hi @AlexeyAB,

I am using the converter in Adamdad/keras-YOLOv3-mobilenet to convert to .h5 and it was converting for other models e.g. yolo-v3-tiny 3layers ,modified yolov3 ,... .Could you please tell me which converter to use .

Many thanks .

@NickiBD

It is a new layer [conv_lstm], so there is no any converter yet that supports it.

You should request from the converter author for adding convLSTM-layer (with disabled peephole-connection)

Or for adding convLSTM-layer (with peephole-connection) - but you should train with peephole=1 in each [lstm]-layer in yolo_v3_tiny_lstm.cfg

It will use in Keras, or

So ask it from:

- Keras: https://github.com/Adamdad/keras-YOLOv3-mobilenet

- Pytorch: https://github.com/ultralytics/yolov3#darknet-conversion

- TensorFlow: https://github.com/mystic123/tensorflow-yolo-v3 or https://github.com/jinyu121/DW2TF

As I see conv-LSTM is implemented in:

- Keras -

keras.layers.ConvLSTM2D(without peephole - it's good): https://keras.io/layers/recurrent/ - TensorFlow -

tf.contrib.rnn.ConvLSTMCell: https://www.tensorflow.org/api_docs/python/tf/contrib/rnn/ConvLSTMCell - Pytorch - isn't implemented yet: https://github.com/pytorch/pytorch/issues/1706

Conv-LSTM layer is based on this paper - Page 4: http://arxiv.org/abs/1506.04214v1

And can be used with peephole=1 or without peephole=0 Peehole-connection (red-boxes):

In peephole I use * - Convolution instead of o - Element-wise-product (Hadamard product),

so convLSTM is still resizable - can be used with any network input resolution:

@AlexeyAB

Thank you so much for all the info and the guidance .I truly appreciate it .

So could Yolov3_spp_pan.cfg be used with standard pretrained weights eg. coco ?

@LukeAI You must train yolov3_spp_pan.cfg from the begining by using one of pre-trained weights:

or

darknet53.conv.74that you can get from: http://pjreddie.com/media/files/darknet53.conv.74 (trained on ImageNet)or

yolov3-spp.conv.85that you can get from https://pjreddie.com/media/files/yolov3-spp.weights by using command./darknet partial cfg/yolov3-spp.cfg yolov3-spp.weights yolov3-spp.conv.85 85(trained on MS COCO)

@AlexeyAB

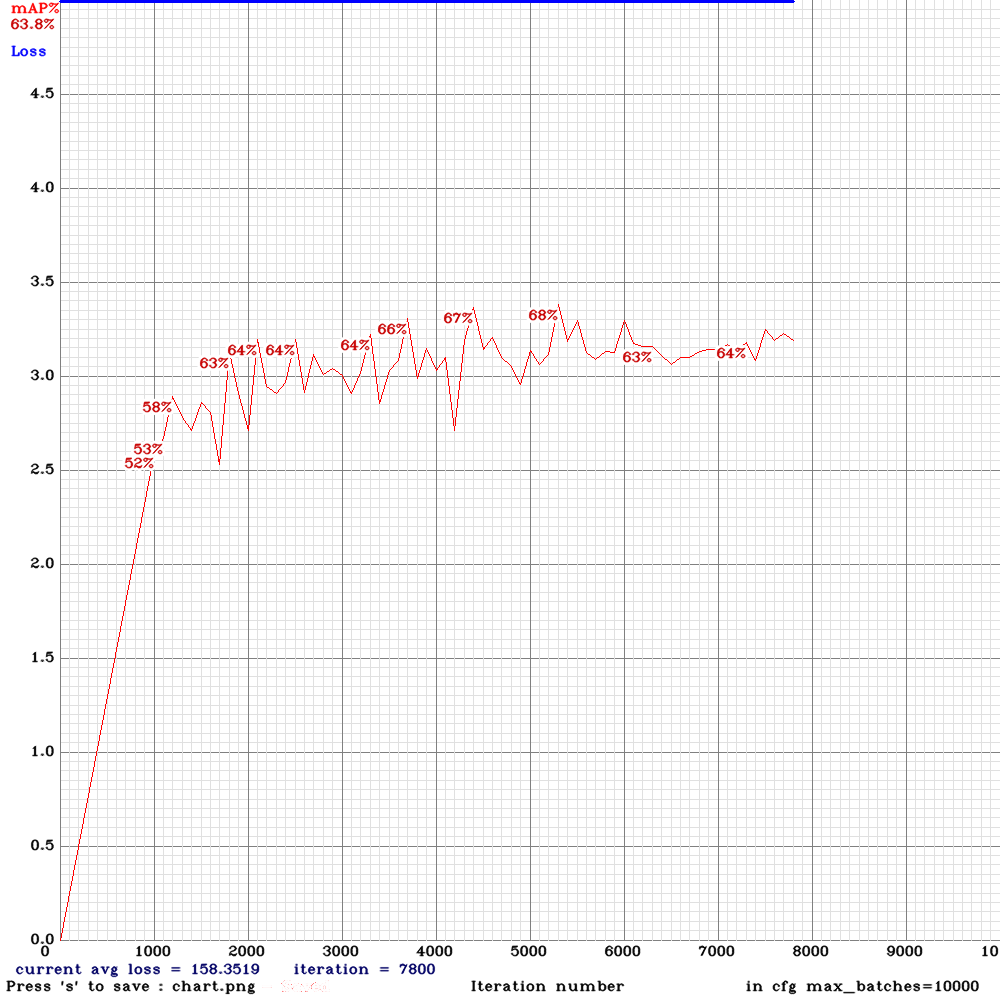

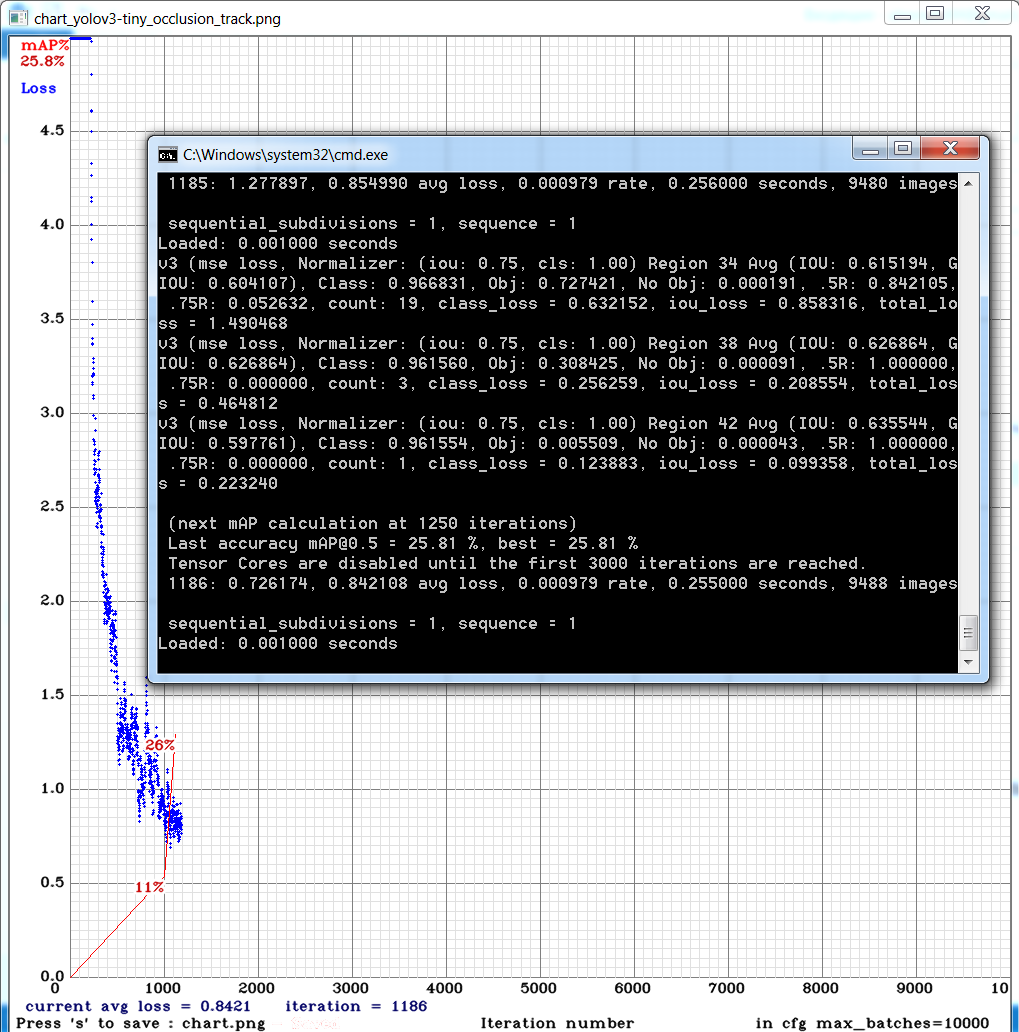

Sorry to disturb you again . I am now training yolo_v3_tiny_lstm.cfg with my custom dataset for 10000 iterations .I used the weights for 4000 iterations (mAP ~65%) for detection and the detection results were good .However, after 5000 iterations , the mAP dropped to zero and now I am on 6500 iteration it is almost mAP~2% .The frames from the video are sequentially ordered in the train.txt file and random=0. Could you please advice me on this that what might be the problem?

Thanks .

@NickiBD

Can you show me

chart.pngwith Loss & mAP charts?And can you show output of

./darknet detector mapcommand?

Hi @AlexeyAB

These is the output of ./darknet detector map:

layer filters size input output

0 conv 16 3 x 3 / 1 416 x 416 x 3 -> 416 x 416 x 16 0.150 BF

1 max 2 x 2 / 2 416 x 416 x 16 -> 208 x 208 x 16 0.003 BF

2 conv 32 3 x 3 / 1 208 x 208 x 16 -> 208 x 208 x 32 0.399 BF

3 max 2 x 2 / 2 208 x 208 x 32 -> 104 x 104 x 32 0.001 BF

4 conv 64 3 x 3 / 1 104 x 104 x 32 -> 104 x 104 x 64 0.399 BF

5 max 2 x 2 / 2 104 x 104 x 64 -> 52 x 52 x 64 0.001 BF

6 conv 128 3 x 3 / 1 52 x 52 x 64 -> 52 x 52 x 128 0.399 BF

7 max 2 x 2 / 2 52 x 52 x 128 -> 26 x 26 x 128 0.000 BF

8 conv 256 3 x 3 / 1 26 x 26 x 128 -> 26 x 26 x 256 0.399 BF

9 max 2 x 2 / 2 26 x 26 x 256 -> 13 x 13 x 256 0.000 BF

10 conv 512 3 x 3 / 1 13 x 13 x 256 -> 13 x 13 x 512 0.399 BF

11 max 2 x 2 / 1 13 x 13 x 512 -> 13 x 13 x 512 0.000 BF

12 conv 1024 3 x 3 / 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BF

13 conv 256 1 x 1 / 1 13 x 13 x1024 -> 13 x 13 x 256 0.089 BF

14 CONV_LSTM Layer: 13 x 13 x 256 image, 128 filters

conv 128 3 x 3 / 1 13 x 13 x 256 -> 13 x 13 x 128 0.100 BF

conv 128 3 x 3 / 1 13 x 13 x 256 -> 13 x 13 x 128 0.100 BF

conv 128 3 x 3 / 1 13 x 13 x 256 -> 13 x 13 x 128 0.100 BF

conv 128 3 x 3 / 1 13 x 13 x 256 -> 13 x 13 x 128 0.100 BF

conv 128 3 x 3 / 1 13 x 13 x 128 -> 13 x 13 x 128 0.050 BF

conv 128 3 x 3 / 1 13 x 13 x 128 -> 13 x 13 x 128 0.050 BF

conv 128 3 x 3 / 1 13 x 13 x 128 -> 13 x 13 x 128 0.050 BF

conv 128 3 x 3 / 1 13 x 13 x 128 -> 13 x 13 x 128 0.050 BF

15 CONV_LSTM Layer: 13 x 13 x 128 image, 128 filters

conv 128 3 x 3 / 1 13 x 13 x 128 -> 13 x 13 x 128 0.050 BF

conv 128 3 x 3 / 1 13 x 13 x 128 -> 13 x 13 x 128 0.050 BF

conv 128 3 x 3 / 1 13 x 13 x 128 -> 13 x 13 x 128 0.050 BF

conv 128 3 x 3 / 1 13 x 13 x 128 -> 13 x 13 x 128 0.050 BF

conv 128 3 x 3 / 1 13 x 13 x 128 -> 13 x 13 x 128 0.050 BF

conv 128 3 x 3 / 1 13 x 13 x 128 -> 13 x 13 x 128 0.050 BF

conv 128 3 x 3 / 1 13 x 13 x 128 -> 13 x 13 x 128 0.050 BF

conv 128 3 x 3 / 1 13 x 13 x 128 -> 13 x 13 x 128 0.050 BF

16 conv 256 1 x 1 / 1 13 x 13 x 128 -> 13 x 13 x 256 0.011 BF

17 conv 512 3 x 3 / 1 13 x 13 x 256 -> 13 x 13 x 512 0.399 BF

18 conv 128 1 x 1 / 1 13 x 13 x 512 -> 13 x 13 x 128 0.022 BF

19 upsample 2x 13 x 13 x 128 -> 26 x 26 x 128

20 route 19 8

21 conv 128 1 x 1 / 1 26 x 26 x 384 -> 26 x 26 x 128 0.066 BF

22 CONV_LSTM Layer: 26 x 26 x 128 image, 128 filters

conv 128 3 x 3 / 1 26 x 26 x 128 -> 26 x 26 x 128 0.199 BF

conv 128 3 x 3 / 1 26 x 26 x 128 -> 26 x 26 x 128 0.199 BF

conv 128 3 x 3 / 1 26 x 26 x 128 -> 26 x 26 x 128 0.199 BF

conv 128 3 x 3 / 1 26 x 26 x 128 -> 26 x 26 x 128 0.199 BF

conv 128 3 x 3 / 1 26 x 26 x 128 -> 26 x 26 x 128 0.199 BF

conv 128 3 x 3 / 1 26 x 26 x 128 -> 26 x 26 x 128 0.199 BF

conv 128 3 x 3 / 1 26 x 26 x 128 -> 26 x 26 x 128 0.199 BF

conv 128 3 x 3 / 1 26 x 26 x 128 -> 26 x 26 x 128 0.199 BF

23 conv 128 1 x 1 / 1 26 x 26 x 128 -> 26 x 26 x 128 0.022 BF

24 conv 256 3 x 3 / 1 26 x 26 x 128 -> 26 x 26 x 256 0.399 BF

25 conv 128 1 x 1 / 1 26 x 26 x 256 -> 26 x 26 x 128 0.044 BF

26 upsample 2x 26 x 26 x 128 -> 52 x 52 x 128

27 route 26 6

28 conv 64 1 x 1 / 1 52 x 52 x 256 -> 52 x 52 x 64 0.089 BF

29 CONV_LSTM Layer: 52 x 52 x 64 image, 64 filters

conv 64 3 x 3 / 1 52 x 52 x 64 -> 52 x 52 x 64 0.199 BF

conv 64 3 x 3 / 1 52 x 52 x 64 -> 52 x 52 x 64 0.199 BF

conv 64 3 x 3 / 1 52 x 52 x 64 -> 52 x 52 x 64 0.199 BF

conv 64 3 x 3 / 1 52 x 52 x 64 -> 52 x 52 x 64 0.199 BF

conv 64 3 x 3 / 1 52 x 52 x 64 -> 52 x 52 x 64 0.199 BF

conv 64 3 x 3 / 1 52 x 52 x 64 -> 52 x 52 x 64 0.199 BF

conv 64 3 x 3 / 1 52 x 52 x 64 -> 52 x 52 x 64 0.199 BF

conv 64 3 x 3 / 1 52 x 52 x 64 -> 52 x 52 x 64 0.199 BF

30 conv 64 1 x 1 / 1 52 x 52 x 64 -> 52 x 52 x 64 0.022 BF

31 conv 64 3 x 3 / 1 52 x 52 x 64 -> 52 x 52 x 64 0.199 BF

32 conv 128 3 x 3 / 1 52 x 52 x 64 -> 52 x 52 x 128 0.399 BF

33 conv 18 1 x 1 / 1 52 x 52 x 128 -> 52 x 52 x 18 0.012 BF

34 yolo

35 route 24

36 conv 256 3 x 3 / 1 26 x 26 x 256 -> 26 x 26 x 256 0.797 BF

37 conv 18 1 x 1 / 1 26 x 26 x 256 -> 26 x 26 x 18 0.006 BF

38 yolo

39 route 17

40 conv 512 3 x 3 / 1 13 x 13 x 512 -> 13 x 13 x 512 0.797 BF

41 conv 18 1 x 1 / 1 13 x 13 x 512 -> 13 x 13 x 18 0.003 BF

42 yolo

Total BFLOPS 11.311

Allocate additional workspace_size = 33.55 MB

Loading weights from LSTM/yolo_v3_tiny_lstm_7000.weights...

seen 64

Done!

calculation mAP (mean average precision)...

2376

detections_count = 886, unique_truth_count = 1409

class_id = 0, name = Person, ap = 0.81% (TP = 0, FP = 0)

for thresh = 0.25, precision = -nan, recall = 0.00, F1-score = -nan

for thresh = 0.25, TP = 0, FP = 0, FN = 1409, average IoU = 0.00 %

IoU threshold = 50 %, used Area-Under-Curve for each unique Recall

mean average precision ([email protected]) = 0.008104, or 0.81 %

Total Detection Time: 155.000000 Seconds

Set -points flag:

-points 101 for MS COCO

-points 11 for PascalVOC 2007 (uncomment difficult in voc.data)

-points 0 (AUC) for ImageNet, PascalVOC 2010-2012, your custom dataset

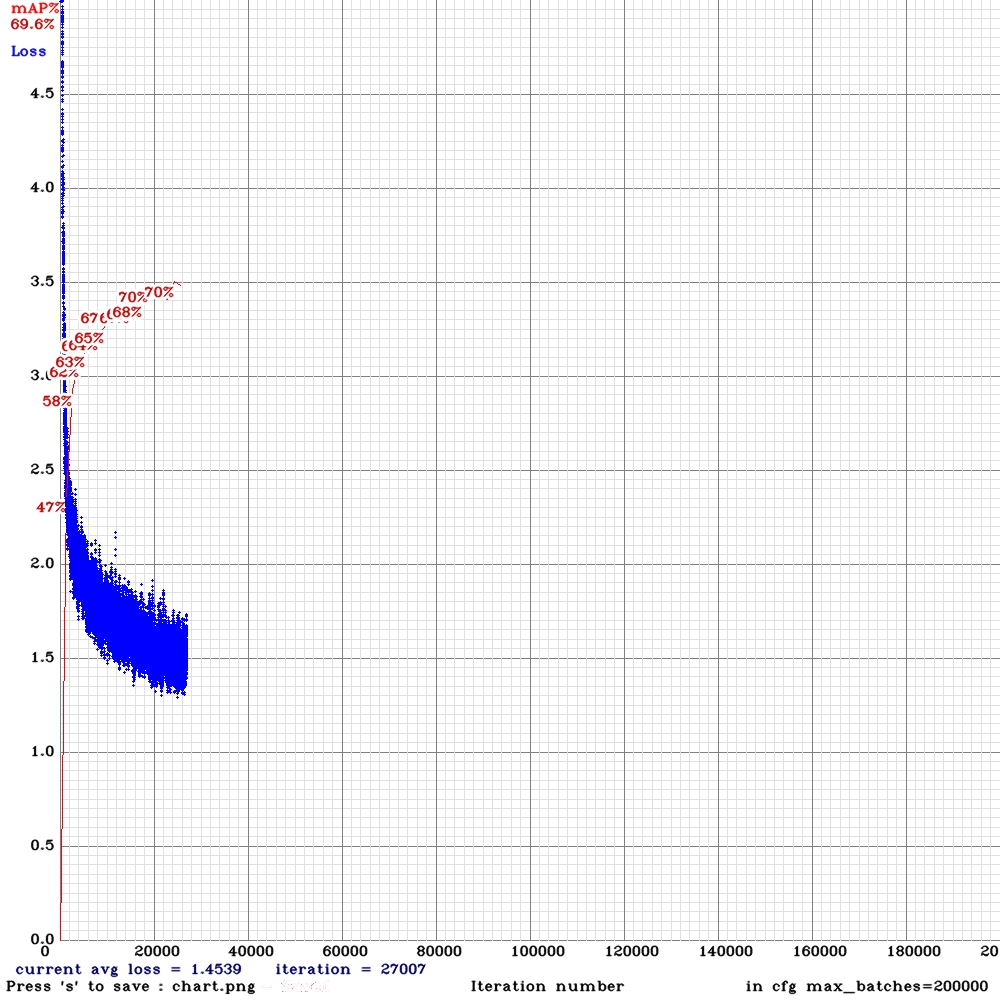

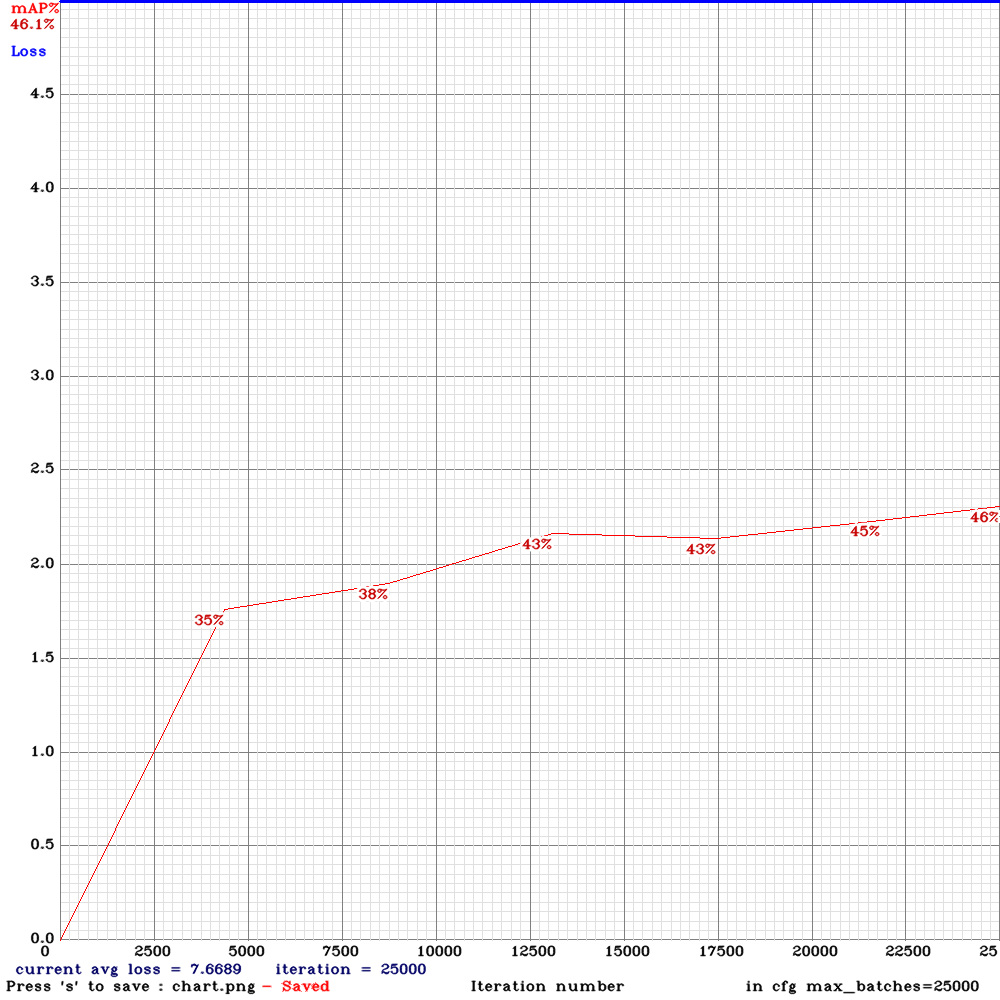

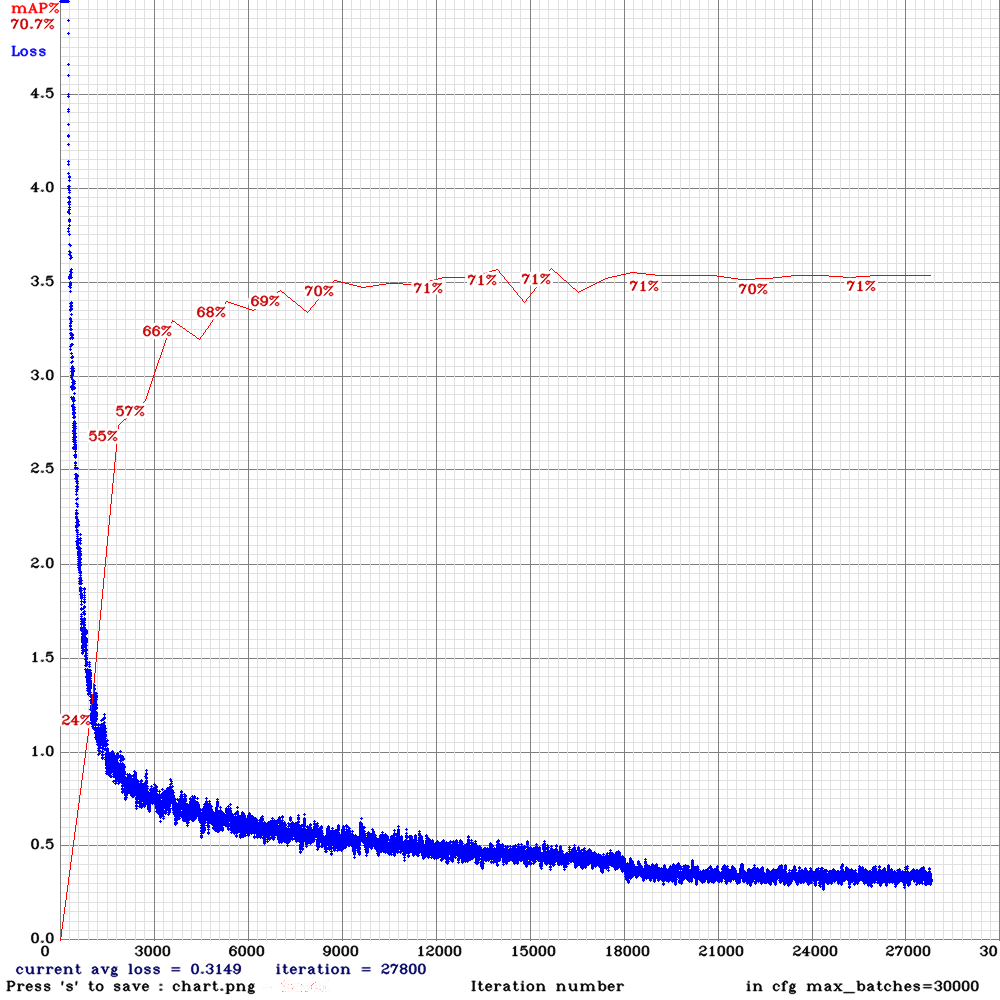

Chart :

Many thanks

@NickiBD

The frames from the video are sequentially ordered in the train.txt file and random=0.

How many images do you have in train.txt?

How many different videos (parts of videos) did you use for Training dataset?

It seems something is still unstable in training LSTM, may be due to SGDR, so try to change these lines:

policy=sgdr

sgdr_cycle=1000

sgdr_mult=2

steps=4000,6000,8000,9000

#scales=1, 1, 0.1, 0.1

seq_scales=0.5, 1, 0.5, 1

to these lines

policy=steps

steps=4000,6000,8000,9000

scales=1, 1, 0.1, 0.1

seq_scales=0.5, 1, 0.5, 1

And train again.

@AlexeyAB

Thank you so much for the advice .I will make the changes and will train again .

Regarding your questions :I have ~7500 images(including some augmentation of the images ) extracted from ~100 videos for training .

@NickiBD

I have ~7500 images(including some augmentation of the images ) extracted from ~100 videos for training .

So there are something like ~100 sequences of ~75 frames for each.

Yes, you can use it. But better to use ~200 sequential frames.

All frames in one sequence must use the same augmentation (the same cropping, scaling, color, ...). So you can make good video from these ~75 frames.

@AlexeyAB

Many thanks for all the advice.

@AlexeyAB really looking forward to trying this out - very impressive results indeed and surely worth writing a paper on? Are you planning to do so?

@NickiBD let us know how those .cfg changes work out :)

@NickiBD If it doesn't help, then also try to add parameter state_constrain=75 for each [conv_lstm] layer in cfg-file. This correlates with the maximum number of frames to remember.

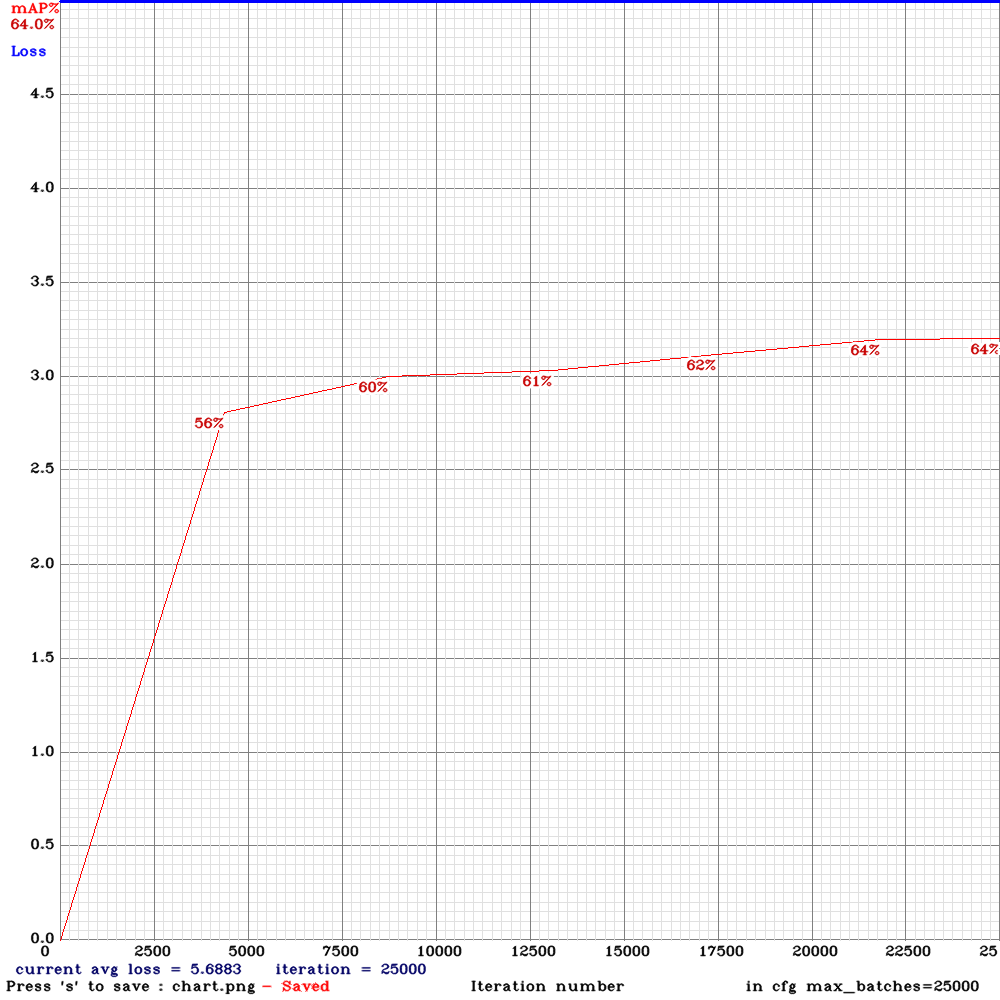

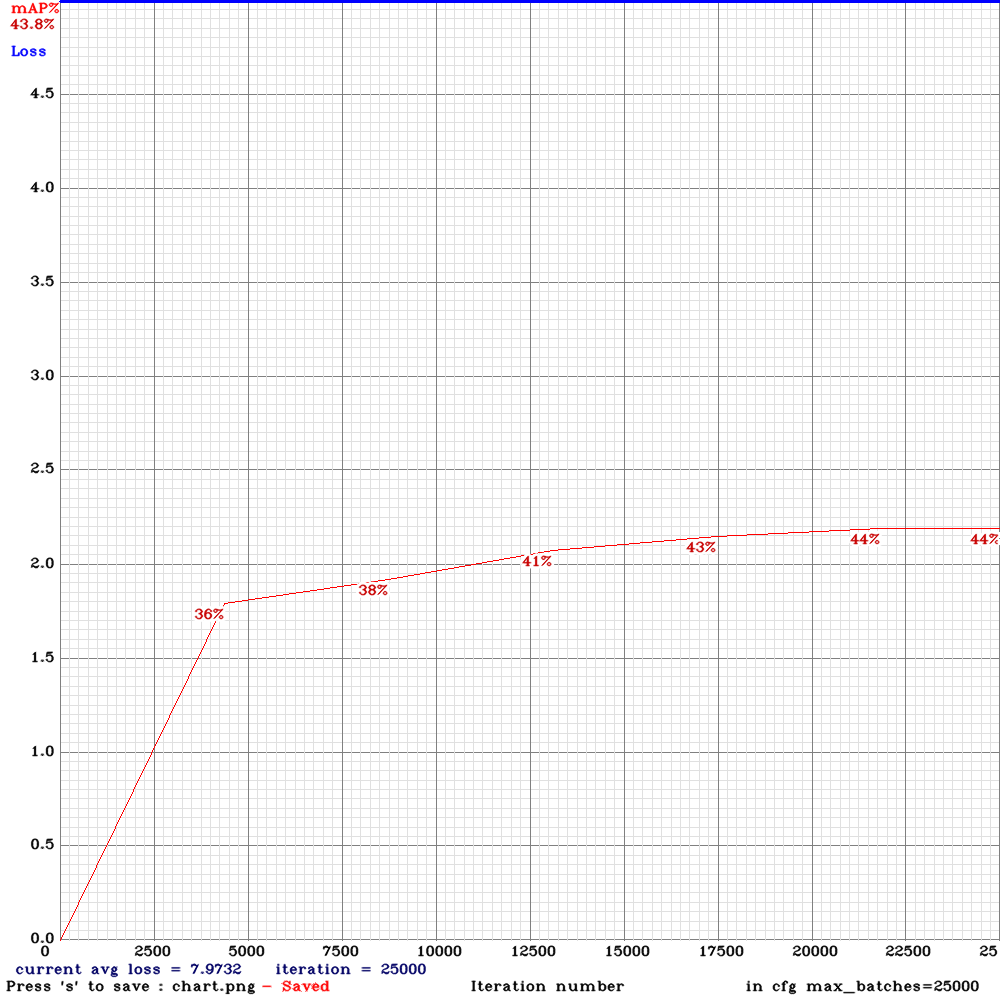

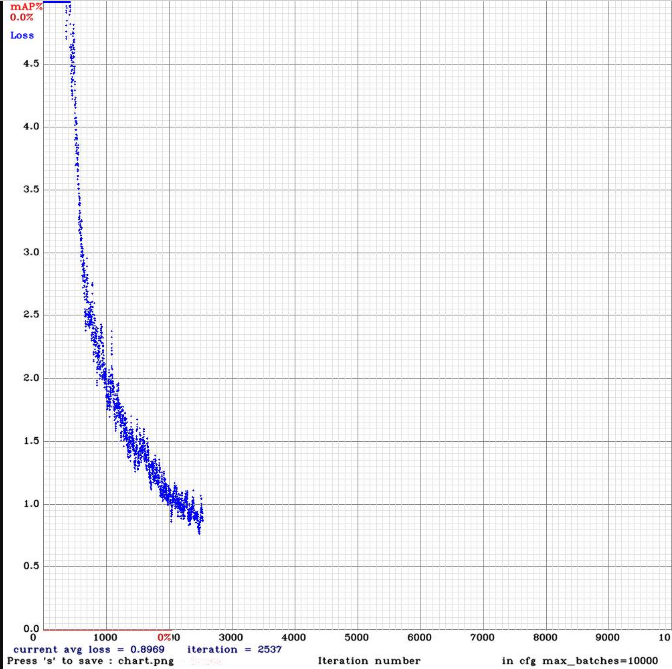

Also do you get better result with lstm-model yolo_v3_tiny_lstm.cfg than with https://github.com/AlexeyAB/darknet/blob/master/cfg/yolov3-tiny_3l.cfg and can you show chart.png for yolov3-tiny_3l.cfg(not lstm)?

@LukeAI May be yes, after several improvements.

Have you implemented yolo_v3_spp_pan_lstm.cfg ?

@AlexeyAB

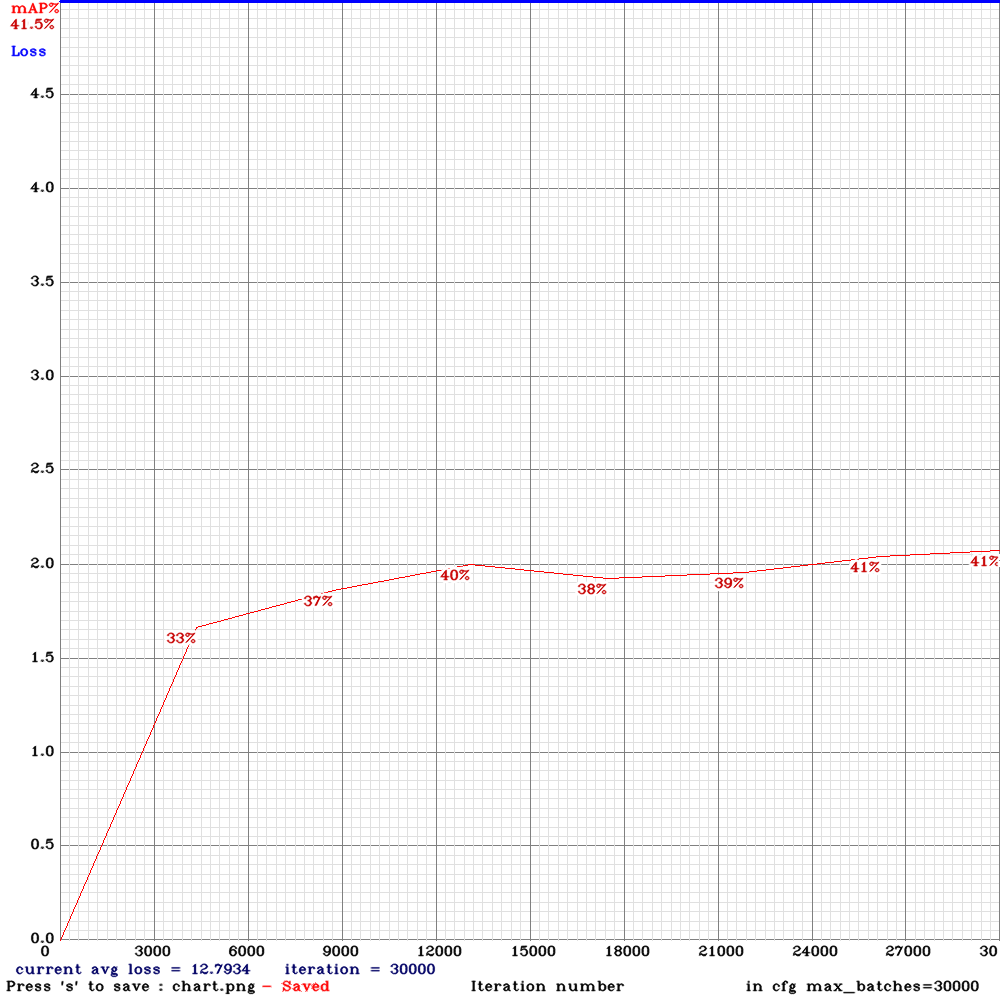

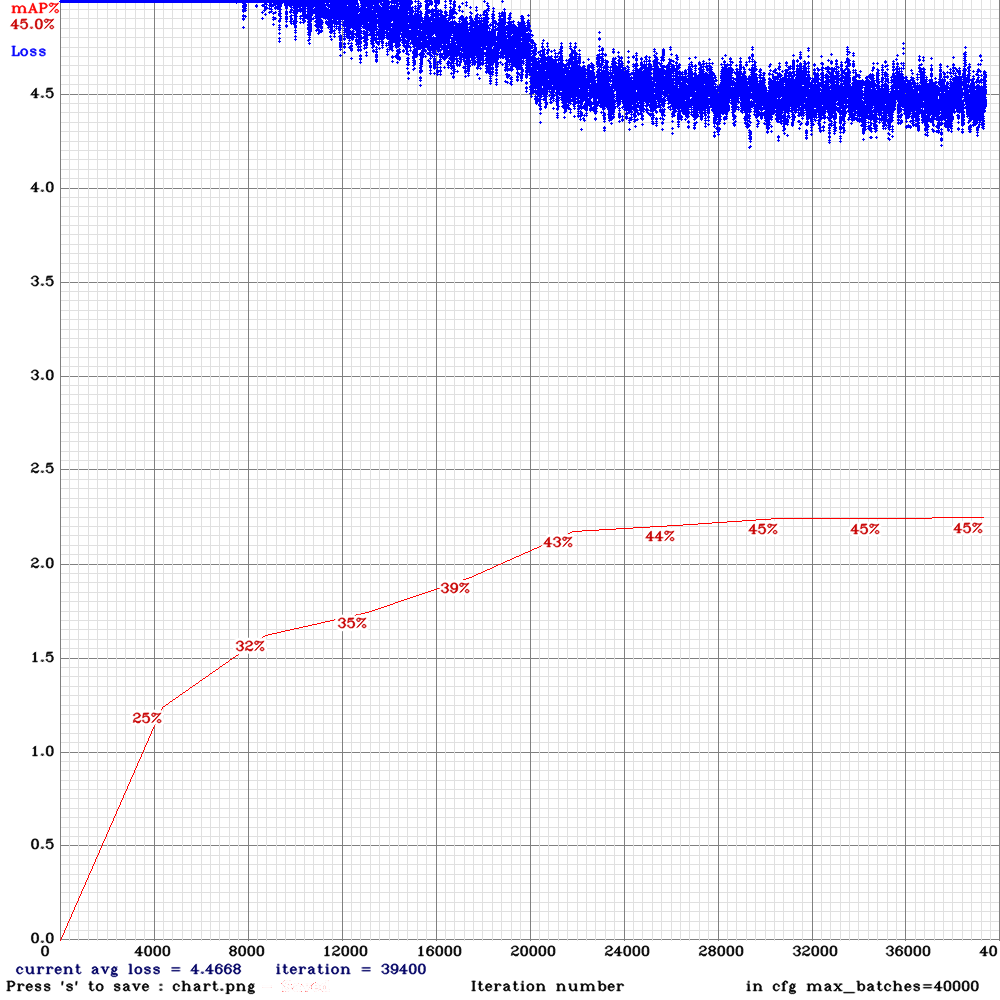

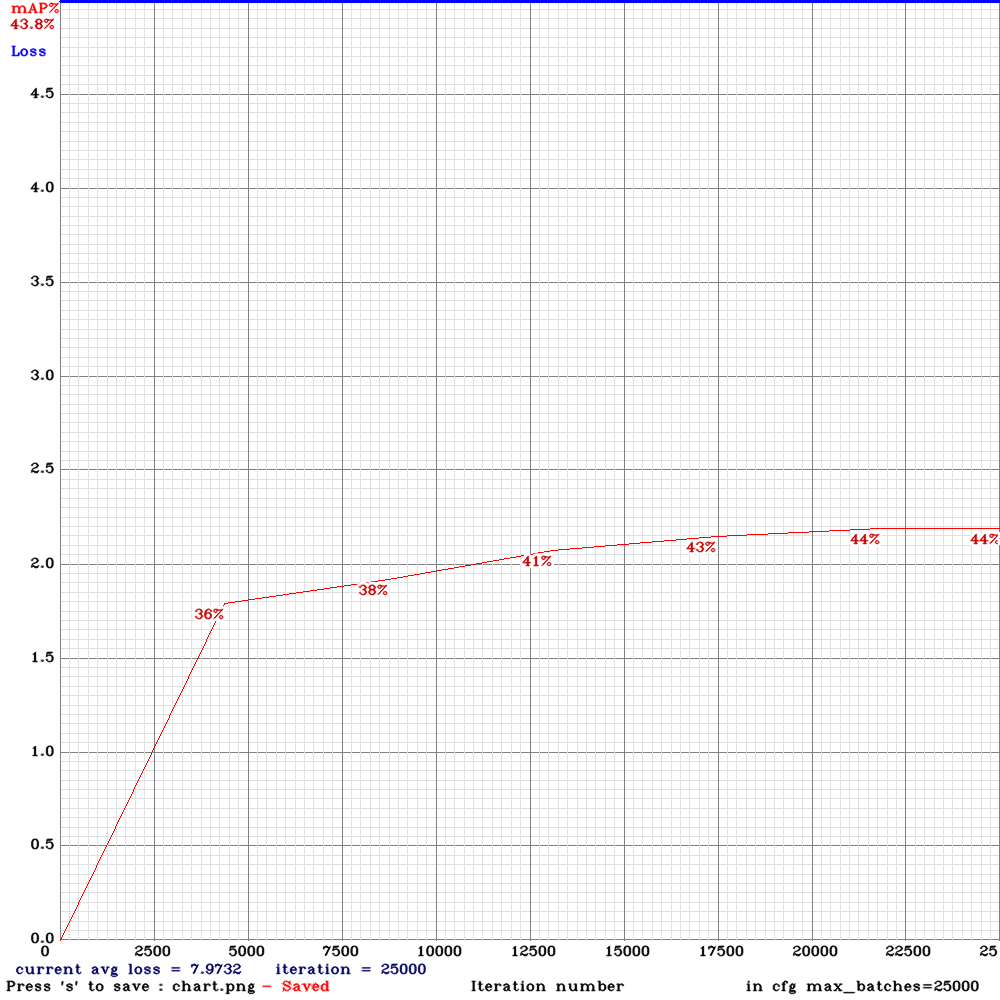

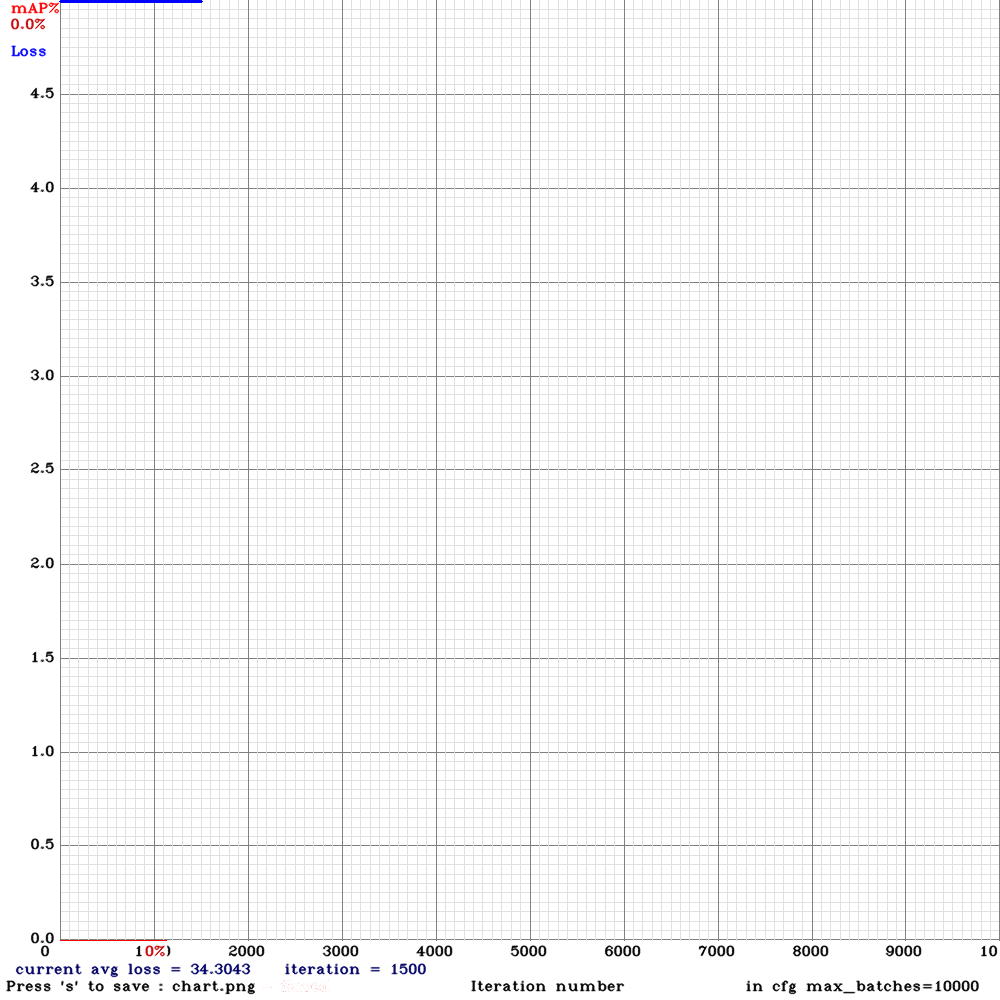

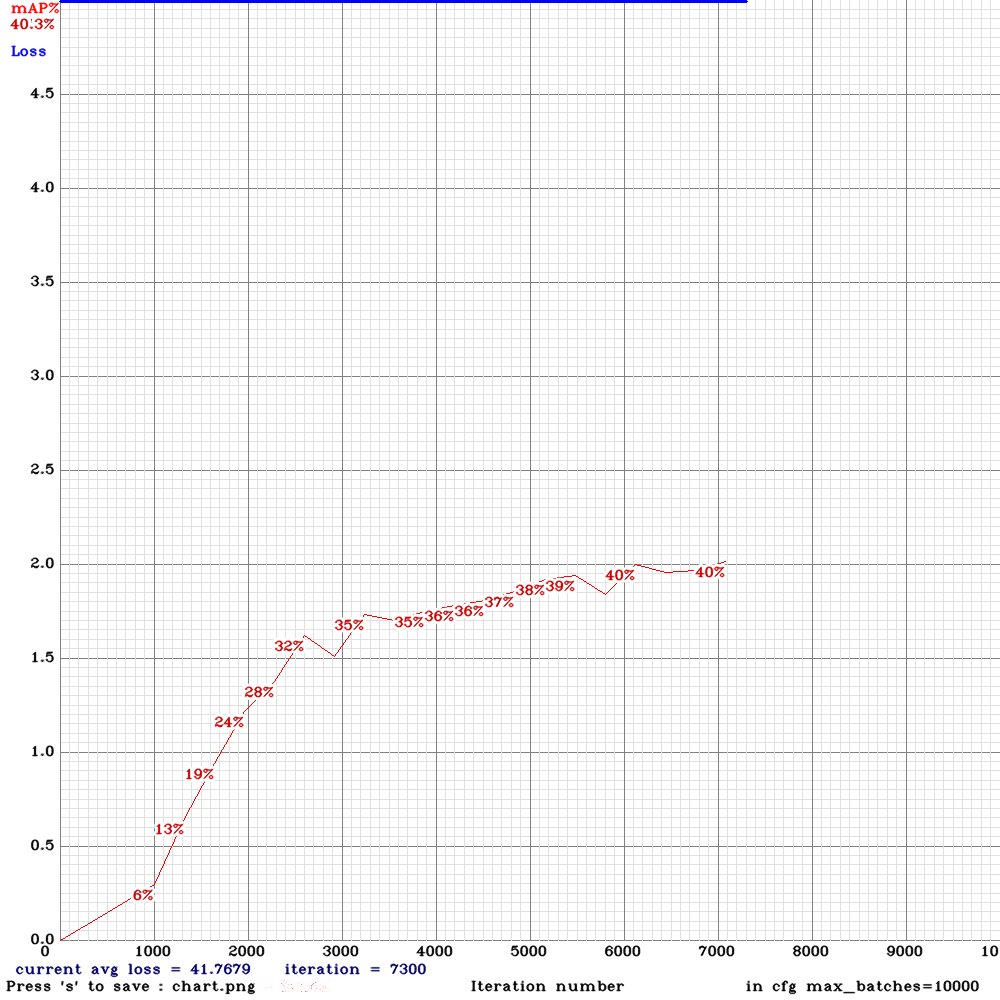

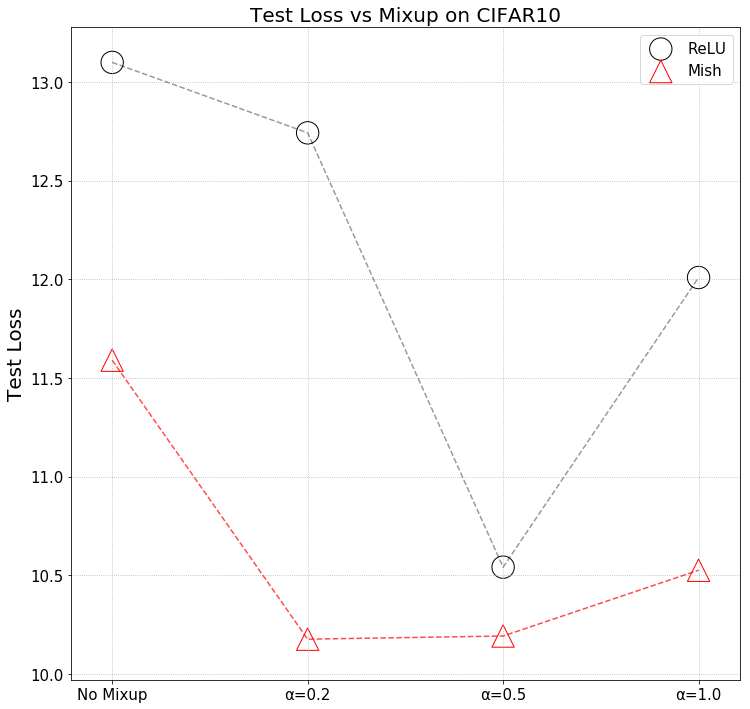

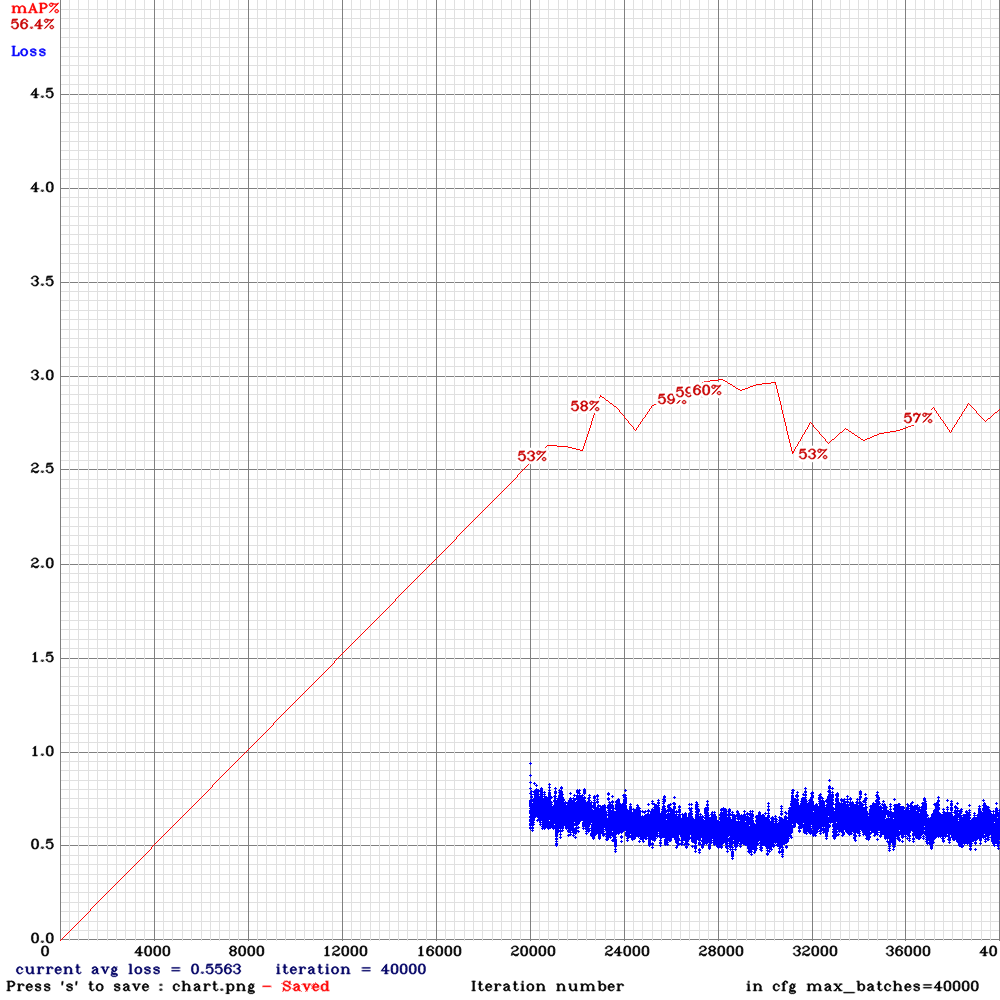

Thank you for the guidance .This is the chart of yolo_v3_tiny3l.cfg .Based on the results I got in iterations before becoming unstable ,The detection results of yolo_v3_tiny_lstm was better than yolo_v3_tiny3l.cfg

@NickiBD So do you get higher mAp with yolo_v3_tiny3l.cfg than with yolo_v3_tiny_lstm.cfg?

@AlexeyAB

yes ,mAP is so far higher than yolo_v3_tiny_lstm.cfg

Hi @AlexeyAB

I'm using yolo_v3_spp_pan.cfg and trying to modify it for my use case, I see that the filters parameter is set to 24 for classes=1 instead of 18. How did you calculate this?

@sawsenrezig filters = (classes + 5) * 4

@AlexeyAB what is the formula for number of filters in the conv layers before yolo layers for yolov3_tiny_3l ?

@LukeAI In any cfg-file filters = (classes + 5) * num / number_of_yolo_layers

- classes: https://github.com/AlexeyAB/darknet/blob/55dcd1bcb8d83f27c9118a9a4684ad73190e2ca3/cfg/yolov3-tiny_3l.cfg#L222

- num: https://github.com/AlexeyAB/darknet/blob/55dcd1bcb8d83f27c9118a9a4684ad73190e2ca3/cfg/yolov3-tiny_3l.cfg#L223

and count number_of_yolo layers:

- https://github.com/AlexeyAB/darknet/blob/55dcd1bcb8d83f27c9118a9a4684ad73190e2ca3/cfg/yolov3-tiny_3l.cfg#L133

- https://github.com/AlexeyAB/darknet/blob/55dcd1bcb8d83f27c9118a9a4684ad73190e2ca3/cfg/yolov3-tiny_3l.cfg#L175

- https://github.com/AlexeyAB/darknet/blob/55dcd1bcb8d83f27c9118a9a4684ad73190e2ca3/cfg/yolov3-tiny_3l.cfg#L219

ok! Wait... what is 'num' ?

ok! Wait... what is 'num' ?

'num' means the number of anchors.

Hi @AlexeyAB

Once again thank you for all your help .I tried to apply all your valuable suggestions except that I dont have 200 frames in each video sequence at the moment .However,still the training is unstable in my case and the accuracy drops significantly after 6000 iterations (almost 0) and goes up a bit after wards Could you please advice me on this .

Many thanks in advance .

@NickiBD Try to set state_constrain=10 for each [conv_lstm] layer in your cfg-file. And use the remaining default settings, except filters= and classes=.

Hi @AlexeyAB

Many thanks for the advice. I will apply that and let you know the result.

Hi @AlexeyAB

I tried to applied the the change but it is still unstable in my case and drops to 0 after 7000 iterations .However, the yolov3 -tiny PAN-lSTM worked fine with almost the same settings as the original cfg file and it was stable .Could you please give me advice of what might be the reason ?

As I need a very fast and accurate model for very small object detection to be worked on a smart phone ,I dont know whether yolov3 -tiny PAN-lSTM is better than yolov3-tiny 3l or yolov3-tiny -lstm for very small object detection . I would be really grateful if you could assist me on this on as well .

Many thanks for all the help.

@NickiBD Hi,

I tried to applied the the change but it is still unstable in my case and drops to 0 after 7000 iterations .However, the yolov3 -tiny PAN-lSTM worked fine with almost the same settings as the original cfg file and it was stable .Could you please give me advice of what might be the reason ?

Do you mean that

yolo_v3_tiny_pan_lstm.cfg.txtworks fine, butyolo_v3_tiny_lstm.cfg.txtdrops after 7000 iterations?What is the max, min and average size of your objects? Calculate anchors and show me.

What is the average sequence length (how many frames in one sequence) in your dataset?

As I need a very fast and accurate model for very small object detection to be worked on a smart phone ,I dont know whether yolov3 -tiny PAN-lSTM is better than yolov3-tiny 3l or yolov3-tiny -lstm for very small object detection . I would be really grateful if you could assist me on this on as well .

Many thanks for all the help.

Theoretically the best model for small objects should be - use it with the latest version of this repository:

- on images:

yolo_v3_tiny_pan_mixup.cfg.txt - on videos:

yolo_v3_tiny_pan_lstm.cfg.txt

@AlexeyAB Hi,

Thanks alot for the reply and all your advice.

yes .yolo_v3_tiny_PAN_lstm works fine and is stable but the accuracy of yolo_v3_tiny_lstm.cfg drops to 0 after 7000 iterations.

These are the calculated anchors :

5, 11, 7, 29, 13, 20, 12, 52, 23, 59, 49, 71

The number of frames varies as some videos are short and some are long. The number of frames are 75-100 frames for each video in the dataset.

Many thanks again for all the help.

@NickiBD

So use yolo_v3_tiny_pan_lstm.cfg.txt instead of yolo_v3_tiny_lstm.cfg.txt,

since yolo_v3_tiny_pan_lstm.cfg.txt is better in any case, especially for small objects.

Use default anchors.

Could you please give me advice of what might be the reason ?

yolo_v3_tiny_lstm.cfg.txtuses longer sequences (time_steps=16 X augment_speed=3 = 48) thanyolo_v3_tiny_pan_lstm.cfg.txt(time_steps=3 X augment_speed=3 = 9),

so if you trainyolo_v3_tiny_lstm.cfg.txton short video-sequences it can lead to unstable training.yolo_v3_tiny_lstm.cfg.txtisn't good for small objects. Since you use dataset with small objects, so it can lead to unstable training

@AlexeyAB

Thank you so much for all the advice .

@AlexeyAB

I see that you added TridentNet already! Do you have any results / training graphs? Maybe on the same dataset and network size as your table above so that we can compare?

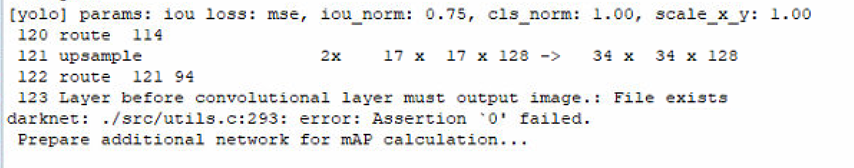

@AlexeyAB

I am trying to train a yolo_v3_tiny_pan_mixup. I have downloaded the weights yolov3-tiny_occlusion_track_last.weights but been unable to train directly from the weights or able to extract from them:

$ ./darknet partial my_stuff/yolo_v3_tiny_pan_mixup.cfg my_stuff/yolov3-tiny_occlusion_track_last.weights yolov3-tiny.conv.14 14

GPU isn't used

layer filters size input output

0 conv 16 3 x 3 / 1 768 x 432 x 3 -> 768 x 432 x 16 0.287 BF

1 max 2 x 2 / 2 768 x 432 x 16 -> 384 x 216 x 16 0.005 BF

2 conv 32 3 x 3 / 1 384 x 216 x 16 -> 384 x 216 x 32 0.764 BF

3 max 2 x 2 / 2 384 x 216 x 32 -> 192 x 108 x 32 0.003 BF

4 conv 64 3 x 3 / 1 192 x 108 x 32 -> 192 x 108 x 64 0.764 BF

5 max 2 x 2 / 2 192 x 108 x 64 -> 96 x 54 x 64 0.001 BF

6 conv 128 3 x 3 / 1 96 x 54 x 64 -> 96 x 54 x 128 0.764 BF

7 max 2 x 2 / 2 96 x 54 x 128 -> 48 x 27 x 128 0.001 BF

8 conv 256 3 x 3 / 1 48 x 27 x 128 -> 48 x 27 x 256 0.764 BF

9 max 2 x 2 / 2 48 x 27 x 256 -> 24 x 14 x 256 0.000 BF

10 conv 512 3 x 3 / 1 24 x 14 x 256 -> 24 x 14 x 512 0.793 BF

11 max 2 x 2 / 1 24 x 14 x 512 -> 24 x 14 x 512 0.001 BF

12 conv 1024 3 x 3 / 1 24 x 14 x 512 -> 24 x 14 x1024 3.171 BF

13 conv 256 1 x 1 / 1 24 x 14 x1024 -> 24 x 14 x 256 0.176 BF

14 conv 512 3 x 3 / 1 24 x 14 x 256 -> 24 x 14 x 512 0.793 BF

15 conv 128 1 x 1 / 1 24 x 14 x 512 -> 24 x 14 x 128 0.044 BF

16 upsample 2x 24 x 14 x 128 -> 48 x 28 x 128

17 route 16 8

18 Layer before convolutional layer must output image.: File exists

darknet: ./src/utils.c:293: error: Assertion `0' failed.

Aborted (core dumped)

Could you advise me as to how to extract seed weights to begin training?

I also notice that the tiny_occlusion weights are provided for yolo_v3_pan_scale is this correct?

@LukeAI

I also notice that the tiny_occlusion weights are provided for yolo_v3_pan_scale is this correct?

There is correct weights-file, just incorrect filename (may be I will change it later).

I am trying to train a yolo_v3_tiny_pan_mixup. I have downloaded the weights yolov3-tiny_occlusion_track_last.weights but been unable to train directly from the weights or able to extract from them:

You set incorrect network resolution, width and height must be multiple of 32, while 432 isn't. Set 416.

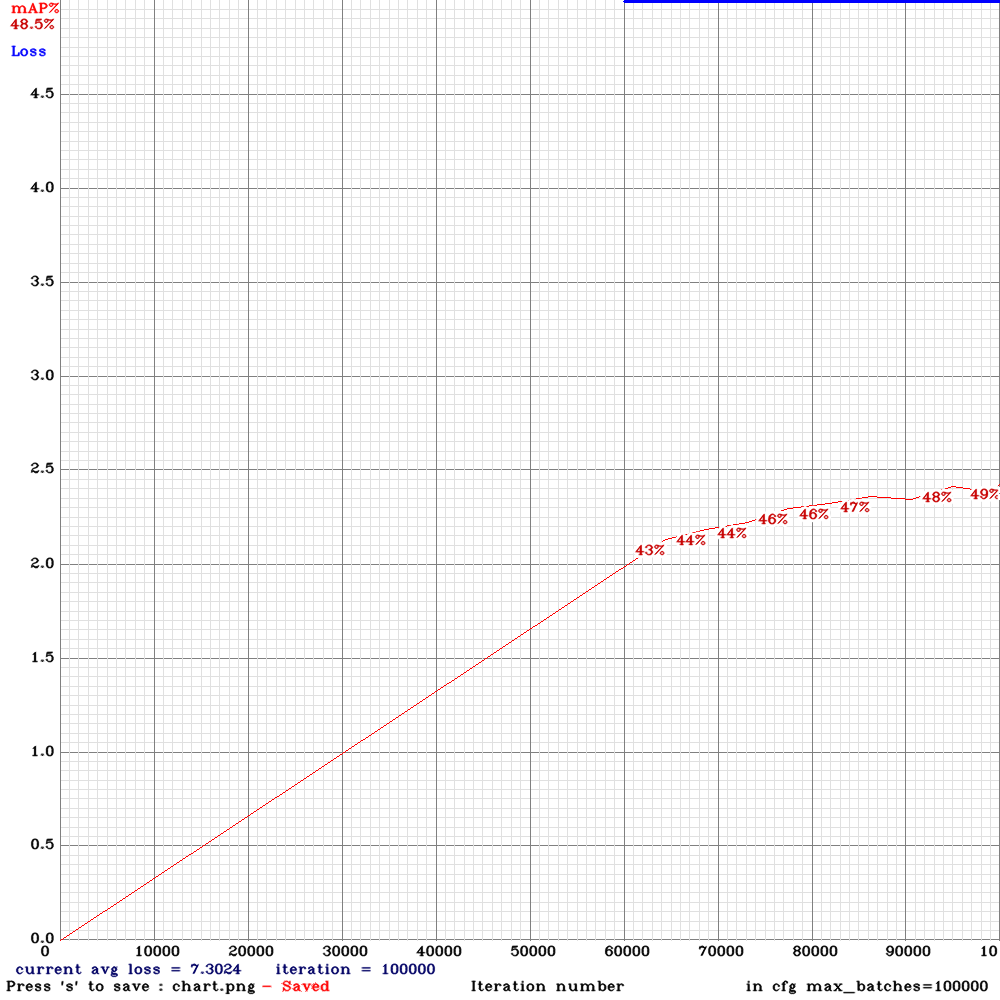

I see that you added TridentNet already! Do you have any results / training graphs? Maybe on the same dataset and network size as your table above so that we can compare?

I am training it right now, it is very slow. That's why they used original ResNet152 (without any changes and improvements) for the fastest transfer learning from pre-trained ResNet152 on Imagenet.

Hi alexey, do you have an example of how use the Yolo-Lstm after training ?

@Dinl Hi, what do you mean? Just use it as usual.

Run it on Video-file or with Video-camera (web-cam, IP-cam http/rtsp):

./darknet detector demo data/self_driving.data yolo_v3_tiny_pan_lstm.cfg.txt yolo_v3_tiny_pan_lstm_last.weights rtsp://login:[email protected]:554

Look at the result video https://drive.google.com/open?id=1ilTuesCfaFphPPx-djhp7bPTC31CAufx

Or press on other video URLs there: https://github.com/AlexeyAB/darknet/issues/3114#issuecomment-494148968

@LukeAI I added TridentNet to the table: resnet152_trident.cfg.txt

@AlexeyAB Hi

I trained network with config yolo_v3_spp.cfg, mAP = ~74% at 60k iterations.

Total train images 86k.

Network size is 416x416

batch=64

subdivisions=16

random=1 at all [yolo] layers

Dataset is mostly COCO, classes = 2

Right now i am training with yolo_v3_spp_pan.cfg expecting to get higher mAP

Network size is 416x416

batch=64

subdivisions=32

random=1 at all [yolo] layers

All lines i have changed in the original config file are:

width=416

height=416

...

max_batches = 100000

steps=80000,90000

...

--3x--

[convolutional]

size=1

stride=1

pad=1

filters=28 (for 2 classes)

activation=linear

[yolo]

mask = 0,1,2,3

anchors = 8,8, 10,13, 16,30, 33,23, 32,32, 30,61, 62,45, 64,64, 59,119, 116,90, 156,198, 373,326

classes=2

num=12

jitter=.3

ignore_thresh = .7

truth_thresh = 1

random=1

--3x--

But mAP is about ~66%, expecting to get +~4% i got -8% ))

Is it expected behavior or i missed something in config?

Can it be because of anchors? i did not recalculate them.

Darknet is up to date.

Thanks for help.

@dreambit Hi,

Do you use the latest version of this repository?

Can you rename both yolo_v3_spp.cfg and yolo_v3_spp_pan.cfg files to txt-files and attach them to your message?

Do you have chart.png for both training processes?

@AlexeyAB Thanks for quick reply

Do you use the latest version of this repository?

commit 94c806ffadc4b052bfaabe1904b79cabc6c10140 (HEAD -> master, origin/master, origin/HEAD)

Date: Sun Jun 9 03:07:04 2019 +0300

final fix

Unfortunately chart for spp.cfg is lost, pan is in progress right now, i will show u both charts later.

Config files:

yolov3-spp.cfg.txt

yolo_v3_spp_pan.cfg.txt

I manually calculated mAP for spp.cfg

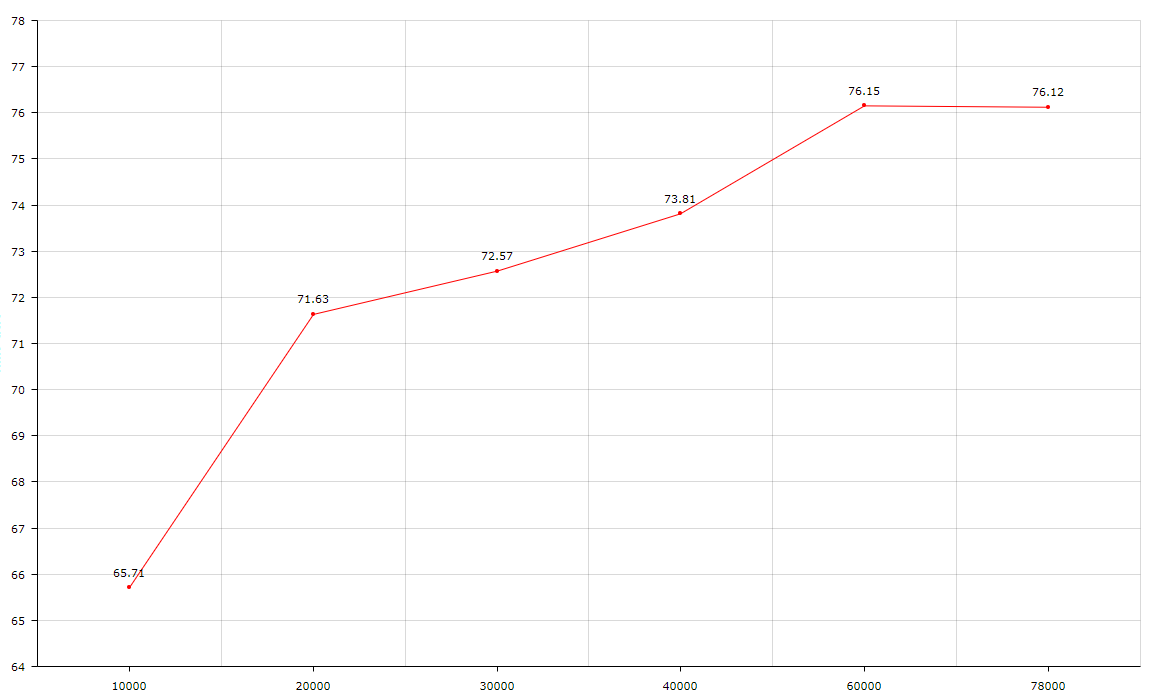

10k

for conf_thresh = 0.25, precision = 0.64, recall = 0.61, F1-score = 0.63

for conf_thresh = 0.25, TP = 18730, FP = 10367, FN = 11832, average IoU = 49.59 %

IoU threshold = 50 %, used Area-Under-Curve for each unique Recall

mean average precision ([email protected]) = 0.657086, or 65.71 %

20k

```

for conf_thresh = 0.25, precision = 0.80, recall = 0.60, F1-score = 0.69

for conf_thresh = 0.25, TP = 18349, FP = 4635, FN = 12213, average IoU = 63.53 %

IoU threshold = 50 %, used Area-Under-Curve for each unique Recall

mean average precision ([email protected]) = 0.716259, or 71.63 %

**30k**

for conf_thresh = 0.25, precision = 0.75, recall = 0.65, F1-score = 0.70

for conf_thresh = 0.25, TP = 19778, FP = 6562, FN = 10784, average IoU = 59.62 %

IoU threshold = 50 %, used Area-Under-Curve for each unique Recall

mean average precision ([email protected]) = 0.725672, or 72.57 %

**40k**

for conf_thresh = 0.25, precision = 0.75, recall = 0.66, F1-score = 0.71

for conf_thresh = 0.25, TP = 20293, FP = 6681, FN = 10269, average IoU = 60.05 %

IoU threshold = 50 %, used Area-Under-Curve for each unique Recall

mean average precision ([email protected]) = 0.738118, or 73.81 %

**60k**

for conf_thresh = 0.25, precision = 0.73, recall = 0.70, F1-score = 0.72

for conf_thresh = 0.25, TP = 21518, FP = 7818, FN = 9044, average IoU = 59.27 %

IoU threshold = 50 %, used Area-Under-Curve for each unique Recall

mean average precision ([email protected]) = 0.761458, or 76.15 %

**78k**

for conf_thresh = 0.25, precision = 0.74, recall = 0.70, F1-score = 0.72

for conf_thresh = 0.25, TP = 21439, FP = 7444, FN = 9123, average IoU = 59.99 %

IoU threshold = 50 %, used Area-Under-Curve for each unique Recall

mean average precision ([email protected]) = 0.761211, or 76.12 %

**According to best.weights**

```

for conf_thresh = 0.25, precision = 0.74, recall = 0.71, F1-score = 0.72

for conf_thresh = 0.25, TP = 21599, FP = 7775, FN = 8963, average IoU = 59.45 %

IoU threshold = 50 %, used Area-Under-Curve for each unique Recall

mean average precision ([email protected]) = 0.764087, or 76.41 %

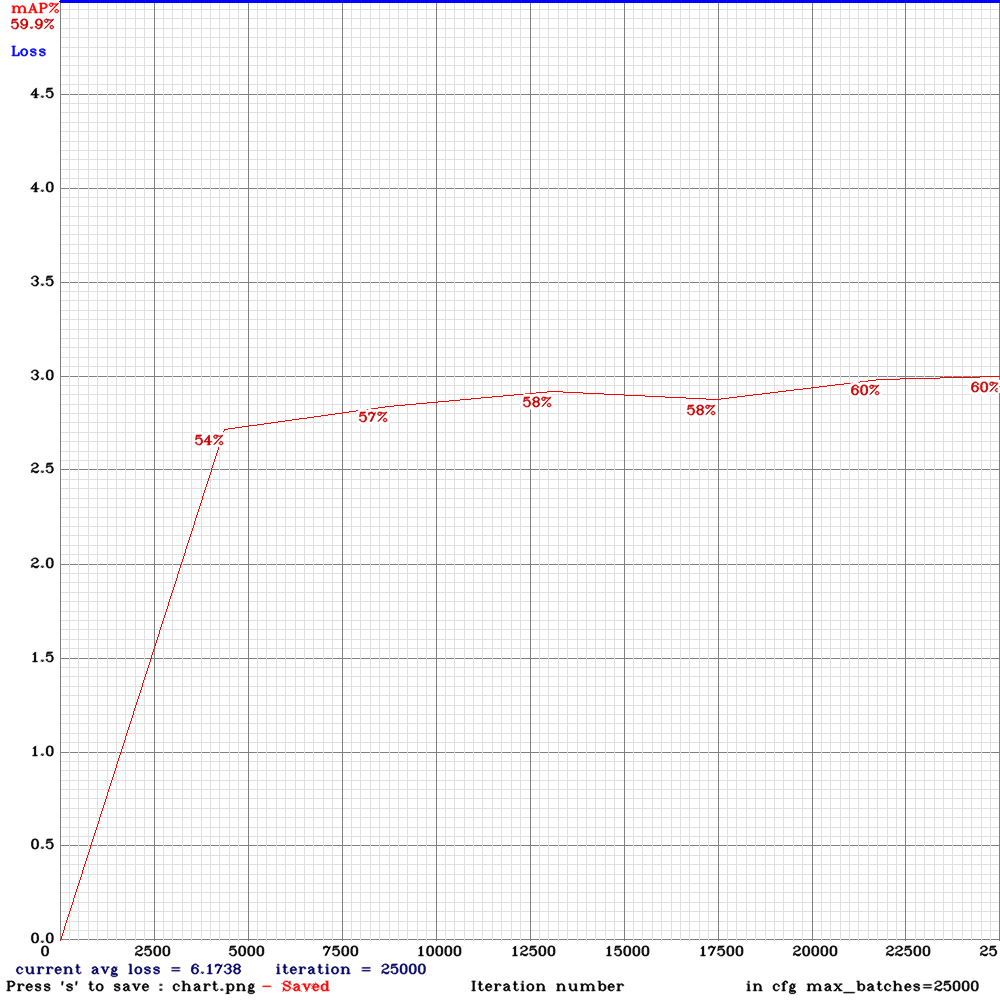

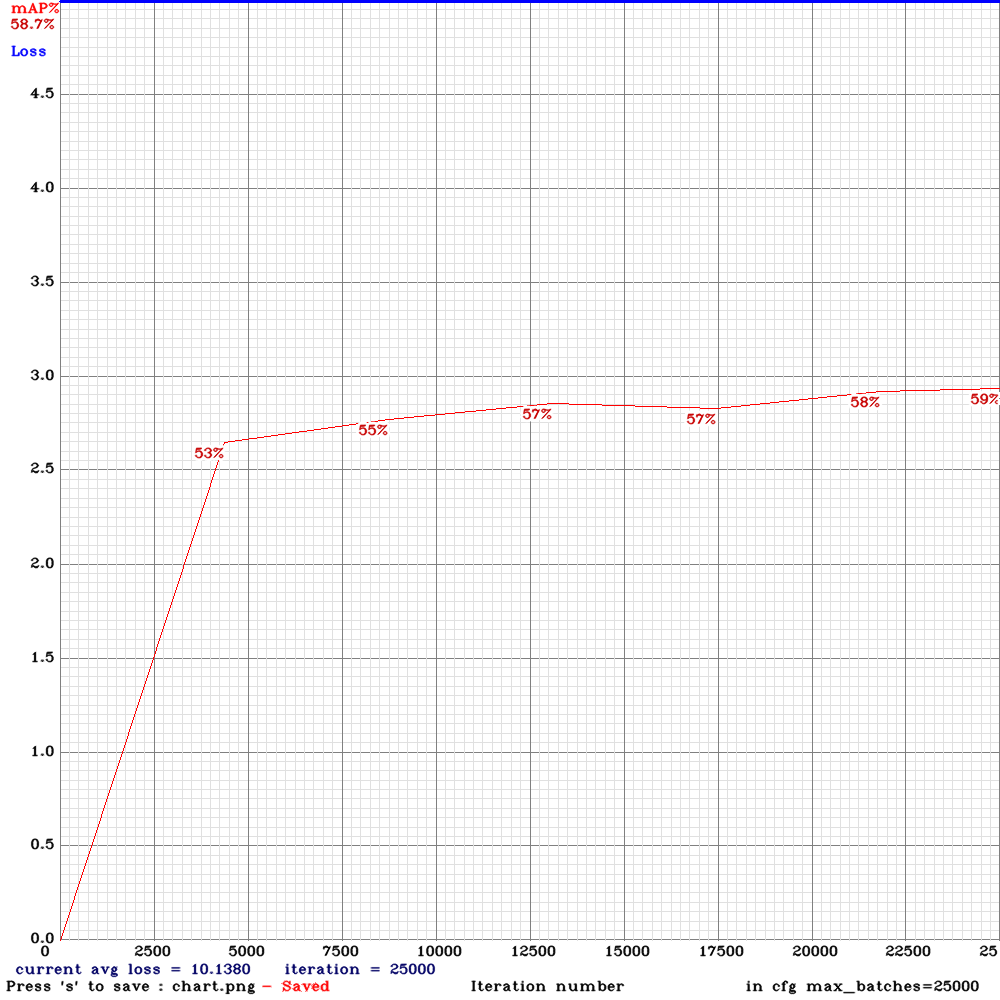

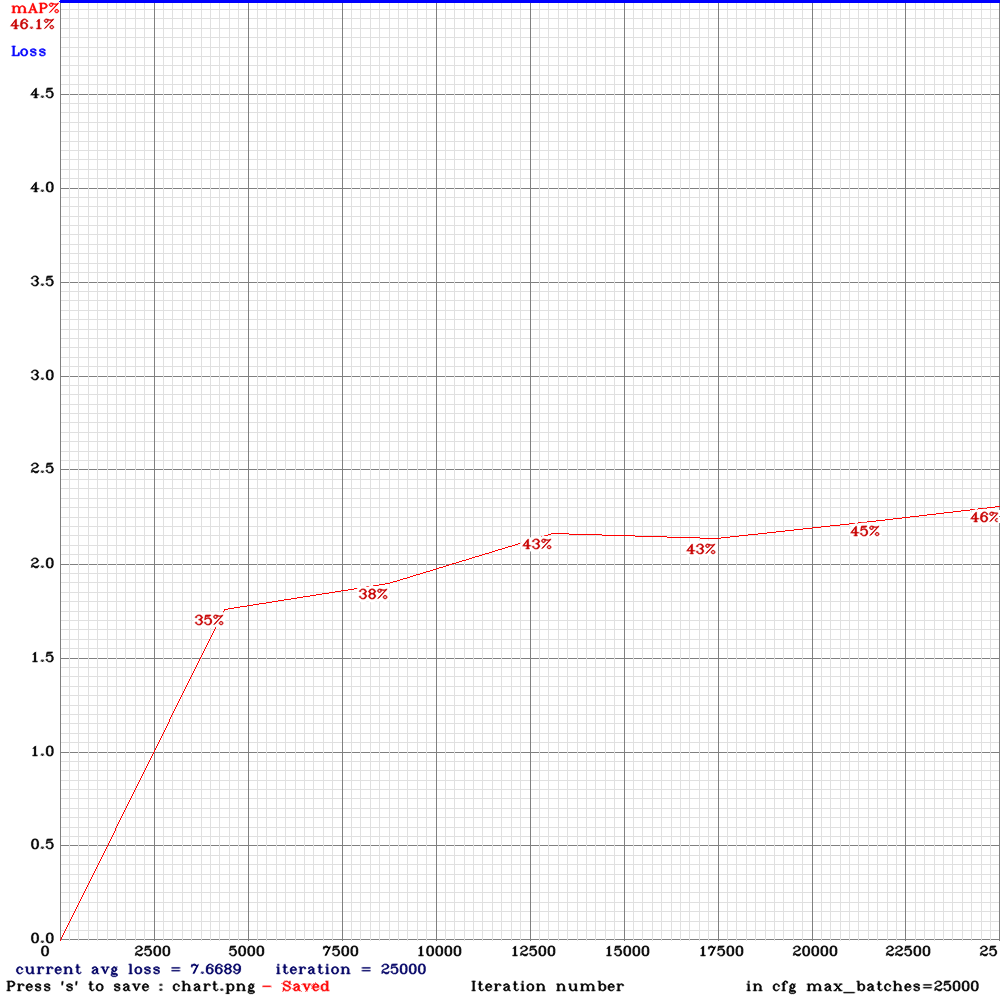

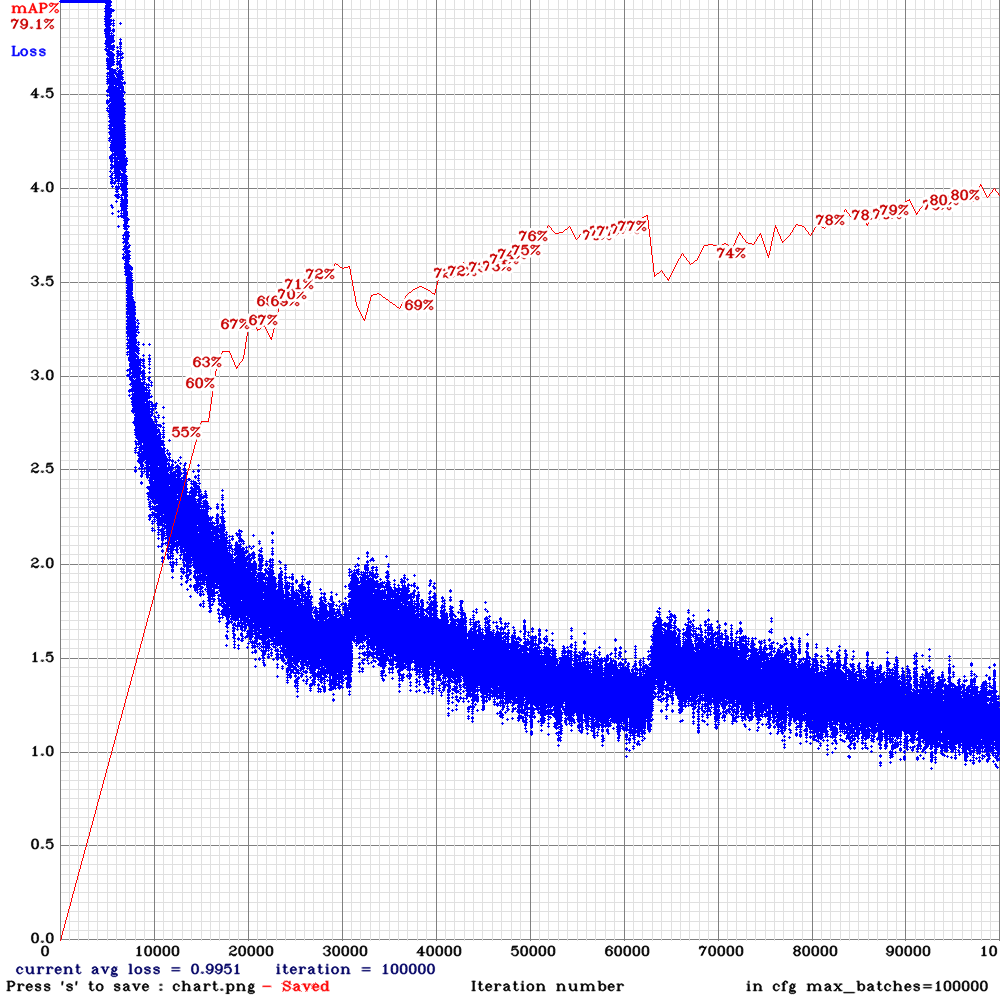

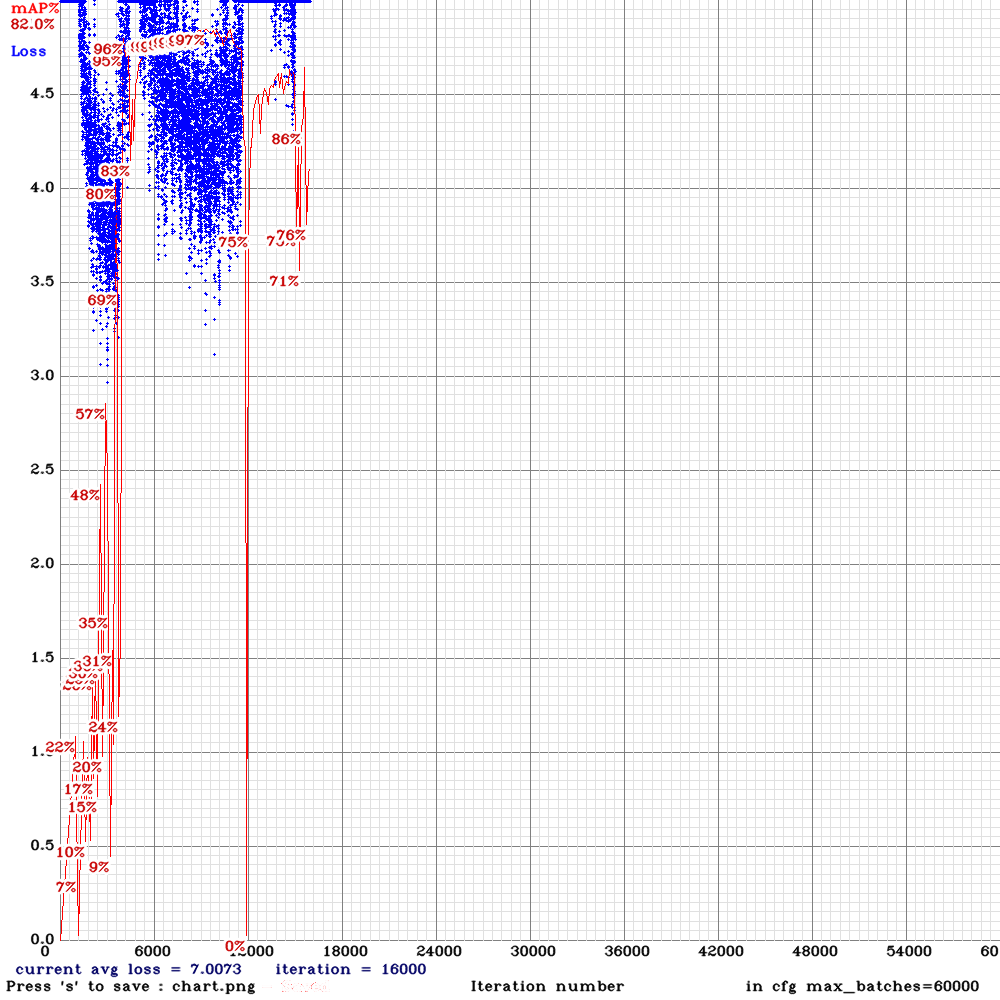

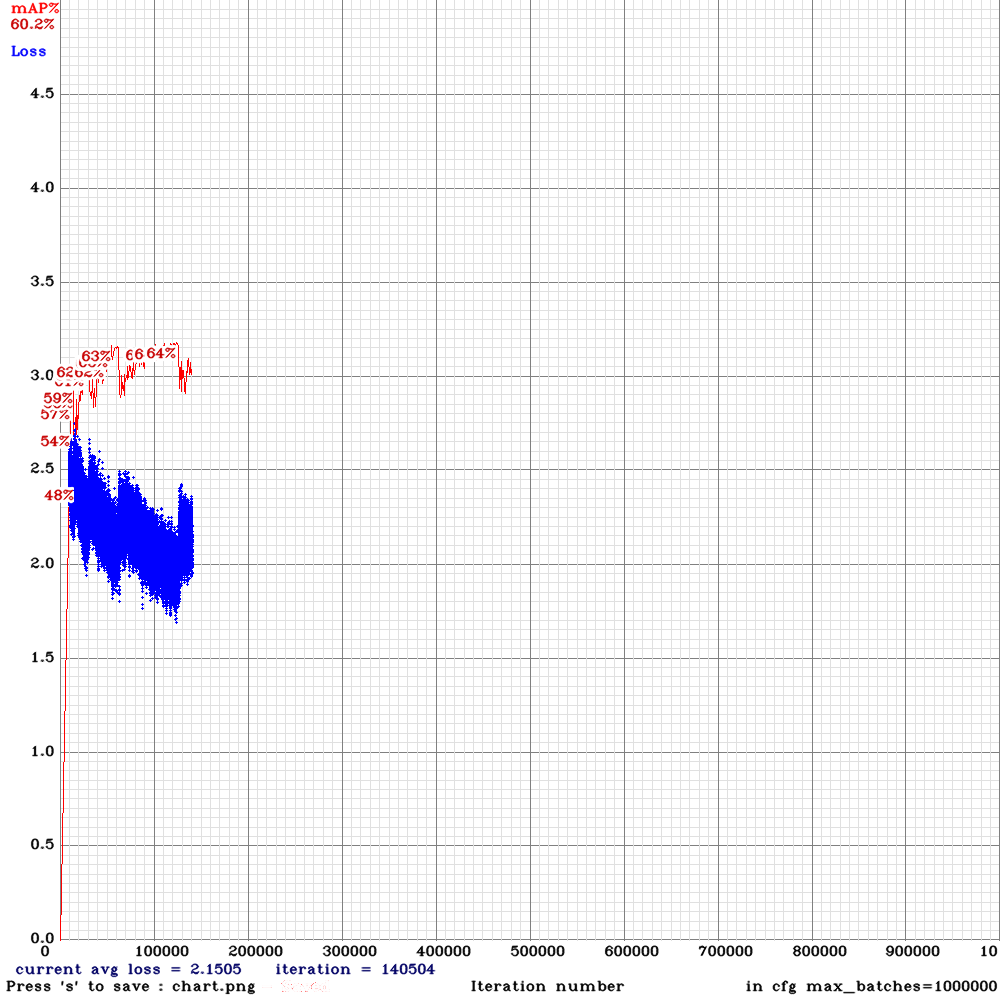

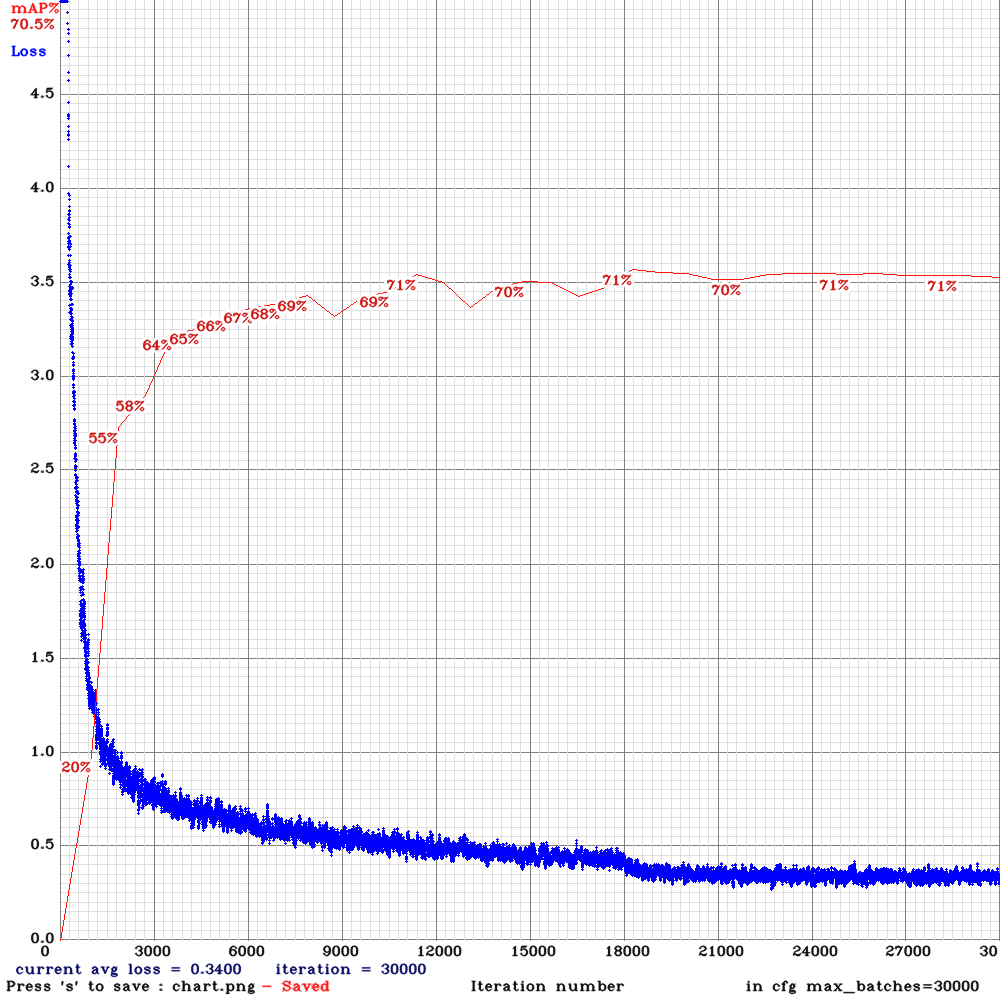

As chart:

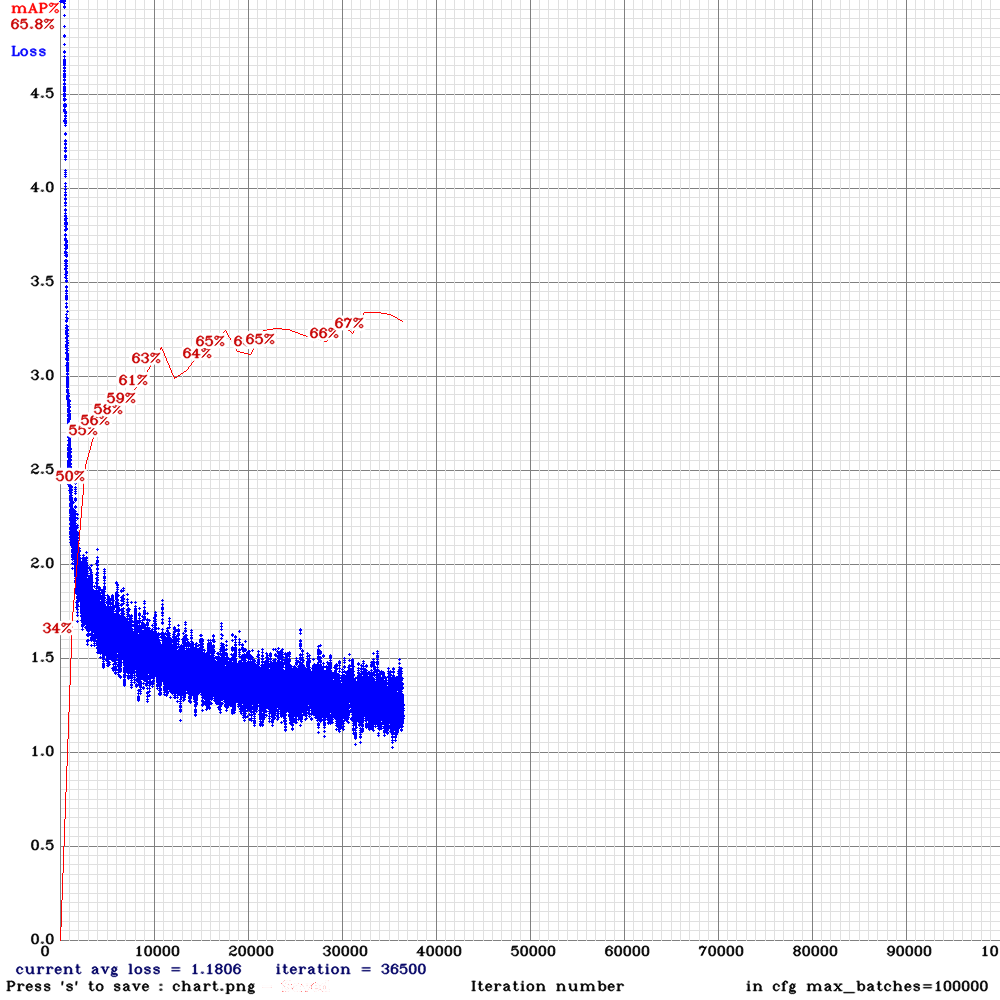

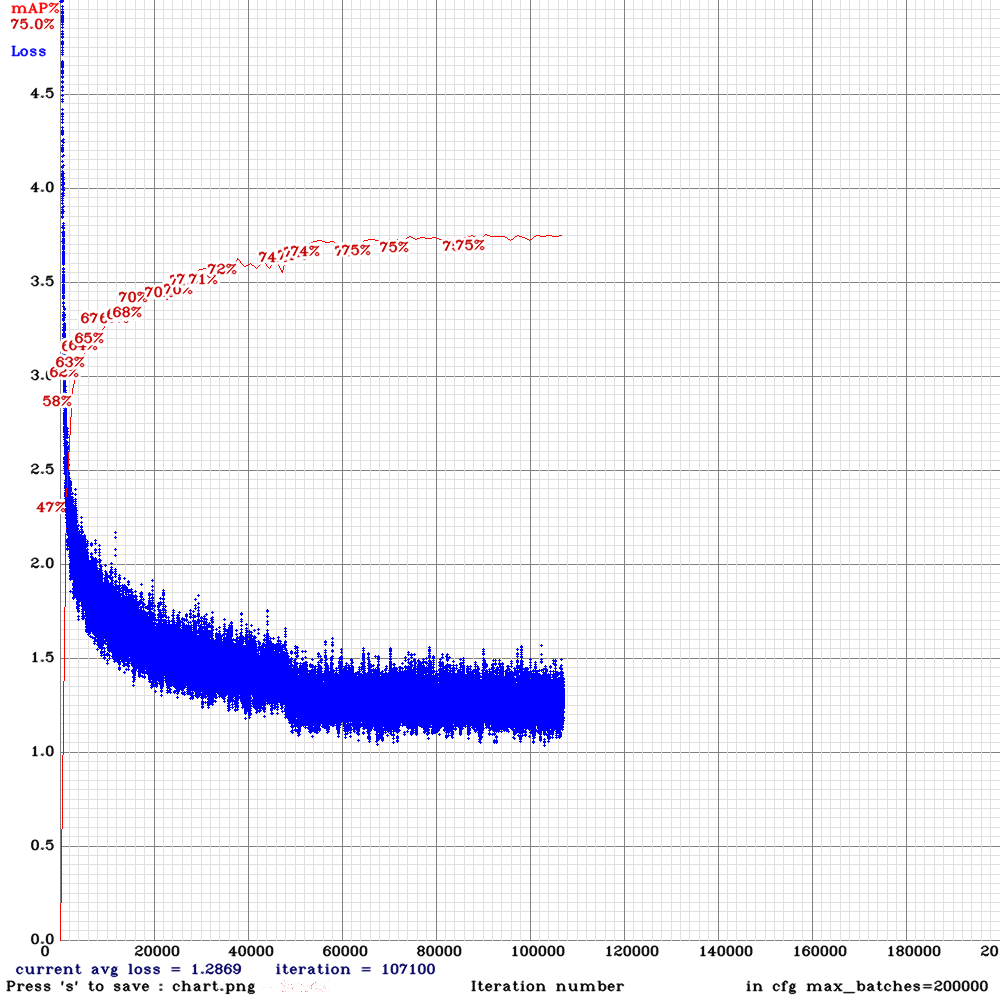

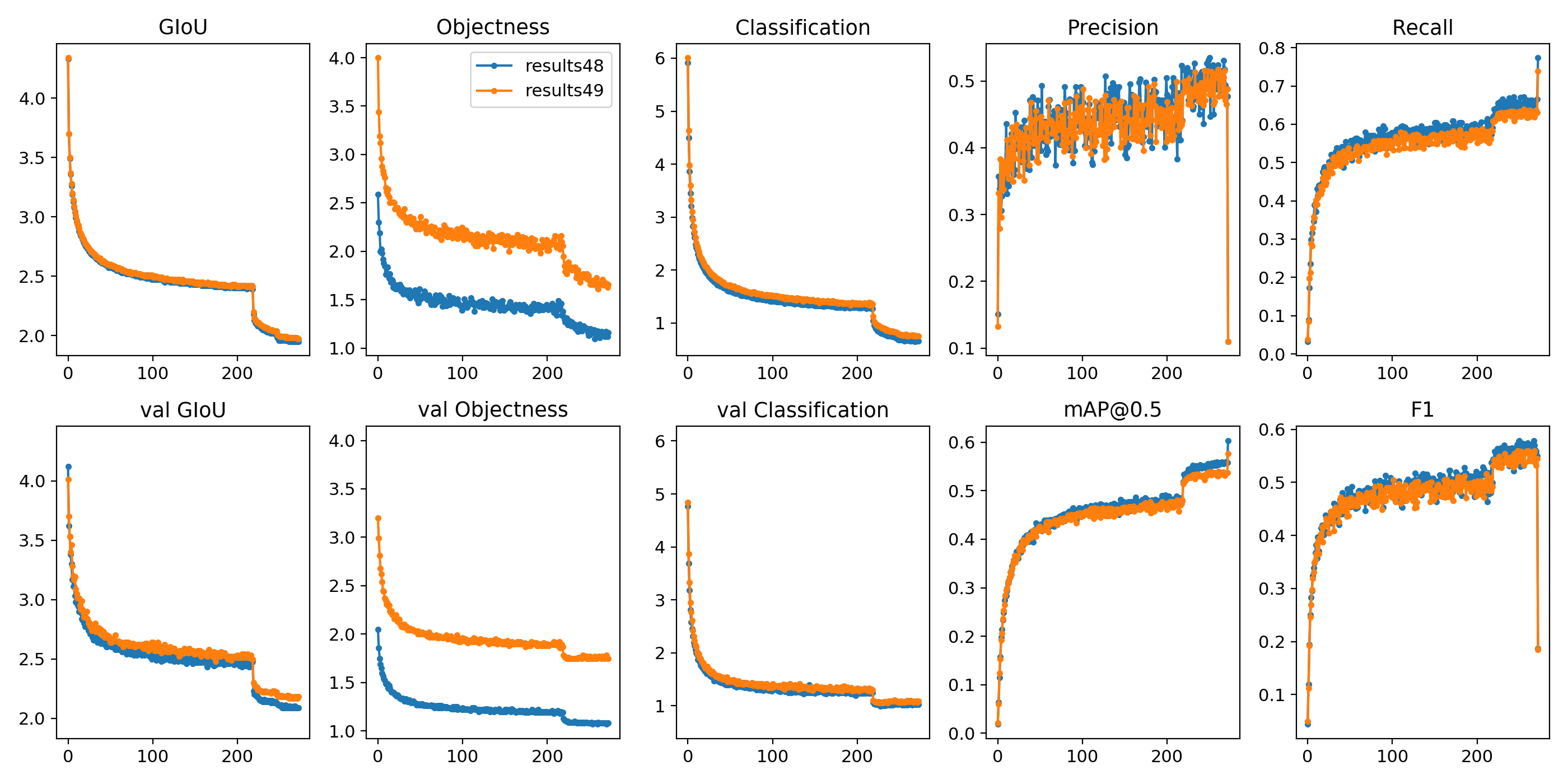

Last spp-pan chart:

@dreambit Thanks!

Try to train

yolov3_spp.cfgbut withsubdivisions=32will it have the same high mAP ~76%?If yes, then try to train pan-model with the same learning-policy parameters and the same anchors:

yolo_v3_spp_pan_new.cfg.txt will it have high accuracy mAP ~76% or more?

If it doesn't help, and PAN still has lower mAP, then I will try to make a new layer [maxpool_depth] and new pan-model.

@AlexeyAB are you planning to make a yolo_v3_spp_pan_lstm.cfg ? (or maybe it should be yolo_v3_spp_pan_scale_mixup_lstm.cfg ?) It looks like it would probably top the league in AP.

@LukeAI I'm not planning yet, since we should check whether LSTM, PAN, Mixup and Scale works properly and give improvements in the most cases, what is not yet obvious (also may be fix these approaches):

PAN drops accuracy:

But mAP is about ~66%, expecting to get +~4% i got -8% ))https://github.com/AlexeyAB/darknet/issues/3114#issuecomment-502398291get higher mAp with yolo_v3_tiny3l.cfg than with yolo_v3_tiny_lstm.cfghttps://github.com/AlexeyAB/darknet/issues/3114#issuecomment-496903878scale_x_y = 1.05, 1.1, 1.2 with yolo_v3_tiny_pan_lstm.cfg.txt and it decreases the mAP.https://github.com/AlexeyAB/darknet/issues/3293#issuecomment-499043719Mixup drops -3% mAP accuracy: https://github.com/AlexeyAB/darknet/issues/3272#issuecomment-500781961

EfficientNet_b0 has low accuracy:

Top1 = 57.6%, Top5 = 81.2% - 150 000 iterations (something goes wrong): https://github.com/AlexeyAB/darknet/issues/3380#issuecomment-502364112

Theoretically, there should be a network:

efficientnet_b4_lstm_spp_pan_mixup_scale_trident_yolo.cfg

or

efficientnet_b4_lstm_spp_pan_mixup_scale_trident_corner.cfg )

@AlexeyAB Thanks.

Try to train yolov3_spp.cfg but with subdivisions=32 will it have the same high mAP ~76%?

If yes, then try to train pan-model with the same learning-policy parameters and the same anchors:

yolo_v3_spp_pan_new.cfg.txt will it have high accuracy mAP ~76% or more?

I've started both on two machines, i'll report results to you at 20, 40, ~60k

@LukeAI I'm not planning yet, since we should check whether LSTM, PAN, Mixup and Scale works properly and give improvements in the most cases, what is not yet obvious (also may be fix these approaches):

Makes sense! I'm running some trials now, will report here.

Does higher subdivisions mean potentially higher accuracy? Because it means smaller minibatches?

@LukeAI No, higher subdivisions -> lower accuracy and lower memory consumption.

Higher minibatch = batch/subdivisions -> higher accuracy.

So to get higher accuracy - use lower subdivisions.

How to train LSTM networks:

./yolo_mark data/self_driving cap_video self_driving.mp4 1- it will grab each 1 frame from video (you can vary from 1 to 5)./yolo_mark data/self_driving data/self_driving.txt data/self_driving.names- to mark bboxes, even if at some point the object is invisible (occlused/obscured by another type of object)./darknet detector train data/self_driving.data yolo_v3_tiny_pan_lstm.cfg yolov3-tiny.conv.14 -map- to train the detector

@AlexeyAB , Thanks for this enhancement. I will definitely try this out.

Could you tell me -

- what is the LSTM config file training full yolov3 model and not a tiny one?

- command for training full model...You mentioned the training command only for the tiny model above. Can I use the below ?

./darknet detector train data/self_driving.data cfg/weights/darknet53.conv.74 -map

Thanks much!

@kmsravindra Hi,

there is only this yet (LSTM+spp, but without PAN, Mixup, Scale, GIoU): https://github.com/AlexeyAB/darknet/files/3199654/yolo_v3_spp_lstm.cfg.txt

Yes,

darknet53.conv.74is suitable for all full-models except TridentNet

Hi @AlexeyAB,

I have a question regarding object detection on videos: what's the difference between using LSTM and running YoloV3 for example on a video?

Hi @AlexeyAB,

I am trying to train resnet152_trident.cfg.txt with resnet152.201 pretrained weights . I am using the default config .However, it is very slow ,does not show me the training graph and has not created any weights after 6 hours . Could you Please advice me on this and what I am doing wrong .

Many thanks for all the help .

@YouthamJoseph

I have a question regarding object detection on videos: what's the difference between using LSTM and running YoloV3 for example on a video?

That LSTM-Yolo is faster and more accurate than Yolov3.

LSTM-Yolo uses recurrent LSTM-layers with memory, so it takes into account previous several frames and previous detections, it allows to achive much higher accuracy.

@NickiBD How many iterations did you train?

I will try to add resnet101_trident.cfg.txt that is faster than resnet152_trident.cfg.txt

@AlexeyAB

Thanks for the reply and providing resnet101_trident.cfg.txt. I cannot see the training graph to see at what stage the training is and I don't know the reason behind it .

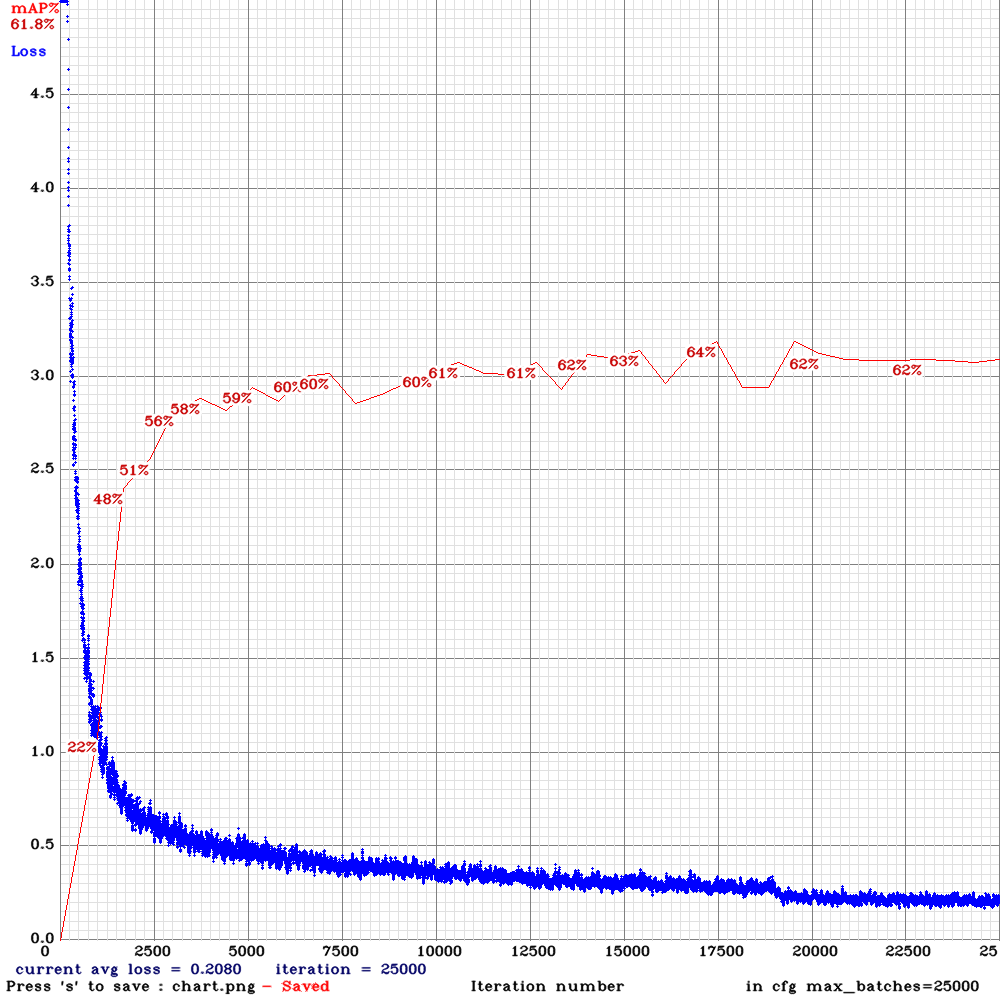

@AlexeyAB Hi

Try to train yolov3_spp.cfg but with subdivisions=32 will it have the same high mAP ~76%?

If yes, then try to train pan-model with the same learning-policy parameters and the same anchors:

yolo_v3_spp_pan_new.cfg.txt will it have high accuracy mAP ~76% or more?

intermediate results:

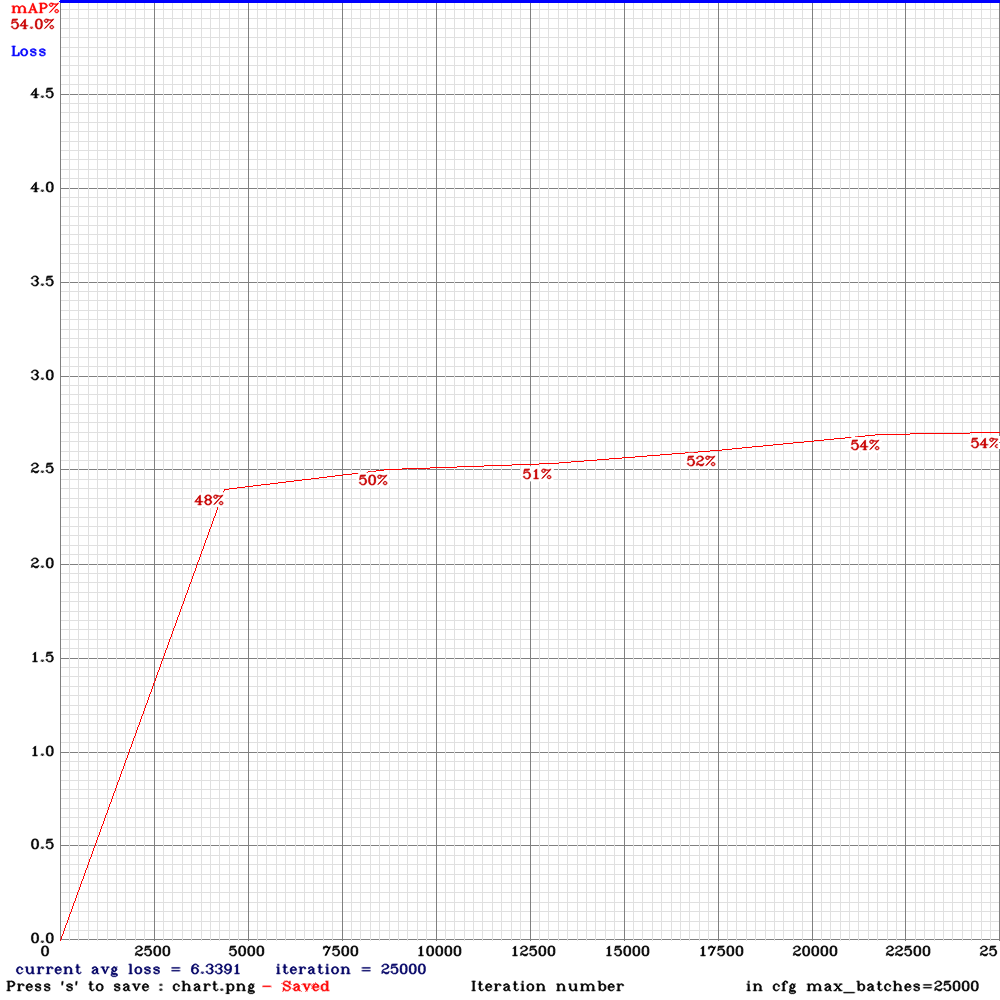

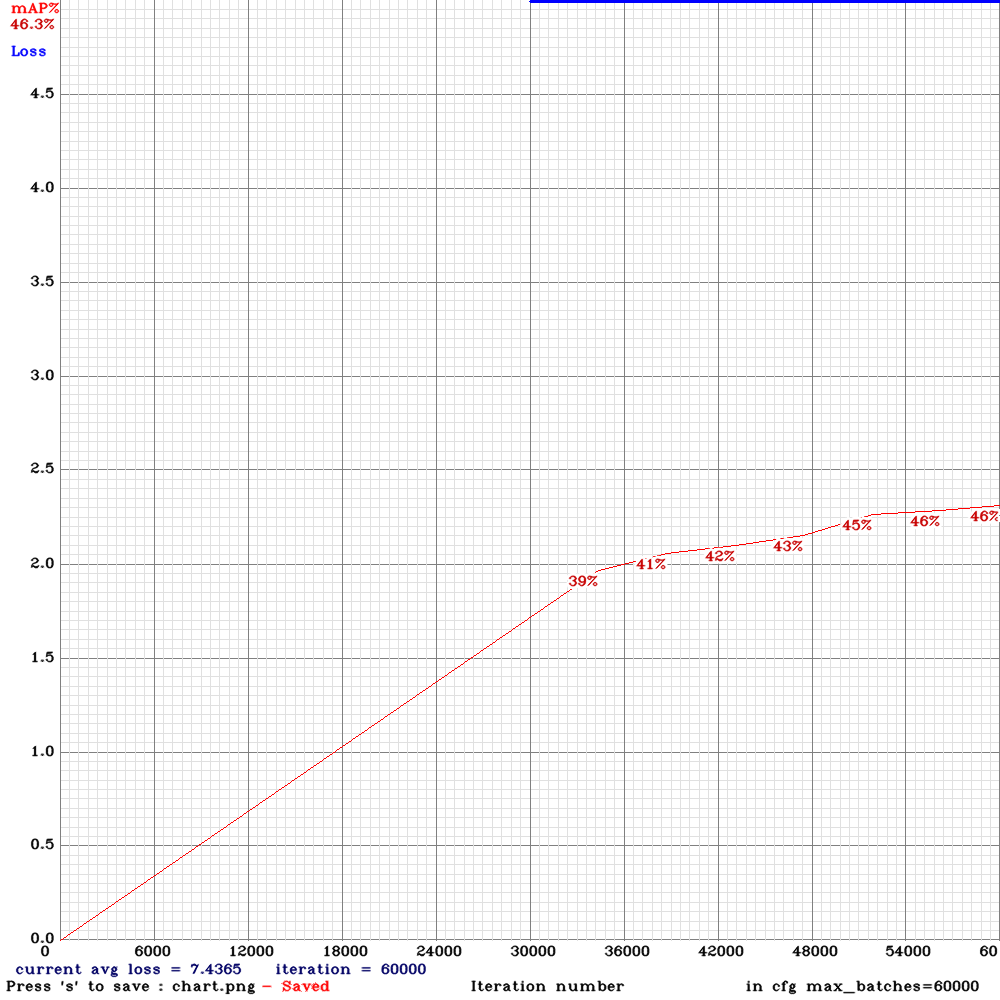

spp-pan:

training on gtx 1080ti

spp:

training om rtx 2070 with cuda_half enabled.

Previous results was trained on 1080ti, i don't know if that matters

You ideas? :)

For training, how many sequentially frames per video are needed? after the n frames sequence should be any indicator that new sequence start?

@dreambit So currently spp-pan (71%) is better than spp (67%) in contrast to previous results: https://github.com/AlexeyAB/darknet/issues/3114#issuecomment-502437431

It seems something is wrong with using Tensor Cores, I will disable TC temporary for training. May be I should use loss-scale even if I don't use Tensor Cores FP16 for activation: https://docs.nvidia.com/deeplearning/sdk/mixed-precision-training/index.html#mptrain

Can you attach new SPP and SPP-PAN cfg-files? Did you use the same batch/subdivisions, steps, policy and anchors in the both cfg-files?

@Dinl Use at least 200 frames per video (sequence).

@AlexeyAB

Can you attach new SPP and SPP-PAN cfg-files? Did you use the same batch/subdivisions, steps, policy and anchors in the both cfg-files?

- Try to train yolov3_spp.cfg but with subdivisions=32 will it have the same high mAP ~76%?

- If yes, then try to train pan-model with the same learning-policy parameters and the same anchors: yolo_v3_spp_pan_new.cfg.txt will it have high accuracy mAP ~76% or more?

yolo_v3_spp_pan_new.cfg.txt

yolov3-spp-front.cfg.txt

It seems something is wrong with using Tensor Cores, I will disable TC temporary for training. May be I should use loss-scale even if I don't use Tensor Cores FP16 for activation: https://docs.nvidia.com/deeplearning/sdk/mixed-precision-training/index.html#mptrain

So it is not yet clear is it because of subdivisions right?

Hi @AlexeyAB, thank you for sharing your valuable knowlodge with us.

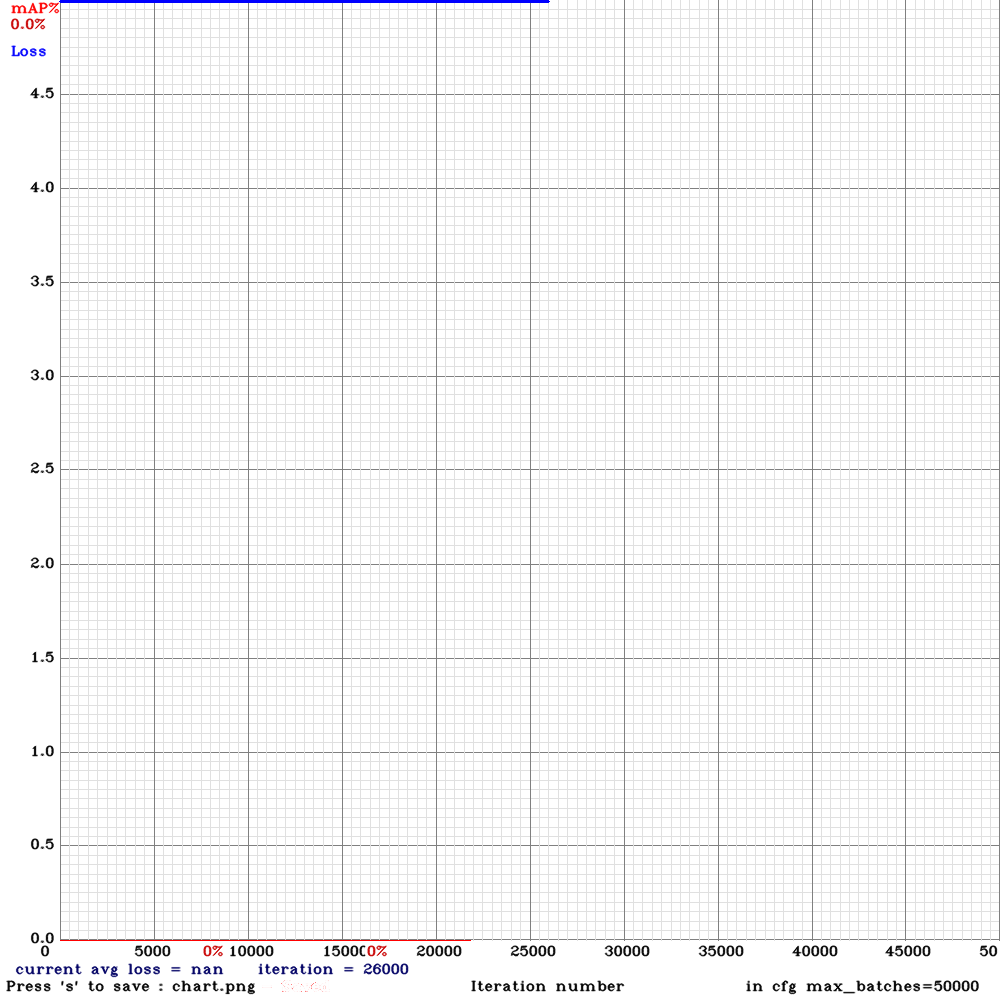

I facing problems when I tried the yolo_v3_tiny_pan_lstm.cfg file. I am using the default anchors. I only changed the classes and filters. Also, I set hue =0 as my task is traffic light detection and classification(decide what colour it is).

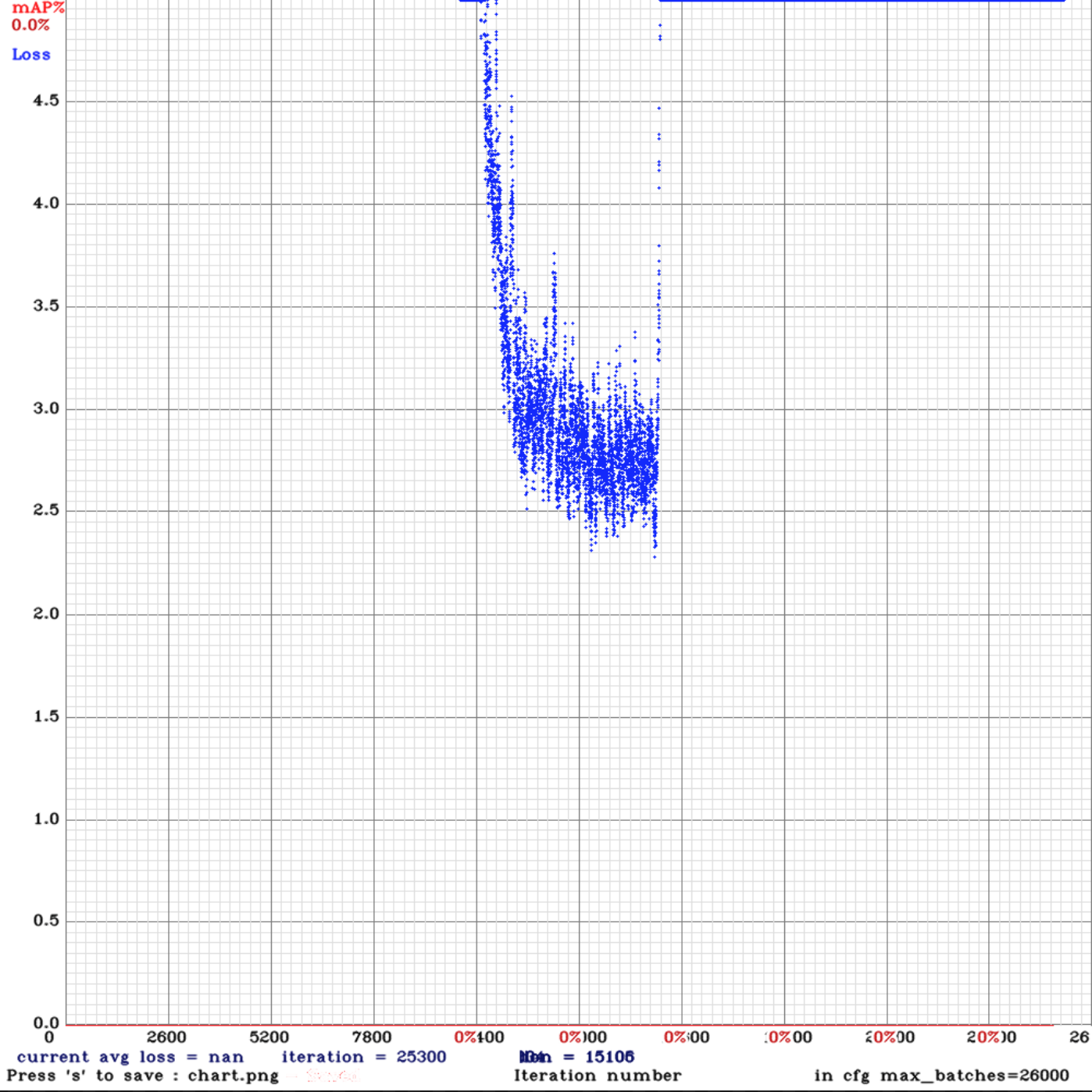

The problem is I got 0% mAP and the loss is getting nan values after ~15000 iterations

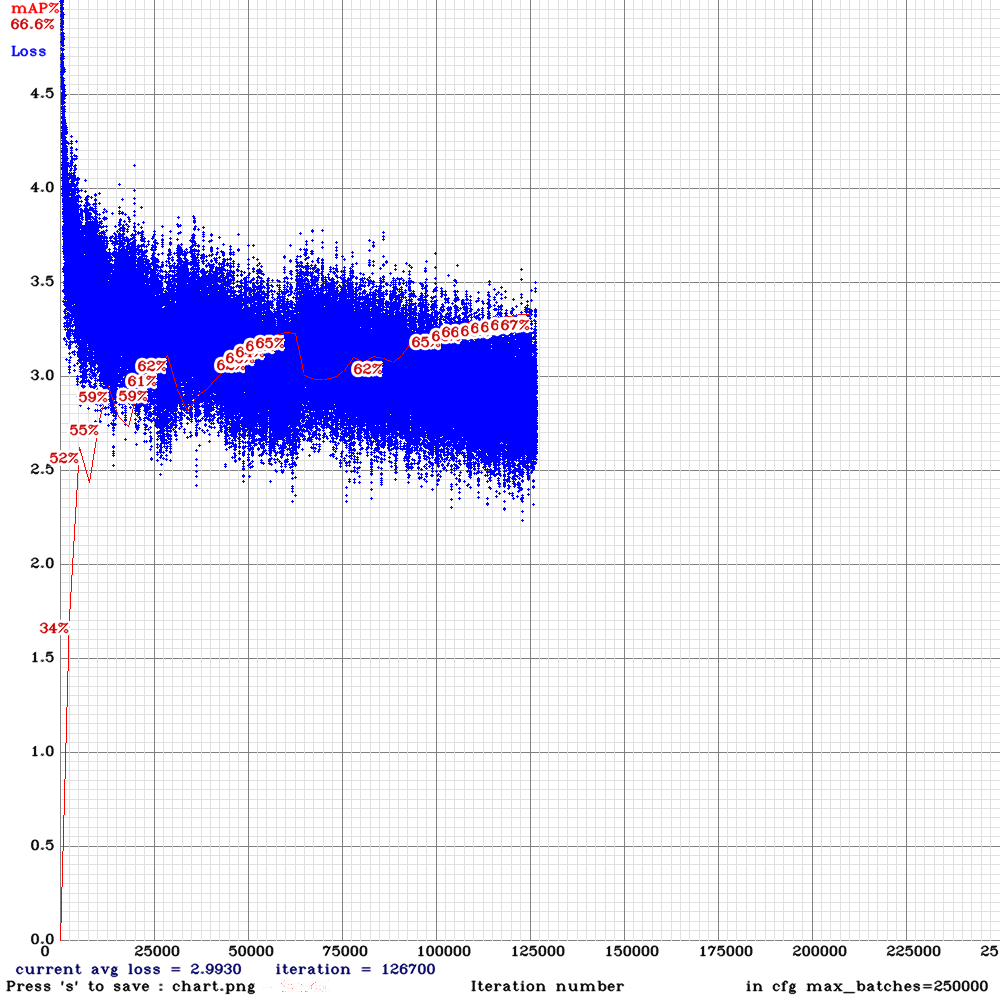

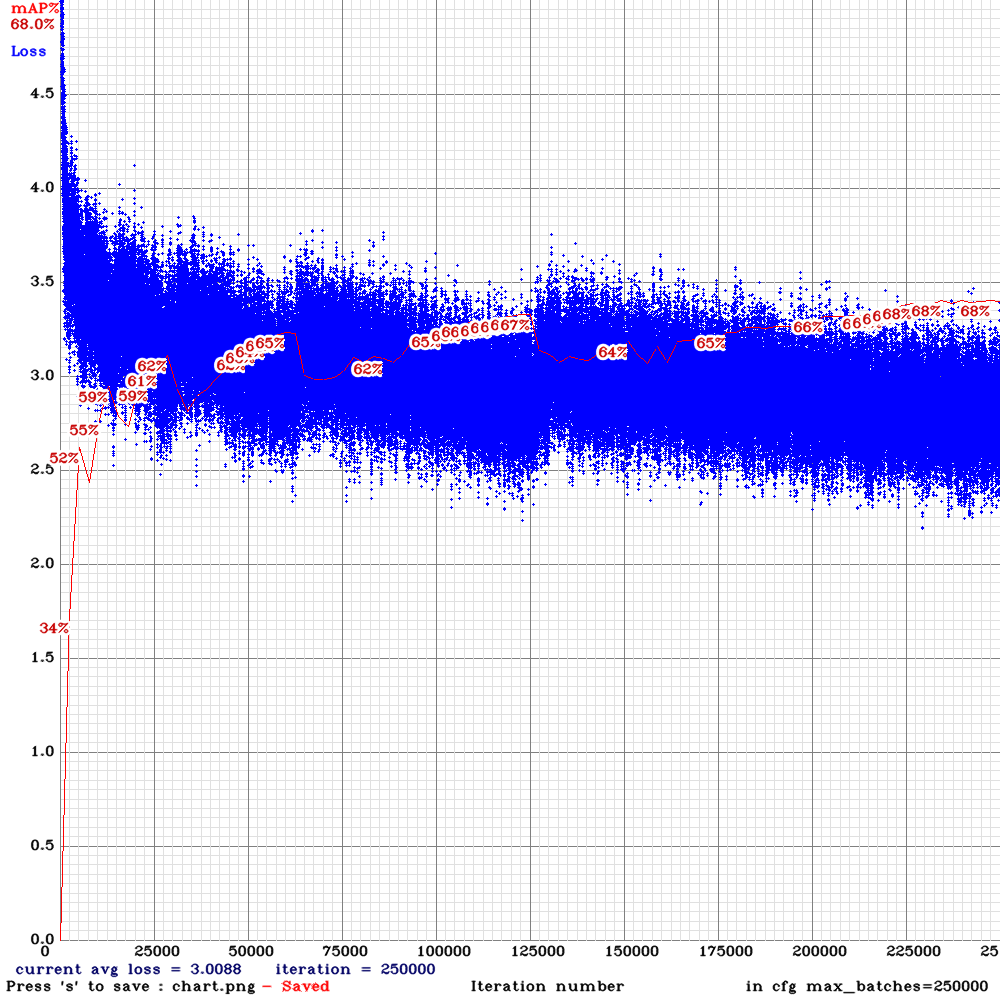

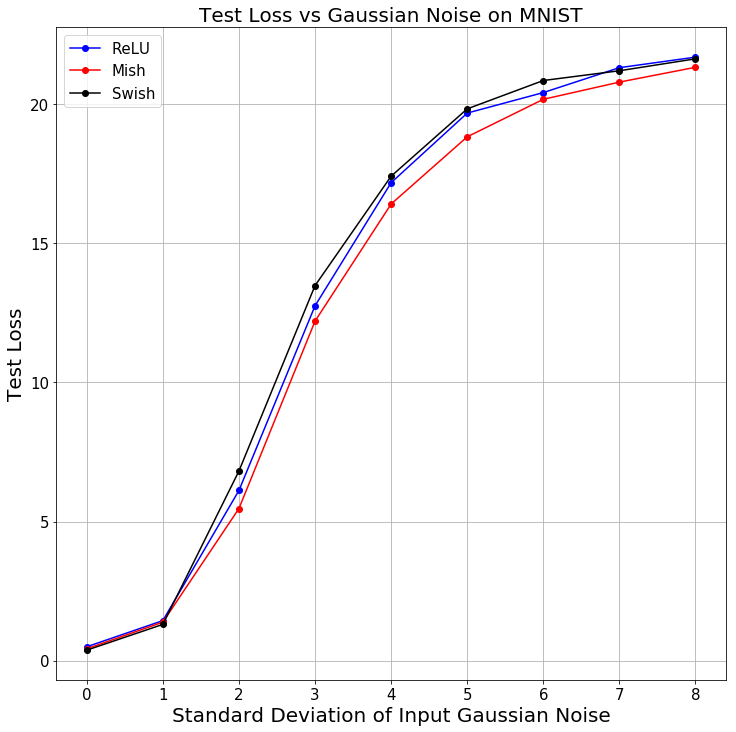

This is the mAP chart I got after several thousands of iterations:

This is the command I used for training:

./darknet detector train tldcl.data yolo_v3_tiny_pan_lstm.cfg yolo_v3_tiny_pan_lstm_last.weights -dont_show -mjpeg_port 8090 -map

Keeping in mind that the testing and training sets are correct as they are working using the normal yolov3.cfg file and are achieving 60+% mAP.

The training set consists of 12 video sequences averaging 442 frames for each. (Bosch Small Traffic Light Dataset)

Do you know what could possibly cause this?

@YouthamJoseph Hi,

What pre-trained weights did you use initially, is it yolov3-tiny.conv.14? As described here: https://github.com/AlexeyAB/darknet/issues/3114#issuecomment-494154586

Try to set state_constrain=16 for each [conv_lstm] layer, set sequential_subdivisions=8, set sgdr_cycle=10000 and start training from the begining.

No, I realized that I should've used this.

Thank you for pointing out what I have been missing.

Do you have a full version of this yolo_v3_tiny_pan_lstm.cfg yet?

Accordingly this issues https://github.com/AlexeyAB/darknet/issues/3426 I trained new model using yolo_v3_spp_pan.cfg with darknet53.conv.74.

After training I got 71%mAP and compare results with my standart yolov3.cfg with 59%mAP. And result with spp_pan is worse then standart yolo3 config. The dataset is the same.

For exemple

count = 68 objects at

and 31 objects at

@Lepiloff

After training I got 71%mAP and compare results with my standart yolov3.cfg with 59%mAP. And result with spp_pan is worse then standart yolo3 config.

"result with spp_pan is worse then standart yolo3" - what do you mean? As I see spp_pan gives better mAP than standard yolo3.

It seems that your training dataset is very different from test-set. Try to run detection with flag -thresh 0.1

I mean what count of objects lower despite highest mAp. If dataset the same, shouldn't there be better results with 71%? I tried to do with trash = 0.1 and of course the results got better.

It seems that your training dataset is very different from test-set

Now I think it is so. To improve accuracy, I will add new images

@Lepiloff Did u use the test dataset during mAP calculation? Or same for training and test? (valid = train.txt)?

@Lepiloff

If dataset the same, shouldn't there be better results with 71%?

You got better result with mAP=71%. (better ratio of TP, FP and FN).

Now you just should select optimal TP and FP by changing -thresh 0.2 ... 0.05

Accordingly this issues #3426 I trained new model using

yolo_v3_spp_pan.cfgwithdarknet53.conv.74.

After training I got 71%mAP and compare results with my standart yolov3.cfg with 59%mAP. And result with spp_pan is worse then standart yolo3 config. The dataset is the same.

For exemple

count = 68 objects at

and 31 objects at

Rotation will drastically improve accuracy in this particular context.

I suggest we train and test on voc, kitti or mscoco datasets.

Hi, I have some results to share. I trained four different networks on the same very large, challenging, self-driving car dataset. The dataset was made up of 9:16 images with 8 classes: person, car, truck, traffic light, cyclist/motorcyclist, van, bus, dontcare. I trained using mixed precision on a 2080Ti.

I used the .cfg files as provided above - the only edits I made were to recalculate anchors and filters and to set width=800 height=448.

Yolo-v3-spp

Yolo-v3-spp (with mixup=1)

Yolo-v3-tiny-pan-mixup

yolov3-pan-scale

I salute @AlexeyAB experimentation and look forward to trying new versions of the experimental architectures - unfortunately in my use-case, none of them were an improvement over the baseline yolov3-spp

For some reason - Loss never gets down below 6 in any of my trainings - I think it might be because the dataset is quite challenging - many obfuscated instances and very poorly illuminated images. Would be interested if anybody has any thoughts on this?

@LukeAI Thanks!

- Can you share all your cfg-files?

- Did you use KITTI-dataset?

For this particular experiment, I used the Berkley Deep Drive Dataset but merged three or four of their classes into 'dontcare'

yolo_v3_spp.cfg.txt

yolo_v3_spp_pan_scale.cfg.txt

yolo_v3_tiny_pan_mixup.cfg.txt

Do you have any suggestions of config options that may improve AP? The objects will always be the same way up - no upside down trucks etc. and they exist in a great diversity of lighting conditions - bright sun and night time with artificial lighting. Also a fairly high diversity of scales - people will be in the distance and also very close.

@LukeAI

I think you should use default anchors in all models.

On the one hand, anchors should be placed on correspond layers.

On the other hand, there should be enough number of anchors for each layer, not less than initially.

Also should be calculated statistic, how many objects are of each size, and how close objects (with this size) are to each other to decide how many anchors are required in each layer. Since this algorithms aren't implemented, its better to use default anchors.

Also for correct comparison of model, I think should be used the same batch and subdivisions. Only if you want just to get higher mAP rather than comparison of models, and don't have enough GPU-VRAM for low subdivisions for some models, then you can use different subdivisions.

@AlexeyAB Hi

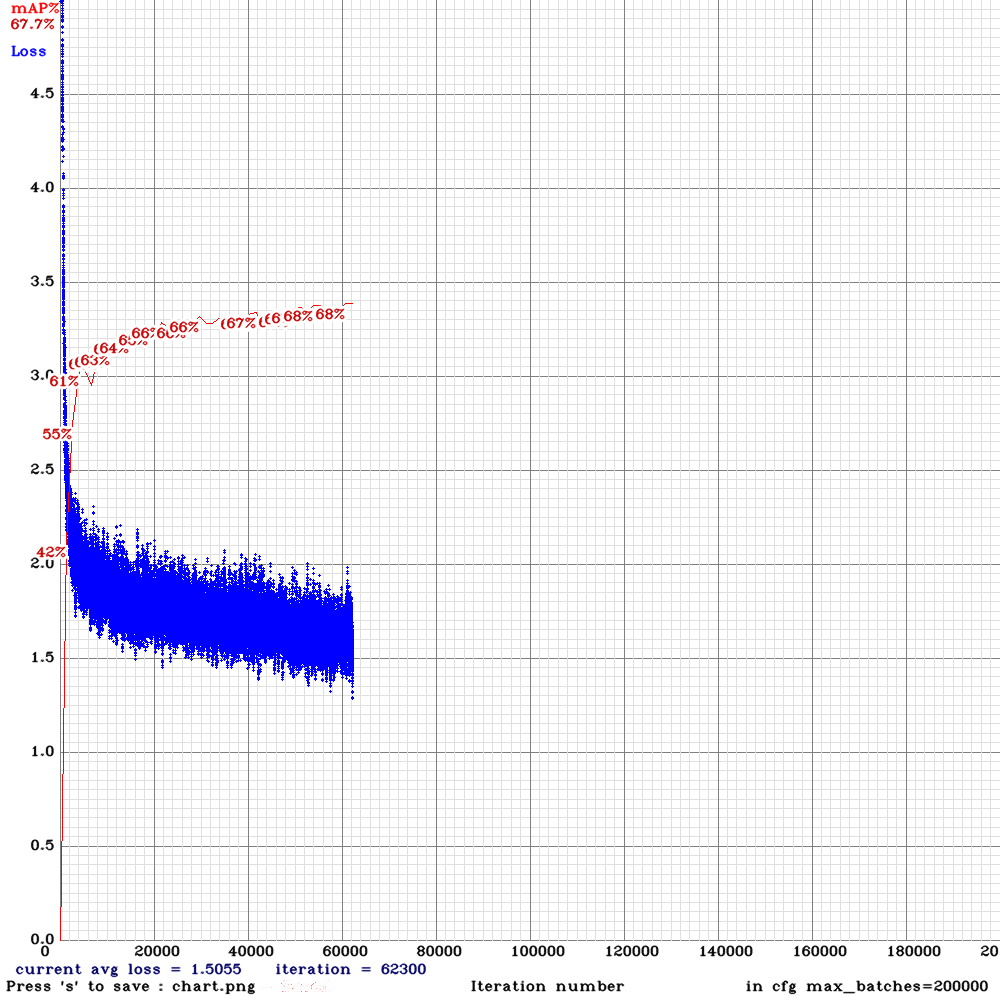

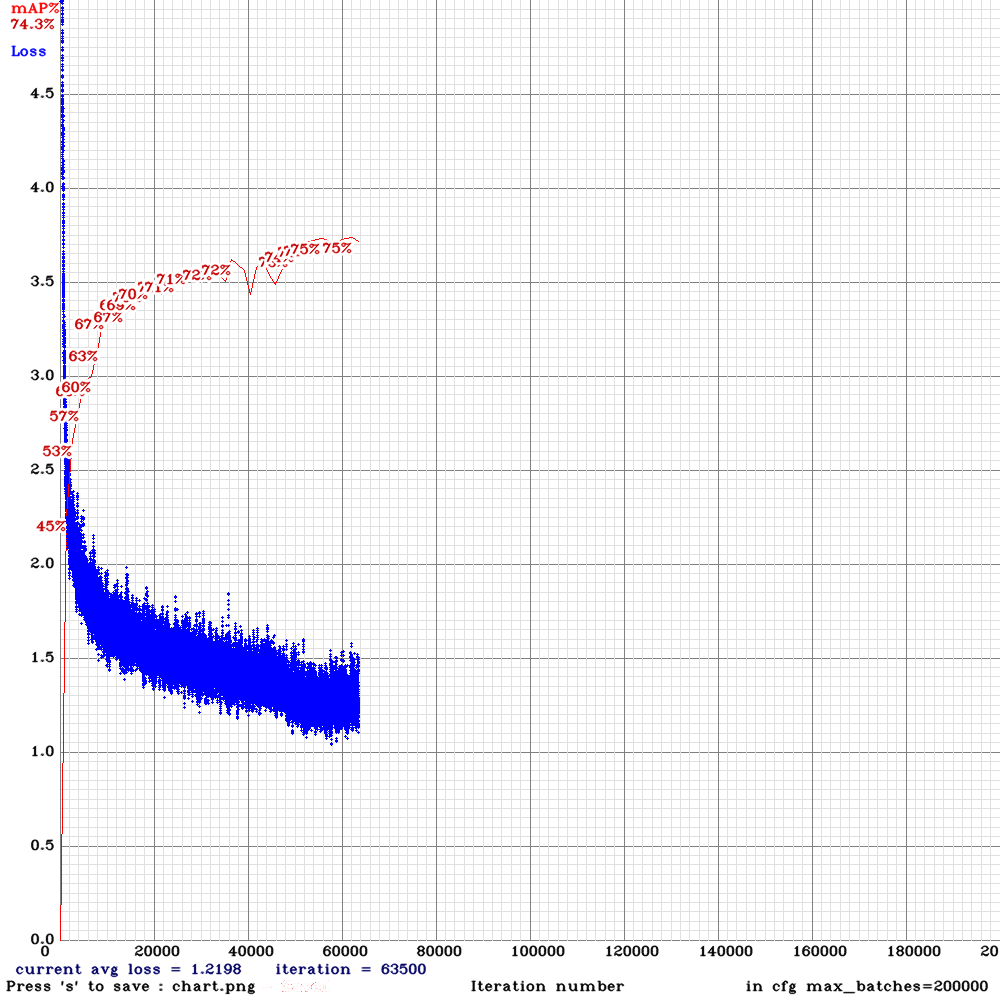

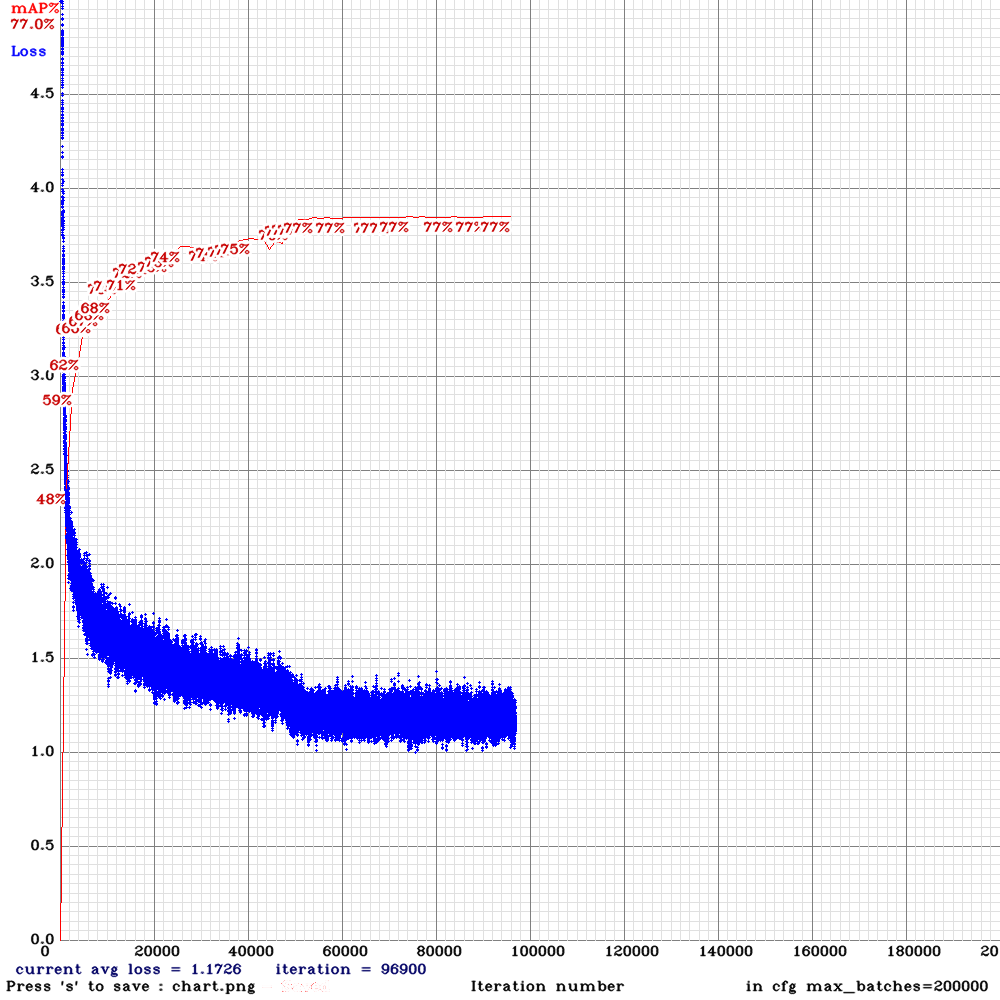

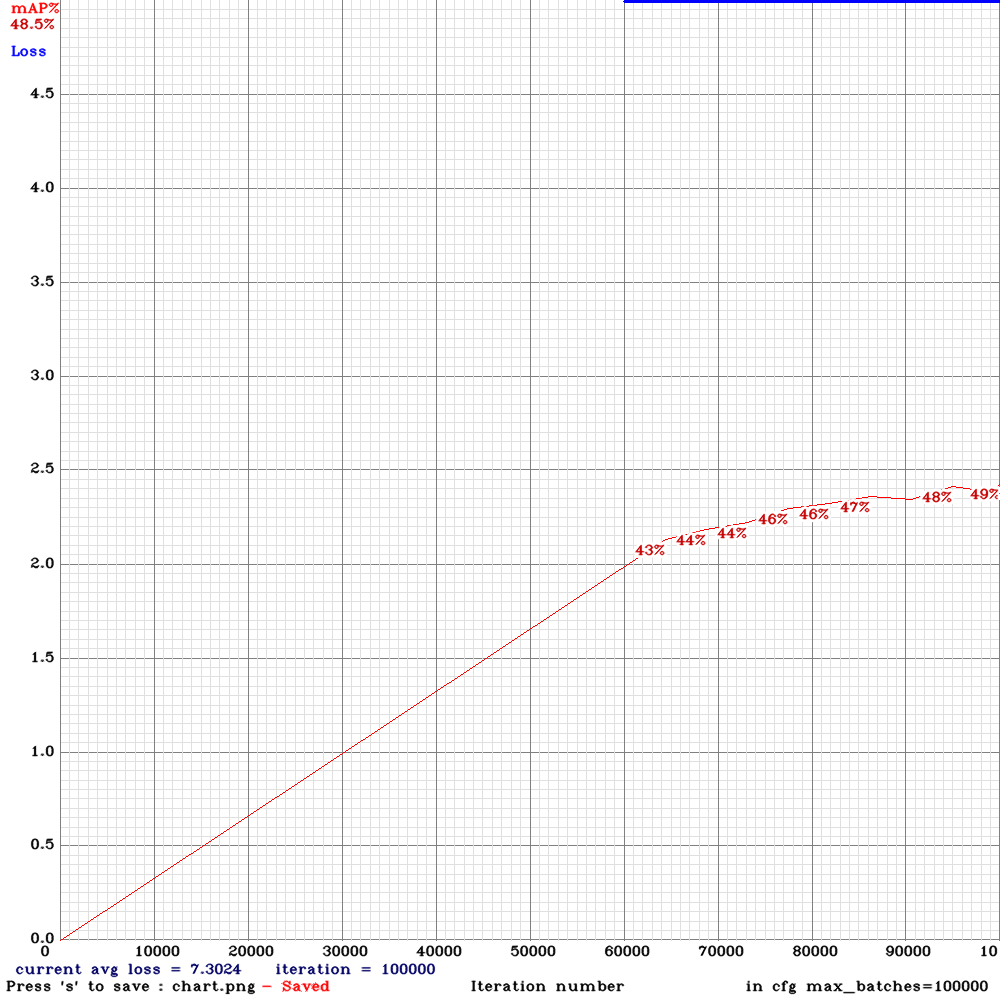

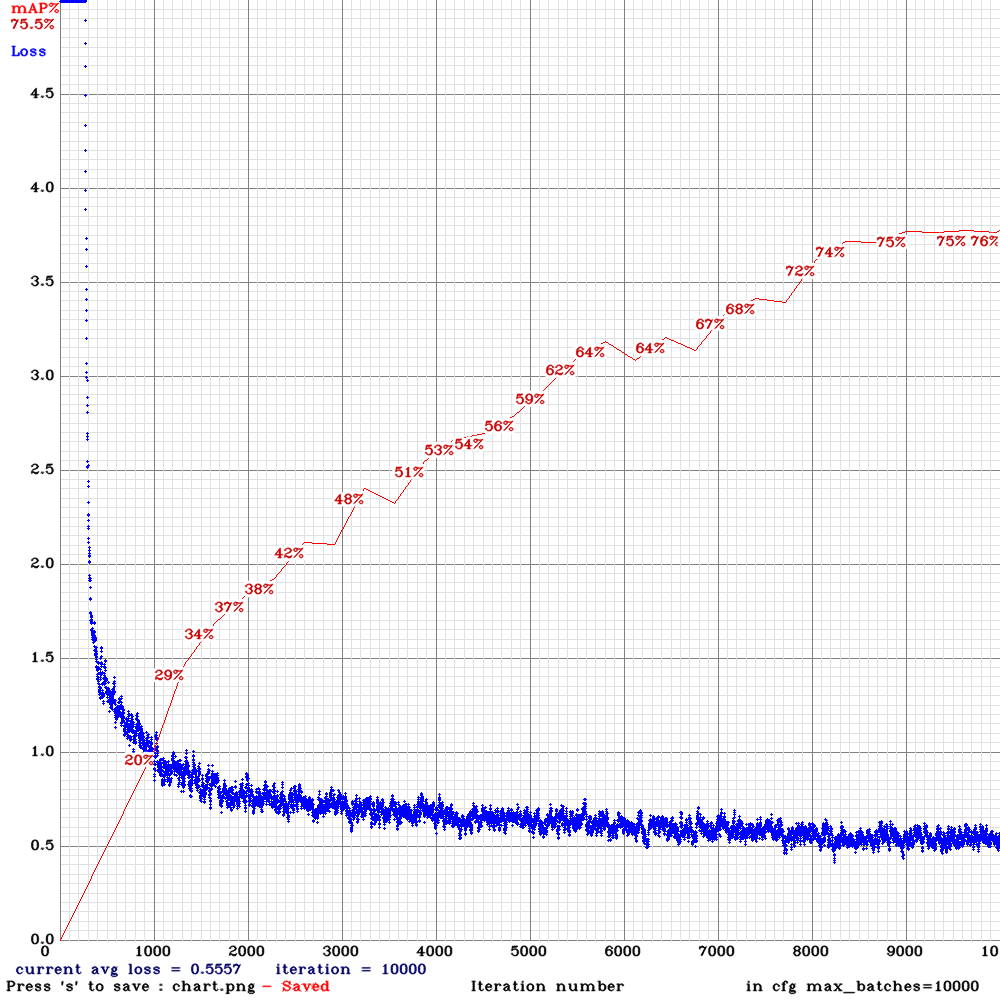

My final results:

spp on 2070 with CUDNN_HALF

spp-pan on 1080ti

spp on 1080ti

It seems like low mAP on spp was because of CUDNN_HALF right? not subdivision?

Since spp-pan does not give higher mAP in my case, i going to run spp with subdivision=4 on azure p100 with 16gb memory

@LukeAI

It seems like low mAP on spp was because of CUDNN_HALF right? not subdivision?

It seems - yes.

Since spp-pan does not give higher mAP in my case, i going to run spp with subdivision=4 on azure p100 with 16gb memory

Do you want to increase mAP of SPP model more? To get mAP higher than 75% with SPP.

So may be I will create maxpool-depth layer for PAN.

Do you want to increase mAP of SPP model more? To get mAP higher than 75% with SPP.

yes, i hope(with subdivision=4 and random=0, 416x416)

So may be I will create maxpool-depth layer for PAN.

That would be great. i would run it on the same machine and share results.

@AlexeyAB

It seems like low mAP on spp was because of CUDNN_HALF right? not subdivision?

It seems - yes.

Does it also affect inference accuracy? Or just training?

@dreambit Only training.

Would I get better results if I trained without CUDNN_HALF but still ran inference with CUDNN_HALF for the speed?

I'll try yolov3-spp again with the default anchors - will they likely still perform better even though I am using 9:16 images?

@LukeAI

Would I get better results if I trained without CUDNN_HALF but still ran inference with CUDNN_HALF for the speed?

Yes.

In the last commit I temporary disabled CUDNN_HALF for training, so you can just download the latest version of darknet.

I'll try yolov3-spp again with the default anchors - will they likely still perform better even though I am using 9:16 images?

May be yes.

Does anyone know if there is a way to get the track_id of each detected object from the lstm layers ?

Just looked into the json stream and it seems not to be there...

just to report back - I did indeed get better results with the original anchors, even though i was using 9:16 - but there must be some better way to calculate optimal anchors for 9:16 images?

@NickiBD @LukeAI @dreambit @i-chaochen @passion3394

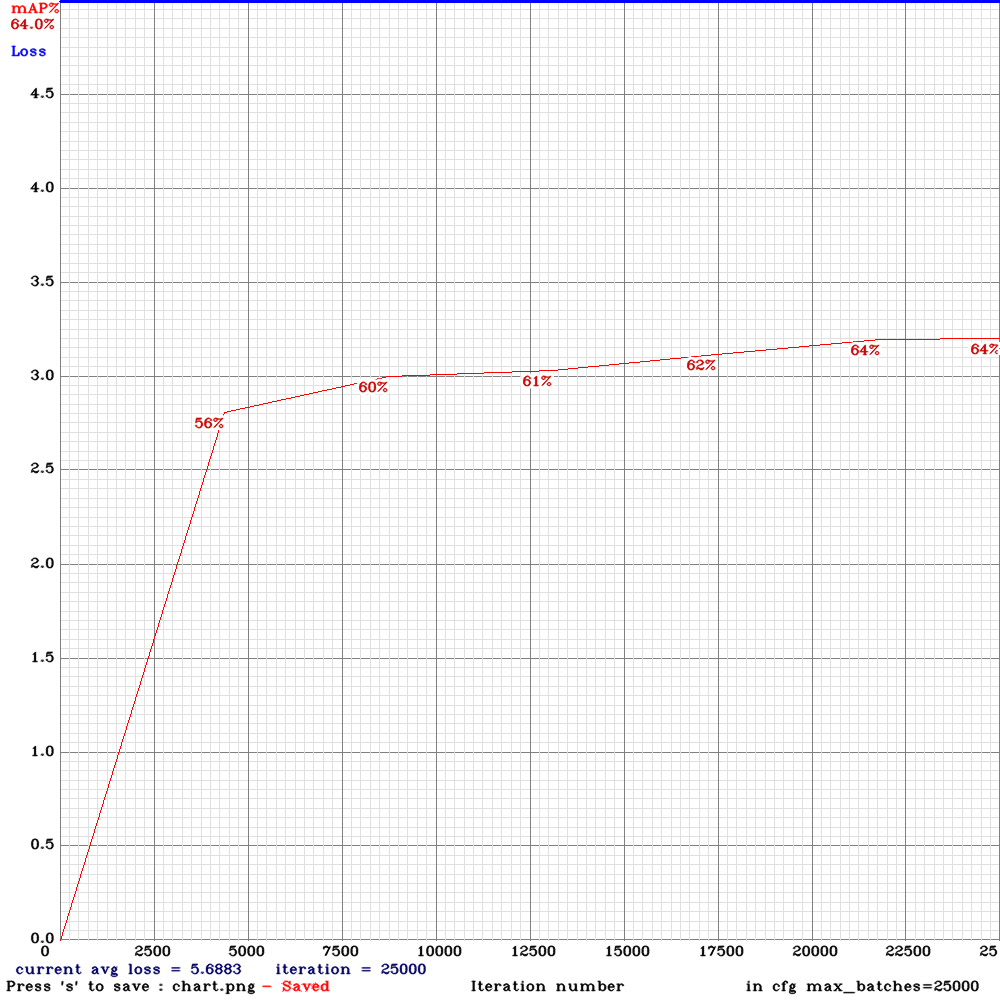

I implemented PAN2 and added yolo_v3_tiny_pan2.cfg.txt with PAN-block that is much more similar to original PAN network. It may be faster and more accurate.

@AlexeyAB

Do you want to increase mAP of SPP model more? To get mAP higher than 75% with SPP.

Results:

spp with subdivision = 32, random=1

spp with subdivision = 4, random=0

For reference, yolov3_spp.png

yolo_v3_tiny_pan2

yolo_v3_tiny_pan2.cfg.txt

@LukeAI Yes, yolov3_spp should be more accurate than yolo_v3_tiny_pan2.cfg.txt, since yolo_v3_tiny_pan2.cfg.txt is a tiny model.

You should compare yolo_v3_tiny_pan2.cfg.txt with yolo_v3_tiny_pan.cfg.txt or yolov3-tiny_3l.

Yes I realise that! The other models trained with comparable config are earlier in this thread although those were trained with mixed precision

@LukeAI

So as I see new PAN2 yolo_v3_tiny_pan2.cfg.txt (46% mAP) more accurate than old PAN Yolo-v3-tiny-pan-mixup (41% mAP): https://github.com/AlexeyAB/darknet/issues/3114#issuecomment-504367455

@AlexeyAB Hi ,

As you mentioned, yolo_v3_tiny_pan2.cfg.txt is (~88% mAP ) with my data set which is more accurate than yolo_v3_tiny_pan1.cfg.txt (~78%) and the training is faster.

I implemented PAN2 and added yolo_v3_tiny_pan2.cfg.txt with PAN-block that is much more similar to original PAN network. It may be faster and more accurate.

@AlexeyAB Thanks.

Do you have a config file for not tiny model? (like spp + pan2)

@dreambit Not yet, but I will add when I will have a time.

@AlexeyAB

How to train with yolo_v3_spp_pan_scale.cfg, Use which .conv file ?

Thanks!

@AlexeyAB If you have time, would you consider releasing a yolo_v3_tiny_pan2_lstm model? Both your results and mine show superiority in both accuracy and inference time of the pan2 model over pan1.

Hi, in my case i modified original yolo_v3_spp_pan_scale.cfg decreasing saturation to 0.5 (from 1.0), changed learning rate to 0.0001 (from 0.001) and switching to SGDR policy and i managed to go from ~70% to ~93% in small objects detection without overfitting.

Just letting this here in case someone want to test it.

@AlexeyAB

Can you please help and explain the following terms in layman language:

SPP

PAN

PAN2

LSTM

Mixup

Thanks!

@AlexeyAB

Can you please help and explain the following terms in layman language:SPP

PAN

PAN2

LSTM

MixupThanks!

Dude, have you read this?

https://github.com/AlexeyAB/darknet/issues/3114#issuecomment-494148968

Hi, in my case i modified original yolo_v3_spp_pan_scale.cfg decreasing saturation to 0.5 (from 1.0), changed learning rate to 0.0001 (from 0.001) and switching to SGDR policy and i managed to go from ~70% to ~93% in small objects detection without overfitting.

Just letting this here in case someone want to test it.

Please could you upload your .cfg ? I'd like to try :)

What dataset was it?

Hi, in my case i modified original yolo_v3_spp_pan_scale.cfg decreasing saturation to 0.5 (from 1.0), changed learning rate to 0.0001 (from 0.001) and switching to SGDR policy and i managed to go from ~70% to ~93% in small objects detection without overfitting.

Just letting this here in case someone want to test it.Please could you upload your .cfg ? I'd like to try :)

What dataset was it?

Sure, there it is. https://pastebin.com/5g2gGjwx

It was tested in my own custom dataset, focused on detecting small objects (smaller than 32x32 in many cases).

@keko950 What initial weights file did you use for the training ? Thanks !

@keko950 What initial weights file did you use for the training ? Thanks !

Hi, i used darknet53.conv.74

Also, if you are going to try this, you can try to start your train with a higher learning rate and every 10k iter lower it a bit, like 5x or 10x times, in some cases, it helps.

@keko950 What initial weights file did you use for the training ? Thanks !

Hi, i used darknet53.conv.74

Also, if you are going to try this, you can try to start your train with a higher learning rate and every 10k iter lower it a bit, like 5x or 10x times, in some cases, it helps.

Thanks @keko950 . Could it be that you got the indices of the newly created anchors wrong in your cfg ?The third yolo layer should be the last one in your cfg file and not vice versa. This is how the vanilla cfg files are doing it imho...

What do you think ?

Best Alexander

@keko950 Sorry forget what I wrote. Apparently the order was changed in the new cfg files...

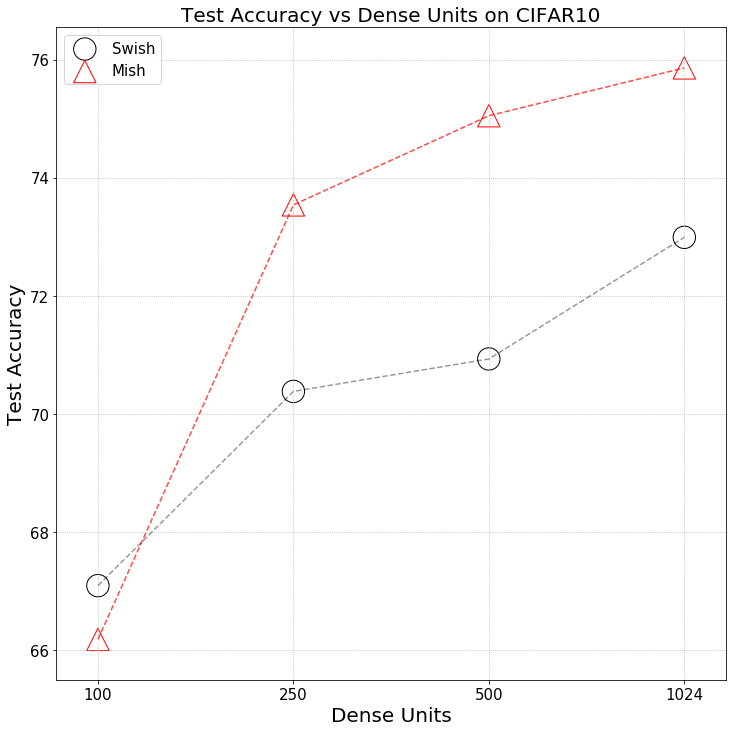

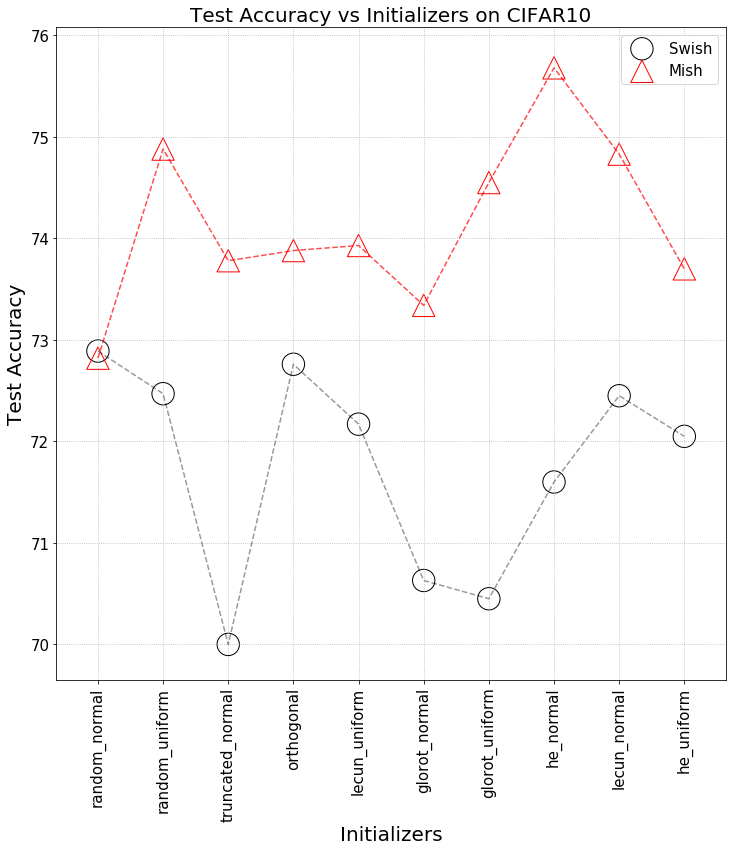

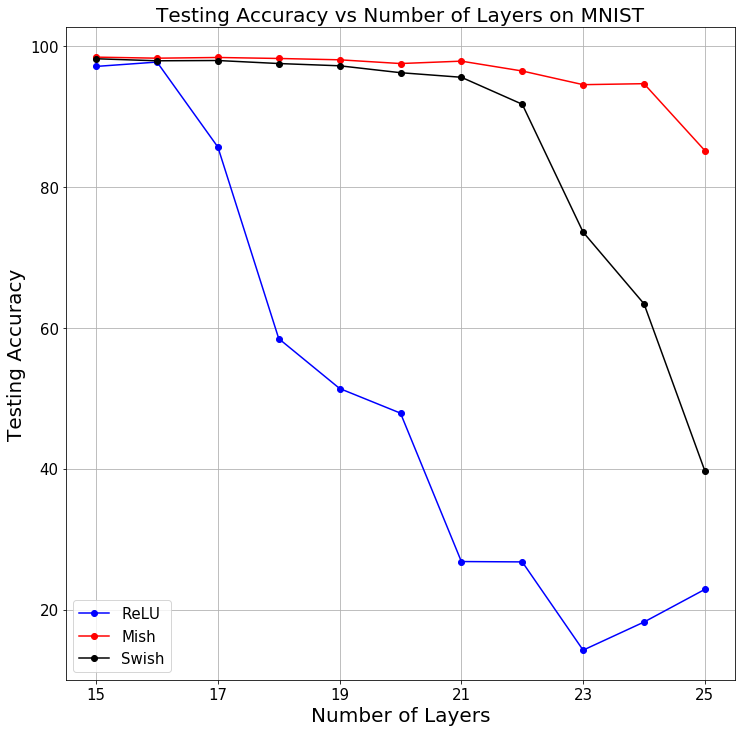

May be of interest:

tiny-yolo-3l

tiny-pan2

tiny-pan2-swish (same as tiny-pan2 but replaced all leaky relu with swish - had to train for a lot longer)

@AlexeyAB

I don't want to discourage anyone here but the accuracy is still directly proportional to the number BFlops. We should focus on Pruning, it is a better return on the time spend.

@LukeAI

Can you add a yolov3_tiny_mixup chart as well ..? I think it will increase accuracy without increasing the number of BFlops.

@jamessmith90 I have tried a few runs with mixup=1 and found that it hurt accuracy in all cases. see some results here: https://github.com/AlexeyAB/darknet/issues/3272

Do you have any results or citations on pruning? It's interesting but I thought that it was more relevant to architectures with lots of fully connected layers and less so with fully convolutional networks.

pan2-swish looks like an interesting point in the quality/FPS trade-off. I want to try pretraining on imagenet with swish activations and then retraining tiny-pan2-swish from that. Think it'd probably break through 50% with an inference time of just 7ms.

@LukeAI

Does adding swish activation to yolov3_tiny model makes it slow ?

@AlexeyAB

If the end goal here is to do introduce more efficient models then yolov3_tiny_mobilenetV2 and yolov3_tiny_shufflenetV2 should also be added.

But pruning should get higher priority.

@jamessmith90

Pruning does not always give performance gains on the GPU - since sparse-GEMM sparse-dense is slower than GEMM dense-dense: https://github.com/tensorflow/tensorflow/issues/5193#issuecomment-350989310

So there is required block-pruning: https://openai.com/blog/block-sparse-gpu-kernels/ implementation on TensorFlow: https://github.com/openai/blocksparse (Theory https://openreview.net/pdf?id=rJl-b3RcF7 ) but may be its much better to use XNOR-nets than Pruning or Depthwise?

There is suggestion https://github.com/AlexeyAB/darknet/issues/3568 but there is very few detailts and it is tested only on HAND-dataset http://www.robots.ox.ac.uk/~vgg/data/hands/downloads/hand_dataset.tar.gz

As you can see, there are several models with higher mAP and lower BFLOPS and inference time, than in yolov3 / tiny models, so mAP isn't proportional to BFLOPS: https://github.com/AlexeyAB/darknet/issues/3114#issuecomment-494148968

Even BFLOPS and inference time are not always proportional.

There are implemented EfficientNet B0-B7 models with the best ratio Accuracy/BFLOPS, which better than any existing models (MobilenetV2/v3, ShufflenetV2...) at the moment: https://github.com/AlexeyAB/darknet/issues/3380

- These models are optimal for Smartphones and TPUs ($1M)

- But all these models EfficientNet/Mobilenet/Shufflenet are not with the best ratio Accuracy/Inference_time on GPU, because depth-wise convolutional is slow on cuDNN/GPU.

@AlexeyAB

Can we add gpu memory and cuda cores usage for a fixed resolution of a particular GPU for comparison ?

Checkout the faster implementation from tensorflow:

https://github.com/tensorflow/tensorflow/blob/master/tensorflow/core/kernels/depthwise_conv_op_gpu.h

@AlexeyAB

Why anchor indeces are inputted other way around in yolo_v3_spp_pan_scale.cfg? What I mean is in yolo_v3_spp_pan_scale.cfg first yolo layer takes anchors less than 30x30, second less than 60x60 and the third one takes the rest.

Also, I calculated anchors on my dataset, but there is a big imbalance (there is only 1 anchor greater than 60x60). Is it a good idea to:

-slightly modify generated anchors manually to fix the imbalance?

-calculate anchors for larger width and hight than the actual width and height used in cfg file to avoid imbalance?

Or would you suggest to stick with original anchor values?

Thanks.

@AlexeyAB

Please add FPS in the table.

@suman-19 FPS = 1000 / inference_time, there is already inference_time

@AlexeyAB

I am looking for the maximum FPS metric that a model can achieve in terms of handling CCTV camera streams. As per my tests i was able to manage 7 streams of yolov3-tiny on a 1080 ti running at 25 fps.

A metric like that would be very good to measure scalability.

Keep up the good work. Your doing an amazing job.

@AlexeyAB , Hi

Have few questions on yolo-lstm if you could help answer -

- Is there any latency metric tracked as well for yolov3 (with and without lstm) i.e., the time taken for the network to infer on the given image

- How does the lstm network inside yolo know which is the start frame of sequence and the end frame of sequence if train.txt contains all sequences which are approximately 200? Do we need to specify the number of frames in each sequence for the lstm to be read from the train.txt? It cannot be approximate 200 frames...right?

Thanks!

@kmsravindra Hi,

https://github.com/AlexeyAB/darknet/issues/3114#issuecomment-494148968

yolo_v3_spp.cfg.txt - 23.5 ms

yolo_v3_spp_lstm.cfg.txt - 26.0 ms

Initially Yolo doesn't know where is start and end of sequence. But Yolo learns to know it during training - if the image changes almost completely and all objects disappear, then this is the beginning of a new sequence.

Yolo studies it automatically.

Thanks @AlexeyAB.

Initially Yolo doesn't know where is start and end of sequence. But Yolo learns to know it during training - if the image changes almost completely and all objects disappear, then this is the beginning of a new sequence.

Regarding the above comment, then I am wondering, perhaps is it preferred to have sequences that look as much different as possible from each other so that the network could possibly deliver good results...

Assuming we can also include "no object" frames in the same sequence with blank annotation txt files as before. For example when I have a moving camera, the camera might move out and come back in the same sequence (when this happens the objects in the scene temporarily go out of view for a brief while) ...Will that be ok to include such frames in the sequence?

Also I have some frames in the sequences where the camera motion might cause big motion blur on the image ( with no object being visible for couple of frames in the sequence). Will it be ok to include such frames as well in the sequence as no-object frames with blank annotation txt files?

So by including no object frames, I am assuming the yolo_lstm network would be able to learn to ignore images where the frames contain no objects ? OR is yolo_lstm purely used for object tracking where the object has to be continuously be visible (or occluded) in all the frames of the sequence?

Thanks!

@kmsravindra

It is not necessary. In any cases Yolo will understand where is end and start of sequence, I think.

Do you want to have 2 sequences one with objects and another without? Yes, I think it is a good idea.

Mark objects as you want to detect them. Usually I mark even very blurred objects.

You can use yolo_lstm in any cases as you want. Yolo_lstm will understand what do you want. I usually mark blurred and occluded objects.

@AlexeyAB

I have trained tiny-yolov3 network on lots of uav images. How can i do transfer learning or fine tuning with that weights on yolo_v3_tiny_pan_lstm.cfg.txt?

Another question is i have tried what you say above for training this network and got so many nans on regions 53 and 56.But region 46 usually fine ,what should i do? Can you help me?

@brkyc3 Do

./darknet partial cfg/yolov3-tiny.cfg yolov3-tiny.weights yolov3-tiny.conv.14 14

and use yolov3-tiny.conv.14

Another question is i have tried what you say above for training this network and got so many

nans on regions 53 and 56.But region 46 usually fine ,what should i do? Can you help me?

Usually this is normal. You shouldn't do anything.

It means that many objects do not correspond to these yolo layers.

Hi @AlexeyAB,

Thank you for this great work. I trained yolo_v3_spp_lstm on video frames containing only humans and terrain areas. I stuck with the 80/20 ratio for training and obtained mAP 94%, which is good enough. But when I try to use ./darknet detector demo command on another 2 mins video, it processes the video in 2 seconds. How can I force the model to run on each frame of the video? Shortly, I'd like the demo command to output a two-minute video with predictions.

TIA,

@enesozi Hi,

Run ./darknet detector demo ... -out_filename res.avi

then open res.avi file in any mdeiaplayer.

Or use for detection from video Camera.

I can already save the output video. The problem is that output video duration is 2 seconds while the input video duration is 2 minutes.

Can the LSTM be trained on static images as well as sequences? For example, can you train on COCO alongside your own sequences for more data? Or should we only train on sequences?

@rybkaa I didn't try it. You can try.

@AlexeyAB Is it possible to train static images on a non-LSTM model then initialize the non-LSTM weights on the LSTM model to then train the LSTM on video sequences?

@AlexeyAB , Hi

What is the difference between the reorg3d layer in pan and the reorg layer in yolov2. Is there a mixup operation that can be directly enabled in the old yolov3-spp, by adding mixup=1?

@ou525

reorg layer in yolov2 has a logical bug, reorg3d is a correct layer.

You can use mixup=1 in the [net] section in any detector.

@AlexeyAB, can you provide a detailed description of the reorg3d layer?

@AlexeyAB Hi ,

There are maxpool_depth= 1 and out_channels= 64 in YOLOtiny-PAN v2 configuration in [maxpool] header . I was wondering whether there are any equivalents in keras (Tensorflow) as I want to convert yolotiny-PAN to .h5 . I wanted to add them; however, I couldnot find anything useful. Do I need to implement them from scratch ? Could you please assist me with this as I really need to do this. I truly appreciate all your help .

Lyft recently released a massive sequential annotated frames dataset. Might be useful to anybody experimenting with LSTM or video detectors.

@NickiBD share it with us if you do so? :)

Lyft recently released a massive sequential annotated frames dataset. Might be useful to anybody experimenting with LSTM or video detectors.

Hi @LukeAI,

Did you use the Lyft dataset with Yolo-LSTM? It seems they used a specific data format. I couldn't find a way to associate annotations with jpeg files. scene.json file points to lidar data. Moreover, annotations contain 3D bounding boxes.

@enesozi no, I didn't try it myself - I guess you would have to do the projections yourself - plz share if you do so!

@AlexeyAB sorry for bothering you. The background: serval months ago, I use the yolov3-spp to train my custom dataset(about 39000 pics), and the mAP is about 96%, everything goes well. serval days ago, I try to use yolov3_pan_scale to train the same dataset, and the init model parameters are from the best yolov3-spp .weight file. But the training failed, I can post everything to help localize the problem. Please help, thanks.

I'm interested in using LSTM on high-end mobile devices, specifically the Jetson Xavier. From the article mentioned above, it says it achieves 70+ fps on a pixel 3. The Jetson Xavier is far better and I'm only able to achieve 26 fps. I am using yolo_v3_tiny_lstm.cfg on a very large sequence of 3 videos totaling 16.5K images.

Am I missing something here or is it that the new LSTM is still in its infancy and requires more work? Should it be expected to perform at 70+ fps?

@javier-box It is implemented only for increasing mAP. The light-branch part for increasing FPS isn't implemented yet.

@AlexeyAB

Got it. Looking forward to it!

Can't thank you enough for your contribution and hope to contribute to your effort as mine gets recognized.

I'm really looking forward to using the LSTM feature. I was just about to start trying to develop it myself, so it's great to see it being done by you.

I wanted to try it out by downloading a few of the cfg files and weights from the comment above. I am getting no objects detected at all, even when I set the threshold to 0.000001. An example of the command I am using is:

./darknet detector demo data/self_driving/self_driving.data data/LSTM/pan/yolo_v3_tiny_pan_lstm.cfg ~/Downloads/yolo_v3_tiny_pan_lstm_last.weights data/self_driving/self_driving_valid.avi -out_filename yolov3_pan.avi -thresh 0.000001

(I have modified the structure of the data folder a bit, and modified self_driving.data to match.)

Since you wrote "must be trained using frames from the video", I tried training the weights files, but darknet immediately saved a *_final.weights file and exited. That new weights file gave no detections as before. This is the command I used to try to train the network:

./darknet detector train data/self_driving/self_driving.data data/LSTM/pan2/yolo_v3_tiny_pan2.cfg.txt data/LSTM/pan2/yolov3-tiny_occlusion_track_last.weights

In case it makes a difference, I am testing things on a computer without a GPU. Once I am confident that everything is set up correctly, I will migrate it to a computer with a GPU.

I am still getting the expected results when I run ./darknet detector demo ... using cfg and weight files with no LSTM component.

I am using the latest version of the repo (4c315ea from Aug 9).

Besides that, I have a couple of quick questions

- why is the layer called ConvLSTM, rather than just LSTM? Is it because you use the convolution rather than the Hadamard product for the peephole connection?

- why do you limit yourself to

time_steps=3? If an object is occluded, it can easily be hidden for longer than three frames, so I would have thought that the LSTM should be trained to have a longer memory than that? If you really only want to cover 3 time_steps, you might get away with using a simpler RNN, since the gradient is unlikely to vanish.

@chrisrapson

Besides that, I have a couple of quick questions

- why is the layer called ConvLSTM, rather than just LSTM? Is it because you use the convolution rather than the Hadamard product for the peephole connection?

Because there are can be:

- LSTM that is based on Convolutional layers

- LSTM that is based on Fully-connected layers

- why do you limit yourself to

time_steps=3? If an object is occluded, it can easily be hidden for longer than three frames, so I would have thought that the LSTM should be trained to have a longer memory than that? If you really only want to cover 3 time_steps, you might get away with using a simpler RNN, since the gradient is unlikely to vanish.

Because usually you don't have enough GPU RAM to process more than 3 frames in one mini-batch.

You can try to increase this value.

@javier-box It is implemented only for increasing mAP. The light-branch part for increasing FPS isn't implemented yet.

Hello @AlexeyAB,

If I may ask, when do you foresee developing the light-branch part? Weeks aways? Months away? Year(s) aways?

Thanks.

I've now been able to test the yolo_v3_tiny_pan_lstm example on a GPU machine, and everything worked. I guess that means that the functionality is not yet available for CPU execution. I can see quite a few #ifdef GPU statements in the conv_lstm_layer.c file, so maybe it's something to do with one of them.

Sorry about my first question, I was getting confused between the terms ConvLSTM and Convolutional-LSTM, which are explained here: https://medium.com/neuronio/an-introduction-to-convlstm-55c9025563a7. I have also seen the "Convolutional-LSTM" referred to as a CNN-LSTM.

ConvLSTM is explained in the paper by Shi that you linked in the first post in this thread, so I should have read that first. Here's the link again: https://arxiv.org/abs/1506.04214v2

Now that I understand that, I think I also understand why the time_steps limitation depends on RAM.

It seems like the ConvLSTM layer takes a 4-D tensor as an input (time_steps, width, height, RGB_channels)? A ConvLSTM network doesn't remember its hidden state from earlier frames, it is passed earlier frames along with the current frame as additional input. Each new frame is evaluated from scratch. Did I get that right? Besides the RAM limitation, it seems like quite a lot of redundant input and computation? When you start evaluating the next frame (t+1), won't it have to repeat the convolution operations on frames (t, t-1, t-2...) which have already been evaluated? They don't appear to be stored anywhere. I assume that the benefit of evaluating each frame from scratch is that it simplifies the end-to-end training?

@chrisrapson

It seems like the ConvLSTM layer takes a 4-D tensor as an input (time_steps, width, height, RGB_channels)? A ConvLSTM network doesn't remember its hidden state from earlier frames, it is passed earlier frames along with the current frame as additional input.

It uses 5D tensor [mini_batch, time_steps, channels, height, width] for Training.

where mini_batch=batch/subdivisions

ConvLSTM remember its hidden state from earlier frames, but backpropagates only for time_steps frames for each mini_batch.

Training looks like

- froward:

t + 0*time_steps, t + 0*time_steps + 1, ... , t + 1*time_steps - backward:

t + 1*time_steps, t + 1*time_steps-1, ..., t + 0*time_steps - froward:

t + 1*time_steps, t + 1*time_steps + 1, ... , t + 2*time_steps - backward:

t + 2*time_steps, t + 2*time_steps-1, ..., t + 1*time_steps

...

I fixed some bugs for resnet152_trident.cfg.txt (TridentNet), and re-uploaded trained model, valid-video and chart.png.

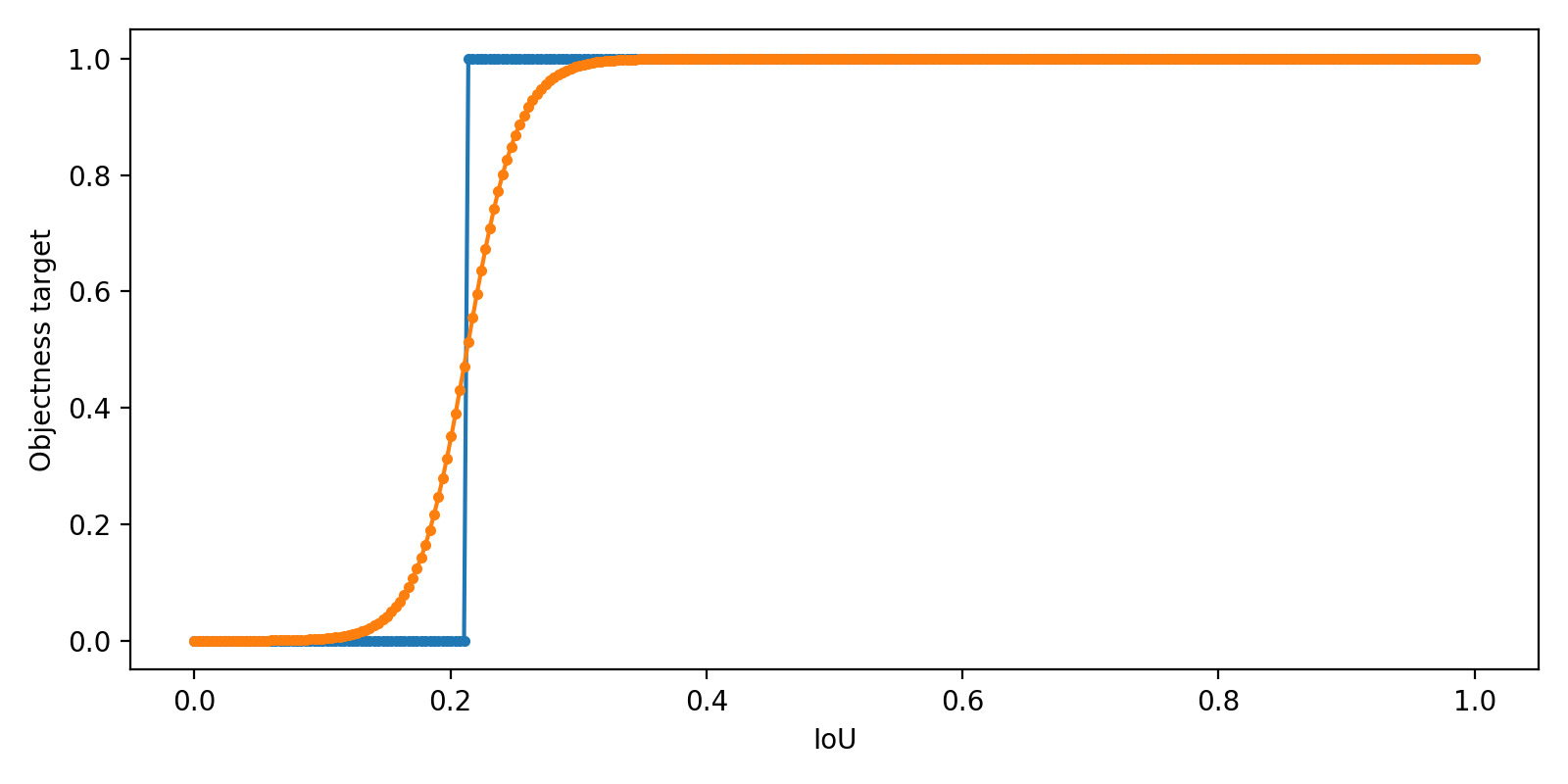

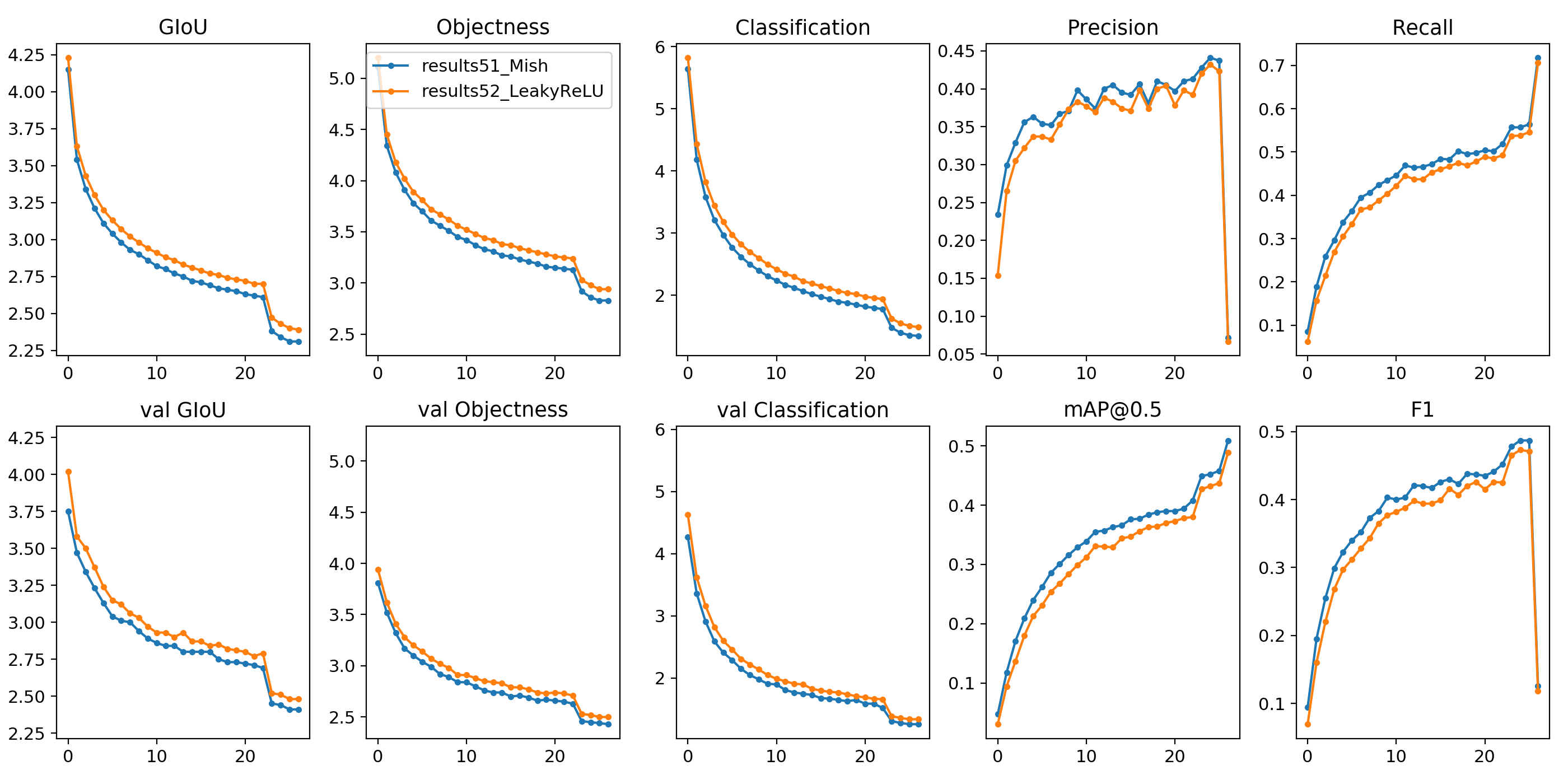

I added yolo_v3_tiny_pan3.cfg.txt model just with all possible features which I made recently: PAN3 (stride_x, stride_y), AntiAliasing, Assisted Excitation, scale_x_y, Mixup, GIoU, swish-activation, SGDR-lr-policy, ...

14 BFlops - 8.5 ms - 67.3% [email protected]

I didn't search the best solution and didn't search what features inceases the mAP and what decreases the mAP - just added everything to one pan. It seems it works with this small dataset.

I didn't add: CEM, SAM, Squeeze-and-excitation, Depth-wise-conv, TridentNet, EfficientNet .... etc blocks, which doesn't work fast on GPU.

If somebody want - you can try to train it on MS COCO to check the mAP.

Just train it as usual yolov3-tiny model:

./darknet detector train data/self_driving.data yolov3-tiny_occlusion_track.cfg yolov3-tiny.conv.15 -map

or

./darknet detector train data/self_driving.data yolov3-tiny_occlusion_track.cfg -map

- cfg: https://github.com/AlexeyAB/darknet/files/3580764/yolo_v3_tiny_pan3_aa_ae_mixup_scale_giou.cfg.txt

- weights: https://drive.google.com/open?id=1CVC-XfxbXVALIwy5QbjLZnO2M3I8tsm7

- video: https://drive.google.com/open?id=1QxG6Imnb1kYP9QbCjr-kDNv2bxFi0Zrz

training pan3 now... it's looking good so far - but when will we get fullsized pan3??

@LukeAI Do you mean not Tiny yolo_v3_spp3_pan3.cfg.txt with (PAN3, AntiAliasing, Assisted Excitation, scale_x_y, Mixup, GIoU)?

I think in a week.

Amazing work! Thanks for sharing.

@AlexeyAB yes, exactly. I'll try it in whatever form you release it, although I may also try without mixup, GIoU and SGDR as these have generally lead to slightly worse performance in experiments I have run.

I added

yolo_v3_tiny_pan3.cfg.txtmodel just with all possible features which I made recently: PAN3 (stride_x, stride_y), AntiAliasing, Assisted Excitation, scale_x_y, Mixup, GIoU, swish-activation, SGDR-lr-policy, ...14 BFlops - 8.5 ms - 67.3% [email protected]

- I didn't search the best solution and didn't search what features inceases the mAP and what decreases the mAP - just added everything to one pan. It seems it works with this small dataset.

- I didn't add: CEM, SAM, Squeeze-and-excitation, Depth-wise-conv, TridentNet, EfficientNet .... etc blocks, which doesn't work fast on GPU.

- If somebody want - you can try to train it on MS COCO to check the mAP.

Just train it as usual yolov3-tiny model:

./darknet detector train data/self_driving.data yolov3-tiny_occlusion_track.cfg yolov3-tiny.conv.15 -mapor

./darknet detector train data/self_driving.data yolov3-tiny_occlusion_track.cfg -map

- cfg: https://github.com/AlexeyAB/darknet/files/3580764/yolo_v3_tiny_pan3_aa_ae_mixup_scale_giou.cfg.txt

- weights: https://drive.google.com/open?id=1CVC-XfxbXVALIwy5QbjLZnO2M3I8tsm7

- video: https://drive.google.com/open?id=1QxG6Imnb1kYP9QbCjr-kDNv2bxFi0Zrz

What filters should i change in order to train with 2 classes?

@keko950 I have changed like in instructions:

filters = (classes + 5) x masks) = 35 for 2 classes

in last layer filter = 28

@keko950

In yolo_v3_tiny_pan3_aa_ae_mixup_scale_giou.cfg.txt:

- For the 1st and 2nd [yolo] the

filters=(classes + 5) * 5 - For the 3d [yolo] the

filters=(classes + 5) * 4

actually on mixup - I did find a small improvement when training with quite a small dataset. when training with large datasets it made it worse. It may be that the mixup is an effective data augmentation tactic with small datasets that need more examples with different background but with large datasets, the value of that drops and is exceeded by the cost of giving your detector synthetic data which means that it can't properly learn true contextual cues.

@LukeAI What large dataset did you use? Did you use MS COCO?

not with this new one. I mean in the past when I ran yolov3-spp.cfg with and without mixup on different datasets. the result was better without mixup on bdd100k and better with mixup on a small (~1% size of bdd100k) private dataset.

Hmmm.. i am actually receiving loss: -nan , what could be wrong?

CFG:

CLICK ME - CFG

[net]

Testing

batch=1

subdivisions=1

Training

batch=64

subdivisions=8

width=512

height=512

channels=3