Darknet: why "decay*batch" and "learning_rate/batch"?

Hi AlexeyAB.

Thanks for your kindness.

I have a question about "update_convolutional_layer" in convolutional_layer.c.

It contains the following code:

- axpy_cpu(size, -decay*batch, l.weights, 1, l.weight_updates, 1);

- axpy_cpu(size, learning_rate/batch, l.weight_updates, 1, l.weights, 1);

Why is decay multiplied by batch? (-decay*batch)

Why is the learning rate divided by batch? (learning_rate/batch)


@doobidoob Hi,

As a result we get:

weights_update =

weights_update_new + weights_update_old*momentum - (weights_old*momentum + weights_new)*decay*batch

We know that weights_update accumulates deltas for each image, so weights_update is roughly proportional to the batch size, while weights is not.

So we multiply weights (new and old) by batch, which puts the decay term on the same scale as the accumulated deltas.

This way it works the same for any batch size.

As a result we also get:

weights_newest = weights_new*(1 - decay*lr) - weights_old*momentum*(decay*lr) + (weights_update_new + weights_update_old*momentum)*lr/batch

For the same reason, since weights_update is roughly proportional to the batch size, we divide weights_update by batch.

This way it works the same for any batch size.

Also in the main formula:

weights_newest = weights_new*(1 - decay*lr) - weights_old*momentum*(decay*lr) + (weights_update_new + weights_update_old*momentum)*lr/batch =

= weights_new - (weights_new + weights_old*momentum)*decay*lr + (weights_update_new + weights_update_old*momentum)*lr/batch
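The same formula can be written as a recursion. The notation is not from the thread and is only a sketch: $W_t$ stands for the weights, $G_t$ for the deltas accumulated over the current batch, $U_t$ for the contents of weights_update, $m$ for momentum, $\lambda$ for decay, $\eta$ for the learning rate and $B$ for batch. It assumes weights_update is multiplied by momentum after every step.

```latex
\begin{aligned}
U_t     &= G_t + m\,U_{t-1} - \lambda B\,W_t \\
W_{t+1} &= W_t + \frac{\eta}{B}\,U_t
         = W_t\,(1 - \eta\lambda) + \frac{\eta}{B}\,\bigl(G_t + m\,U_{t-1}\bigr) \\
\text{with } U_{t-1} &\approx G_{t-1} - \lambda B\,W_{t-1}: \\
W_{t+1} &\approx W_t\,(1 - \eta\lambda) - m\,\eta\lambda\,W_{t-1}
          + \frac{\eta}{B}\,\bigl(G_t + m\,G_{t-1}\bigr)
\end{aligned}
```

The last line matches the formula above with weights_new $= W_t$, weights_old $= W_{t-1}$, weights_update_new $= G_t$ and weights_update_old $= G_{t-1}$. The factor $B$ cancels in the decay term, so the decay per step is $\eta\lambda$ for any batch size.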

- We use momentum for the old values: weights_old and weights_update_old.

  It accumulates the motion of the weight changes. It uses a running average of the gradients (i.e. how much the history affects further changes to the weights).

- We use decay for weights (new and old).

  The greater the absolute value of a weight, the more its absolute value is decreased. This reduces the value of frequent features of objects and gives an advantage to rare features. It counteracts the imbalance that arises when, for example, there are many more images of Dogs than of Cars.

@AlexeyAB Thank you very much!!

Thanks for the explanation!

I wonder why the weights and biases are updated separately.

axpy_cpu / adam_update_gpu is called separately for the biases and the weights, but the layer size is the same for both, and the update is basically a for loop over all layers that updates the weights and biases.

So, @AlexeyAB, I think training could be sped up (slightly?) if the two were combined, using a single for loop to update both at once.

Correct me if I'm wrong, thanks!

@i-chaochen It's just easier to do it this way.

Moreover, we update weights, biases and scales separately.

Also, it is implemented as 8 different kernel functions :)

https://github.com/AlexeyAB/darknet/blob/e6469eb071521a4ff5be8c0e9cceb524d0a65a95/src/blas_kernels.cu#L209-L222

Adam is rarely used.

Also, update_convolutional_layer_gpu() is called only once per batch, not once per mini-batch.

So I think it would improve speed by less than ~1%.

What are the scales in the neural network?

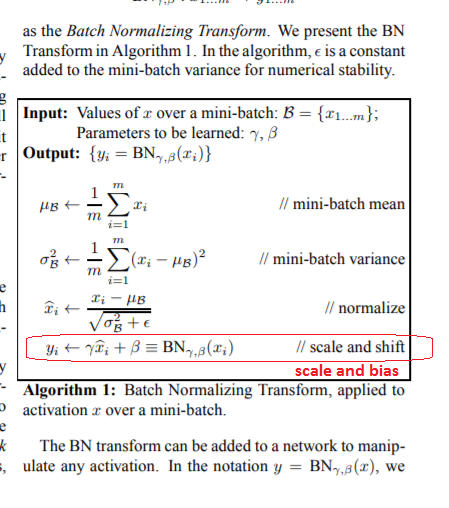

Ha! Yes, sorry for asking a silly question. I forgot that the BN parameters also need to be learned!
