Darknet: Optimizer and loss function.

Hi, can you provide some info about the optimizer (ADAM, RMSProp, etc.) used in YOLOv3? Also, where can I find the optimizer and the loss function in the source code? Thank you.

All 12 comments

@gnoya Hi,

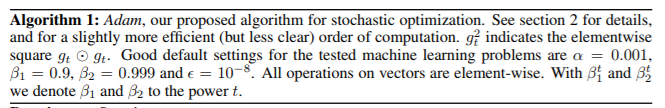

There is Adam (adaptive moment estimation): https://arxiv.org/abs/1412.6980

Adam ~= RMSProp + momentum (the variant that adds Nesterov Accelerated Gradient is Nadam)

Adam (adaptive moment estimation) combines two ideas: accumulating movement (momentum) and updating the weights more weakly for typical (frequent) features.

Momentum and decay are already used even without the Adam optimizer: https://github.com/AlexeyAB/darknet/issues/1943#issuecomment-439560675

- momentum: accumulation of movement. It keeps a running average of the gradients, i.e. it controls how much the history affects the next change of the weights.
- decay: weaker updating of the weights for typical features. It shrinks large weights, i.e. it reduces the value of frequent features of objects and gives an advantage to rare features. This helps against imbalance in a dataset when, for example, there are many more images of dogs than of cars.
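To make the roles of momentum and decay concrete, here is a minimal sketch of one update step. The function name `sgd_momentum_step` and its layout are hypothetical and only loosely modelled on the description above; this is not Darknet's actual code. It assumes `updates` holds the step direction (the negative gradient summed over the batch).

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper, not a Darknet function.
 * updates[i] is assumed to hold the step direction for weights[i],
 * i.e. the negative gradient accumulated over one batch. */
void sgd_momentum_step(float *weights, float *updates, size_t n,
                       float learning_rate, float momentum,
                       float decay, int batch)
{
    assert(batch > 0);
    for (size_t i = 0; i < n; ++i) {
        /* decay: pull each weight toward zero, large weights more strongly */
        updates[i] -= decay * batch * weights[i];
        /* apply the step, averaged over the batch */
        weights[i] += learning_rate / batch * updates[i];
        /* momentum: keep a fraction of this step for the next iteration */
        updates[i] *= momentum;
    }
}
```

With momentum=0 nothing is carried over between iterations, and with decay=0 the weights are never shrunk, so each term can be checked in isolation.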

Adam in Yolo:

The paper suggests using B1=0.9 B2=0.999 eps=0.00000001

Darknet uses B1=0.9 B2=0.999 eps=0.000001 by default if you set adam=1 here: https://github.com/AlexeyAB/darknet/blob/08e8e0c8c2d3cddfb6ee065e813ae5023f9568d2/cfg/yolov3.cfg#L17

You can set these parameters in your cfg-file: https://github.com/AlexeyAB/darknet/blob/08e8e0c8c2d3cddfb6ee065e813ae5023f9568d2/src/parser.c#L629-L634

Note: if you trained the weights-file with adam=1, then you should use adam=1 in the cfg-file for detection too.

If you set adam=1, then during training the weights are updated on each iteration (for each batch of images) using this algorithm: https://github.com/AlexeyAB/darknet/blob/08e8e0c8c2d3cddfb6ee065e813ae5023f9568d2/src/convolutional_kernels.cu#L632-L644

instead of: https://github.com/AlexeyAB/darknet/blob/08e8e0c8c2d3cddfb6ee065e813ae5023f9568d2/src/convolutional_kernels.cu#L644-L656

More about it: http://ruder.io/optimizing-gradient-descent/

Loss functions for Yolo v3:

- delta for box: https://github.com/AlexeyAB/darknet/blob/08e8e0c8c2d3cddfb6ee065e813ae5023f9568d2/src/yolo_layer.c#L94-L109

- delta for class: https://github.com/AlexeyAB/darknet/blob/08e8e0c8c2d3cddfb6ee065e813ae5023f9568d2/src/yolo_layer.c#L112

```c
void delta_yolo_class(float *output, float *delta, int index, int class_id, int classes, int stride, float *avg_cat, int focal_loss)
{
    // note: focal_loss is not used in this excerpt
    int n;
    // a delta is already set for this class: update only the true class and return
    if (delta[index + stride*class_id]){
        delta[index + stride*class_id] = 1 - output[index + stride*class_id];
        if(avg_cat) *avg_cat += output[index + stride*class_id];
        return;
    }
    // default: delta = target - output, where target is 1 for class_id and 0 otherwise
    for (n = 0; n < classes; ++n) {
        delta[index + stride*n] = ((n == class_id) ? 1 : 0) - output[index + stride*n];
        if (n == class_id && avg_cat) *avg_cat += output[index + stride*n];
    }
}
```
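The core of the class delta is simply target minus output. Assuming the class outputs come from a logistic activation, that difference equals the negative gradient of binary cross-entropy with respect to the pre-activation value. The simplified function below is a hypothetical stand-alone variant (stride fixed at 1, no `avg_cat`, no focal loss), written only to show the arithmetic.

```c
#include <assert.h>

/* Hypothetical simplified variant of the default branch above,
 * not part of Darknet: one cell, contiguous class scores. */
void class_delta(const float *output, float *delta, int classes, int class_id)
{
    assert(class_id >= 0 && class_id < classes);
    for (int n = 0; n < classes; ++n) {
        float target = (n == class_id) ? 1.0f : 0.0f; /* one-hot target */
        delta[n] = target - output[n];                /* positive: push score up */
    }
}
```

For outputs {0.2, 0.7, 0.1} and true class 1, the deltas come out as roughly {-0.2, 0.3, -0.1}: the true class is pushed up and the others are pushed down.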

@AlexeyAB When I set adam=1, the avg loss becomes NaN as early as the second iteration. Any suggestions?

@NEELMCW

- Do you get good avg-loss with adam=0?
- Did you try several times to train with adam=1?
- Do you use this repository https://github.com/AlexeyAB/darknet or https://github.com/pjreddie/darknet ?
- What base cfg-file do you use?
- What command do you use for training?

Try these values:

adam=1

B1=0.9

B2=0.999

eps=0.00000001

@AlexeyAB

Without setting any adam parameter in the config file, I get a good avg loss. I never tried adam=0.

I am using the default yolov3 config as the base config, but for 4 classes.

I am using only the https://github.com/AlexeyAB/darknet repo.

@NEELMCW

> Without setting any adam parameter in the config file, I get a good avg loss. I never tried adam=0.

adam=0 by default if the cfg-file doesn't contain the adam parameter.

So Adam isn't required for your dataset.

Adam (adaptive moment estimation) combines two ideas: accumulating movement (momentum) and updating the weights more weakly for typical (frequent) features.

Momentum and decay are already used even without the Adam optimizer: https://github.com/AlexeyAB/darknet/issues/1943#issuecomment-439560675

- momentum: accumulation of movement. It keeps a running average of the gradients, i.e. it controls how much the history affects the next change of the weights.
- decay: weaker updating of the weights for typical features. It shrinks large weights, i.e. it reduces the value of frequent features of objects and gives an advantage to rare features. This helps against imbalance in a dataset when, for example, there are many more images of dogs than of cars.

@AlexeyAB Thanks for the explanation. I am trying to deal with class imbalance among the objects in my dataset. If decay is useful for this, how should we choose the decay factor?

@NEELMCW Just use the default decay, it works well in most cases: https://github.com/AlexeyAB/darknet/blob/9f7d7c58b5284eec9a1b96eb30c63ae254ded740/cfg/yolov3.cfg#L12

Or you can try decay=0.001 or decay=0.005

@AlexeyAB hi~

What's the default Optimizer of YOLOv3?

Is it AdaGrad?

Hi, @NEELMCW, have you solved your problem? I get the same problem when "adam=1" is set.

Hi, @AlexeyAB, have you ever used the adam setting? I use it with the darknet53.conv.74 weights file, and the loss becomes NaN. Can you give me some help? Thanks.

Can anyone please tell me how I can change the loss function used for classification from cross-entropy to MSE loss (although MSE is normally used for regression)?
