Darknet: training error (Floating point exception)

Hello.

I was going to use the Tesla V100 for tracking.

There is currently one V100, with:

CUDA 9.2

OPTIONS 3.4.1

In the Makefile:

GPU = 1

CUNSN = 1

CUNSN_HALF = 1

OPENCV=1

AVX = 1

OPENMP=1

LIBSO = 1

# set GPU=1 and CUDNN=1 to speedup on GPU

# set CUDNN_HALF=1 to further speedup 3 x times on GPU Tesla V100, Titan V, DGX-2

# set AVX=1 and OPENMP=1 to speedup on CPU

These are the settings and the system in use.

Detection works without any problem.

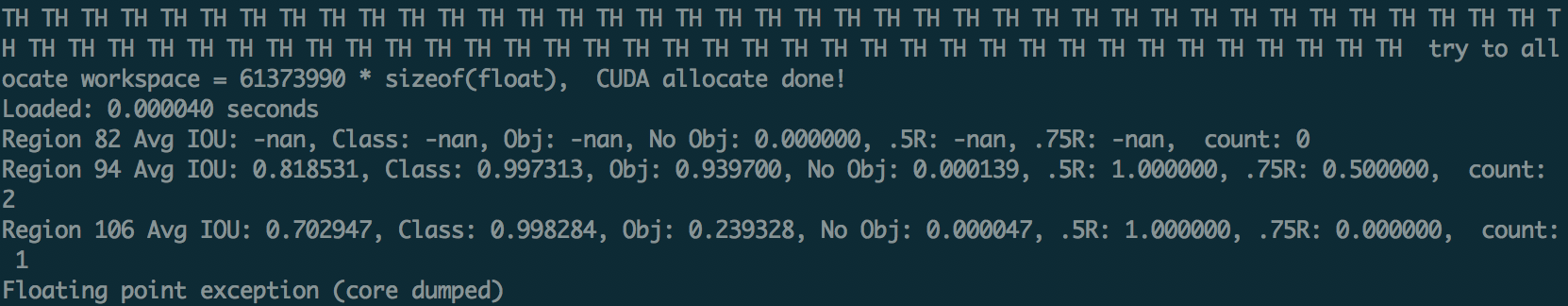

During tracking, a Floating point received error is displayed and the operation is terminated.

Why? I need your help.

I use the -dont_show option.

=======================

Also, the Tesla V100, which is designated as batch = 64 and subdivisions=16, takes about nine seconds.

The GTX 1080, with batch=64 and subdivisions=32, takes about 14 seconds.

Is this really the whole difference in training time?

Tesla V100 is not as fast as I expected.
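For reference, the two timings can be put on a common footing. The sketch below assumes each quoted time is the duration of one training iteration over a full batch of 64 images, which the post does not state explicitly. The function names are illustrative only.

```python
def images_per_second(batch, seconds_per_iteration):
    """Training throughput, assuming one iteration processes `batch` images."""
    return batch / seconds_per_iteration


def mini_batch_size(batch, subdivisions):
    """Images sent to the GPU at once: each batch is split into `subdivisions` chunks."""
    return batch // subdivisions


# Assumption: 9 s and 14 s are per-iteration times taken from the post above.
v100 = images_per_second(64, 9.0)      # Tesla V100, subdivisions=16
gtx1080 = images_per_second(64, 14.0)  # GTX 1080, subdivisions=32

print(f"V100:     {v100:.2f} img/s, mini-batch {mini_batch_size(64, 16)}")
print(f"GTX 1080: {gtx1080:.2f} img/s, mini-batch {mini_batch_size(64, 32)}")
print(f"V100 / GTX 1080: {v100 / gtx1080:.2f}x")
```

Under that assumption the V100 is roughly 1.56x faster here. The two runs also use different subdivisions values, so this is not a like-for-like comparison.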


and, Turning off the CUNSN_HALF=0 option will not result in an error.

Hello,

> CUDA 9.2
>
> OPTIONS 3.4.1

Do you mean you use OpenCV 3.4.1? Use OpenCV 3.4.0. OpenCV 3.4.1 isn't supported due to C API bug: https://github.com/AlexeyAB/darknet#you-only-look-once-unified-real-time-object-detection-versions-2--3

What cuDNN version do you use? Can you show a screenshot of the cuDNN installer?
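One way to answer the version question without a screenshot is to read the version macros from the cuDNN header. The sketch below is a minimal, hypothetical helper: it assumes the header defines `CUDNN_MAJOR`, `CUDNN_MINOR`, and `CUDNN_PATCHLEVEL` (as the cuDNN 7 `cudnn.h` does), and it runs on an inline sample rather than a real install.

```python
import re


def parse_cudnn_version(header_text):
    """Extract (major, minor, patch) from the text of cudnn.h, or None if a macro is missing."""
    parts = []
    for key in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        match = re.search(rf"^\s*#define\s+{key}\s+(\d+)", header_text, re.MULTILINE)
        if match is None:
            return None
        parts.append(int(match.group(1)))
    return tuple(parts)


# Sample header text for illustration; a real check would read the installed cudnn.h.
sample_header = """\
#define CUDNN_MAJOR 7
#define CUDNN_MINOR 1
#define CUDNN_PATCHLEVEL 4
#define CUDNN_VERSION (CUDNN_MAJOR * 1000 + CUDNN_MINOR * 100 + CUDNN_PATCHLEVEL)
"""

print(parse_cudnn_version(sample_header))
```

To check a real installation, pass the contents of the installed header instead of `sample_header`. Its location depends on how cuDNN was installed; `/usr/local/cuda/include/cudnn.h` is a common place, but that path is an assumption.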

> During tracking, a Floating point received error is displayed and the operation is terminated.
>
> Why? I need your help.

- Do you mean during Detection instead of Tracking? What command do you use for Detection?

> Also, the Tesla V100, which is designated as batch = 64 and subdivisions=16, takes about nine seconds.

- It should take 1.27 seconds for 1 iteration of Training on Tesla V100, if batch=64 subdivision=4 width=416 height=416 random=0 are used in the cfg-file and CUDNN_HALF=1 OPENCV=1 in the Makefile

> and, Turning off the CUNSN_HALF=0 option will not result in an error.

Can you show a screenshot of this error?

There are no such options as CUNSN_HALF= or CUNSN=

https://github.com/AlexeyAB/darknet/blob/0e1d52500d49cf6c25944282509990f0060cebdf/Makefile#L1-L11
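A misspelled flag in a Makefile is silently ignored, so it can help to check the settings block mechanically. The sketch below is a hypothetical helper, not part of Darknet. Its list of known flags is an assumption drawn only from the names discussed in this thread.

```python
import difflib

# Assumption: flag names taken from the Makefile settings discussed in this thread.
KNOWN_FLAGS = ["GPU", "CUDNN", "CUDNN_HALF", "OPENCV", "AVX", "OPENMP", "LIBSO"]


def check_flags(settings_text):
    """Return {unknown_name: closest known flag or None} for 'NAME = value' lines."""
    problems = {}
    for line in settings_text.splitlines():
        line = line.strip()
        # Skip blanks, comments, and lines that are not assignments.
        if not line or line.startswith("#") or "=" not in line:
            continue
        name = line.split("=", 1)[0].strip()
        if name not in KNOWN_FLAGS:
            match = difflib.get_close_matches(name, KNOWN_FLAGS, n=1)
            problems[name] = match[0] if match else None
    return problems


sample = """\
GPU = 1
CUNSN = 1
CUNSN_HALF = 1
# set AVX=1 and OPENMP=1 to speedup on CPU
AVX = 1
"""

for name, suggestion in check_flags(sample).items():
    print(f"unknown flag {name!r}; did you mean {suggestion!r}?")
```

This is meant for the short settings block at the top of the Makefile only. Run on a whole Makefile, it would also report every other variable assignment as unknown.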

- Detection - Forward inference time:

| Model | FP32 (Tesla V100), sec | Tensor Cores FP16/32 (Tesla V100), sec | Speedup X times |
|---|---|---|---|
| yolov3.cfg | 0.031 | 0.011 | 2.8x |
| yolo-voc.2.0.cfg | 0.02 | 0.0062 | 3.2x |
| tiny-yolo-voc.cfg | 0.003 | 0.0027 | 1.1x |

- Training - Forward+Backward+Update time (batch=64 subdivision=4 width=416 height=416 random=0):

| Model | FP32 (Tesla V100), sec | Tensor Cores FP16/32 (Tesla V100), sec | Speedup X times |
|---|---|---|---|
| yolov3.cfg | 1.9 | 1.27 | 1.5x |
| yolo-voc.2.0.cfg | 0.89 | 0.39 | 2.3x |
| tiny-yolo-voc.cfg | 0.23 | 0.165 | 1.4x |
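The speedup column in both tables is simply the FP32 time divided by the Tensor Core time. The short sketch below recomputes it from the timings quoted above; the dictionary layout and function name are illustrative.

```python
# (FP32 seconds, Tensor Cores FP16/32 seconds), copied from the tables above.
TIMINGS = {
    "detection": {
        "yolov3.cfg": (0.031, 0.011),
        "yolo-voc.2.0.cfg": (0.02, 0.0062),
        "tiny-yolo-voc.cfg": (0.003, 0.0027),
    },
    "training": {
        "yolov3.cfg": (1.9, 1.27),
        "yolo-voc.2.0.cfg": (0.89, 0.39),
        "tiny-yolo-voc.cfg": (0.23, 0.165),
    },
}


def speedup(fp32_seconds, mixed_seconds):
    """How many times faster the mixed-precision run is than the FP32 run."""
    return fp32_seconds / mixed_seconds


for phase, models in TIMINGS.items():
    for model, (fp32, mixed) in models.items():
        print(f"{phase:9s} {model:18s} {speedup(fp32, mixed):.1f}x")
```

Rounded to one decimal place, the results match the table values.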

I’ve actually been having this same issue.

I’m using:

V100 (Driver 396.26)

CUDA 9.2

CUDNN 7.1

CUDNN_HALF = 1

OpenCV 3.4.0

Thanks in advance!

@benjaminrwilson Can you show a screenshot of this error?

@benjaminrwilson Thanks!

- Do you get this error during detection on video or on a list of images?
  ./darknet detector test cfg/coco.data yolov3.cfg yolov3.weights -dont_show < data/train.txt
- Do you get this error during training if you compile with CUDNN_HALF=0?
- Do you use the latest code from this repository?
- Can you show your cfg-file?
- Do you get this Floating point exception if you try to compute mAP using the default yolov3.cfg & yolov3.weights with your dataset, with valid=train.txt set?
  ./darknet detector map data/obj.data yolov3.cfg yolov3.weights

I know that result will be 0%, but this is just to check that dataset doesn't have obvious errors.

- This works with CUDNN_HALF = 1.

- No, it works if I make without half precision.

- Yes.

- It's attached. (Just simple 2 class)

- This works correctly to my knowledge. I didn't get a floating point exception.

Thanks for all the help!

@benjaminrwilson

- What command do you use for training?

- Do you get this error if you train with width=416 height=416?

- Do you usually get this error at the first iterations, or can it sometimes occur at iteration 100 or 1000?

- Do you get this error if you train it on another dataset, for example, Pascal VOC or MS COCO?

- ./darknet detector train [.DATA] [.CFG] [.WEIGHTS]

- Yes, I still get the error.

- The error always occurs immediately.

- I will have to get back to you on this. I will have to download another dataset first.

@AlexeyAB

I have confirmed that the same issue exists when using the VOC dataset.

I've tried uncommenting that, and I still get the error unfortunately.

@benjaminrwilson

Oh, I'm sorry.

I told you another way.

@benjaminrwilson

I've just resolved the error.

Perhaps the cause was the CUDA version.

After I downgraded it from 9.2 to 9.1, the build and the run succeeded.

I hope it will help.

@ryumansang

Did you downgrade cuDNN too?

What cuDNN version did you use with CUDA 9.2 when it caused the error?

- cuDNN v7.1.4 (May 16, 2018), for CUDA 9.2 ?

- cuDNN v7.1.2 (Mar 21, 2018), for CUDA 9.1 & 9.2 ?

- cuDNN v7.0.5 (Dec 11, 2017), for CUDA 9.1 ?

@AlexeyAB

That's right. I downgraded CUDA from version 9.2 to version 9.1.

I now have CUDA 9.1 & cuDNN 7.0.5 installed.

Perhaps the combination of CUDA 9.2 & cuDNN 7.1.2 had a problem.

@AlexeyAB

I've verified that it does not work with CUDA 9.0 and CUDNN 7.1.2, but I will have to try with CUDNN 7.0.

Also, does the 2.8x performance correspond with a 2.8x increase in frame rate (on Yolov3 inference)?

@benjaminrwilson

- Try to use "Download cuDNN v7.0.5 (Dec 11, 2017), for CUDA 9.1": https://developer.nvidia.com/rdp/cudnn-archive
- and CUDA Toolkit 9.0 (Sept 2017): https://developer.nvidia.com/cuda-toolkit-archive

> Also, does the 2.8x performance correspond with a 2.8x increase in frame rate (on Yolov3 inference)?

If your CPU isn't a bottleneck in the video-decompression stage, then yes, 2.8x increase in frame rate.
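The condition about the CPU can be illustrated with a simple model. The sketch below assumes decoding and inference overlap, so the frame rate is limited by the slower stage. The 60 FPS decode limit is a made-up number for illustration, and the function name is hypothetical.

```python
def effective_fps(inference_seconds, decode_fps=None):
    """Frame rate limited by GPU inference and, optionally, by CPU video decoding."""
    gpu_fps = 1.0 / inference_seconds
    if decode_fps is None:
        return gpu_fps  # the CPU is not a bottleneck
    return min(gpu_fps, decode_fps)


# yolov3.cfg forward times from the detection table: 0.031 s (FP32), 0.011 s (Tensor Cores).
fp32 = effective_fps(0.031)
mixed = effective_fps(0.011)
print(f"no CPU limit: {fp32:.1f} -> {mixed:.1f} FPS ({mixed / fp32:.1f}x)")

# Hypothetical decoder that can deliver at most 60 frames per second.
fp32_capped = effective_fps(0.031, decode_fps=60.0)
mixed_capped = effective_fps(0.011, decode_fps=60.0)
print(f"60 FPS decode limit: {fp32_capped:.1f} -> {mixed_capped:.1f} FPS "
      f"({mixed_capped / fp32_capped:.1f}x)")
```

Without a decode limit the gain equals the 2.8x inference speedup. With the assumed limit, the faster inference is capped by the decoder and the observed gain is smaller.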

@AlexeyAB

I've confirmed that installing cuDNN v7.0.5 resolves this issue. Do you think cuDNN v7.1+ may be supported for half-precision in the future?

Thanks again for all the help!

@benjaminrwilson

Yes, new cuDNN versions will support mixed precision (fp32+fp16) on Tensor Cores. It gives a ~3x real performance increase with no drop in accuracy, for both Detection and Training.

That is even more than DP4A (int8), which gives only a 1.5x real performance increase, with a drop in accuracy, and for Detection only.

So Tensor Cores with mixed precision (fp32+fp16) are the best feature of the last several years for NVIDIA GPUs.

@AlexeyAB

The performance increase sounds great! Sorry, I think I was a bit unclear. I meant: do you think this fork will support cuDNN 7.1+ with half precision in the future (since I think it's currently incompatible due to that floating point error)?

@benjaminrwilson I think there is just some bug in cuDNN, or an incompatibility issue with CUDA 9.0 and cuDNN 7.1.2.

So yes, it will support any new cuDNN versions with mixed precision.

If there are changes in the API of new cuDNN versions, or other issues, I will add support for the new cuDNN/API.

@AlexeyAB

Thanks again for all the help. This is a very nice fork of Darknet, and I really appreciate all the work you've put into this! I'll keep an eye out for any updates supporting the new versions of cuDNN!