Cypress: 3.0 - custom reporters broken now that tests are run in isolation

Is this a Feature or Bug?

Bug

Current behavior:

Each test suite overrides the previous one's results.

Desired behavior:

Before 3.0, results were aggregated and the final report contained all test suite results.

Steps to reproduce:

Run with several test suites:

cypress run --reporter junit --reporter-options "mochaFile=results.xml"

After completion, results.xml only contains the last test suite results.

Versions

3.0


Also related: coverage aggregation broke too. This no longer works:

```javascript
const istanbul = require('istanbul-lib-coverage')

const map = istanbul.createCoverageMap({})

after(() => {
  cy.window().then((win) => {
    const coverage = win.__coverage__

    if (coverage) {
      map.merge(coverage)
    }

    cy.writeFile('.nyc_output/out.json', JSON.stringify(map))
  })
})
```

For both coverage and junit, you'll need to output to a folder and use dynamic file names (I think this is supported by one of the junit reporters out of the box; we've used it on our projects internally) and then aggregate them at the end.

We internally discussed this change causing the problem you're describing but it's something that needs to be solved in most situations especially in CI when doing parallelization / load balancing. It's possible that Cypress can bundle in support for doing junit report combining out of the box, but for coverage you'll definitely need to merge the maps at the end yourself. I don't believe it's too difficult.

@knoopx did you find what you were looking for? With the junit reporter you can pass [hash] in the filename so that it will not overwrite the previous ones. Then you can use a tool to combine them if necessary.

For jenkins, being able to have separate junit files already solves the problem for reports. This is sure handy!

Using [hash] works around the issue, thank you.

@knoopx did you get a fix for the istanbul coverage or only junit?

@adubatl the [hash] fix is only for junit. For the coverage reports you effectively need to do the same thing. Use cy.writeFile to write the coverage with a dynamic file name and then join them up after the run completes.

Just got it going. Is this a feature you all intend to build into cypress one day?

This is an issue running with mochawesome too (and they don't currently support combining reports). Our CI environment setup also strongly encourages having a single report due to various reasons I won't go into here. Is there any chance of having it be an option to combine results before it's passed to the reporter? If not, any suggestions for how we can generate filenames per spec file?

@adubatl Hi there. Any chance you’d share your code regarding coverage aggregation? :)

@adubatl which feature are you referring to? If you're talking about junit, then yes, we could do this by default - both setting the junit filename to be the spec we're running and also combining it after the end of the run.

@StormPooper why is this an issue with mochawesome? Can't you set a custom name of the report? We could likely give you a plugin event like before:spec:file and after:spec:file so that if you cannot set it dynamically you could at least rename the outputted report so they won't override each other.

I guess I don't really understand why you'd need to combine the reports. CI doesn't understand anything other than the junit reports - and our Dashboard service already provides you a great view into the test run with each spec file independently broken down (that's kind of the point now).

@HugoGiraudel Couldn't you do something like this...

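```javascript
// support/index.js
after(() => {
  if (Cypress.env('COVERAGE')) {
    cy.window().its('__coverage__').then((cov) => {
      // Used to give each spec its own file name; check that it is
      // actually distinct per spec in your setup, or files will collide
      const spec = window.location.hash

      cy.writeFile(`cypress/coverage/${spec}-coverage.json`, cov)
    })
  }
})
```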

And then after the run just combine all the coverage reports with standard node code.
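The "combine with standard node code" step could look roughly like the sketch below. It assumes every file in `cypress/coverage` holds the raw `__coverage__` object written by the `after()` hook above, all produced by the same instrumented build, so statement, function and branch ids line up per source file. It only sums hit counters; the `createCoverageMap()`/`merge()` calls from istanbul-lib-coverage shown earlier in this thread are the more robust route if you can add that dependency. The file name `merge-coverage.js` and its function names are hypothetical.

```javascript
// merge-coverage.js (hypothetical name)
const fs = require('fs');
const path = require('path');

// Add the hit counters of `source` into `target` for one source file.
// Assumes both came from the same instrumented build (same ids).
function mergeFileCoverage(target, source) {
  for (const id of Object.keys(source.s)) {
    target.s[id] = (target.s[id] || 0) + source.s[id];
  }
  for (const id of Object.keys(source.f)) {
    target.f[id] = (target.f[id] || 0) + source.f[id];
  }
  for (const id of Object.keys(source.b)) {
    // Branch counters are arrays (one entry per branch path).
    const counts = target.b[id] || source.b[id].map(() => 0);
    target.b[id] = counts.map((n, i) => n + (source.b[id][i] || 0));
  }
}

// Merge an array of istanbul coverage objects (path -> file coverage).
function mergeCoverage(coverages) {
  const merged = {};
  for (const coverage of coverages) {
    for (const [file, fileCov] of Object.entries(coverage)) {
      if (!merged[file]) {
        // First time we see this file: deep-copy so inputs are not mutated.
        merged[file] = JSON.parse(JSON.stringify(fileCov));
      } else {
        mergeFileCoverage(merged[file], fileCov);
      }
    }
  }
  return merged;
}

// Read every *.json file in inputDir and write the merged result.
function mergeCoverageDir(inputDir, outputFile) {
  const coverages = fs.readdirSync(inputDir)
    .filter((name) => name.endsWith('.json'))
    .map((name) => JSON.parse(fs.readFileSync(path.join(inputDir, name), 'utf8')));
  fs.mkdirSync(path.dirname(outputFile), { recursive: true });
  fs.writeFileSync(outputFile, JSON.stringify(mergeCoverage(coverages)));
}

module.exports = { mergeCoverage, mergeCoverageDir };
```

After `cypress run` finishes you could call it with something like `node -e "require('./merge-coverage').mergeCoverageDir('cypress/coverage', '.nyc_output/out.json')"` and then let `nyc report` read `.nyc_output`, its default temp directory.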

@brian-mann Just including some coverage output at the end of cypress runs without any custom user managed stuff. Nothing in particular just curious if you had any default test coverage output in your future features. Thanks for the quick responses!

@brian-mann your suggestion for junit was to use [hash] to generate filenames per suite, but I can't see an equivalent option for mochawesome (from what I can see, I'd have to move the reporterOptions to code instead of cypress.json and work out the spec file being run to pass the right filename in, but maybe I'm missing something obvious). I suspect other custom reporters may hit similar problems if their reporter doesn't have dynamic report naming as a feature already.

In regards to wanting a single report for all spec files, our current flow is that TeamCity runs our tests whenever a build of the app is updated for multiple environments (there are multiple build jobs to trigger them with different environmental variables, etc) and we use mochawesome to generate a single HTML report for the project, which is surfaced in TeamCity as a build report tab. This is useful to quickly see at a glance everything that's passing and failing without having to look at multiple reports or parse logs.

Additionally, TeamCity doesn't support globs for surfacing the report, so with the changes in 3.0 we'd have to update our TC config every time we add/remove/rename a spec file, after having worked out how to have consistent report names per spec file, as above.

@knoopx How were you able to get the [hash] to work? My file is written with the literal .hash. in the filename.

@benpolinsky you need to pass in a literal [hash] and not hash

@brian-mann Whoops, that is what I intended to write.

I'm not having any luck. Trying on a different computer using the last three example specs.

Running `npx cypress run --reporter junit --reporter-options "mochaFile=results/my-test-output.[hash].xml,toConsole=true"` on the command line, the resulting file is `results/my-test-output.hash.xml`.

On the previous computer, I attempted using the module API with no success.

Ah, I've got it. It works as an option in cypress.json.

@brian-mann

How can we know that all specs have finished running? Is there a Cypress hook available for that? If so, where do we have to define it?

In which file do we have to write the Node code that merges every file's coverage?

Thanks.

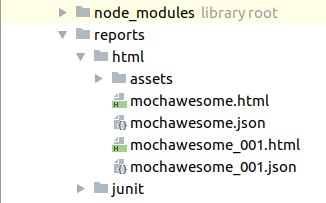

@StormPooper fyi.. For mochawesome set the overwrite flag to false and you should be good to go.

By default, report files are overwritten by subsequent report generation. Passing --overwrite=false will not replace existing files. Instead, if a duplicate filename is found, the report will be saved with a counter digit added to the filename (i.e. mochawesome_001.html).

@brianTR I've seen that option, but that doesn't help us in our particular scenario, as they aren't predictable names (so I'd have to set up a bunch of tabs for each potential number and hope we don't go over it) and if a new spec file is added then it's impossible to tell from the files which report is for which spec file (so a.spec.js, b.spec.js, c.spec.js would mean _001 is now for b, not c).

@brian-mann @jennifer-shehane

Will anything be done about this for 3.0.2? I've heard different things in gitter. It would be nice to be able to retrieve an aggregated set of results without different workarounds for different reporters.

What's the approach here?

@abataub how do you know when the run is finished? You use our module API, which will yield all of the results, or you simply chain something off of `cypress run`, like `cypress run && echo "finished"`.

@joshmccure nothing for this will be done with 3.0.2 because other than mochawesome we're not seeing anything that is a fire that needs putting out. We have talked internally about exposing a service which will aggregate the results for you and spit out junit or mochawesome or whatever it is you want.

The reason this likely needs to be moved to the service level is because of parallelization releasing in 3.1.0. In that case the reports will not only be split out by spec file but they will be split out across machines and there won't be an easy way to aggregate the results except if you have a service that knows everything about the run. That's the logical place to put it. For now you can also see all of the results aggregated correctly in the Dashboard - although I do understand the desire to want to have junit or mochawesome reports.

Thanks, @brian-mann. I'll try it out.

For now you can also see all of the results aggregated correctly in the Dashboard - although I do understand the desire to want to have junit or mochawesome reports.

This feature-break is about promoting the dashboard rather than supporting the users.

It's a very bad move to have N HTML reports, in our case about 70.

Have you ever tried to tell your boss to look at 70 reports? I was happy that they looked at a single report. And then there are the devs who have to search for the issues. This is neither good nor practical.

I know, this is an open-source project and I can contribute if I like to, but this feature is not new, it's broken instead.

The issue label "wont fix" is a very strong signal. Is there any ETA for when the reports will be merged again?

@SeriousM ignoring the dashboard for a minute, running ~100 spec files takes a lot of time, and could easily be the bottleneck of your CI build process. Most users with close to 100 specs likely want to split those specs to be run on multiple machines, and they can do this without the dashboard: you can take those 100 spec files, arbitrarily split them up across 4 machines, and get a speedup close to 4x. The Cypress project actually already does that, because builds would take too long otherwise.

Would you prefer having to then combine spec reports across some arbitrary split of specs, and having to find some way to find the spec you are looking for? or have a stable behavior of one report per spec, that will be the same regardless of how you decide to split your specs across machines? The latter is much easier to reason about for possibly combining/grouping reports to the user's needs.

@SeriousM you couldn't be more wrong, and nobody appreciates your presumptive comments.

The decision to chunk the specs was made months ago - it was effectively communicated and the idea of the reporters being split up was even accounted for as a downside of doing this. https://github.com/cypress-io/cypress/issues/681

As you can see, the primary purpose has nothing to do with "promoting the dashboard rather than support the users". As it stands, it is not feasible for Cypress core to monkey patch every single mocha reporter out there (including 3rd party custom ones we don't even control) to polyfill the behavior to combine reports.

Cypress yields you all of the results of the run making it possible for you to stitch together anything you want. Everything is open source. You could submit a PR to mochawesome to have them do this by default. You could output JUnit reports and then combine them, and then convert that to mochawesome. You could do any number of things to manually aggregate the results yourself, or you could just not upgrade and stay on 2.1.0 forever if you'd like.

With the demand for parallelization and faster CI runs, no matter what - we will have to solve this problem because a single machine will not have all of the details on its physical drive to stitch together the results. They will be disparate and disconnected, and thus require a service to stitch them all together. We've internally talked about this and would be happy to create a microservice as part of the CLI core to do this for you. But there's no way to deliver this as part of the core product because of the aforementioned reasons - a single machine will not have access to all of the results.

@Bkucera

or have a stable behavior

I expect to have a stable execution of one test or 100, on one machine or on 30. Stability shouldn't be an option.

@SeriousM you couldn't be more wrong, and nobody appreciates your presumptive comments.

@brian-mann I guess you missed my point and I hope I can change that:

I'm a new user of Cypress and the first-time experience was not quite as good as "advertised".

The first thing to do when I look at new technology is to read the documentation and try it in the described way.

Docker support is very poorly documented, and the Docker images don't have Cypress in them.

The "mochawesome" reporter (as mentioned in the documentation) can't work as it's supposed to (since 3.x), and junit reports need the special "[hash]" in the filename.

These points aren't documented and it took me many hours to work them out.

Don't get me wrong, I think Cypress is a very nice tool and I really like how it changes the way e2e tests are done, but the documentation and getting-started pages do not really help you after the "start ide and try it" point.

it was effectively communicated and the idea of the reporters being split up was even accounted for as a downside of doing this

how should I know that something was communicated before when I'm a new user?

You could submit a PR to mochawesome to have them do this by default.

Already discussing the problem.

or you could just not upgrade and stay on 2.1.0 forever if you'd like.

I see your "go f* yourself" here, thank you.

With the demand for parallelization and faster CI runs, no matter what - we will have to solve this problem

A problem that a small team or new project never will have for months or years.

because a single machine will not have all of the details on its physical drive to stitch together the results.

I think the decision to enforce parallel execution (thus, split reports) was not well thought through in terms of supporting all users, not just the big ones with your dashboard.

A mode to run the tests in serial on one machine to return a single report should be added.

@brian-mann: I would like to discuss these points, not to offend nor get offended with strong words. I hope you want the same.

@SeriousM

This feature-break is about promoting the dashboard rather than supporting the users.

This isn't a very polite remark. In fact, it's on the edge of being a conspiracy theory ;)

Have you ever tried to tell your boss to look at 70 reports? I was happy that they looked at a single report. And then there are the devs who have to search for the issues. This is neither good nor practical.

Those remarks got me thinking - are you using CI, or launching tests manually each time?

I think the decision to enforce parallel execution (thus, split reports) was not well thought through in terms of supporting all users, not just the big ones with your dashboard.

It might be the case that the way you are using Cypress is unorthodox to the point of trying to "swim against the current". I'd love to hear a bit more on what is your testing setup and how exactly Cypress fits in it.

This isn't a very polite remark

you're right, I'm sorry for that.

are you using CI, or launching tests manually each time

both, see below.

I'd love to hear a bit more on what is your testing setup and how exactly Cypress fits in it.

We have a list of products that have never seen any tests, and now e2e tests are the first step in creating a safety net for refactoring. My test setup is something like this:

- run the product and all needed services (db, blob, etc) in docker containers in isolation.

- run cypress in a docker container to avoid the hassle of setting up Node, npm modules and headless browsers.

- use docker-compose to start the product containers and cypress container and let cypress test the product.

- get the reports back from cypress.

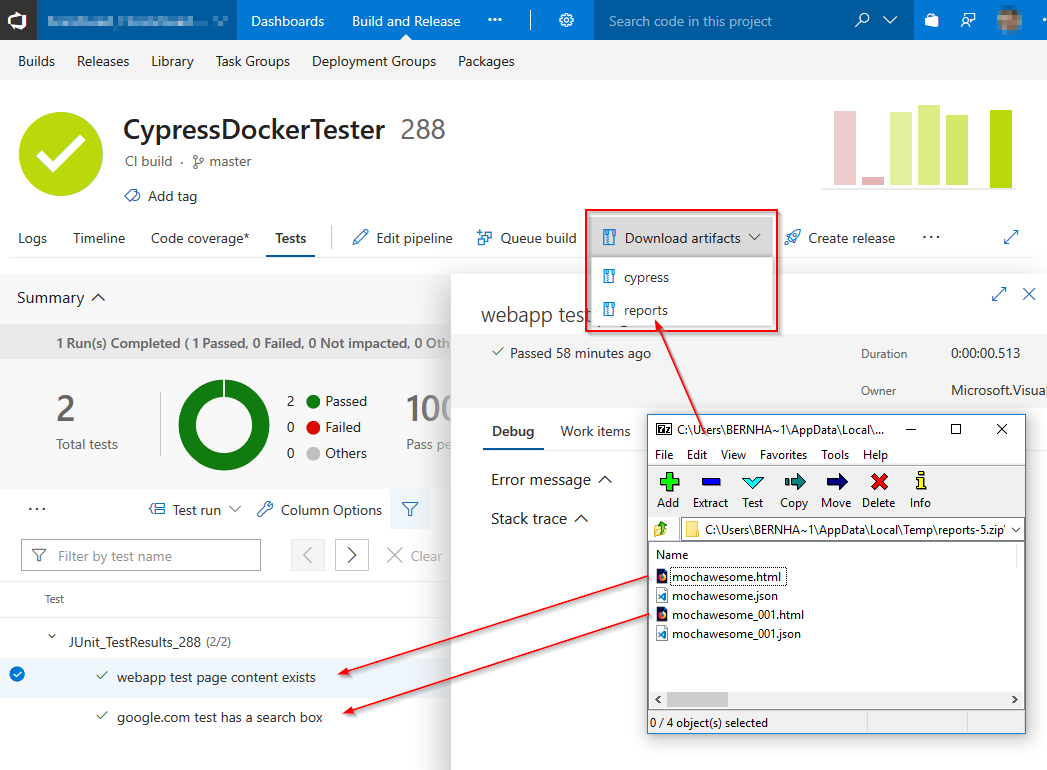

this works locally and on VSTS.

In addition for VSTS I grab the junit results and report them to the build result of VSTS.

Because VSTS doesn't care about detailed test output I expose the html report (mochawesome) along with the screenshot and video as build artifact for later use.

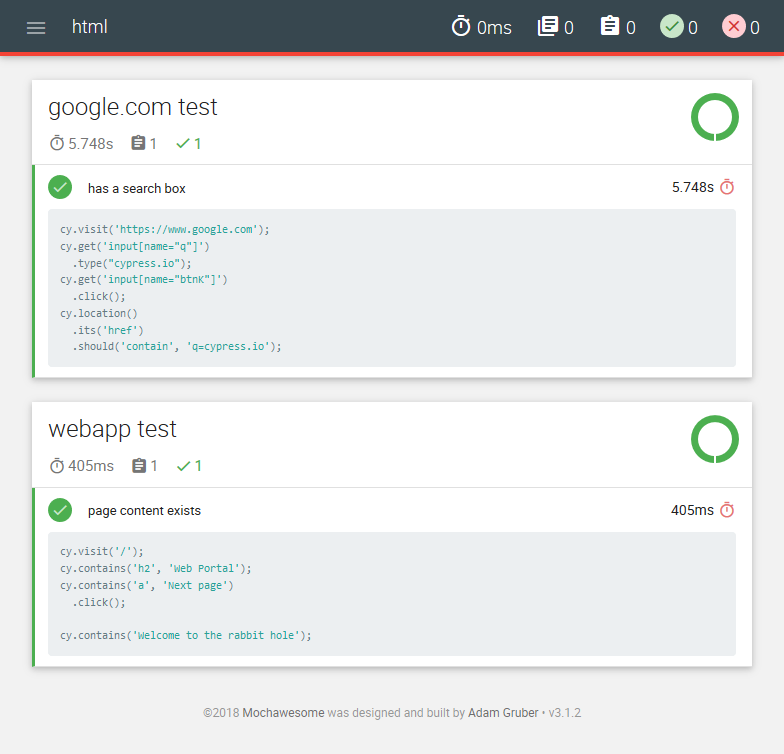

The only problem now is that the HTML report gets split by spec, so I expose N HTML reports named 001, 002, 003 (mochawesome naming style) instead of one single report. It's hard to find the one failing test among N number-named reports to see more details.

I published all work regarding docker and cypress to this repo which I initially intended to gift the community as guide for this kind of setup.

This example has two specs with one test in each. As you can see, VSTS groups the tests neither by spec nor by describe block, which makes it even harder to find the failing test in the specs.

@kamituel I hope you see now why it's necessary to have a nice html report.

Edit: here is a self-patched version of the html report which is my desired result of cypress:

Thanks, it's more clear now why it's an issue.

I'm using jUnit myself, but... would it be hard to merge all those JSON files and then generate a single HTML report? (e.g. using mochawesome-report-generator)

I'm using jUnit myself, but... would it be hard to merge all those JSON files and then generate a single HTML report?

That's actually one approach I'm trying, but it requires either changing the generator or, much more likely, stitching them together myself without supporting the whole API/file structure. I guess there won't be any other options than this.

@SeriousM

this feature is not new, it's broken instead.

...

A mode to run the tests in serial on one machine to return a single report should be added.

It seems like you've been convinced this is a feature. I don't think people would mind if you opened up an issue for that to be implemented.

Docker support is very poorly documented

These points aren't documented and it took me many hours to work them out.

I agree that Docker and reporters are some of our most poorly documented features. The documentation is open source and we're quite quick to merge recommendations made to them.

the Docker images don't have Cypress in them.

We've had back and forth discussions on including Cypress in the docker container that I recall at the time had more cons than pros for us.

We specifically factored in Docker in our latest 3.0 release and updated our CI docs.

We are now caching Cypress outside of node_modules which required an update to CI scripts to ensure you properly cache the new location.

Because we're part of the NPM ecosystem, it doesn't make sense to provide Cypress inside of the docker containers. It would mean that for every update you would have to bump the container tags. Instead we've opted to go with the grain like how every other NPM module dependency gets versioned.

In essence there is no need to recreate the dependency wheel that NPM already provides to us - and that means that the docker container experience will always match the local NPM experience (in regards to versioning).

By doing it this way, you can automatically have your CI provider cache the binary in CI, so each subsequent install is blazing fast, while also retaining the ability to upgrade Cypress versions without changing anything about your environment.

https://docs.cypress.io/guides/guides/continuous-integration.html#We-recommend-users

@SeriousM I would love to know specifically what about the Docker docs was confusing to you. We have a whole repo dedicated to this, including a nice grid showing you which ones to use, and it's referenced throughout our CI documentation. If you can provide specifics then we can update it.

@kamituel @SeriousM there are existing NPM tools that will automatically merge in JUnit reports for you such as this.

https://github.com/drazisil/junit-merge

I believe from there you can/could then convert that to any other reporter of your choice.
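If pulling in junit-merge is not an option, the idea is simple enough to sketch with Node's standard library. This is a regex-based approximation, not a real XML parser: it assumes each input is a mocha-junit-reporter style file whose `<testsuite>` elements are not nested inside each other, and it recomputes only the `tests` and `failures` totals on a new `<testsuites>` root (other attributes such as `errors` or `time` are not summed). The `Merged Tests` name and the function names are made up for illustration.

```javascript
const fs = require('fs');
const path = require('path');

// Matches a self-closing <testsuite .../> or a full <testsuite ...>...</testsuite>.
// The \b stops it from matching the <testsuites> root element.
const SUITE_RE = /<testsuite\b[^>]*?\/>|<testsuite\b[\s\S]*?<\/testsuite>/g;

// Read a numeric attribute such as tests="3" from the suite's opening tag.
function suiteAttr(suite, name) {
  const openingTag = suite.match(/^<testsuite\b[^>]*>/)[0];
  const match = openingTag.match(new RegExp(`\\b${name}="([^"]*)"`));
  return match ? Number(match[1]) || 0 : 0;
}

// Combine several JUnit XML strings into one document.
function mergeJUnit(xmlDocuments) {
  const suites = xmlDocuments.flatMap((xml) => xml.match(SUITE_RE) || []);
  const tests = suites.reduce((sum, s) => sum + suiteAttr(s, 'tests'), 0);
  const failures = suites.reduce((sum, s) => sum + suiteAttr(s, 'failures'), 0);
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    `<testsuites name="Merged Tests" tests="${tests}" failures="${failures}">`,
    ...suites,
    '</testsuites>',
    '',
  ].join('\n');
}

// Merge every *.xml file in inputDir (e.g. results-[hash].xml) into outputFile.
function mergeJUnitFiles(inputDir, outputFile) {
  const target = path.resolve(outputFile);
  const docs = fs.readdirSync(inputDir)
    .filter((name) => name.endsWith('.xml'))
    .map((name) => path.resolve(inputDir, name))
    .filter((file) => file !== target) // never re-read a previous merge result
    .sort()
    .map((file) => fs.readFileSync(file, 'utf8'));
  fs.writeFileSync(target, mergeJUnit(docs));
}

module.exports = { mergeJUnit, mergeJUnitFiles };
```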

Alternatively - you could also use our module API to get access to the entire array of run results. We don't hide anything from you. Literally every single test, its function body, the test state, correlation to hooks, etc is all in those payloads. It would be possible for you to traverse that JSON data and programmatically create any report you desire.
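As a rough illustration of walking that payload, the function below builds a per-spec summary plus a flat list of failed test titles, which is the kind of single overview asked for earlier in this thread. The result shape it reads (`runs[].spec.relative`, `runs[].tests[].title` as an array, `runs[].tests[].state`) is an assumption about the Cypress 3.x module API output; `console.log` the real object first and adjust the property names if they differ. `summarizeRuns` is a hypothetical helper.

```javascript
// Summarize the object that cypress.run() resolves with.
// ASSUMED shape: { runs: [{ spec: { relative }, tests: [{ title: [...], state }] }] }
function summarizeRuns(results) {
  const specs = [];
  const failedTests = [];
  for (const run of results.runs || []) {
    const counts = { passed: 0, failed: 0, pending: 0 };
    for (const test of run.tests || []) {
      if (Object.prototype.hasOwnProperty.call(counts, test.state)) {
        counts[test.state] += 1;
      }
      if (test.state === 'failed') {
        // title is assumed to be the describe/it chain, e.g. ['Login', 'rejects bad password']
        failedTests.push(`${run.spec.relative}: ${test.title.join(' > ')}`);
      }
    }
    specs.push({ spec: run.spec.relative, ...counts });
  }
  return { specs, failedTests };
}

// Usage with the module API (requires the cypress package):
// const cypress = require('cypress');
// cypress.run().then((results) => console.log(summarizeRuns(results)));

module.exports = { summarizeRuns };
```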

We specifically built this in to give you a way to work with the data in whatever way you wish.

It is technically possible for us to write code that effectively hacks the way mocha outputs events in order to build up a single reporter instance of all the specs. But it will be gnarly because we will still need to retain the behavior of finalizing a reporter per spec. So we'd have to have two instances of two reporters in order to do this. The problem is that two reporters can conflict with each other. They may read or write from the file system. They will both most certainly log to stdout or stderr, so we'd have to "silence" one of them. We looked into it and there's no generic way we could do this and it work in 100% of all cases. Imagine a custom reporter and having your callbacks get invoked twice!! It would be total craziness.

We think it's better in the long run (especially given that Parallelization is Almost Here™) that we handle this in a service layer outside of the Core Test Runner, since that's the only way to guarantee it will always work the way you expect it to.

As for forcing Cypress to run all of your specs together (instead of in isolation) I can say that there is no way we are going back to that model. We didn't move away from that because of the Dashboard, we moved away from it because a huge number of our users were having nondeterministic behavior in their spec files and the browser itself when running in CI.

Cypress pushes the browser to its absolute limit and due to the way garbage collection works (which is also nondeterministic) it is impossible to guarantee that runs will work the same way. It is chance and chance alone that you weren't encountering issues running that many specs together in CI. Putting each spec in its own isolated renderer process is undoubtedly the best way to go for all users. Chrome itself does this when opening new tabs, for this reason (and others).

As per one of your other comments: Cypress runs thousands of projects in its Dashboard and likely at least 2x-3x more that aren't using our service. Most of those projects are for small teams. You're really mistaken that parallelization is something that "only big users of the dashboard" will utilize. It's something that every project could use if you have more than a single spec. We anticipate virtually everyone moving over to it; it's a no-brainer. Even a run that takes 2 minutes could be reduced by 50% and only take 1 minute. Anything that speeds up CI is an improvement for every single project out there. Many of our users are 2-3 person teams that run hundreds of tests across dozens of specs that they wrote in less than 60 days.

One of the best things about being a real company is that we get to see how real users and real organizations use and scale Cypress - and we adjust our roadmap to accommodate as many people, projects, and situations as possible. If you don't record to our Dashboard service, we don't mind - we treat everyone the same way regardless and spend the majority of our time supporting free users and contributing most of our development bandwidth to the open source Test Runner. That's what we believe in and it's our mission.

Some of the changes we make result in unforeseen consequences, which is the nature of building a tool used by a large community. We've responded immediately to those concerns brought up, offered up solutions, and tried our best to explain why this change was necessary. If there are other ideas out there that get you what you want, then by all means we will always accept PR's fixing it.

Thanks @brian-mann for this very long answer!

@SeriousM I would love to know specifically what about the Docker docs was confusing to you. We have a whole repo dedicated to this, including a nice grid showing you which ones to use, and it's referenced throughout our CI documentation. If you can provide specifics then we can update it.

https://docs.cypress.io/examples/examples/docker.html

This was the first information I got about cypress and docker from google. The page doesn't have useful information beside the github link which, in turn, points to the examples page again.

The links to the CI scripts (codeship, gitlab, etc) haven't helped either because I was still on the search for "how to run it in docker".

I hadn't looked at the CI help yet because I wanted to run it locally with Docker first. I guess the title was just misleading. The CI help definitely looks more helpful than the examples page.

Because we're part of the NPM ecosystem, it doesn't make sense to provide Cypress inside of the docker containers.

I disagree with you on this point. The benefit of Docker is to have an image with all dependencies _inside_ the container, meaning it's agnostic to the hosting environment. That means I can run the container, no matter what, on every machine that has Docker installed (and has the right container architecture). Neither VSTS nor our development machines care about the Node version or npm packages, and there is no caching either.

I will create my own docker images with cypress in it, ready to roll with all dependencies in it. This way I have no install times as the image is already setup.

Alternatively - you could also use our module API to get access to the entire array of run results.

Is there documentation for it? It sounds very interesting! I'm thinking about writing a trx (Microsoft test result format) reporter that could add the screenshot and video so that the whole test experience is embedded in VSTS/TFS or maybe even Visual Studio.

As for forcing Cypress to run all of your specs together (instead of in isolation) I can say that there is no way we are going back to that model.

It's something that every project could use if you have more than a single spec.

I see your point now and I agree. Thank you for all your information.

I was a bit harsh in the beginning, maybe because the start was not as easy as I expected, combined with misleading documentation and a major version upgrade which was ahead of the documentation (reporters, ...). I'm sorry for that and I'm happy that we all found a way to get past it 👍

The module API is here. You can console.log the .then() so you can see what is output.

@SeriousM Hi Bernhard Millauer, I'm new in mochawesome-report-generator and I'm using cypress. I run cypress with this options :

```javascript
cypress.run({
  ...baseMap[bases.pop()] || {},
  spec: specs.join(),
  reporter: "../node_modules/mocha-multi-reporters/index.js",
  reporterOptions: {
    configFile: "mocha-config.json"
  }
})
  .then((results) => {
    marge.create('../submodules/auth-ui/reports/json', { reportPageTitle: 'HTML report', autoOpen: true });
    if (results.failures > 0)
      return Promise.reject("Test failed");
  })
  .catch((err) => {
    console.log("Error: " + err);
  });
```

My mocha-config.json:

```json
{
  "reporterEnabled": "mocha-junit-reporter, mochawesome",
  "mochaJunitReporterReporterOptions": {
    "mochaFile": "reports/junit/results.[hash].xml",
    "toConsole": false,
    "overwrite": false
  },
  "mochawesomeReporterOptions": {
    "reportDir": "reports/html",
    "overwrite": false,
    "html": false,
    "json": true
  }
}
```

I have several json reports in this directory

../submodules/auth-ui/reports/html

but when I execute the generated file by mochawesome-report-generator I have a blank page.

Is it possible to get one HTML report for several tests in Cypress, do you know?

Please help me, sorry for my english :)

@belimm01

Is it possible to get one HTML report for several tests in Cypress, do you know?

It's not possible, as Cypress is optimized for parallel execution and I haven't had time yet to figure out how to stitch them together, sorry.

If anyone tries running from the command line (or through package.json script), you cannot pass the [hash] param as --reporter-options argument.

Instead, you need to add the following to your cypress.json:

```json
{
  "reporter": "junit",
  "reporterOptions": {
    "mochaFile": "cypress/results/results-[hash].xml"
  }
}
```

Thanks everyone. Is there a way to pass the name of the given spec instead of the hash into the file name?

We could use mochawesome's reporterFilename, but Cypress plugins don't support changing config per file/test suite.

A quick win would be implementing in mochawesome a [file] wildcard just like jUnit's [hash].
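To see why `[hash]` avoids the overwrite, and why a spec-based name was still requested, here is a sketch of how a reporter could expand such placeholders. As far as I know, mocha-junit-reporter substitutes `[hash]` with an MD5 hash of the generated XML, so each distinct report gets its own file but the name says nothing about which spec produced it. `[file]` is the placeholder proposed above; it does not exist in mochawesome or the junit reporter, and `resolveReportPath` is a hypothetical helper.

```javascript
const crypto = require('crypto');
const path = require('path');

// Expand report filename placeholders (hypothetical helper):
//   [hash] -> MD5 of the report body, similar in spirit to mocha-junit-reporter
//   [file] -> spec file name without its final extension (proposed, not real)
function resolveReportPath(template, reportBody, specPath) {
  const hash = crypto.createHash('md5').update(reportBody).digest('hex');
  const file = path.basename(specPath, path.extname(specPath));
  // split/join replaces every occurrence without needing String#replaceAll
  return template.split('[hash]').join(hash).split('[file]').join(file);
}

module.exports = { resolveReportPath };
```

With a template like `results/[file].xml`, `login.spec.js` would always land in `results/login.spec.xml`, which is the predictable per-spec name the TeamCity setup described earlier in the thread needed; a pure `[hash]` name changes whenever the report content does.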

Looks like Cypress overwrites the report file with the result of the latest run.

I tried the workaround with [hash] and this does not help. I run Cypress via the module API.

```json
{
  "reporter": "junit",
  "reporterOptions": {
    "mochaFile": "dist/junit[hash].xml",
    "toConsole": false
  }
}
```

junit.xml always contains the result of the last suite file.

Looks like the only solution is to run Cypress once per spec file, because the reporting issue has no other workaround.

@brian-mann @jennifer-shehane Folks, could you provide a working example?

I've tried everything but still have the only last result.

@Hollander the module API does not currently support the [hash] option; you need to provide it in your cypress.json. It should be picked up when you run through the module API, however. This is the solution my team is currently using for testing during CI.

@benpolinsky Thanks! But isn't it a defect ? These guys marked it as wontfix which I can't understand.

@Hollander I would start another issue that focuses around not being able to use [hash] in the module API. The issue identified in this thread is more general, thus the Cypress team's rationale for marking it wontfix makes sense.

Any news regarding the mochawesome report? I am getting only the last report out of the whole suite.

I have mochawesome working here -> https://github.com/testdrivenio/cypress-mochawesome-s3

Essentially-

- cypress runs

- each spec generates a new report

- a custom script combines each mochawesome json file and calculates the stats

- html report is then generated
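A minimal sketch of the "combine each mochawesome json file and calculate the stats" step; it is not the script from that repository. It assumes each input has a `stats` object with numeric `suites`, `tests`, `passes`, `pending` and `failures` counters plus ISO `start`/`end` timestamps, and a `results` array of suites. That layout may differ between mochawesome versions, so inspect one of your JSON files first. Derived fields such as percentages are left out rather than guessed, and the function names are hypothetical.

```javascript
const fs = require('fs');
const path = require('path');

const COUNTERS = ['suites', 'tests', 'passes', 'pending', 'failures'];

// Merge several parsed mochawesome JSON reports into one report object.
function combineMochawesome(reports) {
  const stats = {};
  COUNTERS.forEach((key) => { stats[key] = 0; });
  const results = [];
  let start = null;
  let end = null;

  for (const report of reports) {
    for (const key of COUNTERS) {
      stats[key] += Number(report.stats[key]) || 0;
    }
    results.push(...(report.results || []));
    // ISO-8601 strings in the same format compare correctly as strings.
    if (report.stats.start && (!start || report.stats.start < start)) start = report.stats.start;
    if (report.stats.end && (!end || report.stats.end > end)) end = report.stats.end;
  }

  stats.start = start;
  stats.end = end;
  // Wall-clock span from the first spec's start to the last spec's end.
  stats.duration = start && end ? new Date(end) - new Date(start) : 0;
  return { stats, results };
}

// Read every *.json report in reportDir and return the combined report.
function combineMochawesomeDir(reportDir) {
  const reports = fs.readdirSync(reportDir)
    .filter((name) => name.endsWith('.json'))
    .sort()
    .map((name) => JSON.parse(fs.readFileSync(path.join(reportDir, name), 'utf8')));
  return combineMochawesome(reports);
}

module.exports = { combineMochawesome, combineMochawesomeDir };
```

The combined object can then be written to disk or handed to mochawesome-report-generator, whose `create()` takes report data rather than a directory path; passing a path is one plausible cause of the blank page reported earlier in this thread.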

it's a pretty annoying gotcha when using the built-in junit reporter, which you'd think would support collecting reporting from multiple tests. would be worth documenting the [hash] workaround on the reporters page: https://docs.cypress.io/guides/guides/reporters.html#

it's a pretty annoying gotcha when using the built-in `junit` reporter, which you'd think would support collecting reporting from multiple tests. would be worth documenting the `[hash]` workaround on the reporters page: https://docs.cypress.io/guides/guides/reporters.html#

I agree. The lack of a proper response and just the general attitude of the Cypress team on this issue (figure out a hacky workaround yourself) is one of the reasons I decided to go with Testcafé instead of Cypress.

Well, testcafe is $499 per developer, this is not an option for everyone out there.

My file is still being written with the literal .hash. in the filename even though I'm passing [hash] in the cypress config via Node API. This is what my code looks like:

```javascript
cypress.run({
  reporter: 'mocha-junit-reporter',
  reporterOptions: {
    mochaFile: "test-reports/test-output.[hash].xml"
  },
  config: {
    baseUrl,
    video: false
  }
})
```

What would be the reasons for this not to work as expected? Is there a workaround for this?

^ regarding my previous comment I was originally using v3.0.1. Upgrading Cypress to v3.1.3 fixed this issue for me. Issue should be fixed starting from v3.0.3. PR

Well, testcafe is $499 per developer, this is not an option for everyone out there.

Incorrect. Testcafé is free and open source: https://devexpress.github.io/testcafe/faq/#what-is-the-difference-between-a-paid-and-an-open-source-testcafe-version-what-is-testcafe-studio

It also has proper TypeScript support, supports multiple browsers and supports parallel testing.

^ regarding my previous comment I was originally using v3.0.1. Upgrading Cypress to v3.1.3 fixed this issue for me. Issue should be fixed starting from v3.0.3. PR

No, it is not fixed. I'm using Cypress v3.1.3 and I still must use [hash] in mochaFile (reporter: junit).

Hey kids, I may have code to help those not using Mochawesome.

At work, I need to generate a report of all end-to-end tests that passed/failed for audit purposes. In Protractor, I'd just put resultJsonOutputFile: 'report.json' in the configuration, and it "just worked". With Cypress, this would only work if all your tests were in one file.

So I created a custom reporter to combine them. It's not a library (yet?) but for those of you struggling to combine the reports a single reporter generates, perhaps the code can help you provide a reference on how you'd do it yourself.

https://github.com/JesterXL/cypress-report-combine-example

Specifically the combine function: https://github.com/JesterXL/cypress-report-combine-example/blob/master/reporters/combine-reports.js#L88

It:

- nukes the reports folder: `rm -rf reports`

- then runs Cypress, and our custom reporter writes a uniquely named report (for now just status, test name, and the time it took to run): `cypress run`

- when done, you'll have a reports folder full of files that have the test results for each test in JSON. To combine them, you just pass the folder name, and this script will combine 'em, then delete the interim report files:

node reporters/combine-reports.js --reportsFolder=reports

Hopefully this is helpful. I know many people are using existing reporters that broke once Cypress went isolated, but they mentioned in #1946 that they may fix this in the future.

I have released mochawesome-merge to handle merging multiple json reports. After merging the result can be supplied to mochawesome-report-generator to generate html.

@Antontelesh is there a way to clear a previous mochawesome-merge report before each test-run? So that I can see report only about the last test-run

@artemshoiko You can just exec rm -rf mochawesome-report to delete the report folder, or use rimraf npm package for this if you wish to stay cross-platform.

I personally use fs-extra to remove a folder from a JS module.
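For a cross-platform cleanup without extra dependencies, recent Node versions can do what `rm -rf` or rimraf does. A minimal sketch, assuming Node 14.14 or newer (where `fs.rmSync` exists) and the `mochawesome-report` folder name mentioned above; `cleanReports` is a hypothetical helper name.

```javascript
const fs = require('fs');

// Remove the previous report folder before a new run.
// `force: true` makes a missing folder a no-op instead of an error.
function cleanReports(dir = 'mochawesome-report') {
  fs.rmSync(dir, { recursive: true, force: true });
}

module.exports = { cleanReports };
```

Calling it at the start of the script that launches `cypress.run()` leaves only the latest run's reports for mochawesome-merge to pick up.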

Closing in favor of the proposal to add option for 1 generated report. https://github.com/cypress-io/cypress/issues/1946
