Cosmos-sdk: State load issue when applying upgrades

Summary of Bug

Software upgrades are not happening, failing with following reason

BINARY UPDATED BEFORE TRIGGER! UPGRADE "test1" - in binary but not executed on chain module=main

Version

latest: 0.38+

Steps to Reproduce

I have used wasmd for testing as it is using latest release (Gaia doens't have a release for 0.38+)

- Start Testnet (Use voting period as minimal as possible, I have used 5min window)

- Create a software upgrade proposal, Deposit, Vote -- Ensure proposal is passed

Wait for the binary to stop at expected height

Add an upgrade handler and compile a new binary

app.upgradeKeeper.SetUpgradeHandler("test1", func(ctx sdk.Context, plan upgrade.Plan) {

})

- Run the binary

---- It fails here ----

I tried to add some debug logs here (https://github.com/confio/cosmos-sdk/compare/v0.38.6...vitwit:v_38_6_1?expand=1) and found this:

The state should have the last blockheight i.e., ctx.BlockHeight() as 100 in the example but it's coming as 80

P.S: This might be causing some issues (no strong evidence though): https://github.com/cosmos/cosmos-sdk/pull/5579/

/cc @aaronc @alexanderbez @AdityaSripal

For Admin Use

- [ ] Not duplicate issue

- [ ] Appropriate labels applied

- [ ] Appropriate contributors tagged

- [ ] Contributor assigned/self-assigned

All 26 comments

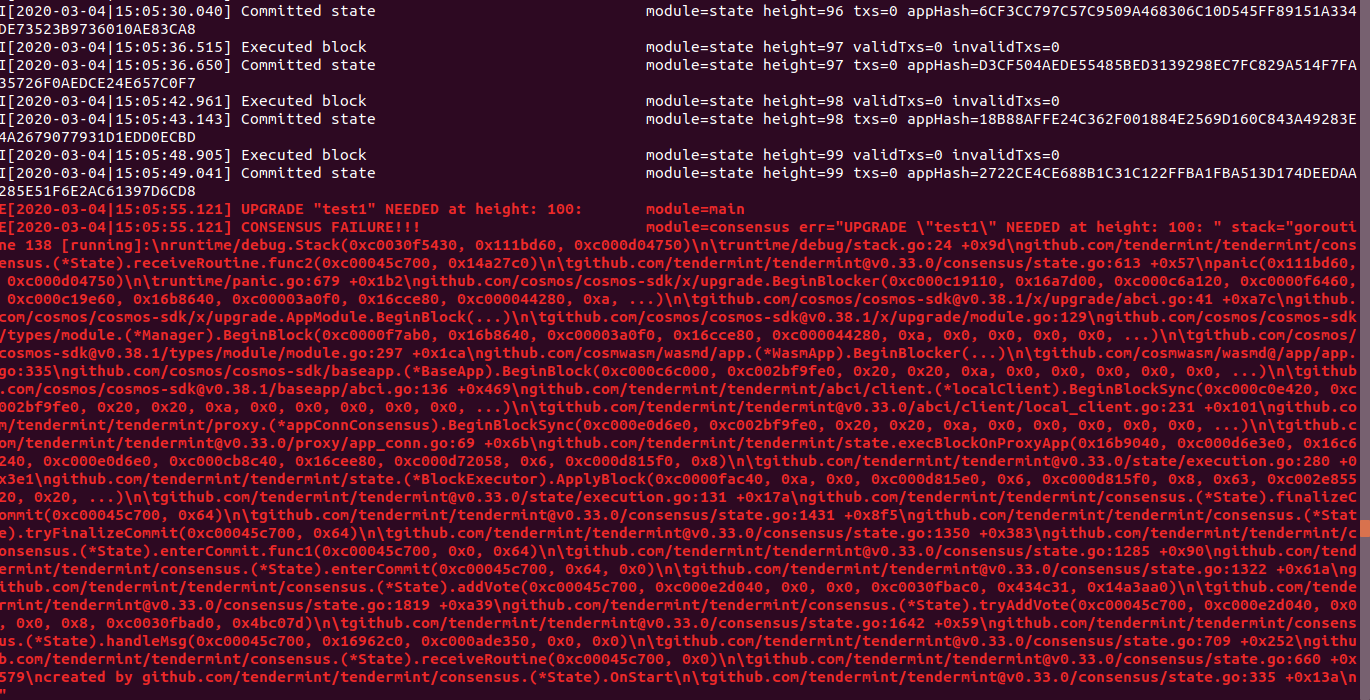

I tried to compile old binary again with these logs enabled, here is what I have. upgrade#BeginBlocker executed from 80-100th block continuously, and panic'd again at 100th height.

ShouldExecute::: Plan.Height=100, ctx.BlockHeight()=80

ShouldExecute:::p.Time.IsZero()=true, ctx.BlockTime().Before(p.Time)=false

ShouldExecute::: Plan.Height=100, ctx.BlockHeight()=81

ShouldExecute:::p.Time.IsZero()=true, ctx.BlockTime().Before(p.Time)=false

ShouldExecute::: Plan.Height=100, ctx.BlockHeight()=82

ShouldExecute:::p.Time.IsZero()=true, ctx.BlockTime().Before(p.Time)=false

ShouldExecute::: Plan.Height=100, ctx.BlockHeight()=83

ShouldExecute:::p.Time.IsZero()=true, ctx.BlockTime().Before(p.Time)=false

ShouldExecute::: Plan.Height=100, ctx.BlockHeight()=84

ShouldExecute:::p.Time.IsZero()=true, ctx.BlockTime().Before(p.Time)=false

ShouldExecute::: Plan.Height=100, ctx.BlockHeight()=85

ShouldExecute:::p.Time.IsZero()=true, ctx.BlockTime().Before(p.Time)=false

ShouldExecute::: Plan.Height=100, ctx.BlockHeight()=86

ShouldExecute:::p.Time.IsZero()=true, ctx.BlockTime().Before(p.Time)=false

ShouldExecute::: Plan.Height=100, ctx.BlockHeight()=87

ShouldExecute:::p.Time.IsZero()=true, ctx.BlockTime().Before(p.Time)=false

ShouldExecute::: Plan.Height=100, ctx.BlockHeight()=88

ShouldExecute:::p.Time.IsZero()=true, ctx.BlockTime().Before(p.Time)=false

ShouldExecute::: Plan.Height=100, ctx.BlockHeight()=89

ShouldExecute:::p.Time.IsZero()=true, ctx.BlockTime().Before(p.Time)=false

ShouldExecute::: Plan.Height=100, ctx.BlockHeight()=90

ShouldExecute:::p.Time.IsZero()=true, ctx.BlockTime().Before(p.Time)=false

ShouldExecute::: Plan.Height=100, ctx.BlockHeight()=91

ShouldExecute:::p.Time.IsZero()=true, ctx.BlockTime().Before(p.Time)=false

ShouldExecute::: Plan.Height=100, ctx.BlockHeight()=92

ShouldExecute:::p.Time.IsZero()=true, ctx.BlockTime().Before(p.Time)=false

ShouldExecute::: Plan.Height=100, ctx.BlockHeight()=93

ShouldExecute:::p.Time.IsZero()=true, ctx.BlockTime().Before(p.Time)=false

ShouldExecute::: Plan.Height=100, ctx.BlockHeight()=94

ShouldExecute:::p.Time.IsZero()=true, ctx.BlockTime().Before(p.Time)=false

ShouldExecute::: Plan.Height=100, ctx.BlockHeight()=95

ShouldExecute:::p.Time.IsZero()=true, ctx.BlockTime().Before(p.Time)=false

ShouldExecute::: Plan.Height=100, ctx.BlockHeight()=96

ShouldExecute:::p.Time.IsZero()=true, ctx.BlockTime().Before(p.Time)=false

ShouldExecute::: Plan.Height=100, ctx.BlockHeight()=97

ShouldExecute:::p.Time.IsZero()=true, ctx.BlockTime().Before(p.Time)=false

ShouldExecute::: Plan.Height=100, ctx.BlockHeight()=98

ShouldExecute:::p.Time.IsZero()=true, ctx.BlockTime().Before(p.Time)=false

ShouldExecute::: Plan.Height=100, ctx.BlockHeight()=99

ShouldExecute:::p.Time.IsZero()=true, ctx.BlockTime().Before(p.Time)=false

ShouldExecute::: Plan.Height=100, ctx.BlockHeight()=100

ShouldExecute:::p.Time.IsZero()=true, ctx.BlockTime().Before(p.Time)=false

E[2020-03-04|17:58:13.214] UPGRADE "test1" NEEDED at height: 100: module=main

panic: UPGRADE "test1" NEEDED at height: 100:

goroutine 1 [running]:

github.com/cosmos/cosmos-sdk/x/upgrade.BeginBlocker(0xc000117140, 0x16a82c0, 0xc0001cd9e0, 0xc0001181c0, 0xc000198c90, 0x16b8c00, 0xc0000c8010, 0x16cd440, 0xc001008940, 0xa, ...)

github.com/cosmos/[email protected]/x/upgrade/abci.go:41 +0xa7c

github.com/cosmos/cosmos-sdk/x/upgrade.AppModule.BeginBlock(...)

github.com/cosmos/[email protected]/x/upgrade/module.go:129

github.com/cosmos/cosmos-sdk/types/module.(*Manager).BeginBlock(0xc000118e00, 0x16b8c00, 0xc0000c8010, 0x16cd440, 0xc001008940, 0xa, 0x0, 0x0, 0x0, 0x0, ...)

github.com/cosmos/[email protected]/types/module/module.go:297 +0x1ca

github.com/cosmwasm/wasmd/app.(*WasmApp).BeginBlocker(...)

github.com/cosmwasm/wasmd@/app/app.go:339

github.com/cosmos/cosmos-sdk/baseapp.(*BaseApp).BeginBlock(0xc000338780, 0xc0020ae0a0, 0x20, 0x20, 0xa, 0x0, 0x0, 0x0, 0x0, 0x0, ...)

github.com/cosmos/[email protected]/baseapp/abci.go:136 +0x469

github.com/tendermint/tendermint/abci/client.(*localClient).BeginBlockSync(0xc000475380, 0xc0020ae0a0, 0x20, 0x20, 0xa, 0x0, 0x0, 0x0, 0x0, 0x0, ...)

github.com/tendermint/[email protected]/abci/client/local_client.go:231 +0x101

github.com/tendermint/tendermint/proxy.(*appConnConsensus).BeginBlockSync(0xc000b59290, 0xc0020ae0a0, 0x20, 0x20, 0xa, 0x0, 0x0, 0x0, 0x0, 0x0, ...)

github.com/tendermint/[email protected]/proxy/app_conn.go:69 +0x6b

github.com/tendermint/tendermint/state.execBlockOnProxyApp(0x16b9600, 0xc000eec3a0, 0x16c6800, 0xc000b59290, 0xc000e54e00, 0x16cf440, 0xc0000cc518, 0x6, 0xc000bd8ec8, 0x8)

github.com/tendermint/[email protected]/state/execution.go:280 +0x3e1

github.com/tendermint/tendermint/state.(*BlockExecutor).ApplyBlock(0xc000304d90, 0xa, 0x0, 0xc000bd8eb6, 0x6, 0xc000bd8ec8, 0x8, 0x63, 0xc000b6c520, 0x20, ...)

github.com/tendermint/[email protected]/state/execution.go:131 +0x17a

github.com/tendermint/tendermint/consensus.(*Handshaker).replayBlock(0xc0034ff0b0, 0xa, 0x0, 0xc000bd8eb6, 0x6, 0xc000bd8ec8, 0x8, 0x63, 0xc000b6c520, 0x20, ...)

github.com/tendermint/[email protected]/consensus/replay.go:475 +0x233

github.com/tendermint/tendermint/consensus.(*Handshaker).replayBlocks(0xc0034ff0b0, 0xa, 0x0, 0xc000bd8eb6, 0x6, 0xc000bd8ec8, 0x8, 0x63, 0xc000b6c520, 0x20, ...)

github.com/tendermint/[email protected]/consensus/replay.go:455 +0x3f3

github.com/tendermint/tendermint/consensus.(*Handshaker).ReplayBlocks(0xc000ed10b0, 0xa, 0x0, 0xc000bd8eb6, 0x6, 0xc000bd8ec8, 0x8, 0x63, 0xc000b6c520, 0x20, ...)

github.com/tendermint/[email protected]/consensus/replay.go:386 +0xeef

github.com/tendermint/tendermint/consensus.(*Handshaker).Handshake(0xc0034ff0b0, 0x16d0140, 0xc0001ee7e0, 0x80, 0x11653e0)

github.com/tendermint/[email protected]/consensus/replay.go:269 +0x485

github.com/tendermint/tendermint/node.doHandshake(0x16cf440, 0xc0000cc518, 0xa, 0x0, 0xc000bd8eb6, 0x6, 0xc000bd8ec8, 0x8, 0x63, 0xc000b6c520, ...)

github.com/tendermint/[email protected]/node/node.go:281 +0x19a

github.com/tendermint/tendermint/node.NewNode(0xc000e8c780, 0x16b2f00, 0xc00017c000, 0xc0010135d0, 0x16969c0, 0xc000f22120, 0xc001013800, 0x14a2bb8, 0xc001013810, 0x16b9600, ...)

github.com/tendermint/[email protected]/node/node.go:597 +0x343

github.com/cosmos/cosmos-sdk/server.startInProcess(0xc000e7e980, 0x14a33e0, 0x1d, 0x0, 0x0)

github.com/cosmos/[email protected]/server/start.go:157 +0x4c1

github.com/cosmos/cosmos-sdk/server.StartCmd.func1(0xc000e9bb80, 0x21dab20, 0x0, 0x0, 0x0, 0x0)

github.com/cosmos/[email protected]/server/start.go:67 +0xb4

github.com/spf13/cobra.(*Command).execute(0xc000e9bb80, 0x21dab20, 0x0, 0x0, 0xc000e9bb80, 0x21dab20)

github.com/spf13/[email protected]/command.go:826 +0x460

github.com/spf13/cobra.(*Command).ExecuteC(0xc000b84c80, 0x133d5d5, 0xc0001ffe90, 0x58c992)

github.com/spf13/[email protected]/command.go:914 +0x2fb

github.com/spf13/cobra.(*Command).Execute(...)

github.com/spf13/[email protected]/command.go:864

github.com/tendermint/tendermint/libs/cli.Executor.Execute(0xc000b84c80, 0x14a3800, 0x1320600, 0x10)

github.com/tendermint/[email protected]/libs/cli/setup.go:89 +0x3c

main.main()

github.com/cosmwasm/wasmd@/cmd/wasmd/main.go:72 +0x849

The state load works fine when node is started with gaiad start --prune nothing, else it's unable to load height after restart

Yes, wasmd start --pruning nothing is working

This probably ties into some of the pruning changes @AdityaSripal worked on. IIRC, versions are only persisted to disk every snapshot-every interval. Correct me if I'm wrong @AdityaSripal.

My initial thoughts are

The upgrade module should be able to programmatically interact with pruning so that a programmed upgrade block is always persisted to disk.

Let's figure out how to support this.

Yeah so this is probably a 100% blocker for the next major release and should be addressed on the current 0.38 line too.

I'm having a hard time understanding the exact problem. Can the issue be stated more precisely?

In this example, the upgrade plan passed (at some height?) and was to be executed at block 100, right? When block 100 hit, the binary halted due to a consensus failure. Not sure why, but how would the ability (or lack thereof) to load previous state give a consensus failure?

Here is what I'm imagining happens.

You set the upgrade height. The blockchain halts.

The upgrade manager goes to export for-zero-height from the height. The height isn't persisted to disk. This fails and then the upgrade doesn't proceed.

Not sure exactly why the consensus failure happens though.

The panic is expected behavior I think.

To perform the actual halt of the blockchain, the upgrade keeper simply panics which prevents the ABCI state machine

from proceeding but doesn't actually exit the process. Exiting the process can cause issues for other nodes that start

to lose connectivity with the exiting nodes, thus this module prefers to just halt but not exit.

https://github.com/cosmos/cosmos-sdk/blob/master/x/upgrade/doc.go#L99-L101

Upgrade handler checks for anyof plan.Height and plan.Time to match with ctx block time/ block height.

Relevant code here:

https://github.com/cosmos/cosmos-sdk/blob/master/x/upgrade/abci.go#L25-L26

Since, ctx.BlockHeight() and ctx.BlockTime() returning the persisted block details, which is different than expected current block, the binary is panicing.

Relevant code here:

https://github.com/cosmos/cosmos-sdk/blob/master/x/upgrade/abci.go#L60-L64

The panic is expected behavior I think.

To perform the actual halt of the blockchain, the upgrade keeper simply panics which prevents the ABCI state machine

from proceeding but doesn't actually exit the process. Exiting the process can cause issues for other nodes that start

to lose connectivity with the exiting nodes, thus this module prefers to just halt but not exit.https://github.com/cosmos/cosmos-sdk/blob/master/x/upgrade/doc.go#L99-L101

Yes, its because the upgrades should happen at exact height/time mentioned in the plan.

My initial thoughts are

The upgrade module should be able to programmatically interact with pruning so that a programmed upgrade block is always persisted to disk.

Let's figure out how to support this.

+1

Before panic'ing the old binary, we can force this. Looks straight forward but not sure if there's a way to force persist data to disk. Haven't checked the details on this.

Upgrade handler checks for anyof

plan.Heightandplan.Timeto match withctxblock time/ block height.Relevant code here:

https://github.com/cosmos/cosmos-sdk/blob/master/x/upgrade/abci.go#L25-L26Since,

ctx.BlockHeight()andctx.BlockTime()returning the persisted block details, which is different than expected current block, the binary is panicing.Relevant code here:

https://github.com/cosmos/cosmos-sdk/blob/master/x/upgrade/abci.go#L60-L64

Hmm does the process halt/panic before this code is reached and then it is only reached on restart?

Upgrade handler checks for anyof

plan.Heightandplan.Timeto match withctxblock time/ block height.

Relevant code here:

https://github.com/cosmos/cosmos-sdk/blob/master/x/upgrade/abci.go#L25-L26

and

https://github.com/cosmos/cosmos-sdk/blob/d6575137414caf80c140814b7386c269fd106605/x/upgrade/types/plan.go#L41-L47Since,

ctx.BlockHeight()andctx.BlockTime()returning the persisted block details, which is different than expected current block, the binary is panicing.

Relevant code here:

https://github.com/cosmos/cosmos-sdk/blob/master/x/upgrade/abci.go#L60-L64Hmm does the process halt/panic before this code is reached and then it is only reached on restart?

Not sure if you are asking about the new binary (upgrade release). There are two binaries (old and new).

Old binary panics as soon as it reaches the upgrade height (or time) mentioned in the plan.

https://github.com/cosmos/cosmos-sdk/blob/master/x/upgrade/abci.go#L37-L50

New binary panics if the upgrade height (or) doesn't match:

https://github.com/cosmos/cosmos-sdk/blob/master/x/upgrade/abci.go#L60-L64

I think, we should handle something here to ensure the latest block data is persisted to disk

Ok, this makes sense now. When the new binary starts, it doesn't have the height persisted it expects to pick up from, hence the panic.

So in this case, the upgrade height is 100 and for this particular node and it's pruning strategy, when the new binary starts, it doesn't have 100 (99?).

So to summarize: For any given node, the upgrade height has to be a snapshot height, otherwise, when the new binary starts, it will not exist as it was not persisted to disk.

Nodes can run in archive mode (pruning: nothing), but this is obviously not a solution.

I think, we should handle something here to ensure the latest block data is persisted to disk

I don't think this is a viable strategy either as we should not be committing state during an end/begin blocker of a module. The semantics would cause further confusion and potential technical debt.

It really does seem like the best course of action is to revert the recent IAVL changes. It's caused more headache and confusion than the potential benefits IMHO.

Hmm, so either the upgrade height has to be a snapshot height OR as @zmanian mentioned, there needs to be a way for the upgrade height to be specially persisted.

The second approach would require some changes to the iAVL, the first one would be easier to implement and enforce

cc: @alexanderbez

The second approach would require some changes to the iAVL

Can you think of a clean way of doing this?

Is there somewhere where I can track exactly what the IAVL changes were and the rationale behind them? I'm aware this has caused other issues and I assume the benefit is performance?

Likely there's some way to coordinate IAVL pruning and the upgrade height, but I wonder is there likely a larger security issue at play here? It seems like this potentially changes the block finality guarantees of cosmos chains. What if nodes operators were to all choose long persistence intervals and there was some sort of unexpected chain halt (besides just a planned upgrade), would potentially dozens or hundreds of "committed" blocks suddenly be lost?

Is there somewhere where I can track exactly what the IAVL changes were and the rationale behind them? I'm aware this has caused other issues and I assume the benefit is performance?

https://github.com/tendermint/iavl/blob/master/docs/tree/PRUNING.md#nodedb

Likely there's some way to coordinate IAVL pruning and the upgrade height, but I wonder is there likely a larger security issue at play here?

No. I can't see any.

It seems like this potentially changes the block finality guarantees of cosmos chains.

It doesn't.

What if nodes operators were to all choose long persistence intervals and there was some sort of unexpected chain halt (besides just a planned upgrade), would potentially dozens or hundreds of "committed" blocks suddenly be lost?

No, blocks would just be replayed.

I see so it just affects the state store not the tendermint block history.

Is there a way to force a write to disk? Nodes should gracefully shutdown before performing an upgrade (I'll need to double-check that https://github.com/regen-network/cosmosd uses this behavior). So theoretically a SIGINT handler that forced a persist to disk would solve this.

I see so it just affects the state store not the tendermint block history.

Correct.

Is there a way to force a write to disk? Nodes should gracefully shutdown before performing an upgrade (I'll need to double-check that https://github.com/regen-network/cosmosd uses this behavior). So theoretically a SIGINT handler that forced a persist to disk would solve this.

No, this is the crux of the problem (this issue).

Somewhat orthogonal to the issue, but relevant: https://github.com/tendermint/spec/pull/84.

Note this would theoretically make it possible for the following to be true:

What if nodes operators were to all choose long persistence intervals and there was some sort of unexpected chain halt (besides just a planned upgrade), would potentially dozens or hundreds of "committed" blocks suddenly be lost?

I have a thought for an alternative design for fixing the issue with upgrades.

Right now the ABCI interface knows how to get Tendermint to replay blocks until it's caught up with a current height. This is why restarts are safe when pruning.

The export functionality is only able to export state committed to disk.

Is the solution to actually start the ABCI application before exporting so that we just reconstruct state to the target height?

@zmanian there's no exporting with the upgrade module. It's a different design.

The problem is with the new binary trying to replay blocks that should have had their state persisted by the old binary. Some way or another we need to get the old binary to commit to disk before shutting down.

I've already spent a great deal of time into supporting manually flushing a version in IAVL -- I propose we continue with this approach or completely revise how pruning actually works.

I haven't checked in on the progress of flushing. Just had an alternative approch pop into my head and wanted to document it.

Closing this as it should no longer be an issue due to IAVL reverting it's pruning behavior.

Most helpful comment

Closing this as it should no longer be an issue due to IAVL reverting it's pruning behavior.