Core: Higher CPU continues in 0.118

The problem

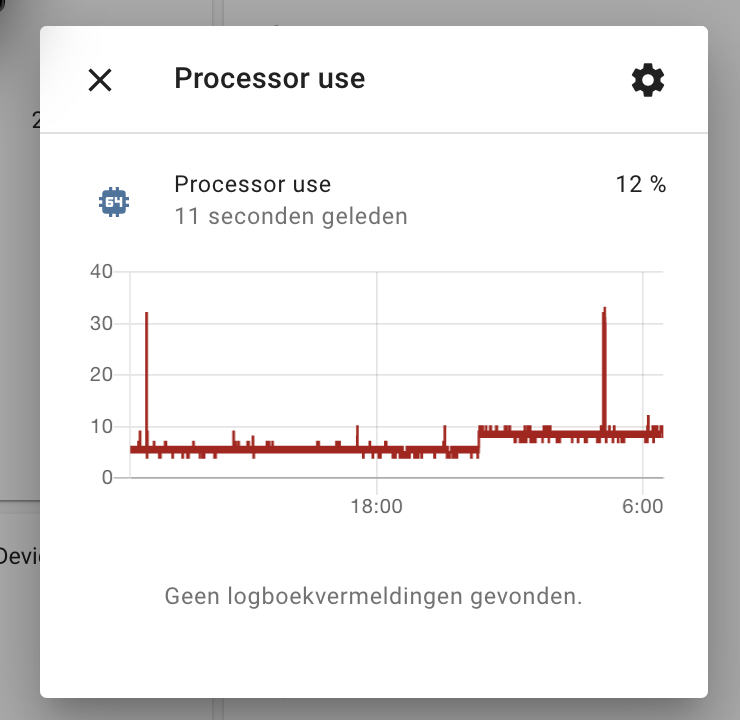

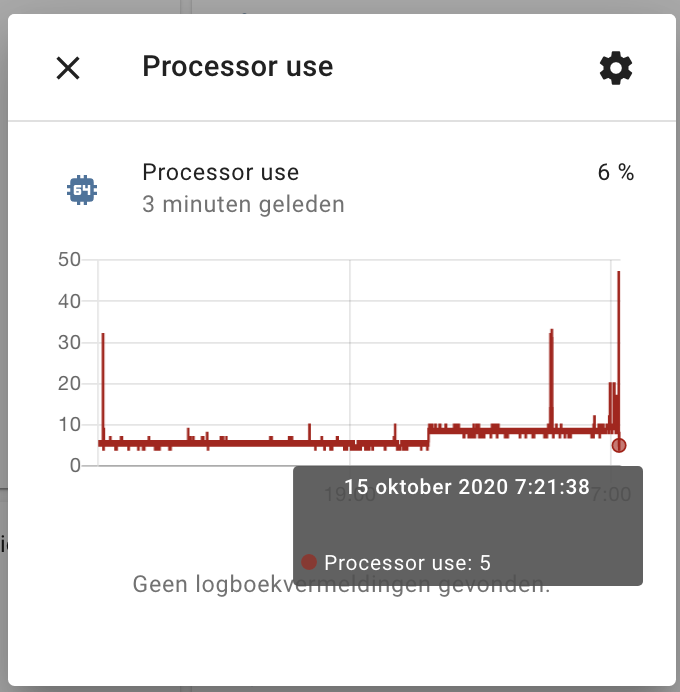

I don't know whether this is a core or frontend problem or something else, but I'm seeing higher CPU use in general, and especially when clicking on a card in the Lovelace view.

My normal CPU use was 2 to 3 %; now it is at 6 to 8 %.

When I open the history, the fans of my NUC immediately spin up and I only ever see a loading spinner.

And the CPU use peaks at about 18%

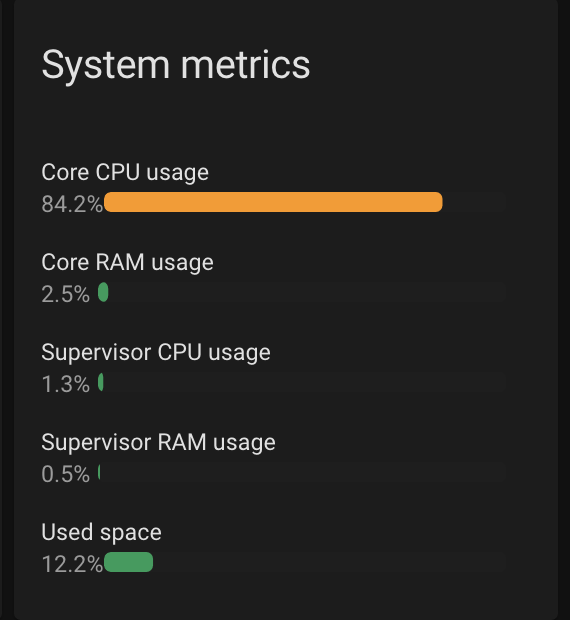

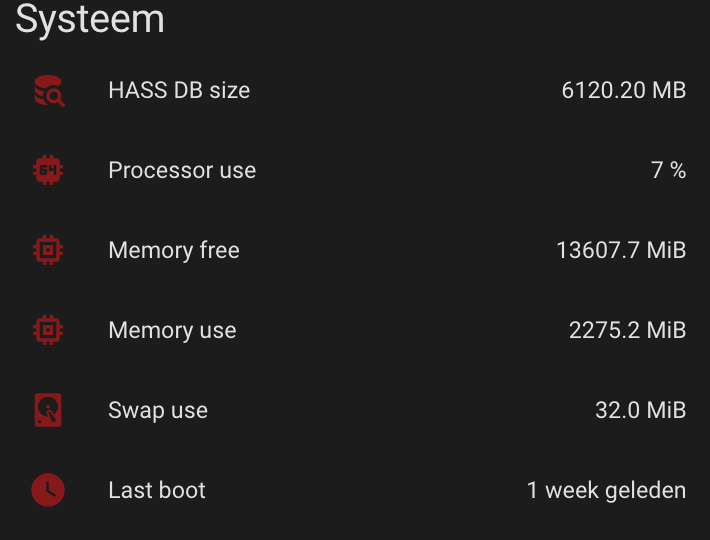

These are some screenshots from Glances taken while clicking on history in Lovelace

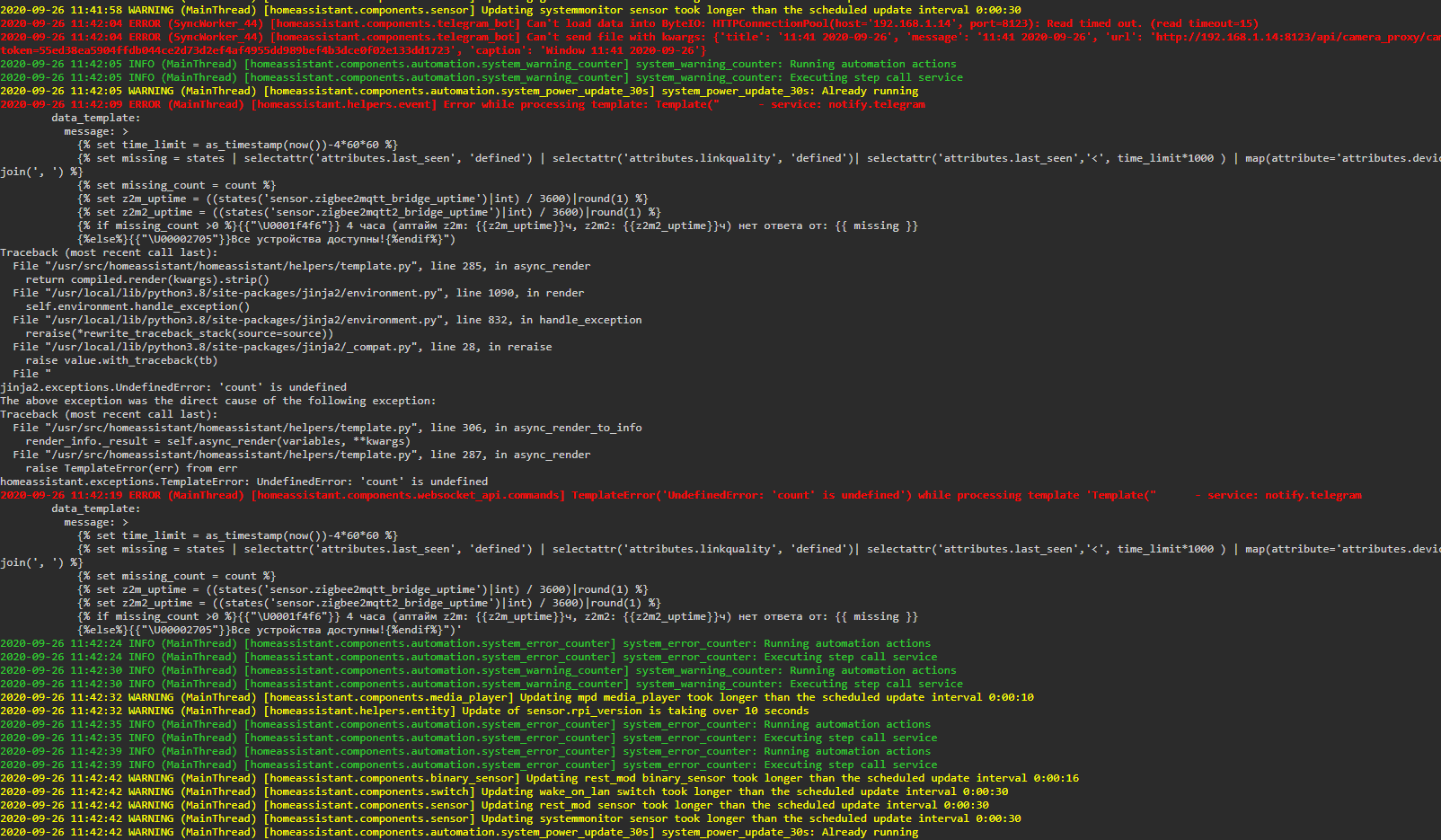

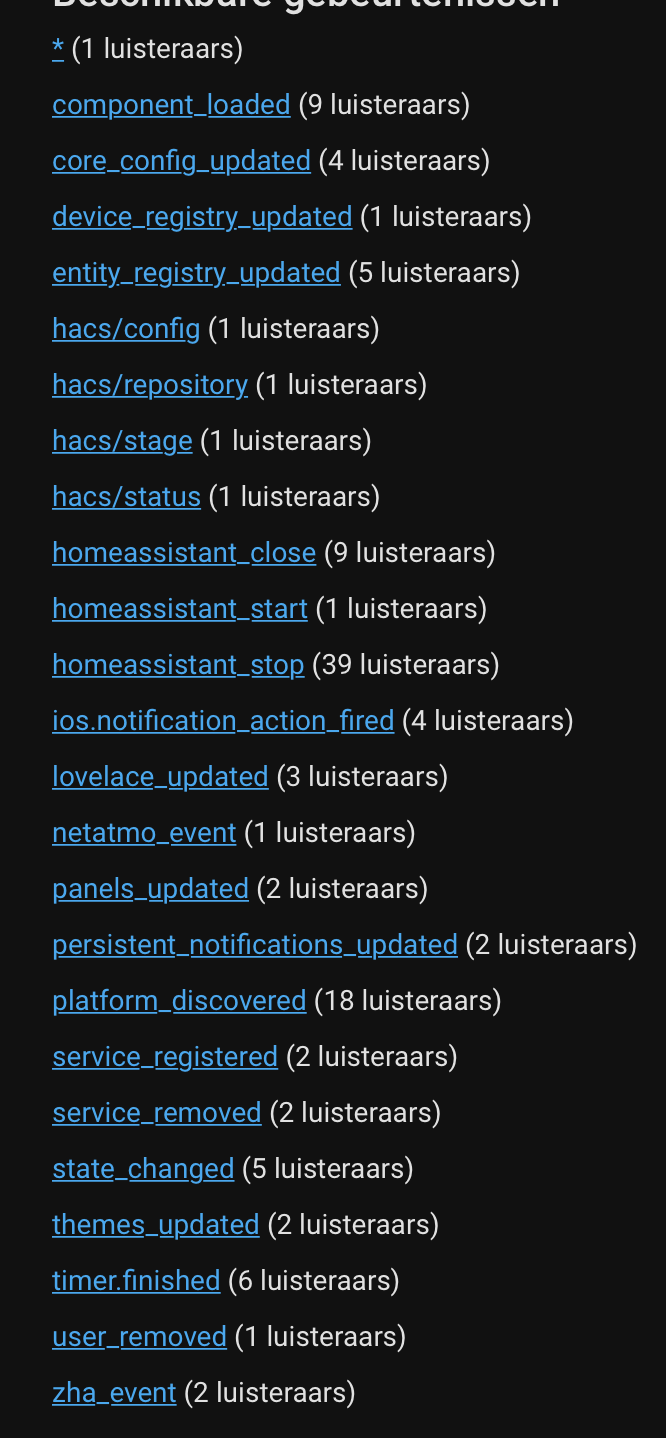

I had debug on for

logs:

homeassistant.event: debug

homeassistant.components.logger: debug

homeassistant.components.history: debug

homeassistant.components.recorder: debug

But I'm not seeing anything special.

I've tried the py-spy way, but I cannot get it installed:

Compiling addr2line v0.11.0

Running `rustc --crate-name addr2line /root/.cargo/registry/src/github.com-1ecc6299db9ec823/addr2line-0.11.0/src/lib.rs --error-format=json --json=diagnostic-rendered-ansi,artifacts --crate-type lib --emit=dep-info,metadata,link -C opt-level=3 --cfg 'feature="cpp_demangle"' --cfg 'feature="default"' --cfg 'feature="object"' --cfg 'feature="rustc-demangle"' --cfg 'feature="std"' --cfg 'feature="std-object"' -C metadata=361484a7c1298d46 -C extra-filename=-361484a7c1298d46 --out-dir /tmp/cargo-installWui9CK/release/deps -L dependency=/tmp/cargo-installWui9CK/release/deps --extern cpp_demangle=/tmp/cargo-installWui9CK/release/deps/libcpp_demangle-eb3cfddebaa342f3.rmeta --extern fallible_iterator=/tmp/cargo-installWui9CK/release/deps/libfallible_iterator-a4123992588425b5.rmeta --extern gimli=/tmp/cargo-installWui9CK/release/deps/libgimli-7d5edb096fedfa90.rmeta --extern lazycell=/tmp/cargo-installWui9CK/release/deps/liblazycell-ea0ac196d0b3629c.rmeta --extern object=/tmp/cargo-installWui9CK/release/deps/libobject-477a467cbf075a12.rmeta --extern rustc_demangle=/tmp/cargo-installWui9CK/release/deps/librustc_demangle-1fe779301a7b7227.rmeta --extern smallvec=/tmp/cargo-installWui9CK/release/deps/libsmallvec-9bd83c2162524722.rmeta --cap-lints allow`

Running `rustc --crate-name remoteprocess /root/.cargo/registry/src/github.com-1ecc6299db9ec823/remoteprocess-0.3.4/src/lib.rs --error-format=json --json=diagnostic-rendered-ansi,artifacts --crate-type lib --emit=dep-info,metadata,link -C opt-level=3 --cfg 'feature="default"' --cfg 'feature="unwind"' -C metadata=2427f6658e4e467c -C extra-filename=-2427f6658e4e467c --out-dir /tmp/cargo-installWui9CK/release/deps -L dependency=/tmp/cargo-installWui9CK/release/deps --extern addr2line=/tmp/cargo-installWui9CK/release/deps/libaddr2line-361484a7c1298d46.rmeta --extern benfred_read_process_memory=/tmp/cargo-installWui9CK/release/deps/libbenfred_read_process_memory-e58b01e0194ad895.rmeta --extern goblin=/tmp/cargo-installWui9CK/release/deps/libgoblin-f7d9a003d2b2b860.rmeta --extern lazy_static=/tmp/cargo-installWui9CK/release/deps/liblazy_static-dda545747076ca2e.rmeta --extern libc=/tmp/cargo-installWui9CK/release/deps/liblibc-d6a517a54d66530e.rmeta --extern log=/tmp/cargo-installWui9CK/release/deps/liblog-33247e2f897fd775.rmeta --extern memmap=/tmp/cargo-installWui9CK/release/deps/libmemmap-d558b4e09fe43210.rmeta --extern nix=/tmp/cargo-installWui9CK/release/deps/libnix-003b039a35e9d039.rmeta --extern object=/tmp/cargo-installWui9CK/release/deps/libobject-477a467cbf075a12.rmeta --extern proc_maps=/tmp/cargo-installWui9CK/release/deps/libproc_maps-af79a59c980f3ade.rmeta --extern regex=/tmp/cargo-installWui9CK/release/deps/libregex-bc89e9f6ea47428c.rmeta --cap-lints allow -L native=/usr/local/lib -l static=unwind -l static=unwind-ptrace -l static=unwind-x86_64`

Running `rustc --crate-name py_spy /root/.cargo/registry/src/github.com-1ecc6299db9ec823/py-spy-0.3.3/src/lib.rs --error-format=json --json=diagnostic-rendered-ansi --crate-type lib --emit=dep-info,metadata,link -C opt-level=3 -C metadata=ff12da3be12bb5c3 -C extra-filename=-ff12da3be12bb5c3 --out-dir /tmp/cargo-installWui9CK/release/deps -L dependency=/tmp/cargo-installWui9CK/release/deps --extern clap=/tmp/cargo-installWui9CK/release/deps/libclap-f6bf72e393cc38bc.rmeta --extern console=/tmp/cargo-installWui9CK/release/deps/libconsole-f112d37c0f47569f.rmeta --extern cpp_demangle=/tmp/cargo-installWui9CK/release/deps/libcpp_demangle-eb3cfddebaa342f3.rmeta --extern ctrlc=/tmp/cargo-installWui9CK/release/deps/libctrlc-aad786bac40b3a9d.rmeta --extern env_logger=/tmp/cargo-installWui9CK/release/deps/libenv_logger-e27ea58ddced9d00.rmeta --extern failure=/tmp/cargo-installWui9CK/release/deps/libfailure-3335c4e883f54b39.rmeta --extern goblin=/tmp/cargo-installWui9CK/release/deps/libgoblin-f7d9a003d2b2b860.rmeta --extern indicatif=/tmp/cargo-installWui9CK/release/deps/libindicatif-225faf62a9b3f910.rmeta --extern inferno=/tmp/cargo-installWui9CK/release/deps/libinferno-d5bd4adb491d743b.rmeta --extern lazy_static=/tmp/cargo-installWui9CK/release/deps/liblazy_static-dda545747076ca2e.rmeta --extern libc=/tmp/cargo-installWui9CK/release/deps/liblibc-d6a517a54d66530e.rmeta --extern log=/tmp/cargo-installWui9CK/release/deps/liblog-33247e2f897fd775.rmeta --extern lru=/tmp/cargo-installWui9CK/release/deps/liblru-7c22ae3502192a2e.rmeta --extern memmap=/tmp/cargo-installWui9CK/release/deps/libmemmap-d558b4e09fe43210.rmeta --extern proc_maps=/tmp/cargo-installWui9CK/release/deps/libproc_maps-af79a59c980f3ade.rmeta --extern rand=/tmp/cargo-installWui9CK/release/deps/librand-3b4eb2183b20dcb0.rmeta --extern rand_distr=/tmp/cargo-installWui9CK/release/deps/librand_distr-9984e8ecbf04f38f.rmeta --extern regex=/tmp/cargo-installWui9CK/release/deps/libregex-bc89e9f6ea47428c.rmeta --extern 
remoteprocess=/tmp/cargo-installWui9CK/release/deps/libremoteprocess-2427f6658e4e467c.rmeta --extern serde=/tmp/cargo-installWui9CK/release/deps/libserde-64b415a5891575a4.rmeta --extern serde_derive=/tmp/cargo-installWui9CK/release/deps/libserde_derive-87637e81ef141bef.so --extern serde_json=/tmp/cargo-installWui9CK/release/deps/libserde_json-8b40eb8bd5b4f85c.rmeta --extern tempfile=/tmp/cargo-installWui9CK/release/deps/libtempfile-2085f7c84ee47d2e.rmeta --extern termios=/tmp/cargo-installWui9CK/release/deps/libtermios-776eb0ed1fc2710a.rmeta --cap-lints allow --cfg unwind -L native=/usr/local/lib`

error: could not find native static library `unwind`, perhaps an -L flag is missing?

error: aborting due to previous error

error: could not compile `remoteprocess`.

Caused by:

process didn't exit successfully: `rustc --crate-name remoteprocess /root/.cargo/registry/src/github.com-1ecc6299db9ec823/remoteprocess-0.3.4/src/lib.rs --error-format=json --json=diagnostic-rendered-ansi,artifacts --crate-type lib --emit=dep-info,metadata,link -C opt-level=3 --cfg 'feature="default"' --cfg 'feature="unwind"' -C metadata=2427f6658e4e467c -C extra-filename=-2427f6658e4e467c --out-dir /tmp/cargo-installWui9CK/release/deps -L dependency=/tmp/cargo-installWui9CK/release/deps --extern addr2line=/tmp/cargo-installWui9CK/release/deps/libaddr2line-361484a7c1298d46.rmeta --extern benfred_read_process_memory=/tmp/cargo-installWui9CK/release/deps/libbenfred_read_process_memory-e58b01e0194ad895.rmeta --extern goblin=/tmp/cargo-installWui9CK/release/deps/libgoblin-f7d9a003d2b2b860.rmeta --extern lazy_static=/tmp/cargo-installWui9CK/release/deps/liblazy_static-dda545747076ca2e.rmeta --extern libc=/tmp/cargo-installWui9CK/release/deps/liblibc-d6a517a54d66530e.rmeta --extern log=/tmp/cargo-installWui9CK/release/deps/liblog-33247e2f897fd775.rmeta --extern memmap=/tmp/cargo-installWui9CK/release/deps/libmemmap-d558b4e09fe43210.rmeta --extern nix=/tmp/cargo-installWui9CK/release/deps/libnix-003b039a35e9d039.rmeta --extern object=/tmp/cargo-installWui9CK/release/deps/libobject-477a467cbf075a12.rmeta --extern proc_maps=/tmp/cargo-installWui9CK/release/deps/libproc_maps-af79a59c980f3ade.rmeta --extern regex=/tmp/cargo-installWui9CK/release/deps/libregex-bc89e9f6ea47428c.rmeta --cap-lints allow -L native=/usr/local/lib -l static=unwind -l static=unwind-ptrace -l static=unwind-x86_64` (exit code: 1)

warning: build failed, waiting for other jobs to finish...

error: failed to compile `py-spy v0.3.3`, intermediate artifacts can be found at `/tmp/cargo-installWui9CK`

Caused by:

build failed

bash-5.0# # pip install py-spy

/bin/ash: pip: not found

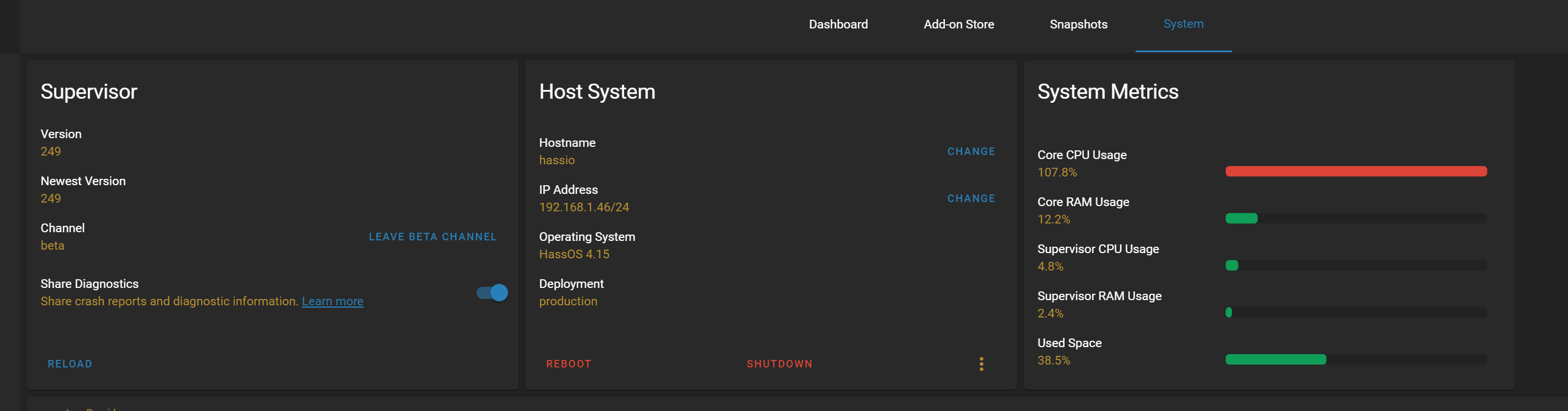

Environment

- Home Assistant Core release with the issue: 0.115

- Last working Home Assistant Core release (if known): 0.114.x

- Operating environment (OS/Container/Supervised/Core): OS

- Integration causing this issue: ?

- Link to integration documentation on our website: ?

arch | x86_64
-- | --
chassis | embedded
dev | false
docker | true
docker_version | 19.03.11
hassio | true
host_os | HassOS 4.13
installation_type | Home Assistant OS
os_name | Linux
os_version | 5.4.63
python_version | 3.8.5
supervisor | 245
timezone | Europe/Brussels
version | 0.115.1
virtualenv | false

Problem-relevant configuration.yaml

I don't know

Traceback/Error logs

None

Additional information

If you need some more info, ask...

All 277 comments

When not using the frontend, the fans of my NUC spin up about once every 50-60 seconds.

This is a screenshot of Glances at the moment this happens.

Could be related to this issue #39890

There isn't much we can do to help without a py-spy or interesting logs.

Maybe you can tell me how to install it?

I've tried

https://developers.home-assistant.io/docs/operating-system/debugging/#ssh-access-to-the-host

and then

apk add cargo

cargo install py-spy

There isn't much we can do to help without a py-spy or interesting logs.

Which debug logs do you need?

I've tried

logs:

homeassistant.event: debug

homeassistant.components.logger: debug

homeassistant.components.history: debug

homeassistant.components.recorder: debug

Try turning on full debug mode (default: debug) and see if there is anything interesting. Without a py-spy to narrow the issue down, it's not possible to give any more guidance on which area to look at.

Is there a way to send you this debug log?

By the way, I've tried another two times to install py-spy; it always fails with:

error: could not find native static library `unwind`, perhaps an -L flag is missing?

error: aborting due to previous error

error: could not compile `remoteprocess`.

To learn more, run the command again with --verbose.

warning: build failed, waiting for other jobs to finish...

error: failed to compile `py-spy v0.3.3`, intermediate artifacts can be found at `/tmp/cargo-installNtWBUG`

Caused by:

build failed

If you want to send it privately, [email protected] would be fine.


On Sep 19, 2020, at 9:11 AM, Giel Janssens notifications@github.com wrote:

Is there a way to send you this debug log?


I've sent you a WeTransfer link because the .log file is 100 MB

2020-09-19 16:16:05 DEBUG (MainThread) [homeassistant.helpers.event] Template update {% if states('sensor.uptime_in_uren')|float > 0.05|float %}

{{states|selectattr('state', 'in', ['unavailable','unknown','none'])

|reject('in', expand('group.entity_blacklist'))

|reject('eq', states.group.entity_blacklist)

|list|length}}

{% else %}

0

{% endif %}

Try disabling this template, as it's watching all states and you have a massive number of state changed events happening.

You could replace it with an automation that only runs every few seconds, and that should help
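To illustrate why evaluating on a timer is cheaper than evaluating on every event, here is a minimal Python sketch of the general idea. It is not Home Assistant code: the `ThrottledValue` class, its `on_state_changed` method, and the injected `clock` are hypothetical names used only for this illustration.

```python
import time
from typing import Callable, Optional


class ThrottledValue:
    """Recompute an expensive value at most once per `interval` seconds.

    Hypothetical helper for illustration; not part of Home Assistant.
    """

    def __init__(
        self,
        compute: Callable[[], int],
        interval: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._compute = compute
        self._interval = interval
        self._clock = clock
        self._last_run: Optional[float] = None
        self.value: Optional[int] = None
        self.runs = 0  # how many times the expensive function actually ran

    def on_state_changed(self) -> None:
        """Called for every event; recomputes only when the interval has elapsed."""
        now = self._clock()
        if self._last_run is None or now - self._last_run >= self._interval:
            self.value = self._compute()
            self._last_run = now
            self.runs += 1
```

With roughly 300 events per second, an unthrottled template would be re-evaluated about 300 times per second, whereas a 5-second interval brings that down to one evaluation every 5 seconds regardless of the event rate.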

Also change

{{ states.sensor | list | length }}

to

{{ states.sensor | count }}

This will avoid creating a list every time a sensor updates
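The difference can be sketched in plain Python. In Jinja2, `count` is an alias of `length`, which calls `len()` on the value, while `list` first iterates the value into a new list. The class below is a hypothetical stand-in for an object like `states.sensor`; it assumes the real object supports `len()` directly, which is what makes `| count` cheaper.

```python
from typing import Dict, Iterator


class DomainStatesSketch:
    """Hypothetical stand-in for a per-domain view such as `states.sensor`."""

    def __init__(self, states: Dict[str, str], domain: str) -> None:
        self._states = states
        self._prefix = domain + "."
        self.objects_built = 0  # counts per-entity objects created by iteration

    def __iter__(self) -> Iterator[dict]:
        # Iterating builds one object per matching entity.
        for entity_id, state in self._states.items():
            if entity_id.startswith(self._prefix):
                self.objects_built += 1
                yield {"entity_id": entity_id, "state": state}

    def __len__(self) -> int:
        # Counting only needs the entity ids, not an object per entity.
        return sum(1 for entity_id in self._states if entity_id.startswith(self._prefix))


states = {"sensor.a": "1", "sensor.b": "2", "light.c": "on"}
view = DomainStatesSketch(states, "sensor")

via_list = len(list(view))          # what `| list | length` does
built_by_list = view.objects_built  # objects created just to be counted

view.objects_built = 0
via_count = len(view)               # what `| count` does
built_by_count = view.objects_built
```

Both paths return the same number; only the list path creates a temporary object for every sensor each time the template is rendered.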

2020-09-19 16:16:05 DEBUG (MainThread) [homeassistant.helpers.event] Template update {% if states('sensor.uptime_in_uren')|float > 0.05|float %}

{{states|selectattr('state', 'in', ['unavailable','unknown','none'])

|reject('in', expand('group.entity_blacklist'))

|reject('eq', states.group.entity_blacklist)

|list|length}}

{% else %}

0

{% endif %}

I tried that yesterday and there was no difference, so I turned it back on...

But I will turn that into an automation...

{{ states.device_tracker | list | length }} as well

I can tell you what I see is inefficient, but I'm pretty much shooting in the dark without a py-spy.

I'll try to change that all.

Py-Spy, I did try to install another 2 times....

bdraco@Js-MacBook-Pro-3 ~ % grep 'state_changed' home-assistant\ \(1\).log | grep '16:14:40'|wc

315 10547 187923

bdraco@Js-MacBook-Pro-3 ~ % grep 'state_changed' home-assistant\ \(1\).log | grep '16:14:41'|wc

241 7817 135811

bdraco@Js-MacBook-Pro-3 ~ % grep 'state_changed' home-assistant\ \(1\).log | grep '16:14:42'|wc

355 16331 251219

bdraco@Js-MacBook-Pro-3 ~ % grep 'state_changed' home-assistant\ \(1\).log | grep '16:14:43'|wc

259 8447 146329

bdraco@Js-MacBook-Pro-3 ~ % grep 'state_changed' home-assistant\ \(1\).log | grep '16:14:44'|wc

291 9860 176954

It looks like you are getting about 300 state changed events per second 😮
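The per-second `grep | wc` runs above can be done in one pass. This is a small Python sketch, assuming each log line starts with a `YYYY-MM-DD HH:MM:SS` timestamp as in the excerpts in this thread. The function name `state_changed_per_second` is made up for this example. Unlike the `grep` commands, it only looks at the timestamp at the start of the line.

```python
import re
from collections import Counter
from typing import Iterable

# Timestamp at the start of a Home Assistant log line, e.g. "2020-09-19 16:14:40".
TIMESTAMP = re.compile(r"^(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2})")


def state_changed_per_second(lines: Iterable[str]) -> Counter:
    """Count log lines mentioning state_changed, grouped by second."""
    counts: Counter = Counter()
    for line in lines:
        if "state_changed" not in line:
            continue
        match = TIMESTAMP.match(line)
        if match:
            counts[match.group(1)] += 1
    return counts


def busiest_seconds(path: str, top: int = 5):
    """Return the `top` seconds with the most state_changed lines in a log file."""
    with open(path, encoding="utf-8", errors="replace") as handle:
        return state_changed_per_second(handle).most_common(top)
```

Calling `busiest_seconds("home-assistant.log")` (the path is a placeholder) lists the seconds with the highest event rate, which makes bursts easier to spot than checking one second at a time.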

I'd focus on reducing the state change events to only what you need.

I'd focus on reducing the state change events to only what you need.

How do you mean? Delete some sensors?

In 0.114.x I haven't seen these problems...

I have disabled my unavailable sensor and replaced every `| list | length }}` with `| count }}`, and

When not using the frontend, the fans of my NUC spin up about once every 50-60 seconds.

this appears to be less frequent

I have found this

2020-09-19 16:42:46 23 [Warning] Aborted connection 23 to db: 'homeassistant' user: 'hass' host: '172.30.32.1' (Got an error reading communication packets)

2020-09-19 17:58:12 35 [Warning] Aborted connection 35 to db: 'homeassistant' user: 'hass' host: '172.30.32.1' (Got an error reading communication packets)

in the logs from the MariaDB addon

I'd focus on reducing the state change events to only what you need.

How do you mean? Delete some sensors?

In 0.114.x I haven't seen these problems...

If you disable all your template entities, does the issue go away?

I think I removed all template sensors and template binary sensors

Restarting HA still takes a long time

CPU is still higher than in 0.114.x

And when I click something in the frontend

So I restored all my templates...

It is likely the mysql load is caused by the processing of all those state changed events.

I'm not sure what has changed between 0.114 and 0.115 that would cause that increase. It may be caused by a change in the integration that is generating these events.

error: could not find native static library `unwind`, perhaps an -L flag is missing?

I get the same error with cargo. However, this worked for me inside a 0.115.1 Container install:

echo 'manylinux1_compatible = True' > /usr/local/lib/python3.8/site-packages/_manylinux.py

pip install py-spy

py-spy record --pid $(pidof python3) --duration 120 --output /config/www/spy.svg

(I did have to add the SYS_PTRACE capability to the container but I assume this is handled already in the OS setup.)
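If the recording needs to be repeated, the `py-spy record` call above could be wrapped in a few lines of Python. This is only a sketch: the helper names `build_record_command` and `record` are made up here, and the only flags used are the ones shown in the command above (`--pid`, `--duration`, `--output`).

```python
import shutil
import subprocess
from typing import List


def build_record_command(pid: int, duration_s: int, output: str) -> List[str]:
    """Build the argument list for `py-spy record`, using only the flags shown above."""
    if pid <= 0 or duration_s <= 0:
        raise ValueError("pid and duration_s must be positive")
    return [
        "py-spy", "record",
        "--pid", str(pid),
        "--duration", str(duration_s),
        "--output", output,
    ]


def record(pid: int, duration_s: int, output: str) -> int:
    """Run py-spy and return its exit code; fails early if py-spy is not installed."""
    if shutil.which("py-spy") is None:
        raise RuntimeError("py-spy is not on PATH")
    completed = subprocess.run(build_record_command(pid, duration_s, output), check=False)
    return completed.returncode
```

Passing the PID explicitly avoids relying on `pidof python3` returning exactly one process.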

bash-5.0# py-spy dump --pid 232

Process 232: python3 -m homeassistant --config /config

Python v3.8.5 (/usr/local/bin/python3.8)

Thread 232 (idle): "MainThread"

select (selectors.py:468)

_run_once (asyncio/base_events.py:1823)

run_forever (asyncio/base_events.py:570)

run_until_complete (asyncio/base_events.py:603)

run (asyncio/runners.py:43)

run (homeassistant/runner.py:133)

main (homeassistant/__main__.py:312)

<module> (homeassistant/__main__.py:320)

_run_code (runpy.py:87)

_run_module_as_main (runpy.py:194)

Thread 254 (idle): "Thread-1"

dequeue (logging/handlers.py:1427)

_monitor (logging/handlers.py:1478)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 255 (idle): "SyncWorker_0"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 256 (idle): "SyncWorker_1"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 257 (idle): "SyncWorker_2"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 258 (idle): "SyncWorker_3"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 259 (idle): "SyncWorker_4"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 260 (idle): "SyncWorker_5"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 263 (idle): "SyncWorker_6"

query (MySQLdb/connections.py:259)

_query (MySQLdb/cursors.py:319)

execute (MySQLdb/cursors.py:206)

do_execute (sqlalchemy/engine/default.py:593)

_execute_context (sqlalchemy/engine/base.py:1276)

_execute_clauseelement (sqlalchemy/engine/base.py:1124)

_execute_on_connection (sqlalchemy/sql/elements.py:298)

execute (sqlalchemy/engine/base.py:1011)

_execute_and_instances (sqlalchemy/orm/query.py:3533)

__iter__ (sqlalchemy/orm/query.py:3508)

yield_events (homeassistant/components/logbook/__init__.py:418)

humanify (homeassistant/components/logbook/__init__.py:251)

_get_events (homeassistant/components/logbook/__init__.py:516)

json_events (homeassistant/components/logbook/__init__.py:228)

run (concurrent/futures/thread.py:57)

_worker (concurrent/futures/thread.py:80)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 264 (idle): "SyncWorker_7"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 265 (idle): "SyncWorker_8"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 266 (idle): "SyncWorker_9"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 267 (idle): "SyncWorker_10"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 268 (idle): "SyncWorker_11"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 269 (idle): "SyncWorker_12"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 270 (idle): "SyncWorker_13"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 271 (idle): "SyncWorker_14"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 272 (idle): "SyncWorker_15"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 273 (idle): "SyncWorker_16"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 274 (idle): "SyncWorker_17"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 275 (idle): "SyncWorker_18"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 276 (idle): "SyncWorker_19"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 277 (idle): "SyncWorker_20"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 278 (idle): "SyncWorker_21"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 279 (idle): "SyncWorker_22"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 280 (idle): "Recorder"

run (homeassistant/components/recorder/__init__.py:346)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 281 (idle): "Thread-2"

dequeue (logging/handlers.py:1427)

_monitor (logging/handlers.py:1478)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 284 (idle): "zeroconf-Engine-284"

run (zeroconf/__init__.py:1325)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 285 (idle): "zeroconf-Reaper_285"

wait (threading.py:306)

run (zeroconf/__init__.py:1451)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 286 (idle): "SyncWorker_23"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 287 (idle): "SyncWorker_24"

query (MySQLdb/connections.py:259)

_query (MySQLdb/cursors.py:319)

execute (MySQLdb/cursors.py:206)

do_execute (sqlalchemy/engine/default.py:593)

_execute_context (sqlalchemy/engine/base.py:1276)

_execute_clauseelement (sqlalchemy/engine/base.py:1124)

_execute_on_connection (sqlalchemy/sql/elements.py:298)

execute (sqlalchemy/engine/base.py:1011)

_execute_and_instances (sqlalchemy/orm/query.py:3533)

__iter__ (sqlalchemy/orm/query.py:3508)

yield_events (homeassistant/components/logbook/__init__.py:418)

humanify (homeassistant/components/logbook/__init__.py:251)

_get_events (homeassistant/components/logbook/__init__.py:516)

json_events (homeassistant/components/logbook/__init__.py:228)

run (concurrent/futures/thread.py:57)

_worker (concurrent/futures/thread.py:80)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 288 (idle): "SyncWorker_25"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 289 (idle): "SyncWorker_26"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 290 (idle): "SyncWorker_27"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 291 (idle): "SyncWorker_28"

query (MySQLdb/connections.py:259)

_query (MySQLdb/cursors.py:319)

execute (MySQLdb/cursors.py:206)

do_execute (sqlalchemy/engine/default.py:593)

_execute_context (sqlalchemy/engine/base.py:1276)

_execute_clauseelement (sqlalchemy/engine/base.py:1124)

_execute_on_connection (sqlalchemy/sql/elements.py:298)

execute (sqlalchemy/engine/base.py:1011)

_execute_and_instances (sqlalchemy/orm/query.py:3533)

__iter__ (sqlalchemy/orm/query.py:3508)

yield_events (homeassistant/components/logbook/__init__.py:418)

humanify (homeassistant/components/logbook/__init__.py:251)

_get_events (homeassistant/components/logbook/__init__.py:516)

json_events (homeassistant/components/logbook/__init__.py:228)

run (concurrent/futures/thread.py:57)

_worker (concurrent/futures/thread.py:80)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 292 (idle): "SyncWorker_29"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 294 (idle): "SyncWorker_30"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 295 (idle): "SyncWorker_31"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 296 (idle): "SyncWorker_32"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 297 (idle): "SyncWorker_33"

query (MySQLdb/connections.py:259)

_query (MySQLdb/cursors.py:319)

execute (MySQLdb/cursors.py:206)

do_execute (sqlalchemy/engine/default.py:593)

_execute_context (sqlalchemy/engine/base.py:1276)

_execute_clauseelement (sqlalchemy/engine/base.py:1124)

_execute_on_connection (sqlalchemy/sql/elements.py:298)

execute (sqlalchemy/engine/base.py:1011)

_execute_and_instances (sqlalchemy/orm/query.py:3533)

__iter__ (sqlalchemy/orm/query.py:3508)

yield_events (homeassistant/components/logbook/__init__.py:418)

humanify (homeassistant/components/logbook/__init__.py:251)

_get_events (homeassistant/components/logbook/__init__.py:516)

json_events (homeassistant/components/logbook/__init__.py:228)

run (concurrent/futures/thread.py:57)

_worker (concurrent/futures/thread.py:80)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 298 (idle): "SyncWorker_34"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 299 (idle): "SyncWorker_35"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 300 (idle): "SyncWorker_36"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 301 (idle): "SyncWorker_37"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 302 (idle): "SyncWorker_38"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 303 (idle): "SyncWorker_39"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 304 (idle): "SyncWorker_40"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 305 (idle): "SyncWorker_41"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 306 (idle): "SyncWorker_42"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 307 (idle): "SyncWorker_43"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 308 (idle): "SyncWorker_44"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 309 (idle): "SyncWorker_45"

query (MySQLdb/connections.py:259)

_query (MySQLdb/cursors.py:319)

execute (MySQLdb/cursors.py:206)

do_execute (sqlalchemy/engine/default.py:593)

_execute_context (sqlalchemy/engine/base.py:1276)

_execute_clauseelement (sqlalchemy/engine/base.py:1124)

_execute_on_connection (sqlalchemy/sql/elements.py:298)

execute (sqlalchemy/engine/base.py:1011)

_execute_and_instances (sqlalchemy/orm/query.py:3533)

__iter__ (sqlalchemy/orm/query.py:3508)

yield_events (homeassistant/components/logbook/__init__.py:418)

humanify (homeassistant/components/logbook/__init__.py:251)

_get_events (homeassistant/components/logbook/__init__.py:516)

json_events (homeassistant/components/logbook/__init__.py:228)

run (concurrent/futures/thread.py:57)

_worker (concurrent/futures/thread.py:80)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 310 (idle): "SyncWorker_46"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 311 (idle): "SyncWorker_47"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 312 (idle): "SyncWorker_48"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 313 (idle): "SyncWorker_49"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 314 (idle): "SyncWorker_50"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 315 (idle): "SyncWorker_51"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 320 (idle): "Thread-5"

loop (paho/mqtt/client.py:1163)

loop_forever (paho/mqtt/client.py:1782)

_thread_main (paho/mqtt/client.py:3428)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 326 (idle): "Thread-6"

select (selectors.py:415)

handle_request (socketserver.py:294)

run (pysonos/events.py:149)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 327 (idle): "Thread-7"

wait (threading.py:306)

wait (threading.py:558)

run (pysonos/events.py:362)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 328 (idle): "Thread-8"

wait (threading.py:306)

wait (threading.py:558)

run (pysonos/events.py:362)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 329 (idle): "Thread-9"

wait (threading.py:306)

wait (threading.py:558)

run (pysonos/events.py:362)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 331 (idle): "Thread-11"

wait (threading.py:306)

wait (threading.py:558)

run (pysonos/events.py:362)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 334 (idle): "Thread-14"

wait (threading.py:306)

wait (threading.py:558)

run (pysonos/events.py:362)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 336 (idle): "Thread-16"

wait (threading.py:306)

wait (threading.py:558)

run (pysonos/events.py:362)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 337 (idle): "Thread-17"

wait (threading.py:306)

wait (threading.py:558)

run (pysonos/events.py:362)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 340 (idle): "Thread-20"

wait (threading.py:306)

wait (threading.py:558)

run (pysonos/events.py:362)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 342 (idle): "Thread-22"

wait (threading.py:306)

wait (threading.py:558)

run (pysonos/events.py:362)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 343 (idle): "Thread-23"

wait (threading.py:306)

wait (threading.py:558)

run (pysonos/events.py:362)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 344 (idle): "Thread-24"

wait (threading.py:306)

wait (threading.py:558)

run (pysonos/events.py:362)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 346 (idle): "Thread-26"

wait (threading.py:306)

wait (threading.py:558)

run (pysonos/events.py:362)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 347 (idle): "Thread-27"

wait (threading.py:306)

wait (threading.py:558)

run (pysonos/events.py:362)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 348 (idle): "Thread-28"

wait (threading.py:306)

wait (threading.py:558)

run (pysonos/events.py:362)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 349 (idle): "Thread-29"

wait (threading.py:306)

wait (threading.py:558)

run (pysonos/events.py:362)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 353 (idle): "Thread-33"

wait (threading.py:306)

wait (threading.py:558)

run (pysonos/events.py:362)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 354 (idle): "Thread-34"

wait (threading.py:306)

wait (threading.py:558)

run (pysonos/events.py:362)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 356 (idle): "Thread-36"

wait (threading.py:306)

wait (threading.py:558)

run (pysonos/events.py:362)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 358 (idle): "Thread-38"

wait (threading.py:306)

wait (threading.py:558)

run (pysonos/events.py:362)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 360 (idle): "Thread-40"

wait (threading.py:306)

wait (threading.py:558)

run (pysonos/events.py:362)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 377 (idle): "SyncWorker_52"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 378 (idle): "SyncWorker_53"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 379 (idle): "SyncWorker_54"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 380 (idle): "SyncWorker_55"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 381 (idle): "SyncWorker_56"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 382 (idle): "SyncWorker_57"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 383 (idle): "SyncWorker_58"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 384 (idle): "SyncWorker_59"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 385 (idle): "SyncWorker_60"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 386 (idle): "SyncWorker_61"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 387 (idle): "SyncWorker_62"

query (MySQLdb/connections.py:259)

_query (MySQLdb/cursors.py:319)

execute (MySQLdb/cursors.py:206)

do_execute (sqlalchemy/engine/default.py:593)

_execute_context (sqlalchemy/engine/base.py:1276)

_execute_clauseelement (sqlalchemy/engine/base.py:1124)

_execute_on_connection (sqlalchemy/sql/elements.py:298)

execute (sqlalchemy/engine/base.py:1011)

_execute_and_instances (sqlalchemy/orm/query.py:3533)

__iter__ (sqlalchemy/orm/query.py:3508)

yield_events (homeassistant/components/logbook/__init__.py:418)

humanify (homeassistant/components/logbook/__init__.py:251)

_get_events (homeassistant/components/logbook/__init__.py:516)

json_events (homeassistant/components/logbook/__init__.py:228)

run (concurrent/futures/thread.py:57)

_worker (concurrent/futures/thread.py:80)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 388 (idle): "SyncWorker_63"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 827 (idle): "zeroconf-ServiceBrowser__printer._tcp.local.-_nut._tcp.local.-_miio._udp.local.-_esphomelib._tcp.local.-_ipps._tcp.local.-_plugwise._tcp.local.-_ssh._tcp.local.-_Volumio._tcp.local.-_elg._tcp.local.-_ipp._tcp.local.-_bond._tcp.local.-_googlecast._tcp.local.-_http._tcp.local.-_hap._tcp.local.-_axis-video._tcp.local.-_dkapi._tcp.local.-_api._udp.local.-_daap._tcp.local.-_viziocast._tcp.local.-_wled._tcp.local.-_xbmc-jsonrpc-h._tcp.local.-_spotify-connect._tcp.local._827"

wait (threading.py:306)

wait (zeroconf/__init__.py:2407)

run (zeroconf/__init__.py:1701)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 828 (idle): "Thread-48"

send_events (pyhap/accessory_driver.py:483)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 829 (idle): "Thread-49"

select (selectors.py:415)

serve_forever (socketserver.py:232)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 831 (idle): "Thread-51"

recv (pyhap/hap_server.py:779)

recv_into (pyhap/hap_server.py:758)

readinto (socket.py:669)

handle_one_request (http/server.py:395)

handle (http/server.py:429)

__init__ (socketserver.py:720)

__init__ (pyhap/hap_server.py:164)

finish_request (pyhap/hap_server.py:943)

process_request_thread (socketserver.py:650)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 832 (idle): "Thread-52"

recv (pyhap/hap_server.py:779)

recv_into (pyhap/hap_server.py:758)

readinto (socket.py:669)

handle_one_request (http/server.py:395)

handle (http/server.py:429)

__init__ (socketserver.py:720)

__init__ (pyhap/hap_server.py:164)

finish_request (pyhap/hap_server.py:943)

process_request_thread (socketserver.py:650)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 6015 (idle): "Thread-81"

recv (pyhap/hap_server.py:779)

recv_into (pyhap/hap_server.py:758)

readinto (socket.py:669)

handle_one_request (http/server.py:395)

handle (http/server.py:429)

__init__ (socketserver.py:720)

__init__ (pyhap/hap_server.py:164)

finish_request (pyhap/hap_server.py:943)

process_request_thread (socketserver.py:650)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 25348 (idle): "Thread-170"

recv (pyhap/hap_server.py:779)

recv_into (pyhap/hap_server.py:758)

readinto (socket.py:669)

handle_one_request (http/server.py:395)

handle (http/server.py:429)

__init__ (socketserver.py:720)

__init__ (pyhap/hap_server.py:164)

finish_request (pyhap/hap_server.py:943)

process_request_thread (socketserver.py:650)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 164849 (idle): "stream_worker"

_stream_worker_internal (homeassistant/components/stream/worker.py:205)

stream_worker (homeassistant/components/stream/worker.py:48)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 259889 (idle): "Thread-624"

recv (pyhap/hap_server.py:779)

recv_into (pyhap/hap_server.py:758)

readinto (socket.py:669)

handle_one_request (http/server.py:395)

handle (http/server.py:429)

__init__ (socketserver.py:720)

__init__ (pyhap/hap_server.py:164)

finish_request (pyhap/hap_server.py:943)

process_request_thread (socketserver.py:650)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 260522 (idle): "Thread-625"

recv (pyhap/hap_server.py:779)

recv_into (pyhap/hap_server.py:758)

readinto (socket.py:669)

handle_one_request (http/server.py:395)

handle (http/server.py:429)

__init__ (socketserver.py:720)

__init__ (pyhap/hap_server.py:164)

finish_request (pyhap/hap_server.py:943)

process_request_thread (socketserver.py:650)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

bash-5.0#

Do you have something that is constantly gathering logbook data? There are at least 5 separate logbook api requests running in your py-spy.
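
For anyone who wants to check their own dump for the same pattern, here is a minimal sketch that counts the threads whose stack passes through the logbook component. It assumes the plain-text layout shown above (a `Thread <id> (<status>): "<name>"` header followed by one frame per line); the helper name `threads_touching` and the sample text are made up for illustration.

```python
import re

# Header line of one thread in the dump, e.g.: Thread 387 (idle): "SyncWorker_62"
THREAD_HEADER = re.compile(r'^Thread (\d+) \(([^)]*)\): "(.*)"$')


def threads_touching(dump_text, needle):
    """Return the names of threads with at least one frame mentioning `needle`."""
    matches = []
    current_name = None
    current_hit = False
    for raw in dump_text.splitlines():
        line = raw.strip()
        if not line:
            continue
        header = THREAD_HEADER.match(line)
        if header:
            # A new thread starts: record the previous one if it matched.
            if current_name is not None and current_hit:
                matches.append(current_name)
            current_name = header.group(3)
            current_hit = False
        elif current_name is not None and needle in line:
            current_hit = True
    if current_name is not None and current_hit:
        matches.append(current_name)
    return matches


# Shortened, hand-made sample in the same shape as the dump above.
SAMPLE = '''
Thread 386 (idle): "SyncWorker_61"
    _worker (concurrent/futures/thread.py:78)
    run (threading.py:870)
Thread 387 (idle): "SyncWorker_62"
    query (MySQLdb/connections.py:259)
    yield_events (homeassistant/components/logbook/__init__.py:418)
    json_events (homeassistant/components/logbook/__init__.py:228)
    run (concurrent/futures/thread.py:57)
'''

busy = threads_touching(SAMPLE, "components/logbook")
print(len(busy), busy)
```

Running it over a full dump saved to a file gives a quick count of how many workers are inside a logbook request at that moment.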

There is a lot going on here, but at least https://github.com/home-assistant/core/pull/40250 and https://github.com/home-assistant/core/pull/40272 should help performance of templates.

It looks like the core issue is that MQTT is flooding home assistant with state changed events.

> Do you have something that is constantly gathering logbook data? There are at least 5 separate logbook api requests running in your py-spy.

Not that I know

logbook.yaml

exclude:

domains:

- sun

entities:

- sensor.since_last_boot

- sensor.date

- sensor.cpu_use

- sensor.ram_free

- sensor.ram_use

- sensor.time

- sensor.time_date

- sensor.pihole_ads_blocked_today

- sensor.pihole_ads_percentage_blocked_today

- sensor.pihole_dns_queries_today

logger.yaml

default: error

recorder.yaml

db_url: !secret mysql

exclude:

domains:

- camera

- group

- history_graph

- scene

- sun

- weather

- zone

entities:

- binary_sensor.bluetooth_scan

- sensor.dark_sky_cloud_coverage_1

- sensor.dark_sky_cloud_coverage_2

- sensor.dark_sky_cloud_coverage_3

- sensor.dark_sky_cloud_coverage_4

- sensor.dark_sky_cloud_coverage_5

- sensor.dark_sky_cloud_coverage_6

- sensor.dark_sky_cloud_coverage_7

- sensor.dark_sky_daily_summary

- sensor.dark_sky_daytime_high_temperature

- sensor.dark_sky_daytime_high_temperature_1

- sensor.dark_sky_daytime_high_temperature_2

- sensor.dark_sky_daytime_high_temperature_3

- sensor.dark_sky_daytime_high_temperature_4

- sensor.dark_sky_daytime_high_temperature_5

- sensor.dark_sky_daytime_high_temperature_6

- sensor.dark_sky_daytime_high_temperature_7

- sensor.dark_sky_dew_point_1

- sensor.dark_sky_dew_point_2

- sensor.dark_sky_dew_point_3

- sensor.dark_sky_dew_point_4

- sensor.dark_sky_dew_point_5

- sensor.dark_sky_dew_point_6

- sensor.dark_sky_dew_point_7

- sensor.dark_sky_hourly_summary

- sensor.dark_sky_humidity_1

- sensor.dark_sky_humidity_2

- sensor.dark_sky_humidity_3

- sensor.dark_sky_humidity_4

- sensor.dark_sky_humidity_5

- sensor.dark_sky_humidity_6

- sensor.dark_sky_humidity_7

- sensor.dark_sky_overnight_low_temperature

- sensor.dark_sky_overnight_low_temperature_1

- sensor.dark_sky_overnight_low_temperature_2

- sensor.dark_sky_overnight_low_temperature_3

- sensor.dark_sky_overnight_low_temperature_4

- sensor.dark_sky_overnight_low_temperature_5

- sensor.dark_sky_overnight_low_temperature_6

- sensor.dark_sky_overnight_low_temperature_7

- sensor.dark_sky_precip_1

- sensor.dark_sky_precip_2

- sensor.dark_sky_precip_3

- sensor.dark_sky_precip_4

- sensor.dark_sky_precip_5

- sensor.dark_sky_precip_6

- sensor.dark_sky_precip_7

- sensor.dark_sky_precip_intensity_1

- sensor.dark_sky_precip_intensity_2

- sensor.dark_sky_precip_intensity_3

- sensor.dark_sky_precip_intensity_4

- sensor.dark_sky_precip_intensity_5

- sensor.dark_sky_precip_intensity_6

- sensor.dark_sky_precip_intensity_7

- sensor.dark_sky_summary

- sensor.dark_sky_wind_bearing_1

- sensor.dark_sky_wind_bearing_2

- sensor.dark_sky_wind_bearing_3

- sensor.dark_sky_wind_bearing_4

- sensor.dark_sky_wind_bearing_5

- sensor.dark_sky_wind_bearing_6

- sensor.dark_sky_wind_bearing_7

- sensor.dark_sky_wind_speed_1

- sensor.dark_sky_wind_speed_2

- sensor.dark_sky_wind_speed_3

- sensor.dark_sky_wind_speed_4

- sensor.dark_sky_wind_speed_5

- sensor.dark_sky_wind_speed_6

- sensor.dark_sky_wind_speed_7

- sensor.date

- sensor.date_time

- sensor.moon

- sensor.next_rising

- sensor.notification_hour

- sensor.notification_minute

- sensor.notification_time

- sensor.notification_time_long

- sensor.people_in_space

- sensor.pws_weather_1n_metric

- sensor.pws_weather_2d_metric

- sensor.pws_weather_2n_metric

- sensor.pws_weather_3d_metric

- sensor.pws_weather_3n_metric

- sensor.pws_weather_4d_metric

- sensor.pws_weather_4n_metric

- sensor.since_last_boot

- sensor.time

- sensor.time_date

- sensor.cert_expiry

- sensor.custom_card_tracker

- sensor.custom_component_tracker

- sensor.custom_python_script_tracker

- sensor.dummy

- sensor.dummy_badkamer

- sensor.dummy_deze_maand

- sensor.dummy_slaapkamer

- sensor.dummy_slaapkamer_fien

- sensor.dummy_slaapkamer_noor

- sensor.dummy_vandaag

- sensor.dummy_verluchting

- sensor.dummy_warmtepomp

- sensor.last_boot

- sensor.moon

- sensor.notification_hour

- sensor.notification_minute

- sensor.notification_time

- sensor.notification_time_long

- sensor.uptime_in_dagen

- sensor.uptime_in_uren

- sensor.zonne_energie_omvormers_gefilterd_lowpass

history.yaml

exclude:

domains:

- weblink

- automation

- updater

- sun

- group

entities:

- sensor.since_last_boot

- sensor.date

- sensor.pws_dewpoint_c

- sensor.pws_dewpoint_string

- sensor.pws_feelslike_c

- sensor.pws_feelslike_string

- sensor.pws_heat_index_c

- sensor.pws_heat_index_string

- sensor.pws_precip_today_metric

- sensor.pws_precip_today_string

- sensor.pws_pressure_mb

- sensor.pws_relative_humidity

- sensor.pws_solarradiation

- sensor.pws_station_id

- sensor.pws_uv

- sensor.pws_visibility_km

- sensor.pws_weather

- sensor.pws_wind_string

- sensor.ram_use

- sensor.time

- sensor.time_date

- sensor.weer_morgen_condition

- sensor.weer_morgen_temperature_max

- sensor.weer_morgen_temperature_min

- sensor.weer_overmorgen_condition

- sensor.weer_overmorgen_temperature_max

- sensor.weer_overmorgen_temperature_min

- binary_sensor.den_hof_outdoor_animal

# - binary_sensor.nummer_79_den_hof_outdoor_animal

- binary_sensor.den_hof_outdoor_motion

- binary_sensor.den_hof_outdoor_vehicle

# - binary_sensor.nummer_79_den_hof_outdoor_vehicle

- climate.netatmo_living_2

- binary_sensor.binnen_warm

- binary_sensor.buiten_fris_genoeg

- binary_sensor.buiten_warm

- sensor.badkamertemperatuur_mean

- sensor.beweging_badkamer_count

- sensor.beweging_slaapkamer_count

- sensor.beweging_slaapkamer_fien_count

- sensor.beweging_slaapkamer_noor_count

- sensor.buienradar

- sensor.bureau

- sensor.fien

- sensor.noor

- sensor.opladers

- sensor.slaapkamer

- sensor.temperatuur_living_mean

- sensor.verluchting

- sensor.wc

- zone.home

> It looks like the core issue is that MQTT is flooding home assistant with state changed events.

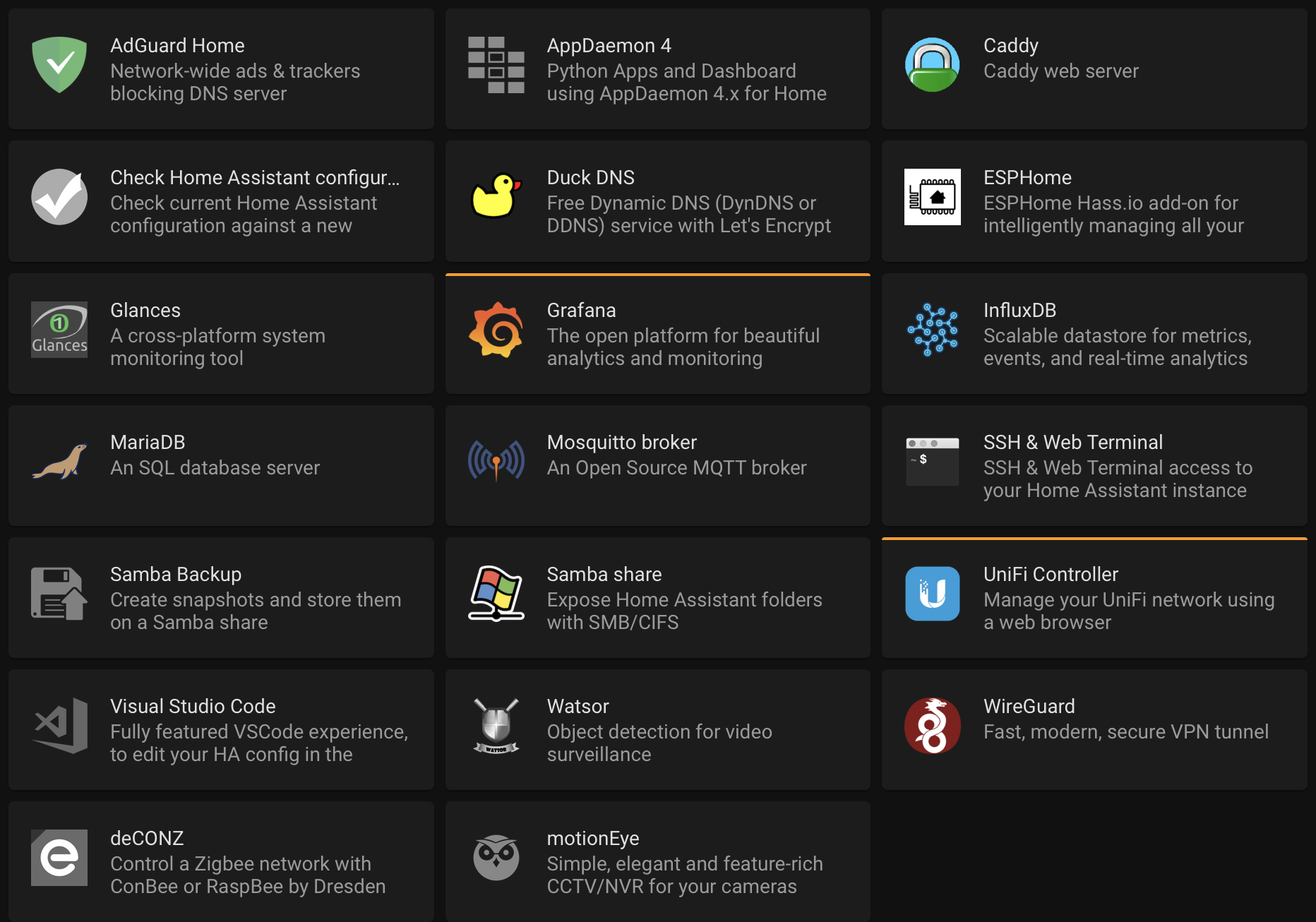

I have Smappee configured via MQTT. Every second I get a real-time update like this:

{

"totalPower": 833,

"totalReactivePower": 528,

"totalExportEnergy": 0,

"totalImportEnergy": -675983779,

"monitorStatus": 0,

"utcTimeStamp": 1600610515811,

"channelPowers": [

{

"ctInput": 0,

"power": 146,

"exportEnergy": 0,

"importEnergy": -2136093549,

"phaseId": 1,

"current": 11

},

{

"ctInput": 1,

"power": 569,

"exportEnergy": 0,

"importEnergy": -1238023984,

"phaseId": 0,

"current": 26

},

{

"ctInput": 2,

"power": 118,

"exportEnergy": 0,

"importEnergy": -1596829640,

"phaseId": 2,

"current": 6

},

{

"ctInput": 3,

"power": 1005,

"exportEnergy": 11198469,

"importEnergy": -1071029410,

"phaseId": 1,

"current": 43

},

{

"ctInput": 4,

"power": 2075,

"exportEnergy": 12407578,

"importEnergy": 1014673999,

"phaseId": 0,

"current": 88

},

{

"ctInput": 5,

"power": 1000,

"exportEnergy": 675661,

"importEnergy": -1210750333,

"phaseId": 2,

"current": 42

}

],

"voltages": [

{

"voltage": 236,

"phaseId": 0

}

]

}

Please try changing the commit interval in recorder to 5 seconds and make a new py-spy recording
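
To illustrate why a longer commit interval helps, here is a rough sketch of batching writes: rows are buffered and written in one transaction per interval instead of one commit per state change. This is not the recorder's actual code; `BatchedWriter`, the table layout and the entity name are hypothetical, and SQLite stands in for the real database.

```python
import sqlite3


class BatchedWriter:
    """Buffer rows and write them in a single transaction per interval.

    Hypothetical helper for illustration only.
    """

    def __init__(self, conn, commit_interval=5.0):
        self.conn = conn
        self.commit_interval = commit_interval
        self.pending = []
        self.last_commit = 0.0
        self.commits = 0

    def add(self, entity_id, state, now):
        self.pending.append((entity_id, state, now))
        # Only touch the database once the interval has elapsed.
        if now - self.last_commit >= self.commit_interval:
            self.flush(now)

    def flush(self, now):
        if self.pending:
            self.conn.executemany(
                "INSERT INTO states (entity_id, state, fired) VALUES (?, ?, ?)",
                self.pending,
            )
            self.conn.commit()
            self.commits += 1
            self.pending.clear()
        self.last_commit = now


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE states (entity_id TEXT, state TEXT, fired REAL)")
writer = BatchedWriter(conn, commit_interval=5.0)

# One update per second for 10 seconds, similar to a once-per-second MQTT feed.
for second in range(1, 11):
    writer.add("sensor.total_power", str(800 + second), float(second))
writer.flush(10.0)

rows = conn.execute("SELECT COUNT(*) FROM states").fetchone()[0]
print(rows, "rows written in", writer.commits, "commits")
```

With a 5 second interval the ten updates end up in two commits instead of ten, which is the effect the setting is meant to have.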

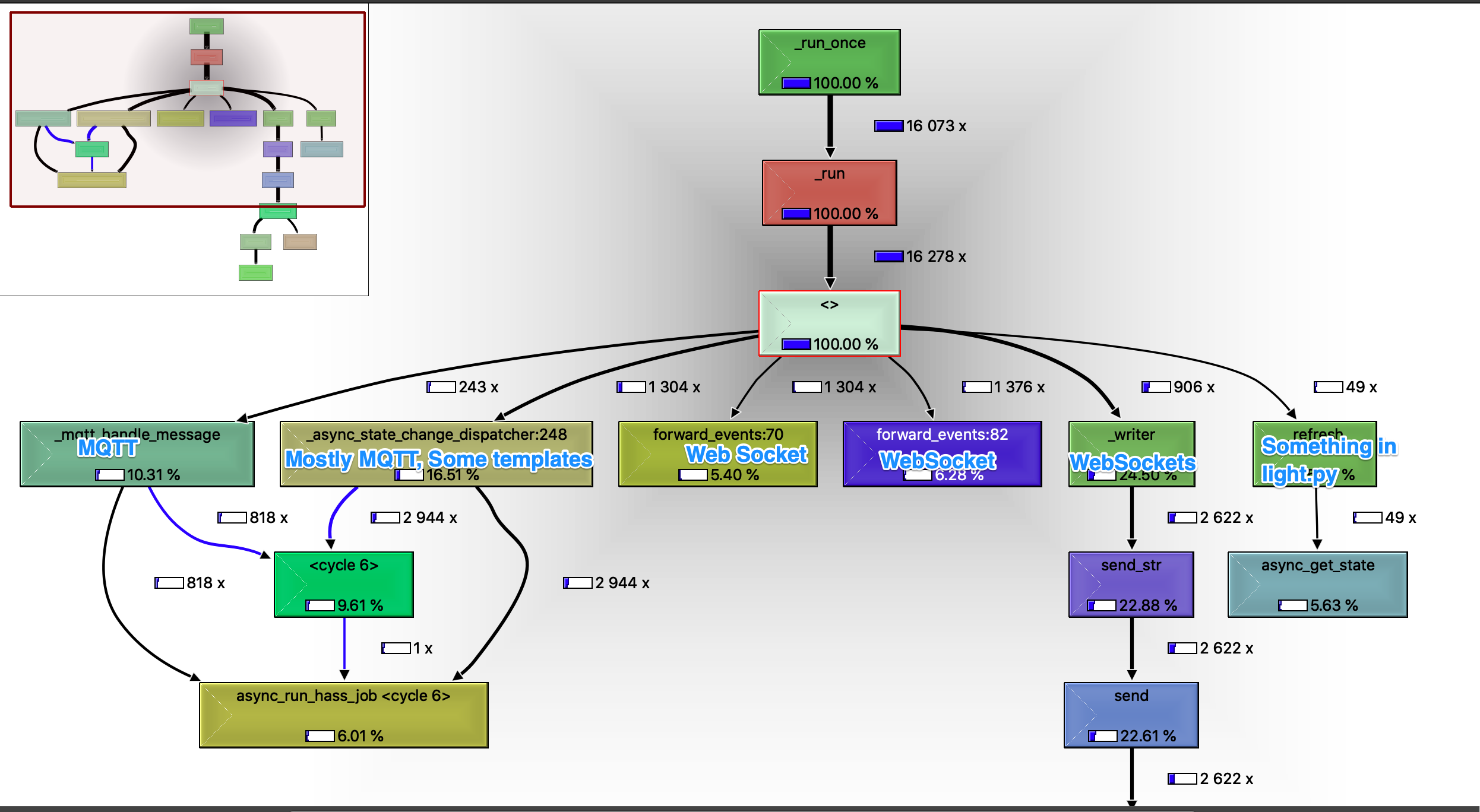

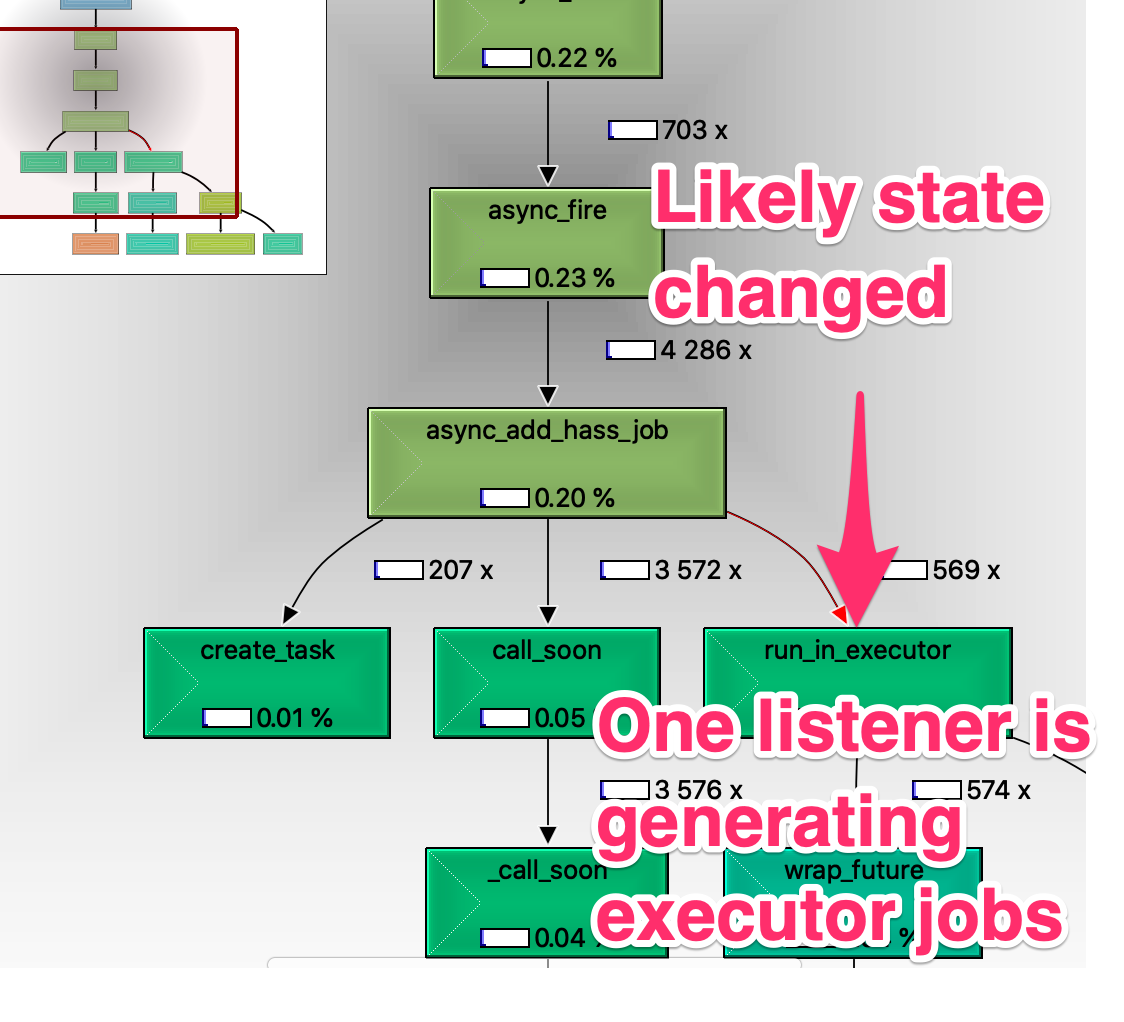

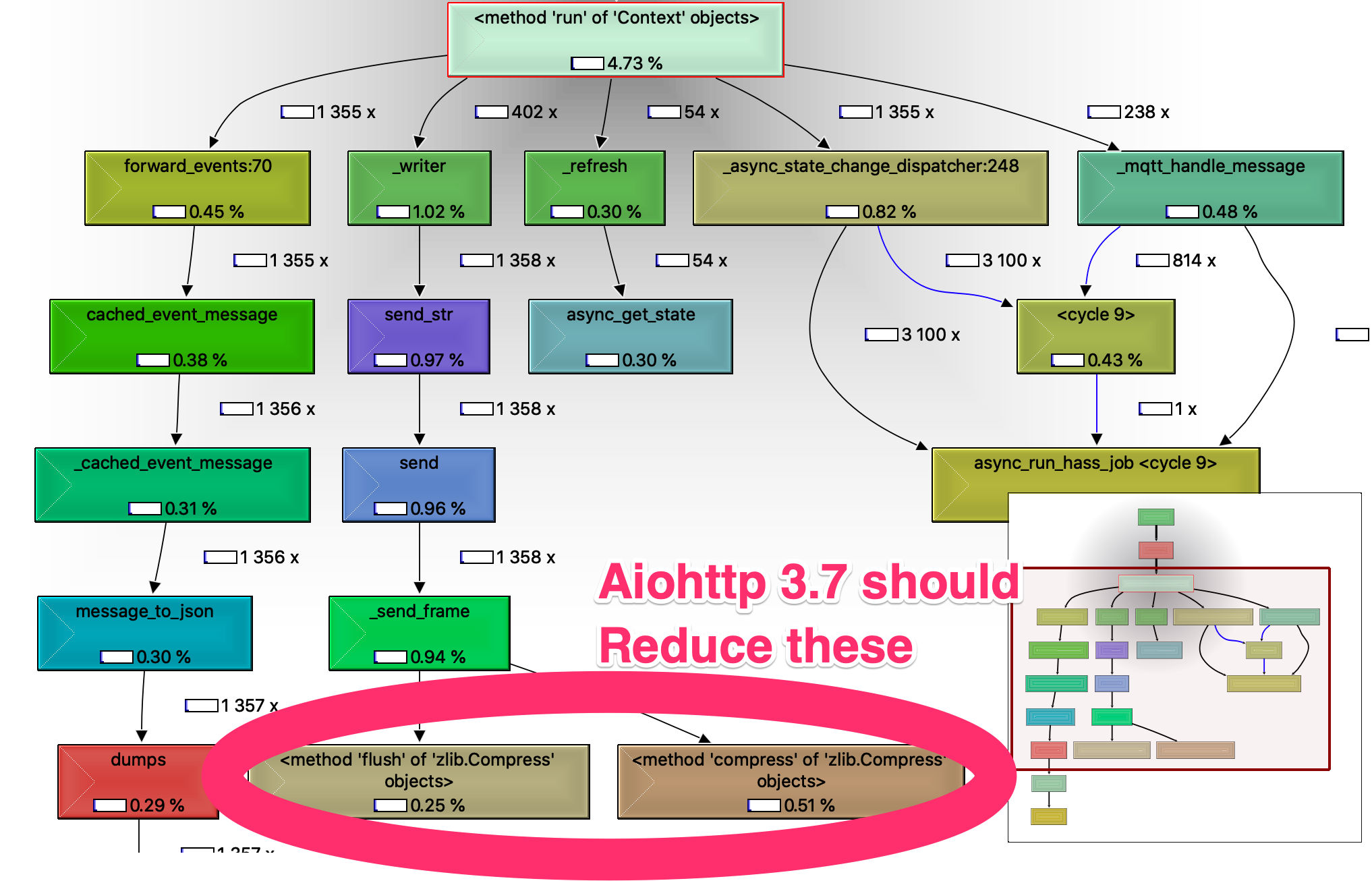

This is a new https://www.dropbox.com/s/elvi42f589055gs/spy.svg?dl=0

That looks better. Is there a way to turn down the Smappee updates to every 5 seconds as well? That should calm things down until we can get https://github.com/home-assistant/core/pull/40345, https://github.com/home-assistant/core/pull/40250 and https://github.com/home-assistant/core/pull/40272 out in 0.116.

> Is there a way to turn down the Smappee updates to every 5 seconds as well?

I can't change this...
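
If the device itself cannot be slowed down, one possible workaround is to throttle the messages before they reach Home Assistant, for example in a small bridge script that republishes to a second topic. The sketch below only shows the throttling logic; the `Throttle` class is hypothetical, the MQTT plumbing is left out, and the payloads are generated in a loop rather than received from a broker. It relies on `utcTimeStamp` being in milliseconds, as in the payload above.

```python
import json


class Throttle:
    """Let through at most one message per `min_interval_ms` milliseconds."""

    def __init__(self, min_interval_ms):
        self.min_interval_ms = min_interval_ms
        self._last_passed_ms = None

    def accept(self, timestamp_ms):
        if (
            self._last_passed_ms is None
            or timestamp_ms - self._last_passed_ms >= self.min_interval_ms
        ):
            self._last_passed_ms = timestamp_ms
            return True
        return False


throttle = Throttle(min_interval_ms=5000)
forwarded = []

# Simulate 12 payloads arriving one second apart.
for second in range(12):
    raw = json.dumps(
        {"totalPower": 833 + second, "utcTimeStamp": 1600610515811 + second * 1000}
    )
    message = json.loads(raw)
    if throttle.accept(message["utcTimeStamp"]):
        # In a real bridge this is where the message would be republished.
        forwarded.append(message["totalPower"])

print(forwarded)
```

Out of the 12 simulated messages only 3 are forwarded, so the number of state changes drops by roughly the same factor.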

This is a screenshot of Glances when I'm clicking on different entities in a Lovelace view

The high CPU there is caused by showing the logbook part on the more info panel. You have so many state changes feeding into the database that it's taking a long time to fetch all the events for the past 24 hours each time you click the popup.

cc @zsarnett

Maybe we should only show the last 12 hours or last x entries? Not sure what the best solution would be for this. I wonder how many others have this same issue

Would be very nice to have the logbook on the More-info card optional in the first place

Secondly, please make that only show on entities included in logbook/history.

Right now it shows on all entities with the swirling icon, and after a second or 5 it states there is nothing to show...

Only posting here since you are discussing optimizing this. Otherwise I would have added a new FR

> Maybe we should only show the last 12 hours or last x entries? Not sure what the best solution would be for this. I wonder how many others have this same issue

I'll work on the query on the backend. We may be able to make it faster by excluding all the state change events and then unioning them back in if it doesn't make the query too complex. We may have to add a time_type index

> Maybe we should only show the last 12 hours or last x entries? Not sure what the best solution would be for this. I wonder how many others have this same issue

@zsarnett No need to make any changes, I fixed the performance issue looking up single entities in https://github.com/home-assistant/core/pull/40075.

Loading my garage exit door lock status took 1.32s (1324ms) before the change and 94ms after.

I think the logbook access in the more info is the root cause of some of the other performance issues in 0.115. The problem should just go away after #40075

I made a custom component for logbook with your changes from #40075

This is much better. When I'm clicking around in Lovelace, there are no more blowing fans

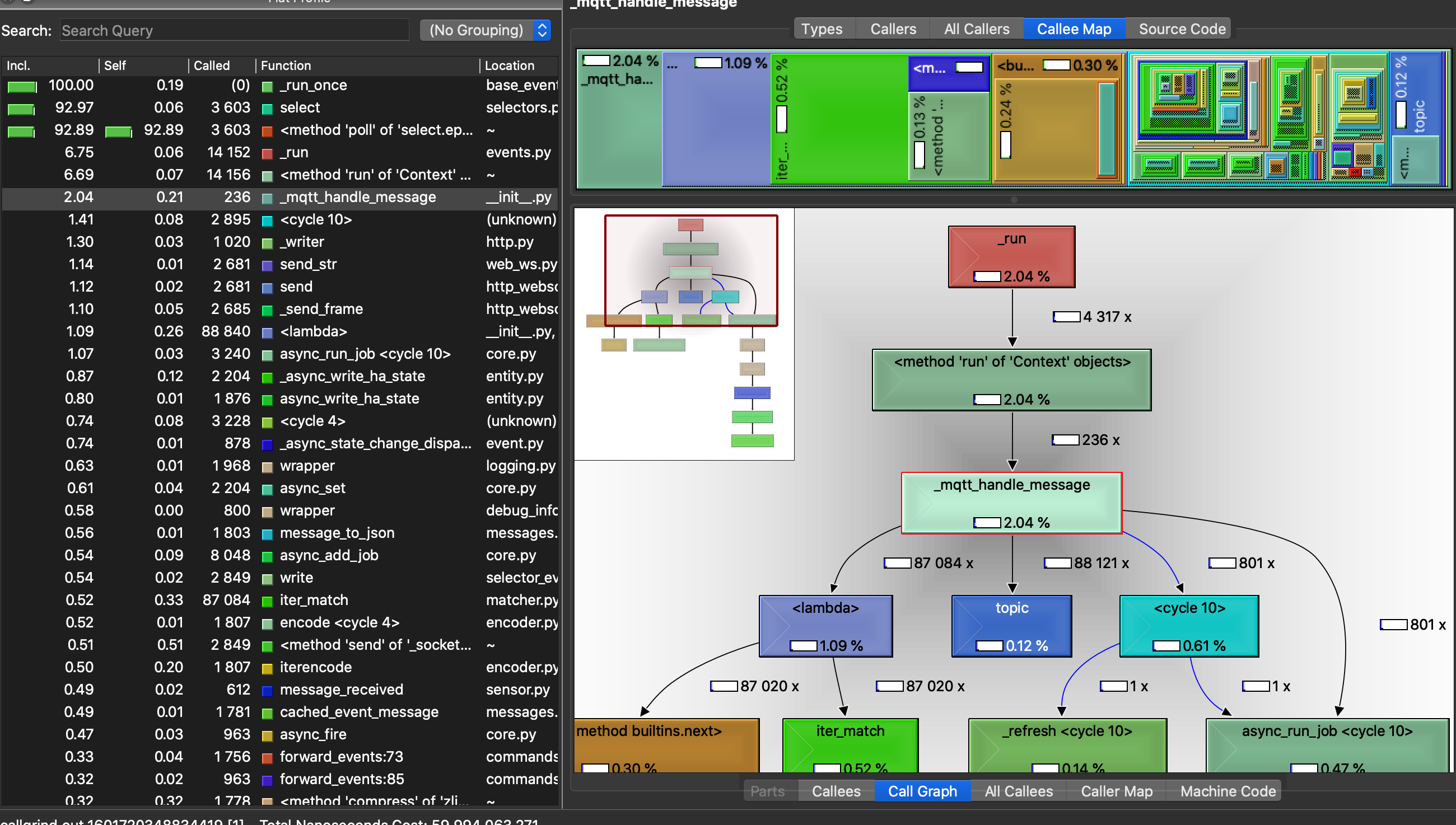

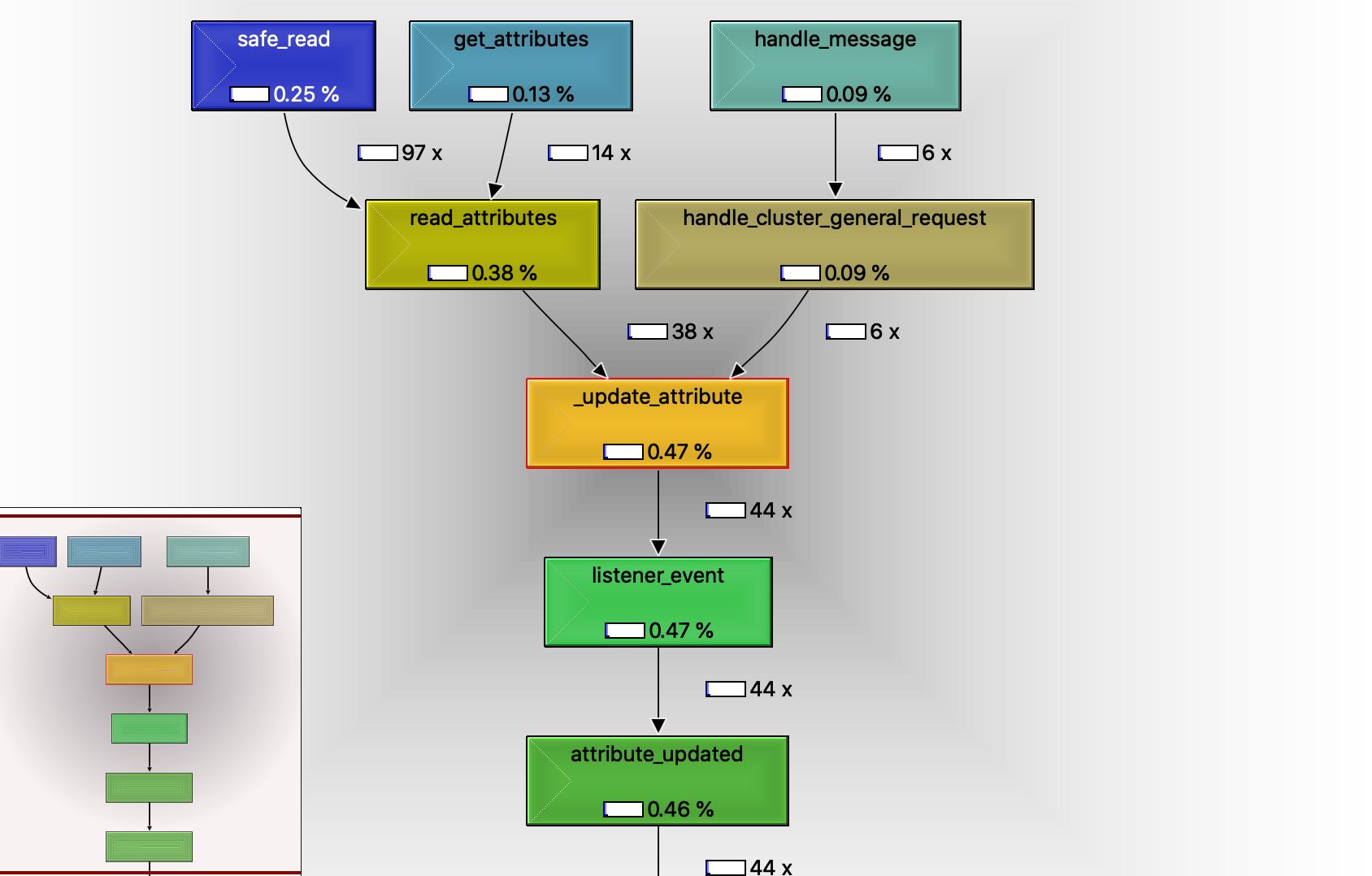

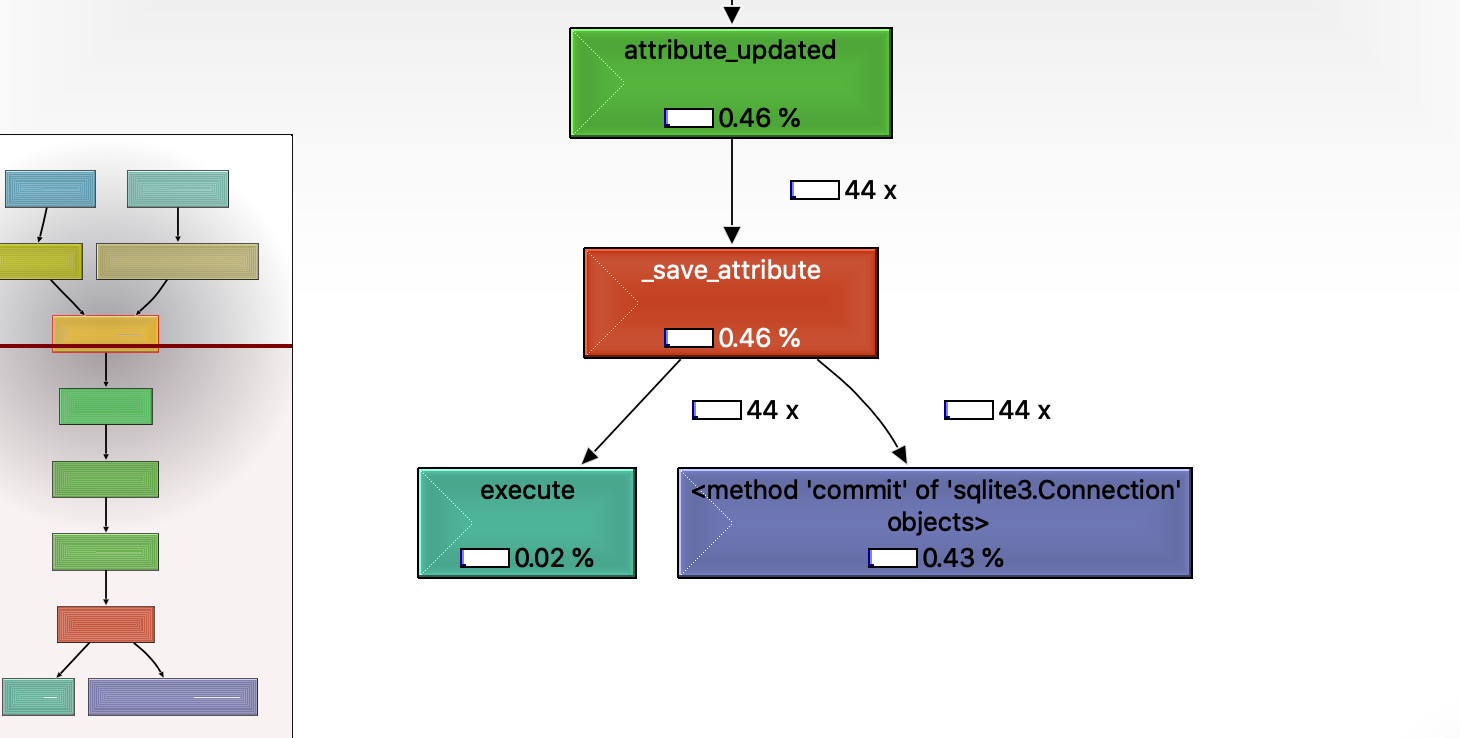

This is a Py-Spy when these changes are applied

https://www.dropbox.com/s/t5zqcq9dzl4v5nk/spy-40075.svg?dl=0

And this is a screenshot of glances

Only my idle CPU use is still higher than on 0.114.x, and I still occasionally have blowing fans

I made another custom component from #40345 (I took the MQTT component in dev and applied your changes)

This is not so good, constantly blowing fans

2 Py-Spy's

https://www.dropbox.com/s/u095q9djk0txmcr/spy-40075%2B40345.svg?dl=0

https://www.dropbox.com/s/yvcxkmrmrk1xo6f/spy-40075%2B40345_2.svg?dl=0

And a screenshot

I don't know how to test #40250 and #40272 so for now I just leave my custom component for logbook applied

This is another Py-Spy, immediately started when the fans of my NUC started to blow

https://www.dropbox.com/s/iustxvojg8v07gn/spy-40075-blowing.svg?dl=0

This is one when the fans started to blow at around 1min 40sec

https://www.dropbox.com/s/c3kq8lc9m30qu2s/spy-40075-blowing-fan-2.svg?dl=0

These are 2 Py-Spy's of 300 sec each

Can you post your template entities (sensors) configuration?

Most of my template sensors are in

sensors https://github.com/gieljnssns/My-Hassio-config/tree/master/config/devices/sensors/template_sensors

And some are in

packages https://github.com/gieljnssns/My-Hassio-config/tree/master/config/packages

In here are a lot of binary sensors

binary_sensors https://github.com/gieljnssns/My-Hassio-config/tree/master/config/devices/binary_sensors

In here are some template switches

switches https://github.com/gieljnssns/My-Hassio-config/tree/master/config/devices/switch

Try disabling config/devices/sensors/template_sensors/unavailable.yaml and template_sensors/component_count.yaml

Also, the sensors in template_sensors/component_count.yaml get cheaper if you change them to

{{ states.input_text | count }}

instead of

{{ states.input_text | list | length }}

since they then no longer have to generate a list and throw it away.
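
A rough Python analogy of the difference, as a sketch: as far as I know Jinja's `count` and `length` filters both end up calling `len()`, so `| count` can use the object's own length, while `| list | length` first has to build every item. The `DomainStates` class below is a hypothetical stand-in, not Home Assistant's real class, and the entity names are invented.

```python
class DomainStates:
    """Hypothetical stand-in for a per-domain collection of states."""

    def __init__(self, entity_ids):
        self._entity_ids = list(entity_ids)
        self.objects_built = 0

    def __iter__(self):
        # Iterating builds one state object per entity.
        for entity_id in self._entity_ids:
            self.objects_built += 1
            yield {"entity_id": entity_id, "state": "on"}

    def __len__(self):
        # Counting only needs the number of entity ids.
        return len(self._entity_ids)


domain = DomainStates(f"input_text.note_{i}" for i in range(50))

via_list = len(list(domain))  # comparable to `| list | length`
built_for_list = domain.objects_built

domain.objects_built = 0
via_count = len(domain)  # comparable to `| count`
built_for_count = domain.objects_built

print(via_list, built_for_list, via_count, built_for_count)
```

Both give the same number, but only the first one creates 50 throwaway objects on every render.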

I'm sorry, I forgot to mention that config/devices/sensors/template_sensors/unavailable.yaml has already been disabled for 2 days, until I make an automation for it

And {{ states.input_text | list | length }} is already converted to {{ states.input_text | count }}

And I also replaced every now() with as_local(states.sensor.time.last_changed)

Sorry for the confusion, my repo isn't updated yet

Do templates as trigger or condition also count?

> Do templates as trigger or condition also count?

yes

value_template in other sensors?

I have 1373 results in 225 files for template

Anything that is a trigger condition and has a template has to be watched/entities listened for to see if triggers

Do you know an easy way to extract them all out of my config? Regex or something?

Or do you no longer need them?

> Anything that is a trigger condition and has a template has to be watched/entities listened for to see if triggers

I don't understand this. Conditions are only checked when the corresponding trigger fires, not when anything mentioned in the condition changes state (right?).

A value_template of another sensor is only updated when the other sensor updates.

Both of these should happen rarely and thus the efficiency matters less.

> > Anything that is a trigger condition and has a template has to be watched/entities listened for to see if triggers
>
> I don't understand this. Conditions are only checked when the corresponding trigger fires, not when anything mentioned in the condition changes state (right?).
>
> A value_template of another sensor is only updated when the other sensor updates.
>
> Both of these should happen rarely and thus the efficiency matters less.

I probably shouldn't have used the phrasing trigger condition.

For clarity:

template trigger - listens for state changes and (re)evaluates the template each time

template condition - evaluated only when triggered by something.
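
The distinction can be sketched in a few lines of Python. This is a simplified model, not Home Assistant's implementation: the `Automation` class and the entity names are hypothetical, and the trigger is assumed to fire when the template goes from false to true.

```python
class Automation:
    """Toy model of an automation with a template trigger and a template condition."""

    def __init__(self, trigger_template, condition_template):
        self.trigger_template = trigger_template
        self.condition_template = condition_template
        self.trigger_evaluations = 0
        self.condition_evaluations = 0
        self.runs = 0
        self._was_true = False

    def on_state_change(self, states):
        # The trigger template is re-evaluated on every relevant state change.
        self.trigger_evaluations += 1
        is_true = self.trigger_template(states)
        fired = is_true and not self._was_true
        self._was_true = is_true
        if fired:
            # The condition template is evaluated only when the trigger fires.
            self.condition_evaluations += 1
            if self.condition_template(states):
                self.runs += 1


automation = Automation(
    trigger_template=lambda s: s["sensor.power"] > 1000,
    condition_template=lambda s: s["binary_sensor.home"] == "on",
)

states = {"sensor.power": 0, "binary_sensor.home": "on"}
for power in [800, 900, 1100, 1200, 700, 1500]:
    states["sensor.power"] = power
    automation.on_state_change(states)

print(automation.trigger_evaluations, automation.condition_evaluations, automation.runs)
```

Six state changes cause six trigger evaluations but only two condition evaluations, which is why a busy entity inside a template trigger costs much more than the same entity inside a condition.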

Leaving this open since we have 4 other PRs related to this linked.

@bdraco

If you need more info or Py-Spy, just ask

@gieljnssns Once everything is merged, it would be very helpful to get a new round of py-spys while running the new code to see what remains.

2 down, 3 to go

Will these PRs be tagged with 0.115.x or are they for 0.116?

0.116 as they are too extensive for 0.115

Also it looks like writing state changes is more expensive than I would expect due to the flush.

I'll see if I can get rid of the flush calls

I took care of the flush calls in #40467

@bdraco I also saw this issue, and I think much of it is indeed related to the new state-history function.

This is the query executed for the entity binary_sensor.videosource_1_motion_alarm

DECLARE "c_7f9a850c10_13" CURSOR WITHOUT HOLD FOR SELECT events.event_type AS events_event_type, events.event_data AS events_event_data, events.time_fired AS events_time_fired, events.context_id AS events_context_id, events.context_user_id AS events_context_user_id, states.state AS states_state, states.entity_id AS states_entity_id, states.domain AS states_domain, states.attributes AS states_attributes

FROM events LEFT OUTER JOIN states ON events.event_id = states.event_id LEFT OUTER JOIN states AS old_state ON states.old_state_id = old_state.state_id

WHERE (events.event_type != 'state_changed' OR states.state_id IS NOT NULL AND old_state.state_id IS NOT NULL AND states.state IS NOT NULL AND states.state != old_state.state) AND (events.event_type != 'state_changed' OR states.domain NOT IN ('proximity', 'sensor') OR (states.attributes NOT LIKE '%' || '"unit_of_measurement":' || '%')) AND events.event_type IN ('state_changed', 'logbook_entry', 'call_service', 'homeassistant_start', 'homeassistant_stop', 'script_started', 'automation_triggered') AND events.time_fired > '2020-09-22T09:49:06.809000+00:00'::timestamptz AND events.time_fired < '2020-09-23T09:49:06.809000+00:00'::timestamptz AND (states.last_updated = states.last_changed AND states.entity_id = 'binary_sensor.videosource_1_motion_alarm' OR states.state_id IS NULL AND (events.event_data LIKE '%' || '"entity_id": "binary_sensor.videosource_1_motion_alarm"' || '%')) ORDER BY events.time_fired

All the OR constructs are signs of trouble.
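
To show what I mean, here is a small sketch of the usual way to get rid of such an OR: split the query into two simpler branches and combine them with UNION ALL, so each branch can use its own index. This is only an illustration on a heavily simplified, made-up schema in SQLite. It is not the actual logbook query and not necessarily what the linked PR does, and it assumes that only state_changed events have a row in states.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE events (
        event_id INTEGER PRIMARY KEY, event_type TEXT,
        event_data TEXT, time_fired TEXT);
    CREATE TABLE states (
        state_id INTEGER PRIMARY KEY, event_id INTEGER,
        entity_id TEXT, state TEXT);
    CREATE INDEX ix_states_entity_id ON states (entity_id);
    """
)
conn.executemany(
    "INSERT INTO events VALUES (?, ?, ?, ?)",
    [
        (1, "state_changed", "{}", "2020-09-22T10:00:00"),
        (2, "state_changed", "{}", "2020-09-22T10:05:00"),
        (3, "automation_triggered", '{"entity_id": "binary_sensor.a"}', "2020-09-22T10:10:00"),
        (4, "call_service", '{"entity_id": "light.c"}', "2020-09-22T10:15:00"),
        (5, "state_changed", "{}", "2020-09-22T10:20:00"),
    ],
)
conn.executemany(
    "INSERT INTO states VALUES (?, ?, ?, ?)",
    [(1, 1, "binary_sensor.a", "on"), (2, 2, "sensor.b", "5"), (3, 5, "binary_sensor.a", "off")],
)

params = {"entity": "binary_sensor.a", "pattern": '%"entity_id": "binary_sensor.a"%'}

# Shape of the original query: one join with an OR across both tables.
OR_QUERY = """
SELECT events.event_id FROM events
LEFT OUTER JOIN states ON events.event_id = states.event_id
WHERE states.entity_id = :entity
   OR (states.state_id IS NULL AND events.event_data LIKE :pattern)
ORDER BY events.time_fired
"""

# Same result as two independent branches.
UNION_QUERY = """
SELECT event_id FROM (
    SELECT events.event_id AS event_id, events.time_fired AS time_fired
    FROM states JOIN events ON events.event_id = states.event_id
    WHERE states.entity_id = :entity
    UNION ALL
    SELECT events.event_id AS event_id, events.time_fired AS time_fired
    FROM events
    WHERE events.event_type != 'state_changed' AND events.event_data LIKE :pattern
) ORDER BY time_fired
"""

or_rows = [row[0] for row in conn.execute(OR_QUERY, params)]
union_rows = [row[0] for row in conn.execute(UNION_QUERY, params)]
print(or_rows, union_rows)
```

Both variants return the same events for the entity; whether the second one is actually faster depends on the database and the indexes, so it would need to be measured on real data.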

@Expaso please try the logbook with the latest 0.116dev after https://github.com/home-assistant/core/pull/40075

@bdraco

Restarting HA takes a long time

This is a Py-Spy while starting up

https://www.dropbox.com/s/dx8mgkcz4jc22wq/spy-startup.svg?dl=0

Maybe this is useful...

> @bdraco
>
> Restarting HA takes a long time
>
> This is a Py-Spy while starting up
>
> https://www.dropbox.com/s/dx8mgkcz4jc22wq/spy-startup.svg?dl=0
>
> Maybe this is useful...

How many seconds does it take to finish startup?

This was a Py-Spy of 120 seconds, and startup finished just before the recording ended, so I think around 2.5 min

It looks like more of the same issues. Hopefully it gets quite a bit faster when all of the linked PRs are merged

Thanks for the additional py-spy 👍 I've made more progress tracking down CPU issues than any other github issue ever opened.

what is the point to have this kind of template validation tool? any mistake leads to restart via portainer

basically - template editor becomes useless

> what is the point to have this kind of template validation tool? any mistake leads to restart via portainer
>
> basically - template editor becomes useless

Please open a separate issue for this as there isn't enough information in your post to understand what is going on.

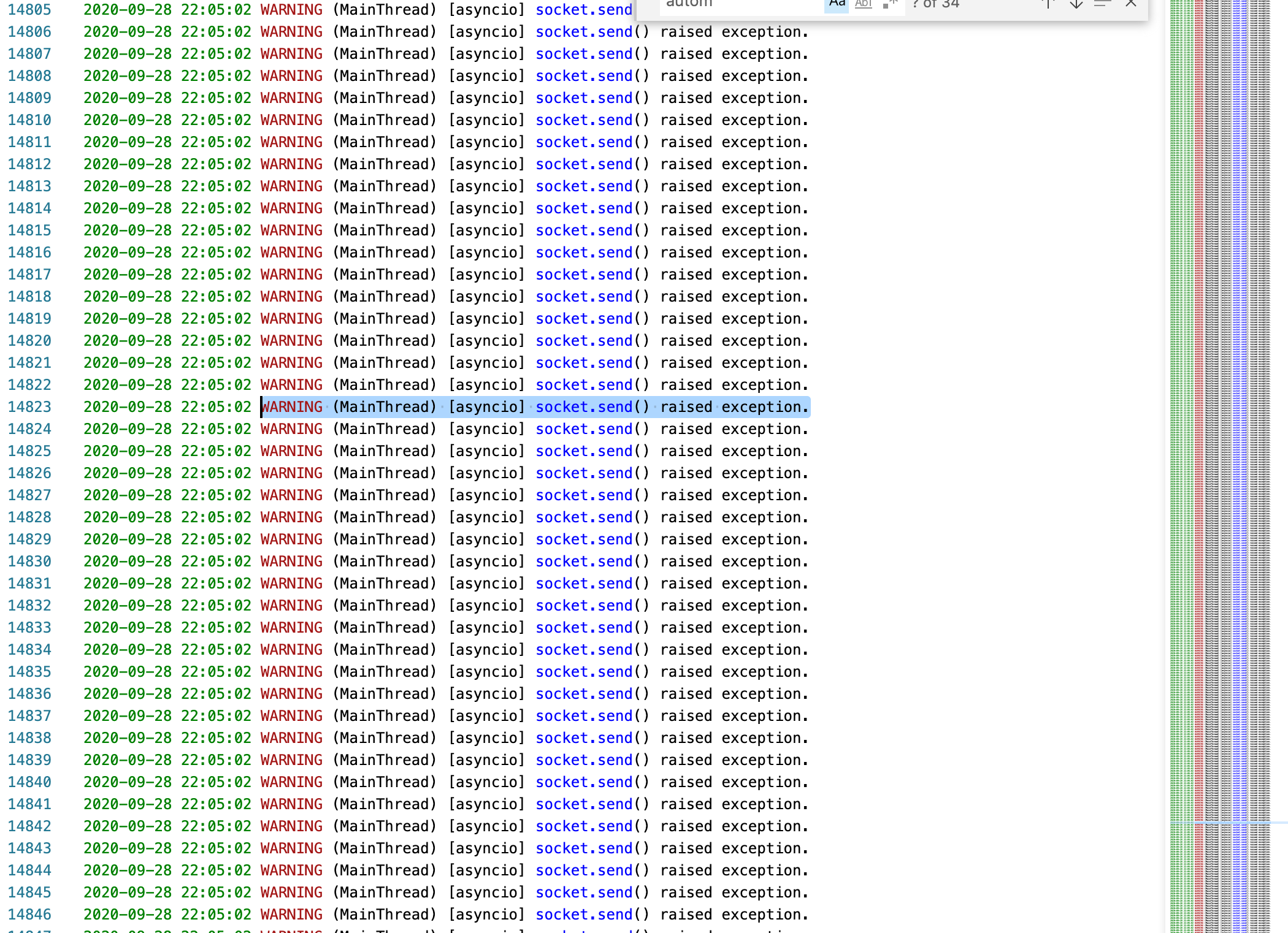

Sometimes HA suddenly hangs up with the following log:

> Sometimes HA suddenly hangs up with the following log:

It might be helpful to get an strace of the python process when this is happening

> It might be helpful to get an strace of the python process when this is happening

@bdraco

I will try, but the problem is that at that moment the Docker container becomes unresponsive and I can't run the py-spy script.

moving my data from another issue I just submitted and closing that one...

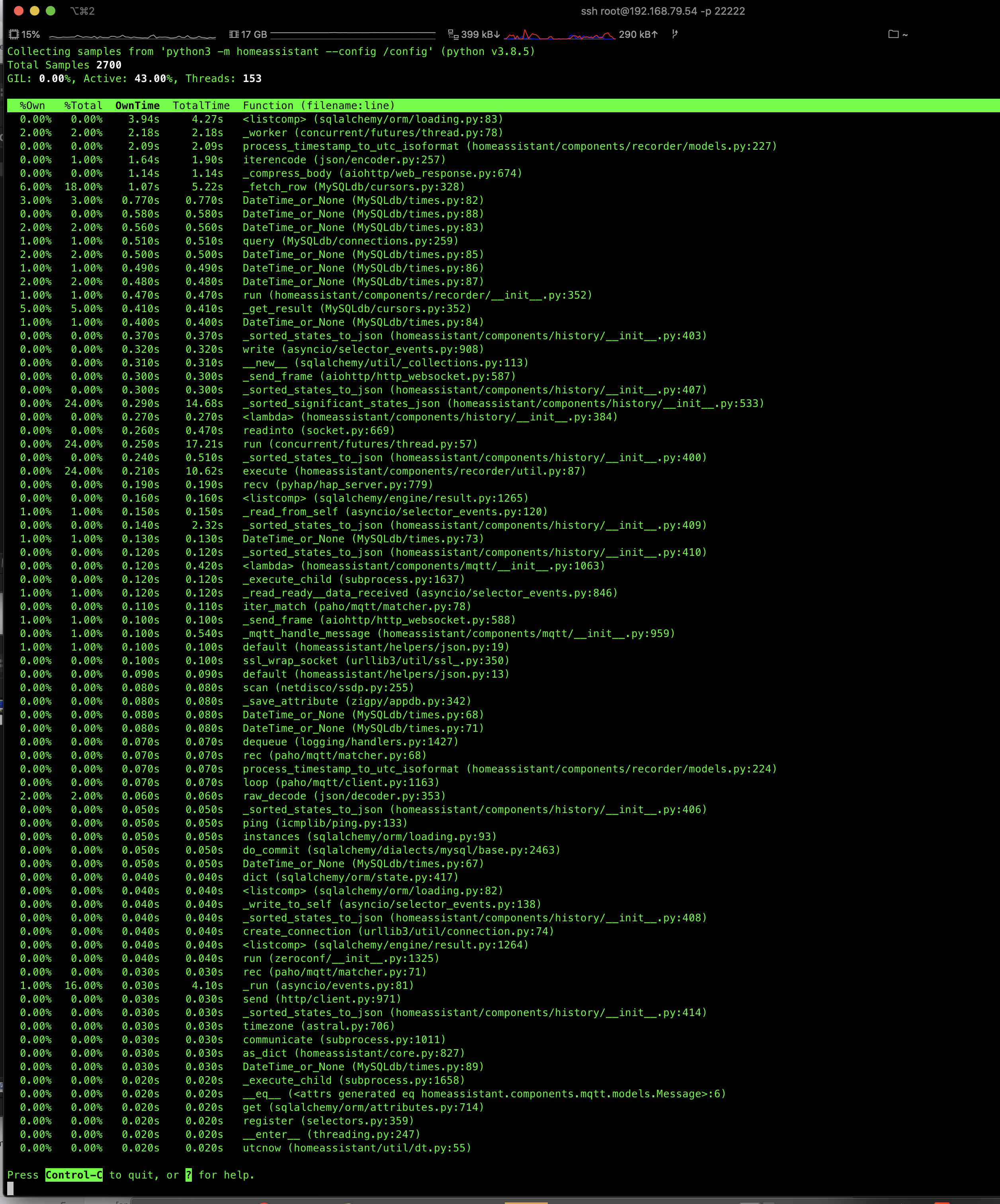

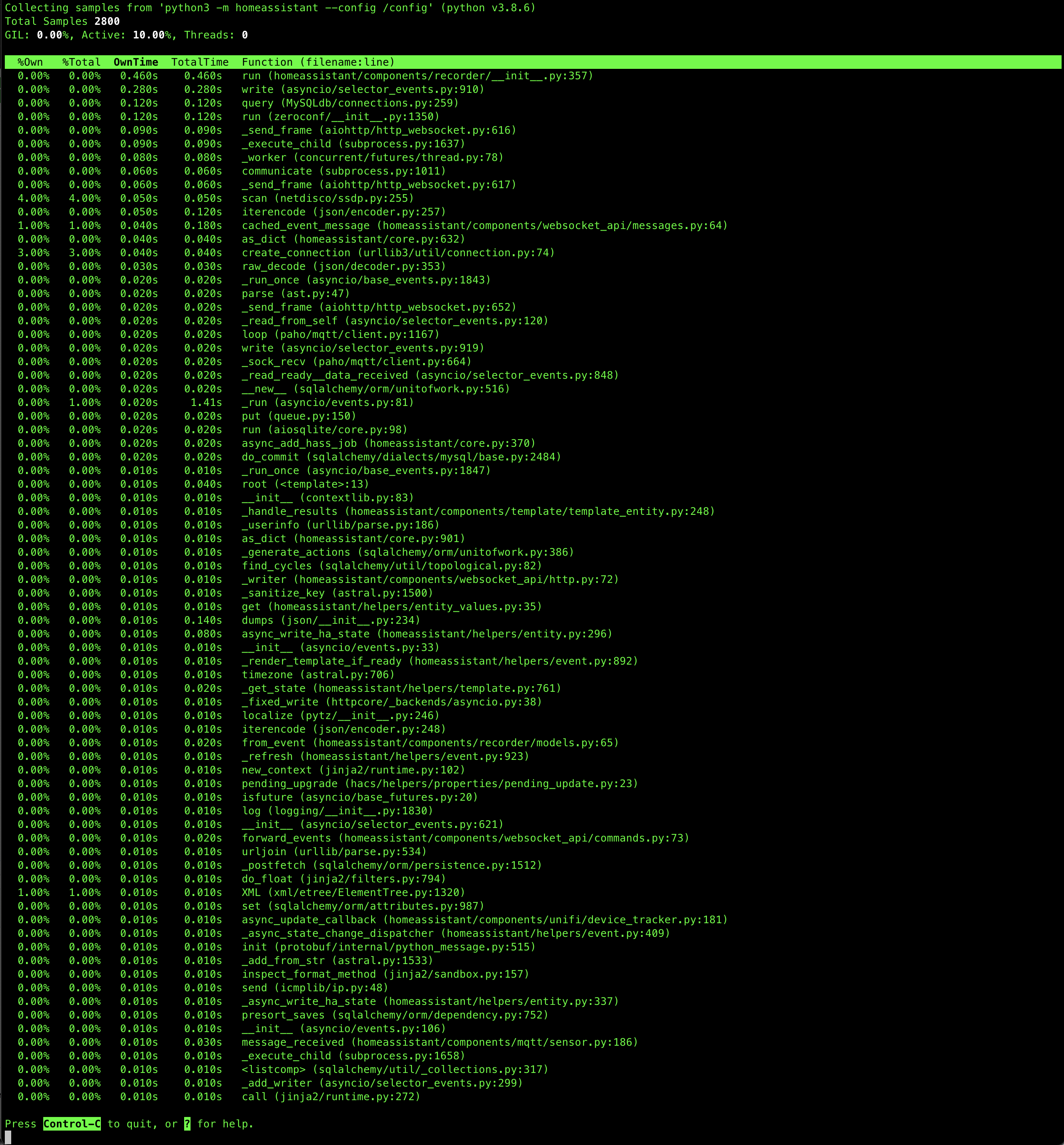

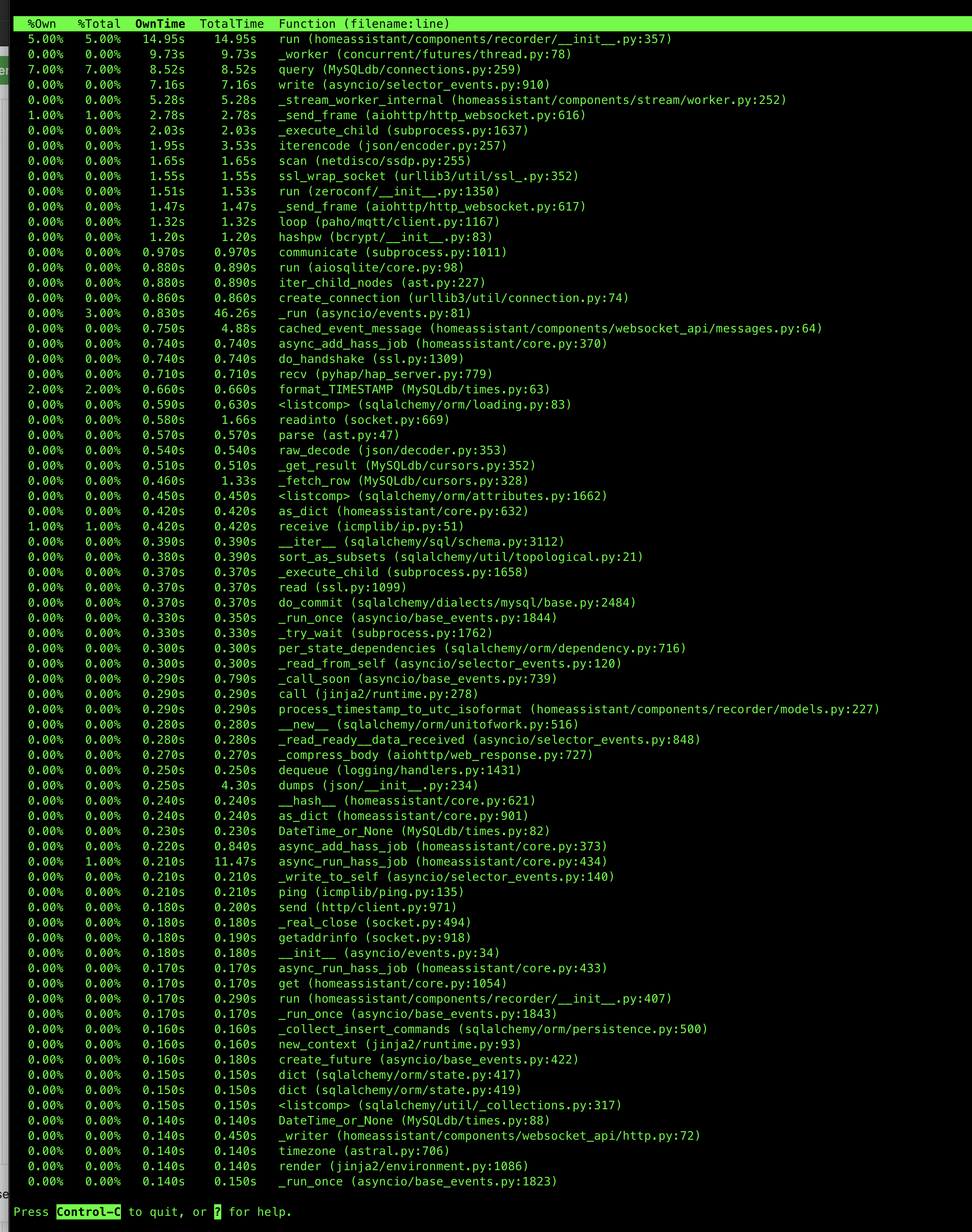

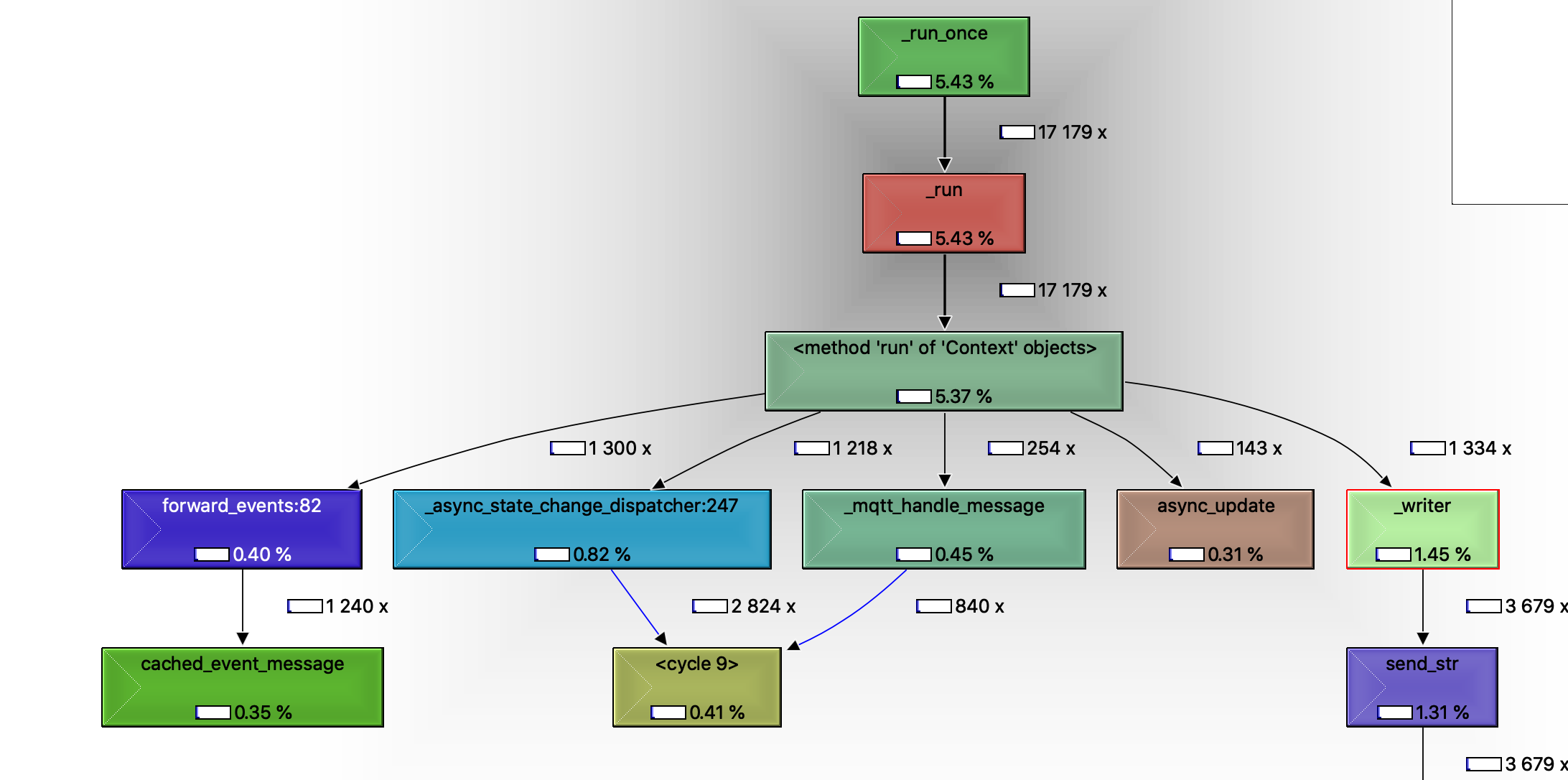

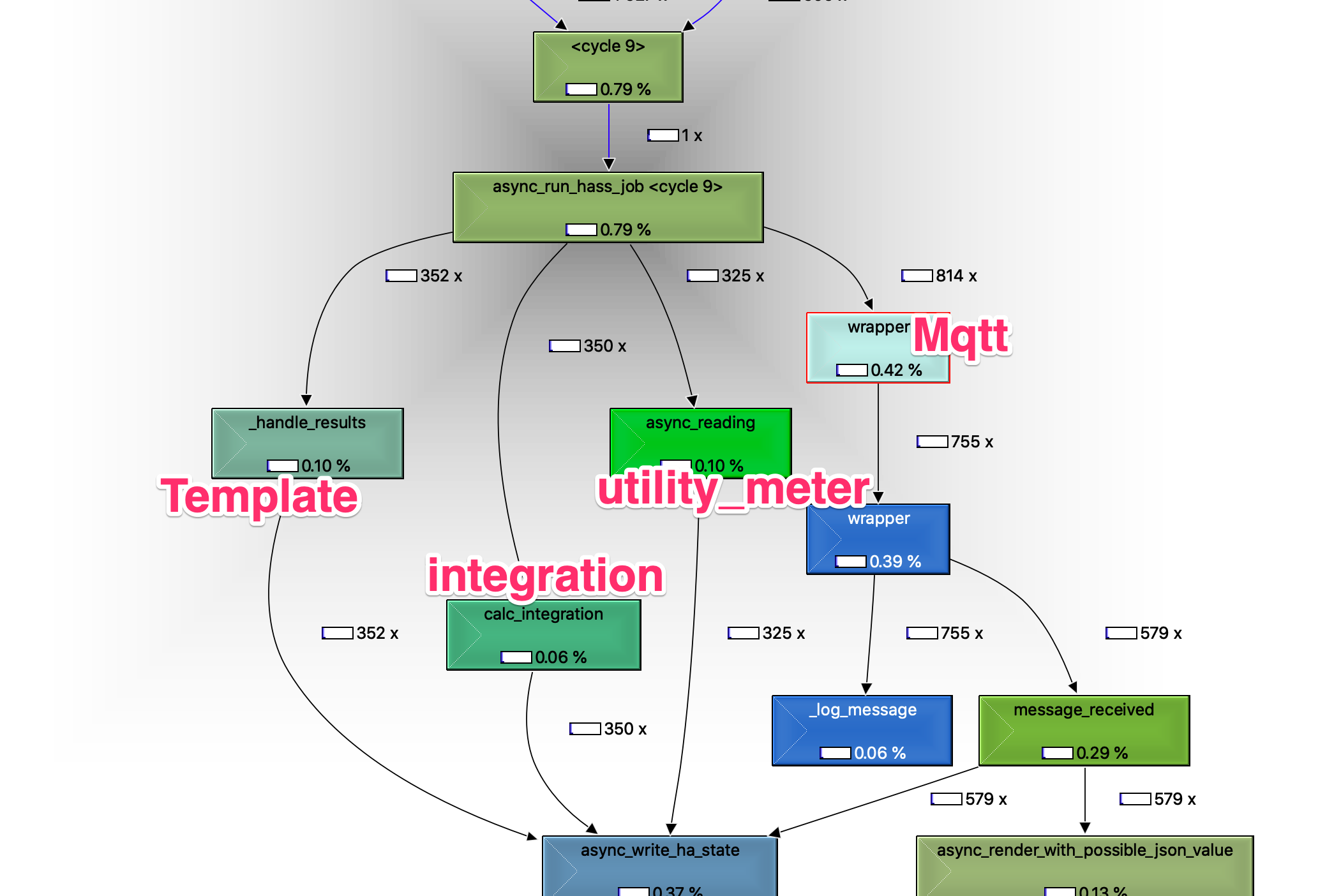

Here is a py-spy recording of the system on 0.115.5

Here is a "top" command on the system running 0.115.5

And for comparison here are the same on 0.114.2

Thank you for the py-spys. They are helpful and highlight opportunities we have to optimize the template engine. Can you also provide a log with event logging turned on? It would be helpful to know which templates are generating the additional workload in 0.115

logger:
  default: warning
  logs:
    homeassistant.helpers.event: debug

I'm still running 114.2 right now but I put the logging config above in my configuration and I'm not seeing any event debug logging at all in the home-assistant.log.

I didn't want to update again until I knew what I expected to see in the logs but I'm not seeing anything. Should I see something in V114.2 with that logger config?

Here is my current config:

logger:
  default: error
  logs:
    homeassistant.helpers.event: debug

In 0.115 you'll see something like this

2020-09-29 17:56:55 DEBUG (MainThread) [homeassistant.helpers.event] Template update {{ ((states('sensor.energy_usage') | float) + (states('sensor.energy_usage_2') | float)) / 1000 }} triggered by event: <Event state_changed[L]: entity_id=sensor.energy_usage, old_state=<state sensor.energy_usage=1856; attribution=Data provided by Sense.com, unit_of_measurement=W, friendly_name=Energy Usage, icon=mdi:flash @ 2020-09-29T12:54:55.783374-05:00>, new_state=<state sensor.energy_usage=1909; attribution=Data provided by Sense.com, unit_of_measurement=W, friendly_name=Energy Usage, icon=mdi:flash @ 2020-09-29T12:56:55.890390-05:00>>

2020-09-29 17:56:55 DEBUG (MainThread) [homeassistant.helpers.event] Template group [TrackTemplate(template=Template("{{ ((states('sensor.energy_usage') | float) + (states('sensor.energy_usage_2') | float)) / 1000 }}"), variables=None, rate_limit=None)] listens for {'all': False, 'entities': {'sensor.energy_usage', 'sensor.energy_usage_2'}, 'domains': set()}

here is my log file.

Template group [TrackTemplate(template=Template("{{states|count}}

"), variables=None), TrackTemplate(template=Template("{{states|count}} entities in {{states|groupby('domain')|count}} domains

"), variables=None), TrackTemplate(template=Template("{{states.alert|count}}

"), variables=None), TrackTemplate(template=Template("{{states.automation|count}}

"), variables=None), TrackTemplate(template=Template("{{states.binary_sensor|count}}

"), variables=None), TrackTemplate(template=Template("{{states.camera|count}}

"), variables=None), TrackTemplate(template=Template("{{states.climate|count}}

"), variables=None), TrackTemplate(template=Template("{{states.counter|count}}

"), variables=None), TrackTemplate(template=Template("{{states.cover|count}}

"), variables=None), TrackTemplate(template=Template("{{states.device_tracker|count}}

"), variables=None), TrackTemplate(template=Template("{{states.geo_location|count}}

"), variables=None), TrackTemplate(template=Template("{{states.group|count}}

"), variables=None), TrackTemplate(template=Template("{{states.input_boolean|count}}

"), variables=None), TrackTemplate(template=Template("{{states.input_datetime|count}}

"), variables=None), TrackTemplate(template=Template("{{states.input_number|count}}

"), variables=None), TrackTemplate(template=Template("{{states.input_select|count}}

"), variables=None), TrackTemplate(template=Template("{{states.input_text|count}}

"), variables=None), TrackTemplate(template=Template("{{states.light|count}}

"), variables=None), TrackTemplate(template=Template("{{states.media_player|count}}

"), variables=None), TrackTemplate(template=Template("{{states('sensor.count_persistent_notifications')}}

"), variables=None), TrackTemplate(template=Template("{{states.person|count}}

"), variables=None), TrackTemplate(template=Template("{{states.proximity|count}}

"), variables=None), TrackTemplate(template=Template("{{states.remote|count}}

"), variables=None), TrackTemplate(template=Template("{{states.scene|count}}

"), variables=None), TrackTemplate(template=Template("{{states.script|count}}

"), variables=None), TrackTemplate(template=Template("{{states.sensor|count}}

"), variables=None), TrackTemplate(template=Template("{{states.sun|count}}

"), variables=None), TrackTemplate(template=Template("{{states.switch|count}}

"), variables=None), TrackTemplate(template=Template("{{states.timer|count}}

"), variables=None), TrackTemplate(template=Template("{{states.variable|count}}

"), variables=None), TrackTemplate(template=Template("{{states.weather|count}}

"), variables=None), TrackTemplate(template=Template("{{states.zone|count}}

"), variables=None), TrackTemplate(template=Template("{{states.zwave|count}}

"), variables=None), TrackTemplate(template=Template("mdi:home-assistant"), variables=None)] listens for {'all': True, 'entities': set(), 'domains': set()}

TrackTemplate(template=Template("{% set update = states('sensor.time') %} {{expand(states.binary_sensor, states.sensor, states.switch, states.variable, states.media_player, states.light)|selectattr('state', 'in', ['unavailable','unknown','none'])

|reject('in', expand('group.entity_blacklist'))

|reject('eq', states.group.entity_blacklist)

|list|length}}

"), variables=None), TrackTemplate(template=Template("{% set update = states('sensor.time') %} {{expand(states.binary_sensor, states.sensor, states.switch, states.variable, states.media_player, states.light)|selectattr('state', 'in', ['unavailable','unknown','none'])

|reject('in', expand('group.entity_blacklist'))

|reject('eq' , states.group.entity_blacklist)

|reject('eq' , states.group.battery_status)

|map(attribute='entity_id')|list|join(', ')}}

If you disable these two template groups, does the cpu return to normal?

I completely missed that I had that count template in the system. I had done a search on "{{ states |" (with a space after states) but that counting template didn't have the space.

I've been using a Shelly switch that is integrated using the ESPHome API that then toggles a zigbee light bulb as a kind of "canary in the coalmine" thing since that's what alerted me to the CPU issue in the first place. I created an automation to record the delta-T between the switch toggle and the light responding.

For reference, here are the results of that in V114.2 (ignore those wildly extraneous values):

Here are those results in v115.5 with both of the above templates running:

After removing the counts template here are those results:

As you can see, the times have come down significantly after removing just that one template. But they are still at least twice the baseline values.

Then I removed the other template sensor above and now everything seems to be back to "normal":

However, I'm surprised that the second template I removed still has such a large impact on system resources, especially since I'm running on an i3 NUC rather than a Pi. That template is the "recommended" solution put forth in the "heads up!" thread, since it doesn't look at all state changes but only at a few domains. But the domains it still watches are the really active ones (especially the "sensor" domain), so I guess I shouldn't really be surprised after all.
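To put rough numbers on that reasoning, here is a toy model. It assumes a template is re-rendered once per state change that matches its listeners, using the same three keys (`all`, `domains`, `entities`) that show up in the "listens for" log line earlier in the thread. The event counts and entity names are invented for illustration and are not measurements from my system.

```python
def count_rerenders(events, listen_all=False, domains=frozenset(), entities=frozenset()):
    """Count state changes that would trigger a re-render in this toy model.

    events is an iterable of entity_ids, one per state change.
    """
    renders = 0
    for entity_id in events:
        domain = entity_id.split(".", 1)[0]
        if listen_all or domain in domains or entity_id in entities:
            renders += 1
    return renders


# Hypothetical minute of traffic: sensors dominate, everything else is quiet.
EVENTS = (
    ["sensor.power"] * 50
    + ["sensor.temperature"] * 30
    + ["light.kitchen"] * 2
    + ["zone.home"] * 1
    + ["sensor.time"] * 1
)

WATCHED_DOMAINS = frozenset(
    {"binary_sensor", "sensor", "switch", "variable", "media_player", "light"}
)

if __name__ == "__main__":
    print("all states:     ", count_rerenders(EVENTS, listen_all=True))
    print("six domains:    ", count_rerenders(EVENTS, domains=WATCHED_DOMAINS))
    print("sensor.time only:", count_rerenders(EVENTS, entities=frozenset({"sensor.time"})))
```

With traffic shaped like this, limiting the template to those domains barely reduces the number of renders, because nearly every event comes from a watched domain.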

It seems like a good argument for bringing back some form of explicit control over how frequently the templates get rendered.

Hope it helps...and it wasn't too "wordy"... :)

> It seems like a good argument for bringing back some form of explicit control over how frequently the templates get rendered.

That is being discussed here https://github.com/home-assistant/architecture/issues/206

> Hope it helps...and it wasn't too "wordy"... :)

Thanks, 0.116 should make things quite a bit better regardless because all of those cases are more performant.

@gieljnssns Can you provide new py-spys with 0.116beta, or with 0.116 when it comes out?

I will do that tomorrow.

This py-spy is from the second restart of HA after the update to 0.116b0, taken as soon as it was possible

https://www.dropbox.com/s/rz9ywoskzf8m2jb/spy-0.116-startup.svg?dl=0

This py-spy covers 10 min of running 0.116b0; roughly once a minute my fans spin up

https://www.dropbox.com/s/0x86vnva13kuckh/spy-0.116-10min.svg?dl=0

and I had errors from ZHA at startup

2020-10-01 09:35:35 ERROR (MainThread) [zigpy_deconz.uart] Lost serial connection: read failed: device reports readiness to read but returned no data (device disconnected or multiple access on port?)

2020-10-01 09:35:36 ERROR (MainThread) [zigpy.application] Couldn't start application

2020-10-01 09:35:36 ERROR (MainThread) [homeassistant.components.zha.core.gateway] Couldn't start deCONZ = dresden elektronik deCONZ protocol: ConBee I/II, RaspBee I/II coordinator

Traceback (most recent call last):

File "/usr/src/homeassistant/homeassistant/components/zha/core/gateway.py", line 147, in async_initialize

self.application_controller = await app_controller_cls.new(

File "/usr/local/lib/python3.8/site-packages/zigpy/application.py", line 68, in new

await app.startup(auto_form)

File "/usr/local/lib/python3.8/site-packages/zigpy_deconz/zigbee/application.py", line 66, in startup

self.version = await self._api.version()

File "/usr/local/lib/python3.8/site-packages/zigpy_deconz/api.py", line 435, in version

(self._proto_ver,) = await self[NetworkParameter.protocol_version]

File "/usr/local/lib/python3.8/site-packages/zigpy_deconz/api.py", line 400, in read_parameter

r = await self._command(Command.read_parameter, 1 + len(data), param, data)

File "/usr/local/lib/python3.8/site-packages/zigpy_deconz/api.py", line 304, in _command

return await asyncio.wait_for(fut, timeout=COMMAND_TIMEOUT)

File "/usr/local/lib/python3.8/asyncio/tasks.py", line 490, in wait_for

raise exceptions.TimeoutError()

asyncio.exceptions.TimeoutError

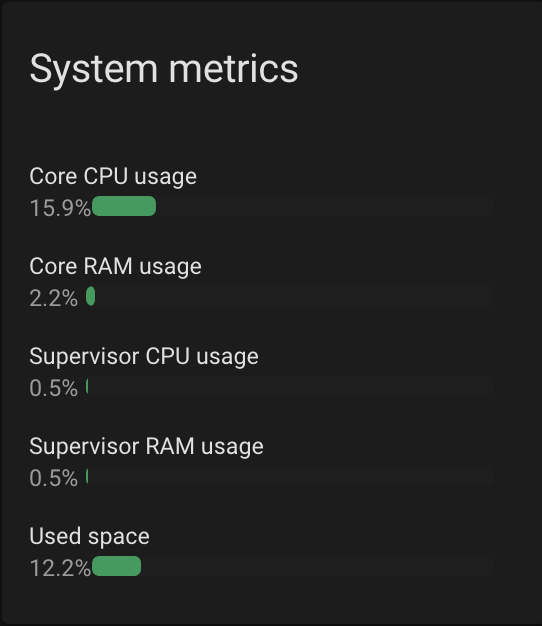

CPU is still around 5 to 6 %, while before 0.115.x it was 2 to 3 %

If you need more - just ask....

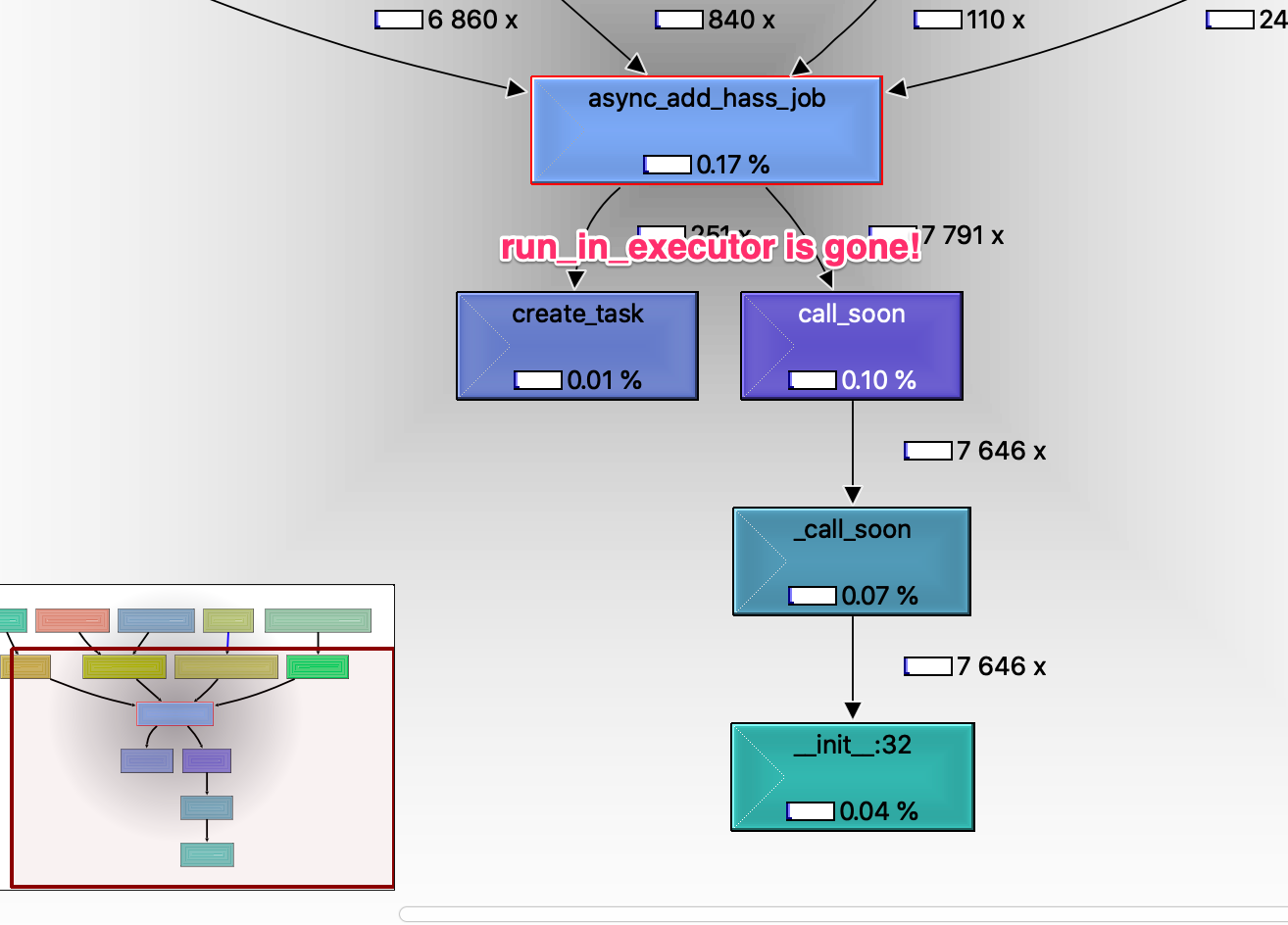

We probably can reduce the relookups with expire_on_commit set to False and only expiring the cache every 5 minutes.

Actually, I think we just need to set expire_on_commit to False for the recorder, as each commit is emptying the cache and causing the data to be refetched from the DB every second. In short, https://github.com/home-assistant/core/pull/40467 didn't actually solve the issue.
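To illustrate why that matters, here is a small stand-alone model of the effect. `expire_on_commit` is a SQLAlchemy session option that defaults to True, but this sketch does not use SQLAlchemy or the recorder code. The `CachingSession` class, the `kv` table, and the one-commit-per-second loop are all made up for the example.

```python
import sqlite3


class CachingSession:
    """Toy session that caches looked-up rows by key (not SQLAlchemy)."""

    def __init__(self, conn, expire_on_commit=True):
        self.conn = conn
        self.expire_on_commit = expire_on_commit
        self._cache = {}
        self.fetches = 0  # number of times we had to go to the database

    def get(self, key):
        if key not in self._cache:
            self.fetches += 1
            row = self.conn.execute(
                "SELECT value FROM kv WHERE key = ?", (key,)
            ).fetchone()
            self._cache[key] = row[0] if row else None
        return self._cache[key]

    def commit(self):
        self.conn.commit()
        if self.expire_on_commit:
            # Every commit throws the cache away, forcing a re-lookup.
            self._cache.clear()


def simulate(expire_on_commit, commits=60):
    """Do one lookup plus one commit per 'second' and return the DB fetch count."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE kv (key TEXT PRIMARY KEY, value TEXT)")
    conn.execute("INSERT INTO kv VALUES ('event_type', 'state_changed')")
    conn.commit()
    session = CachingSession(conn, expire_on_commit=expire_on_commit)
    for _ in range(commits):
        session.get("event_type")
        session.commit()
    conn.close()
    return session.fetches


if __name__ == "__main__":
    print("expire_on_commit=True: ", simulate(True))
    print("expire_on_commit=False:", simulate(False))
```

In this model, a minute of once-per-second commits costs one database lookup per commit when the cache expires on commit, and a single lookup when it does not.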

@gieljnssns Beta 1 has the additional fix for writing the database. Can you give that a shot and provide additional py-spys?

@bdraco

Some new py-spys:

This one covers 120 s during startup; it takes about 2 min to start up

https://www.dropbox.com/s/1nhyvrbiumlaxae/spy-0.116b1-startup.svg?dl=0

The next one covers 600 s; the fans still spin up sometimes

https://www.dropbox.com/s/2wr3sazjsj5ujmj/spy-0.116b1-10min.svg?dl=0

dump

bash-5.0# py-spy dump --pid 232

Process 232: python3 -m homeassistant --config /config

Python v3.8.5 (/usr/local/bin/python3.8)

Thread 232 (idle): "MainThread"

select (selectors.py:468)

_run_once (asyncio/base_events.py:1823)

run_forever (asyncio/base_events.py:570)

run_until_complete (asyncio/base_events.py:603)

run (asyncio/runners.py:43)

run (homeassistant/runner.py:133)

main (homeassistant/__main__.py:312)

<module> (homeassistant/__main__.py:320)

_run_code (runpy.py:87)

_run_module_as_main (runpy.py:194)

Thread 254 (idle): "Thread-1"

dequeue (logging/handlers.py:1427)

_monitor (logging/handlers.py:1478)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 255 (idle): "SyncWorker_0"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 256 (idle): "SyncWorker_1"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 257 (idle): "SyncWorker_2"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 258 (idle): "SyncWorker_3"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 259 (idle): "SyncWorker_4"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 260 (idle): "SyncWorker_5"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 263 (idle): "SyncWorker_6"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 264 (idle): "SyncWorker_7"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 265 (idle): "SyncWorker_8"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 266 (idle): "SyncWorker_9"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 267 (idle): "SyncWorker_10"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 268 (idle): "SyncWorker_11"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 269 (idle): "SyncWorker_12"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 270 (idle): "SyncWorker_13"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 271 (idle): "SyncWorker_14"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 272 (idle): "SyncWorker_15"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 273 (idle): "SyncWorker_16"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 274 (idle): "SyncWorker_17"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 275 (idle): "SyncWorker_18"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)

_bootstrap_inner (threading.py:932)

_bootstrap (threading.py:890)

Thread 276 (idle): "SyncWorker_19"

_worker (concurrent/futures/thread.py:78)

run (threading.py:870)