Core: ZHA Devices becoming unavailable

The problem

I have noticed that more and more of my ZHA devices are becoming unavailable. This has become more frequent since the 0.112.X updates. I restored my 0.111.4 snapshot and everything works as it did before.

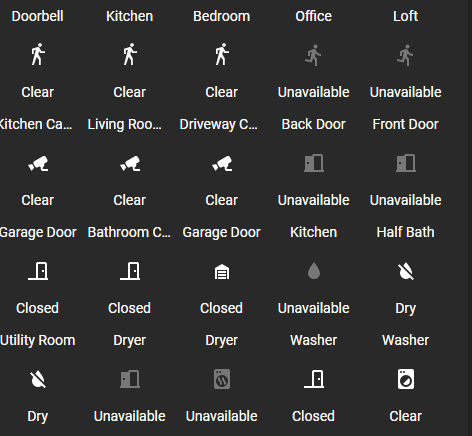

This is a screenshot of some of my Zigbee entities that are unavailable.

Environment

System Health | Value
-- | --
arch | x86_64
chassis | desktop
dev | false
docker | true
docker_version | 19.03.6
hassio | true
host_os | Ubuntu 18.04.4 LTS
installation_type | Home Assistant Supervised
os_name | Linux
os_version | 4.15.0-108-generic
python_version | 3.7.7
supervisor | 228
timezone | America/Indiana/Indianapolis
version | 0.112.1
virtualenv | false

- Home Assistant Core release with the issue: 0.112.X

- Last working Home Assistant Core release (if known): 0.111.4

- Operating environment (OS/Container/Supervised/Core): Supervised

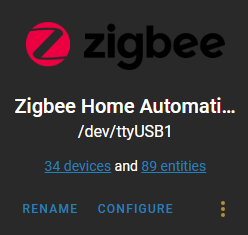

- Integration causing this issue: ZHA

- Link to integration documentation on our website: https://www.home-assistant.io/integrations/zha/

Problem-relevant configuration.yaml

Traceback/Error logs

I have attached a Home Assistant log file: home-assistant.log

The following loggers are set:

logger:
  default: critical
  logs:
    homeassistant.core: debug
    homeassistant.components.zha: debug
    bellows.zigbee.application: debug
    bellows.ezsp: debug
    zigpy: debug
    zigpy_cc: debug
    zigpy_deconz.zigbee.application: debug
    zigpy_deconz.api: debug
    zigpy_xbee.zigbee.application: debug
    zigpy_xbee.api: debug
    zigpy_zigate: debug
    zhaquirks: debug

Additional information

Smartthings Multi Sensor

multi by Samjin

Firmware: 0x00000011

IEEE: 28:6d:97:00:01:0b:15:4c

Nwk: 0x00a7

Device Type: EndDevice

LQI: Unknown

RSSI: Unknown

Last Seen: 2020-07-03T11:58:14

Power Source: Battery or Unknown

Quirk: zhaquirks.samjin.multi2.SmartthingsMultiPurposeSensor2019

Smartthings Water Leak Sensor

water by Samjin

Firmware: 0x00000011

IEEE: 28:6d:97:00:01:0b:67:1a

Nwk: 0x0860

Device Type: EndDevice

LQI: Unknown

RSSI: Unknown

Last Seen: 2020-07-03T11:54:25

Power Source: Battery or Unknown

Smartthings Motion Sensor

motion by Samjin

Firmware: 0x00000011

IEEE: 28:6d:97:00:01:0c:28:54

Nwk: 0xba27

Device Type: EndDevice

LQI: Unknown

RSSI: Unknown

Last Seen: 2020-07-03T12:00:58

Power Source: Battery or Unknown

Sengled Light

Device info

E11-G13 by sengled

Firmware: 0x00000009

IEEE: b0:ce:18:14:03:59:c9:34

Nwk: 0xf22f

Device Type: EndDevice

LQI: Unknown

RSSI: Unknown

Last Seen: 2020-07-03T12:00:27

Power Source: Mains


Might be related to #37372, but I am not using deCONZ.

zha documentation

zha source

(message by IssueLinks)

Hey there @dmulcahey, @adminiuga, mind taking a look at this issue as it has been labeled with an integration (zha) you are listed as a codeowner for? Thanks!

(message by CodeOwnersMention)

Switched to ZHA and the issue disappeared. Maybe something to do with the new DB structure?

When you trigger an unavailable device, does it come back online?

No. I tried turning the lights off and on again, triggering door sensors, etc. I also tried calling the light services against the entities manually, and that didn't do anything.

Eventually I restored to 0.111.4 because every light was unavailable, as were the majority of the multi, water, and motion sensors.

I have a backup of 0.112.1 that I can restore if needed to test anything.

Are you using a ConBee as your radio? Hrm, there were no changes in zigpy_deconz over the last few HA releases.

So if you rollback, devices still stay unavailable?

I use a HUSBZB-1 and the ZHA page for adding devices. I rolled back to 0.111.4, and some devices were unavailable for about 15 minutes, then everything was back to normal.

Make sure you stop the HA instance gracefully, so that it properly saves when each device was last seen.

When HA starts, devices are marked available or unavailable depending on when traffic was last received from each device. In your case:

2020-07-03 17:38:07 DEBUG (MainThread) [homeassistant.components.zha.core.gateway] [0x838e](sengled E11-G13) restored as 'unavailable', last seen: 8:18:12 ago

2020-07-03 17:38:07 DEBUG (MainThread) [homeassistant.components.zha.core.gateway] [0xc9fa](sengled E11-G13) restored as 'unavailable', last seen: 5:44:25 ago

2020-07-03 17:38:07 DEBUG (MainThread) [homeassistant.components.zha.core.gateway] [0x2820](sengled E11-G13) restored as 'unavailable', last seen: 5:41:31 ago

2020-07-03 17:38:07 DEBUG (MainThread) [homeassistant.components.zha.core.gateway] [0xd388](sengled E11-G13) restored as 'unavailable', last seen: 5:39:26 ago

2020-07-03 17:38:07 DEBUG (MainThread) [homeassistant.components.zha.core.gateway] [0xc06a](sengled E11-G13) restored as 'unavailable', last seen: 5:36:50 ago

2020-07-03 17:38:07 DEBUG (MainThread) [homeassistant.components.zha.core.gateway] [0x9926](sengled E11-G13) restored as 'unavailable', last seen: 5:36:50 ago

2020-07-03 17:38:07 DEBUG (MainThread) [homeassistant.components.zha.core.gateway] [0x00a7](Samjin multi) restored as 'available', last seen: 5:39:54 ago

2020-07-03 17:38:07 DEBUG (MainThread) [homeassistant.components.zha.core.gateway] [0x075a](Samjin multi) restored as 'available', last seen: 5:43:09 ago

2020-07-03 17:38:07 DEBUG (MainThread) [homeassistant.components.zha.core.gateway] [0x07bf](Samjin multi) restored as 'available', last seen: 5:36:32 ago

2020-07-03 17:38:07 DEBUG (MainThread) [homeassistant.components.zha.core.gateway] [0xdd73](Samjin motion) restored as 'available', last seen: 5:37:32 ago

2020-07-03 17:38:07 DEBUG (MainThread) [homeassistant.components.zha.core.gateway] [0xf22f](sengled E11-G13) restored as 'unavailable', last seen: 5:37:40 ago

2020-07-03 17:38:07 DEBUG (MainThread) [homeassistant.components.zha.core.gateway] [0xe89d](sengled E11-G13) restored as 'unavailable', last seen: 5:39:36 ago

2020-07-03 17:38:07 DEBUG (MainThread) [homeassistant.components.zha.core.gateway] [0xc161](Samjin water) restored as 'available', last seen: 5:48:04 ago

2020-07-03 17:38:07 DEBUG (MainThread) [homeassistant.components.zha.core.gateway] [0x0860](Samjin water) restored as 'available', last seen: 5:43:42 ago

2020-07-03 17:38:07 DEBUG (MainThread) [homeassistant.components.zha.core.gateway] [0xbc4e](Samjin water) restored as 'available', last seen: 5:47:18 ago

2020-07-03 17:38:07 DEBUG (MainThread) [homeassistant.components.zha.core.gateway] [0x9821](Samjin motion) restored as 'available', last seen: 5:43:48 ago

2020-07-03 17:38:07 DEBUG (MainThread) [homeassistant.components.zha.core.gateway] [0xba27](Samjin motion) restored as 'available', last seen: 5:37:09 ago

2020-07-03 17:38:07 DEBUG (MainThread) [homeassistant.components.zha.core.gateway] [0xacbf](Samjin motion) restored as 'available', last seen: 5:37:47 ago

2020-07-03 17:38:07 DEBUG (MainThread) [homeassistant.components.zha.core.gateway] [0x0abf](Samjin multi) restored as 'available', last seen: 5:39:49 ago

2020-07-03 17:38:07 DEBUG (MainThread) [homeassistant.components.zha.core.gateway] [0x363d](Samjin multi) restored as 'available', last seen: 5:36:19 ago

2020-07-03 17:38:07 DEBUG (MainThread) [homeassistant.components.zha.core.gateway] [0x4bc8](Samjin multi) restored as 'available', last seen: 5:37:51 ago

2020-07-03 17:38:07 DEBUG (MainThread) [homeassistant.components.zha.core.gateway] [0x6736](sengled E11-G13) restored as 'unavailable', last seen: 5:36:50 ago

2020-07-03 17:38:07 DEBUG (MainThread) [homeassistant.components.zha.core.gateway] [0x9a42](Samjin button) restored as 'available', last seen: 5:39:59 ago

2020-07-03 17:38:07 DEBUG (MainThread) [homeassistant.components.zha.core.gateway] [0x8815](Samjin button) restored as 'available', last seen: 5:40:13 ago

2020-07-03 17:38:07 DEBUG (MainThread) [homeassistant.components.zha.core.gateway] [0x9b70](Samjin button) restored as 'unavailable', last seen: 5 days, 15:41:29 ago

Most were last seen about five and a half hours ago. This doesn't make any sense unless the HA instance was forcibly terminated and didn't have a chance to update device storage.
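To make the restore rule concrete, here is a minimal Python sketch of the decision these log lines reflect. It assumes a 2-hour threshold for mains-powered devices and a 6-hour threshold for battery-powered ones. Those values are an assumption, chosen because they are consistent with the log above: Sengled bulbs (power source "Mains") last seen about 5.5 hours earlier are restored as 'unavailable', while Samjin sensors ("Battery or Unknown") of the same age are 'available'. `restored_state` and `parse_ago` are hypothetical helpers, not ZHA's API.

```python
from datetime import timedelta

# ASSUMPTION: thresholds inferred from the log, not read from the ZHA source.
CONSIDER_UNAVAILABLE_MAINS = timedelta(hours=2)
CONSIDER_UNAVAILABLE_BATTERY = timedelta(hours=6)


def restored_state(last_seen_ago, mains_powered):
    """Return 'available' or 'unavailable' for a device restored at startup."""
    if mains_powered:
        threshold = CONSIDER_UNAVAILABLE_MAINS
    else:
        threshold = CONSIDER_UNAVAILABLE_BATTERY
    return "available" if last_seen_ago < threshold else "unavailable"


def parse_ago(text):
    """Parse the 'H:MM:SS' or 'N days, H:MM:SS' form used in the log lines."""
    days = 0
    if "day" in text:
        day_part, text = text.split(", ")
        days = int(day_part.split()[0])
    hours, minutes, seconds = (int(part) for part in text.split(":"))
    return timedelta(days=days, hours=hours, minutes=minutes, seconds=seconds)


# Entries taken from the log above.
print(restored_state(parse_ago("5:36:50"), mains_powered=True))            # sengled E11-G13 -> unavailable
print(restored_state(parse_ago("5:39:54"), mains_powered=False))           # Samjin multi -> available
print(restored_state(parse_ago("5 days, 15:41:29"), mains_powered=False))  # Samjin button -> unavailable
```

Under this model a stale `last_seen` alone is enough to restore a mains-powered bulb as unavailable, which is why a missed save at shutdown shows up as a wall of unavailable lights after a restart.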

But some devices are also not responding to requests:

2020-07-03 17:38:17 DEBUG (MainThread) [zigpy.device] [0xc9fa] Delivery error for seq # 0x13, on endpoint id 1 cluster 0x0006: message send failure

2020-07-03 17:38:17 DEBUG (MainThread) [zigpy.device] [0xc9fa] Delivery error for seq # 0x15, on endpoint id 1 cluster 0x0008: message send failure

2020-07-03 17:38:17 DEBUG (MainThread) [homeassistant.components.zha.core.channels.base] [0xc9fa:1:0x0008]: failed to get attributes '['current_level']' on 'level' cluster: [0xc9fa:1:0x0008]: Message send failure

2020-07-03 17:38:17 DEBUG (MainThread) [zigpy.device] [0xc9fa] Delivery error for seq # 0x17, on endpoint id 1 cluster 0x0000: message send failure

2020-07-03 17:38:21 DEBUG (MainThread) [zigpy.device] [0xd388] Delivery error for seq # 0x21, on endpoint id 1 cluster 0x0006: message send failure

2020-07-03 17:38:21 DEBUG (MainThread) [homeassistant.components.zha.core.channels.base] [0xd388:1:0x0006]: initializing channel: from_cache: False

One thing I'm noticing: all your devices are Zigbee end devices (Sengled bulbs and battery-operated devices), which means they need a Zigbee router or the coordinator to connect to the network. I didn't notice any Zigbee routers in your environment, and unless all the Sengleds are in the same room as the coordinator, that is not a good mesh setup.

Also, the maximum number of direct child devices was reduced from 32 to 24, and I think you have about 25. Please consider adding a Zigbee router/repeater device. Generally, most mains-powered devices (with a neutral) act as Zigbee routers.
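The slot arithmetic behind this suggestion can be sketched in a few lines of Python. This is a hypothetical helper, not part of ZHA or bellows. It assumes a mesh with no routers, so every end device has to be a direct child of the coordinator, and it uses the two limits mentioned in this thread (24 after the change, 32 before). The "EndDevice"/"Router" labels follow the device type names shown on ZHA device cards.

```python
from collections import Counter


def coordinator_slots_left(device_types, max_end_device_children):
    """Spare end-device slots on the coordinator in a mesh with no routers.

    Without routers, every end device must join the coordinator directly, so the
    number of "EndDevice" entries is the number of children it has to hold.
    A negative result means more end devices than the coordinator allows.
    """
    counts = Counter(device_types)
    if counts["Router"]:
        # End devices may parent to routers instead, so a plain count says nothing.
        raise ValueError("routers present: direct children cannot be inferred from types alone")
    return max_end_device_children - counts["EndDevice"]


# Hypothetical mesh matching the thread: about 25 end devices, no routers.
mesh = ["EndDevice"] * 25
for limit in (24, 32):
    print(limit, coordinator_slots_left(mesh, limit))
```

With about 25 end devices and no routers, a limit of 24 leaves the coordinator one slot short, while 32 leaves seven spare. Once routers are present, end devices can parent to them instead, which is why adding routers relieves the coordinator's limit.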

I restart HA via Configuration -> Server Controls and reboot the host via the Supervisor tab.

Was the device count reduced in 0.112? I don't see that in the release notes at all. That could explain some of the issues. I have some plugs I have been sitting on because I haven't needed them, since the LQI/RSSI numbers on my ZHA network card look okay: the majority are about -70 RSSI and 255 LQI.

Hrm, I would expect rebooting the host via the Supervisor to stop HA properly, but I need to confirm.

The change to 24 end devices might have slipped through the cracks. It was marked as a breaking change in bellows, but indeed I don't see a breaking label on the ZHA dependency bump. There was an issue with the Elelabs Zigbee controller failing with 32 end devices.

But in my opinion, if you have more than 16 end devices, you really should add some Zigbee repeaters/routers.

Won't disagree with that, I will try adding my plugs some time in the next few days to see if that helps at all.

I did roll forward from 0.111.4 to 0.112.2 today to verify if I was still having issues and after a couple of hours, devices started dropping again. Rolled back to 0.111.4 again and it fixes itself.

Hrm, I wonder if it's just going bananas because it has 25 children but is configured to support only 24.

To test the theory, add this to configuration.yaml:

zha:
  zigpy_config:
    ezsp_config:
      CONFIG_MAX_END_DEVICE_CHILDREN: 32

I have rolled forward again and added this to my configuration. I will let this run until tomorrow and see what happens.

I haven't had any issues since I added this to my configuration with 0.112.2. I was also able to add new devices, which I previously couldn't, though I'm not sure whether that was related.

Didn't have any issues with the above configuration change, so good to know about that.

Just to test, I added 4 plugs throughout the house and reverted the above config change. Will let it go and see if the routers/repeaters help out.

Wanted to drop a comment here to say I am having the same issues. I use the HUSBZB-1 hub plugged directly into my RPi running HA. I have been using the ZHA integration with this setup for a while with no issues. After upgrading to 0.112, my devices started to drop off and would not add back. I could monitor the traffic and see the communication, but the integration would not set the devices up properly.

Rolling back to 0.111 fixed all issues. Yes, I tried adding the config snippet above, and I am only running 17 devices, 5 of which are routers.

"Dropping off and not adding back" is vague. Please post debug logs reproducing the problem: a debug log from 0.112 showing the initial status of the devices and when they were last seen.

Just an update. With the 4 plugs added, I haven't noticed any devices becoming unavailable. Only time devices are unavailable is when I restart or reboot the host via the HA UI, which I think is related to states not saving properly or something.

I'd also like to mention that I had the problem of an unavailable device after upgrading from 111.4 to 112.4. In my case it was the Philips Hue outdoor motion sensor.

A rollback to 111.4 made the device available again. Before doing that, I tried rebooting, etc., with no success. I also have a bunch of plugs and other bulbs that can act as routers.

> Just an update. With the 4 plugs added, I haven't noticed any devices becoming unavailable. Only time devices are unavailable is when I restart or reboot the host via the HA UI, which I think is related to states not saving properly or something.

Make sure zha.storage is in the storage directory inside the HA config directory, with proper file permissions, and that you don't force-kill HA when you shut down. Let it shut down cleanly.

@as19git67 you need to post essentially the entire issue template with your information and debug logs, especially where it posts the status of device restoration.

@dmulcahey I am not force killing anything. I restart with Configuration --> Server Controls --> Restart and reboot with Supervisor --> System --> Reboot Host or the hassio.host_reboot service. zha.storage looks okay as well since I have all my devices (new and old) listed in it.

@steve-gombos having the devices in it is great but when this happens what is in the "last seen" tag for the devices in question? That is what is used for availability calculations and based on previous logs those times seem way off.

@steve-gombos also, how long do your restarts take?

@flannelman173 @as19git67 please open separate issues and ensure you fill out the entire template. Thanks!

@dmulcahey I will follow up with you when I see them become unavailable again. Today everything seems fine, but I will log when the states show up as unavailable.

All restarts I did today:

- UI Loaded: 15 sec

- HA Started: 25 sec

> @flannelman173 @as19git67 please open separate issues and ensure you fill out the entire template. Thanks!

Will do. Is there anything specific you would like to see in the logs?

I noticed a similar issue this morning. I have recently started playing with a CC2538 (Modkam) and the ZHA integration. I've paired a few buttons and window sensors. Yesterday I was running some tests, opening and closing the window and restarting HA. If I opened the window and waited about 5 minutes before rebooting HA, the correct state was restored after the reboot; with only about 2 minutes, however, it always restored the previous state (i.e. closed instead of open). I tried the same thing this morning after both windows had been left open for over 30 minutes, and both sensors were Unavailable after the reboot, but once I opened and closed the window, the state quickly updated.

I haven't been able to reproduce this since I added the zigbee plugs. Really don't want to remove them either to try to find out haha. Running 34 devices right now, and has been like this for over a week. On reboot it looks like the last seen is saved into the zha storage fine and restored when HA comes up.

I doubt this has anything to do with the actual reception, as values are being updated absolutely fine. Perhaps there is some sort of delay in saving cached values to storage; I really don't know the mechanism.

> @steve-gombos also, how long do your restarts take?

Thank you for the tip. I found my HA restarts were taking more than 5 minutes due to an unrelated issue. After I fixed that issue, an HA restart took only 30 s, and all Zigbee devices were operational again.

I also checked that the devices were listed in zha.storage.

@resoai last seen is saved upon graceful shutdown/reset. If for some reason HA is not shut down properly, it is lost. During startup, when it's restored, you can see how long ago ZHA thinks each device was last seen.

This has been happening to me again. I'm not sure if it is just slow updates to the coordinator after a restart or something else. I am running 37 devices (5 are plugs/repeaters).

One thing I did notice is that the zha.storage file doesn't appear to be updated when I restart. Its last-modified date is 8/16, so all of the "Last Seen" dates in the UI are dated 8/16, and the devices are marked as unavailable in the UI until a new state is set.

zha.storage

{
  "data": {
    "devices": [
      {"ieee": "b0:ce:18:14:03:1e:af:a8", "last_seen": 1597562139.419512, "name": "sengled E11-G13"},
      {"ieee": "b0:ce:18:14:03:1e:cf:42", "last_seen": 1597561746.3217895, "name": "sengled E11-G13"},
      {"ieee": "b0:ce:18:14:03:59:c9:34", "last_seen": 1597561783.8611271, "name": "sengled E11-G13"},
      {"ieee": "b0:ce:18:14:03:59:3d:28", "last_seen": 1597562156.5779967, "name": "sengled E11-G13"},
      {"ieee": "b0:ce:18:14:03:5a:0d:e3", "last_seen": 1597561802.8387032, "name": "sengled E11-G13"},
      {"ieee": "b0:ce:18:14:03:59:42:11", "last_seen": 1597562094.850114, "name": "sengled E11-G13"},
      {"ieee": "b0:ce:18:14:03:17:19:4f", "last_seen": 1597562045.438917, "name": "sengled E11-G13"},
      {"ieee": "b0:ce:18:14:03:1e:aa:cf", "last_seen": 1597562097.280833, "name": "sengled E11-G13"},
      {"ieee": "b0:ce:18:14:03:1c:a2:5e", "last_seen": 1597561979.4604867, "name": "sengled E11-G13"},
      {"ieee": "28:6d:97:00:01:0b:15:4c", "last_seen": 1597562190.9935727, "name": "Samjin multi"},
      {"ieee": "28:6d:97:00:01:0b:15:5c", "last_seen": 1597561937.3905475, "name": "Samjin multi"},
      {"ieee": "28:6d:97:00:01:0b:15:5e", "last_seen": 1597561537.7457058, "name": "Samjin multi"},
      {"ieee": "28:6d:97:00:01:0c:cb:d4", "last_seen": 1597561570.189154, "name": "Samjin motion"},
      {"ieee": "00:0d:6f:00:11:fe:5d:20", "last_seen": 1597547053.7711651, "name": "Silicon Labs EZSP"},
      {"ieee": "28:6d:97:00:01:0b:66:e3", "last_seen": 1597561586.7935338, "name": "Samjin water"},
      {"ieee": "28:6d:97:00:01:0b:67:1a", "last_seen": 1597562073.361912, "name": "Samjin water"},
      {"ieee": "28:6d:97:00:01:0b:66:d3", "last_seen": 1597561923.9010892, "name": "Samjin water"},
      {"ieee": "28:6d:97:00:01:0c:28:84", "last_seen": 1597561690.4543164, "name": "Samjin motion"},
      {"ieee": "28:6d:97:00:01:0c:28:54", "last_seen": 1597562108.3603768, "name": "Samjin motion"},
      {"ieee": "28:6d:97:00:01:0c:28:49", "last_seen": 1597562172.0454335, "name": "Samjin motion"},
      {"ieee": "28:6d:97:00:01:0c:0e:89", "last_seen": 1597561903.7162035, "name": "Samjin multi"},
      {"ieee": "28:6d:97:00:01:0c:18:0f", "last_seen": 1597561795.30326, "name": "Samjin multi"},
      {"ieee": "28:6d:97:00:01:0c:0e:f2", "last_seen": 1597562166.5501342, "name": "Samjin multi"},
      {"ieee": "28:6d:97:00:01:0c:eb:28", "last_seen": 1597561939.3533304, "name": "Samjin button"},
      {"ieee": "28:6d:97:00:01:0c:ea:2f", "last_seen": 1597561589.8452544, "name": "Samjin button"},
      {"ieee": "28:6d:97:00:01:0c:ea:3a", "last_seen": 1597562028.3750117, "name": "Samjin button"},
      {"ieee": "28:6d:97:00:01:0c:ea:27", "last_seen": 1597561777.188614, "name": "Samjin button"},
      {"ieee": "00:0d:6f:00:0a:79:24:07", "last_seen": 1597562196.8188615, "name": "Securifi Ltd. unk_model"},
      {"ieee": "00:0d:6f:00:0a:79:27:85", "last_seen": 1597562196.77878, "name": "Securifi Ltd. unk_model"},
      {"ieee": "00:0d:6f:00:0a:79:8b:f2", "last_seen": 1597562196.7995265, "name": "Securifi Ltd. unk_model"},
      {"ieee": "00:0d:6f:00:0a:79:80:23", "last_seen": 1597562196.7479386, "name": "Securifi Ltd. unk_model"},
      {"ieee": "00:0d:6f:00:04:a8:68:71", "last_seen": 1597562196.7622323, "name": "Securifi Ltd. unk_model"},
      {"ieee": "00:0d:6f:00:05:61:18:68", "last_seen": 1594849684.5950613, "name": "Securifi Ltd. unk_model"},
      {"ieee": "00:0d:6f:00:0a:79:1f:28", "last_seen": 1594850057.2728164, "name": "Securifi Ltd. unk_model"},
      {"ieee": "28:6d:97:00:01:11:0d:ad", "last_seen": 1597561761.3759358, "name": "Samjin multi"},
      {"ieee": "28:6d:97:00:01:11:10:e0", "last_seen": 1597562143.2309406, "name": "Samjin multi"},
      {"ieee": "28:6d:97:00:01:11:0e:32", "last_seen": 1597562160.1007037, "name": "Samjin multi"}
    ]
  },
  "key": "zha.storage",
  "version": 1
}

Edit: I also tried renaming zha.storage so that a new one would be created. It was created, but it contains no device data.
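The `last_seen` values in zha.storage are Unix epoch timestamps in seconds. A minimal Python sketch for turning them into readable UTC dates, to confirm when the file's contents were last refreshed, could look like this. `last_seen_summary` is a hypothetical helper, and the `/config/.storage/zha.storage` path in the comment is an assumption about where a Supervised install keeps the file.

```python
import json
from datetime import datetime, timezone


def last_seen_summary(storage_text):
    """Return (ieee, name, last_seen as a UTC datetime) tuples, oldest first."""
    devices = json.loads(storage_text)["data"]["devices"]
    rows = [
        (d["ieee"], d["name"], datetime.fromtimestamp(d["last_seen"], tz=timezone.utc))
        for d in devices
    ]
    return sorted(rows, key=lambda row: row[2])


# Two entries copied from the file posted above. On a real system you would read
# the file instead, e.g. open("/config/.storage/zha.storage").read() (assumed path).
SAMPLE = """{"data": {"devices": [
  {"ieee": "00:0d:6f:00:0a:79:24:07", "last_seen": 1597562196.8188615, "name": "Securifi Ltd. unk_model"},
  {"ieee": "00:0d:6f:00:05:61:18:68", "last_seen": 1594849684.5950613, "name": "Securifi Ltd. unk_model"}
]}, "key": "zha.storage", "version": 1}"""

for ieee, name, seen in last_seen_summary(SAMPLE):
    print(f"{seen:%Y-%m-%d %H:%M:%S} UTC  {ieee}  {name}")
```

The newest timestamp in the posted file, 1597562196.8188615, falls on 2020-08-16 (UTC), which matches the observation that the file had not been rewritten since 8/16.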

Check what's happening at shutdown. IIRC it would wait 60 s, but if anything is blocking the shutdown, the shutdown is aborted and no data is saved.

From what I can see, the only way this can happen is if, as @donnlee reported, restarts are forced by the Supervisor. You are reporting that this isn't the case, however. @steve-gombos, are there any errors in the logs from the restart? Something funky is happening when you restart. A graceful shutdown should cause this file to be written.

Let’s try something:

- Shut HA down

- Rename the file again

- Start HA

- Use several mains-powered devices and ensure they flip to available in the UI

- Shut HA down cleanly; do NOT restart

- Check the file to see if it is correct and also post it here
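For the last step, a small Python check can compare the file's modification time with the newest `last_seen` entry inside it. This is a hypothetical helper that assumes the same JSON layout as the zha.storage file posted earlier in this thread. After a clean shutdown both ages should be small, while a file age of days (as with the 8/16 file) suggests the write never happened.

```python
import json
import os
import time


def storage_ages(storage_text, file_mtime, now):
    """Return (file age, age of newest last_seen) in seconds, both relative to `now`."""
    devices = json.loads(storage_text).get("data", {}).get("devices", [])
    newest = max((d["last_seen"] for d in devices), default=None)
    newest_age = None if newest is None else now - newest
    return now - file_mtime, newest_age


def check_storage(path):
    """Read a zha.storage file from disk and report how stale it looks."""
    with open(path, encoding="utf-8") as fh:
        text = fh.read()
    file_age, newest_age = storage_ages(text, os.path.getmtime(path), time.time())
    print(f"file last written {file_age / 3600:.1f} h ago")
    if newest_age is None:
        print("no devices stored")  # the symptom reported after renaming the file
    else:
        print(f"newest last_seen {newest_age / 3600:.1f} h ago")


# Example (path is an assumption for a Supervised install):
# check_storage("/config/.storage/zha.storage")
```

Keeping the arithmetic in `storage_ages`, separate from the file I/O, makes the check easy to reuse on a copy of the file pasted into an issue.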

There was a report, https://github.com/home-assistant/core/issues/38215, that some open tabs could cause similar behavior. If input_* is not saved, then I guess zha storage won't be saved either.

Hm. I'll give that a try. On my desktop I typically have the PWA version of HA always open, which technically runs in a Chrome tab, so I could try that.

Yeah, it looks like that could be the issue here as well. I closed the PWA I had running on my desktop, restarted via the UI on my mobile, and it updated zha.storage and everything was available afterwards.

Was that running as an ingress add-on, though? Those reports were specifically about having add-on tabs open. If so, please open a Supervisor issue and link all 3 issues to it.

I had a couple of other tabs with Node-RED open. I'll see if there is already a Supervisor issue for this; if not, I will create one and close this.

I am having a similar issue, but I'm not leaving any tabs open.

What do I need to look for in the logs to see whether it shut down correctly, or what is stopping it?
