Core: [Performance] questions and suggestions

Hello folks,

Just wanted to share a few thoughts I have.

On a few big API Platform projects, I see some bad patterns happening again and again that completely kill performance in both the dev and prod environments. In dev, it's quite hard to live with.

1/ Custom normalizers rarely implement the `CacheableSupportsMethodInterface` interface.

Normalization: we have a fully hydrated entity, so a cacheable check is easy: an `instanceof` against an interface.

Denormalization: we have an array of not-yet-known data and a string holding the class to hydrate. We can use `class_implements()` on the class name string to decide, which keeps the check cacheable, but it's not as nice.
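A minimal sketch of both checks in plain PHP. `CacheableModel`, `Invoice`, `supportsNormalizationCheck()` and `supportsDenormalizationCheck()` are hypothetical names for illustration, not Symfony or API Platform APIs. Both answers depend only on the type (the object's class, or the target class name), which is what makes a supports* check safe to cache per type:

```php
<?php
// Hypothetical marker interface for the classes a custom (de)normalizer handles.
interface CacheableModel
{
}

// Hypothetical entity used to exercise the checks.
final class Invoice implements CacheableModel
{
}

// Normalization: we hold a real object, so a plain instanceof is enough.
function supportsNormalizationCheck($data): bool
{
    return $data instanceof CacheableModel;
}

// Denormalization: we only have the target class name as a string,
// so we look at the interfaces that class implements instead.
function supportsDenormalizationCheck(string $type): bool
{
    // class_implements() emits a warning for unknown classes, so guard first.
    if (!class_exists($type)) {
        return false;
    }

    return isset(class_implements($type)[CacheableModel::class]);
}
```

In a real Symfony normalizer, these bodies would go into `supportsNormalization()` and `supportsDenormalization()`, with `hasCacheableSupportsMethod()` returning `true`.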

If we can't make denormalization cacheable, it's better to split the normalizer and the denormalizer into two classes, so that the normalizer can be cacheable even if the denormalizer is not.

It's really, really important for the normalizer to be cacheable.

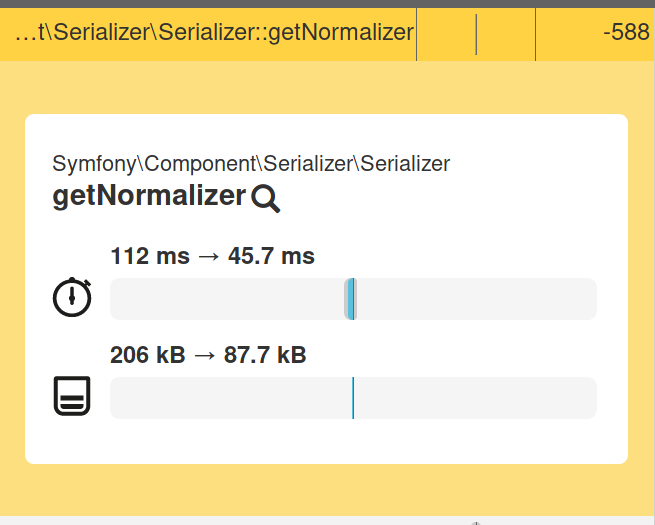

On our application, one critical route makes 36000 `getNormalizer` calls. 4 normalizers were not cacheable, so that's 36000 × 4 = 144000 `supportsNormalization` calls, even for trivial things like the `native-*` types.

Of course, this route is cached in prod mode, but not in dev mode.

Suggestion: this is so critical that it should perhaps be visible somewhere in the profiler.

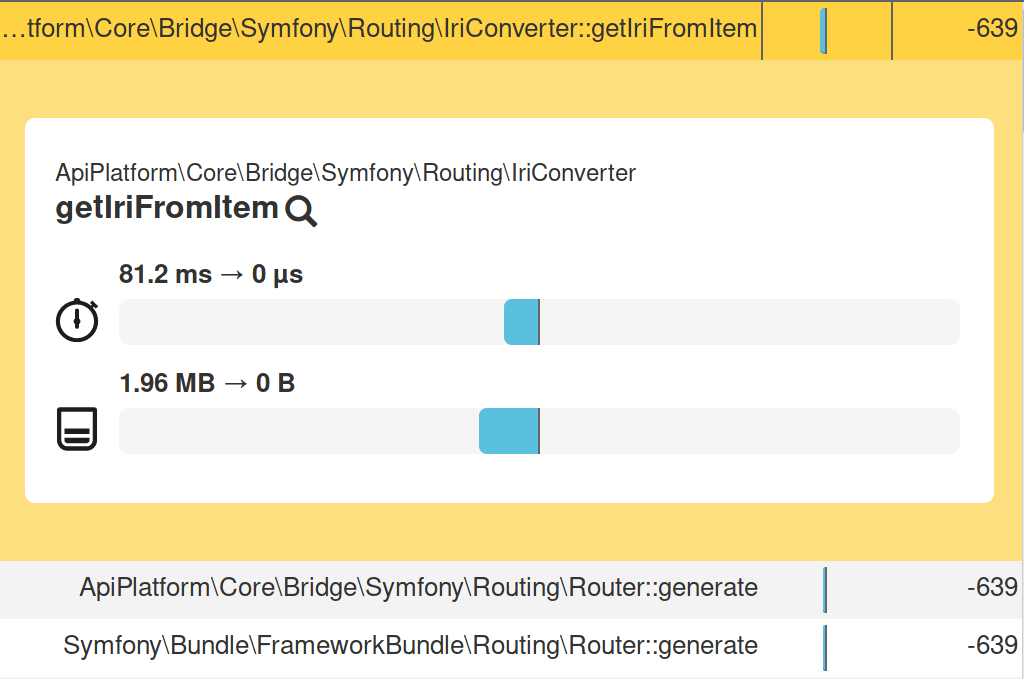

2/ IriConverter::getIriFromItem is costly when it's called.

What happens?

- On normalization, we end up in `ApiPlatform\Core\Serializer\ItemNormalizer`, and so in `ApiPlatform\Core\Serializer\AbstractItemNormalizer::normalize`.

- As `$context['resources']` seems to always be set, it calls `$resource = $context['iri'] ?? $this->iriConverter->getIriFromItem($object);`.

- That call does two things:

  - it finds the identifier (in our case `id`, 100% of the time). This is the worst part: on every call it goes straight to phpDocumentor and parses the file itself, just to find out that the identifier property is `id`.

  - it generates the route via the router.

As we have a React app making multiple HTTP calls to the API backend, this creates contention on file reads. As we're on macOS with NFS and very poor disk access performance, every filesystem call costs us a lot.

There are multiple layers of caching, and they are all disabled in test/dev mode by `ApiPlatformExtension::registerCacheConfiguration`. We can turn the cache back on by setting the `api_platform.metadata_cache` parameter to `true` in our app, but that enables the cache for every part of the stack. If we're in dev mode, it's because we touch the resource and serialization config a lot. But we NEVER change the identifier property. Removing the cache entirely is a very big on/off switch, and a big performance setback.

Furthermore, on my demo call I have 320 different entities in the response, but some of them appear many times. Files are loaded and parsed with phpDocumentor 1590 times. I suppose that's the role of `ApiPlatform\Core\Api\CachedIdentifiersExtractor::getKeys`, but I didn't see it working. I'll check.

patch: static cache on IriConverter::getIriFromItem

1590 => 320 calls
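A sketch of what such a static cache could look like, assuming a hypothetical one-method `ItemIriResolver` interface standing in for the IRI converter (the real `IriConverterInterface` has more methods). The decorator remembers the IRI of every object it has already seen, so an entity that appears many times in a response is resolved only once:

```php
<?php
// Hypothetical one-method stand-in for the IRI converter, for this sketch only.
interface ItemIriResolver
{
    public function getIriFromItem($item): string;
}

// Decorator keeping one IRI per object for the lifetime of the instance
// (with PHP-FPM, typically one request).
final class MemoizedItemIriResolver implements ItemIriResolver
{
    private $inner;
    private $cache;

    public function __construct(ItemIriResolver $inner)
    {
        $this->inner = $inner;
        // SplObjectStorage keeps references to the objects, so their ids
        // cannot be recycled while they are in the cache.
        $this->cache = new \SplObjectStorage();
    }

    public function getIriFromItem($item): string
    {
        if (!$this->cache->contains($item)) {
            $this->cache[$item] = $this->inner->getIriFromItem($item);
        }

        return $this->cache[$item];
    }
}
```

This assumes an object's IRI does not change while it is cached, which is not true for an entity whose id is only assigned when it is persisted, so a real implementation would need care around writes.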

2/ `IriConverter::getIriFromItem` is called ALL. THE. TIME. The funny thing is: there are no IRIs in my response. So we compute it for nothing (in our case). Did I miss somewhere it's useful? Removing it gives us the exact same JSON response while giving a real performance gain.

patch: remove forced call to the IriConverter in ApiPlatform\Core\Serializer\AbstractItemNormalizer::normalize

320 calls => 19 (generated by circular references and the max depth config)

// Questions

- Why is the IRI computed all the time? Can we safely remove it?

- Can we declare the identifier once and for all in the resource config itself, to avoid going through phpDocumentor?

Do you have any advice / information / recommendations?

Thanks for reading.


Thanks for this detailed report @bastnic!

> 1/ Custom normalizers rarely implement the `CacheableSupportsMethodInterface` interface.

Indeed we should:

- report it in the profiler

- report it as a Blackfire suggestion

- add this method by default in the MakerBundle generator

> 2/ `IriConverter::getIriFromItem` is costly when it's called.

👍 on my side for both patches, what do you think @soyuka?

So if I understand correctly, the problem is that we're disabling the cache when kernel.debug is true (or when api_platform.metadata_cache is false), which was done in the past to prevent some issues. We've been having the same discussion around this, and it'd be great if we could get rid of this altogether and just have Symfony automatically rebuild the container as necessary.

> Custom normalizers rarely implement the `CacheableSupportsMethodInterface` interface.

I'm not sure I understand. Custom normalizers are what you write in your app. It's not the responsibility of API Platform.

> I'm not sure I understand. Custom normalizers are what you write in your app. It's not the responsibility of API Platform.

@teohhanhui you are absolutely right, there's nothing for API Platform itself to do here. I'm just pointing out a DX issue in API development in general that is a silent performance killer.

> So if I understand correctly, the problem is that we're disabling the cache when `kernel.debug` is `true` (or when `api_platform.metadata_cache` is `false`), which was done in the past to prevent some issues. We've been having the same discussion around this, and it'd be great if we could get rid of this altogether and just have Symfony automatically rebuild the container as necessary.

Two sides: 1/ `false` is too big an on/off switch. 2/ Even with the cache enabled, `IriConverter::getIriFromItem` is called too often without any reason. Fixing this could improve performance for every API Platform app. It does on mine.

Here we go: https://github.com/symfony/maker-bundle/pull/371

> `IriConverter::getIriFromItem` is called too often without any reason

I'm not sure about that. I took a quick look yesterday and didn't really see anywhere where it could be removed safely.

Calls like this one:

https://github.com/api-platform/core/blob/bf867e02964271ce36e4355e7bde104f1e57d2da/src/EventListener/WriteListener.php#L80

IIRC we don't need this in every case.

`getIriFromItem` should not be that costly though, especially since once the metadata is read it should be cached by the `CachedIdentifiersExtractor`. Any reason why this wouldn't be executed?

> `IriConverter::getIriFromItem` is called too often without any reason.

Same as @teohhanhui I'm not sure that there are places where we could remove this safely.

> `getIriFromItem` should not be that costly though, especially since once the metadata is read it should be cached by the `CachedIdentifiersExtractor`. Any reason why this wouldn't be executed?

For that to work, we need to stop disabling the cache when kernel.debug is true (i.e. stop changing everything to ArrayAdapter).

Update: See https://github.com/api-platform/core/pull/2629

@soyuka and @teohhanhui I'm talking about this call: on normalization, we end up in `ApiPlatform\Core\Serializer\ItemNormalizer`, and so in `ApiPlatform\Core\Serializer\AbstractItemNormalizer::normalize`, which runs `$resource = $context['iri'] ?? $this->iriConverter->getIriFromItem($object);`. It seems to be computed for nothing in my case.

And then, even with the `CachedIdentifiersExtractor` enabled (which is never the case in dev mode), IriConverter still calls `getRouteName` and `$this->router->generate`, which are not cheap operations. A simple static cache in this method gives us a big performance improvement. For free.

I dug a little into what happens behind `api_platform.metadata_cache`, and it seems that in my case it's the `ApiPlatform\Core\Bridge\Symfony\PropertyInfo\Metadata\Property\PropertyInfoPropertyMetadataFactory`, called for the `getRouteName` in `$iriConverter->getIriFromItem`, that destroys everything. I have 78 entities, but in total these files are read 1257 times, causing way too much work in `phpDocumentor\Reflection\Types\ContextFactory::createForNamespace`.

> IriConverter still calls `getRouteName` and `$this->router->generate`, which are not cheap operations. A simple static cache in this method gives us a big performance improvement. For free.

> it seems that in my case it's the `ApiPlatform\Core\Bridge\Symfony\PropertyInfo\Metadata\Property\PropertyInfoPropertyMetadataFactory`, called for the `getRouteName` in `$iriConverter->getIriFromItem`, that destroys everything

But there's a CachedRouteNameResolver, which by the way already uses a local cache.

Still, I think it's wrong for us to use RouteCollection directly. We should definitely dump the parts that we need. See https://github.com/api-platform/core/issues/2032#issuecomment-417892433

> Don't use the `getRouteCollection()` method because that regenerates the routing cache and slows down the application.

https://symfony.com/doc/current/components/routing.html#check-if-a-route-exists

There was a PR that got closed: https://github.com/symfony/symfony/pull/19302

Usually we're using getRouteCollection to find the route name that matches a given resource class and operation. I'm not sure that we can remove them that easily.

@soyuka with `try { $this->router->match($request) } catch {}` and then reading the data from `$request->attributes`, can't you achieve the same?

@lyrixx We're trying to get the route name based on some route defaults. It's impossible to match as it's not for the current route, and we don't know about the route we're looking for in advance. See https://github.com/api-platform/core/blob/2.4/src/Bridge/Symfony/Routing/RouteNameResolver.php for example.

Sorry, I wasn't precise enough; what @teohhanhui said. Although, we could maybe decorate the Router and add some kind of caching layer for the RouteCollection? Maybe that should be done in Symfony instead, though.

IMO the Router is not the real bottleneck; the normalizers are. Do we have any metrics?

Hi again,

Some updates:

- Symfony MakerBundle will soon generate new normalizers with `CacheableSupportsMethodInterface` by default, thanks @lyrixx https://github.com/symfony/maker-bundle/pull/371

- ~With the APIP 2.4 release last week, the metadata cache is back even in dev mode. It clears A LOT of performance issues, as we now have cache on routing and cache . Thanks @teohhanhui https://github.com/api-platform/core/pull/2629~

- An unexpected patch by @soyuka has also landed in 2.4: https://github.com/api-platform/core/pull/2592. It prevents a lot of calls to the useless `getIriFromItem` I was talking about in my scenario. It now accounts for 1/2 of the `normalize` path. Not sure why, but I enjoy it ;). It seems related to the newly added `handleNonResource`.

- BUT... on the other hand, with this PR it seems that when `handleNonResource` is true, the ItemNormalizers are no longer cacheable, so there's a new drop in performance.... even for `native-*` types (`native-array`, `native-string`, `native-integer`, `native-NULL`, `native-boolean`, `ApiPlatform\Core\Bridge\Doctrine\Orm\Paginator`) :man_facepalming: Warning on this point: because of the upgrade, `getNormalizer` is once again the most expensive call.

- I still think the cache warmup could be optimized with the things I mentioned before, but at least it doesn't bother me on every API call.

- There are still too many calls to the IriConverter: even though they're wayyyyyyy less expensive than before, I still generate 600 calls to `Router::generate` despite never using the result.

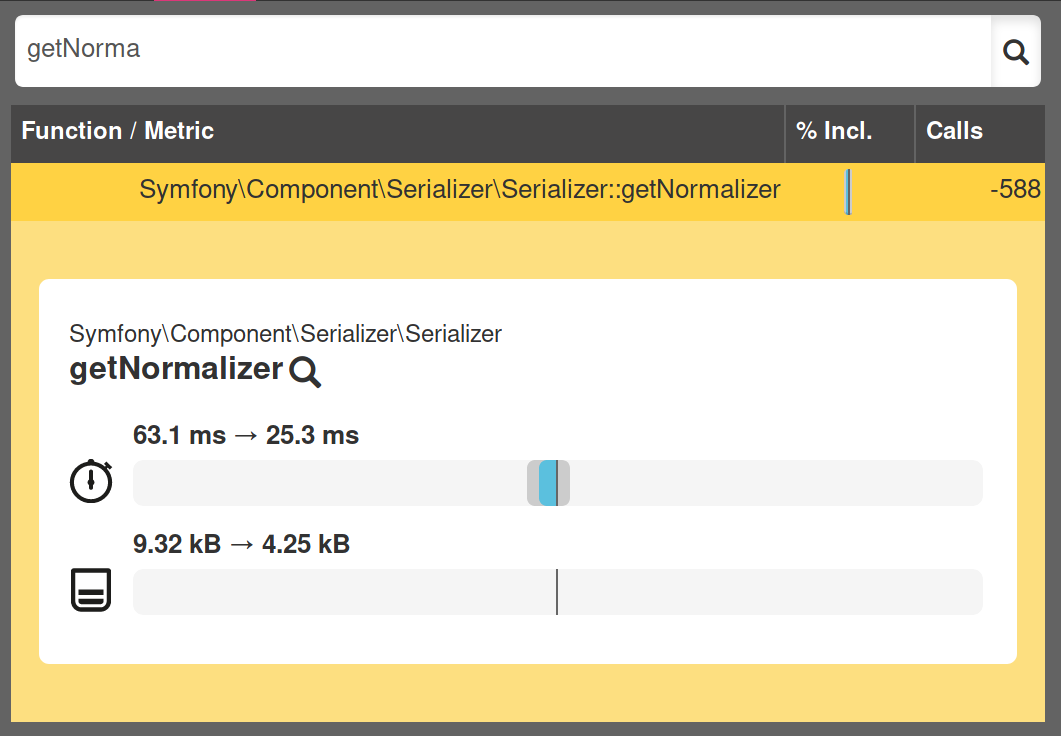

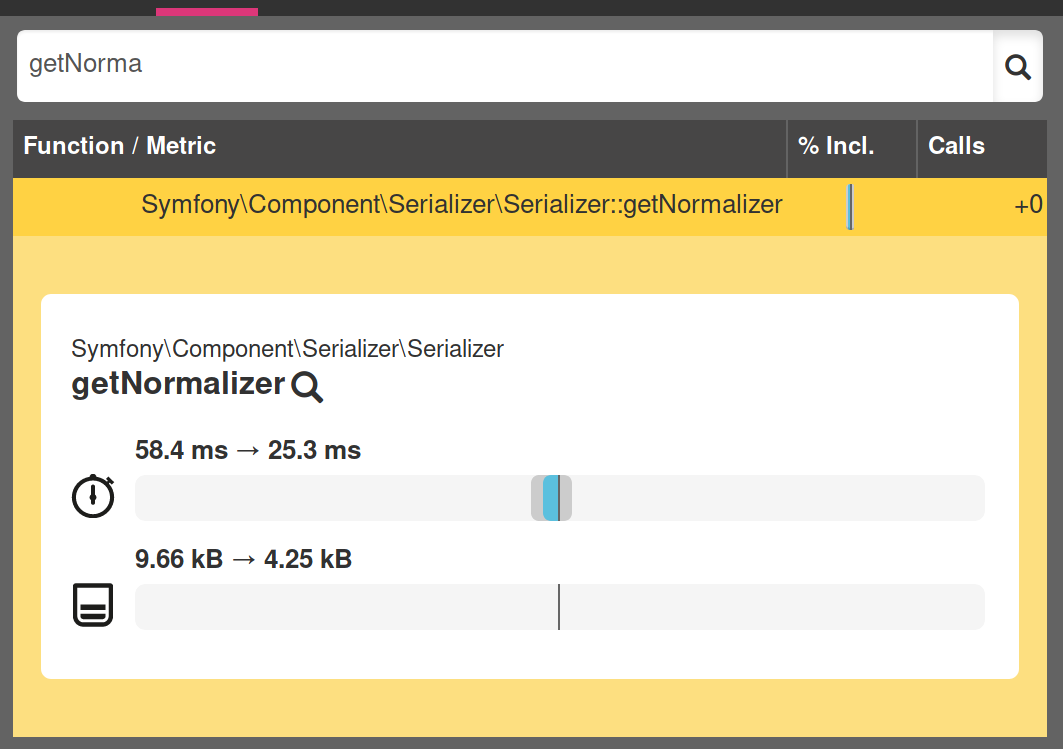

To add some context, `getNormalizer` is called 19707 times:

- 796 times it returns cached normalizers, including 503 `ApiPlatform\Core\Serializer\ItemNormalizer` and some custom normalizers.

- 37700 calls to `supportsNormalization` for two normalizers, `ApiPlatform\Core\Serializer\ItemNormalizer` and `ApiPlatform\Core\GraphQl\Serializer\ItemNormalizer`, both extending `ApiPlatform\Core\Serializer\AbstractItemNormalizer`. Most of them are for `native-*` types.

- Only 294 times does it answer that yes, it supports the data...

Note that I do not use graphql, so that's a loooot of calls to a normalizer that I will not use for sure.
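To see why one non-cacheable normalizer is so costly, here is a simplified, self-contained model of the lookup that Symfony Serializer's `getNormalizer()` performs in 4.x. It uses a hypothetical `SketchNormalizer` interface instead of the real Symfony ones and leaves out the `$context` argument. Cacheable normalizers are asked once per format and type; non-cacheable ones registered before the match are asked again on every single call:

```php
<?php
// Hypothetical minimal normalizer shape, used only for this model.
interface SketchNormalizer
{
    public function supports($data, ?string $format): bool;

    public function cacheable(): bool;
}

// Simplified model of the normalizer lookup in Symfony Serializer 4.x.
final class NormalizerRegistry
{
    /** @var SketchNormalizer[] */
    private $normalizers;

    /** @var array<string, array<string, array<int, bool>>> */
    private $cache = [];

    public function __construct(array $normalizers)
    {
        $this->normalizers = array_values($normalizers);
    }

    public function find($data, ?string $format): ?SketchNormalizer
    {
        // Objects are keyed by class, everything else by "native-" + gettype(),
        // which is where "native-array", "native-NULL", etc. come from.
        $type = \is_object($data) ? \get_class($data) : 'native-' . \gettype($data);
        $key = (string) $format;

        if (!isset($this->cache[$key][$type])) {
            $this->cache[$key][$type] = [];
            foreach ($this->normalizers as $i => $normalizer) {
                if (!$normalizer->cacheable()) {
                    // Cannot be decided per type: must be asked on every call.
                    $this->cache[$key][$type][$i] = false;
                } elseif ($normalizer->supports($data, $format)) {
                    // Cacheable match: it wins for this type from now on.
                    $this->cache[$key][$type][$i] = true;
                    break;
                }
                // Cacheable but unsupported: never asked again for this type.
            }
        }

        foreach ($this->cache[$key][$type] as $i => $cached) {
            $normalizer = $this->normalizers[$i];
            if ($cached || $normalizer->supports($data, $format)) {
                return $normalizer;
            }
        }

        return null;
    }
}
```

With the non-cacheable normalizer first, every integer costs one extra `supports()` call; with a cacheable scalar normalizer first, the non-cacheable one is never consulted for integers. That is why the order (priority) of cacheable normalizers matters.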

Maybe we could set `hasCacheableSupportsMethod` back to `true` and let `supportsNormalization` do its thing. It would at least improve performance a lot (37700 fewer calls to `supportsNormalization`).

> Maybe we could set `hasCacheableSupportsMethod` back to `true` and let `supportsNormalization` do its thing. It would at least improve performance a lot (37700 fewer calls to `supportsNormalization`).

I'd be :+1: on this, wdyt @teohhanhui ?

Too bad: with the revert and the regression, the 2.4 release is now worse in both dev and prod mode :(. I'm here to help (mainly with testing in my environment and analysis) if needed, @teohhanhui and @soyuka.

> Maybe we could set `hasCacheableSupportsMethod` back to `true` and let `supportsNormalization` do its thing.

We already do that for the ItemNormalizer instances that handle resources. But we cannot do that for the ItemNormalizer instances that handle non-resources, as we indeed need the context to make the decision.

> Note that I do not use graphql, so that's a loooot of calls to a normalizer that I will not use for sure.

GraphQL normalizers should not be registered at all, unless you've enabled graphql support in the configuration:

Edit: Looks like you need to disable it manually (or better, just remove the graphql packages since you're not using them), because we try to have a "smart" default here: https://github.com/api-platform/core/blob/v2.4.0/src/Bridge/Symfony/Bundle/DependencyInjection/Configuration.php#L137 :laughing:

Just remember that I try to be as "dumb" as possible and that I'm benchmarking the out-of-the-box performance. I have the default API Platform installation. With 2.3 I had two cached normalizers; now with 2.4 I have two that are not cached. Having a useless one registered by default is costly (maybe we just need to update the "performance best practices" doc).

> We already do that for the `ItemNormalizer` instances that handle resources. But we cannot do that for the `ItemNormalizer` instances that handle non-resources, as we indeed need the context to make the decision.

I'm not sure how it can be fixed, but I know for sure that this is a problem with the current implementation of the serializer. Maybe `hasCacheableSupportsMethod` should have taken the context as a parameter? Maybe we need to differentiate native types and objects, as it's clearly the native types that kill everything for my JSON responses.

@bastnic could you try #2650 please?

Also:

> Just remember that I try to be as "dumb" as possible and that I'm benchmarking the out-of-the-box performance.

Out of the box, API Platform doesn't come with GraphQL support, and the GraphQL normalizers shouldn't be registered. It's a bug if it's the case.

> Maybe we need to differentiate native types and objects, as it's clearly the native types that kill everything for my JSON responses.

We already do, but it doesn't help when the supports* methods are not cacheable. Maybe this is something that could be improved in Symfony.

> Out of the box, API Platform doesn't come with GraphQL support, and the GraphQL normalizers shouldn't be registered. It's a bug if it's the case.

If you're using the API Platform distribution though...

GraphQL isn't enabled by default, neither in the distribution nor in the Symfony recipe.

Oh yeah... Somehow I thought it was installed by default.

@dunglas So it's a bug: the config file `graphql.xml` is loaded even when GraphQL is disabled, because the only check is whether `$config['graphql']` is non-empty, and the configuration tree uses `addDefaultsIfNotSet()`, so it always exists and all the classes are loaded.

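```php
private function registerGraphqlConfiguration(ContainerBuilder $container, array $config, XmlFileLoader $loader)
{
    // Because of addDefaultsIfNotSet(), $config['graphql'] always holds the
    // default values, so this early return never triggers and graphql.xml
    // is loaded even when GraphQL is disabled.
    if (!$config['graphql']) {
        return;
    }

    $container->setParameter('api_platform.graphql.enabled', $config['graphql']['enabled']);
    $container->setParameter('api_platform.graphql.graphiql.enabled', $config['graphql']['graphiql']['enabled']);

    $loader->load('graphql.xml');
}
```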

@bastnic Do you want to send a PR, or I could take care of it?

I'm heading home so feel free ;).

@bastnic Thanks! I've opened #2656

Thanks for the patch and for the cache warmer so we can have a good dev env.

It's still not OK for the ItemNormalizer to be non-cacheable for trivial things like `native-*`. And when GraphQL is enabled, it's twice as bad.

If we want to optimize the cold-cache (not warmed) scenario, we can look at the following, but it's less of an issue now:

- parsing files to find which property is the identifier

- rebuilding all the routing on each request

- the fact that there is no static cache whatsoever when the cache is disabled, so we do all these heavy operations again and again.

Hmm, I dug a little.

> - 796 times it returns cached normalizers, including 503 `ApiPlatform\Core\Serializer\ItemNormalizer` and some custom normalizers.
> - 37700 calls to `supportsNormalization` for two normalizers, `ApiPlatform\Core\Serializer\ItemNormalizer` and `ApiPlatform\Core\GraphQl\Serializer\ItemNormalizer`, both extending `ApiPlatform\Core\Serializer\AbstractItemNormalizer`. Most of them are for `native-*` types.
> - Only 294 times does it answer that yes, it supports the data...

So this scenario has changed, because GraphQL is no longer in my picture (but it still is for those who use it).

The only classes that use the non-resource item normalizers are the DoctrineBehaviors Translation classes. Maybe I need a better (custom or not) normalizer for them that is marked as cacheable. I don't think the context changes at all in this scenario.

I'll dig into how the native types are handled. Maybe there needs to be a cacheable no-op normalizer that just passes through all native types. (I'm not sure why it's not provided out of the box with Symfony Serializer.)

Exactly what I'm testing... Maybe with an opt-in config. I'm pretty sure this is a performance killer on many more projects than mine.

I'm also thinking about an APCu cache of the cached normalizers; combined with having only cacheable normalizers, it would completely avoid calling `supportsNormalization`.

The cacheable no-op normalizer for native types does not need to be opt-in because it should have a negative priority. So if the user adds a custom normalizer to do something fancy with the native types, it'd just get used because of higher priority.

> I'm also thinking about an APCu cache of cachedNormalizers

Not specifically apcu, but using the cache pools in Symfony. Yeah, that sounds like a good idea. A warmable supports* cache.

```php
public function normalize($data, $format = null, array $context = [])
{
    // If a normalizer supports the given data, use it
    if ($normalizer = $this->getNormalizer($data, $format, $context)) {
        return $normalizer->normalize($data, $format, $context);
    }

    if (null === $data || is_scalar($data)) {
        return $data;
    }

    // ...
}
```

I'm almost tempted to move the `is_scalar` check before `getNormalizer` ;). I imagine there are cases where it makes sense for it to come after `getNormalizer`, but not in mine.
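A minimal sketch of that reordering, written as a standalone function rather than a patch to Symfony's `Serializer`: `normalizeWithFastPath()` and the `$lookup` callable (standing in for `getNormalizer()`) are hypothetical. Null and scalar values return immediately, so only arrays and objects pay for a normalizer lookup:

```php
<?php
// Hypothetical sketch: answer null and scalar values before any normalizer
// lookup. $lookup stands in for Serializer::getNormalizer() and must return
// a callable normalizer, or null when nothing supports the data.
function normalizeWithFastPath($data, ?string $format, callable $lookup)
{
    // Fast path: nothing to transform, so no lookup at all.
    if (null === $data || \is_scalar($data)) {
        return $data;
    }

    $normalizer = $lookup($data, $format);
    if (null === $normalizer) {
        throw new \InvalidArgumentException(sprintf(
            'No normalizer found for data of type "%s".',
            \is_object($data) ? \get_class($data) : \gettype($data)
        ));
    }

    return $normalizer($data, $format);
}
```

The trade-off is the one mentioned above: with this order, a custom normalizer registered for scalar values would never be called, which is presumably why Symfony checks normalizers first.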

Have you tried what I suggested? A cacheable no-op normalizer for scalar types that just passes through the value unchanged?

Yep, it's of course better than not having it, but not as efficient as not calling `getNormalizer` at all:

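```php
use Symfony\Component\Serializer\Normalizer\CacheableSupportsMethodInterface;
use Symfony\Component\Serializer\Normalizer\NormalizerInterface;

// Pass-through normalizer for null and scalar values. Its supports* result
// only depends on the type, so the Serializer can cache it.
class ScalarNormalizer implements NormalizerInterface, CacheableSupportsMethodInterface
{
    public function normalize($data, $format = null, array $context = [])
    {
        return $data;
    }

    public function supportsNormalization($data, $format = null)
    {
        return null === $data || is_scalar($data);
    }

    public function hasCacheableSupportsMethod(): bool
    {
        return true;
    }
}
```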

I gave the ScalarNormalizer a higher priority than the ItemNormalizer; otherwise the ItemNormalizer would still be checked for nothing.

In the same way, I added a custom normalizer for the Translation classes, which are currently not cached by the ItemNormalizer (as they are non-resources):

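```php
use Knp\DoctrineBehaviors\Model\Translatable\Translation;
use Symfony\Component\Serializer\Normalizer\AbstractNormalizer;
use Symfony\Component\Serializer\Normalizer\CacheableSupportsMethodInterface;
use Symfony\Component\Serializer\Normalizer\NormalizerInterface;

// Delegates to an existing normalizer, but declares a type-only (cacheable)
// supports check for DoctrineBehaviors translation entities.
class TranslationNormalizer implements NormalizerInterface, CacheableSupportsMethodInterface
{
    /** @var AbstractNormalizer */
    private $normalizer;

    public function __construct(AbstractNormalizer $normalizer)
    {
        $this->normalizer = $normalizer;
    }

    public function normalize($object, $format = null, array $context = [])
    {
        return $this->normalizer->normalize($object, $format, $context);
    }

    public function supportsNormalization($data, $format = null)
    {
        // class_uses() only lists traits used directly by the class, not by its parents
        return \is_object($data) && \in_array(Translation::class, class_uses($data), true);
    }

    public function hasCacheableSupportsMethod(): bool
    {
        return true;
    }
}
```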

So the only types still tested again and again by the non-cached normalizers are:

- native-array

- ApiPlatform\Core\Bridge\Doctrine\Orm\Paginator

- Doctrine\ORM\PersistentCollection

- Doctrine\Common\Collections\ArrayCollection

=> I can add another normalizer that handles them the same way, and voilà:

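```php
use Symfony\Component\Serializer\Normalizer\CacheableSupportsMethodInterface;
use Symfony\Component\Serializer\Normalizer\NormalizerAwareInterface;
use Symfony\Component\Serializer\Normalizer\NormalizerAwareTrait;
use Symfony\Component\Serializer\Normalizer\NormalizerInterface;

// Normalizes arrays and iterables (Paginator, PersistentCollection,
// ArrayCollection...) element by element through the main serializer.
class TraversableNormalizer implements NormalizerAwareInterface, NormalizerInterface, CacheableSupportsMethodInterface
{
    use NormalizerAwareTrait;

    public function normalize($data, $format = null, array $context = [])
    {
        $normalized = [];
        foreach ($data as $key => $val) {
            $normalized[$key] = $this->normalizer->normalize($val, $format, $context);
        }

        return $normalized;
    }

    public function supportsNormalization($data, $format = null)
    {
        return \is_array($data) || $data instanceof \Traversable;
    }

    public function hasCacheableSupportsMethod(): bool
    {
        return true;
    }
}
```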

It's still not perfect, because that's a loooooooooooooooot of calls to `getNormalizer` for very trivial work.

PS: with all these cached normalizers, I have nothing left that isn't cached. `getNormalizer` goes down to 26ms on Blackfire. Just moving the null/scalar/array/traversable cases before the normalizer lookup in `Serializer::normalize` makes `getNormalizer` disappear from Blackfire entirely (so it costs nothing). So that's still some ~20ms too much for my taste.

Note that with the work from https://github.com/api-platform/core/pull/2579 these kind of things should be easier to handle.

@soyuka just so you know: I'm a colleague of @joelwurtz and I'm advocating a lot for the AutoMapper approach. I'm pointing out the problems with the current serializer implementation.

On the test project I talk about in this thread, I have both Jane (without AutoMapper) and the APIP serializer, and Jane is 432421341242x more performant.

Impact of all three normalizers (scalar, traversable and translation):

Impact of the scalar and traversable normalizers only:

Back to my previous subject, now that I have a solution for the normalizers. Hi, IriConverter.

There are two scenarios in which an IRI is generated:

- to be displayed when there's a circular reference or the max depth is reached. I don't have those anymore, because all of ours could be fixed with better serialization groups.

- to be used as a key: this is the one in `AbstractItemNormalizer::normalize`. For this one I could simply use `spl_object_hash` or something like that as the key. Maybe even check whether it's useful at all.

In `getIriConverter`, 37ms / 1.92 MB are taken by `Router::generate`, and 24ms / 0 MB by the rest; all of that may be removed.

I talked about this with @Korbeil and what you could do right now is to replace our IriConverter service with your own that would do nothing (or return a spl_object_id indeed). If I understood correctly you don't need IRIs and you're not using any Varnish, this would then be a viable solution.
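A sketch of that idea, assuming the app truly never exposes IRIs. `ObjectKeyIriStub` is a hypothetical class, not an implementation of API Platform's `IriConverterInterface`: a real replacement service would have to implement the whole interface, and resolving an item back from such a key is not possible. It only shows how `spl_object_id()` gives a cheap per-object key without touching the router:

```php
<?php
// Hypothetical stand-in, NOT an implementation of API Platform's
// IriConverterInterface: it only produces a cheap per-object key for apps
// that never expose IRIs, without calling the router.
final class ObjectKeyIriStub
{
    public function getIriFromItem($item): string
    {
        // spl_object_id() is unique among live objects, but an id can be
        // reused once its object is destroyed, so the key is only meaningful
        // while the object is alive (e.g. within one request).
        return '/_objects/' . spl_object_id($item);
    }
}
```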

FYI https://github.com/KnpLabs/DoctrineBehaviors/issues/417, but it seems to be dead over there.

@bastnic I think https://github.com/api-platform/core/pull/2679 will make you happy. :smile:

niiiiiice!

Just wanted to add back something I already mentioned but forgot to pursue because it "only" concerns the bootstrap (warmup) time.

I have quite a big project with a lot of entities that have a lot of properties.

`ApiPlatform\Core\Metadata\Property\Factory\CachedPropertyMetadataFactory::getCached` took 2m12s and 30 MB on Blackfire, and is called 1323 times. It calls `phpDocumentor\Reflection\Types\ContextFactory::createFromReflector` (`createForNamespace` in the end) 1476 times, which takes 1m35s and calls `ArrayIterator::current` 8 million times.

I added a very basic static cache on `createFromReflector`, which lowers the calls to `createForNamespace` to 30 (1.12s) and the total for `CachedPropertyMetadataFactory::getCached` to 30s.

That's more than 1m30s gained on warmup time. Maybe we could add a static cache in the class calling `ContextFactory`.
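A sketch of such a static cache, with a hypothetical `MemoizedContextBuilder` wrapping any expensive builder callable that stands in for phpDocumentor's `ContextFactory::createFromReflector()` (its real signature is not reproduced here). This sketch keys the cache on file plus namespace, assuming that classes declared in the same file and namespace share the same `use` statements and therefore the same context:

```php
<?php
// Hypothetical memoizing wrapper around an expensive per-class context
// builder. The builder is only invoked once per (file, namespace) pair.
final class MemoizedContextBuilder
{
    private $build;
    private $cache = [];

    public function __construct(callable $build)
    {
        $this->build = $build;
    }

    public function forClass(\ReflectionClass $class)
    {
        // Internal classes have no file; fall back to the class name.
        $file = $class->getFileName() ?: $class->getName();
        $key = $file . '|' . $class->getNamespaceName();

        if (!\array_key_exists($key, $this->cache)) {
            $this->cache[$key] = ($this->build)($class);
        }

        return $this->cache[$key];
    }
}
```

Whether the right key is the file, the namespace or both depends on how the context is actually built; file plus namespace is the conservative choice made here.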

Looks good to me

that's possibly related to https://github.com/api-platform/core/issues/2618

@dunglas see attached PR ;). https://github.com/symfony/symfony/pull/32188

@bastnic That should have been fixed by https://github.com/symfony/symfony/pull/31452, no?

Hey folks! I'm facing the same performance issue: it looks like the normalization process takes a large amount of time.

- `APP_ENV=prod`

- `APP_DEBUG=0`

- all caches are enabled with APCu

- OPcache is enabled

The endpoint is a collection with 20 items per page. I made two requests, one with all the relations needed for my case and one without. Related collections are limited to at most 4 items each, which I don't think is a lot.

Blackfire reports:

- Comparison of both requests

- Request without relations

- Request with relations

Example response with relations:

```json
[
  {
    "id": 245,
    "token": "gDorVw_kx",
    "booking": {
      "start_time": "2020-04-14T16:20:00+00:00",
      "end_time": "2020-04-14T16:30:00+00:00",
      "total_price": 318000,
      "origin_price": 318000,
      "status": "on reservation",
      "confirmed_at": null,
      "uuid": "48216f86-bbd0-4316-972a-5ee8a413ab11",
      "id": 416525
    },
    "club": {
      "name": "",
      "id": 331,
      "uuid": "cdf2ff12-6490-4369-b23d-7a264e15d52f"
    },
    "course": {
      "name": "",
      "uuid": "b7329cea-7a59-4ded-8a12-d85b7b2602de",
      "id": 428
    },
    "customer": null,
    "items": [
      {
        "slot": {
          "player": {
            "email": "",
            "first_name": "",
            "last_name": "",
            "phone": "",
            "golf_id": "",
            "home_club": null,
            "hcp": "29.9",
            "uuid": "75518080-a0ea-4ac5-9983-7a95e03344bf",
            "id": 84076
          },
          "price": 79500,
          "membership": null,
          "is_arrival_registration": false,
          "is_owner": false,
          "is_member": false,
          "uuid": "419f857e-4dda-4aaf-bb5e-1ce6b3560c4a",
          "id": 594132,
          "type": null,
          "stub_player_name": null
        },
        "quantity": 1,
        "unit_price": 79500,
        "units_total": 79500,
        "total": 79500,
        "adjustments": [],
        "adjustments_total": 0,
        "id": 245,
        "uuid": "c5204489-435b-471c-9501-6cd6ca8e0907"
      },
      {
        "slot": {
          "player": null,
          "price": 79500,
          "membership": null,
          "is_arrival_registration": false,
          "is_owner": false,
          "is_member": false,
          "uuid": "4f0887a3-1240-4dc9-bf0b-d7b7a2bd725e",
          "id": 594133,
          "type": null,
          "stub_player_name": null
        },
        "quantity": 1,
        "unit_price": 79500,
        "units_total": 79500,
        "total": 79500,
        "adjustments": [],
        "adjustments_total": 0,
        "id": 246,
        "uuid": "4bbcddbe-c4d8-441a-b721-bf4d488c5d17"
      },
      {
        "slot": {
          "player": null,
          "price": 79500,
          "membership": null,
          "is_arrival_registration": false,
          "is_owner": false,
          "is_member": false,
          "uuid": "26ea3ea3-da94-4bfa-9800-a840b72de9fb",
          "id": 594134,
          "type": null,
          "stub_player_name": null
        },
        "quantity": 1,
        "unit_price": 79500,
        "units_total": 79500,
        "total": 79500,
        "adjustments": [],
        "adjustments_total": 0,
        "id": 247,
        "uuid": "f377e2e0-dfa9-4223-8b3a-aaac34353569"
      },
      {
        "slot": {
          "player": null,
          "price": 79500,
          "membership": null,
          "is_arrival_registration": false,
          "is_owner": false,
          "is_member": false,
          "uuid": "af2c5d74-815e-4a21-93f4-4af472633a96",
          "id": 594135,
          "type": null,
          "stub_player_name": null
        },
        "quantity": 1,
        "unit_price": 79500,
        "units_total": 79500,
        "total": 79500,
        "adjustments": [],
        "adjustments_total": 0,
        "id": 248,
        "uuid": "286f70df-9a9d-4642-b656-f3443bbbf648"
      }
    ],
    "number": "6190665434815",
    "notes": null,
    "state": "new",
    "items_total": 318000,
    "adjustments_total": 0,
    "total": 318000,
    "currency_code": "SEK",
    "locale_code": "SE",
    "payment_state": "new",
    "payments": [
      {
        "method": null,
        "currency_code": "SEK",
        "amount": 318000,
        "state": "new",
        "details": null,
        "id": 245,
        "uuid": "92da776b-7078-47a9-bb27-156a8057f7ff"
      }
    ],
    "refund_payments": [],
    "adjustments": [],
    "promotions": [],
    "promotion_coupons": [],
    "uuid": "e7eeedf6-a3bb-4bbf-9cb0-837b2fee9d93"
  }
]
```

Any suggestions?

Hi @mxkh, sadly your Blackfire links are 404. Are they public?

> Hi @mxkh, sadly your Blackfire links are 404. Are they public?

Ohh, yes, they were public. It seems they were invalidated because of the free plan :( I'll recreate the Blackfire reports soon. Thanks for letting me know 👍

> Hi @mxkh, sadly your Blackfire links are 404. Are they public?

> Ohh, yes, they were public. It seems they were invalidated because of the free plan :( I'll recreate the Blackfire reports soon. Thanks for letting me know 👍

Free plan? Do you offer some kind of plan? Please let me know. I want to use API Platform, but I'm still evaluating it and checking all the issues here.

Thanks

Still no comment?

I landed here while profiling this same issue; it's still visible on 2.5.x. My question is: couldn't the route names be cached in APIP's own cached config? Currently the `CachedRouteNameResolver` works, but it stores into the app cache, which is not prewarmed, even though this data doesn't change since it's configuration. Can't this be collected during the container build, stored in the APIP cache, and read from there?

Namely: read all the route names for resources in a cache warmer / compiler pass, store them in a hash map, add a `PrecomputedRouteNameResolver` decorator, inject the hash map into it, and read from there (with or without falling back to the original resolver). Not sure if that makes sense, WDYT @dunglas @soyuka @bastnic @bendavies?

Note: this suggestion also seems to fix #2032 since the cause is the same.

So my idea is something like this in the compiler pass:

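```php
use ApiPlatform\Core\Api\OperationType;
use ApiPlatform\Core\Exception\InvalidArgumentException;

// ... inside the compiler pass:
$operationTypes = [OperationType::COLLECTION, OperationType::ITEM];
$routeNames = [];

foreach ($this->collectionFactory->create() as $resourceClass) {
    foreach ($operationTypes as $operationType) {
        try {
            $routeName = $this->routeNameResolver->getRouteName($resourceClass, $operationType);
        } catch (InvalidArgumentException $noRouteException) {
            // Remember "no route" too, so it is not looked up again
            $routeName = false;
        }

        $routeNames[$resourceClass][$operationType] = $routeName;
    }
}

// A plain array belongs in a parameter, not a service
$container->setParameter('api_platform.precalculated_route_names', $routeNames);
```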

Then a decorated route resolver:

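```php
public function getRouteName(string $resourceClass, $operationType /*, array $context = [] */): string
{
    $context = \func_num_args() > 2 ? func_get_arg(2) : [];

    // Context-dependent lookups are delegated as-is
    if ([] !== $context) {
        return $this->nameResolver->getRouteName($resourceClass, $operationType, $context);
    }

    // Precomputed entries are either a route name or false ("no route").
    // Only a string is returned directly; anything else falls back to the
    // decorated resolver so it keeps its original behavior (e.g. throwing)
    $routeName = $this->routeNames[$resourceClass][$operationType] ?? null;
    if (\is_string($routeName)) {
        return $routeName;
    }

    return $this->nameResolver->getRouteName($resourceClass, $operationType, $context);
}
```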

This would use the map whenever it can (vast majority of times in my case), with a fallback.

The issue is that I need the route resolver in the compiler pass (which in turn requires the router), but I can't get it there because:

> Constructing service "monolog.logger.router" from a parent definition is not supported at build time.

Moving it to a cache warmer fixes that, and it seems to work. I'll profile it tomorrow and try to open a PR if it's OK.
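One way the cache warmer could persist the precomputed map is a technique similar to what Symfony's `PhpArrayAdapter` does: dump a PHP file that returns a plain array, which OPcache can then cache, and `include` it at runtime. `RouteNameMapFile` is a hypothetical helper; in a Symfony app, `dump()` would be called from a class implementing `CacheWarmerInterface`:

```php
<?php
// Hypothetical helper for a cache warmer: dump a precomputed
// [resourceClass][operationType] => routeName|false map to a PHP file that
// returns a plain array, and read it back at runtime.
final class RouteNameMapFile
{
    public static function dump(array $routeNames, string $file): void
    {
        $code = '<?php return ' . var_export($routeNames, true) . ';' . PHP_EOL;

        // Write to a temporary file, then rename it, so that a concurrent
        // request never includes a half-written file.
        $tmp = $file . '.' . uniqid('', true) . '.tmp';
        if (false === file_put_contents($tmp, $code)) {
            throw new \RuntimeException(sprintf('Cannot write "%s".', $tmp));
        }
        if (!rename($tmp, $file)) {
            throw new \RuntimeException(sprintf('Cannot move "%s" to "%s".', $tmp, $file));
        }
    }

    public static function load(string $file): array
    {
        if (!is_file($file)) {
            return [];
        }
        $map = include $file;

        return \is_array($map) ? $map : [];
    }
}
```

Returning an empty array when the file is missing lets the decorated resolver fall back to the original one until the warmer has run.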
