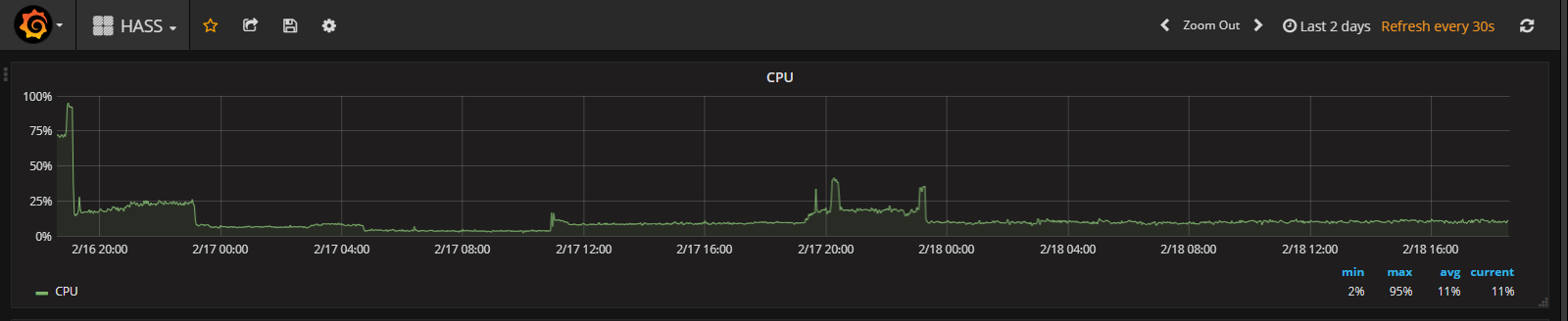

Core: MySQL High CPU usage/long running query against states table

Home Assistant release (hass --version):

0.62

Python release (python3 --version):

3.5.3

Component/platform:

State tracking/database

Description of problem:

MySQL query slams CPU for 12+ hours

Expected:

Query shouldn't hang.

Problem-relevant configuration.yaml entries and steps to reproduce:

Unsure

Traceback (if applicable):

N/A

Additional info:

MariaDB version 10.1.26-0+deb9u1

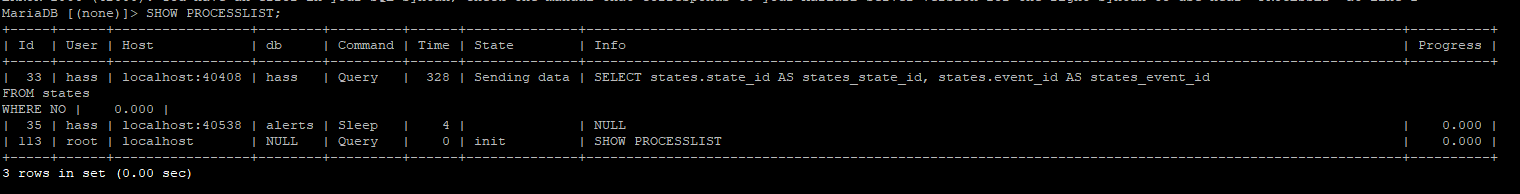

Screenshot from the process list:

Query it appears to be executing:

SELECT states.state_id AS states_state_id, states.event_id AS states_event_id

FROM states

WHERE NO

It's also worth noting that the states table isn't that massive:

All 25 comments

SELECT states.state_id AS states_state_id, states.event_id AS states_event_id

FROM states

WHERE NO;

Is not a valid query. Any possibly way to post the full query that is slow?

How can I get the full query? That was all "SHOW PROCESSLIST;" gave me. Also it's done now so I'll have to wait until tomorrow. :/

Slow query log? Maybe expand the terminal window?

I had the window full-screen on a 1080 monitor so there wasn't going any bigger. I did just enable the slow query log though so I'll take a look at that tomorrow and see if I can track down the actual query, thanks!

I assume it's the purge service.

You can test it in Dev Tools/Services -> recorder.purge -> {"keep_days": "14"}, or whatever you want to keep days.

I'm having 7 days of data and when it runs, the cpu goes up to 100 percent and needs about 10 minutes to complete, even when there's nothing to purge.

HA runs on a VM, so i think on RPi it needs even more time.

Mine is on a VM with 4 CPU cores and 4GB of RAM. So I can't imagine a purge taking 12+ hours. I also only keep 2 days in the DB.

That does appear to be the query though that was showing before, at least up until the truncated part:

It's not in the slow log yet though since it's not finished. So why is the purge taking 12 hours?

I think it's related to this PR https://github.com/home-assistant/home-assistant/pull/11039

Looks like that PR was merged on Jan 1 though which means it should be in the latest build right? Or am I not looking at that right?

Full report from the slow log - query took almost 9 hours:

# Time: 180213 21:47:51

# User@Host: hass[hass] @ localhost [127.0.0.1]

# Thread_id: 33 Schema: hass QC_hit: No

# Query_time: 31707.816497 Lock_time: 0.000283 Rows_sent: 586 Rows_examined: 19812803302

# Rows_affected: 0

use hass;

SET timestamp=1518576471;

SELECT states.state_id AS states_state_id, states.event_id AS states_event_id

FROM states

WHERE NOT (EXISTS (SELECT *

FROM states AS `StatesAlias`

WHERE states.entity_id = `StatesAlias`.entity_id AND `StatesAlias`.last_updated > states.last_updated));

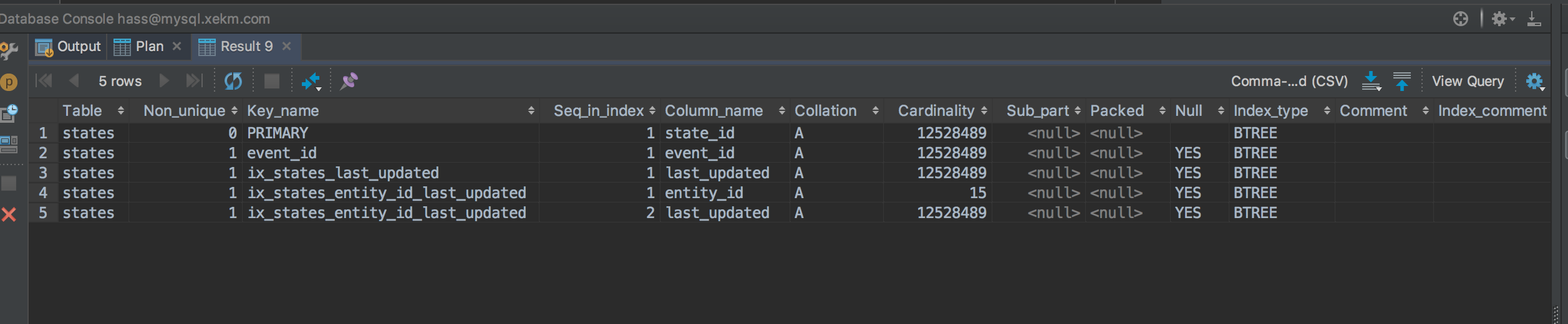

Why is rows examined 19 billion? There are only ~1.5 million rows in the states table.

Damn it. Looks like we managed to get that up to superlinear complexity. But how? The database should have an index on last_updated…

The query is definitely the purge query from #11039, by the way.

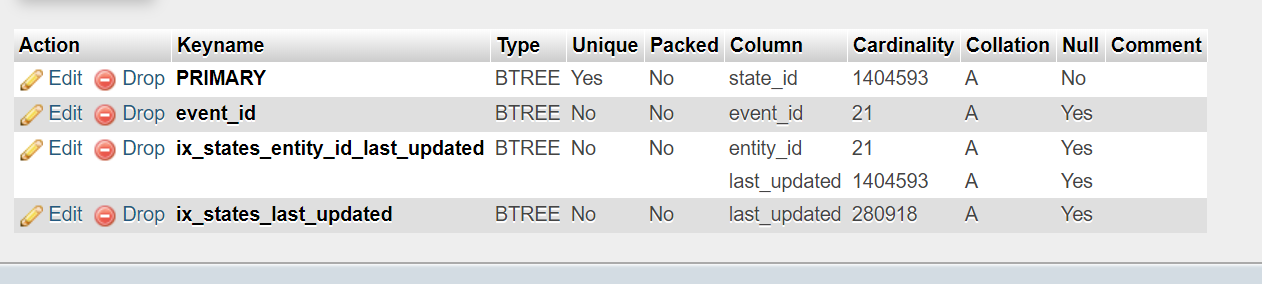

Index is there, at least assuming this is the one you meant:

Yes. So to me it looks like the query should boil down to one lookup in the index for every row in the state table. Why does MySQL crunch so much on that query?

Why does MySQL crunch so much on that query?

The million dollar question. I have no idea. But it explains the performance issues I've been having.

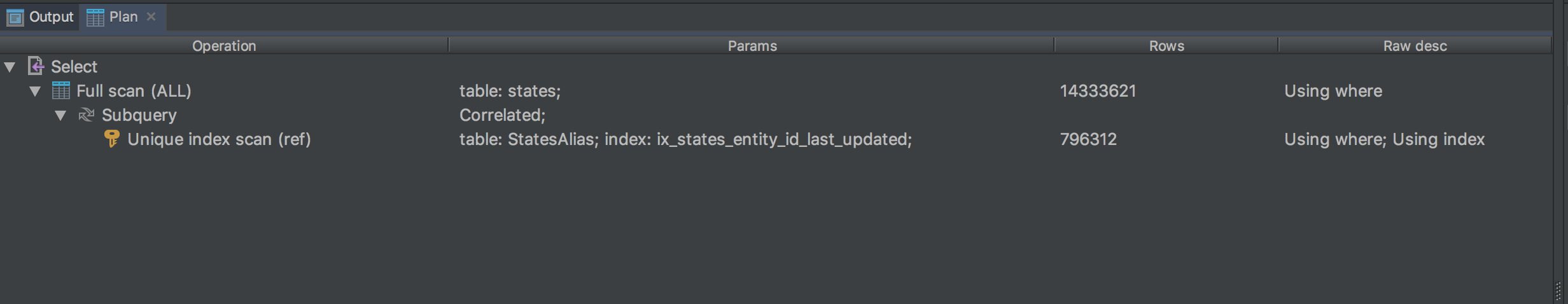

here is the execution plan the above query:

Looks like it's just a very unoptimized query. The outer query seems to use a full scan while the subquery uses an index.

I'm not sure if EXISTS does anything weird which would force the recomputation of that subquery for each row in the outer query.

Looking at the indices on the state table, there doesn't seem to be an index on states.entity_id so this part of the subquery seems expensive:

states.entity_id = `StatesAlias`.entity_id

But there's a composite index on entity_id plus last_updated. Shouldn't that be exactly the right index for this case?

@tinloaf my mistake, you're absolutely right. I had focused specifically on that one column. So it might just be the lack of an index on the outer query, causing the full table scan?

It's not a missing index. The way the query is written right now MySQL has to perform that subquery for every row of the states table. Do we know what sqlalchemy code is writing this query?

This is the code:

states_alias = orm.aliased(States, name='StatesAlias')

protected_states = session.query(States.state_id, States.event_id)\

.filter(~exists()

.where(States.entity_id ==

states_alias.entity_id)

.where(states_alias.last_updated >

States.last_updated))\

.all()

(from https://github.com/home-assistant/home-assistant/blob/dev/homeassistant/components/recorder/purge.py#L25 )

Confirm that I am suffering from the same issue. Due to this issue I had to disable cleanup.

We tried to improve the purge performance in 0.63.3, can you please test how it fares?

I manually updated the purge.py file last night and it seemed to make a massive difference. Query ran super fast (didn't even catch it running). I just update to 0.62.3 so I'll keep an eye on it. Thanks! I'll let you know if it's still being odd.

@amelchio - working great. CPU usage has been minimal since the fix. Appreciate it!!

Thanks, that's good to hear!

Most helpful comment

We tried to improve the purge performance in 0.63.3, can you please test how it fares?