Conan: Use LZMA compression for transmission of binary packages

Gzip is not really efficient for compressing large amounts of binary data. Also, LZMA has special filters that improve the compression ratio for executable code. You could use 7z or tar.xz to transmit packages.
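For illustration, a minimal sketch of what such a filter chain looks like with Python 3's standard `lzma` module: the x86 BCJ filter is applied before LZMA2. The function name and the stand-in payload are assumptions made for this example; nothing here is part of Conan.

```python
import lzma


def compress_with_x86_filter(data: bytes, preset: int = 6) -> bytes:
    """Compress data as .xz using the x86 BCJ filter followed by LZMA2."""
    filters = [
        {"id": lzma.FILTER_X86},                      # BCJ filter for x86 machine code
        {"id": lzma.FILTER_LZMA2, "preset": preset},  # the actual compressor
    ]
    return lzma.compress(data, format=lzma.FORMAT_XZ, filters=filters)


# Stand-in payload; a real comparison would read a shared library or executable.
payload = b"\x55\x48\x89\xe5\xc3" * 20000
packed = compress_with_x86_filter(payload)

# The filter chain is stored in the .xz stream, so plain decompress() works.
assert lzma.decompress(packed) == payload
```

Whether the filter actually helps depends on the content; it targets x86 machine code and may do nothing useful for headers or other data.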

Thanks for reporting. I think this might be a duplicate of: https://github.com/conan-io/conan/issues/52

We never worked on this feature because a preliminary attempt showed that it would be very complicated from the operations point of view: creating the conan application for PyPI and creating the installers that bundle it for different systems (Win installer, deb, archlinux, OSX homebrew...), as the mainstream python version is still Python 2.7, which does not include such functionality.

However, this would be very nice functionality to have, and it is very decoupled: it does not require knowing the internals of conan. If someone knows how to do it and is able to do the work, it will be very welcome.

This is not a duplicate of #52 as that issue is talking about use of 7z in conanfile code, though it seems to be a necessary prerequisite.

Yes, you are right. Basically the underlying problem is the same: once support for LZMA is provided in conan, issue #52 would be trivial.

@memsharded would you be open to having some kind of opt-in to use lzma for packages instead of gzip? lzma makes a LOT of sense for the package storage and transfer, and in our case we can guarantee that all clients are using python3. Would an environment or conan.conf option for uploading .xz packages be something you would accept if we created such a patch?

The problem is maintaining the installers and pypi package, the distinction between py2 and py3 will be a pain. Also managing different compressed files on the server side could be very hard to control if the users start to mix them in the public repositories. It's possible but it looks like a nightmare for us.

I see that - I am not really that worried about the public repos (selfish I know), but in our closed ecosystem this would still be a huge win for us.

I wouldn't be opposed to having a branch with it, to see how it looks and to think about whether there is some way to guarantee 100% that it won't be possible to use it in public repos.

I think we should probably wait until python 2 support is definitively dropped. But maybe in the next Conan release we should start implementing a friendly error when a conan_package.7z is found, to be ready for the future when Conan (probably 2.x) starts to upload 7z packages to public repositories. Then versions from 1.6 onwards could fail nicely, saying: "The package xxx uses 7z compression and your conan version doesn't support it, please upgrade to the latest".
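As a rough sketch of that idea (not Conan's actual code): a client could check the archive name before unpacking and fail with the message quoted above. `SUPPORTED_ARCHIVES`, `UnsupportedCompressionError` and `check_package_archive` are hypothetical names invented for this example.

```python
import os

# Hypothetical: archive names this client version knows how to unpack.
SUPPORTED_ARCHIVES = {"conan_package.tgz"}


class UnsupportedCompressionError(Exception):
    """Raised when a package archive uses a format this client cannot read."""


def check_package_archive(package_ref: str, filename: str) -> None:
    """Raise a friendly error if the archive format is not supported."""
    name = os.path.basename(filename)
    if name in SUPPORTED_ARCHIVES:
        return
    # "conan_package.7z" -> "7z"; fall back to "unknown" if there is no extension.
    extension = os.path.splitext(name)[1].lstrip(".") or "unknown"
    raise UnsupportedCompressionError(
        f"The package {package_ref} uses {extension} compression and your "
        "conan version doesn't support it, please upgrade to the latest"
    )
```

An older client with such a check would stop with a readable message instead of failing somewhere inside the extraction code.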

@memsharded @danimtb @markgalpin WDYT?

Yes, I agree, we could start preparing for the future.

I'm creating a new issue for my suggestion of being prepared for the future.

We might want to use zstd compression, it is 6 times faster to decompress: https://raw.githubusercontent.com/facebook/zstd/dev/doc/images/DSpeed3.png

Thanks @tru and @sztomi

Having lzma or zstd would be great!

We have large binary packages where the package size would decrease significantly (gzip: 345M, xz: 218M, zstd: 247M).
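To put those three sizes in relative terms, a small calculation of the savings against the gzip baseline (only the numbers quoted above are used; the helper name is made up for this example):

```python
# Archive sizes in megabytes, as quoted in the comment above.
sizes_mb = {"gzip": 345, "xz": 218, "zstd": 247}


def savings_vs_gzip(sizes: dict) -> dict:
    """Return the size reduction relative to gzip, in percent."""
    base = sizes["gzip"]
    return {
        name: round(100 * (1 - size / base), 1)
        for name, size in sizes.items()
        if name != "gzip"
    }


print(savings_vs_gzip(sizes_mb))  # {'xz': 36.8, 'zstd': 28.4}
```

So for this particular package xz is roughly 37% smaller than gzip and zstd roughly 28% smaller.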

Currently we have recursively packed archives (an xz-archive inside the conan_package.tgz) which requires an additional unpack operation after installing the conan package.

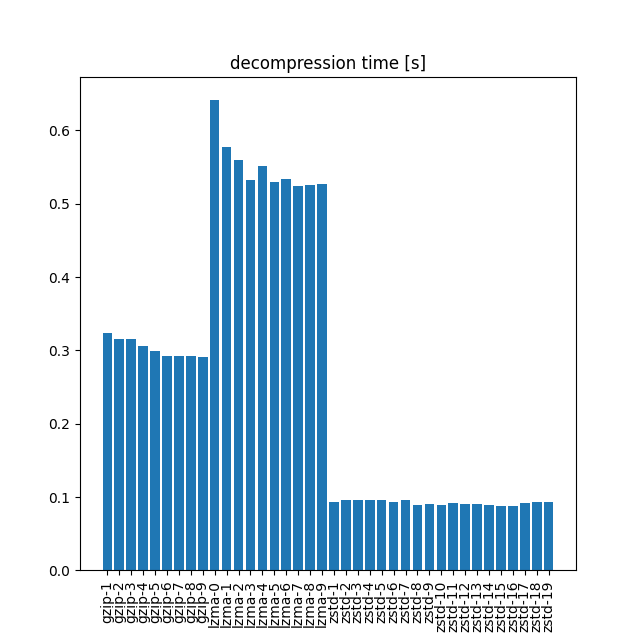

@SSE4 could you please put in your backlog a small profiling experiment of the different compressions? Not urgent, but something we would like to close for Conan 2.0

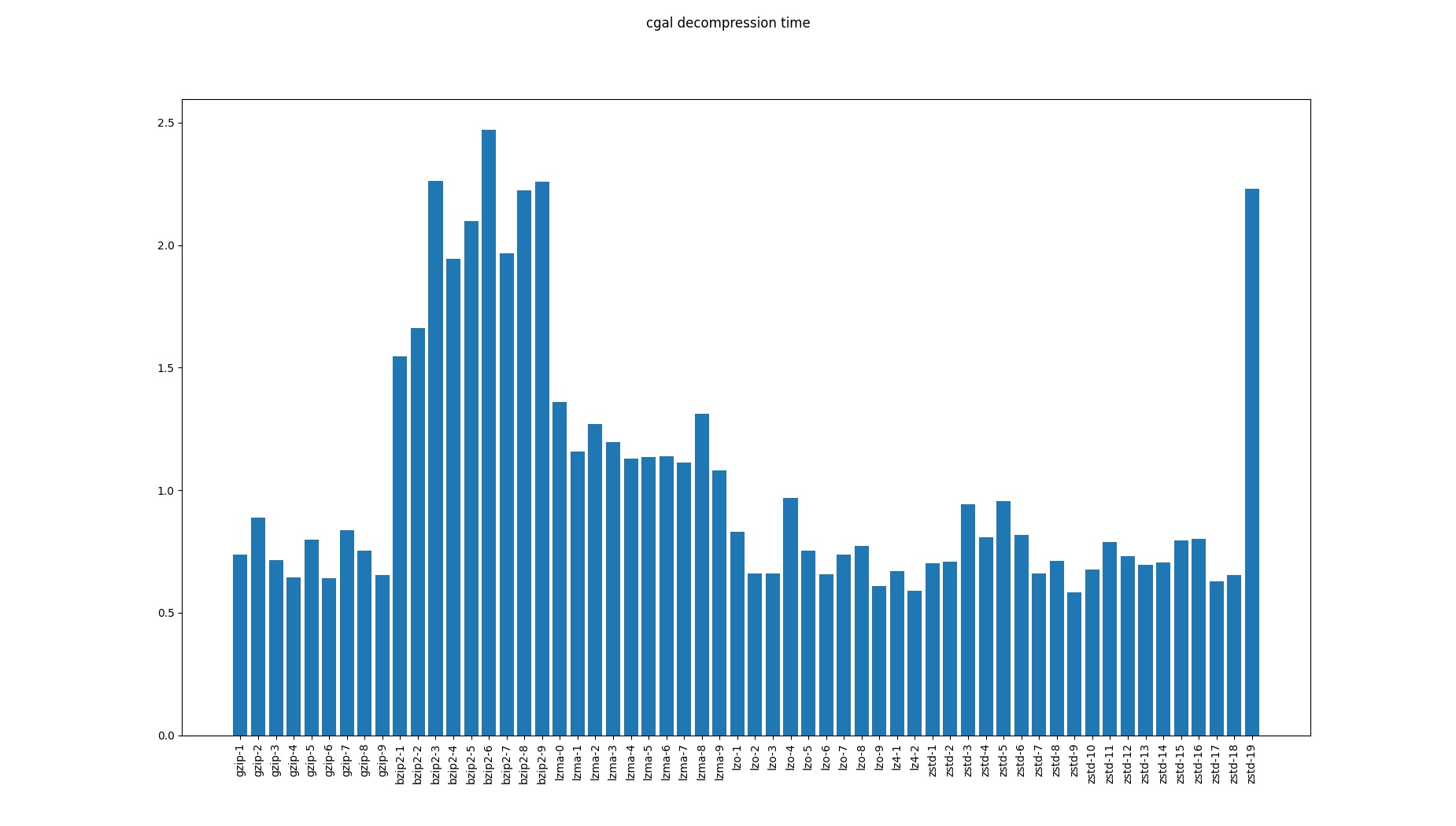

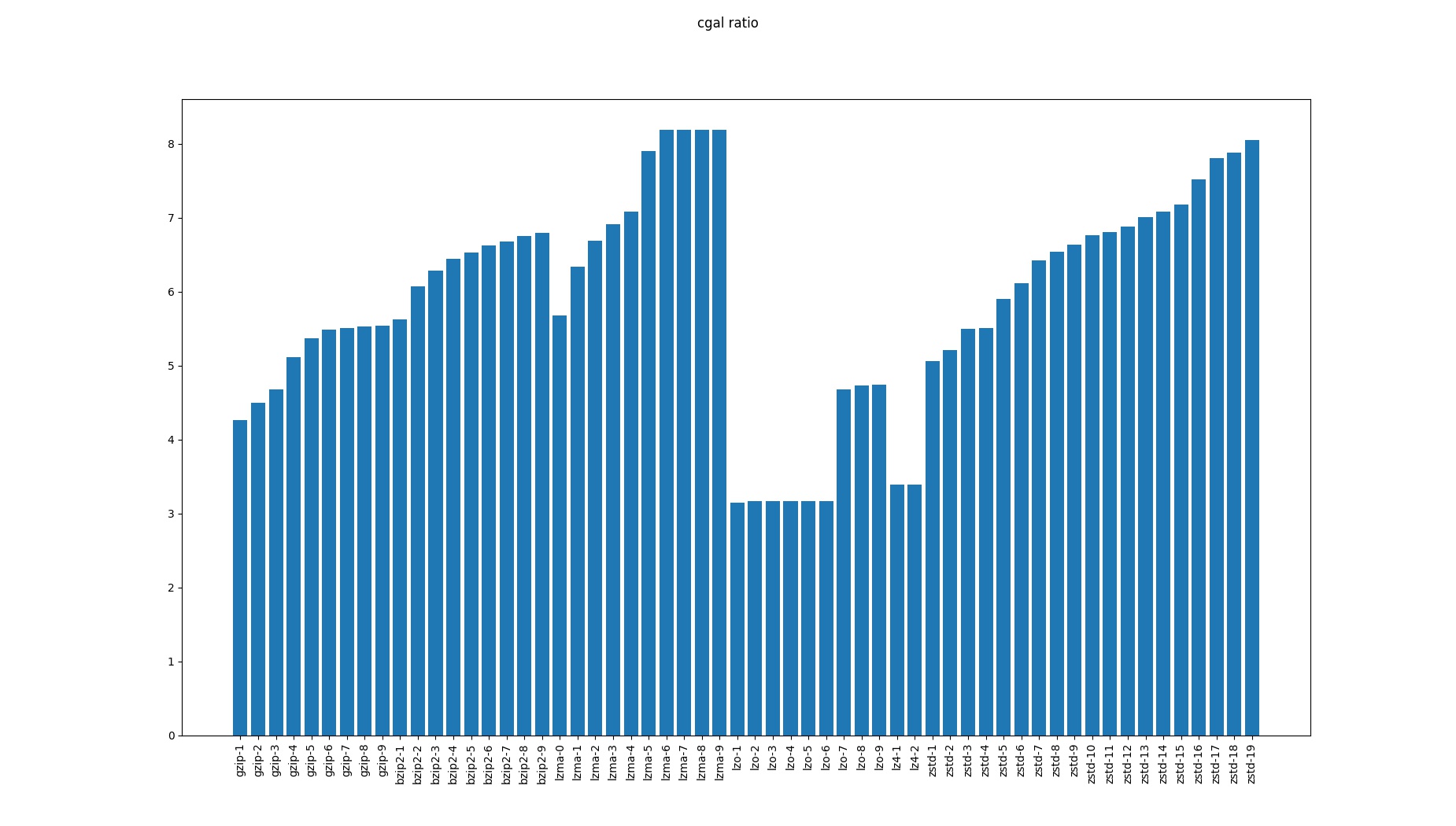

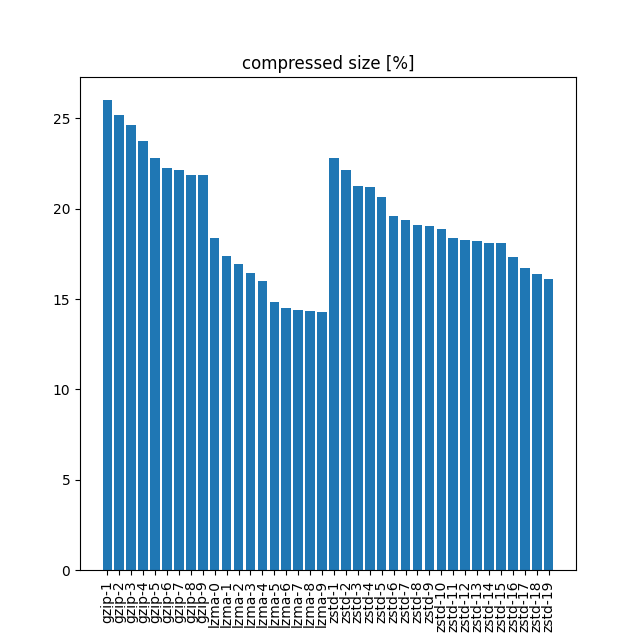

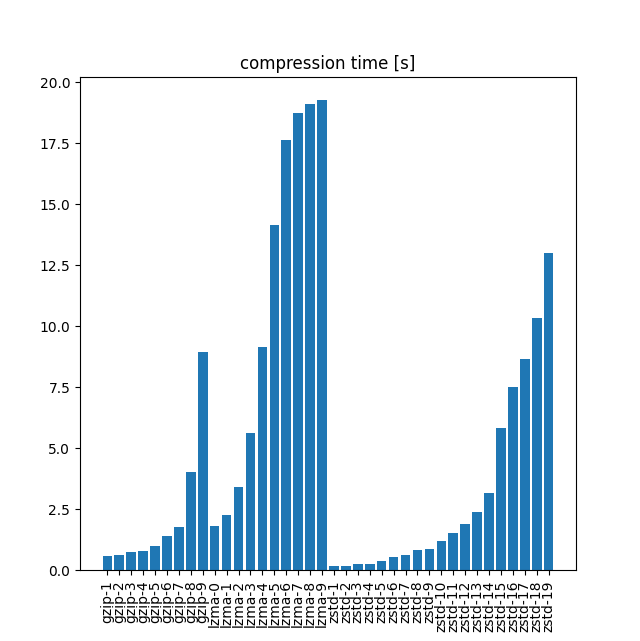

- Use the binaries (headers, libs) produced by Conan for some popular big packages. Let's use 2-3 like Boost, OpenCV, CGAL.

- Measure the compression time, size and decompression time.

- Use standard (not the Conan one that does other processing) tgz, and the other best alternatives (xz/lzma/zstd) that you think would be real competing alternatives.
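The experiment described in the list above could be sketched roughly as follows. This is an assumption-laden illustration, not the benchmark that was actually run: it only covers codecs from the Python 3 standard library (gzip, bzip2, xz), since zstd, lz4 and lzo would need third-party bindings or command-line tools. Note that Python's defaults (gzip level 9, bzip2 level 9, xz preset 6) are not necessarily the defaults of the command-line tools.

```python
import bz2
import gzip
import io
import lzma
import tarfile
import time

# Standard-library codecs only; each entry is (compress, decompress).
CODECS = {
    "gzip": (gzip.compress, gzip.decompress),
    "bzip2": (bz2.compress, bz2.decompress),
    "xz": (lzma.compress, lzma.decompress),
}


def tar_directory(path: str) -> bytes:
    """Pack a package folder into an uncompressed tar held in memory."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        tar.add(path, arcname=".")
    return buf.getvalue()


def benchmark(raw: bytes) -> dict:
    """Measure compression time, compressed size and decompression time."""
    results = {}
    for name, (compress, decompress) in CODECS.items():
        start = time.perf_counter()
        packed = compress(raw)
        middle = time.perf_counter()
        unpacked = decompress(packed)
        end = time.perf_counter()
        assert unpacked == raw  # sanity check: the round trip is lossless
        results[name] = {
            "size": len(packed),
            "compress_s": middle - start,
            "decompress_s": end - middle,
        }
    return results
```

A run would look like `benchmark(tar_directory(package_folder))`, where `package_folder` is the path of an extracted Boost, OpenCV or CGAL package. A single timing per codec is noisy, so repeating each measurement a few times would give more trustworthy numbers.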

@walterschneider our most important and driving metric will be decompression time. Transfers are not the bottleneck, and there are mechanisms to optimize this, like the download cache, but decompression is really the biggest bottleneck in Conan

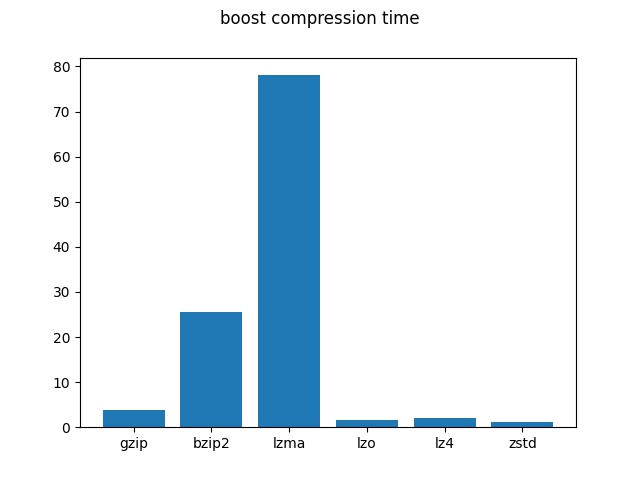

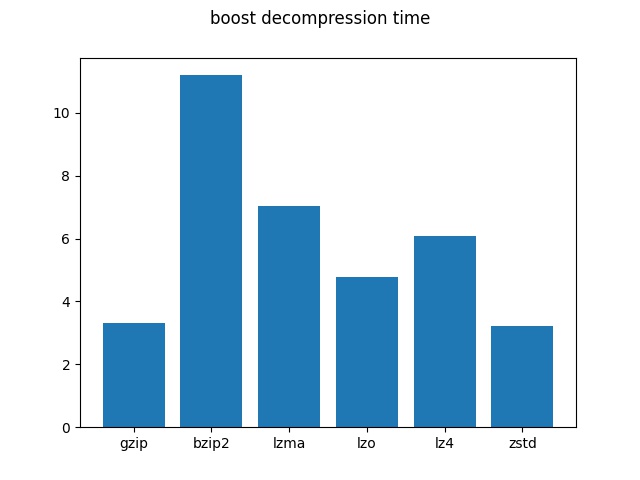

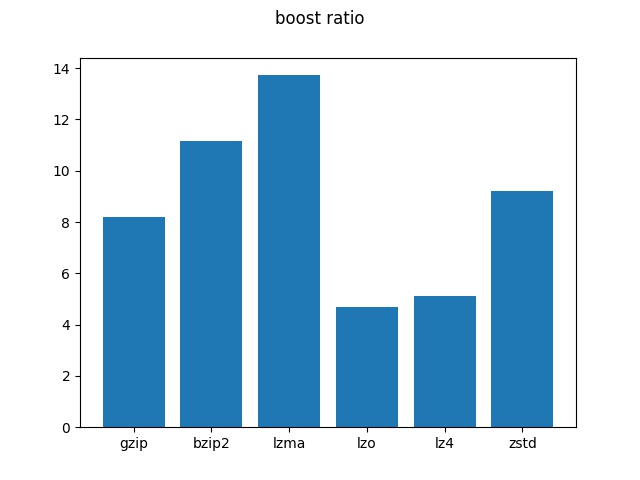

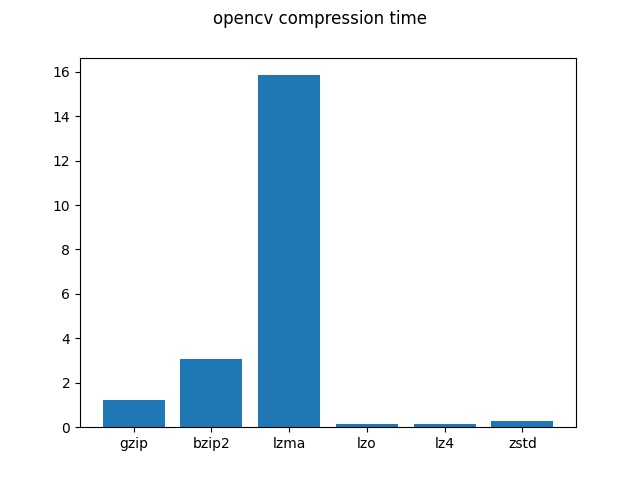

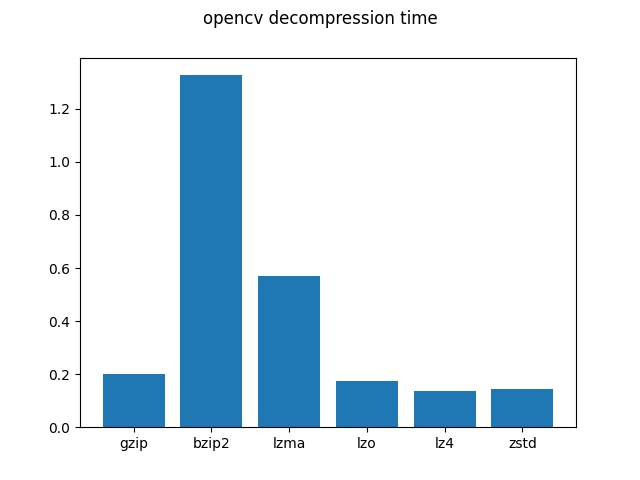

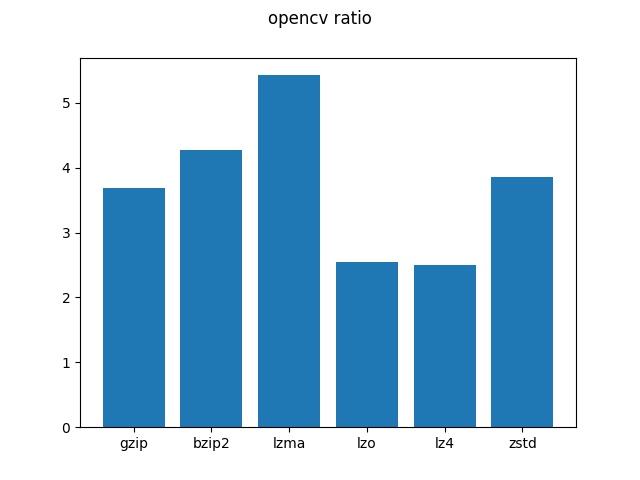

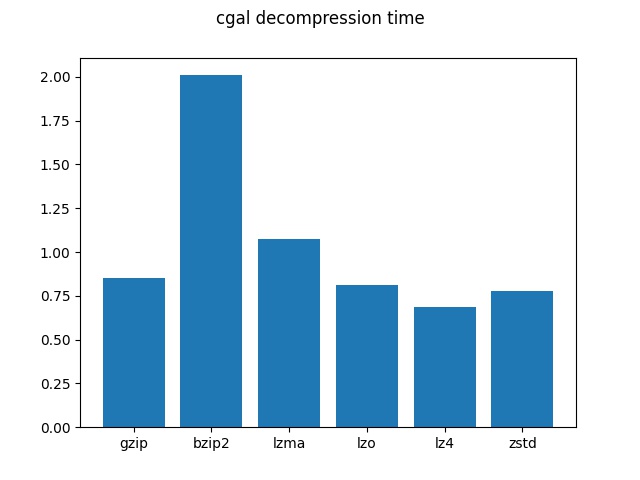

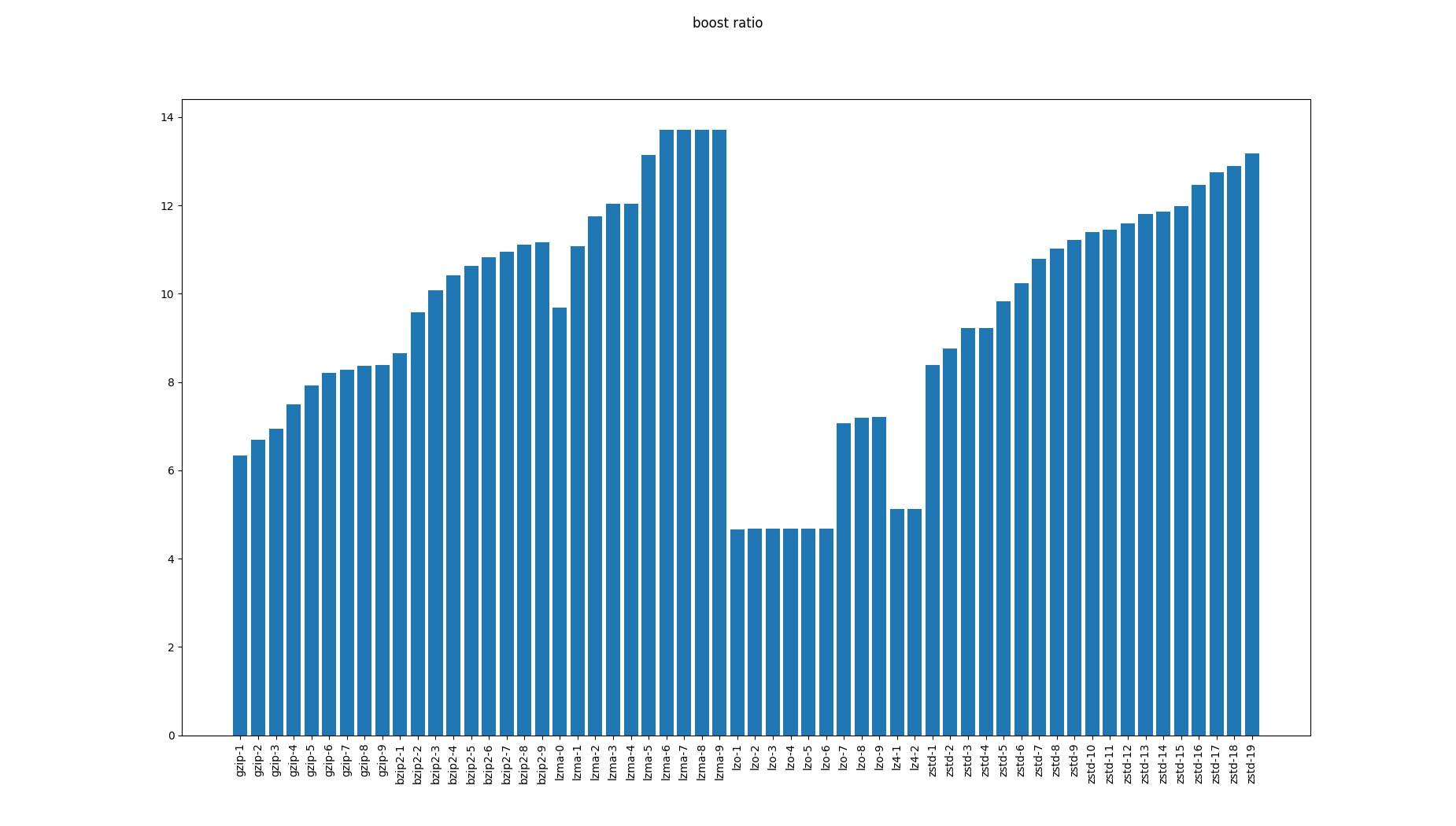

@memsharded here are some preliminary results of benchmarking gzip/bzip2/lzma/lzo/lz4/zstd on boost/opencv/cgal:

NOTE: this is with default compression levels

Good work @SSE4

My conclusions so far:

- Bzip2 and Lzma are not competitive. They can have great ratios, but are really slow in compressing and decompressing, so not an option.

- Zstd seems a slightly better alternative than gzip in terms of performance. But it is not Python builtin, and PyPI packages like https://pypi.org/project/zstd/ are a pure binding, and that is really problematic for distributing Conan. I cannot see very mainstream zstd implementations that would be very reliable and easy to distribute with Conan

- lzo, lz4 are a bit irregular, sometimes better, sometimes worse than gzip. The ratios seem to be worse, and the decompression speed would be worse for boost, better for others. It would also be quite difficult to embed and distribute this with Conan on all platforms.

In light of these results, my proposal is to keep using the tgz format for conan server storage and transfers.

> Zstd seems a slightly better alternative than gzip in terms of performance. But it is not Python builtin, and PyPI packages like https://pypi.org/project/zstd/ are a pure binding, and that is really problematic for distributing Conan. I cannot see very mainstream zstd implementations that would be very reliable and easy to distribute with Conan

This is true but I think for some users this is very easy to control (like for us).

I think it is worth considering an option that allows users to select the compression format, for huge gains in decompression speed and storage size.

If this can be encoded in the protocol somehow for conan 2, I think that would solve most people's problem. The policy for CCI should still probably be tgz, but you have a lot of users with private installations who are happy to set something else there for the upsides.

One thing I didn't see in @SSE4's graphs was the compression setting for zstd. We see way better compression in our local tests, with a bit longer compression time but still the same decompression time. This is probably something that should be tweakable.

@tru all tests were run with default compression levels (it means level 3 for zstd, in particular).

I may also run tests for all compression levels during the night, but it will take way longer.

@memsharded what do you think if we leave gzip as the default, but add some options for users who want more control:

    [compression]
    compressor = lzma  # use lzma to compress packages
    decompressor = zstd  # use zstd to decompress packages
    compressor.lzma.level = 9  # use custom compression level for lzma
    decompressor.zstd.location = /usr/bin/zstd  # use custom executable for zstd

@SSE4

Knowing the location of zstd in the system is still not enough, and would require a very big development effort. If anything, the approach would be more along the lines of users needing to provide some compress(files, output_filename, symlinks, ...) and decompress(file, output_folder, ....), a kind of pluggable/plugin behavior, implementing the compression and decompression they want under their own responsibility. There are many details there, like how symlinks are stored and extracted, permissions and other metadata, timestamps... (at the moment, the .tgz that conan manages has all timestamps cleared).

Even implementing this plugin capability is not simple at all; it will require a considerable effort. Providing the functionality as built-in and configurable is not something we have the resources to do.
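To make the shape of such a plugin concrete, here is a deliberately simplified sketch. All names (`CompressionPlugin`, `register_plugin`, `get_plugin`, the `.txz` file name) are hypothetical, the signatures are reduced to the first two arguments mentioned above, and the details that make the real problem hard (symlinks, permissions, timestamps) are ignored.

```python
import os
import tarfile
import tempfile
from typing import Callable, Dict, Iterable, NamedTuple


class CompressionPlugin(NamedTuple):
    """Hypothetical plugin: a pair of user-supplied callables."""
    compress: Callable[[Iterable[str], str], None]   # (files, output_filename)
    decompress: Callable[[str, str], None]           # (file, output_folder)


_PLUGINS: Dict[str, CompressionPlugin] = {}


def register_plugin(name: str, plugin: CompressionPlugin) -> None:
    _PLUGINS[name] = plugin


def get_plugin(name: str) -> CompressionPlugin:
    return _PLUGINS[name]


def xz_compress(files, output_filename):
    """Example user routine: store the files flat in a .tar.xz archive."""
    with tarfile.open(output_filename, "w:xz") as tar:
        for path in files:
            tar.add(path, arcname=os.path.basename(path))


def xz_decompress(filename, output_folder):
    """Example user routine: unpack a .tar.xz archive into a folder."""
    with tarfile.open(filename, "r:xz") as tar:
        tar.extractall(output_folder)


register_plugin("xz", CompressionPlugin(xz_compress, xz_decompress))


def demo_roundtrip(content: bytes) -> bytes:
    """Compress and decompress one file through the registered plugin."""
    plugin = get_plugin("xz")
    with tempfile.TemporaryDirectory() as tmp:
        source = os.path.join(tmp, "lib.bin")
        with open(source, "wb") as handle:
            handle.write(content)
        archive = os.path.join(tmp, "conan_package.txz")
        plugin.compress([source], archive)
        plugin.decompress(archive, os.path.join(tmp, "out"))
        with open(os.path.join(tmp, "out", "lib.bin"), "rb") as handle:
            return handle.read()
```

Even in this toy form, the interface already has to decide how file names map into the archive, which hints at why the full version is described as a considerable effort.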

@memsharded the idea of tar is that it decouples compression from archiving, so conan can create tar file and use an external command to compress it (zstd, lzo, lz4 or whatever user wants). the same for decompression.

the archiving part handles symlinks, hardlinks, permissions, timestamps, etc, and it's always the same code. the only thing that changes is the external command to run a custom compressor or decompressor.

compressor/decompressor only operates on tar files, so it's easy for conan to be compression format agnostic.
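The decoupling described in this comment can be sketched as follows: the tar layer is always the same code, and the compression step is whatever external command reads stdin and writes stdout. This is an illustration of the proposal only, not of how Conan works; `make_tar`, `run_filter`, `pack` and `unpack` are names invented for the example, and files are passed as an in-memory dict to keep it short.

```python
import io
import subprocess
import tarfile


def make_tar(files: dict) -> bytes:
    """Archive {name: content} pairs into an uncompressed tar, in memory."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for name, content in sorted(files.items()):
            info = tarfile.TarInfo(name)
            info.size = len(content)
            tar.addfile(info, io.BytesIO(content))
    return buf.getvalue()


def run_filter(command: list, data: bytes) -> bytes:
    """Pipe data through an external command (stdin to stdout)."""
    result = subprocess.run(
        command, input=data, stdout=subprocess.PIPE, check=True
    )
    return result.stdout


def pack(files: dict, compressor: list) -> bytes:
    """Tar first, then hand the tar stream to the external compressor."""
    return run_filter(compressor, make_tar(files))


def unpack(blob: bytes, decompressor: list) -> dict:
    """Run the external decompressor, then read the plain tar stream."""
    raw = run_filter(decompressor, blob)
    with tarfile.open(fileobj=io.BytesIO(raw), mode="r:") as tar:
        return {m.name: tar.extractfile(m).read() for m in tar if m.isfile()}
```

Assuming the `zstd` command-line tool is on the PATH, usage would be `pack(files, ["zstd", "-c"])` and `unpack(blob, ["zstd", "-dc"])`; `["xz", "-c"]` and `["xz", "-dc"]` work the same way. The sketch holds the whole archive in memory, which a real implementation handling large packages would want to avoid.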

Not completely true, for example the timestamps thing is managed in the gz layer, not the tar level (gzopen_without_timestamps())

And then we are using the builtin tarextract python functionality that handles the decompression at once, so we don't need to decompress first, then untar. What I am saying is that this is a very big effort, it is very far from "it's easy for conan to be compression format agnostic". It will require a relevant investment in changing the way things are compressed and decompressed, it will require a new way to define such plugins, to document everything and the interfaces and then to do the maintenance and support that will come with it.
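Both points can be seen with the standard library alone. The sketch below is not Conan's `gzopen_without_timestamps()`; it is an independent illustration, with made-up function names, of where the gzip-level timestamp lives and of how `tarfile` decompresses and untars in one step.

```python
import gzip
import io
import tarfile


def write_tgz(files: dict) -> bytes:
    """Write {name: content} pairs into a .tgz with no gzip-level timestamp."""
    out = io.BytesIO()
    # mtime=0 and an empty filename keep the gzip header free of the
    # current time and of any file name; this is the gz layer, not tar.
    with gzip.GzipFile(filename="", mode="wb", fileobj=out, mtime=0) as gz:
        with tarfile.open(fileobj=gz, mode="w") as tar:
            for name, content in sorted(files.items()):
                info = tarfile.TarInfo(name)  # TarInfo.mtime defaults to 0
                info.size = len(content)
                tar.addfile(info, io.BytesIO(content))
    return out.getvalue()


def read_tgz(blob: bytes) -> dict:
    """Decompress and untar in a single pass using mode 'r:gz'."""
    with tarfile.open(fileobj=io.BytesIO(blob), mode="r:gz") as tar:
        return {m.name: tar.extractfile(m).read() for m in tar if m.isfile()}


files = {"include/a.h": b"#pragma once\n", "lib/liba.a": b"\x00" * 1024}
first = write_tgz(files)
second = write_tgz(files)
assert first == second          # no timestamps, so the bytes are reproducible
assert read_tgz(first) == files
```

With an external compressor, both of these conveniences (the timestamp-free gzip header and the one-step extraction) would have to be re-implemented per format.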

> @tru all tests were run with default compression levels (it means level 3 for zstd, in particular).

We found that level 9 was good for us.

I've made some tests with opencv/4.5.1:0e3623c19c9324197de1788dd20f27a1c0725ece:

- downloaded and extracted conan_package.tgz to conan_package.tar

- evaluated with gzip, lzms and zstd (each with the command-line version)

i see... @memsharded : thank you for your explanations regarding gzopen_without_timestamps, etc..

Thanks very much all of you for the feedback and the data.

I have thoroughly considered our options, and at the moment we are not considering this feature:

- Yes, there are some gains in compression ratio and decompression speed in some cases (for zstd). Still, they are not that huge, and in many cases the results are similar.

- The complexity of making it the default approach (making it multi-platform, as this is not a Python built-in, nor is there a relatively stable native Python package, not a binding, that provides it) makes this option impossible.

- Developing a new system of plugins, so that users can connect their own compression and decompression routines, is a lot of work that will introduce fragility, more documentation, maintenance, support...

- We really need to focus our development efforts on having a very solid Conan 2.0, and there are millions of critical, much-needed to-do things: from better integration with the build systems to a more powerful graph model. Unless something is really not working and needs to be fixed, it will need to wait for later. It could even be Conan 2.1, 2.2... but not Conan 2.0, which is a different beast. Just managing as smooth a transition as possible from 1.X will be a huge effort.
