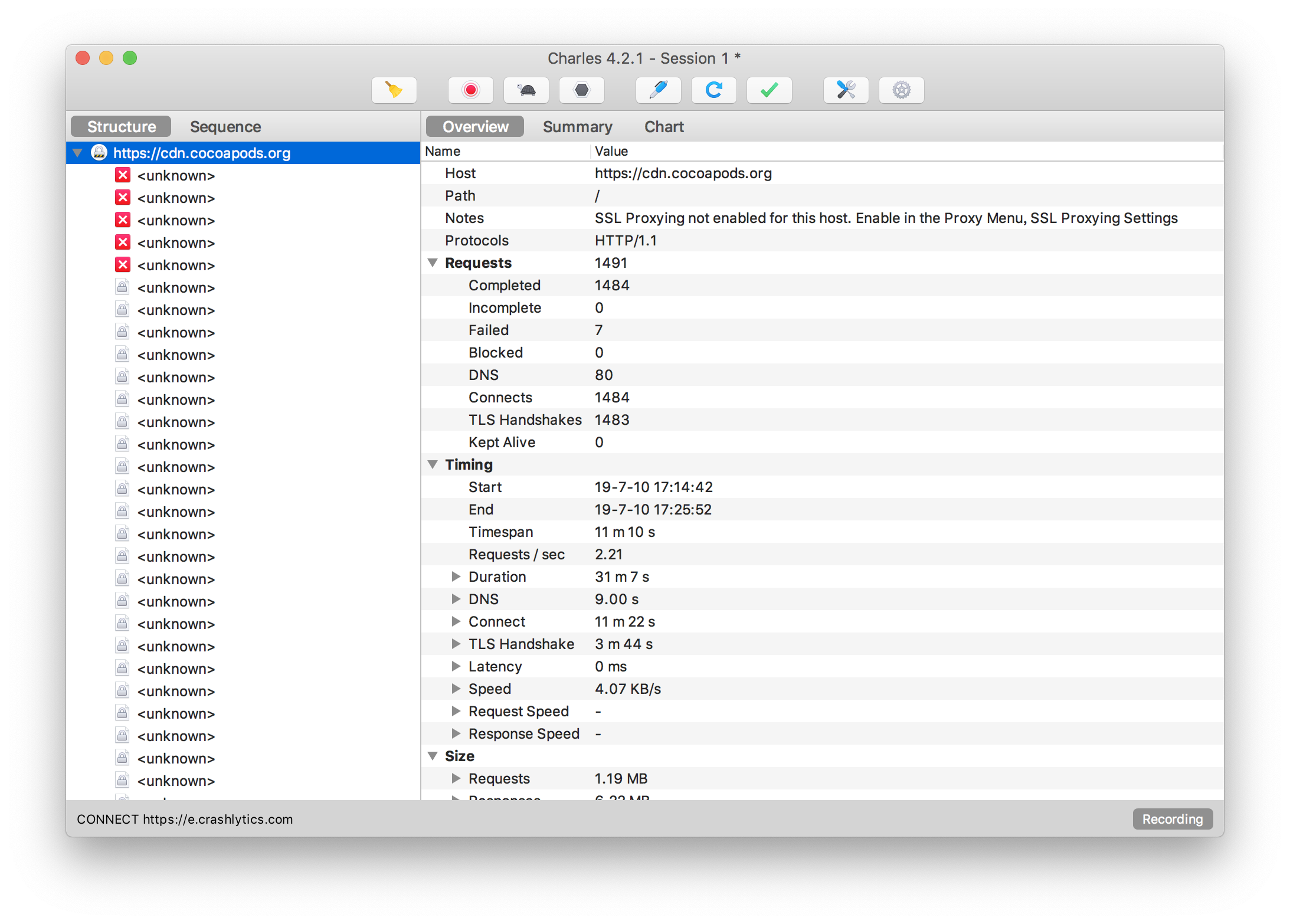

CocoaPods: `pod update` with the CDN takes 30 minutes and almost 1,500 API calls.

- [x] I've read and understood the CONTRIBUTING guidelines and have done my best effort to follow.

Report

What did you do?

I ran `pod update --verbose`.

What did you expect to happen?

It should succeed quickly.

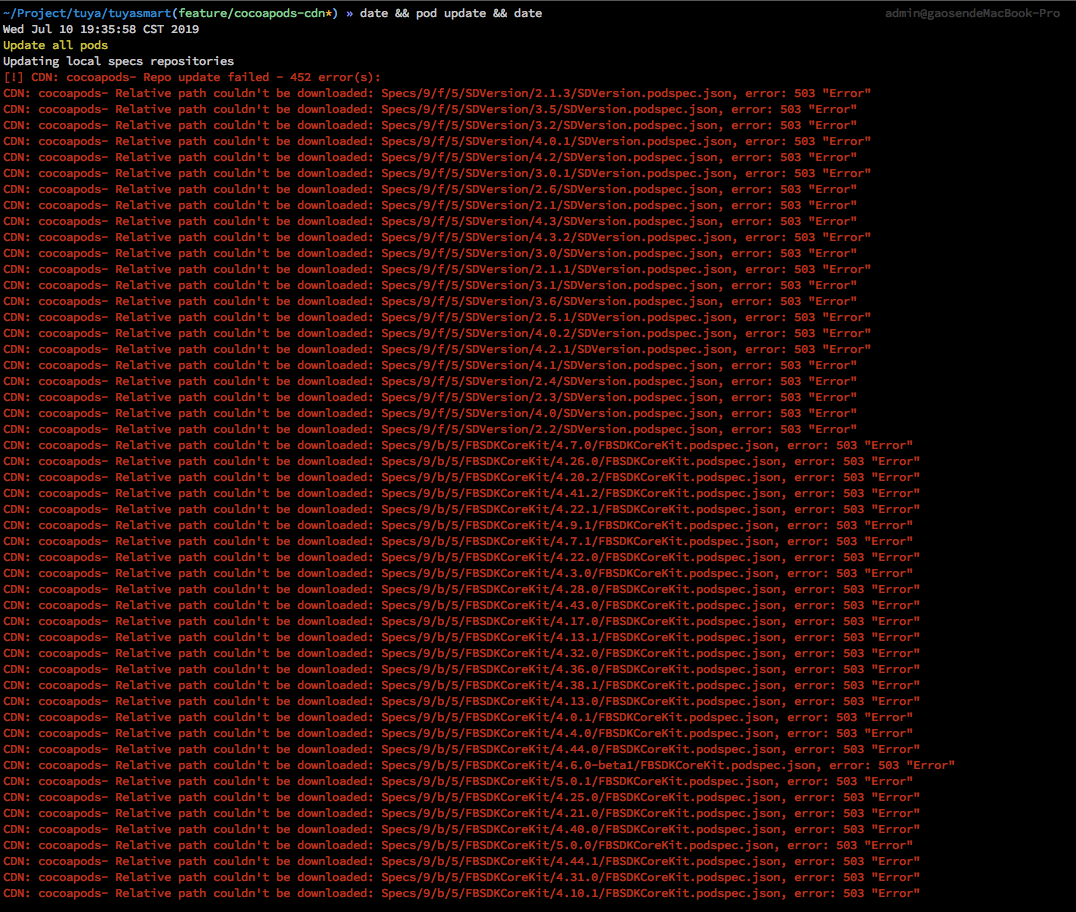

What happened instead?

It failed many times and took nearly 30 minutes.

CocoaPods Environment

Stack

CocoaPods : 1.7.4

Ruby : ruby 2.6.0p0 (2018-12-25 revision 66547) [x86_64-darwin18]

RubyGems : 3.0.3

Host : Mac OS X 10.14.5 (18F132)

Xcode : 10.2.1 (10E1001)

Git : git version 2.22.0

Ruby lib dir : /Users/admin/.rvm/rubies/ruby-2.6.0/lib

Repositories :

master - https://github.com/CocoaPods/Specs.git @ 97a777bf3978f2caf7577b93792e58992affdfd5

TYSDKSpecs - https://registry.code.tuya-inc.top/tuyaIOSSDK/TYSDKSPecs.git @ 360ad8083f147a17538c832262e5488035f00323

TYSpecs - https://registry.code.tuya-inc.top/tuyaIOS/TYSpecs.git @ 4a2983581201af762857dead5b59e4327bfbc99c

TYTempSpecs - https://registry.code.tuya-inc.top/tuyaIOSSDK/TYTempSpecs.git @ 5753e40063b94fa2cc11548fb2d4109cecbbc227

wgine-tuyaios-tyspecs - https://code.registry.wgine.com/tuyaIOS/TYSpecs.git @ 13eeebcd46a20a4154608981e7ba356adea7f67f

Installation Source

Executable Path: /Users/admin/.rvm/gems/ruby-2.6.0/bin/pod

Plugins

cocoapods-deintegrate : 1.0.4

cocoapods-dependencies : 1.3.0

cocoapods-plugins : 1.0.0

cocoapods-search : 1.0.0

cocoapods-stats : 1.1.0

cocoapods-trunk : 1.3.1

cocoapods-try : 1.1.0

Podfile

source 'https://cdn.cocoapods.org/'

source 'https://xxx/xxx/xxxSpecs.git'

platform :ios, '9.0'

target 'xxx' do

...

end

Our project has about 200 pods. If a single `pod update` makes 1,500 API calls, any network issue will make our CI system highly unstable. Is there any way to reduce the number of API calls, to set up a CDN mirror ourselves, or to do something else?


Maybe we can combine these calls, or use HTTP/2 multiplexing?

cc @igor-makarov

We could adjust the number of threads. I don't have any great suggestions besides falling back to git.
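Here is a minimal sketch of the "adjust the number of threads" knob. It assumes the per-file download can be passed in as a block. `run_with_pool` is a hypothetical helper, not part of CocoaPods. It only shows how a fixed worker count limits how many requests are in flight at once.

```ruby
# Hypothetical helper (not CocoaPods' API): run the given block over every item,
# using at most `max_threads` worker threads at a time.
def run_with_pool(items, max_threads, &job)
  queue = Queue.new
  items.each { |item| queue << item }
  queue.close # once drained, #pop on a closed queue returns nil instead of blocking

  results = {}
  lock = Mutex.new
  worker_count = [[max_threads, items.size].min, 1].max

  workers = Array.new(worker_count) do
    Thread.new do
      while (item = queue.pop)
        result = job.call(item)                     # e.g. one HTTP request per file
        lock.synchronize { results[item] = result } # Hash is not thread-safe on its own
      end
    end
  end
  workers.each(&:join) # also re-raises any exception raised inside a worker
  results
end
```

Lowering `max_threads` can ease the pressure on a flaky network. The total number of requests stays the same, though, so this alone does not reduce the 1,500-call count.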

I'm more concerned about all the 503s. There's no indication of which host is returning the error.

This was the logging change I used for tracking down the 502s, might be useful to pull it in permanently https://github.com/cltnschlosser/Core/commit/4260c0a7b76c38ca230cf7b8b81bfdee24f63f0d

@cltnschlosser want to open a PR against Core? We can ship it with 1.8.x

Downloading those files should be a one time cost just like cloning the master git repo would be.

So, I was looking at this a bit more in depth. Is it possible to re-release a podspec? Currently we call `REST.get(file_remote_url, 'If-None-Match' => etag)` for every cached file (`repo.join('**/*.yml')`, `repo.join('**/*.txt')`, `repo.join('**/*.json')`). So a file may not be downloaded again, but a request is still made for every single podspec that is known locally.
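To make the per-file cost concrete, here is a sketch of what each check amounts to: one conditional GET per cached `.yml`, `.txt` or `.json` file. It uses Ruby's standard `Net::HTTP`, not CocoaPods' `REST` wrapper. `conditional_get` and `cached_spec_files` are hypothetical names, and the request is only built, never sent.

```ruby
require 'net/http'
require 'uri'

# Hypothetical helper: build (but do not send) a conditional GET for one cached file.
def conditional_get(url, etag)
  request = Net::HTTP::Get.new(URI(url))
  request['If-None-Match'] = etag if etag # only sent when an ETag was cached earlier
  request
end

# Hypothetical helper: every cached file that gets re-checked, relative to repo_dir.
def cached_spec_files(repo_dir)
  patterns = %w[**/*.yml **/*.txt **/*.json].map { |glob| File.join(repo_dir, glob) }
  Dir.glob(patterns).map { |path| path.delete_prefix("#{repo_dir}/") }.sort
end
```

Whatever the server answers, the number of requests grows with `cached_spec_files(repo_dir).size`.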

In a model where podspecs can only be released once, we wouldn't need to check if podspecs were up to date. We could just download new versions that appear in `all_pods_versions_*.txt` files.

In a model where podspecs can be released multiple times, we could put ETags for every pod version in the `all_pods_versions_*.txt` files. We might want to switch those files to a different format, such as JSON, since they would become dictionaries. The upside is that this should significantly reduce the number of network calls during a `pod update`. On my laptop, the cocoapods- directory contains only 120 txt files vs 1675 json files. Given how the txt files are split, there could be at most 4096 = 16^3 of them. So maybe there should be an `all_pods_versions.txt` that has the ETags for all the `all_pods_versions_x_x_x.txt` files.
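Here is a sketch of the two-level index idea. It assumes a hypothetical format in which each index maps a relative path to a fingerprint (an ETag or a checksum). Neither the top-level index nor per-version fingerprints exist today, so the names and data shapes are illustrative only.

```ruby
# Hypothetical index format: { "relative path" => "fingerprint" }.
# Returns the paths that are new or whose fingerprint differs from the local copy.
def changed_entries(local_index, remote_index)
  remote_index.reject { |path, fingerprint| local_index[path] == fingerprint }.keys.sort
end

# local_top / remote_top: shard index file name => fingerprint (the proposed top-level index).
# local_shards / remote_shards: shard index file name => { podspec path => fingerprint }.
def update_plan(local_top, remote_top, local_shards, remote_shards)
  changed_shards = changed_entries(local_top, remote_top)
  changed_specs = changed_shards.flat_map do |shard|
    changed_entries(local_shards.fetch(shard, {}), remote_shards.fetch(shard))
  end
  {
    shards: changed_shards,
    specs: changed_specs.sort,
    # 1 request for the top-level index, plus one per changed shard index and per changed podspec
    requests: 1 + changed_shards.size + changed_specs.size
  }
end
```

When nothing has changed upstream, the plan is a single request for the top-level index.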

Maybe everything I've said here won't work with CDNs, I don't have any expertise in that area.

For context, a pod can be removed or deprecated, and I'm afraid both of those are mutations of the podspec in the Specs repo, which might make that tricky.

Okay, then this should still apply.

> In a model where podspecs can be released multiple times, we could put ETags for every pod version in the `all_pods_versions_*.txt` files. We might want to switch those files to a different format, such as JSON, since they would become dictionaries. The upside is that this should significantly reduce the number of network calls during a `pod update`. On my laptop, the cocoapods- directory contains only 120 txt files vs 1675 json files. Given how the txt files are split, there could be at most 4096 = 16^3 of them. So maybe there should be an `all_pods_versions.txt` that has the ETags for all the `all_pods_versions_x_x_x.txt` files.

So does this, though:

> Maybe everything I've said here won't work with CDNs, I don't have any expertise in that area.

ETags are a HTTP protocol feature. We don't actually calculate them, and I don't think we could.

Ultimately, the best solution would be if the index files had not only the pod versions but also all the dependency information. However, there's a lot of this information, so that would be pretty complicated to handle and would increase bandwidth use.

The reason all the specs for a requested pod are downloaded is because the resolver requires all of them to work.

Also, I'm seeing a lot of strange behavior from the GitHub CDN:

CDN: trunk Relative path: Specs/9/6/0/A2DynamicDelegate/2.0.1/A2DynamicDelegate.podspec.json, has ETag? "bf0096d092cfc46f6e5c3c8dbaaf53a8bc412d09"

CDN: trunk Redirecting from https://cdn.cocoapods.org/Specs/9/6/0/A2DynamicDelegate/2.0.1/A2DynamicDelegate.podspec.json to https://raw.githubusercontent.com/CocoaPods/Specs/master/Specs/9/6/0/A2DynamicDelegate/2.0.1/A2DynamicDelegate.podspec.json

CDN: trunk Relative path downloaded: Specs/9/6/0/A2DynamicDelegate/2.0.1/A2DynamicDelegate.podspec.json, save ETag: W/"bf0096d092cfc46f6e5c3c8dbaaf53a8bc412d09"

Even after handling weak ETags, it is still happening. This results in unnecessary traffic.
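The log shows the same opaque tag being saved with a `W/` prefix. RFC 7232 defines `If-None-Match` to use weak comparison, and under weak comparison `"bf00..."` and `W/"bf00..."` match. Under strong comparison they do not. Here is a minimal sketch of both comparisons. It illustrates ETag semantics only and cannot explain why the server keeps re-sending bodies.

```ruby
# Strip the weak indicator so only the opaque tag (the quoted string) remains.
def opaque_tag(etag)
  etag.to_s.strip.delete_prefix('W/')
end

# Weak comparison (RFC 7232, section 2.3.2): opaque tags are equal, W/ prefix ignored.
def weak_etag_match?(a, b)
  return false if a.nil? || b.nil?
  opaque_tag(a) == opaque_tag(b)
end

# Strong comparison: neither tag may be weak, and both must be identical.
def strong_etag_match?(a, b)
  return false if a.nil? || b.nil?
  !a.start_with?('W/') && !b.start_with?('W/') && a == b
end
```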

OK, @cltnschlosser's suggestions have inspired me to try 2 new approaches to CDN structure:

- For each pod, create a single file containing all of its podspecs. This didn't pass Netlify's deployer because 60k files is too much. I now recall having the same problem when just deploying per-directory indices.

- For each shard, create a single file of all the podspecs of all the pods. This deployed successfully, but the median file size is 300KB, which will result in much more volume hitting Netlify directly. Additionally, the largest outliers are 10MB, and I pity the poor souls who use pods from shards `5/b/b` (Ethereum) and `0/1/9` (Material Components).

Both approaches appear to be unsatisfactory.
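The per-shard approach bundles podspecs by shard. Here is a sketch of how a pod name could map to its shard path, its versions index and its per-shard bundle. It assumes the shard is the first three hex digits of the MD5 of the pod name. That is consistent with the 16^3 shard count and with paths like `Specs/9/6/0/A2DynamicDelegate` in this thread, but it is still an assumption. Checking `shard_fragments('A2DynamicDelegate')` against that logged path is a quick way to validate it.

```ruby
require 'digest/md5'

# Assumption: shard = first SHARD_DEPTH hex digits of MD5(pod name), one directory per digit.
SHARD_DEPTH = 3

def shard_fragments(pod_name)
  Digest::MD5.hexdigest(pod_name)[0, SHARD_DEPTH].chars
end

def podspec_path(pod_name, version)
  File.join('Specs', *shard_fragments(pod_name), pod_name, version, "#{pod_name}.podspec.json")
end

def versions_index_name(pod_name)
  "all_pods_versions_#{shard_fragments(pod_name).join('_')}.txt"
end

# Per-shard bundling: every pod hashing to the same shard lands in the same file,
# which is why a few popular shards grow very large.
def group_by_shard(pod_names)
  pod_names.group_by { |name| shard_fragments(name).join('/') }
end
```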

Hmm, I see now that etags aren't generated in any standard way.

Ideally, running two repo updates in a row would result in the second one making only ~1 network request, because there were no changes, and some subset of index files could tell us that.

Would it be possible to use checksums in place of ETags in my suggestion? Then we could put the checksums in the indices.

Alternatively, since everything is backed by git, we could use the SHA of the commit in which the file was last changed (or maybe the SHA of the commit before it, if publishing a file and updating the index happen in one commit). Then we would only make CDN requests for podspecs whose commit SHA in the latest index doesn't match the one in the index we have saved to disk.
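Here is a sketch of the checksum variant. It assumes the server publishes a hypothetical index of `relative path => SHA-256 hex digest`; SHA-256 is an arbitrary choice here. Unlike ETags, these checksums can be computed by the client from files it already has, so only the index needs to be downloaded to find stale podspecs.

```ruby
require 'digest/sha2'

# Hypothetical: checksum_index maps a relative path to the expected SHA-256 hex digest.
# Returns the paths that are missing locally or whose contents no longer match.
def stale_files(repo_dir, checksum_index)
  checksum_index.select do |relative_path, expected|
    path = File.join(repo_dir, relative_path)
    !File.file?(path) || Digest::SHA256.file(path).hexdigest != expected
  end.keys.sort
end
```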

> Additionally, the largest outliers are 10MB, and I pity the poor souls who use pods from shards 5/b/b (Ethereum) and 0/1/9 (Material Components).

Are these uncompressed sizes? And do the CDN and our REST client support compression? JSON normally compresses pretty well.
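Here is a rough way to see how well podspec-like JSON compresses with gzip. The payload is synthetic and far more repetitive than real podspecs, so its ratio is an optimistic illustration. It is not a measurement of the 10MB shard files.

```ruby
require 'json'
require 'zlib'

# Synthetic, podspec-like records (not real podspecs), only to illustrate compressibility.
def synthetic_shard_json(pod_count)
  pods = (1..pod_count).map do |i|
    {
      'name' => "ExamplePod#{i}",
      'version' => "1.#{i}.0",
      'platforms' => { 'ios' => '9.0' },
      'source' => { 'git' => "https://example.com/ExamplePod#{i}.git", 'tag' => "1.#{i}.0" },
      'source_files' => 'Sources/**/*.{h,m,swift}'
    }
  end
  JSON.generate(pods)
end

# Compressed size divided by original size (smaller is better).
def gzip_ratio(text)
  Zlib.gzip(text).bytesize.to_f / text.bytesize
end
```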

ETags are part of the HTTP standard and are theoretically supposed to be a unique identifier of a URL's content. The implementation depends on the server.

Ideally, this means that a repo update would make lots of requests, but all of them would be extremely short, empty `304 Not Modified` responses.
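Here is a hypothetical decision table for the response to one conditional GET. It shows why the ideal case is cheap in bytes but not in requests: a `304` carries no body, yet it still costs a round trip.

```ruby
# Hypothetical mapping from the status of one conditional GET to what the client does next.
def next_action(status_code)
  case Integer(status_code)
  when 304 then :keep_cached_copy    # unchanged: empty body, nothing to write
  when 200 then :store_body_and_etag # new content: save it together with the new ETag
  when 301, 302, 307, 308 then :follow_location
  else :retry_or_fail
  end
end

# Tally how a batch of responses would be handled.
def summarize(status_codes)
  status_codes.group_by { |code| next_action(code) }.transform_values(&:size)
end
```

Even if every answer is a `304`, the number of requests still equals the number of cached files.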

I have been seeing what looks like incorrect ETag handling on GitHub (which is part of the CDN we use).

I'm going to try to address the suggestions:

- Checksums instead of ETags - don't see any big problem with it, but seems much more complicated to implement.

- Git commit hashes - impossible to compute efficiently due to the monstrous repo size.

Additionally, any changes in the structure of the CDN could be breaking for clients, and need to be thoroughly considered.

The GitHub CDN supports Accept-Encoding: gzip. Netlify does not, seemingly.

Addition: I've checked and the native ruby HTTP client handles gzip encoding automatically. So we're already enjoying it where available.
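Ruby's standard `Net::HTTP` adds an `Accept-Encoding` header that includes gzip by default, and it decodes the response transparently. It does not do this if the caller sets `Accept-Encoding` (or `Range`) itself. This sketch inspects that default without sending anything. Whether the CDN client goes through this code path is an assumption. Still, the `User-Agent: Ruby` and `Accept: */*` headers in the curl replay later in this thread match Net::HTTP's defaults.

```ruby
require 'net/http'
require 'uri'

# The Accept-Encoding value Net::HTTP adds to a GET request when the caller sets none.
def default_accept_encoding(url)
  Net::HTTP::Get.new(URI(url))['Accept-Encoding']
end

# True when Net::HTTP will transparently decompress the response body.
def transparent_decoding?(url, headers = nil)
  Net::HTTP::Get.new(URI(url), headers).decode_content
end
```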

> Addition: I've checked and the native ruby HTTP client handles gzip encoding automatically. So we're already enjoying it where available.

Awesome!

> Netlify does not, seemingly.

😞

> Additionally, any changes in the structure of the CDN could be breaking for clients, and need to be thoroughly considered.

Yeah, I'm just brainstorming here, because there has to be a better way than making thousands of network requests (even if the responses are empty).

@0x5e not sure what we can do as next steps here, does it always happen to you?

@dnkoutso Yes, it always happens, not only the first time.

This is one of the errors:

# CDN: cocoapods- Relative path couldn't be downloaded: Specs/3/0/9/MQTTClient/0.6.9/MQTTClient.podspec.json, error: 503 "Error"

# curl -H 'If-None-Match: W/"84ab3ca2462328cf9f971c82a3e627ae5aefa812"' -H 'Accept: */*' -H 'User-Agent: Ruby' -H 'Host: cdn.cocoapods.org' --compressed 'https://cdn.cocoapods.org/Specs/3/0/9/MQTTClient/0.6.9/MQTTClient.podspec.json'

HTTP/1.1 301 Moved Permanently

Cache-Control: public, max-age=0, must-revalidate

Content-Length: 125

Content-Type: text/plain; charset=utf-8

Date: Fri, 12 Jul 2019 02:31:36 GMT

Location: https://raw.githubusercontent.com/CocoaPods/Specs/master/Specs/3/0/9/MQTTClient/0.6.9/MQTTClient.podspec.json

Age: 1

Server: Netlify

X-NF-Request-ID: ecc0624a-eabb-439e-b0cc-1c4cf2da21ff-22917461

Connection: Keep-alive

Redirecting to https://raw.githubusercontent.com/CocoaPods/Specs/master/Specs/3/0/9/MQTTClient/0.6.9/MQTTClient.podspec.json
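The `301 Moved Permanently` here means that a redirected podspec request like this one costs two HTTP requests: one to `cdn.cocoapods.org` and one to `raw.githubusercontent.com`. Here is a sketch of redirect following with a hop limit. The `fetch` callable is a hypothetical injection point that lets the logic run without a network.

```ruby
require 'uri'

REDIRECT_CODES = [301, 302, 307, 308].freeze

# `fetch` is any callable: url -> [status, headers, body] (hypothetical, for illustration).
def fetch_following_redirects(url, fetch, max_redirects: 5)
  hops = []
  current = url
  (max_redirects + 1).times do
    status, headers, body = fetch.call(current)
    hops << [current, status]
    return { status: status, body: body, hops: hops } unless REDIRECT_CODES.include?(status)

    # Location may be relative, so resolve it against the URL that was just requested.
    current = URI.join(current, headers.fetch('Location')).to_s
  end
  raise "more than #{max_redirects} redirects starting at #{url}"
end
```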

It usually succeeds or fails within about 2 minutes, but it still makes 1,500 calls.

Sorry about that, I found that the 503 error comes from Charles:

HTTP/1.0 503 Error

Server: Charles

Cache-Control: must-revalidate,no-cache,no-store

Content-Type: text/html;charset=ISO-8859-1

Content-Length: 758

Proxy-Connection: Close

<html>

<head>

<title>Charles Error Report</title>

<style type="text/css">

body {

font-family: Arial,Helvetica,Sans-serif;

font-size: 12px;

color: #333333;

background-color: #ffffff;

}

h1 {

font-size: 24px;

font-weight: bold;

}

h2 {

font-size: 18px;

font-weight: bold;

}

</style>

</head>

<body>

<h1>Charles Error Report</h1>

<h2>Name lookup failed for remote host</h2>

<p>Charles failed to resolve the name of the remote host into an IP address. Check that the URL is correct.</p>

<p>The actual exception reported was:</p>

<pre>

java.net.UnknownHostException: raw.githubusercontent.com

</pre>

<p>

<i>Charles Proxy, <a href="http://www.charlesproxy.com/">http://www.charlesproxy.com/</a></i>

</p>

</body>

</html>

I'll close Charles and test again later.

Without Charles, the 503 error no longer happens for me. It always succeeds; my only remaining worry is availability on a bad network. No other issues. Thanks everyone!

Awesome! @igor-makarov anything we can do to circumvent this?

Seems a bit out of scope to try and handle particular HTTP errors, I think.
