Cms: "Lock wait timeout exceeded" errors in MySQL

Description

Recently, we're seeing a lot of ERROR 1205 (HY000): Lock wait timeout exceeded; try restarting transaction errors when larger queries are executed. We believe that some _SELECT_ queries with _JOIN_ or some _UPDATE_ queries on the queue are causing these problems. The former looks like this for example:

SELECT `elements`.`id`

FROM (SELECT `elements`.`id` AS `elementsId`, `elements_sites`.`id` AS `elementsSitesId`, `content`.`id` AS `contentId`

FROM `elements` `elements`

INNER JOIN `entries` `entries` ON `entries`.`id` = `elements`.`id`

INNER JOIN `elements_sites` `elements_sites` ON `elements_sites`.`elementId` = `elements`.`id`

INNER JOIN `content` `content` ON (`content`.`elementId` = `elements`.`id`) AND (`content`.`siteId` = `elements_sites`.`siteId`)

WHERE (`entries`.`sectionId`='7') AND (`elements_sites`.`siteId`='1') AND (`elements`.`archived`=0) AND (((`elements`.`enabled`=1) AND (`elements_sites`.`enabled`=1)) AND (`entries`.`postDate` <= '2020-04-01 09:19:00') AND ((`entries`.`expiryDate` IS NULL) OR (`entries`.`expiryDate` > '2020-04-01 09:19:00'))) AND (`elements`.`dateDeleted` IS NULL) AND (`elements_sites`.`enabled`=1) AND ((`elements`.`id` IN (SELECT `sources46`.`targetId`

FROM `relations` `sources46`

WHERE `sources46`.`sourceId`='5936')) OR (`elements`.`id` IN (SELECT `targets47`.`sourceId`

FROM `relations` `targets47`

WHERE `targets47`.`targetId`='5936'))) AND (`elements`.`draftId` IS NULL) AND (`elements`.`revisionId` IS NULL)

ORDER BY `entries`.`postDate` DESC) `subquery`

INNER JOIN `entries` `entries` ON `entries`.`id` = `subquery`.`elementsId`

INNER JOIN `elements` `elements` ON `elements`.`id` = `subquery`.`elementsId`

INNER JOIN `elements_sites` `elements_sites` ON `elements_sites`.`id` = `subquery`.`elementsSitesId`

INNER JOIN `content` `content` ON `content`.`id` = `subquery`.`contentId`

ORDER BY `entries`.`postDate` DESC

These errors also cause PHP issues which can only be solved by restarting PHP. I've raised the limits for InnoDB buffer size and timeout massively (see below), but to no avail. I was wondering if there are really some queries created by Craft CMS that cause such long InnoDB locks, or if some queries are malformatted?

Our server has 8 Dedicated CPU cores as well as 32 GB RAM. While these timeouts occur, I can't see problems with the server metrics.

innodb_buffer_pool_size=8G

innodb_buffer_pool_instances=2

innodb_log_file_size=512M

innodb_lock_wait_timeout=600

This might be also related to https://github.com/craftcms/cms/issues/5098?

Additional info

- Craft version: 3.4.10.1

- PHP version: 7.2.24

- Database driver & version: MySQL 8.0.19

All 20 comments

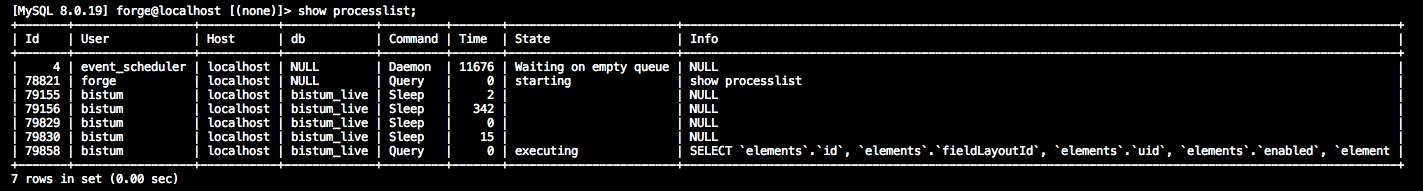

For what it's worth, I could just identify another query by calling show full processlist in the MySQL console while the error occured:

SELECT `elements`.`id`, `elements`.`fieldLayoutId`, `elements`.`uid`, `elements`.`enabled`, `elements`.`archived`, `elements`.`dateCreated`, `elements`.`dateUpdated`, `elements_sites`.`slug`, `elements_sites`.`siteId`, `elements_sites`.`uri`, `elements_sites`.`enabled` AS `enabledForSite`, `matrixblocks`.`fieldId`, `matrixblocks`.`ownerId`, `matrixblocks`.`typeId`, `matrixblocks`.`sortOrder`

FROM (SELECT `elements`.`id` AS `elementsId`, `elements_sites`.`id` AS `elementsSitesId`

FROM `elements` `elements`

INNER JOIN `matrixblocks` `matrixblocks` ON `matrixblocks`.`id` = `elements`.`id`

INNER JOIN `elements` `owners` ON `owners`.`id` = `matrixblocks`.`ownerId`

INNER JOIN `elements_sites` `elements_sites` ON `elements_sites`.`elementId` = `elements`.`id`

WHERE (`owners`.`draftId` IS NULL) AND (`owners`.`revisionId` IS NULL) AND (`elements`.`archived`=0) AND (`elements`.`enabled`=1) AND (`elements`.`dateDeleted` IS NULL) AND (`elements_sites`.`enabled`=1) AND ((`elements`.`id` IN (SELECT `sources1141`.`targetId`

FROM `relations` `sources1141`

WHERE `sources1141`.`sourceId`='470247')) OR (`elements`.`id` IN (SELECT `targets1141`.`sourceId`

FROM `relations` `targets1141`

WHERE `targets1141`.`targetId`='470247'))) AND (`elements`.`draftId` IS NULL) AND (`elements`.`revisionId` IS NULL)

ORDER BY `matrixblocks`.`sortOrder`) `subquery`

INNER JOIN `matrixblocks` `matrixblocks` ON `matrixblocks`.`id` = `subquery`.`elementsId`

INNER JOIN `elements` `elements` ON `elements`.`id` = `subquery`.`elementsId`

INNER JOIN `elements_sites` `elements_sites` ON `elements_sites`.`id` = `subquery`.`elementsSitesId`

ORDER BY `matrixblocks`.`sortOrder`

As soon as you kill this query, things are back to normal. These queries are definitely coming from Craft. What exactly they are doing, and why they are taking this long – I don't know. What I realized though is that when the query is executing along, everything is fine (see screenshot 1). Only when other queries (e.g. "UPDATE queue") are running simultaneously (see screenshot 2), it causes problems.

Is it possible that the timeout problem appears because the searchindex table is still running on the MyISAM engine and therefore can't "access" the InnoDB parameters? I'll change the engine of searchindex to InnoDB (we're running MySQL 8.0, so that's not a problem) and will check if the errors still appear.

@martinhellwagner that likely is the issue. not only will those parameters not apply to the table, but MyISAM does table locking and InnoDB does row-level locking, which greatly reduced the chance of a lock wait timeout occurring. going to go ahead and close this out as that will likely solve your issue, but comment back here if you still have problems.

@angrybrad

I'll monitor more closely tomorrow when there's content editor activity again on the site, but after making the switch I haven't been able to reproduce the error. So there's a good chance that this engine switch has solved the problem! 🙏

Just an idea, but maybe it would be good to include this information prominently in the docs as a reminder for everyone who has to deal with an ever-scaling install of Craft. Apologies is that already is the case, but at least for me it has been a long and winding road to get to the bottom of this. 😛

Actually, my high hopes were crushed just now because the same issue happened again – even though all our tables are running on InnoDB. Any other ideas how we could prevent this from happening? It basically makes using the Control Panel a risk to the whole site.

@angrybrad — wanted to mention you. Unfortunately, this didn't solve the problem, please see the comment of @martinhellwagner above ☝️

(I'm working as the project manager with Martin on this project, unfortunately this became our top-priority problem now as CP changes crash the site periodically)

Will moving the queue to Redis be of any help here? I seemed to only get these errors when a large SELECT query was followed by an UPDATE queue query. We're using Forge to provision our servers, where Redis seems to be baked in (https://forge.laravel.com/docs/1.0/resources/caches.html). I've prepared the following configuration in our config/app.php file:

'components' => [

// Initialize Redis component

'redis' => [

'class' => yii\redis\Connection::class,

'hostname' => 'localhost',

'port' => 6379,

'password' => 'null',

],

// Use queue with Redis

'queue' => [

'class' => yii\queue\redis\Queue::class,

'redis' => 'redis',

'channel' => 'queue',

],

],

@martinhellwagner you can try that... do you have a lot of long-running jobs in the queue when you get the timeout errors?

@angrybrad

Most likely yes. A lot of these queue jobs are deleting stale template caches, sometimes up to 20k of them, since we're actually having 67 multisites on our Craft install and 6k entries as well as 19k assets (hosted on S3). The other queue jobs are just updating search indexes and are very fast.

For the record, we're using two Forge daemons to listen to the queue jobs and process them. We also have the parameter runQueueAutomatically set to false in order to move everything queue-related to the PHP-CLI.

since we're actually having 67 multisites on our Craft install

😯

How many rows are in your template cache tables?

searchindex = 6.976.026 rows

templatecacheelements = 70.399 rows

templatecachequeries = 15.825 rows

templatecaches = 2.824 rows

If the "delete stale template cache" job is what's taking so long (and potentially holding up resources on the database), might be time to revisit your template caching strategy so Craft doesn't have to track as many elements to decide when to bust them?

Maybe you're using some {% cache %} tags on a page with lots of querystring variations in which case https://docs.craftcms.com/v3/dev/tags/cache.html#using-key and globally helps.

Or maybe you'd be better off using https://plugins.craftcms.com/cache-flag where you can more explicitly define when your caches get busted.

Or potentially setting https://docs.craftcms.com/v3/config/config-settings.html#cacheelementqueries to false and coming up with a strategy of when to bust caches based on your site's specific requirements (via plugin, cron job, etc.).

We're actually not sure if it's the SELECT query is holding up the UPDATE queue query, or vice versa. Revising the caching strategy is definitely a good idea (we're experimenting with HTML Caching at the moment), but we are wondering if something about this the above SQL queries or the following query is wrong (I'm not seeing any job or description columns being updated):

SQLSTATE[HY000]: General error: 1205 Lock wait timeout exceeded; try restarting transaction

The SQL being executed was: UPDATE `queue` SET `dateReserved`=NULL, `timeUpdated`=NULL, `progress`=0, `progressLabel`=NULL WHERE ((`channel`='queue') AND (`fail`=FALSE)) AND (`timeUpdated` < 1585764022 - `ttr`)

Also, is it possible the DB is missing some indexes on which the INNER JOINs are carried out?

@angrybrad

We've identified large pages (one with 130 Content Builder elements) to likely be the culprit. Is it possible that when trying to save such large pages, the resulting SELECT statement can't be handled by the MySQL any more (and is running into some MySQL/PHP timeout)?

Also, is it possible the DB is missing some indexes on which the INNER JOINs are carried out?

It's possible some indexes might help in your specific setup, but that's usually on a problem with databases that with elements/relations/etc. in the millions of rows range and it's usually when some site-specific custom fields are part of the where clause.

sometimes up to 20k of them

Honestly, I think this is the root of the issue, so anything you can do to 1) get the number of those jobs down and 2) make the ones that do have to run as light as possible so they finish quicker will help reduce the chance of a database timeout occurring.

Thanks for the clarification!

However, I'm not sure the "Delete Template Caches" queue jobs are the main source of the problem. More likely than that are the _huge_ SELECT queries carried out when trying to save pages/entries with an enormous amount of Content Builder elements.

With MySQL queue queries "out of the way" since the queue is now in Redis (and the searchindex table now running with InnoDB), I guess the best thing we can do is to reduce the size of the Content Builder pages/entries and try to optimize caching (by moving caching to Redis as well or by implementing HTML caching)?

We've found the problem: we had a lot of revisions, some of which seemed to be corrupt. After clearing the revisions table, we haven't been able to reproduce the error.

@martinhellwagner interesting... welp, glad you got to the bottom of it!

Thanks for helping! We now have to find out why revisions are that big of a problem. I guess the new utils/prune-revisions CLI command is a better method of dealing with old (possibly broken) revisions than simply clearing the revisions table? Even without any existing revisions (but with the possibility to create new ones), saving an entry takes a long time. At least we have the error sorted out, but this is the next issue we have to tackle.

Hey @angrybrad — I can move this if this isn't the right place for the topic but it seemed relevant.

I'm also experiencing similar issues (but I'm investigating using Redis for queues and pruning our revisions to see if it helps). I also wonder how much of it is attributable to the "Content Builder"-type of field like @martinhellwagner is using? We use the same field type as well on the site having issues.

The reason I bring this up is specific to the upcoming ☁️ Craft Cloud launch. When it becomes possible in Craft 4 to use nested Matrix fields, and Craft Cloud is running on Craft 4, and the complexity of these "Content Builder" fields grow — how will Craft Cloud performantly process these types of fields for users without exhibiting the same kinds of problems?

I ask—not because of any doubt in Craft Cloud!—but to see if there's something users of a self-hosted Craft can do to combat these problems.