Ckeditor5: Check if tests would work better if we had one entry point in Karma

Right now we tell Karma to load all our test files, which is something around ~200 entry points. This is a lot and makes Webpack compile editor 200 times – so when running npm t we load 200 entry points.

When we've been setting up Karma I noticed that some guys recommend to have just one entry point, generated on-the-fly. This entry point should simply import all the tests which should be loaded.

It's worth checking if this could help cause our 7000 tests can only be run reliably in Chrome and Safari (https://github.com/ckeditor/ckeditor5/issues/573).

All 23 comments

I generated on-the-fly an entry point for Karma and run these tests on Firefox, Safari and Chrome:

Firefox 56.0.0 (Mac OS X 10.12.0): Executed 6886 of 6944 (114 FAILED) (skipped 6) (5 mins 23.85 secs / 40.808 secs)

Safari 11.0.0 (Mac OS X 10.12.6): Executed 6499 of 6944 (144 FAILED) (skipped 6) (5 mins 36.213 secs / 31.416 secs)

Chrome 61.0.3163 (Mac OS X 10.12.6): Executed 6886 of 6944 (110 FAILED) (skipped 6) (5 mins 6.865 secs / NaN secs)

That does not surprise me. I'd ignore FF and Safari results (as they were not green previously) and focus on Chrome's. I'm 99% sure that most of those red tests are caused by the insufficient cleanup. It's very well visible when you run tests and see how the number of elements and some foo/bar texts grows in the browser window. These are all issues in tests.

Maybe, focus on some independent package like ckeditor5-utils and see the result there.

Also, could you compare the results (time) with the current npm t? Just to see if it slowed down or sped up. Please check real time (time npm t) and what Karma says (so the time took by tests). Altough... this we'll be able to on a subset of tests which pass, so first please check if you can make at least one package pass the tests.

I don't know why but today the tests pass. I prepared a comparison of execution time for three calls npm t for multiple entry points vs. single entry point.

Chrome 61.0.3163

Old style

It means the tests have been executed using multiple entry points.

| | I | II | III | Avg |

|-------|--------------------------------|--------------------------------|---------------------------------|-------------------------------------|

| Real | 2m32.347s | 2m13.899s | 2m54.569s | 2m33.605s |

| User | 2m0.952s | 2m2.636s | 2m10.554s | 2m4.714s |

| Sys | 0m13.782s | 0m15.470s | 0m17.240s | 0m15.497s |

| Karma | 1 min 6.806 secs / 17.118 secs | 1 min 7.767 secs / 19.215 secs | 1 min 18.224 secs / 21.152 secs | 1 min 10.932 secs / 19.161 secs |

New style

It means the tests have been executed using single entry point generated on-the-fly.

| | I | II | III | Avg |

|-------|---------------------------------|---------------------------------|---------------------------------|--------------------------------------|

| Real | 2m51.523s | 2m18.829s | 2m10.351s | 2m14.129s |

| User | 2m8.654s | 2m7.536s | 2m2.793s | 2m6.327 |

| Sys | 0m16.906s | 0m16.024s | 0m16.779s | 0m16.569s |

| Karma | 1 min 17.634 secs / 20.118 secs | 1 min 10.955 secs / 18.807 secs | 1 min 10.544 secs / 18.608 secs | 1 min 13.044 secs / 19.177 secs |

All the test pass when I called npm t. But if I wanted to execute the tests manually (npm t -- --server and open localhost:9876 in browser), 4 tests failed for both "styles":

- Bogus BR integration insertText space is inserted on the end of the line (paragraph)

- Bogus BR integration insertText space is inserted on the end of the line (bold)

- Spellchecking integration Plain text spellchecking (insertText) should replace with longer word (collapsed)

- Spellchecking integration "before each" hook for "should replace with longer word (non-collapsed)".

Maybe it's related to my browser extension, though I run the tests in anonymous mode.

Haha :) So since now the tests pass, could you compare results in Firefox too? You can expect around 10-20 tests to fail there in both modes – that's ok. It will let us understand if Chrome is not some kind of exception and the reason for this change was actually the problem with other browsers.

Maybe it's related to my browser extension, though I run the tests in anonymous mode.

That's related to focus – if browser loses it some methods start to be unstable.

Firefox 56.0.0

Old style

| | I | II | III | Avg |

|-------|--------------------------------|--------------------------------|---------------------------------|-------------------------------------|

| Real | 2m22.865s | 2m30.054s | 2m41.292s | 2m31.403s |

| User | 2m26.450s | 2m31.947s | 2m38.624s | 2m30.114s |

| Sys | 0m18.112s | 0m20.695s | 0m21.300s | 0m20.035s |

| Karma | 1 min 27.948 secs / 38.666 secs | 1 min 27.411 secs / 38.199 secs | 1 min 40.177 secs / 41.769 secs | 1 min 31.845 secs / 39.544 secs |

Executed 6940 of 6951 (8 FAILED) (skipped 6)

New style

| | I | II | III | Avg |

|-------|--------------------------------|--------------------------------|---------------------------------|-------------------------------------|

| Real | 2m34.257s | 2m28.418s | 3m14.194s | 2m45.623s |

| User | 2m35.910s | 2m32.347s | 2m47.330s | 2m38.529s |

| Sys | 0m20.432s | 0m19.941s | 0m24.318s | 0m21.563s |

| Karma | 1 min 38.213 secs / NaN secs | 1 min 31.199 secs / 39.568 secs | 1 min 54.731 secs / 43.659 secs | 1 min 41.381 secs / 41.6135 secs |

Executed 6940 of 6951 (10 FAILED) (skipped 6)

npm t -- --server

Executed 6940 of 6951 (8 FAILED) (skipped 6)

Tests which fail on Chrome, fails on Firefox as well.

Interesting. The avg time is so much worse due to the last run (3m14s). Interesting to see how much the time varies anyway.

One last bit, then – how's the memory consumption? Does this method fix the memory leak problem with Webpack? Does it reduce general memory consumption (I mean a combined Node+browser consumption)?

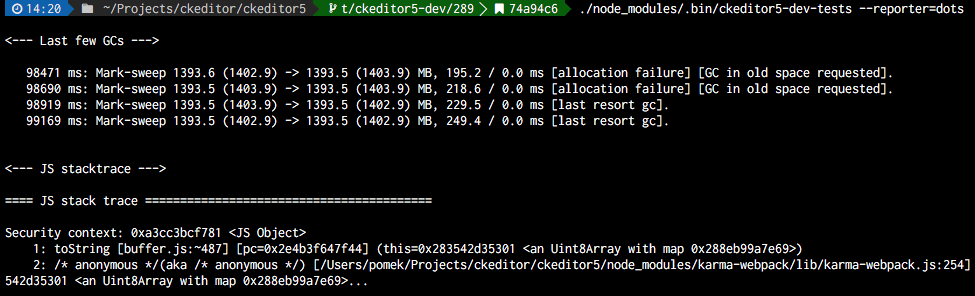

In both cases, ./node_modules/.bin/ckeditor5-dev-tests --reporter=dots fails because of:

If you expect more precise report, let me know.

That's funny... Or sad.

So, I hope one last check – how's memory consumption looking on the browser side?

Could you write me an instruction (step by step) how I can prepare the report?

I tried to use "Performance" tab in dev-tools (Chrome) but it's quite unreadable for me.

I meant OS's memory, so you can do this:

- Open Activity Monitor in MacOS. Go to the memory tab.

- Run tests.

- Try to catch how much memory did the browser consumed.

You could also use the Task Manager in Chrome, but I'm not sure if FF has one too. OS's memory will be fine.

Old style

A critical moment before starting tests execution:

- Node: 2.97 GB

- Chrome: 907 MB

During tests execution:

- Node: 2.44 GB

- Chrome: 1.41 GB

New style

A critical moment before starting tests execution:

- Node: ~3 GB

- Chrome: ~2 GB

During tests execution:

- Node: ~2 GB

- Chrome: ~1.5 GB

Let's say – it almost looks the same. With single entry file, Chrome needs more RAM before starting.

OK... So it seems that this method doesn't give us anything and may even look worse. So, I don't think we should do this change.

However, let's keep this ticket open. Please also link here the branch with the changes that you tested this on. Maybe, we'll learn something new with time.

Link to changes: https://github.com/ckeditor/ckeditor5-dev/compare/t/290.

Working on https://github.com/ckeditor/ckeditor5-ui/issues/144 I discovered that since the way styles are compiled has changed (stylesheet per–view) a massive duplication of styles occurred when running automated tests.

Previously, the styles were compiled "per–package" so as long as the tests were run for a single package, there was no problem with duplication. At this moment, because each component imports own styles and Karma compiles each test file in a separate thread, PostCSS importer cannot resolve duplicates.

Note that this is not only about performance etc.. Because styles duplicate, the UI gets seriously broken. It makes the debugging much harder or at least–less obious. This is exactly the same case as in https://github.com/ckeditor/ckeditor5/issues/420.

The single entry point approach works like a charm here (checked) so I'd welcome it in our project.

Bonus: With a single entry point + PostCSS you can run tests for multiple packages and still there's no duplication.

It sounds really good. Now we have a good reason to switch to single entry point.

I polished the solution and now it waits for a review – https://github.com/ckeditor/ckeditor5-dev/pull/304.

All files executed as single entry point does not pass. Let's revert changes from ckeditor/ckeditor5-dev#304 in order to have stable dev tool.

I will look at this once again.

Because we reverted the changes if you would like to test a single entry point, you need to check out to master-before-revert-304-t/290 branch.

Ok. The whole test engine is ok but few tests contain invalid mocks or don't clean after execution.

See https://github.com/ckeditor/ckeditor5-image/issues/159 and https://github.com/ckeditor/ckeditor5-engine/issues/1189.

I hope we will restore the _single entry point_ mechanism next week.

There is also a problem with our engine debug tools. Once they are enabled they will stay that way for the rest of the testing phase. This causes that this one test is failing. This is not a big deal (toJSON() method is missing from operation object literal). Bigger problem is that these tools are enabled all the time and we are not flooded with logs during tests because of a coincidence that last initialization of the utilities sets log and error methods to spies.

There is no more blocker, so I will create the PR one more time.