Charts: [nfs-client-provisioner] Error getting server version: Get https://10.96.0.1:443/version?timeout=32s: dial tcp 10.96.0.1:443: i/o timeout

Describe the bug

nfs-nfs-client-provisioner-5c9b5cc567-5mph9 0/1 Error 2 109s

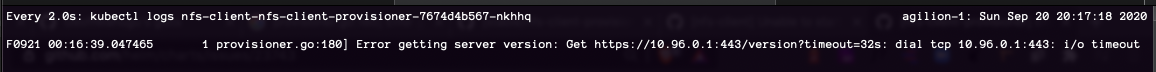

Error log:

kubectl logs nfs-nfs-client-provisioner-5c9b5cc567-5mph9

F0910 09:31:34.728344 1 provisioner.go:180] Error getting server version: Get https://10.96.0.1:443/version?timeout=32s: dial tcp 10.96.0.1:443: i/o timeout

But if I run this on the nodes themselves (tested on every node):

curl -k "https://10.96.0.1:443/version?timeout=32s"

it returns immediately:

{
  "major": "1",
  "minor": "18",
  "gitVersion": "v1.18.0",
  "gitCommit": "9e991415386e4cf155a24b1da15becaa390438d8",
  "gitTreeState": "clean",
  "buildDate": "2020-03-25T14:50:46Z",
  "goVersion": "go1.13.8",
  "compiler": "gc",
  "platform": "linux/amd64"
}
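The curl test above runs in the node's own network namespace, so it does not exercise the pod network path that the provisioner's request takes. A minimal sketch for repeating just the TCP part of the check from inside a pod is below; it assumes a container image with Python 3, and the helper name can_connect and the 5-second default timeout are illustrative choices, not anything from the chart.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 5.0) -> bool:
    """Return True if a TCP handshake with host:port completes within timeout seconds."""
    try:
        # create_connection resolves the address and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused connections, unreachable routes and socket.timeout (the rough
        # Python counterpart of Go's "dial tcp ...: i/o timeout") all land here.
        return False
```

Run inside a pod on an affected node, can_connect("10.96.0.1", 443) returning False while the same call on the node returns True would suggest the problem is in the pod network (CNI) rather than in the API server.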

Version of Helm and Kubernetes:

Helm version.BuildInfo{Version:"v3.2.4", GitCommit:"0ad800ef43d3b826f31a5ad8dfbb4fe05d143688", GitTreeState:"clean", GoVersion:"go1.13.12"}

Kubernetes v1.18.0

Which chart:

nfs-client-provisioner

What happened:

The NFS client provisioner pod does not run; it keeps restarting with the error above.

What you expected to happen:

The NFS client provisioner pod runs normally.

How to reproduce it (as minimally and precisely as possible):

It started after I deleted the PV/PVC and the directory on the NFS mount. I've tried deleting the chart with helm delete and reinstalling it, but the error persists.

Anything else we need to know:


I'm having the same issue; it came up after I updated my server's packages.

Current nfs-server version: vers=4.2

Hi @BigeYoung and @GeoffMahugu, did either of you find a solution to this? If so, could you explain the steps that worked for you?

I solved this by simply ignoring it: after waiting for ten minutes, it worked normally and stopped restarting.

Hello! I used to have exactly the same problem!

TL;DR :

The value of --pod-network-cidr (10.252.0.0/16 in my case) MUST BE EQUAL to "Network" in kube-flannel.yml (10.244.0.0/16 by default).

The longer explanation: when you initialize the Kubernetes control plane, you specify the pod network like this:

# kubeadm init --pod-network-cidr=10.252.0.0/16 ...

If you then install flannel, its YAML uses a TOTALLY DIFFERENT pod network setting by default, like this one:

https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

...
net-conf.json: |
  {
    "Network": "10.244.0.0/16",
    "Backend": {
      "Type": "vxlan"
    }
  }
...

The point is that flannel will eventually use 10.252.0.0/16 for the node subnets, but sometimes, somehow, 10.252.0.0/16 and 10.244.0.0/16 will conflict with each other.

You can easily check whether something is wrong with your flannel install:

# cat /run/flannel/subnet.env
FLANNEL_NETWORK=10.244.0.0/16
FLANNEL_SUBNET=10.252.0.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=true
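The same check can be scripted. A minimal sketch, assuming the KEY=VALUE layout of subnet.env shown above (parse_subnet_env and subnet_inside_network are hypothetical helper names):

```python
import ipaddress


def parse_subnet_env(text: str) -> dict:
    """Parse KEY=VALUE lines such as those in /run/flannel/subnet.env."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines, comments and anything that is not KEY=VALUE.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def subnet_inside_network(env: dict) -> bool:
    """Return True if FLANNEL_SUBNET lies within FLANNEL_NETWORK."""
    network = ipaddress.ip_network(env["FLANNEL_NETWORK"])
    # FLANNEL_SUBNET is written as an interface address (e.g. 10.252.0.1/24),
    # so ip_interface is used to obtain the network that contains it.
    subnet = ipaddress.ip_interface(env["FLANNEL_SUBNET"]).network
    return subnet.subnet_of(network)
```

With the output above, subnet_inside_network returns False, because 10.252.0.0/24 is not part of 10.244.0.0/16.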

FLANNEL_SUBNET (10.252.0.1/24) is not inside FLANNEL_NETWORK (10.244.0.0/16), yet connections via flannel still work. How is that possible?

Okay. The real trouble happens when you try to get nfs-client-provisioner working.

The pod goes into CrashLoopBackOff and the log shows:

provisioner.go:180] Error getting server version: Get https://10.96.0.1:443/version?timeout=32s: dial tcp 10.96.0.1:443: i/o timeout

The root of the problem is that --pod-network-cidr=10.252.0.0/16 IS NOT EQUAL to "Network": "10.244.0.0/16" in kube-flannel.yml.

THIS IS THE CASE.
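The root cause above comes down to comparing two CIDRs. A minimal sketch of that comparison, assuming you have the --pod-network-cidr value passed to kubeadm init and the net-conf.json text from the kube-flannel-cfg ConfigMap (flannel_network_matches is a hypothetical helper name):

```python
import ipaddress
import json


def flannel_network_matches(pod_network_cidr: str, net_conf_json: str) -> bool:
    """Compare kubeadm's --pod-network-cidr with "Network" in flannel's net-conf.json."""
    kubeadm_net = ipaddress.ip_network(pod_network_cidr)
    flannel_net = ipaddress.ip_network(json.loads(net_conf_json)["Network"])
    # Compare parsed network objects rather than raw strings.
    return kubeadm_net == flannel_net


# The default net-conf.json from kube-flannel.yml, as quoted in this comment.
DEFAULT_NET_CONF = """
{
  "Network": "10.244.0.0/16",
  "Backend": {
    "Type": "vxlan"
  }
}
"""
```

For the setup described here, flannel_network_matches("10.252.0.0/16", DEFAULT_NET_CONF) returns False.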

How to solve the problem:

1) Edit the flannel ConfigMap in Kubernetes, changing "Network" from 10.244.0.0/16 to 10.252.0.0/16:

# kubectl edit cm -n kube-system kube-flannel-cfg

2) Delete the flannel pods so they are recreated with the new config:

# kubectl delete pod -n kube-system -l app=flannel

3) Once the flannel pods are redeployed, the nfs-client-provisioner pods and PVCs should become OK instantly!
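Step 1 only changes the "Network" field inside the net-conf.json key of the kube-flannel-cfg ConfigMap. A minimal sketch of that edit applied to the JSON text itself (set_flannel_network is a hypothetical helper; kubectl edit makes the same change by hand):

```python
import json


def set_flannel_network(net_conf_json: str, new_network: str) -> str:
    """Return net-conf.json with "Network" replaced, keeping all other keys."""
    conf = json.loads(net_conf_json)
    conf["Network"] = new_network
    # Pretty-print so the result can be pasted back into the ConfigMap.
    return json.dumps(conf, indent=2)
```

For example, set_flannel_network(text, "10.252.0.0/16") leaves the "Backend" section untouched and only rewrites the pod network.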

Useful links:

1) https://www.programmersought.com/article/11255262090/

2) https://gravitational.com/blog/kubernetes-flannel-dashboard-bomb/#fnref:OPTIONAL-I-also

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Any further update will cause the issue/pull request to no longer be considered stale. Thank you for your contributions.

Encountered same issue with Kubernetes v1.20.2 (also v1.20.1):

# kubectl logs nfs-provisioner-554f54966d-k99dg

F0116 00:02:23.922169 1 provisioner.go:180] Error getting server version: Get https://10.96.0.1:443/version?timeout=32s: dial tcp 10.96.0.1:443: i/o timeout

I'm using kube-router and have set up the Service (10.96.0.0/16) and Pod (10.244.0.0/16) subnets correctly, so @aceqbaceq's solution won't work for me.

Any ideas?
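For reference, the API server gives the default kubernetes Service the first address of the Service range, which is why 10.96.0.1 appears in the error for a 10.96.0.0/16 range (or the kubeadm default 10.96.0.0/12). A minimal sketch that checks only the address arithmetic of the ranges in this comment (check_cidrs is a hypothetical helper; it cannot detect routing or CNI problems):

```python
import ipaddress


def check_cidrs(service_cidr: str, pod_cidr: str, api_service_ip: str = "10.96.0.1") -> list:
    """Return a list of problems in the Service/Pod CIDR layout (empty if none found)."""
    service_net = ipaddress.ip_network(service_cidr)
    pod_net = ipaddress.ip_network(pod_cidr)
    problems = []
    # Service and Pod ranges must not overlap.
    if service_net.overlaps(pod_net):
        problems.append(f"Service CIDR {service_net} overlaps Pod CIDR {pod_net}")
    # The default "kubernetes" Service gets the first address after the network address.
    expected_api_ip = service_net.network_address + 1
    if ipaddress.ip_address(api_service_ip) != expected_api_ip:
        problems.append(f"API Service IP {api_service_ip} is not {expected_api_ip}")
    return problems
```

check_cidrs("10.96.0.0/16", "10.244.0.0/16") returns an empty list, which suggests the problem in this setup lies outside the CIDR layout itself.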