Charts: [stable/prometheus-operator] not recognizing matchNames in *NamespaceSelector


Version of Helm and Kubernetes:

helm: v3.0.3, k8s: v1.15.8-gke.2

Which chart:

stable/prometheus-operator

What happened:

I got the error below when trying to install the chart via Terraform:

Error: unable to build kubernetes objects from release manifest: error validating "": error validating data: [ValidationError(Prometheus.spec.ruleNamespaceSelector): unknown field "matchNames" in com.coreos.monitoring.v1.Prometheus.spec.ruleNamespaceSelector, ValidationError(Prometheus.spec.serviceMonitorNamespaceSelector): unknown field "matchNames" in com.coreos.monitoring.v1.Prometheus.spec.serviceMonitorNamespaceSelector]

So I checked the code in the CRD:

https://github.com/helm/charts/blob/a97695fcdfbe61599ffd3a92f1ca672e5319ee54/stable/prometheus-operator/crds/crd-prometheus.yaml#L3247-L3290

https://github.com/helm/charts/blob/a97695fcdfbe61599ffd3a92f1ca672e5319ee54/stable/prometheus-operator/crds/crd-prometheus.yaml#L3890-L3934

https://github.com/helm/charts/blob/a97695fcdfbe61599ffd3a92f1ca672e5319ee54/stable/prometheus-operator/crds/crd-prometheus.yaml#L4136-L4169

It turns out there is no matchNames in the spec; instead there is matchLabels, which I think may be wrong according to https://github.com/coreos/prometheus-operator/blob/master/Documentation/api.md#namespaceselector
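For context, the *NamespaceSelector fields in the Prometheus CRD are standard Kubernetes label selectors (matchLabels / matchExpressions). None of them accepts namespace names directly. Kubernetes 1.21 and later is newer than the 1.15 cluster above. From that version on, every namespace automatically carries the immutable label kubernetes.io/metadata.name, so a matchNames-style selection can be approximated. The following is a sketch under that assumption; my-namespace is a placeholder:

```yaml
# values.yaml (sketch; assumes Kubernetes >= 1.21 so that every namespace
# has the kubernetes.io/metadata.name label set by the control plane)
prometheus:
  prometheusSpec:
    serviceMonitorNamespaceSelector:
      # Label-selector stand-in for "matchNames: [my-namespace]"
      matchExpressions:
        - key: kubernetes.io/metadata.name
          operator: In
          values:
            - my-namespace
```

On older clusters, the same effect needs two parts: a label you add to the namespace yourself, and a matchLabels selector on that label.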

What you expected to happen:

The chart should recognise matchNames in *NamespaceSelector and install properly.

How to reproduce it (as minimally and precisely as possible):

Pick any *NamespaceSelector, add matchNames to it, and try installing:

# values.yaml
prometheus:
  prometheusSpec:
    serviceMonitorNamespaceSelector:
      matchNames:
        - my-namespace

Anything else we need to know:

Even though I don't think it's related to Terraform or the Helm provider, I will leave the version info here.

I used Terraform 0.12.20 and Terraform Helm provider 1.0.0.


Version of Helm and Kubernetes:

helm: v3.0.3, k8s: v1.16.3

Error:

error validating "": error validating data: ValidationError(Prometheus.spec.storage.volumeClaimTemplate): unknown field "selector" in com.coreos.monitoring.v1.Prometheus.spec.storage.volumeClaimTemplate

I don't know if my issue is directly linked to this one.

I'm getting the same error when trying to use the any: true option that is also described in the documentation. It looks like either the documentation or the CRD is incorrect. How can one have it select all namespaces?

Error: UPGRADE FAILED: error validating "": error validating data: [ValidationError(Prometheus.spec.podMonitorNamespaceSelector): unknown field "any" in com.coreos.monitoring.v1.Prometheus.spec.podMonitorNamespaceSelector, ValidationError(Prometheus.spec.ruleNamespaceSelector): unknown field "any" in com.coreos.monitoring.v1.Prometheus.spec.ruleNamespaceSelector, ValidationError(Prometheus.spec.serviceMonitorNamespaceSelector): unknown field "any" in com.coreos.monitoring.v1.Prometheus.spec.serviceMonitorNamespaceSelector]

Same here with Helm v3.1.1 and the latest chart (8.10.0), using any: true:

error validating data: [ValidationError(Prometheus.spec.ruleNamespaceSelector): unknown field "any" in com.coreos.monitoring.v1.Prometheus.spec.ruleNamespaceSelector, ValidationError(Prometheus.spec.serviceMonitorNamespaceSelector): unknown field "any" in com.coreos.monitoring.v1.Prometheus.spec.serviceMonitorNamespaceSelector]

Same here with Helm v3.0.0

Also getting this error with Helm v3:

prometheus:
  prometheusSpec:
    ruleNamespaceSelector:
      any: true
    serviceMonitorNamespaceSelector:
      any: true

error validating data: [ValidationError(Prometheus.spec.ruleNamespaceSelector): unknown field "any" in com.coreos.monitoring.v1.Prometheus.spec.ruleNamespaceSelector, ValidationError(Prometheus.spec.serviceMonitorNamespaceSelector): unknown field "any" in com.coreos.monitoring.v1.Prometheus.spec.serviceMonitorNamespaceSelector]

Edit: Removing the any fields allows the deploy to continue. Looking at the CRD, the any field has been removed as well; it seems like Prometheus might be watching all namespaces by default now? I can see that existing service monitors are still active with the fields removed.

@servo1x The comments say it would only watch its own namespace by default.


I'm also having the same problem. How do I get Prometheus to watch all namespaces?
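To illustrate the default mentioned above: in the Prometheus custom resource, leaving a *NamespaceSelector unset means only the Prometheus object's own namespace is searched. An empty selector {} matches every namespace. Below is a minimal sketch of a Prometheus resource applied directly, not through the chart. The name some-prometheus and the namespace monitoring are placeholders:

```yaml
# Sketch: Prometheus resource applied directly (not via the Helm chart).
# "some-prometheus" and "monitoring" are placeholder names.
apiVersion: monitoring.coreos.com/v1
kind: Prometheus
metadata:
  name: some-prometheus
  namespace: monitoring
spec:
  # An empty selector ({}) matches everything. Omitting a *NamespaceSelector
  # field instead limits discovery to the "monitoring" namespace.
  serviceMonitorSelector: {}
  serviceMonitorNamespaceSelector: {}
  podMonitorSelector: {}
  podMonitorNamespaceSelector: {}
  ruleSelector: {}
  ruleNamespaceSelector: {}
```

Prometheus also needs RBAC permissions to discover targets in those other namespaces, typically a ClusterRole bound to its service account. That part is not shown here.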

The same thing happened to me.

So I've set serviceMonitorSelectorNilUsesHelmValues: false, but I still get storage metrics only for the monitoring namespace. Am I doing something wrong? Is this the same issue or a different one?

OK, one addition: it looks like I am able to monitor the PVCs in the other namespaces; however, the query kube_persistentvolume_capacity_bytes returns only the PVs in the monitoring namespace. Any idea?

Hi there!

I have a similar issue

prometheus:
  prometheusSpec:
    ruleNamespaceSelector:
      any: "true"
    serviceMonitorNamespaceSelector:
      any: "true"

helm version

version.BuildInfo{Version:"v3.2.0", GitCommit:"e11b7ce3b12db2941e90399e874513fbd24bcb71", GitTreeState:"clean", GoVersion:"go1.13.10"}

helm upgrade --install monitoring --namespace monitoring -f /opt/ansible/configs/infra/monitoring/resources/helm_values/helm_values.yaml stable/prometheus-operator --version 8.13.2 --set prometheusOperator.createCustomResource=false

Error: UPGRADE FAILED: error validating "": error validating data: [ValidationError(Prometheus.spec.ruleNamespaceSelector): unknown field "any" in com.coreos.monitoring.v1.Prometheus.spec.ruleNamespaceSelector, ValidationError(Prometheus.spec.serviceMonitorNamespaceSelector): unknown field "any" in com.coreos.monitoring.v1.Prometheus.spec.serviceMonitorNamespaceSelector]

This solution works for me:

prometheus:
  prometheusSpec:
    ruleNamespaceSelector:
      matchLabels:
        prometheus: somelabel
    serviceMonitorNamespaceSelector:
      matchLabels:
        prometheus: somelabel

Then create a PrometheusRule:

apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  labels:
    role: alert-rules
    app: strimzi
    prometheus: somelabel
  name: prometheus-k8s-rules
....

prometheus-operator has been deployed, but it doesn't see any ServiceMonitor or PrometheusRule in any namespace in the cluster.

Update: I finally resolved the issue by adding the label to my namespace:

kubectl label ns cassandra prometheus=somelabel
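If namespaces are managed as manifests rather than labelled by hand, the same label can be declared on the Namespace object itself. Here is a minimal sketch using the cassandra namespace and the prometheus: somelabel label from this comment:

```yaml
# Declarative equivalent of: kubectl label ns cassandra prometheus=somelabel
apiVersion: v1
kind: Namespace
metadata:
  name: cassandra
  labels:
    # Must match the matchLabels used in ruleNamespaceSelector and
    # serviceMonitorNamespaceSelector in the values above
    prometheus: somelabel
```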

I also get the error:

error validating data: ValidationError(Prometheus.spec.serviceMonitorNamespaceSelector): unknown field "any" in com.coreos.monitoring.v1.Prometheus.spec.serviceMonitorNamespaceSelector;

For me, this works as an equivalent of any: true:

apiVersion: monitoring.coreos.com/v1
kind: Prometheus
metadata:
  name: some-prometheus
spec:
  serviceMonitorSelector:
    matchLabels:
      prometheus: some
  serviceMonitorNamespaceSelector: {}

CHART: prometheus-operator-8.13.0

APP VERSION: 0.38.1

@delfer if you just want to access all namespaces, try setting: serviceMonitorSelectorNilUsesHelmValues: false
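Below is a sketch of the chart values this suggestion refers to, assuming the prometheus.prometheusSpec layout used by stable/prometheus-operator and kube-prometheus-stack. By default the chart injects a selector on the label release: <release-name>, and these flags switch that off. They only relax the label selectors for ServiceMonitors and related objects. Which namespaces are searched is still governed by the matching *NamespaceSelector. How an empty selector value is rendered has also varied between chart versions, so it is worth checking the rendered Prometheus resource (for example with helm template):

```yaml
# values.yaml (sketch)
prometheus:
  prometheusSpec:
    # When false, the chart does not add its default "release: <release-name>"
    # label selector, so objects are selected regardless of that label.
    serviceMonitorSelectorNilUsesHelmValues: false
    podMonitorSelectorNilUsesHelmValues: false
    ruleSelectorNilUsesHelmValues: false
```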

@tux-00 did you get your error resolved?

k8s version: v1.16.7

helm version: v3.1.2

I also get: error validating data: ValidationError(Prometheus.spec.storage.volumeClaimTemplate): unknown field "selector" in com.coreos.monitoring.v1.Prometheus.spec.storage.volumeClaimTemplate

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Any further update will cause the issue/pull request to no longer be considered stale. Thank you for your contributions.

This issue is being automatically closed due to inactivity.

Storage still has a problem on chart 9.1.1:

helm version: v3.2.4

Error: unable to build kubernetes objects from release manifest: error validating "": error validating data: ValidationError(Prometheus.spec.storage.volumeClaimTemplate): unknown field "selector" in com.coreos.monitoring.v1.Prometheus.spec.storage.volumeClaimTemplate

Same issue here:

Error: UPGRADE FAILED: error validating "": error validating data: [ValidationError(Prometheus.spec.ruleNamespaceSelector): unknown field "any" in com.coreos.monitoring.v1.Prometheus.spec.ruleNamespaceSelector, ValidationError(Prometheus.spec.serviceMonitorNamespaceSelector): unknown field "any" in com.coreos.monitoring.v1.Prometheus.spec.serviceMonitorNamespaceSelector]

matchNames is also not recognized with:

helm 3.3.4

kube-prometheus-stack-9.4.4

I also have the same problem. I have been running different versions of the chart with different versions of Helm and Kubernetes, and it feels like this problem has been around since before I started using this chart. I usually end up deleting and recreating the release, losing metrics for a short period, because I don't understand what is going on, I need it to work, and I don't have much time to spend on a proper resolution 😞

In case this helps anyone out, I've got a workaround that seems to work for the issue of the any: true namespace selector being deprecated.

Because this issue has ended up talking about a few different problems, I just want to make it clear which particular problem I'm talking about first.

Currently we rely on the following configuration so that we can deploy rules to any namespace, and Prometheus will automatically pick them up:

serviceMonitorSelectorNilUsesHelmValues: false
ruleNamespaceSelector:
  any: true

After updating to the latest version of the kube-prometheus-stack chart (which used to be the prometheus-operator chart), we started getting the following error when doing a helm upgrade:

Error: UPGRADE FAILED: error validating "": error validating data: ValidationError(Prometheus.spec.ruleNamespaceSelector): unknown field "any" in com.coreos.monitoring.v1.Prometheus.spec.ruleNamespaceSelector

The reason for this error is that the ruleNamespaceSelector field in the Prometheus CRD doesn't support any: true anymore as a valid option. Unfortunately there doesn't seem to be an equivalent option anymore.

TL;DR: what I ended up doing was using the matchExpressions property instead and matching on a non-existent label:

serviceMonitorSelectorNilUsesHelmValues: false
ruleNamespaceSelector:
  matchExpressions:
    - key: "non-existent-label"
      operator: "DoesNotExist"

This matches any namespace that doesn't have a label called non-existent-label, which causes it to match every namespace in our clusters because we don't have a label called non-existent-label on any of our namespaces.
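Kubernetes 1.21 and later is newer than the versions reported in this thread. On those versions, a variant of the same trick can require a label that every namespace has, instead of relying on a label being absent. The control plane sets the immutable kubernetes.io/metadata.name label on all namespaces. Here is a sketch, assuming the chart's prometheus.prometheusSpec values layout:

```yaml
# values.yaml (sketch; assumes Kubernetes >= 1.21)
prometheus:
  prometheusSpec:
    ruleNamespaceSelector:
      matchExpressions:
        # Every namespace carries this label, so this selects all namespaces
        - key: kubernetes.io/metadata.name
          operator: Exists
```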

@yudar1024 @vfiset does that help you at all?

Also, just a warning: the documentation at https://coreos.com/operators/prometheus/docs/latest/api.html#namespaceselector is completely out of date. I suspect that's where the confusion about matchNames has come from. The safest thing seems to be to read the CRD definitions here, even though they're a bit tricky to understand.
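For comparison, matchNames and any do exist in the Prometheus Operator API, but on a different type. They belong to the NamespaceSelector type used by a ServiceMonitor's (or PodMonitor's) spec.namespaceSelector. That field controls which namespaces the monitor discovers its targets' Endpoints in. They are not valid on the Prometheus *NamespaceSelector fields, which are plain label selectors. Here is a sketch of a ServiceMonitor using matchNames; the names, labels, and port are hypothetical:

```yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: example-app            # hypothetical name
  namespace: monitoring
  labels:
    release: monitoring        # hypothetical; must match the Prometheus serviceMonitorSelector
spec:
  # NamespaceSelector type: this is where matchNames (or any: true) is valid
  namespaceSelector:
    matchNames:
      - my-namespace
  selector:
    matchLabels:
      app: example-app         # hypothetical Service label
  endpoints:
    - port: http-metrics       # hypothetical named Service port
```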

Thanks for the feedback @adam-resdiary. For me, the problem is not the rules namespace selector but rather:

Error: error validating "": error validating data: ValidationError(Prometheus.spec.storage.volumeClaimTemplate): unknown field "selector" in com.coreos.monitoring.v1.Prometheus.spec.storage.volumeClaimTemplate

Your comment might help me, though; I need to dig deeper into the CRD to understand what is really going on here.

I'll report back

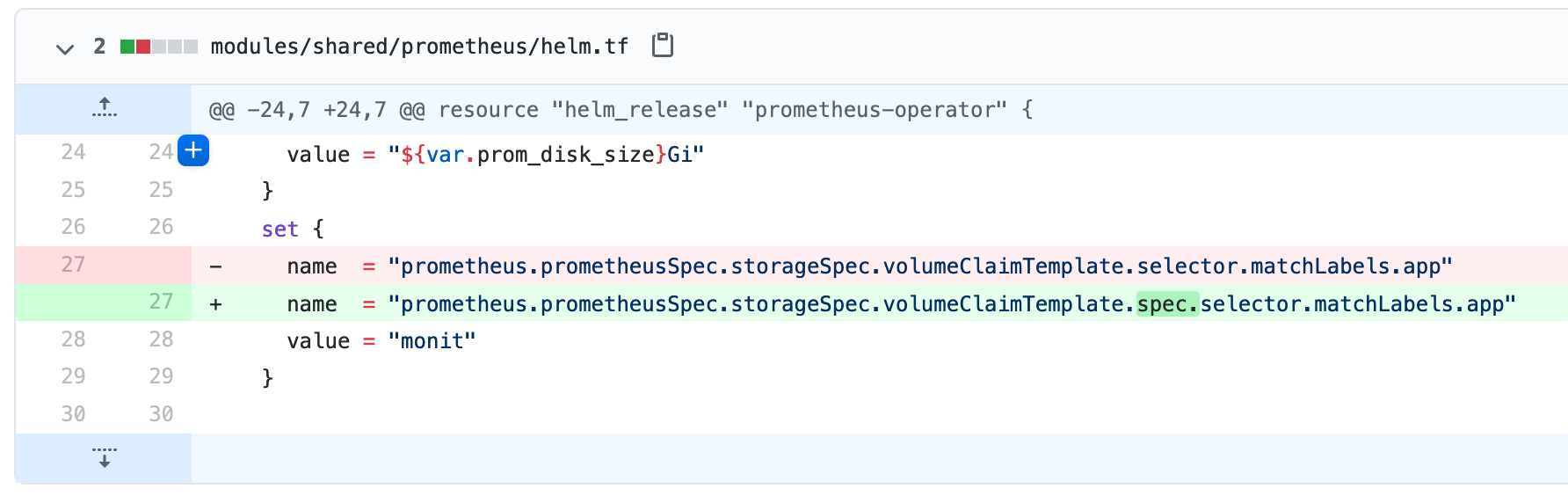

Turns out it was a completely unrelated terraform configuration issue in my helm release 🤦

I believe this issue needs to be re-opened, because the CRDs have not been properly fixed.

An attempt at using any cannot even be deployed:

prometheus:
  prometheusSpec:
    ruleNamespaceSelector:
      any: true
    serviceMonitorNamespaceSelector:
      any: true
    podMonitorNamespaceSelector:
      any: true

Error: UPGRADE FAILED: error validating "": error validating data: [ValidationError(Prometheus.spec.podMonitorNamespaceSelector): unknown field "any" in com.coreos.monitoring.v1.Prometheus.spec.podMonitorNamespaceSelector, ValidationError(Prometheus.spec.ruleNamespaceSelector): unknown field "any" in com.coreos.monitoring.v1.Prometheus.spec.ruleNamespaceSelector, ValidationError(Prometheus.spec.serviceMonitorNamespaceSelector): unknown field "any" in com.coreos.monitoring.v1.Prometheus.spec.serviceMonitorNamespaceSelector]

Attempt at using matchLabels:

prometheus:
  ruleSelectorNilUsesHelmValues: false
  ruleNamespaceSelector:
    matchLabels:
      prometheus: monitoring
  serviceMonitorSelectorNilUsesHelmValues: false
  serviceMonitorNamespaceSelector:
    matchLabels:
      prometheus: monitoring
  podMonitorSelectorNilUsesHelmValues: false
  podMonitorNamespaceSelector:
    matchLabels:
      prometheus: monitoring
  probeSelectorNilUsesHelmValues: false
  probeNamespaceSelector:
    matchLabels:
      prometheus: monitoring

Works perfectly for all namespaces labeled with prometheus=monitoring.

Using kube-prometheus-stack 11.0.4, Helm 3.1.3.

@gw0 this is the wrong repository; the chart has moved to prometheus-community/kube-prometheus-stack.

> Turns out it was a completely unrelated terraform configuration issue in my helm release

Can you elaborate? I'm having a similar issue.

@GuyCarmy In my case this was it 🤦

@vfiset thanks, that was helpful! I had the same issue.

I think it started with the k8s upgrade from 1.15 to 1.16.

And I'm also pretty sure it's not related to the OP's issue, but since we are all coming here because someone hijacked the issue, it's good.

@GuyCarmy clearly not the OP's issue. I think we hijacked the thread because:

- We are lazy

- But we still want to help our fellow developers

Oh, I got a similar error:

$ helm install prometheus-operator prometheus-community/kube-prometheus-stack -n monitoring

Error: unable to build kubernetes objects from release manifest: error validating "": error validating data: [ValidationError(Alertmanager.spec): unknown field "alertmanagerConfigNamespaceSelector" in com.coreos.monitoring.v1.Alertmanager.spec, ValidationError(Alertmanager.spec): unknown field "alertmanagerConfigSelector" in com.coreos.monitoring.v1.Alertmanager.spec]

$ helm version

version.BuildInfo{Version:"v3.0.3", GitCommit:"ac925eb7279f4a6955df663a0128044a8a6b7593", GitTreeState:"clean", GoVersion:"go1.13.6"}

$ kubectl version

Client Version: version.Info{Major:"1", Minor:"19", GitVersion:"v1.19.3", GitCommit:"1e11e4a2108024935ecfcb2912226cedeafd99df", GitTreeState:"clean", BuildDate:"2020-10-14T12:50:19Z", GoVersion:"go1.15.2", Compiler:"gc", Platform:"windows/amd64"}

Server Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.10", GitCommit:"62876fc6d93e891aa7fbe19771e6a6c03773b0f7", GitTreeState:"clean", BuildDate:"2020-10-16T20:43:34Z", GoVersion:"go1.13.15", Compiler:"gc", Platform:"linux/amd64"}

Can anyone give me some suggestions? (facepalm, crying)

@yanhuibin315 try deleting the old CRDs:

kubectl delete crd alertmanagerconfigs.monitoring.coreos.com
kubectl delete crd alertmanagers.monitoring.coreos.com
kubectl delete crd podmonitors.monitoring.coreos.com
kubectl delete crd probes.monitoring.coreos.com
kubectl delete crd prometheuses.monitoring.coreos.com
kubectl delete crd prometheusrules.monitoring.coreos.com
kubectl delete crd servicemonitors.monitoring.coreos.com
kubectl delete crd thanosrulers.monitoring.coreos.com


I got the same problems too.


Thanks @freemanlutsk, your suggestion solved my problems.

I have the same problem too.

Deleting CRDs, especially the ServiceMonitor CRD, is not a good solution, because we will lose the ServiceMonitors created by our applications when we upgrade and delete the old ServiceMonitor CRD.

I think this is a bug in the upgrade; it would be good to release a patch version for this.


@KR411-prog you're right, but simply back up your ServiceMonitors on your workstation before deleting, and it becomes less of a problem!

kubectl get servicemonitor -A -o yaml > /tmp/svcmonit.yaml
