Charts: [stable/prometheus] unable to install chart on K8S 1.16.0

Describe the bug

I'm trying to install Prometheus on a cluster of Raspberry Pis. I am using --set to specify images that conform to the ARM architecture but it's not even getting that far. It seems to fail on creating some of the most basic resources because they're not in the correct API version.

Version of Helm and Kubernetes:

> kubectl version

Client Version: version.Info{Major:"1", Minor:"16", GitVersion:"v1.16.3", GitCommit:"b3cbbae08ec52a7fc73d334838e18d17e8512749", GitTreeState:"clean", BuildDate:"2019-11-18T14:56:51Z", GoVersion:"go1.12.13", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"16", GitVersion:"v1.16.0", GitCommit:"2bd9643cee5b3b3a5ecbd3af49d09018f0773c77", GitTreeState:"clean", BuildDate:"2019-09-18T14:27:17Z", GoVersion:"go1.12.9", Compiler:"gc", Platform:"linux/arm"}

> helm version

version.BuildInfo{Version:"v3.0.0", GitCommit:"e29ce2a54e96cd02ccfce88bee4f58bb6e2a28b6", GitTreeState:"clean", GoVersion:"go1.13.4"}

Which chart:

stable/prometheus

What happened:

I run this command:

helm install prometheus stable/prometheus --set alertmanager.image.repository=prom/alertmanager-linux-armv7 --set nodeExporter.image.repository=prom/node-exporter-linux-armv7 --set server.image.repository=prom/prometheus-linux-armv7 --set pushgateway.image.repository=prom/pushgateway-linux-armv7

And then I almost immediately see this error message:

Error: unable to build kubernetes objects from release manifest: [unable to recognize "": no matches for kind "DaemonSet" in version "extensions/v1beta1", unable to recognize "": no matches for kind "Deployment" in version "extensions/v1beta1"]
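The error above names two kinds that Kubernetes 1.16 no longer serves under extensions/v1beta1. As a rough way to spot such objects before installing, here is a minimal sketch that scans rendered manifest text (for example, the output of `helm template`) for those pairs. The function name, the lookup table, and the sample manifest are hypothetical and only illustrate the idea; the table is a small subset, not a complete list of removed APIs.

```python
import re

# Kinds that Kubernetes 1.16 no longer serves under extensions/v1beta1
# (illustrative subset; the replacement for all three is apps/v1).
REMOVED_IN_1_16 = {
    ("extensions/v1beta1", "DaemonSet"): "apps/v1",
    ("extensions/v1beta1", "Deployment"): "apps/v1",
    ("extensions/v1beta1", "ReplicaSet"): "apps/v1",
}


def find_removed_apis(manifest_text):
    """Return (apiVersion, kind, replacement) for each offending document."""
    hits = []
    # Rendered manifests separate documents with a line containing only '---'.
    for doc in re.split(r"(?m)^---\s*$", manifest_text):
        api = re.search(r"(?m)^apiVersion:\s*(\S+)", doc)
        kind = re.search(r"(?m)^kind:\s*(\S+)", doc)
        if not (api and kind):
            continue
        key = (api.group(1), kind.group(1))
        if key in REMOVED_IN_1_16:
            hits.append((key[0], key[1], REMOVED_IN_1_16[key]))
    return hits


# Hypothetical manifest, standing in for real chart output.
SAMPLE = """\
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: example-node-exporter
---
apiVersion: v1
kind: Service
metadata:
  name: example-server
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: example-server
"""

for api_version, kind, replacement in find_removed_apis(SAMPLE):
    print(f"{kind}: {api_version} -> {replacement}")
```

The sketch only reads top-level, unquoted `apiVersion` and `kind` lines, so it is a quick check rather than a real YAML parser.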

What you expected to happen:

I see in the chart code that it's doing some semver comparisons to determine which API version to use. As you can see my cluster is running Kubernetes 1.16.0 so it _should_ be outputting apps/v1 for several of these. However as you can see from the error message it's instead outputting extensions/v1beta1 which is incorrect. I'm not sure how semantic version comparisons work in Helm but clearly something is off. Unfortunately there's no way to override these defined prometheus.*.apiVersion variables with --set so I'm stuck -- this chart is totally unusable at the moment.

Anything else we need to know:

Since it's only the DaemonSet and the Deployment objects that it's complaining about, the problem seems to lie with this logic:

{{- if semverCompare "<1.9-0" .Capabilities.KubeVersion.GitVersion -}}

{{- print "extensions/v1beta1" -}}

{{- else if semverCompare "^1.9-0" .Capabilities.KubeVersion.GitVersion -}}

{{- print "apps/v1" -}}

{{- end -}}

I wonder if the semverCompare is brittle enough that it erroneously thinks 1.16.0 is less than 1.9-0, because 1.1 is less than 1.9? Just a hypothesis...
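To test that hypothesis on paper: semantic versioning compares each numeric component as an integer, so 16 is greater than 9, whereas a plain string comparison would get it wrong. The sketch below models the two template branches under that assumption. It is not Helm's implementation, it ignores pre-release suffixes such as the `-0` in the constraints, and the function names are hypothetical.

```python
import re


def parse_version(git_version):
    """Parse 'v1.16.0' (or '1.9') into a tuple of integers: (1, 16, 0)."""
    match = re.match(r"v?(\d+)\.(\d+)(?:\.(\d+))?", git_version)
    if match is None:
        raise ValueError(f"not a version: {git_version!r}")
    major, minor, patch = match.groups()
    return (int(major), int(minor), int(patch or 0))


def workload_api_version(git_version):
    """Mirror the two template branches using numeric comparison."""
    version = parse_version(git_version)
    if version < (1, 9, 0):      # "<1.9-0"
        return "extensions/v1beta1"
    if version < (2, 0, 0):      # "^1.9-0" stays within major version 1
        return "apps/v1"
    return ""                    # neither branch matches: nothing is printed


print(workload_api_version("v1.16.0"))  # numeric comparison: 16 > 9
print(workload_api_version("v1.8.4"))
print("1.16" < "1.9")                   # string comparison orders them the other way
```

If the comparison in the chart behaves numerically like this, a 1.16.0 server would land in the apps/v1 branch, which would suggest the cause lies somewhere other than the comparison itself.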

All 12 comments

I'm seeing similar errors with a fresh install of the chart on 1.16.3, except that I get past the install and things spin up and run. Let me know if this is too far off and I'll open a new issue!

In the kube-state-metrics pod it's logging this error constantly:

E1204 19:31:09.753872 1 reflector.go:126] k8s.io/kube-state-metrics/internal/collector/builder.go:482: Failed to list *v1beta1.ReplicaSet: the server could not find the requested resource

Kubernetes

Client Version: version.Info{Major:"1", Minor:"16", GitVersion:"v1.16.2", GitCommit:"c97fe5036ef3df2967d086711e6c0c405941e14b", GitTreeState:"clean", BuildDate:"2019-10-15T23:41:55Z", GoVersion:"go1.12.10", Compiler:"gc", Platform:"darwin/amd64"}

Server Version: version.Info{Major:"1", Minor:"16", GitVersion:"v1.16.3", GitCommit:"b3cbbae08ec52a7fc73d334838e18d17e8512749", GitTreeState:"clean", BuildDate:"2019-11-13T11:13:49Z", GoVersion:"go1.12.12", Compiler:"gc", Platform:"linux/amd64"}

Helm

version.BuildInfo{Version:"v3.0.0", GitCommit:"e29ce2a54e96cd02ccfce88bee4f58bb6e2a28b6", GitTreeState:"clean", GoVersion:"go1.13.4"}

You should bump your kube-state-metrics to v1.8.0; that fixes this issue.

Bumping kube-state-metrics to v1.8.0 got rid of the *v1beta1 errors, but then produced these:

E1204 20:25:16.157383 1 reflector.go:125] k8s.io/kube-state-metrics/internal/store/builder.go:314: Failed to list *v1.StorageClass: storageclasses.storage.k8s.io is forbidden: User "system:serviceaccount:monitoring:prometheus-kube-state-metrics" cannot list resource "storageclasses" in API group "storage.k8s.io" at the cluster scope

E1204 20:25:16.163943 1 reflector.go:125] k8s.io/kube-state-metrics/internal/store/builder.go:314: Failed to list *v1.ReplicaSet: replicasets.apps is forbidden: User "system:serviceaccount:monitoring:prometheus-kube-state-metrics" cannot list resource "replicasets" in API group "apps" at the cluster scope

I worked around this by disabling kube-state-metrics install from the prometheus chart and installing it separately from its own chart.

I have the exact same issue.

The permissions defined in the clusterrole for kube-state-metrics are only in sync with kube-state-metrics 1.6.0, not with any other version.

Setting another version (anything from 1.7.2 to 1.9.0) fixes the compatibility issues in kube-state-metrics itself, but not in the stable/prometheus chart, which still produces the permission errors above.

@mccrackend's solution works fine, but it would be great to have a way to keep the clusterrole in sync with the kube-state-metrics image version in use.
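One way to see which permissions are missing is to collect the denied resources from the "forbidden" errors quoted above and group them by API group. This is a minimal sketch that assumes the log lines keep the wording shown in this thread; the function name and regular expression are hypothetical, and the output is only a starting point for working out which rules the clusterrole lacks.

```python
import re

# Matches the tail of the "forbidden" errors quoted in this thread.
FORBIDDEN = re.compile(
    r'cannot (?P<verb>\w+) resource "(?P<resource>[^"]+)" '
    r'in API group "(?P<group>[^"]*)"'
)


def missing_rules(log_text):
    """Map each API group to the sorted list of resources that were denied."""
    rules = {}
    for match in FORBIDDEN.finditer(log_text):
        rules.setdefault(match.group("group"), set()).add(match.group("resource"))
    return {group: sorted(resources) for group, resources in rules.items()}


# The two error lines reported after bumping kube-state-metrics to v1.8.0.
LOG = '''
E1204 20:25:16.157383 1 reflector.go:125] k8s.io/kube-state-metrics/internal/store/builder.go:314: Failed to list *v1.StorageClass: storageclasses.storage.k8s.io is forbidden: User "system:serviceaccount:monitoring:prometheus-kube-state-metrics" cannot list resource "storageclasses" in API group "storage.k8s.io" at the cluster scope
E1204 20:25:16.163943 1 reflector.go:125] k8s.io/kube-state-metrics/internal/store/builder.go:314: Failed to list *v1.ReplicaSet: replicasets.apps is forbidden: User "system:serviceaccount:monitoring:prometheus-kube-state-metrics" cannot list resource "replicasets" in API group "apps" at the cluster scope
'''

for group, resources in missing_rules(LOG).items():
    print(group, resources)
```

Each group and resource pair printed here corresponds to a list permission the service account was denied at the cluster scope.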

For me, access controls are missing as well. The errors are below, along with crash info.

I0109 21:11:40.819874 1 main.go:86] Using default collectors

I0109 21:11:40.819961 1 main.go:98] Using all namespace

I0109 21:11:40.819973 1 main.go:139] metric white-blacklisting: blacklisting the following items:

W0109 21:11:40.819996 1 client_config.go:543] Neither --kubeconfig nor --master was specified. Using the inClusterConfig. This might not work.

I0109 21:11:40.821386 1 main.go:184] Testing communication with server

I0109 21:11:40.832461 1 main.go:189] Running with Kubernetes cluster version: v1.16. git version: v1.16.3. git tree state: clean. commit: b3cbbae08ec52a7fc73d334838e18d17e8512749. platform: linux/amd64

I0109 21:11:40.832520 1 main.go:191] Communication with server successful

I0109 21:11:40.832739 1 main.go:225] Starting metrics server: 0.0.0.0:8080

I0109 21:11:40.832895 1 main.go:200] Starting kube-state-metrics self metrics server: 0.0.0.0:8081

I0109 21:11:40.833047 1 metrics_handler.go:96] Autosharding disabled

I0109 21:11:40.834983 1 builder.go:146] Active collectors: certificatesigningrequests,configmaps,cronjobs,daemonsets,deployments,endpoints,horizontalpodautoscalers,ingresses,jobs,limitranges,mutatingwebhookconfigurations,namespaces,networkpolicies,nodes,persistentvolumeclaims,persistentvolumes,poddisruptionbudgets,pods,replicasets,replicationcontrollers,resourcequotas,secrets,services,statefulsets,storageclasses,validatingwebhookconfigurations,volumeattachments

E0109 21:11:40.846966 1 reflector.go:156] pkg/mod/k8s.io/[email protected]/tools/cache/reflector.go:108: Failed to list *v1.StorageClass: storageclasses.storage.k8s.io is forbidden: User "system:serviceaccount:monitoring:prometheus-kube-state-metrics" cannot list resource "storageclasses" in API group "storage.k8s.io" at the cluster scope

E0109 21:11:40.846969 1 reflector.go:156] pkg/mod/k8s.io/[email protected]/tools/cache/reflector.go:108: Failed to list *v1beta1.ValidatingWebhookConfiguration: validatingwebhookconfigurations.admissionregistration.k8s.io is forbidden: User "system:serviceaccount:monitoring:prometheus-kube-state-metrics" cannot list resource "validatingwebhookconfigurations" in API group "admissionregistration.k8s.io" at the cluster scope

E0109 21:11:40.849190 1 reflector.go:156] pkg/mod/k8s.io/[email protected]/tools/cache/reflector.go:108: Failed to list *v1beta1.VolumeAttachment: volumeattachments.storage.k8s.io is forbidden: User "system:serviceaccount:monitoring:prometheus-kube-state-metrics" cannot list resource "volumeattachments" in API group "storage.k8s.io" at the cluster scope

E0109 21:11:40.852446 1 reflector.go:156] pkg/mod/k8s.io/[email protected]/tools/cache/reflector.go:108: Failed to list *v1.Namespace: networkpolicies.networking.k8s.io is forbidden: User "system:serviceaccount:monitoring:prometheus-kube-state-metrics" cannot list resource "networkpolicies" in API group "networking.k8s.io" at the cluster scope

E0109 21:11:40.852491 1 reflector.go:156] pkg/mod/k8s.io/[email protected]/tools/cache/reflector.go:108: Failed to list *v1beta1.MutatingWebhookConfiguration: mutatingwebhookconfigurations.admissionregistration.k8s.io is forbidden: User "system:serviceaccount:monitoring:prometheus-kube-state-metrics" cannot list resource "mutatingwebhookconfigurations" in API group "admissionregistration.k8s.io" at the cluster scope

E0109 21:11:40.852740 1 runtime.go:78] Observed a panic: "invalid memory address or nil pointer dereference" (runtime error: invalid memory address or nil pointer dereference)

goroutine 87 [running]:

k8s.io/apimachinery/pkg/util/runtime.logPanic(0x13c4de0, 0x2178810)

/go/pkg/mod/k8s.io/[email protected]/pkg/util/runtime/runtime.go:74 +0xa3

k8s.io/apimachinery/pkg/util/runtime.HandleCrash(0x0, 0x0, 0x0)

/go/pkg/mod/k8s.io/[email protected]/pkg/util/runtime/runtime.go:48 +0x82

panic(0x13c4de0, 0x2178810)

/usr/local/go/src/runtime/panic.go:679 +0x1b2

k8s.io/kube-state-metrics/internal/store.glob..func81(0xc00015e000, 0xc00000d580)

/go/src/k8s.io/kube-state-metrics/internal/store/hpa.go:274 +0x187

k8s.io/kube-state-metrics/internal/store.wrapHPAFunc.func1(0x153f360, 0xc00015e000, 0xc0003b9200)

/go/src/k8s.io/kube-state-metrics/internal/store/hpa.go:290 +0x5c

k8s.io/kube-state-metrics/pkg/metric.(*FamilyGenerator).Generate(...)

/go/src/k8s.io/kube-state-metrics/pkg/metric/generator.go:39

k8s.io/kube-state-metrics/pkg/metric.ComposeMetricGenFuncs.func1(0x153f360, 0xc00015e000, 0x7f67697153c0, 0xc00015e000, 0x0)

/go/src/k8s.io/kube-state-metrics/pkg/metric/generator.go:78 +0x122

k8s.io/kube-state-metrics/pkg/metrics_store.(*MetricsStore).Add(0xc00050e1c0, 0x153f360, 0xc00015e000, 0x0, 0x0)

/go/src/k8s.io/kube-state-metrics/pkg/metrics_store/metrics_store.go:76 +0xf6

k8s.io/kube-state-metrics/pkg/metrics_store.(*MetricsStore).Replace(0xc00050e1c0, 0xc0000aa980, 0x4, 0x4, 0xc00014ca20, 0x9, 0x2a46966, 0x219a560)

/go/src/k8s.io/kube-state-metrics/pkg/metrics_store/metrics_store.go:138 +0xa4

k8s.io/client-go/tools/cache.(*Reflector).syncWith(0xc00051a240, 0xc0000aa940, 0x4, 0x4, 0xc00014ca20, 0x9, 0x0, 0xc0000ac900)

/go/pkg/mod/k8s.io/[email protected]/tools/cache/reflector.go:354 +0xf8

k8s.io/client-go/tools/cache.(*Reflector).ListAndWatch.func1(0xc00051a240, 0xc00014ea80, 0xc0004741e0, 0xc000027bb8, 0x0, 0x0)

/go/pkg/mod/k8s.io/[email protected]/tools/cache/reflector.go:250 +0x8fa

k8s.io/client-go/tools/cache.(*Reflector).ListAndWatch(0xc00051a240, 0xc0004741e0, 0x0, 0x0)

/go/pkg/mod/k8s.io/[email protected]/tools/cache/reflector.go:257 +0x1a9

k8s.io/client-go/tools/cache.(*Reflector).Run.func1()

/go/pkg/mod/k8s.io/[email protected]/tools/cache/reflector.go:155 +0x33

k8s.io/apimachinery/pkg/util/wait.JitterUntil.func1(0xc0004d5778)

/go/pkg/mod/k8s.io/[email protected]/pkg/util/wait/wait.go:152 +0x5e

k8s.io/apimachinery/pkg/util/wait.JitterUntil(0xc000027f78, 0x3b9aca00, 0x0, 0x1, 0xc0004741e0)

/go/pkg/mod/k8s.io/[email protected]/pkg/util/wait/wait.go:153 +0xf8

k8s.io/apimachinery/pkg/util/wait.Until(...)

/go/pkg/mod/k8s.io/[email protected]/pkg/util/wait/wait.go:88

k8s.io/client-go/tools/cache.(*Reflector).Run(0xc00051a240, 0xc0004741e0)

/go/pkg/mod/k8s.io/[email protected]/tools/cache/reflector.go:154 +0x16b

created by k8s.io/kube-state-metrics/internal/store.(*Builder).reflectorPerNamespace

/go/src/k8s.io/kube-state-metrics/internal/store/builder.go:337 +0x265

panic: runtime error: invalid memory address or nil pointer dereference [recovered]

panic: runtime error: invalid memory address or nil pointer dereference

[signal SIGSEGV: segmentation violation code=0x1 addr=0x0 pc=0x125ff27]

goroutine 87 [running]:

k8s.io/apimachinery/pkg/util/runtime.HandleCrash(0x0, 0x0, 0x0)

/go/pkg/mod/k8s.io/[email protected]/pkg/util/runtime/runtime.go:55 +0x105

panic(0x13c4de0, 0x2178810)

/usr/local/go/src/runtime/panic.go:679 +0x1b2

k8s.io/kube-state-metrics/internal/store.glob..func81(0xc00015e000, 0xc00000d580)

/go/src/k8s.io/kube-state-metrics/internal/store/hpa.go:274 +0x187

k8s.io/kube-state-metrics/internal/store.wrapHPAFunc.func1(0x153f360, 0xc00015e000, 0xc0003b9200)

/go/src/k8s.io/kube-state-metrics/internal/store/hpa.go:290 +0x5c

k8s.io/kube-state-metrics/pkg/metric.(*FamilyGenerator).Generate(...)

/go/src/k8s.io/kube-state-metrics/pkg/metric/generator.go:39

k8s.io/kube-state-metrics/pkg/metric.ComposeMetricGenFuncs.func1(0x153f360, 0xc00015e000, 0x7f67697153c0, 0xc00015e000, 0x0)

/go/src/k8s.io/kube-state-metrics/pkg/metric/generator.go:78 +0x122

k8s.io/kube-state-metrics/pkg/metrics_store.(*MetricsStore).Add(0xc00050e1c0, 0x153f360, 0xc00015e000, 0x0, 0x0)

/go/src/k8s.io/kube-state-metrics/pkg/metrics_store/metrics_store.go:76 +0xf6

k8s.io/kube-state-metrics/pkg/metrics_store.(*MetricsStore).Replace(0xc00050e1c0, 0xc0000aa980, 0x4, 0x4, 0xc00014ca20, 0x9, 0x2a46966, 0x219a560)

/go/src/k8s.io/kube-state-metrics/pkg/metrics_store/metrics_store.go:138 +0xa4

k8s.io/client-go/tools/cache.(*Reflector).syncWith(0xc00051a240, 0xc0000aa940, 0x4, 0x4, 0xc00014ca20, 0x9, 0x0, 0xc0000ac900)

/go/pkg/mod/k8s.io/[email protected]/tools/cache/reflector.go:354 +0xf8

k8s.io/client-go/tools/cache.(*Reflector).ListAndWatch.func1(0xc00051a240, 0xc00014ea80, 0xc0004741e0, 0xc000027bb8, 0x0, 0x0)

/go/pkg/mod/k8s.io/[email protected]/tools/cache/reflector.go:250 +0x8fa

k8s.io/client-go/tools/cache.(*Reflector).ListAndWatch(0xc00051a240, 0xc0004741e0, 0x0, 0x0)

/go/pkg/mod/k8s.io/[email protected]/tools/cache/reflector.go:257 +0x1a9

k8s.io/client-go/tools/cache.(*Reflector).Run.func1()

/go/pkg/mod/k8s.io/[email protected]/tools/cache/reflector.go:155 +0x33

k8s.io/apimachinery/pkg/util/wait.JitterUntil.func1(0xc0004d5778)

/go/pkg/mod/k8s.io/[email protected]/pkg/util/wait/wait.go:152 +0x5e

k8s.io/apimachinery/pkg/util/wait.JitterUntil(0xc000027f78, 0x3b9aca00, 0x0, 0x1, 0xc0004741e0)

/go/pkg/mod/k8s.io/[email protected]/pkg/util/wait/wait.go:153 +0xf8

k8s.io/apimachinery/pkg/util/wait.Until(...)

/go/pkg/mod/k8s.io/[email protected]/pkg/util/wait/wait.go:88

k8s.io/client-go/tools/cache.(*Reflector).Run(0xc00051a240, 0xc0004741e0)

/go/pkg/mod/k8s.io/[email protected]/tools/cache/reflector.go:154 +0x16b

created by k8s.io/kube-state-metrics/internal/store.(*Builder).reflectorPerNamespace

/go/src/k8s.io/kube-state-metrics/internal/store/builder.go:337 +0x265

I have the same problem.

At first, prometheus-operator-admission-patch couldn't start properly.

I changed the values: I added a nodeSelector to stable/prometheus-operator/values.yaml, at the node prometheusOperator->admissionWebhooks->patch->nodeSelector->"kubernetes.io/arch".

After that I had problems with

- prometheus-operator-kube-state-metrics

- prometheus-operator-operator

(standard_init_linux.go:211: exec user process caused "exec format error")

I started fixing it in a fork for a PR, but I can't work out what style I have to use in the values.yaml files.

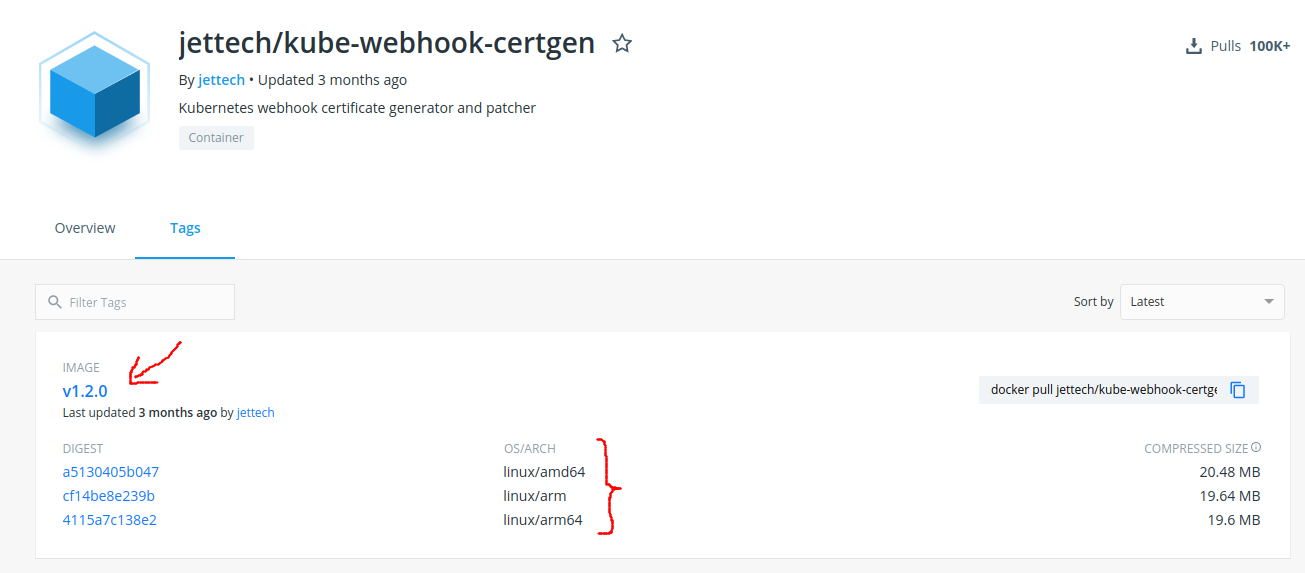

@uocxp experimental features in Docker (docker buildx build ... --push) allow you to create one tag with multiple layouts for different architectures. For example, jettech/kube-webhook-certgen:v1.2.0.

I think, and hope, that the image owners will provide multi-arch Docker images soon and this problem will disappear.

@uocxp OK, if I understand correctly, you are talking about child/dependency charts. In that case I fork this repository (helm/charts) and, in the file https://github.com/helm/charts/blob/master/stable/prometheus-operator/requirements.yaml, change every dependencies[].repository node to a value like "file:///../kube-state-metrics", and so on. After that I can change the kube-state-metrics tag in the source and use the local kube-state-metrics directory as a dependency of prometheus-operator. I think this is the only way.

I am in the same boat. I just deployed the chart and everything came up except prometheus-kube-state-metrics, which looks like it is not ARM-compatible.

My Pi4 cluster is on 1.17. This is a real bummer.

Follow the instructions in this repo: https://github.com/uocxp/prometheus-operator-chart-arm

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Any further update will cause the issue/pull request to no longer be considered stale. Thank you for your contributions.

This issue is being automatically closed due to inactivity.
