Charts: helm creates efs-provisioner only with the default fs-12345678 setting

Is this a request for help?:

Is this a BUG REPORT or FEATURE REQUEST? (choose one):

BUG REPORT

Version of Helm and Kubernetes:

1.10

Which chart:

efs-provisioner

What happened:

My command:

helm install stable/efs-provisioner --name=provis --set efsFileSystemId=fs-2c2f4685 --set awsRegion=us-west-2

kubectl describe pods provis-efs-provisioner-xxxxx

Output: Running scope as unit run-29329.scope.

mount.nfs: Failed to resolve server fs-12345678.efs.us-east-2.amazonaws.com: Name or service not known

mount.nfs: Operation already in progress
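The hostname in the error follows the EFS DNS name pattern `file-system-id.efs.aws-region.amazonaws.com`. The short sketch below rebuilds that name from the two values. It shows that the pod received the chart's placeholder values (`fs-12345678`, `us-east-2`) rather than the `--set` values from the command above. The helper `efs_dns_name` is hypothetical.

```python
def efs_dns_name(file_system_id: str, region: str) -> str:
    """Build the regional EFS DNS name: <file-system-id>.efs.<region>.amazonaws.com."""
    return f"{file_system_id}.efs.{region}.amazonaws.com"


# Name the pod actually tried to mount (copied from the error message).
observed = "fs-12345678.efs.us-east-2.amazonaws.com"

# Name the --set flags in the command were meant to produce.
expected = efs_dns_name("fs-2c2f4685", "us-west-2")

print(expected)  # fs-2c2f4685.efs.us-west-2.amazonaws.com
print(observed == efs_dns_name("fs-12345678", "us-east-2"))  # True
```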

What you expected to happen:

The pod configuration should use the values passed with --set (fs-2c2f4685 in us-west-2).

How to reproduce it (as minimally and precisely as possible):

Follow the README of the efs-provisioner chart.

Anything else we need to know:


The docs are incorrect: the settings must be nested under efsProvisioner. For example:

helm install stable/efs-provisioner --set efsProvisioner.efsFileSystemId=fs-ae098765 --set efsProvisioner.awsRegion=eu-west-1 --set efsProvisioner.path=/ --set efsProvisioner.storageClass.reclaimPolicy=Retain --name efs-provisioner --version 0.1.1

This should become part of the docs!
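Helm's `--set` treats dots in a key as nesting. So `--set efsFileSystemId=fs-2c2f4685` from the original report creates a new top-level key instead of overriding `efsProvisioner.efsFileSystemId`, and the chart keeps its default. The simplified model below illustrates this. It is not Helm's actual parser and does not handle lists, escaping or type coercion. `apply_set`, `render` and the `DEFAULTS` excerpt are hypothetical.

```python
import copy


def apply_set(values: dict, assignment: str) -> dict:
    """Apply one simplified `--set a.b.c=value` to a nested dict."""
    path, _, raw = assignment.partition("=")
    keys = path.split(".")
    node = values
    for key in keys[:-1]:
        node = node.setdefault(key, {})  # each dot descends one level
    node[keys[-1]] = raw
    return values


# Hypothetical excerpt of the chart defaults (values taken from the hostname in the error).
DEFAULTS = {
    "efsProvisioner": {
        "efsFileSystemId": "fs-12345678",
        "awsRegion": "us-east-2",
    }
}


def render(sets: list) -> dict:
    values = copy.deepcopy(DEFAULTS)  # never mutate the defaults themselves
    for s in sets:
        apply_set(values, s)
    return values


# Original command: top-level keys, so the nested defaults stay untouched.
wrong = render(["efsFileSystemId=fs-2c2f4685", "awsRegion=us-west-2"])
print(wrong["efsProvisioner"]["efsFileSystemId"])  # fs-12345678

# Corrected command: keys nested under efsProvisioner override the defaults.
right = render([
    "efsProvisioner.efsFileSystemId=fs-2c2f4685",
    "efsProvisioner.awsRegion=us-west-2",
])
print(right["efsProvisioner"]["efsFileSystemId"])  # fs-2c2f4685
```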

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Any further update will cause the issue/pull request to no longer be considered stale. Thank you for your contributions.

This issue is being automatically closed due to inactivity.

Has anyone had any recent success with this chart? I have an EFS file system in the same region as my k8s nodes, with the same security group policy, but mounting consistently fails:

mount.nfs: Failed to resolve server <EFS DNS>: Name or service not known

I also noticed that the PR for this issue, which only updates the README, is struggling to pass:

https://github.com/helm/charts/pull/7603

Thanks!
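`Name or service not known` is a DNS lookup failure. It happens before any NFS traffic is sent, so the mount target's security group rules are not what is failing at this stage. The minimal sketch below checks resolution from a node or a debug pod in the cluster. It assumes Python 3 is available there, and the `resolves` helper is hypothetical, not part of the chart.

```python
import socket
import sys


def resolves(hostname: str, port: int = 2049) -> bool:
    """Return True if hostname resolves, False if getaddrinfo fails
    (e.g. EAI_NONAME, reported as 'Name or service not known').
    Port 2049 is the NFS port used for EFS mounts."""
    try:
        socket.getaddrinfo(hostname, port, proto=socket.IPPROTO_TCP)
        return True
    except socket.gaierror:
        return False


if __name__ == "__main__":
    # Usage: python3 resolve_check.py <EFS DNS> [<EFS DNS> ...]
    for name in sys.argv[1:]:
        print(name, "resolves" if resolves(name) else "does NOT resolve")
```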

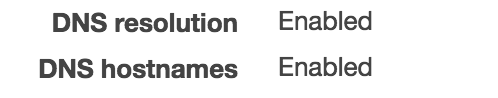

From https://docs.aws.amazon.com/efs/latest/ug/troubleshooting-efs-mounting.html:

If you are using a custom VPC, make sure that DNS settings are enabled.

Desired state on the VPC: both DNS resolution and DNS hostnames enabled.
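The two VPC DNS settings correspond to the `enableDnsSupport` (DNS resolution) and `enableDnsHostnames` (DNS hostnames) attributes. The sketch below reads and enables them. It assumes the caller passes a boto3 EC2 client, and `vpc_dns_settings` and `enable_vpc_dns` are hypothetical helpers.

```python
def vpc_dns_settings(ec2_client, vpc_id: str) -> dict:
    """Return the two VPC DNS attributes as plain booleans.

    ec2_client is assumed to be a boto3 EC2 client, e.g. boto3.client("ec2").
    """
    support = ec2_client.describe_vpc_attribute(
        VpcId=vpc_id, Attribute="enableDnsSupport"
    )
    hostnames = ec2_client.describe_vpc_attribute(
        VpcId=vpc_id, Attribute="enableDnsHostnames"
    )
    return {
        "enableDnsSupport": support["EnableDnsSupport"]["Value"],
        "enableDnsHostnames": hostnames["EnableDnsHostnames"]["Value"],
    }


def enable_vpc_dns(ec2_client, vpc_id: str) -> None:
    """Turn on DNS resolution first, then DNS hostnames.

    ModifyVpcAttribute changes one attribute per request, hence two calls.
    """
    ec2_client.modify_vpc_attribute(VpcId=vpc_id, EnableDnsSupport={"Value": True})
    ec2_client.modify_vpc_attribute(VpcId=vpc_id, EnableDnsHostnames={"Value": True})
```

With real credentials this would be called as `vpc_dns_settings(boto3.client("ec2"), "<vpc-id>")`.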

@jl-gogovapps Were you able to find a solution to the error

mount.nfs: Failed to resolve server <EFS DNS>: Name or service not known

when using this chart?

I'm using a default VPC, so DNS resolution and DNS hostnames are enabled by default. However, I'm trying to connect to my EFS file system (in us-east-2) from inside my EKS cluster (in sa-east-1), and I get this error: Name or service not known. Is there any tutorial for using this chart with an inter-region VPC connection? @wongma7 @drakedevel @humblec @msau42 @cofyc @raffaelespazzoli @tsmetana

@kevinfaguiar according to AWS docs at https://docs.aws.amazon.com/efs/latest/ug/manage-fs-access-vpc-peering.html

You can't use DNS name resolution for EFS mount points in another VPC. To mount your EFS file system, use the IP address of the mount points in the corresponding Availability Zone.

I've never personally done this, but it sounds like DNS not working for EFS across the peering connection is expected behavior as far as Amazon's docs are concerned. I would try setting the efsProvisioner.dnsName value to one of the EFS endpoint IPs directly and seeing if that works. If it does, then you may want to consider setting up custom DNS as suggested in the docs I linked so you can avoid hardcoding the IP. If that doesn't work, then you may want to contact Amazon support.
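Following the suggestion above, you can look up the mount target IP for a given Availability Zone with the EFS `DescribeMountTargets` API. The minimal sketch below has several assumptions and simplifications:

- The caller passes a boto3 EFS client.
- Each mount target in the response carries `AvailabilityZoneName`.
- Pagination is ignored for brevity.

`mount_target_ip` and `helm_set_dns_name` are hypothetical helpers.

```python
def mount_target_ip(efs_client, file_system_id: str, availability_zone: str) -> str:
    """Return the mount target IP of file_system_id in availability_zone.

    efs_client is assumed to be a boto3 EFS client, e.g. boto3.client("efs").
    """
    resp = efs_client.describe_mount_targets(FileSystemId=file_system_id)
    for target in resp["MountTargets"]:
        if target.get("AvailabilityZoneName") == availability_zone:
            return target["IpAddress"]
    raise LookupError(f"no mount target for {file_system_id} in {availability_zone}")


def helm_set_dns_name(ip: str) -> str:
    """Format the value so the provisioner mounts by IP instead of the EFS DNS name."""
    return f"efsProvisioner.dnsName={ip}"
```

Pass the resulting string to `helm install` with `--set`, alongside the other `efsProvisioner.*` values.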

Thank you so much @drakedevel! In my scenario, the API and the UI (two different services, with two different deployments, each able to scale to as many pods as needed) must share a folder (/tmp). After some research, I thought EFS was the best solution.

However, my region (sa-east-1) does not have EFS available... Do you happen to know of another solution for my scenario (some AWS storage that supports ReadWriteMany) that doesn't mean rewriting my app? I can't help thinking this is probably a common scenario when putting legacy applications into pods, and it feels really hard. But there is the other side too: sharing a folder that is essential to both services is an anti-pattern in Kubernetes.

EDIT: If anyone is in the same boat, where file system performance isn't that critical but you still need a shared volume between two deployments (a ReadWriteMany PVC) because your legacy application shares some folder, you can simply use an S3 bucket with the goofys mounter (which permits ReadWriteMany), using something like this: https://github.com/ctrox/csi-s3
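The sketch below shows a claim of the kind described above. It assumes a StorageClass named `csi-s3` exists. That name is taken to match the csi-s3 project's examples, so check it against your installation. `shared_pvc` and the claim name `shared-tmp` are hypothetical. The manifest is printed as JSON, which `kubectl apply -f` accepts as well as YAML.

```python
import json


def shared_pvc(name: str, storage_class: str, size: str = "5Gi") -> dict:
    """PersistentVolumeClaim that several Deployments can mount at once."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteMany"],  # shared by the API and UI pods
            "storageClassName": storage_class,  # assumed csi-s3 StorageClass
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    print(json.dumps(shared_pvc("shared-tmp", "csi-s3"), indent=2))
```

Both Deployments would then mount the claim through a volume with `persistentVolumeClaim.claimName: shared-tmp`.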
