Cert-manager: Strange errors with 0.11, but still works

Describe the bug:

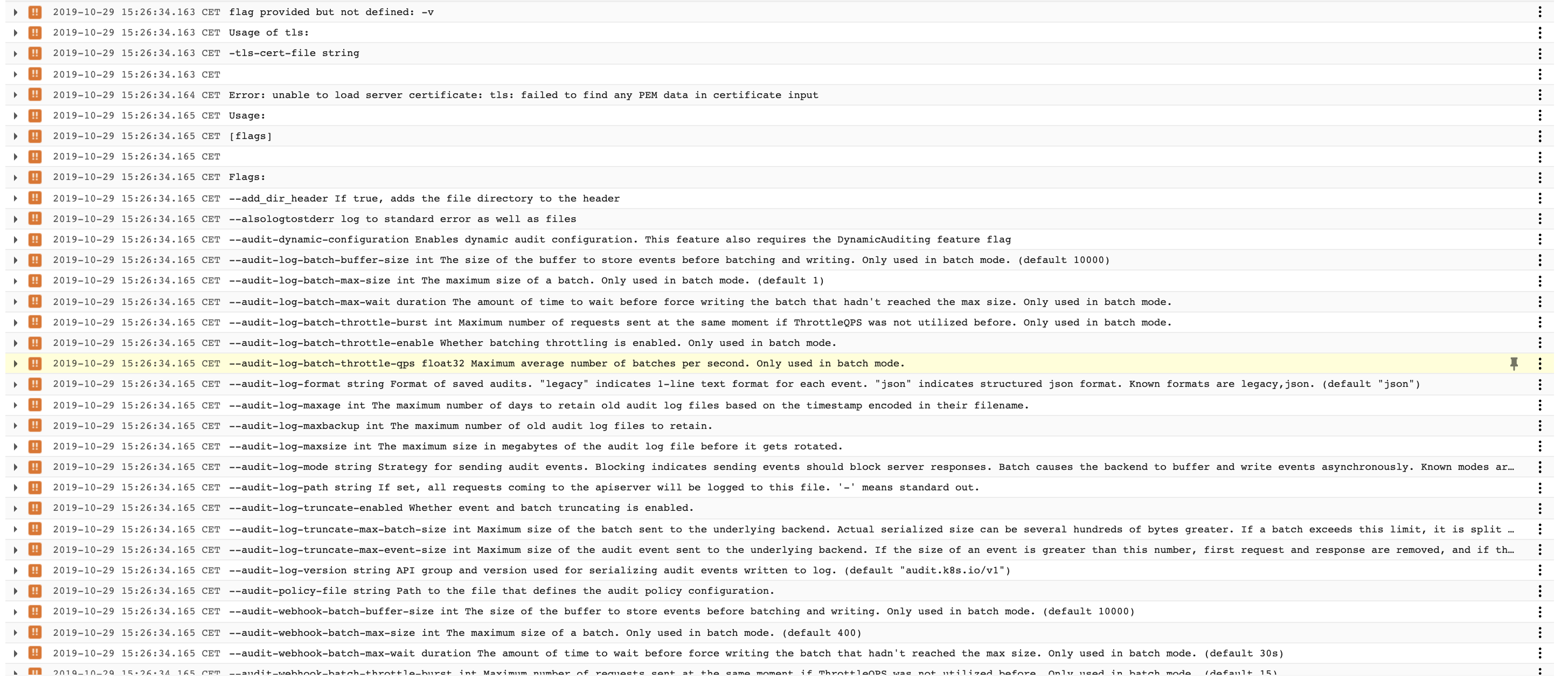

I started cert-manager 0.11 from scratch and I get error messages in my log that look like this:

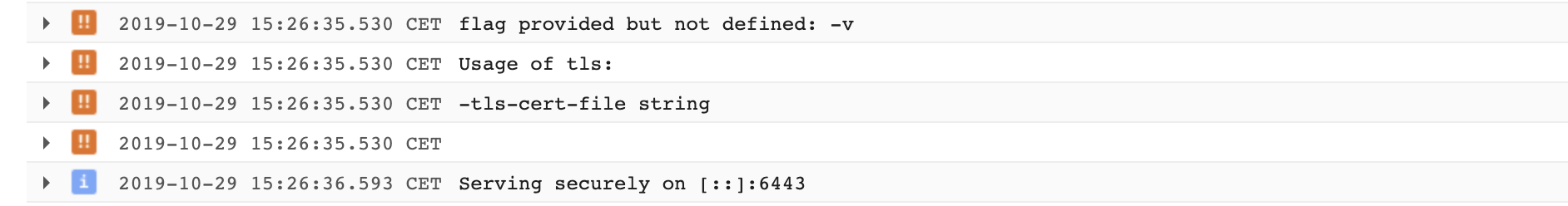

I end up getting this so I think it works?

Expected behaviour:

Startup without errors in the log, since it is working.

Steps to reproduce the bug:

Just install cert-manager with: kubectl apply -f https://github.com/jetstack/cert-manager/releases/download/v0.11.0/cert-manager.yaml --validate=false

Anything else we need to know?:

Not really

Environment details:

- Kubernetes version (e.g. v1.10.2): 1.13.9

- Cloud-provider/provisioner (e.g. GKE, kops AWS, etc): gke.3

- cert-manager version (e.g. v0.4.0): v0.11.0

- Install method (e.g. helm or static manifests): Static manifest

/kind bug

All 4 comments

Just to confirm, I guess this is coming from the webhook component?

@JoshVanL yes, that is a fair point, and I can confirm this is the webhook. It only showed up when filtering on cert-manager because that filter also included the webhook's logs.

This is expected and normal behaviour. The webhook needs to wait for the CA injector to bootstrap the webhook's CA, which typically means the webhook will restart once or twice on install. We should probably do a better job of signalling this and reduce false negatives on the webhook failing.
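
If you script the install, one way to avoid reading those early restarts as a failure is to poll until the webhook Deployment reports Available before creating any Issuers or Certificates. This is only a rough sketch: the `retry` and `wait_for_webhook` helpers are hypothetical names, and the namespace `cert-manager` and Deployment name `cert-manager-webhook` are assumptions about what the static manifest creates, so check them with `kubectl get deployments --all-namespaces` first.

```shell
#!/bin/sh
# retry ATTEMPTS DELAY CMD...
# Runs CMD until it exits 0 or ATTEMPTS tries have been made.
# Returns 0 on success, 1 if every attempt failed.
retry() {
  attempts=$1
  delay=$2
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Assumption: namespace "cert-manager" and Deployment "cert-manager-webhook".
# Adjust both if your install uses different names.
wait_for_webhook() {
  retry 30 10 kubectl -n cert-manager wait \
    --for=condition=Available deployment/cert-manager-webhook --timeout=10s
}
```

Calling `wait_for_webhook` right after the `kubectl apply` gives the CA injector a few minutes to do its bootstrapping; a non-zero exit after that window would be a better hint of a real problem than the first few error lines in the log.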

Issues go stale after 90d of inactivity.

Mark the issue as fresh with /remove-lifecycle stale.

Stale issues rot after an additional 30d of inactivity and eventually close.

If this issue is safe to close now please do so with /close.

Send feedback to jetstack.

/lifecycle stale
